Accelerating AI Applications on the Web with Intel XPU - Ningxin Hu

1. Accelerating Web-based AI Applications with Intel XPU

Ningxin Hu, Platform Engineering Team

2. Overview of Artificial Intelligence (AI)

Artificial intelligence (AI) contains machine learning, which in turn contains deep learning.

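3. Deep Learning Basics

- Training: the model runs a forward pass over lots of labeled data (e.g., images labeled "Human", "Bicycle", "Strawberry"). When it predicts "Strawberry" for an image labeled "Bicycle", the error is propagated back through a backward pass to update the weights. The trained model (weights and topology description) is then integrated into the application.
- Inference: the application runs only the forward pass on new input and outputs a label such as "Bicycle" with a % confidence.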
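Inference on this slide ends with a label and a % confidence. A minimal sketch of that last step follows, assuming the model ends in a softmax classifier; the labels and logit values are made up for illustration, and a real model would produce the logits in its forward pass.

```typescript
// Hypothetical class labels for a toy image classifier (not from a real model).
const LABELS: string[] = ["Strawberry", "Bicycle", "Human"];

// Numerically stable softmax: subtract the largest logit before exponentiating.
function softmax(logits: number[]): number[] {
  const max = Math.max(...logits);
  const exps = logits.map((v) => Math.exp(v - max));
  const sum = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / sum);
}

// Pick the most probable class and report its probability as a percentage.
function classify(logits: number[]): { label: string; confidence: number } {
  const probs = softmax(logits);
  let best = 0;
  for (let i = 1; i < probs.length; i++) {
    if (probs[i] > probs[best]) best = i;
  }
  return { label: LABELS[best], confidence: probs[best] * 100 };
}

// Made-up logits standing in for the output of a trained model's forward pass.
const result = classify([0.5, 3.2, 1.1]);
console.log(`"${result.label}" ${result.confidence.toFixed(1)}% confidence`);
```

With these logits the sketch prints `"Bicycle" 84.1% confidence`. Training would additionally run the backward pass to adjust the weights that produce the logits; inference only needs this forward computation.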

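4. Deep Learning Use Cases

Image recognition, language processing, object detection, image and speech generation, image segmentation, and recommender systems.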

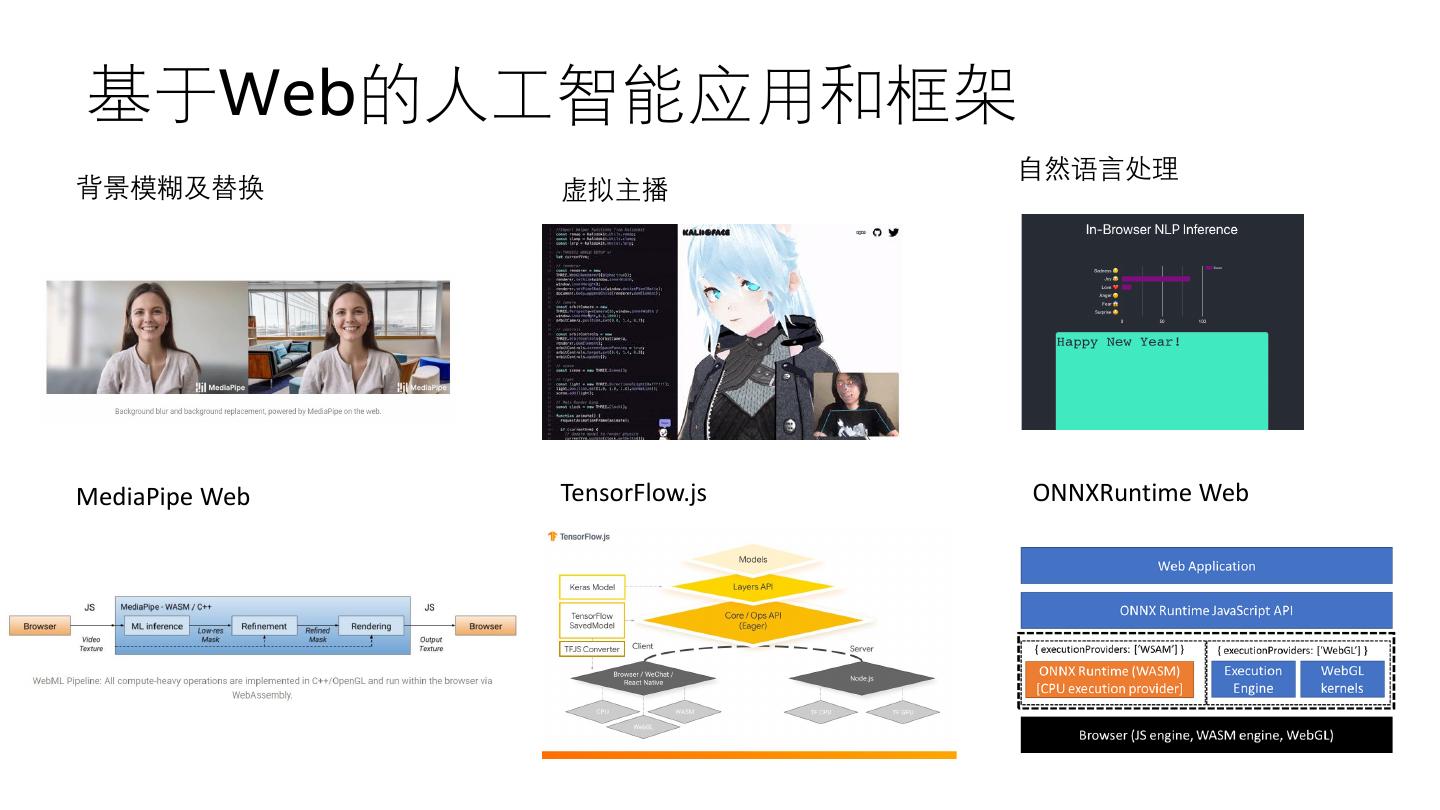

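5. Web-based AI Applications and Frameworks

- Applications: natural language processing, background blur and replacement, virtual anchors.
- Frameworks: MediaPipe Web, TensorFlow.js, ONNX Runtime Web.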

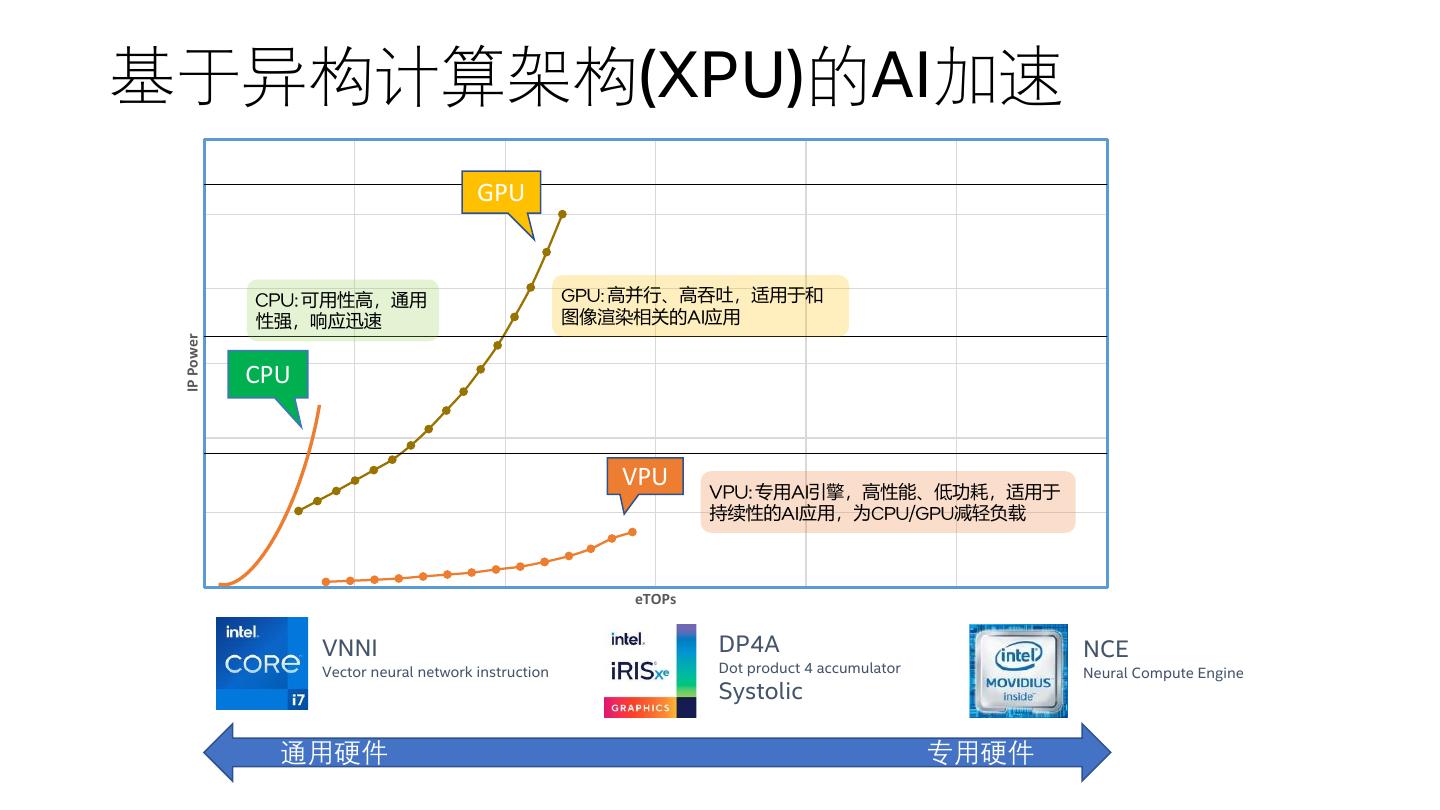

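6. AI Acceleration on a Heterogeneous Computing Architecture (XPU)

The slide places three engines by effective TOPS (eTOPs) against IP power, from general-purpose to dedicated hardware:

- CPU (general-purpose): widely available, highly versatile, and responsive; AI acceleration through VNNI (Vector Neural Network Instructions).
- GPU (general-purpose): highly parallel with high throughput, suited to AI applications tied to graphics rendering; AI acceleration through DP4A (dot product of four 8-bit pairs with accumulate).
- VPU (dedicated): a dedicated AI engine with high performance and low power, suited to sustained AI workloads and to offloading the CPU and GPU; built around the NCE (Neural Compute Engine).

Systolic arrays also appear among the acceleration features shown.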
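VNNI and DP4A both fuse a 4-way 8-bit dot product with a 32-bit accumulate, which is what makes INT8 inference fast on general-purpose hardware. The scalar sketch below models that operation in plain TypeScript; it is not how the instructions are invoked, and for simplicity it treats both operands as signed 8-bit values (the exact signedness and saturation behavior differ between instruction variants).

```typescript
// Scalar model of a "dot product of 4, accumulate" step: four pairs of 8-bit
// integers are multiplied, the products summed, and the sum added to a
// 32-bit accumulator.
function dp4a(a: Int8Array, b: Int8Array, acc: number): number {
  if (a.length !== 4 || b.length !== 4) {
    throw new RangeError("dp4a expects exactly four 8-bit lanes per operand");
  }
  let sum = acc;
  for (let i = 0; i < 4; i++) {
    sum += a[i] * b[i]; // each int8 x int8 product fits in 16 bits
  }
  // Wrap to a signed 32-bit integer (non-saturating; some variants saturate).
  return sum | 0;
}

// INT8 dot product of two equal-length vectors, built from dp4a steps.
function int8Dot(x: Int8Array, w: Int8Array): number {
  if (x.length !== w.length || x.length % 4 !== 0) {
    throw new RangeError("lengths must match and be a multiple of 4");
  }
  let acc = 0;
  for (let i = 0; i < x.length; i += 4) {
    acc = dp4a(x.subarray(i, i + 4), w.subarray(i, i + 4), acc);
  }
  return acc;
}

// Example activations and weights (made up for illustration).
const x = Int8Array.from([1, 2, 3, 4, -5, 6, -7, 8]);
const w = Int8Array.from([10, -20, 30, 40, 50, 60, 70, 80]);
console.log(int8Dot(x, w));
```

One hardware instruction performs what the inner loop of `dp4a` does, so an 8-element dot product takes two steps instead of eight separate multiply-adds.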

7. The Performance Gap Between Web and Native Applications

MobileNetV2* inference latency on TGL§, in ms (smaller is better):

| Hardware | Web baseline | WebNN | WebNN speedup over baseline |
|---|---|---|---|
| GPU | WebGL/FP16: 18.2 | WebNN/GPU/FP16: 3.3 | 5.5× |
| CPU | Wasm/SIMD/MT/FP32: 11.2 | WebNN/CPU/FP32: 3.08 | 3.6× |
| CPU with VNNI | Wasm/SIMD/MT/FP32: 11.2 | WebNN/VNNI/INT8: 1.6 | 7× |

The three native£ bars (OpenVINO/GPU/FP16, OpenVINO/CPU/FP32, OpenVINO/VNNI/INT8) are lower still, at 2.26 ms, 2 ms, and 0.9 ms.

Where the gap comes from, per the chart annotations:

- GPU: OpenCL Intel extensions (SIMD-group, block I/O) vs. OpenGL fragment shaders.
- CPU: AVX256/TBB vs. SIMD128 (SSE4.2)/Web workers.
- CPU with VNNI (Vector Neural Network Instructions): AVX512/VNNI/TBB vs. SIMD128 (SSE4.2)/Web workers.

Notes: * batch size 1, input size 224x224, width multiplier 1.0; § TGL i7-1165G7; £ native means OpenVINO.
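Each speedup label on this chart is the Web baseline latency divided by the WebNN latency. A small check of that arithmetic, assuming the bars pair up as follows: 18.2 ms for WebGL vs. 3.3 ms for WebNN on the GPU, and 11.2 ms for Wasm vs. 3.08 ms (WebNN/FP32) and 1.6 ms (WebNN/VNNI/INT8) on the CPU.

```typescript
// Latencies in ms (lower is better); pairing of bars is an assumption.
interface Comparison {
  label: string;
  webBaselineMs: number;
  webnnMs: number;
}

const comparisons: Comparison[] = [
  { label: "GPU: WebGL/FP16 -> WebNN/GPU/FP16", webBaselineMs: 18.2, webnnMs: 3.3 },
  { label: "CPU: Wasm/SIMD/MT/FP32 -> WebNN/CPU/FP32", webBaselineMs: 11.2, webnnMs: 3.08 },
  { label: "CPU: Wasm/SIMD/MT/FP32 -> WebNN/VNNI/INT8", webBaselineMs: 11.2, webnnMs: 1.6 },
];

// For latency, speedup = baseline time / new time (values > 1 mean faster).
function speedup(baselineMs: number, newMs: number): number {
  return baselineMs / newMs;
}

for (const c of comparisons) {
  console.log(`${c.label}: ${speedup(c.webBaselineMs, c.webnnMs).toFixed(1)}x`);
}
```

This prints 5.5x, 3.6x, and 7.0x, matching the labels on the chart. Note that the INT8 row mixes a precision change with the API change, so part of its 7× comes from quantization rather than from WebNN alone.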

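8. The Web Neural Network (WebNN) API

WebNN sits in a layered stack:

- Use cases: image classification, object detection, background segmentation, noise suppression, natural language processing. WebNN is driven by Web AI use cases.
- Frameworks: TensorFlow.js, ONNX Runtime Web, MediaPipe Web (live streaming), OpenCV.js, TFLite Web. WebNN works with multiple Web AI frameworks.
- Web APIs: WebAssembly, WebNN, WebGL/WebGPU. WebNN offers a unified neural-network abstraction with interoperability and extensibility.
- Web engines: Web browsers (e.g., Chrome/Edge) and JavaScript runtimes (e.g., Electron/Node.js).
- Native ML APIs: XNNPACK, NNAPI, DirectML, oneDNN, OpenVINO. WebNN builds on the operating system's ML APIs to reach heterogeneous hardware acceleration.
- Hardware: CPU, GPU, VPU.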

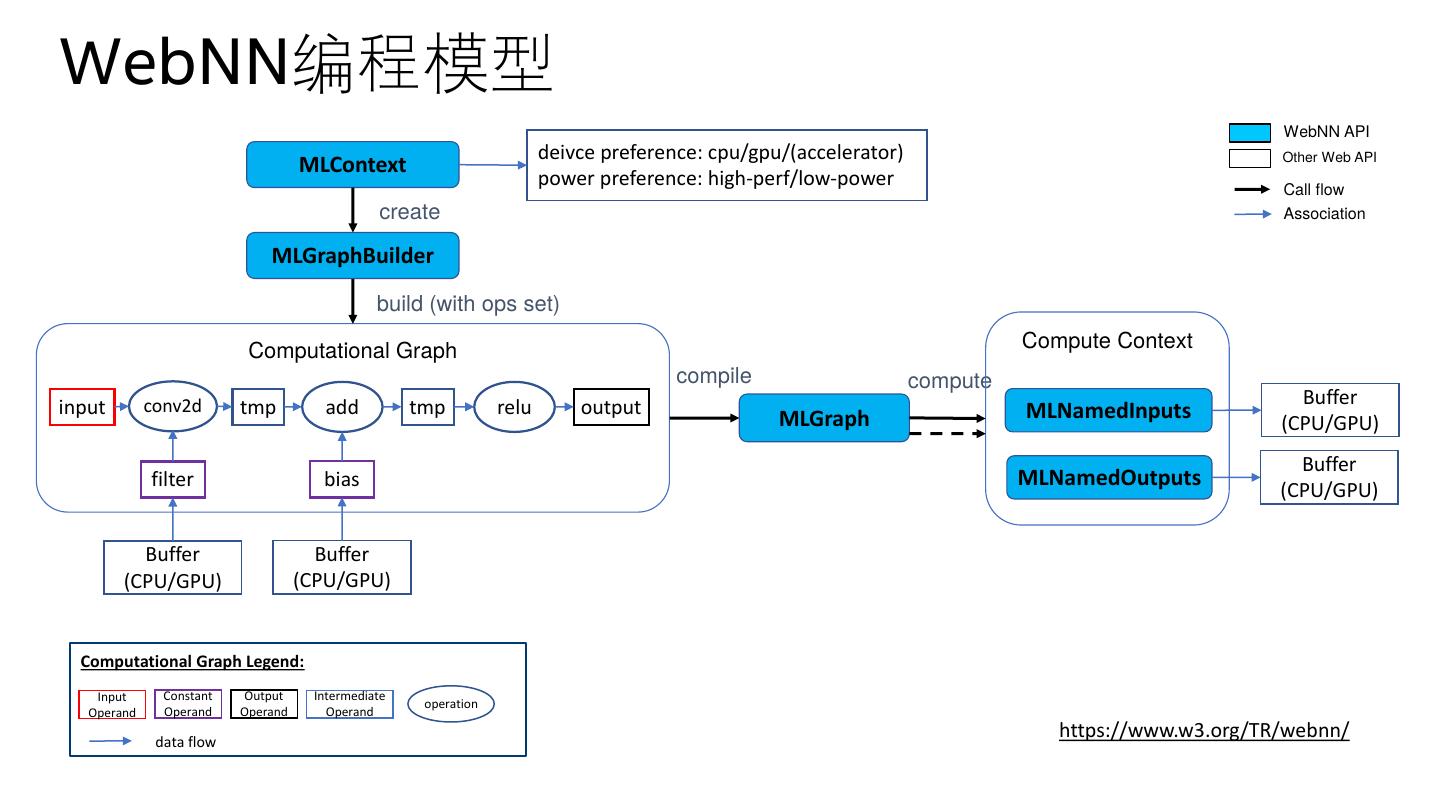

9. WebNN Programming Model

- MLContext: created with a device preference (cpu/gpu/(accelerator)) and a power preference (high-performance/low-power); it can be associated with other Web APIs.
- MLGraphBuilder: created from the context; it builds a computational graph from the supported op set.
- MLGraph: the compiled graph. Computing it within the context reads MLNamedInputs and writes MLNamedOutputs, each backed by CPU or GPU buffers.

Example computational graph: input → conv2d (constant filter) → tmp → add (constant bias) → tmp → relu → output. The legend distinguishes input, constant, output, and intermediate operands, operations, and data flow.

Specification: https://www.w3.org/TR/webnn/

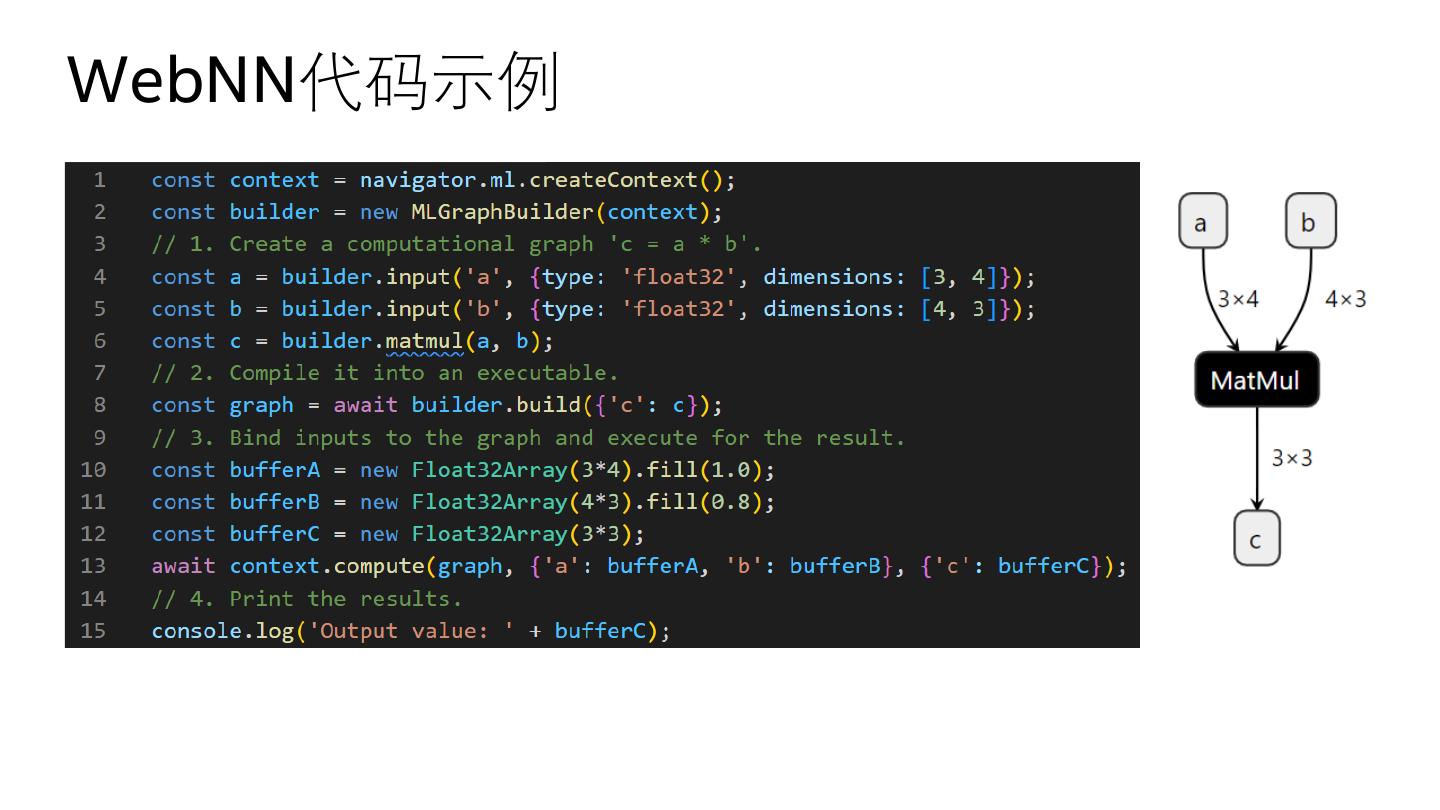
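The example graph on this slide (input → conv2d → add → relu → output) can be checked against a plain CPU reference. The sketch below is not WebNN code: it hand-computes what that graph produces for a single-channel input with stride 1 and no padding, and the input, filter, and bias values are made up. In WebNN itself, the same chain would be expressed with the builder's `conv2d`, `add`, and `relu` operations and executed by a backend.

```typescript
type Matrix = number[][];

// conv2d as used in deep learning (cross-correlation): one input and one
// output channel, stride 1, no padding, so the output is (H-kh+1) x (W-kw+1).
function conv2d(input: Matrix, filter: Matrix): Matrix {
  const kh = filter.length;
  const kw = filter[0].length;
  const outH = input.length - kh + 1;
  const outW = input[0].length - kw + 1;
  const out: Matrix = [];
  for (let y = 0; y < outH; y++) {
    const row: number[] = [];
    for (let x = 0; x < outW; x++) {
      let acc = 0;
      for (let i = 0; i < kh; i++) {
        for (let j = 0; j < kw; j++) {
          acc += input[y + i][x + j] * filter[i][j];
        }
      }
      row.push(acc);
    }
    out.push(row);
  }
  return out;
}

// "add" node with a constant operand: here a scalar bias broadcast to all elements.
const addBias = (m: Matrix, bias: number): Matrix => m.map((r) => r.map((v) => v + bias));

// "relu" node: max(0, v) element-wise.
const relu = (m: Matrix): Matrix => m.map((r) => r.map((v) => Math.max(0, v)));

// input -> conv2d(filter) -> tmp -> add(bias) -> tmp -> relu -> output
const input: Matrix = [
  [3, 2, 5],
  [4, 1, 3],
  [2, 0, 1],
];
const filter: Matrix = [
  [1, 0],
  [0, -1],
];
const output = relu(addBias(conv2d(input, filter), -1));
console.log(output);
```

Here conv2d yields `[[2, -1], [4, 0]]`, the bias shifts it to `[[1, -2], [3, -1]]`, and relu clamps the negatives, giving `[[1, 0], [3, 0]]`. Because WebNN receives the whole graph at build time, a backend can fuse such a chain instead of running three separate passes.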

10. WebNN Code Example

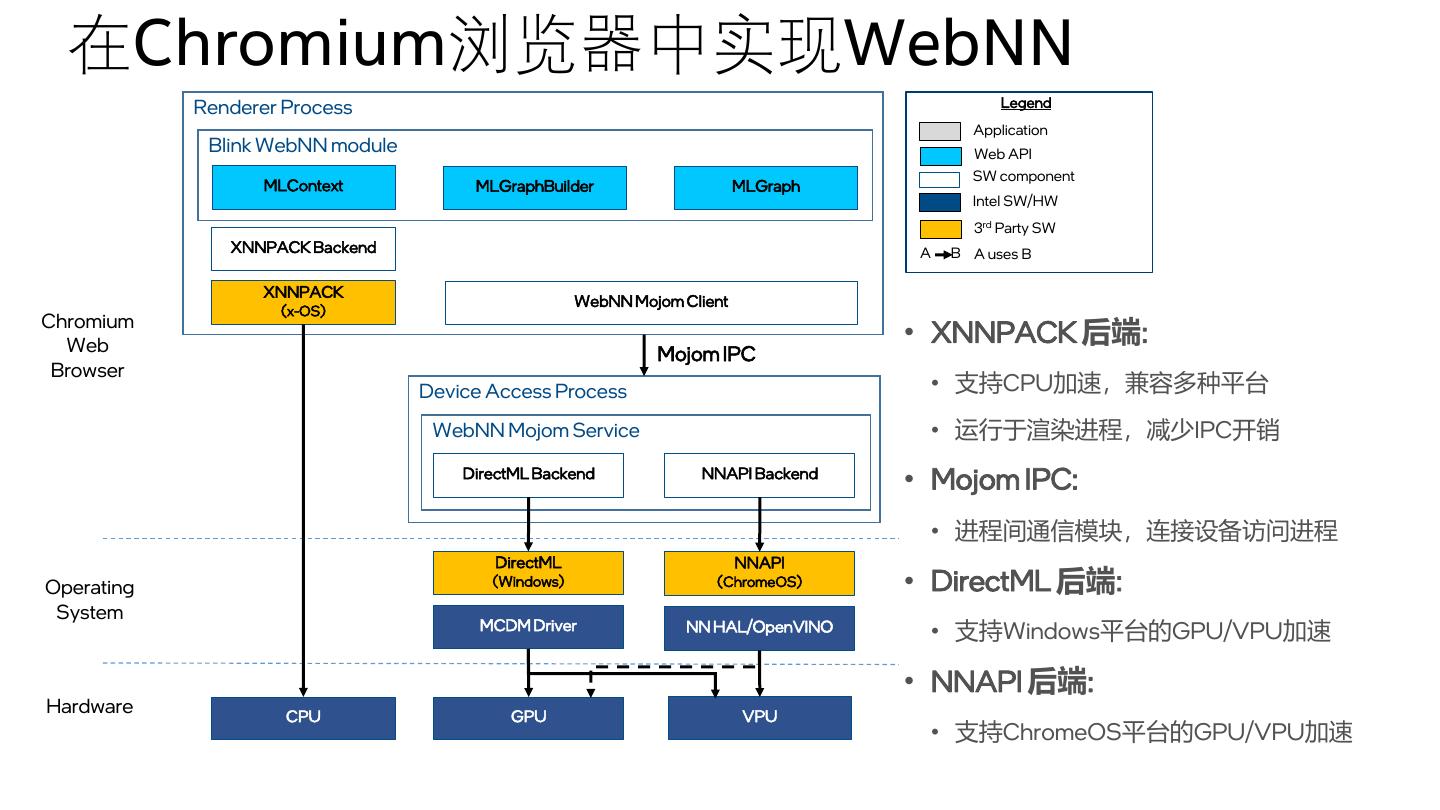

11. Implementing WebNN in the Chromium Browser

In the renderer process, Blink's WebNN module implements the Web API (MLContext, MLGraphBuilder, MLGraph) used by the application, on top of these components:

- XNNPACK backend: CPU acceleration that works across platforms; it runs in the renderer process, reducing IPC overhead.
- Mojom IPC: the inter-process communication layer; a WebNN Mojom client in the renderer talks to the WebNN Mojom service in the browser's device-access process.
- DirectML backend: GPU/VPU acceleration on Windows, through DirectML and the MCDM driver.
- NNAPI backend: GPU/VPU acceleration on ChromeOS, through NNAPI and NN HAL/OpenVINO.

The diagram's legend distinguishes Web APIs, software components, Intel SW/HW, and third-party SW; an arrow from A to B means A uses B.

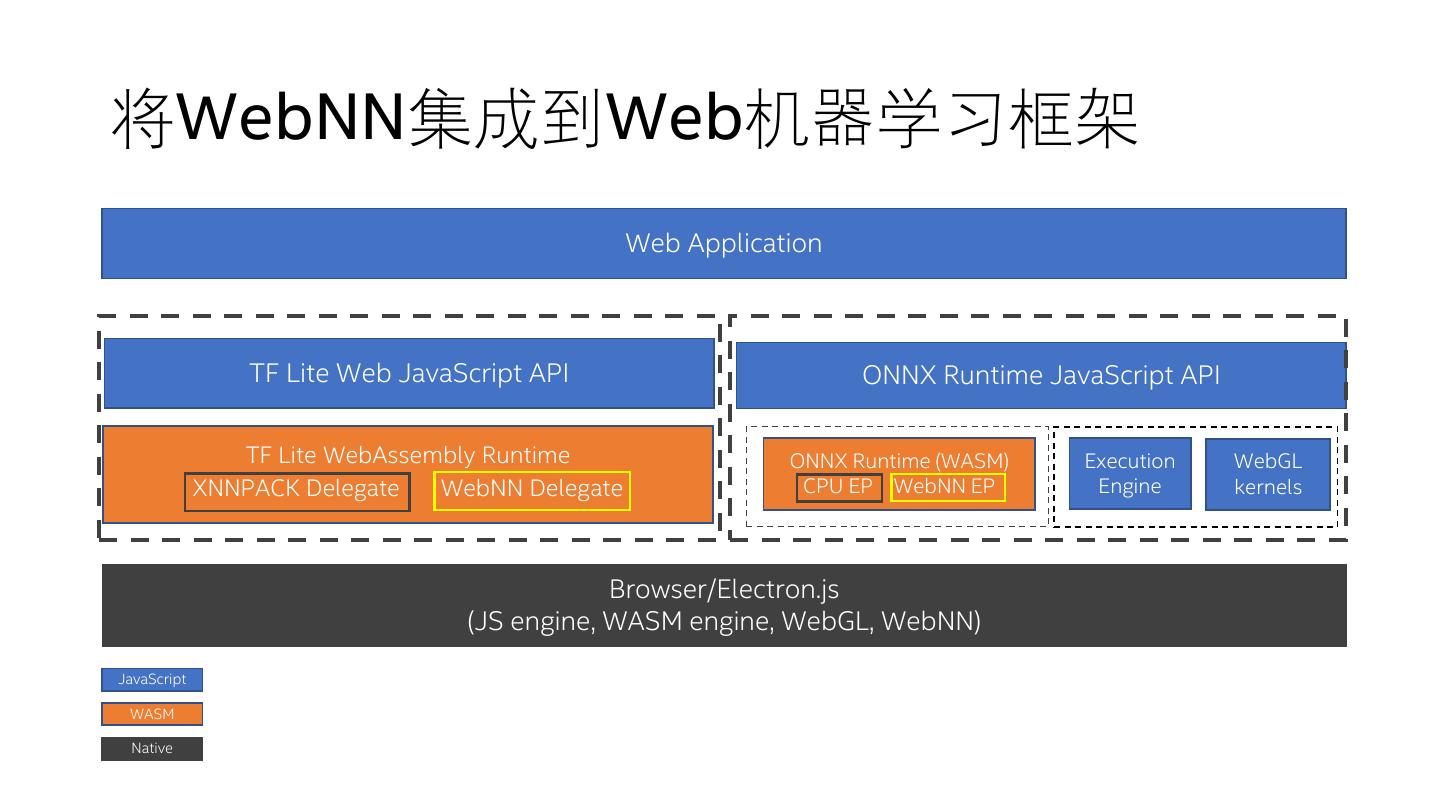
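The backend split on this slide amounts to a dispatch rule: CPU work stays in the renderer on XNNPACK, while GPU/VPU work crosses Mojom IPC to an OS-specific backend. A hypothetical sketch of that rule follows; the function, the type names, and the "unsupported" result for other combinations are illustrative assumptions, not Chromium's actual code.

```typescript
// Hypothetical names for illustration only; this is not Chromium source.
type DeviceType = "cpu" | "gpu" | "vpu";
type HostOS = "windows" | "chromeos" | "linux" | "macos" | "android";

interface BackendChoice {
  backend: "XNNPACK" | "DirectML" | "NNAPI";
  process: "renderer" | "device-access";
  usesIpc: boolean;
}

function chooseBackend(os: HostOS, device: DeviceType): BackendChoice | undefined {
  if (device === "cpu") {
    // XNNPACK runs in the renderer process on every platform, avoiding IPC.
    return { backend: "XNNPACK", process: "renderer", usesIpc: false };
  }
  // GPU/VPU requests go over Mojom IPC to the browser's device-access process.
  if (os === "windows") {
    return { backend: "DirectML", process: "device-access", usesIpc: true };
  }
  if (os === "chromeos") {
    return { backend: "NNAPI", process: "device-access", usesIpc: true };
  }
  // The slide shows no GPU/VPU backend for other platforms.
  return undefined;
}

console.log(chooseBackend("windows", "gpu"));
console.log(chooseBackend("linux", "cpu"));
console.log(chooseBackend("macos", "vpu"));
```

Keeping the CPU path in-process trades sandbox isolation for latency, while GPU/VPU drivers are reached only from the privileged process, which is why those paths pay the IPC cost.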

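12. Integrating WebNN into Web Machine Learning Frameworks

The stack runs from the Web application, through each framework's JavaScript API and WebAssembly runtime, down to its execution-engine kernels, which run on the browser or Electron.js (JS engine, Wasm engine, WebGL, WebNN):

- TF Lite Web: TF Lite JavaScript API → TF Lite WebAssembly runtime → XNNPACK delegate or WebNN delegate.
- ONNX Runtime Web: ONNX Runtime JavaScript API → ONNX Runtime (Wasm) → CPU execution provider (EP), WebNN EP, or WebGL kernels.

The diagram color-codes components as JavaScript, Wasm, or native.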

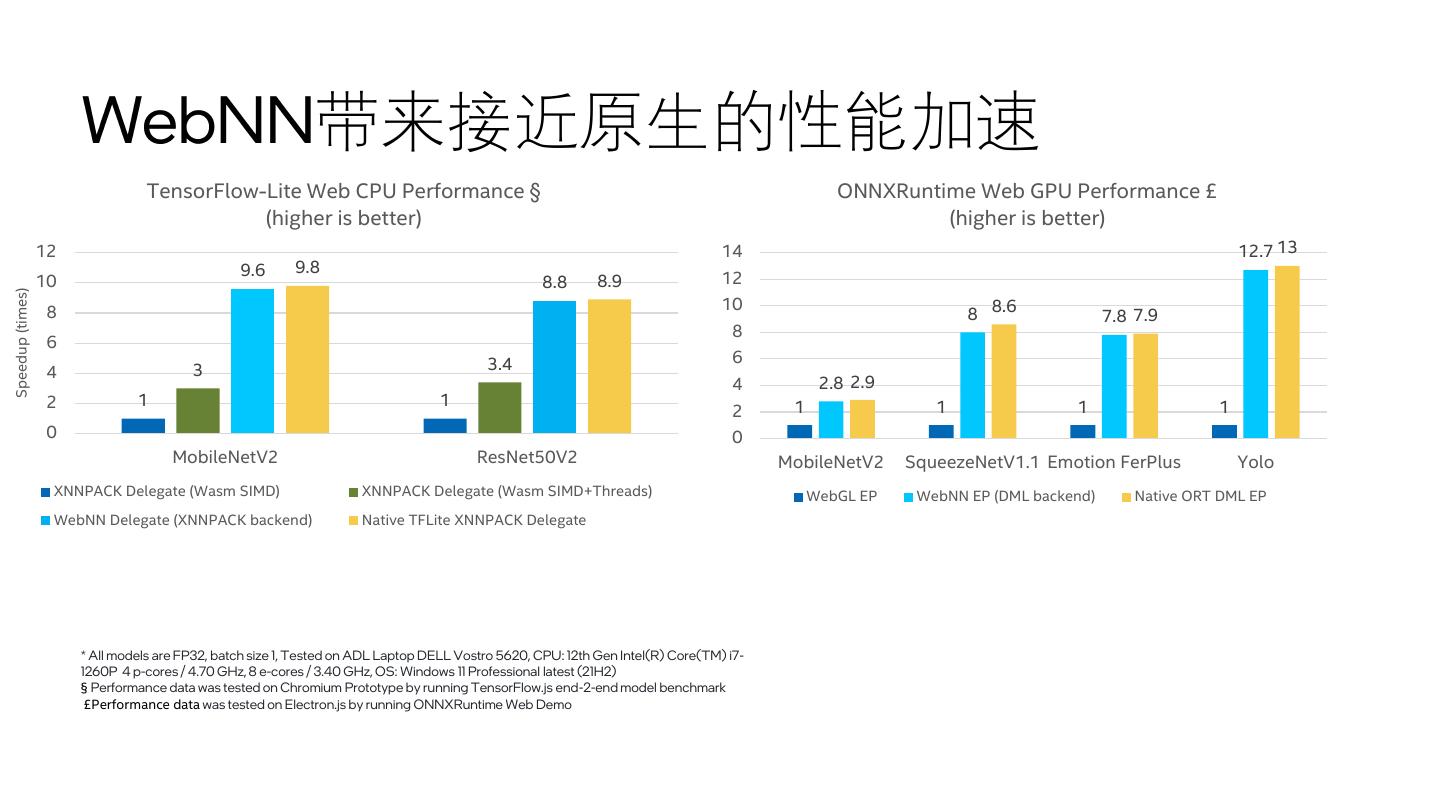
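Both frameworks plug WebNN in the same way: the WebNN delegate (TF Lite) or WebNN EP (ONNX Runtime) takes the parts of the model it supports and leaves the rest to the default CPU path. A simplified sketch of that partitioning for a linear chain of ops follows; the supported-op set and the `customOp` name are hypothetical, and real frameworks partition general graphs rather than chains.

```typescript
type Provider = "webnn" | "cpu";

interface Segment {
  provider: Provider;
  ops: string[];
}

// Greedy partition of a linear chain: consecutive ops that WebNN supports are
// grouped into one segment; unsupported ops fall back to the CPU provider.
function partition(ops: string[], webnnSupported: Set<string>): Segment[] {
  const segments: Segment[] = [];
  for (const op of ops) {
    const provider: Provider = webnnSupported.has(op) ? "webnn" : "cpu";
    const last = segments[segments.length - 1];
    if (last && last.provider === provider) {
      last.ops.push(op); // extend the current segment
    } else {
      segments.push({ provider, ops: [op] }); // start a new segment
    }
  }
  return segments;
}

// Hypothetical op support and model; "customOp" stands for an unsupported op.
const supported = new Set(["conv2d", "add", "relu", "averagePool2d"]);
const model = ["conv2d", "add", "relu", "customOp", "conv2d", "relu", "averagePool2d"];
for (const s of partition(model, supported)) {
  console.log(`${s.provider}: ${s.ops.join(" -> ")}`);
}
```

This yields three segments (WebNN, CPU, WebNN). Fewer, larger WebNN segments are preferable, since each switch between providers may require copying tensors between the CPU and the accelerator.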

13. Near-native Performance Speedups

- TensorFlow Lite Web CPU performance§ (speedup, higher is better) on MobileNetV2 and ResNet50V2, comparing the XNNPACK delegate (Wasm SIMD, baseline = 1), the XNNPACK delegate (Wasm SIMD + threads), the WebNN delegate (XNNPACK backend), and the native TFLite XNNPACK delegate.
- ONNX Runtime Web GPU performance£ (speedup, higher is better) on MobileNetV2, SqueezeNetV1.1, Emotion FerPlus, and YOLO, comparing the WebGL EP (baseline = 1), the WebNN EP (DML backend), and the native ORT DML EP.

Notes: * all models are FP32 with batch size 1, tested on an ADL laptop (Dell Vostro 5620) with a 12th Gen Intel Core i7-1260P (4 P-cores / 4.70 GHz, 8 E-cores / 3.40 GHz), OS: Windows 11 Professional latest (21H2); § measured on a Chromium prototype running the TensorFlow.js end-to-end model benchmark; £ measured on Electron.js running the ONNX Runtime Web demo.

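14. Summary

- AI and deep learning bring entirely new user experiences to Web applications.
- Hardware accelerators built on heterogeneous computing architectures (XPU) pose challenges for the Web platform.
- The Web Neural Network (WebNN) API brings the deep learning/neural network abstraction to the Web platform. It provides a unified programming interface that supports multiple operating systems and accesses XPU hardware accelerators, accelerating AI applications and frameworks on the Web.
- Developers are welcome to take part in standardizing the W3C WebNN API and in implementing it in open-source Web browsers!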