Using a Cloud-Client Collaborative AI Application Development Framework to Quickly Build Client-Accelerated AI Applications - Austin Zhang

1 .Using a Cloud-Client Collaborative AI Application Development Framework to Quickly Build Client-Accelerated AI Applications
Austin Zhang
https://github.com/intel/cloud-client-ai-service-framework

2 .Preface
• More and more applications have AI assistant functions
• Powerful cloud-based AI usages
• Some additional limitations for AI-based application development on the client
  ▪ AI programming knowledge and skills
  ▪ Compute-intensive training and inference on hardware
  ▪ Environment requirements: latency, privacy, bandwidth cost
• How to meet all those requirements while keeping development simple and leveraging client capability

3 .App development models
[Diagram comparing three development models; labels recovered from the figure:]
• Cloud-centric app dev model: client apps / web pages call REST APIs; the cloud side holds CSP (3rd party) AI services, AI frameworks, and AI models.
• Traditional AI client app dev model: client apps use an SDK and AI models directly, with Gstreamer, MKL-DNN, CUDA, the OS, and the HW platforms underneath.
• Cloud-Client app dev model: client apps call service APIs of the Cloud Client AI service framework (w/ Intel AI enabling or extensions). Its stack includes the AI service runtime, AI engines (supported frameworks: OpenVINO, TensorFlow, PyTorch, ONNX), app frameworks, dependency libs and AI models, the OS and container runtime, Gstreamer, OpenVINO, oneAPI, and Intel XPUs.

4 .What is Intel Cloud Client AI service framework
• Service: abstract the AI capabilities as service APIs
• Framework: facilitate CSPs/ISVs to develop AI services for the client
• Client AI: expose client HW AI capabilities to application developers
• Cloud: provide a seamless dev experience for cloud/web app developers
• Intel: platform-differentiating capability developed by Intel

5 .CCAI architecture (Jul’22 v1.3)
[Architecture diagram, top to bottom; labels recovered from the figure. Legend: open source / product specific.]
• E2E solution reference implementation / web-based demo application
• Service APIs: vision, video, audio, text, language, scenarios, OV IE APIs
• AI service framework in container: Cloud-Client AI Service Framework (CCAI)
  ▪ API gateway (RESTful, gRPC): FCGI server with RESTful services; gRPC server with gRPC services
  ▪ Framework components: security system, log, policy, video pipeline management, simulation lib, update service, health monitor, health agent, capability manager, model agent, models
  ▪ AI inference engine runtime: OpenVINO Inference Engine, Intel-optimized PyTorch, Intel-optimized TensorFlow, ONNX Runtime, PaddlePaddle
• Low-level stacks: oneDNN, oneDAL, oneTBB, oneMKL, oneVPL, oneCCL
• Container base (e.g. Ubuntu 20.04)
• Host OS (Linux, Windows)
• Bare-metal Intel XPUs

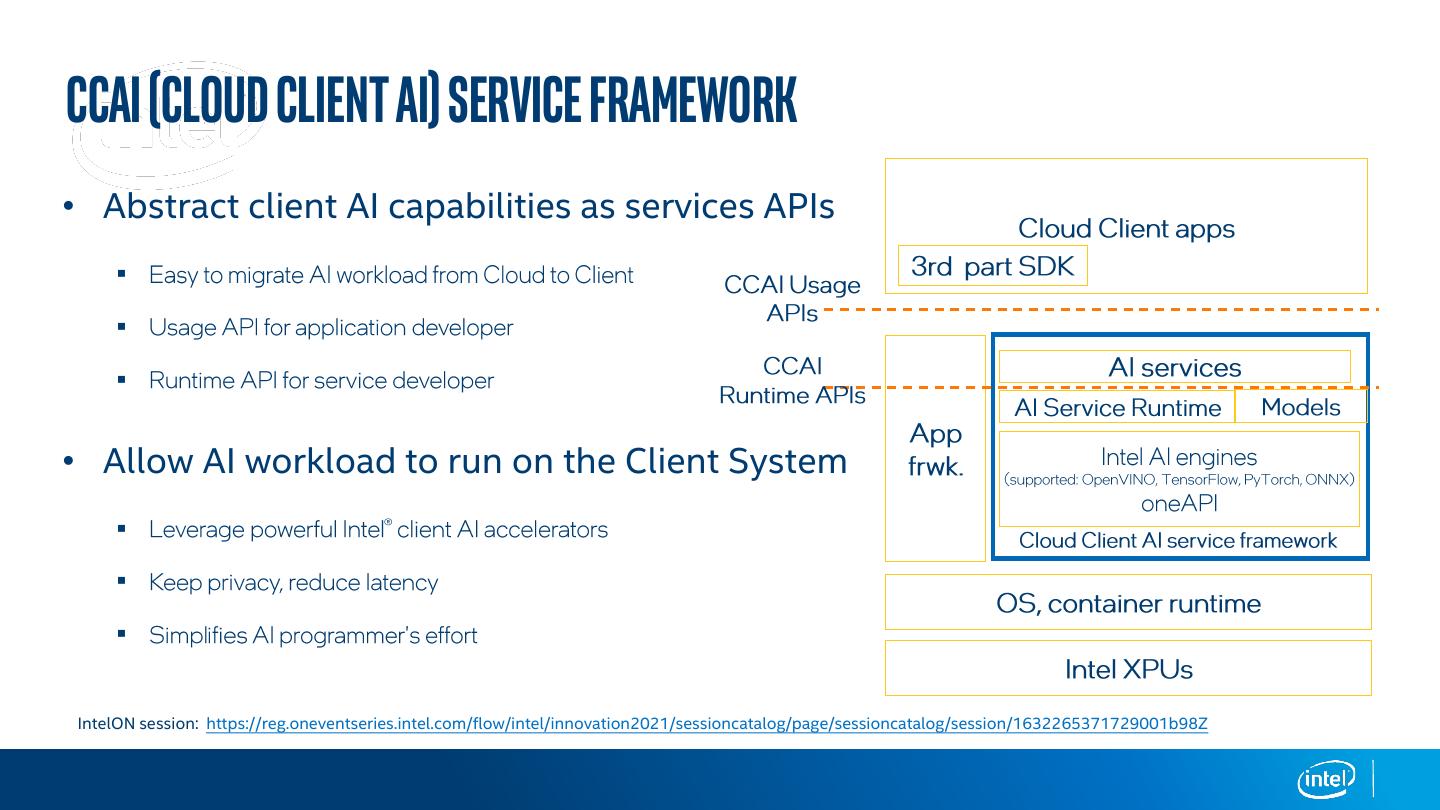

6 .CCAI (Cloud Client AI) Service Framework
• Abstract client AI capabilities as service APIs
• Allow AI workloads to run on the client system
IntelON session: https://reg.oneventseries.intel.com/flow/intel/innovation2021/sessioncatalog/page/sessioncatalog/session/1632265371729001b98Z

7 .CCAI usage API and Runtime API
• CCAI provides two levels of API, targeted at different developers: usage APIs for application developers and runtime APIs for service developers.
• Usage APIs (examples):
  ▪ fcgi_py_tts (for TTS usage)
  ▪ fcgi_live_asr (for ASR usage)
  ▪ fcgi_face_detection (for face detection usage)
  ▪ fcgi_classification (for image classification usage)
  ▪ fcgi_py_formula (for formula recognition usage)
• Runtime APIs:
  ▪ Image inference API: `enum irtStatusCode irt_infer_from_image(struct irtImageIOBuffers& tensorData, const std::string& modelFile, const enum irtInferenceEngine backendEngine, struct serverParams& remoteServerInfo);`
  ▪ Speech inference API: `enum irtStatusCode irt_infer_from_speech(const struct irtWaveData& waveData, std::string configurationFile, std::vector<char>& inferenceResult, const enum irtInferenceEngine backendEngine, struct serverParams& remoteServerInfo);`
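As a rough illustration of the application-developer level, the sketch below builds (but does not send) an HTTP POST for the `fcgi_classification` usage service. The base URL, the `/cgi-bin` path, and the `image` form field are assumptions made for illustration; the slides name only the service, so the real endpoint and parameters should be taken from the CCAI documentation.

```python
import base64
import urllib.parse
import urllib.request

# Hypothetical values: the slides name the service but not its URL or fields.
BASE_URL = "http://localhost:8080/cgi-bin"
SERVICE = "fcgi_classification"


def build_classification_request(image_bytes: bytes) -> urllib.request.Request:
    """Build a POST request carrying a base64-encoded image as form data."""
    form = {"image": base64.b64encode(image_bytes).decode("ascii")}
    body = urllib.parse.urlencode(form).encode("ascii")
    return urllib.request.Request(
        url=f"{BASE_URL}/{SERVICE}",
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


# Build the request only; nothing is sent over the network here.
request = build_classification_request(b"fake image bytes")
print(request.get_method(), request.full_url)
```

The point of the sketch is the shape of the interaction: the application needs only an HTTP client, not an inference framework.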

8 .CCAI usage API
• CCAI usage APIs provide ready-to-use AI usage functions while keeping the flexibility to customize the algorithms.
[Diagram: the same application code (//Logic A, //AI function, //Logic B) implemented two ways.]
▪ Using framework-specific DL APIs: find algorithm, set up the inference framework runtime, pre-processing, post-processing
▪ Using the usage API: one call for the AI function, with the (default) algorithms replaceable
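The idea of a ready-to-use function whose default algorithms can be replaced might be sketched as follows. Every name here (`classify`, `default_preprocess`, `toy_infer`, and so on) is hypothetical and stands in for whatever a real usage service does; no CCAI function is being called.

```python
from typing import Callable, List, Sequence

# All names here are illustrative; they are not CCAI functions.
Vector = List[float]


def default_preprocess(pixels: Sequence[int]) -> Vector:
    """Scale 8-bit pixel values into the range [0, 1]."""
    return [p / 255.0 for p in pixels]


def default_postprocess(scores: Vector) -> int:
    """Return the index of the highest score."""
    return max(range(len(scores)), key=scores.__getitem__)


def toy_infer(inputs: Vector) -> Vector:
    """Stand-in for model inference: two scores derived from the mean input."""
    mean = sum(inputs) / len(inputs)
    return [1.0 - mean, mean]


def classify(
    pixels: Sequence[int],
    preprocess: Callable[[Sequence[int]], Vector] = default_preprocess,
    postprocess: Callable[[Vector], int] = default_postprocess,
) -> int:
    """Usage-style entry point: one call, with replaceable default steps."""
    return postprocess(toy_infer(preprocess(pixels)))


# Default path: bright pixels score highest for class 1.
default_result = classify([250, 240, 255])

# Customized path: invert the image before inference, which flips the result.
inverted_result = classify(
    [250, 240, 255],
    preprocess=lambda px: [1.0 - p / 255.0 for p in px],
)
print(default_result, inverted_result)
```

The application code around the call (Logic A, Logic B) stays the same in both cases; only the swapped-in step changes.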

9 .CCAI runtime API
• CCAI runtime APIs provide inference functions for various task types, with underlying HW optimization.
[Diagram: the same application code (//Logic A, //AI function, //Logic B) implemented two ways.]
▪ Using framework-specific DL APIs: find algorithm, set up the inference framework runtime, pre-processing, post-processing, optimization
▪ Using the runtime API: the caller supplies the pre-processing and post-processing algorithms around the runtime API call
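A minimal Python sketch of this division of labor is shown below: the caller does its own pre- and post-processing, and a runtime-style function dispatches inference to a selected backend and returns a status code. It only mirrors the shape of the `irt_infer_from_image` declaration from the slides. The enum members, the backend registry, and the stub backend are assumptions, and no real inference is performed.

```python
from enum import Enum
from typing import Callable, Dict, List, Tuple


class StatusCode(Enum):
    # Hypothetical members; the slides show only the type name `irtStatusCode`.
    OK = 0
    UNSUPPORTED_BACKEND = 1


class Backend(Enum):
    # Hypothetical members; the slides show only `irtInferenceEngine`.
    OPENVINO = "openvino"
    ONNX = "onnx"


Tensor = List[float]

# Each registered backend takes (model_file, input) and returns an output tensor.
# The single entry below is a stub that doubles its input.
_BACKENDS: Dict[Backend, Callable[[str, Tensor], Tensor]] = {
    Backend.OPENVINO: lambda model_file, x: [v * 2.0 for v in x],
}


def infer_from_image(
    tensor: Tensor, model_file: str, backend: Backend
) -> Tuple[StatusCode, Tensor]:
    """Dispatch to the selected backend, reporting failure via a status code."""
    run = _BACKENDS.get(backend)
    if run is None:
        return StatusCode.UNSUPPORTED_BACKEND, []
    return StatusCode.OK, run(model_file, tensor)


# The caller owns pre- and post-processing around the runtime call.
pixels = [0, 128, 255]
inputs = [p / 255.0 for p in pixels]                      # pre-processing
status, outputs = infer_from_image(inputs, "model.xml", Backend.OPENVINO)
best = (
    max(range(len(outputs)), key=outputs.__getitem__)     # post-processing
    if status is StatusCode.OK
    else None
)
print(status.name, best)
```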

10 .Intel CCAI (Cloud Client AI) Service Framework – Case Study
Current popular inference engines (e.g., OpenVINO, PyTorch) set a high bar for programmers:
▪ Must have enough knowledge of AI programming (matrices, vectors, input/output blob structure, pre/post-processing, models …)
▪ Must know how and where to prepare algorithm model(s)
▪ Must program with framework-specific languages and APIs (OpenVINO/PyTorch)

11 .Intel CCAI (Cloud Client AI) Service Framework – Case Study (Cont’d)

12 .E2E usage APIs (as examples)
CCAI 1.3 provides two end-to-end user-scenario APIs:
• Smart photo search reference implementation
• Student pose estimation reference implementation
These are ready-to-use, scenario-driven reference solutions that:
• Assemble multiple models
• Hide complicated pre/post-processing and glue code
• Provide straightforward APIs

13 .CCAI FEATURES LIST (To CCAI 1.3)
• Provide RESTful and gRPC AI usage (sample) APIs for application developers
  ▪ vision, video, audio, text, scenarios
• Provide Python and C++ APIs for service developers
• Multiple inference backend support
  ▪ OpenVINO, PyTorch, TF, ONNX, PaddlePaddle
• Support inference policy (local, remote, by device)
• Provide a service health monitor mechanism
• Provide a video pipeline manager to easily construct video processing pipelines
• E2E reference solutions for quick customization
  ▪ Smart photo search reference implementation
  ▪ Student pose estimation reference implementation
• Authentication support
• DNS Interceptor
• Containerized deployment
• Windows support via WSL
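To make the "inference policy (local, remote, by device)" item more concrete, here is one way such a policy could be resolved into a run target. This is a sketch under stated assumptions: the `Policy` fields, the `resolve_target` helper, and especially the fallback from an unhealthy local runtime to a remote server are this example's own choices, not documented CCAI behavior or configuration keys.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Policy:
    """Hypothetical policy record; field names are not CCAI configuration keys."""

    location: str = "local"            # "local" or "remote"
    device: str = "CPU"                # preferred device when running locally
    remote_url: Optional[str] = None   # remote inference server, if any


def resolve_target(policy: Policy, local_healthy: bool) -> str:
    """Pick where to run inference.

    Local is used when requested and healthy; otherwise a configured remote
    server is used; otherwise the request cannot be served.
    """
    if policy.location == "local" and local_healthy:
        return f"local:{policy.device}"
    if policy.remote_url:
        return f"remote:{policy.remote_url}"
    raise RuntimeError("no usable inference target")


gpu_policy = Policy(location="local", device="GPU", remote_url="http://server:8080")
print(resolve_target(gpu_policy, local_healthy=True))
print(resolve_target(gpu_policy, local_healthy=False))
```

In a design like this, the `local_healthy` flag is where a health monitor would plug in, which keeps the monitoring and the routing decision separate.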

14 .CCAI Latest Release
• Code: https://github.com/intel/cloud-client-ai-service-framework
• Documentation: https://intel.github.io/cloud-client-ai-service-framework/

15 .Q&A
• Suggestions / proposals / feature requests
• Need help using CCAI on Intel IA platforms