- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 视频嵌入链接 文档嵌入链接

- 复制

- 微信扫一扫分享

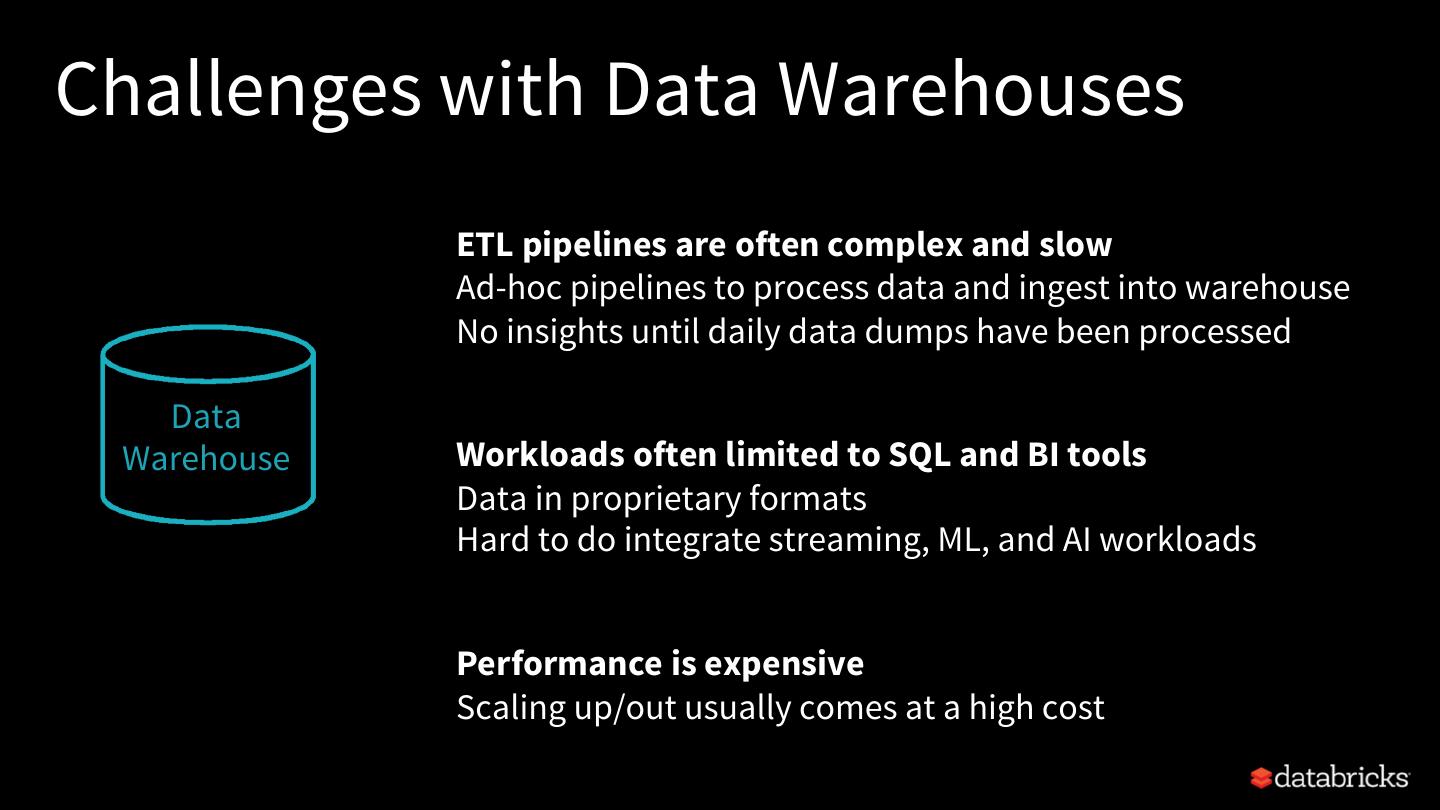

- 已成功复制到剪贴板

Databricks Delta: A Unified Data Management System for Real-time Big Data

越来越多的数据量和种类以及更快地从中获得价值的迫切需要为数据管理带来了重大挑战。原始业务数据需要被吸收、整理和优化,以使数据科学家和业务分析师能够回答他们复杂的查询和调查。传统的建筑通常需要结合

-用于低延迟接收的流媒体系统,

-用于廉价、大规模、长期存储和

-数据仓库需要数据湖无法提供的高并发性和可靠性(但成本更高)。

跨各种存储系统构建解决方案会导致复杂且容易出错的ETL数据管道。在Databricks,我们在所有规模的组织中都看到了这些问题。为了从根本上简化数据管理,我们构建了Databricks Delta,这是一种新型的统一数据管理系统,它提供了

1.数据仓库的可靠性和性能:delta支持事务性插入、删除、升迁和查询;这可以从数百个应用程序实现可靠的并发访问。此外,delta索引、压缩和缓存数据,从而比在Parquet上运行的apache spark获得高达100倍的性能。

2.流系统的速度:delta以事务方式在几秒钟内合并新数据,并使这些数据立即可用于使用流或批处理的高性能查询。

3.数据湖的规模和成本效率:delta以开放式Apache拼花格式将数据存储在类似S3的云blob存储中。从这些系统中,它继承了低成本、巨大的可扩展性、对并发访问的支持以及高读写吞吐量。

使用delta,组织不再需要在存储系统属性之间进行权衡。

展开查看详情

1 .Databricks Delta A Unified Data Management Platform for Real-time Big Data Tathagata “TD” Das @tathadas XLDB 2019 4th April, Stanford

2 .About Me Started Streaming project in AMPLab, UC Berkeley Currently focused on building Structured Streaming Senior Engineer on the StreamTeam @

3 .Building Data Analytics platform is hard ???? Data streams Insights

4 .Traditional Data Warehouses ETL SQL Data streams Data Warehouse Insights

5 .Challenges with Data Warehouses ETL pipelines are often complex and slow Ad-hoc pipelines to process data and ingest into warehouse No insights until daily data dumps have been processed Data Warehouse Workloads often limited to SQL and BI tools Data in proprietary formats Hard to do integrate streaming, ML, and AI workloads Performance is expensive Scaling up/out usually comes at a high cost

6 .Data Lakes SQL scalable ETL ML, AI streaming Data streams Data Lake Insights

7 .Data Lakes + Spark = Awesome! STRUCTURED SQL, ML, STREAMING STREAMING Data streams Data Lake Insights The 1st Unified Analytics Engine

8 .Advantages of Data Lakes ETL pipelines are complex and slow simpler and fast Unified Spark API between batch and streaming simplifies ETL Raw unstructured data available as structured data in minutes Workloads limited not limited anything! Data Lake Data in files with open formats Integrate with data processing and BI tools Integrate with ML and AI workloads and tools Performance is expensive cheaper Easy and cost-effective to scale out compute and storage

9 .Challenges of Data Lakes in practice

10 .Challenges of Data Lakes in practice ETL @

11 . Evolution of a Cutting-Edge Data Pipeline Events ? Streaming Analytics Data Lake Reporting

12 . Evolution of a Cutting-Edge Data Pipeline Events Streaming Analytics Data Lake Reporting

13 . Challenge #1: Historical Queries? λ-arch 1 λ-arch Events 1 1 λ-arch Streaming Analytics Data Lake Reporting

14 . Challenge #2: Messy Data? λ-arch 1 λ-arch Events 1 2 Validation 1 λ-arch Streaming Analytics 2 Validation Data Lake Reporting

15 . Challenge #3: Mistakes and Failures? λ-arch 1 λ-arch Events 1 2 Validation 1 λ-arch Streaming 3 Reprocessing Analytics 2 Validation Partitioned 3 Reprocessing Data Lake Reporting

16 . Challenge #4: Query Performance? λ-arch 1 λ-arch Events 1 2 Validation 1 λ-arch Streaming 3 Reprocessing Analytics 4 Compaction 2 Validation Partitioned 2 4 Scheduled to Avoid Compaction Reprocessing Data Lake 4 Compact Reporting Small Files

17 .Challenges with Data Lakes: Reliability Failed production jobs leave data in corrupt state requiring tedious recovery Lack of consistency makes it almost impossible to mix appends, deletes, upserts and get consistent reads Lack of schema enforcement creates inconsistent and low quality data

18 .Challenges with Data Lakes: Performance Too many small or very big files - more time opening & closing files rather than reading content (worse with streaming) Partitioning aka “poor man’s indexing”- breaks down when data has many dimensions and/or high cardinality columns Neither storage systems, nor processing engines are great at handling very large number of subdir/files

19 .THE GOOD THE GOOD OF DATA WAREHOUSES OF DATA LAKES • Pristine Data • Massive scale out • Transactional Reliability • Open Formats • Fast SQL Queries • Mixed workloads

20 . DELTA The The The SCALE RELIABILITY & LOW-LATENCY of data lake PERFORMANCE of streaming of data warehouse

21 . Scalable storage DELTA = + Transactional log

22 . DELTA Scalable storage pathToTable/ +---- 000.parquet table data stored as Parquet files +---- 001.parquet on HDFS, AWS S3, Azure Blob Stores +---- 002.parquet + ... | Transactional log +---- _delta_log/ sequence of metadata files to track +---- 000.json operations made on the table +---- 001.json ... stored in scalable storage along with table

23 .Log Structured Storage | INSERT actions +---- _delta_log/ Changes to the table Add 001.parquet are stored as ordered, +---- 000.json Add 002.parquet atomic commits +---- 001.json UPDATE actions Remove 001.parquet Each commit is a set of ... actions file in directory Remove 002.parquet _delta_log Add 003.parquet

24 .Log Structured Storage | INSERT actions Readers read the+---- log in _delta_log/ Add 001.parquet atomic units thus reading +---- 000.json Add 002.parquet consistent snapshots +---- 001.json UPDATE actions Remove 001.parquet ... readers will read Remove 002.parquet either [001+002].parquet Add 003.parquet or 003.parquet and nothing in-between

25 .Mutual Exclusion Concurrent writers 000.json need to agree on the Writer 1 Writer 2 001.json order of changes 002.json New commit files must be created mutually only one of the writers trying exclusively to concurrently write 002.json must succeed

26 .Challenges with cloud storage Different cloud storage systems have different semantics to provide atomic guarantees Cloud Storage Atomic Atomic Solution Files Put if Visibility absent Azure Blob Store, ✘ Write to temp file, rename to Azure Data Lake final file if not present AWS S3 ✘ Separate service to perform all writes directly (single writer)

27 .Concurrency Control Pessimistic Concurrency Avoid wasted work Block others from writing anything Hold lock, write data files, commit to log Distributed locks Optimistic Concurrency Mutual exclusion is enough! Assume it’ll be okay and write data files Try to commit to the log, fail on conflict Breaks down if there a lot Enough as write concurrency is usually low of conflicts

28 .Solving Conflicts Optimistically 1. Record start version User 1 User 2 2. Record reads/writes R: A 000000.json R: A 3. If someone else wins, W: B 000001.json W: C check if anything you read has changed. 000002.json 4. Try again. new file C does not conflict with new file B, so retry and commit successfully as 2.json

29 .Solving Conflicts Optimistically 1. Record start version User 1 User 2 2. Record reads/writes R: A 000000.json R: A 3. If someone else wins, W: A,B 000001.json W: A,C check if anything you read has changed. 4. Try again. Deletions of file A by user 1 conflicts with deletion by user 2, user 2 operation fails