- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

Percona XtraDB Cluster Tutorial

以前从未使用过Percona Xtradb群集?加入这个半天的教程,我们将向您介绍全功能PerconaXtradb集群的概念。

在本教程中,我们将向您展示如何使用proxysql安装percona xtradb集群,并使用pmm监控它。

我们还将讨论引导、IST、SST、认证、常见故障情况和在线模式更改等主题。

整个教程非常实用。我们将提供实例供您在自己的笔记本电脑上使用。您将与导师一起执行所有命令,以获得第一手经验。

展开查看详情

1 .Percona XtraDB Cluster Tutorial http://www.percona.com/training/

2 . Table of Contents 1. Overview & Setup 6. Incremental State Transfer 2. Application HA 7. Node Failure Scenarios 3. Monitoring Galera with 8 Avoiding SST PMM 4. Galera Replication 9. Tuning Replication Internals 5. Online Schema Changes © 2011 - 2018 Percona, Inc. 2 / 107

3 .XtraDB Cluster Tutorial OVERVIEW © 2011 - 2018 Percona, Inc. 3 / 107

4 . Resources http://www.percona.com/doc/percona-xtradb-cluster/5.7/ http://www.percona.com/blog/category/percona-xtradb- cluster/ http://galeracluster.com/documentation- webpages/reference.html © 2011 - 2018 Percona, Inc. 4 / 107

5 . Lab Instructions Get a USB stick (there are only 5) Copy the 2018-pxc-tutorial.ova to your laptop Copy VirtualBox installer to your laptop, if necessary EJECT USB STICK and had over to someone else Install VirtualBox, if necessary Open VirtualBox and import the OVA Start all 4 virtual machines Hide/Minimize the console windows; you will not use them Windows users - you need an SSH client (download one now!) © 2011 - 2018 Percona, Inc. 5 / 107

6 . Standard Replication Replication is a mechanism for recording a series of changes on one MySQL server and applying the same changes to one, or more, other MySQL server. The source is the "master" and its replica is a "slave." © 2011 - 2018 Percona, Inc. 6 / 107

7 . Galera Replication Developed by Codership Synchronous multi-master database cluster Write to any node; every node stays in sync. © 2011 - 2018 Percona, Inc. 7 / 107

8 . Quick Tutorial Setup 3 unconfigured MySQL Servers (mysql1, mysql2, mysql3) app will run sysbench Sample application, simulating activity © 2011 - 2018 Percona, Inc. 8 / 107

9 . Verify Connectivity and Setup Connect to all VMs Use separate terminal windows; we will be switching between them frequently. Username and password is vagrant DO NOT USE VIRTUAL BOX CONSOLE WINDOWS! ssh -p 2200 vagrant@localhost ssh -p 2201 vagrant@localhost ssh -p 2202 vagrant@localhost ssh -p 2222 vagrant@localhost © 2011 - 2018 Percona, Inc. 9 / 107

10 . Verify Connectivity and Setup (cont) Be sure to sudo to root Stop MySQL if running on all nodes # systemctl stop mysql When you restart the Virtual Machines, they auto-start MySQL and create a default setup Erase MySQL's $DATADIR # rm -rf /var/lib/mysql/* © 2011 - 2018 Percona, Inc. 10 / 107

11 . Configure mysql3 for PXC /etc/percona-xtradb-cluster.conf.d/wsrep.cnf ... wsrep_provider = /usr/lib64/galera3/libgalera_smm.so wsrep_cluster_address = gcomm://mysql1,mysql2,mysql3 wsrep_node_address = 192.168.70.30 wsrep_cluster_name = mycluster wsrep_node_name = mysql3 wsrep_sst_auth = "sstuser:s3cretPass" Make sure any of the above are NOT COMMENTED! Start mysql3 with: mysql3# systemctl start mysql@bootstrap © 2011 - 2018 Percona, Inc. 11 / 107

12 . Checking Cluster State MySQL 5.7 generates random root password. Find it and reset it. $ grep password /var/log/mysqld.log ... password is generated for root@localhost: :#xfgy*aD9ea $ mysql -e "SET PASSWORD='Percona1234#'" -uroot -p \ --connect-expired-password Persist password in ~/.my.cnf on all 3 nodes to make things easier $ cat ~/.my.cnf [client] password="Percona1234#" © 2011 - 2018 Percona, Inc. 12 / 107

13 . Checking Cluster State Can you tell: How many nodes are in the cluster? Is the cluster Primary? Use 'myq_status'* to check the state of Galera on mysql3: mysql3# /usr/local/bin/myq_status wsrep Check status counters manually mysql> SHOW GLOBAL STATUS LIKE 'wsrep%' (*) https://github.com/jayjanssen/myq-tools/releases/tag/1.0.4 © 2011 - 2018 Percona, Inc. 13 / 107

14 . mysql2's Config /etc/percona-xtradb-cluster.conf.d/wsrep.cnf ... wsrep_provider = /usr/lib64/galera3/libgalera_smm.so wsrep_cluster_address = gcomm://mysql1,mysql2,mysql3 wsrep_node_address = 192.168.70.20 wsrep_cluster_name = mycluster wsrep_node_name = mysql2 wsrep_sst_auth = "sstuser:s3cretPass" Don't start MySQL yet! © 2011 - 2018 Percona, Inc. 14 / 107

15 . What's going to happen? Don't start MySQL yet! When mysql2 is started, what's going to happen? How does mysql2 get a copy of the dataset? Can it use the existing data it already has? Do we have to bootstrap mysql2? © 2011 - 2018 Percona, Inc. 15 / 107

16 . State Snapshot Transfer (SST) Transfer a full backup of an existing cluster member (donor) to a new node entering the cluster (joiner). We configured our SST method to use ‘xtrabackup-v2'. © 2011 - 2018 Percona, Inc. 16 / 107

17 . Additional Xtrabackup SST Setup What about that sst user in your wsrep.cnf? [mysqld] ... wsrep_sst_auth = "sstuser:s3cretPass" ... User/Grants need to be added for Xtrabackup mysql3> CREATE USER 'sstuser'@'localhost' IDENTIFIED BY 's3cretPass'; mysql3> GRANT PROCESS, RELOAD, LOCK TABLES, REPLICATION CLIENT ON *.* TO 'sstuser'@'localhost'; The good news is that once you get things figured out the first time, it's typically very easy to get an SST the first time on subsequent nodes. © 2011 - 2018 Percona, Inc. 17 / 107

18 . Up and Running mysql2 Watch mysql3's error log for progress mysql3# tail -f /var/log/mysqld.log Now you can start mysql2 mysql2# systemctl start mysql © 2011 - 2018 Percona, Inc. 18 / 107

19 . mysql1's Config ... wsrep_provider = /usr/lib64/galera3/libgalera_smm.so wsrep_cluster_address = gcomm://mysql1,mysql2,mysql3 wsrep_node_address = 192.168.70.10 wsrep_cluster_name = mycluster wsrep_node_name = mysql1 wsrep_sst_auth = "sstuser:s3cretPass" Now you can start mysql1 mysql1# systemctl start mysql © 2011 - 2018 Percona, Inc. 19 / 107

20 .Where are We Now? © 2011 - 2018 Percona, Inc. 20 / 107

21 .XtraDB Cluster Tutorial APPLICATION HIGH AVAILABILITY © 2011 - 2018 Percona, Inc. 21 / 107

22 .ProxySQL Architecture © 2011 - 2018 Percona, Inc. 22 / 107

23 . Setting up ProxySQL Create monitor user mysql3> CREATE USER 'monitor'@'%' IDENTIFIED BY 'monit0r'; Create Cluster Admin User mysql3> CREATE USER 'cuser'@'%' IDENTIFIED BY 'cpass'; mysql3> GRANT ALL ON *.* TO 'cuser'@'%'; © 2011 - 2018 Percona, Inc. 23 / 107

24 . Setting up ProxySQL Create monitor user mysql3> CREATE USER 'monitor'@'%' IDENTIFIED BY 'monit0r'; Create Cluster Admin User mysql3> CREATE USER 'cuser'@'%' IDENTIFIED BY 'cpass'; mysql3> GRANT ALL ON *.* TO 'cuser'@'%'; Do we have to create these users on the other nodes? © 2011 - 2018 Percona, Inc. 23 / 107

25 . Configure ProxySQL Create and edit the file app# nano /etc/proxysql-admin.cnf export PROXYSQL_USERNAME="admin" export PROXYSQL_PASSWORD="admin" export PROXYSQL_HOSTNAME="localhost" export PROXYSQL_PORT="6032" export CLUSTER_USERNAME="cuser" export CLUSTER_PASSWORD="cpass" export CLUSTER_HOSTNAME="mysql3" export CLUSTER_PORT="3306" export MONITOR_USERNAME="monitor" export MONITOR_PASSWORD="monit0r" export CLUSTER_APP_USERNAME="sbuser" export CLUSTER_APP_PASSWORD="sbPass1234#" export WRITE_HOSTGROUP_ID="10" export READ_HOSTGROUP_ID="11" export MODE="singlewrite" export HOST_PRIORITY_FILE="/var/lib/proxysql/host_priority.conf" © 2011 - 2018 Percona, Inc. 24 / 107

26 . Start ProxySQL Start ProxySQL with the following command: app# systemctl restart proxysql app# proxysql-admin --config-file=/etc/proxysql-admin.cnf \ --write-node=192.168.70.30:3306 --enable © 2011 - 2018 Percona, Inc. 25 / 107

27 . Start ProxySQL Start ProxySQL with the following command: app# systemctl restart proxysql app# proxysql-admin --config-file=/etc/proxysql-admin.cnf \ --write-node=192.168.70.30:3306 --enable mysql3 is the writer node Grant all privileges to app user mysql3> CREATE DATABASE sysbench; mysql3> GRANT ALL ON sysbench.* TO 'sbuser'@'192.%'; © 2011 - 2018 Percona, Inc. 25 / 107

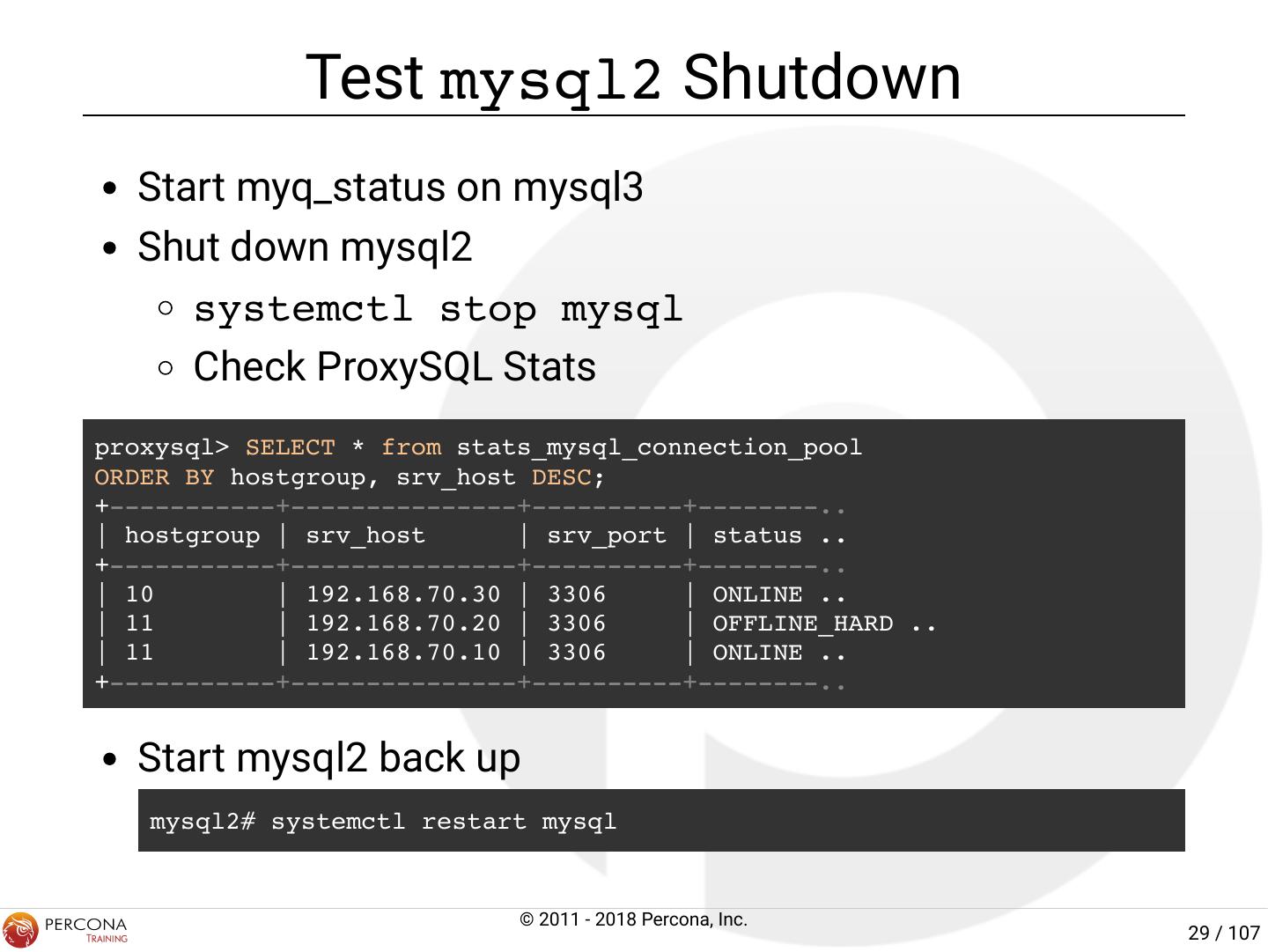

28 . Check ProxySQL Stats app# mysql -P6032 -uadmin -padmin -h 127.0.0.1 proxysql> SELECT * FROM stats_mysql_connection_pool ORDER BY hostgroup, srv_host DESC; +-----------+---------------+----------+--------+...------------+ | hostgroup | srv_host | srv_port | status |... Latency_us | +-----------+---------------+----------+--------+...------------+ | 10 | 192.168.70.30 | 3306 | ONLINE |... 461 | | 11 | 192.168.70.20 | 3306 | ONLINE |... 820 | | 11 | 192.168.70.10 | 3306 | ONLINE |... 101 | +-----------+---------------+----------+--------+...------------+ © 2011 - 2018 Percona, Inc. 26 / 107

29 . Prepare Test Data Create some test data for our sample application app$ /usr/local/bin/prepare_sysbench.sh sysbench 1.0.14 (using bundled LuaJIT 2.1.0-beta2) Initializing worker threads... Creating table 'sbtest1'... Inserting 1000000 records into 'sbtest1' Creating a secondary index on 'sbtest1'... © 2011 - 2018 Percona, Inc. 27 / 107