Networking Products Based on DPDK Integrated with Kubernetes Container Networking Contiv

1. DPDK Based Networking Products Enhance and Expand Container Networking

zhouzijiang@jd.com, Jingdong Digital Technology

2. Kubernetes Overview

• master: kube-apiserver, kube-controller-manager, kube-scheduler
• node1, node2: each runs kubelet and kube-proxy and hosts pods (pod1 to pod6 in the diagram)
• Pod to Pod communication
• Pod to Service communication

3. Flannel Overview

• node1 (eth0 192.168.0.1): pod1 eth0 10.10.10.2/24, pod2 eth0 10.10.10.3/24; bridge cni0 10.10.10.1/24; vxlan flannel.1 10.10.10.0/32
• node2 (eth0 192.168.0.2): pod3 eth0 10.10.20.2/24, pod4 eth0 10.10.20.3/24; bridge cni0 10.10.20.1/24; vxlan flannel.1 10.10.20.0/32
• The two nodes are connected through the underlying network.

VXLAN encapsulation (pod1 to pod4):

Outer Ethernet header | Outer IP header (src: 192.168.0.1, dst: 192.168.0.2) | Outer UDP header | Vxlan header | Inner Ethernet header | Inner IP header (src: 10.10.10.2, dst: 10.10.20.3) | Payload
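To make the cost of the encapsulation above concrete, here is a minimal Python sketch that builds the 8-byte VXLAN header and adds up the outer headers. It assumes an IPv4 underlay without VLAN tags or IP options; the function names and the VNI value 1 are chosen for illustration and do not come from the slides.

```python
import struct

# Header sizes in bytes (IPv4 underlay, no VLAN tag, no IP options).
ETHERNET_HEADER = 14
IPV4_HEADER = 20
UDP_HEADER = 8
VXLAN_HEADER = 8


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header described in RFC 7348."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    flags = 0x08  # "I" flag: the VNI field is valid
    # Layout: flags (1 byte), reserved (3), VNI (3), reserved (1).
    return struct.pack("!B3s3sB", flags, b"\x00" * 3, vni.to_bytes(3, "big"), 0)


def encapsulation_overhead() -> int:
    """Bytes added on the wire in front of the inner Ethernet frame."""
    return ETHERNET_HEADER + IPV4_HEADER + UDP_HEADER + VXLAN_HEADER


def inner_mtu(underlay_mtu: int) -> int:
    """Largest inner IP packet that fits into one underlay IP packet.

    The outer IP, UDP and VXLAN headers plus the inner Ethernet header
    all count against the underlay IP MTU.
    """
    return underlay_mtu - IPV4_HEADER - UDP_HEADER - VXLAN_HEADER - ETHERNET_HEADER


if __name__ == "__main__":
    print(vxlan_header(1).hex())
    print(encapsulation_overhead(), inner_mtu(1500))
```

Under these assumptions every pod-to-pod packet that crosses nodes carries 50 extra bytes, which is part of the overhead the next slide refers to.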

4. (Diagram: pod1 on node1, pod2 on node2, and non-Kubernetes nodes)

1. When pods communicate with endpoints inside the k8s cluster, packets must be encapsulated.
2. When pods communicate with endpoints outside the k8s cluster, packets must be masqueraded.

Both add overhead. They also cannot meet some demands, e.g. a pod that wants to access a whitelist-enabled application outside the k8s cluster (after masquerading, the application sees the node's address, not the pod's).

Our goals:
• no encapsulation
• no network address translation
• pods can be reached directly from everywhere

Our choice:
• Contiv in layer3 routing mode

5. Contiv Overview

• OVS to forward pod packets
• BGP to publish pod IPs

Routes on the layer3 switch:
• 10.10.0.1 nexthop 192.168.1.1
• 10.10.0.2 nexthop 192.168.1.1
• 10.10.0.3 nexthop 192.168.1.2
• 10.10.0.4 nexthop 192.168.1.2

Each node runs netplugin with bgp, an inb01 interface, and ovs:
• node with eth0 192.168.1.1: ovs ports vvport1 and vvport2; pod1 eth0 10.10.0.1/24, pod2 eth0 10.10.0.2/24
• node with eth0 192.168.1.2: ovs ports vvport1 and vvport2; pod3 eth0 10.10.0.3/24, pod4 eth0 10.10.0.4/24

6. Contiv Implementation Detail

1. The user creates a new pod in the k8s cluster.
2. netplugin requests a free IP, 10.10.0.1, from netmaster.
3. netplugin creates a veth pair, such as vport1 and vvport1.
4. netplugin moves interface vport1 to the pod network namespace and renames it to eth0.
5. netplugin sets the IP and route in the pod network namespace.
6. netplugin adds vvport1 to ovs.
7. netplugin publishes 10.10.0.1/32 to its BGP neighbor, the switch.

OVS rules on the node:
• nw_dst=10.10.0.1 output:vvport1
• nw_dst=10.10.0.2 output:vvport2

Diagram: layer3 switch; node with eth0 192.168.1.1 running netplugin, bgp, inb01 and ovs (ports vvport1, vvport2); pod1 eth0 10.10.0.1/24, pod2 eth0 10.10.0.2/24.
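The bookkeeping behind steps 2, 6 and 7 can be modelled in a few lines of Python. This is only an illustrative sketch, not Contiv's code: the `Node` class and its fields are hypothetical, the IP pool is kept on the node instead of in netmaster, and the veth and namespace work of steps 3 to 5 is left out.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """Hypothetical in-memory model of one node's netplugin state."""
    host_ip: str
    free_ips: list                                   # stand-in for netmaster's pool
    flows: dict = field(default_factory=dict)        # pod IP -> OVS port
    bgp_routes: list = field(default_factory=list)   # (prefix, next hop)

    def add_pod(self, index: int) -> str:
        pod_ip = self.free_ips.pop(0)                # step 2: get a free IP
        ovs_port = f"vvport{index}"                  # step 3: host side of the veth pair
        self.flows[pod_ip] = ovs_port                # step 6: port added to ovs
        # step 7: announce a host route for the pod, next hop is this node
        self.bgp_routes.append((f"{pod_ip}/32", self.host_ip))
        return pod_ip

    def flow_rules(self) -> list:
        """Render the flow table in the notation used on the slide."""
        return [f"nw_dst={ip} output:{port}" for ip, port in self.flows.items()]


if __name__ == "__main__":
    node = Node("192.168.1.1", ["10.10.0.1", "10.10.0.2"])
    node.add_pod(1)
    node.add_pod(2)
    print(node.flow_rules())
    print(node.bgp_routes)
```

With the two pods from the slide, the model yields one flow rule and one /32 route per pod, which matches the rules and switch routes shown in the diagrams.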

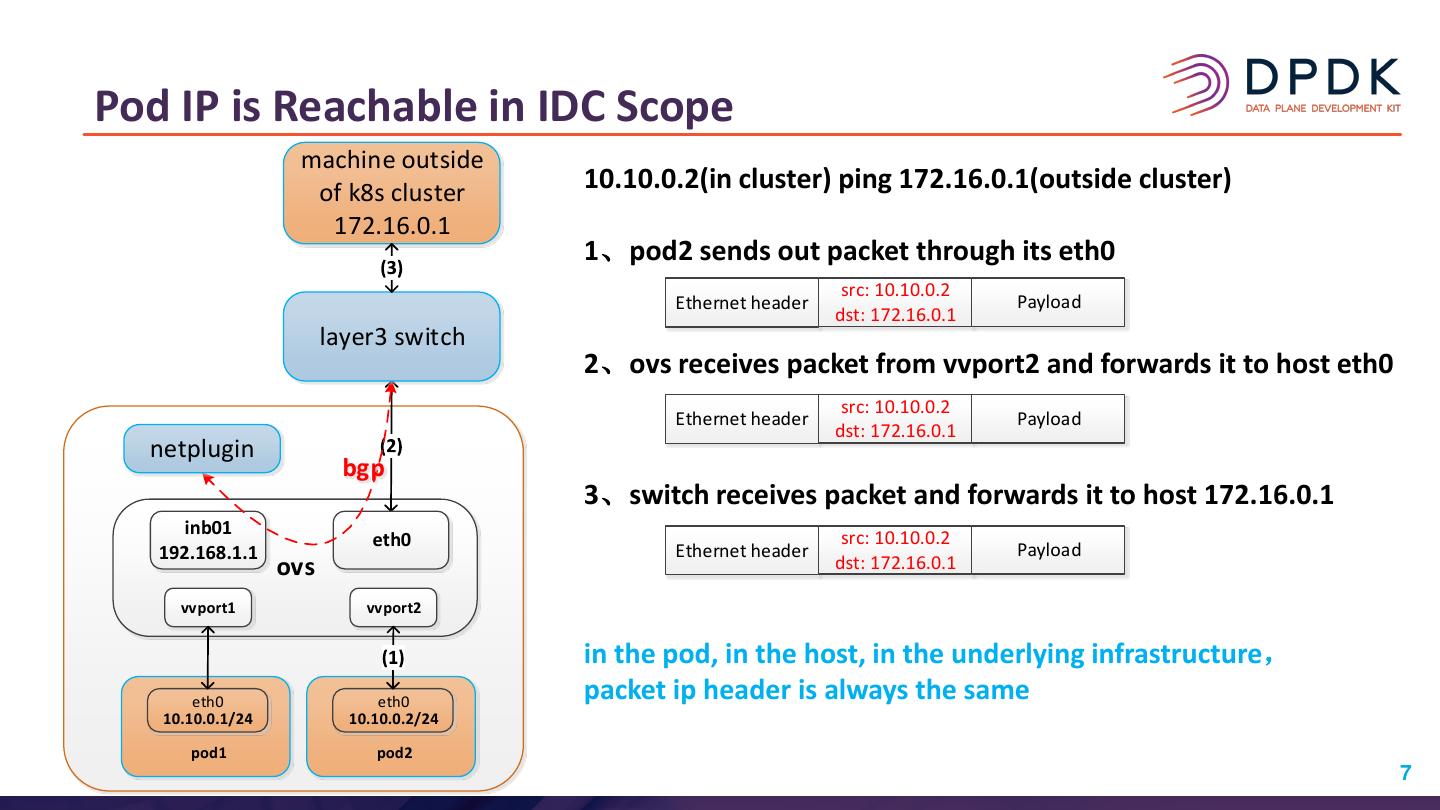

7. Pod IP is Reachable in IDC Scope

Example: 10.10.0.2 (in cluster) pings 172.16.0.1 (a machine outside of the k8s cluster).

1. pod2 sends out the packet through its eth0: Ethernet header | src: 10.10.0.2, dst: 172.16.0.1 | Payload
2. ovs receives the packet from vvport2 and forwards it to host eth0: Ethernet header | src: 10.10.0.2, dst: 172.16.0.1 | Payload
3. The switch receives the packet and forwards it to host 172.16.0.1: Ethernet header | src: 10.10.0.2, dst: 172.16.0.1 | Payload

In the pod, in the host, and in the underlying infrastructure, the packet's IP header is always the same.

Diagram: layer3 switch; node with eth0 192.168.1.1 running netplugin, bgp, inb01 and ovs (ports vvport1, vvport2); pod1 eth0 10.10.0.1/24, pod2 eth0 10.10.0.2/24.

8. Contiv Optimization

1. Support multiple BGP neighbors.
2. Reduce the number of OVS rules on each node from the order of the cluster's size to the order of the node's size.
3. Remove the DNS and load balance modules from netplugin.
4. Add support for non-Docker container runtimes, e.g. containerd.
5. Add IPv6 support.

9. Load Balance: Native KubeProxy

• Control flow: kube-apiserver (services, endpoints) to kube-proxy on each node, which programs iptables
• Data flow: service traffic passes through iptables on the nodes and crosses the layer3 switch
• Node interfaces in the diagram: eth0 with sub-interfaces eth0.100 and eth0.200, inb01, vvport1, vvport2
• Pods: pod1 eth0 10.10.0.1/24, pod2 eth0 10.10.0.2/24, pod3 eth0 10.10.0.3/24

10. Load Balance: DPDK-SLB

• Kube-Proxy is not needed on any node.
• SLB-Controller watches services and endpoints in K8S and dynamically sends VS and RS info to DPDK-SLB.

Diagram:
• Control flow: kube-apiserver (services, endpoints) to slb-controller to the DPDK-SLB cluster
• Data flow: service traffic goes through the layer3 switch and the DPDK-SLB cluster to the pods
• Node interfaces: eth0 with sub-interfaces eth0.100 and eth0.200, inb01, vvport1, vvport2
• Pods: pod1 eth0 10.10.0.1/24, pod2 eth0 10.10.0.2/24, pod3 eth0 10.10.0.3/24
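As a rough illustration of what "sends VS and RS info" could involve, the Python sketch below turns a snapshot of services and endpoints into virtual-server records and computes what changed between two snapshots. The slides do not show the controller's data model or its API to DPDK-SLB, so `VirtualServer`, `build_virtual_servers`, `changes` and the service address 10.20.0.10 are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VirtualServer:
    """Hypothetical VS record: one service address and its real servers."""
    vip: str
    vport: int
    real_servers: tuple  # sorted tuple of (pod_ip, port)


def build_virtual_servers(services: dict, endpoints: dict) -> list:
    """services: name -> (service_ip, port); endpoints: name -> [(pod_ip, port)]."""
    result = []
    for name, (vip, vport) in sorted(services.items()):
        real_servers = tuple(sorted(endpoints.get(name, [])))
        result.append(VirtualServer(vip, vport, real_servers))
    return result


def changes(old: list, new: list) -> tuple:
    """Return (to_add_or_update, to_remove) between two snapshots."""
    old_map = {(vs.vip, vs.vport): vs for vs in old}
    new_map = {(vs.vip, vs.vport): vs for vs in new}
    upsert = [vs for key, vs in new_map.items() if old_map.get(key) != vs]
    remove = [vs for key, vs in old_map.items() if key not in new_map]
    return upsert, remove


if __name__ == "__main__":
    services = {"web": ("10.20.0.10", 80)}  # hypothetical service IP
    before = build_virtual_servers(services, {"web": [("10.10.0.1", 8080)]})
    after = build_virtual_servers(
        services, {"web": [("10.10.0.1", 8080), ("10.10.0.3", 8080)]}
    )
    print(changes(before, after))
```

Sending only the difference keeps the load balancer's configuration in step with pod churn without reloading every virtual server on each event.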

11. DPDK-SLB: Control Plane

• SLB-Daemon: the core process, which does load balancing and full NAT
• SLB-Agent monitors and configures SLB-Daemon
• OSPFD publishes service subnets to the layer3 switch
• The admin core configures VS and RS info to the worker cores
• The KNI core forwards OSPF packets to the kernel; the kernel then sends them to OSPFD
• Worker cores do the load balancing

All data (config data, session data, local addrs) is per CPU, fully parallelizing packet processing.

Diagram: slb-controller above slb-agent and ospfd; slb-daemon with cores admin, worker_1, worker_2, ..., worker_n and kni; each worker core holds its own config.

12. DPDK-SLB: OSPF Neighbor

• OSPF uses multicast address 224.0.0.5
• Flow Director: destination IP 224.0.0.5 is bound to queue_x
• A dedicated KNI core processes OSPF packets
• OSPFD publishes service subnets to the layer3 switch

Diagram: layer3 switch connected to eth0; NIC queues queue_1, queue_2, ..., queue_n served by worker_1, worker_2, ..., worker_n, and queue_x served by the kni core; slb-agent and ospfd above slb-daemon.

13. DPDK-SLB: Data Plane

Client to server (client, nic with rss, queue_1 ... queue_n, worker_1 ... worker_n, nic, server):

1. The packet arrives as {client_ip, client_port, vip, vport}.
2. rss selects a queue according to the 5-tuple.
3. worker_1 does fullnat to {local_ip1, local_port, server_ip, server_port}.
4. worker_1 saves the session {cip, cport, vip, vport, lip1, lport, sip, sport}.

The key point is that the server-to-client packet must be placed on queue_1, because only worker_1 has the session.

Server to client (server, nic with fdir, queue_1 ... queue_n, worker_1 ... worker_n, nic, client):

1. The packet arrives as {server_ip, server_port, local_ip1, local_port}.
2. fdir selects a queue according to the destination IP address (local_ip1 is bound to queue_1).
3. worker_1 looks up the session {cip, cport, vip, vport, lip1, lport, sip, sport}.
4. worker_1 does fullnat to {vip, vport, client_ip, client_port}.
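The two directions above can be simulated in plain Python to show why binding each local IP to one queue brings the reply back to the worker that owns the session. This is a sketch under stated assumptions, not DPDK code: `zlib.crc32` stands in for the NIC's RSS hash, a dictionary stands in for Flow Director, and the local addresses 192.168.2.x, the service address and the `Worker` class are invented for the example.

```python
import zlib
from dataclasses import dataclass, field

NUM_QUEUES = 4


def rss_queue(src_ip, src_port, dst_ip, dst_port, proto="tcp") -> int:
    """Stand-in for RSS: hash the 5-tuple onto a queue index."""
    key = f"{proto}|{src_ip}|{src_port}|{dst_ip}|{dst_port}".encode()
    return zlib.crc32(key) % NUM_QUEUES


@dataclass
class Worker:
    """One worker core with its own local IP and private session table."""
    queue: int
    local_ip: str
    next_port: int = 1024
    by_client: dict = field(default_factory=dict)  # client-side tuple -> NAT tuple
    by_server: dict = field(default_factory=dict)  # server-side tuple -> client tuple

    def client_to_server(self, cip, cport, vip, vport, server):
        key = (cip, cport, vip, vport)
        if key not in self.by_client:
            lport, self.next_port = self.next_port, self.next_port + 1
            sip, sport = server
            self.by_client[key] = (self.local_ip, lport, sip, sport)
            self.by_server[(sip, sport, self.local_ip, lport)] = key
        return self.by_client[key]  # new source and destination after fullnat

    def server_to_client(self, sip, sport, lip, lport):
        cip, cport, vip, vport = self.by_server[(sip, sport, lip, lport)]
        return (vip, vport, cip, cport)  # reply leaves with the VIP as source


# One local IP per worker; the dict plays the role of the fdir rules.
workers = [Worker(queue=q, local_ip=f"192.168.2.{q + 1}") for q in range(NUM_QUEUES)]
fdir = {w.local_ip: w.queue for w in workers}

if __name__ == "__main__":
    q = rss_queue("172.16.0.9", 40000, "10.20.0.10", 80)
    lip, lport, sip, sport = workers[q].client_to_server(
        "172.16.0.9", 40000, "10.20.0.10", 80, server=("10.10.0.1", 8080)
    )
    back = fdir[lip]  # the reply is addressed to lip, so fdir picks the same queue
    print(q == back, workers[back].server_to_client(sip, sport, lip, lport))
```

Because each session table is private to one worker, no locking is needed on the lookup path, which fits the per-CPU data design described for the control plane.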

14. Make Apps Run in the Container Cloud Seamlessly

• layer3 switch routes:
  10.10.0.1 nexthop node1
  10.10.0.4 nexthop node2
  service subnets nexthop dpdk-slb
• Pod IPs are reachable from vm1 outside the k8s cluster
• Service IPs are reachable from vm2 outside the k8s cluster
• This helps apps run in the container cloud and the traditional environment at the same time

Diagram: node1 (ovs, eth0; vvport1 to pod1 10.10.0.1, vvport2 to pod2 10.10.0.2) and node2 (ovs, eth0; vvport1 to pod3 10.10.0.3, vvport2 to pod4 10.10.0.4) connect to the layer3 switch, together with DPDK-SLB, vm1 and vm2.
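The switch routes on this slide amount to a longest-prefix-match table: a /32 per pod pointing at its node, and the service subnets pointing at DPDK-SLB. The Python sketch below shows that lookup. The slides do not give the service subnet, so 10.20.0.0/16 is a made-up placeholder, and `next_hop` is a hypothetical helper.

```python
import ipaddress

# Routes as they might appear on the layer3 switch.
ROUTES = [
    ("10.10.0.1/32", "node1"),
    ("10.10.0.4/32", "node2"),
    ("10.20.0.0/16", "dpdk-slb"),  # hypothetical service subnet
]


def next_hop(dst: str):
    """Return the next hop of the most specific matching route, or None."""
    addr = ipaddress.ip_address(dst)
    best = None
    for prefix, hop in ROUTES:
        net = ipaddress.ip_network(prefix)
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, hop)
    return best[1] if best else None


if __name__ == "__main__":
    print(next_hop("10.10.0.1"))  # pod IP, routed straight to its node
    print(next_hop("10.20.3.7"))  # service IP, routed to DPDK-SLB
```

A VM outside the cluster therefore needs no special configuration: it sends to a pod IP or a service IP, and the switch's ordinary routing delivers the packet to the node or to DPDK-SLB.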

15. Thank You! Q&A

zhouzijiang@jd.com, Jingdong Digital Technology