
1-Wayne Gao

Wayne Gao - Principal Storage Solution Architect

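Wayne is a former senior principal software engineer at EMC, where he led the development of an all-flash object storage system. He has also worked at Alibaba. Years earlier he led file-system archiving development for EMC SourceOne DiskXtender, and at AMD he was responsible for storage and network driver development. In recent years he has worked at Intel and Solidigm on user-space FTL and cache solutions; the resulting product was commercially released on Alibaba Cloud as its third-generation big data local disk service. Wayne holds four US patents and has two international conference publications plus one paper at the top-tier computer systems conference EuroSys 2024.

Talk overview:

As big data computing and large models (GPT-4/5, ChatGPT, and AIGC) advance, they place new demands on storage: ever higher performance and ever larger capacity. The performance tier and the capacity tier need better software to present smoother storage to big data engines such as Spark and to large-model AI workloads. Solidigm's CSAL is a log-structured cache solution and, at the same time, a user-space FTL that is fully compatible with high-capacity storage and data placement technologies. Combined with the software ecosystem, these technologies can substantially improve storage systems and, in turn, the experience of big data and large-model AI applications.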


1. Solidigm AI storage solution foundation - CSAL

Wayne Gao, Principal Storage Solution Architect

Contributors: Yi Wang, Wayne Gao, Sarika Mehta, Jeniece Wnorowski, Roger Corell, Dave Sierra, Jie Chen

2. Solidigm Mission for AI Storage

Design and deliver an industry-leading storage portfolio that optimizes and accelerates AI infrastructure, scales efficiently, and improves operational efficiency from core to edge.

Solidigm Information. ©2023, Solidigm. All rights reserved.

3. Outline

- AI Environment and Why Storage Matters
- Key Storage Requirements for AI Phases
- Solidigm CSAL SW Family for AI
- Q&A

4. Environment

5. Environment. Overview.

- AI chip spending growing from $44B in 2022 to $119B in 2027 [1]
- Compute and storage increasingly decentralized
- Core is 5X larger, but edge storage is growing 2X faster at 50% CAGR [1]
- Storage content on AI servers is ~3X higher than on typical servers [1]
- ~90% of storage in core data centers is still on HDDs [2]

Sources:
1. AI chip growth. Solidigm AI Impact on SSD. October 2023.
2. HDD share. Solidigm and consensus of industry analysts. Source: Solidigm AI Market Segment Analysis.

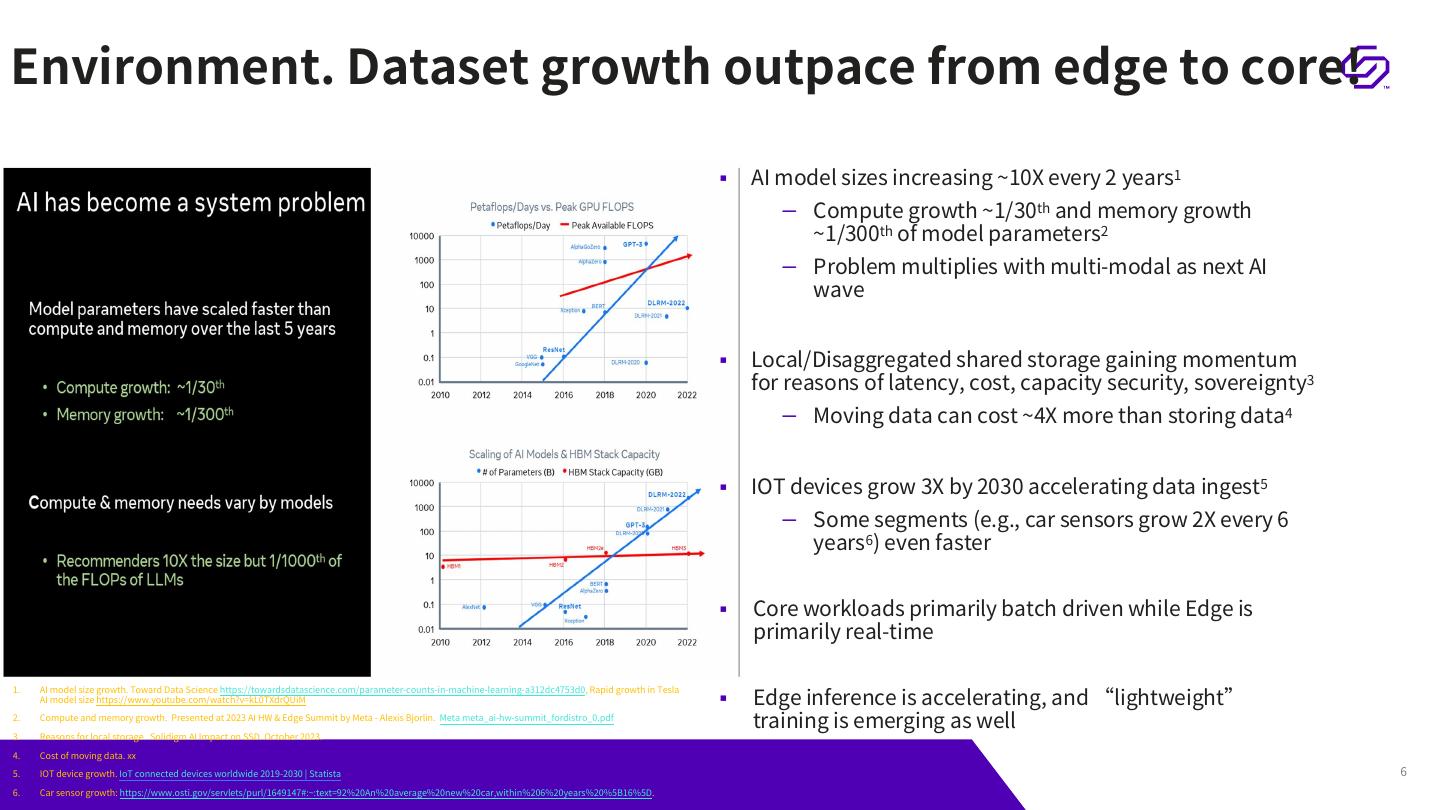

6. Environment. Dataset growth outpaces from edge to core!

- AI model sizes increasing ~10X every 2 years [1]
  - Compute growth ~1/30th and memory growth ~1/300th of model parameters [2]
  - Problem multiplies with multi-modal as the next AI wave
- Local/disaggregated shared storage gaining momentum for reasons of latency, cost, capacity, security, sovereignty [3]
  - Moving data can cost ~4X more than storing data [4]
- IoT devices grow 3X by 2030, accelerating data ingest [5]
  - Some segments (e.g., car sensors grow 2X every 6 years [6]) even faster
- Core workloads are primarily batch driven while edge is primarily real-time
- Edge inference is accelerating, and "lightweight" training is emerging as well

Sources:
1. AI model size growth. Toward Data Science https://towardsdatascience.com/parameter-counts-in-machine-learning-a312dc4753d0, Rapid growth in Tesla AI model size https://www.youtube.com/watch?v=kL0TXdrQUiM
2. Compute and memory growth. Presented at 2023 AI HW & Edge Summit by Meta - Alexis Bjorlin. meta_ai-hw-summit_fordistro_0.pdf
3. Reasons for local storage. Solidigm AI Impact on SSD. October 2023.
4. Cost of moving data. xx
5. IoT device growth. IoT connected devices worldwide 2019-2030 | Statista
6. Car sensor growth: https://www.osti.gov/servlets/purl/1649147#:~:text=92%20An%20average%20new%20car,within%206%20years%20%5B16%5D.

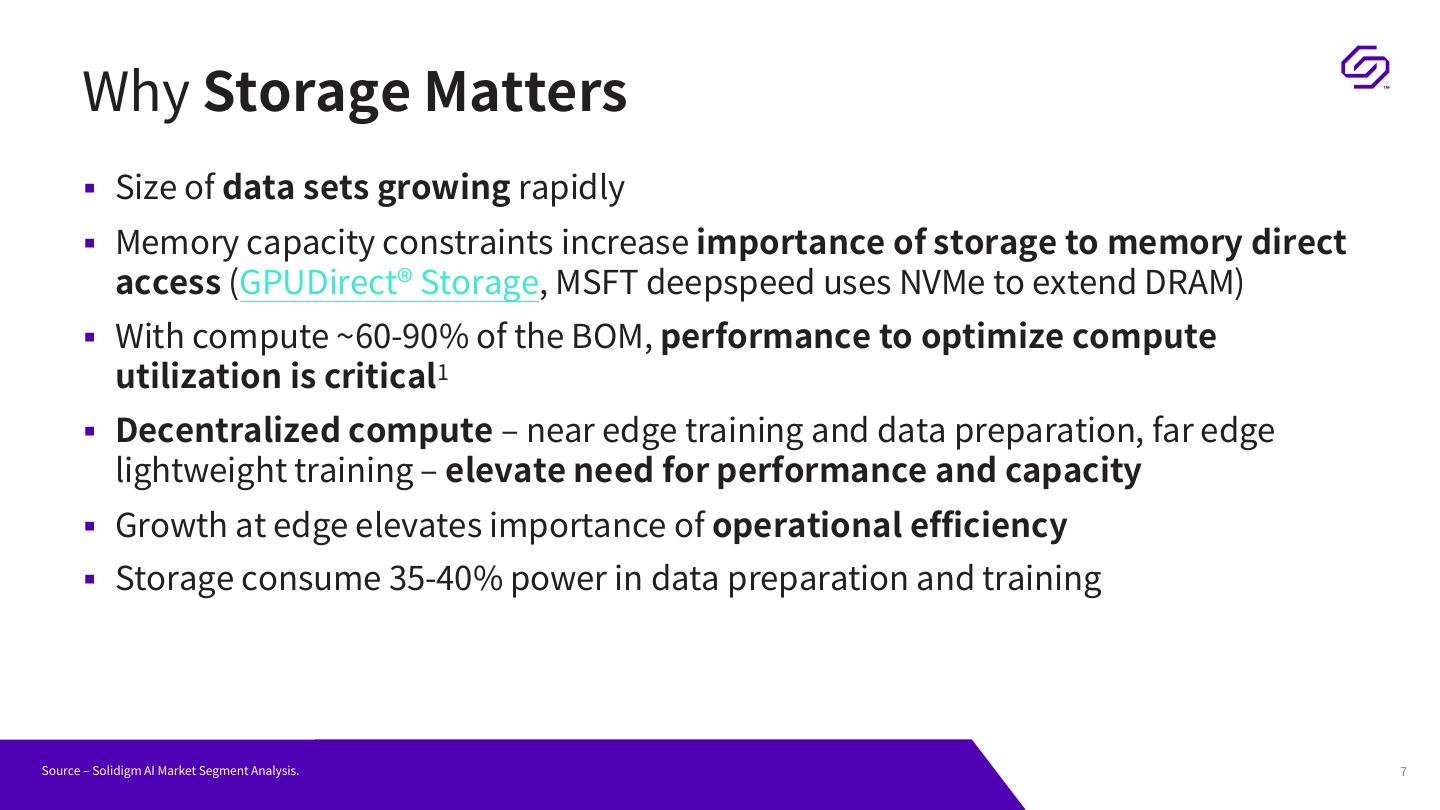

7. Why Storage Matters

- Size of data sets growing rapidly
- Memory capacity constraints increase the importance of storage-to-memory direct access (GPUDirect® Storage; MSFT DeepSpeed uses NVMe to extend DRAM)
- With compute at ~60-90% of the BOM, storage performance that optimizes compute utilization is critical [1]
- Decentralized compute (near-edge training and data preparation, far-edge lightweight training) elevates the need for performance and capacity
- Growth at the edge elevates the importance of operational efficiency
- Storage consumes 35-40% of power in data preparation and training

Source: Solidigm AI Market Segment Analysis.

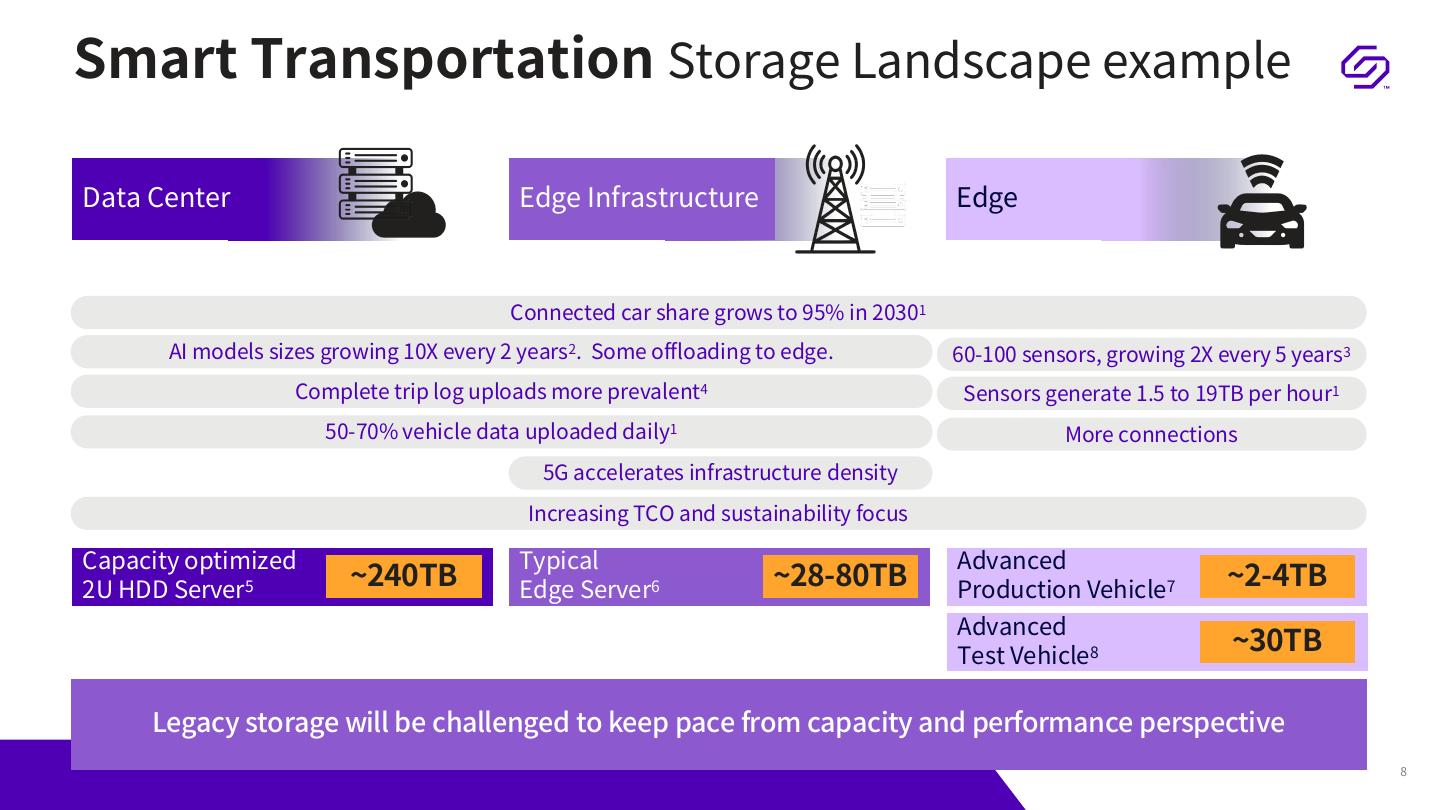

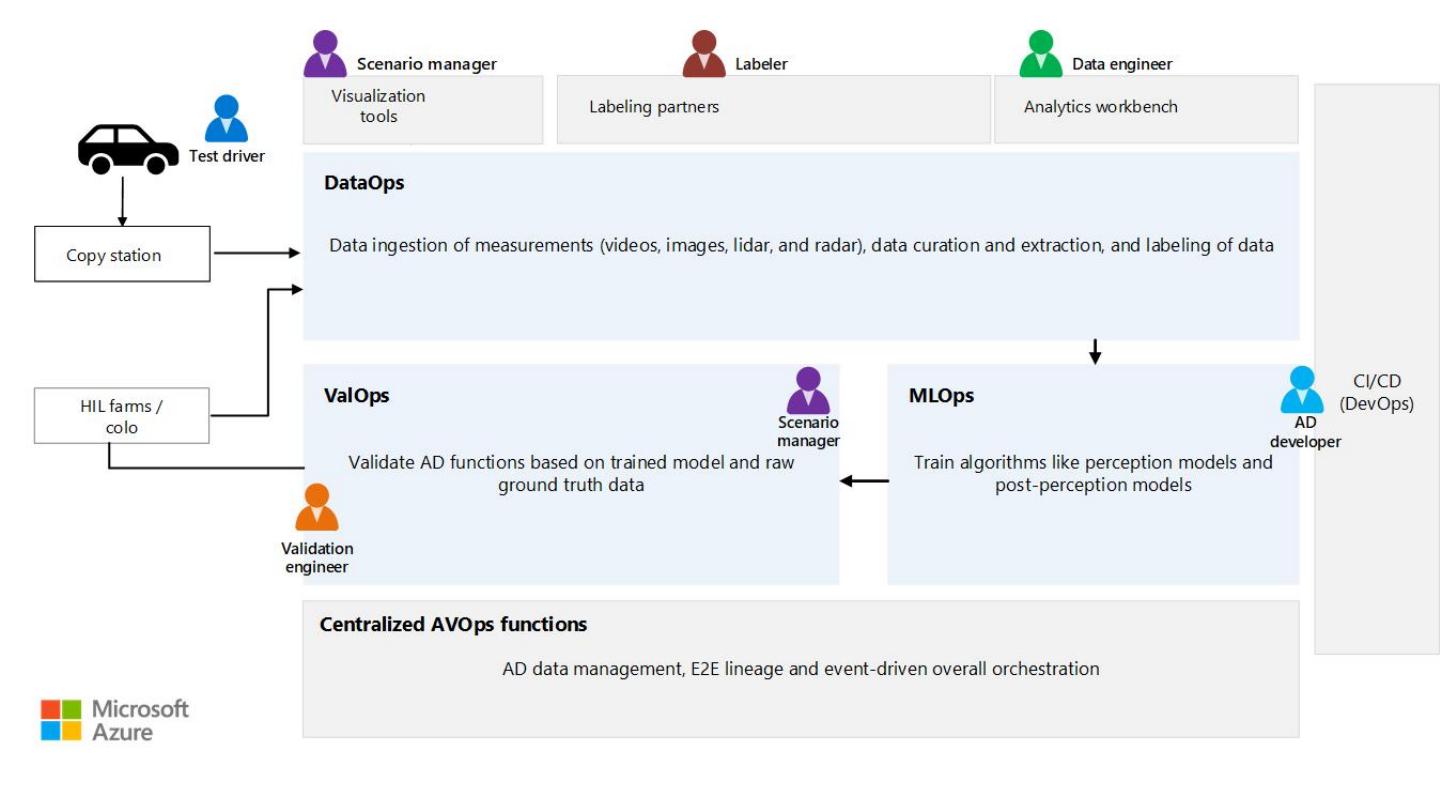

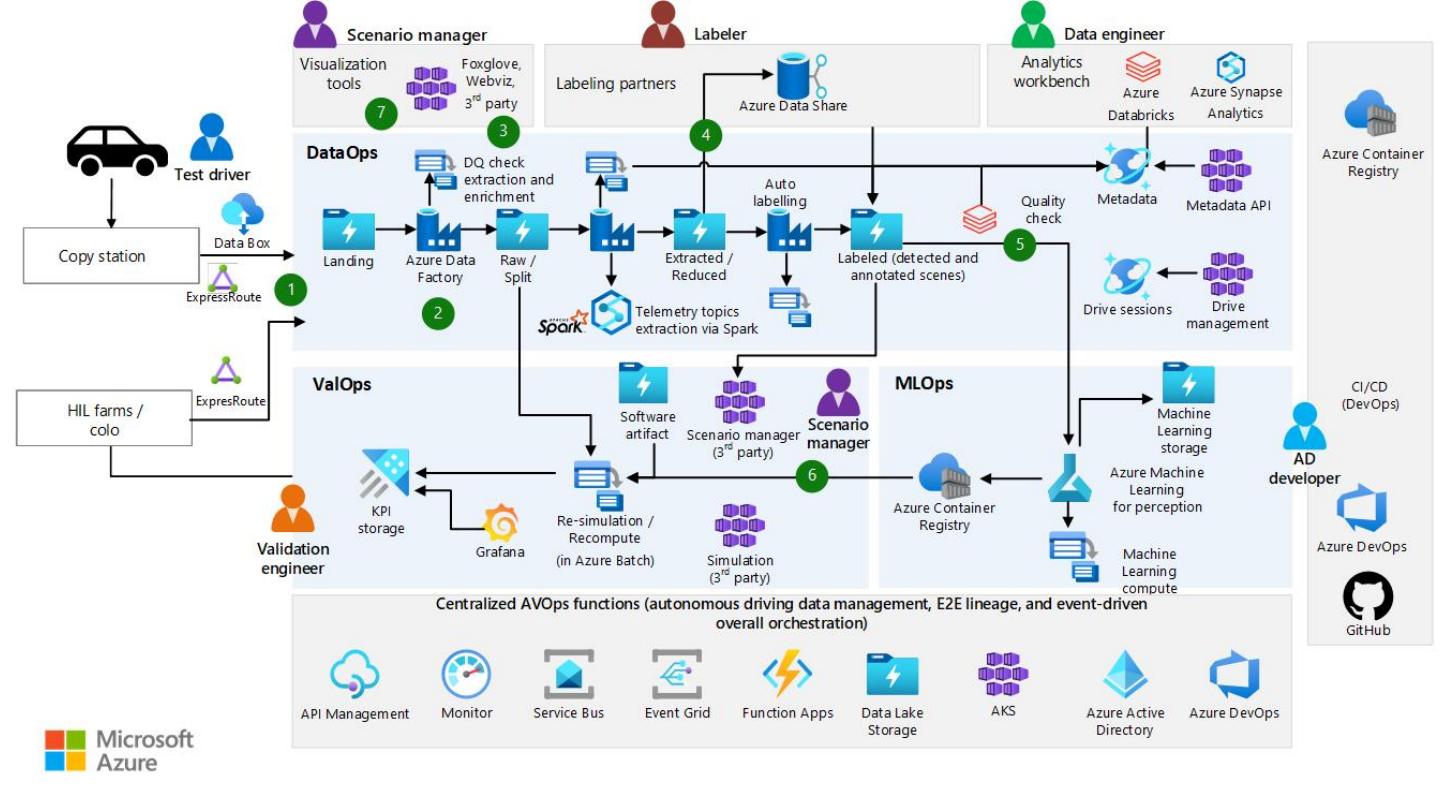

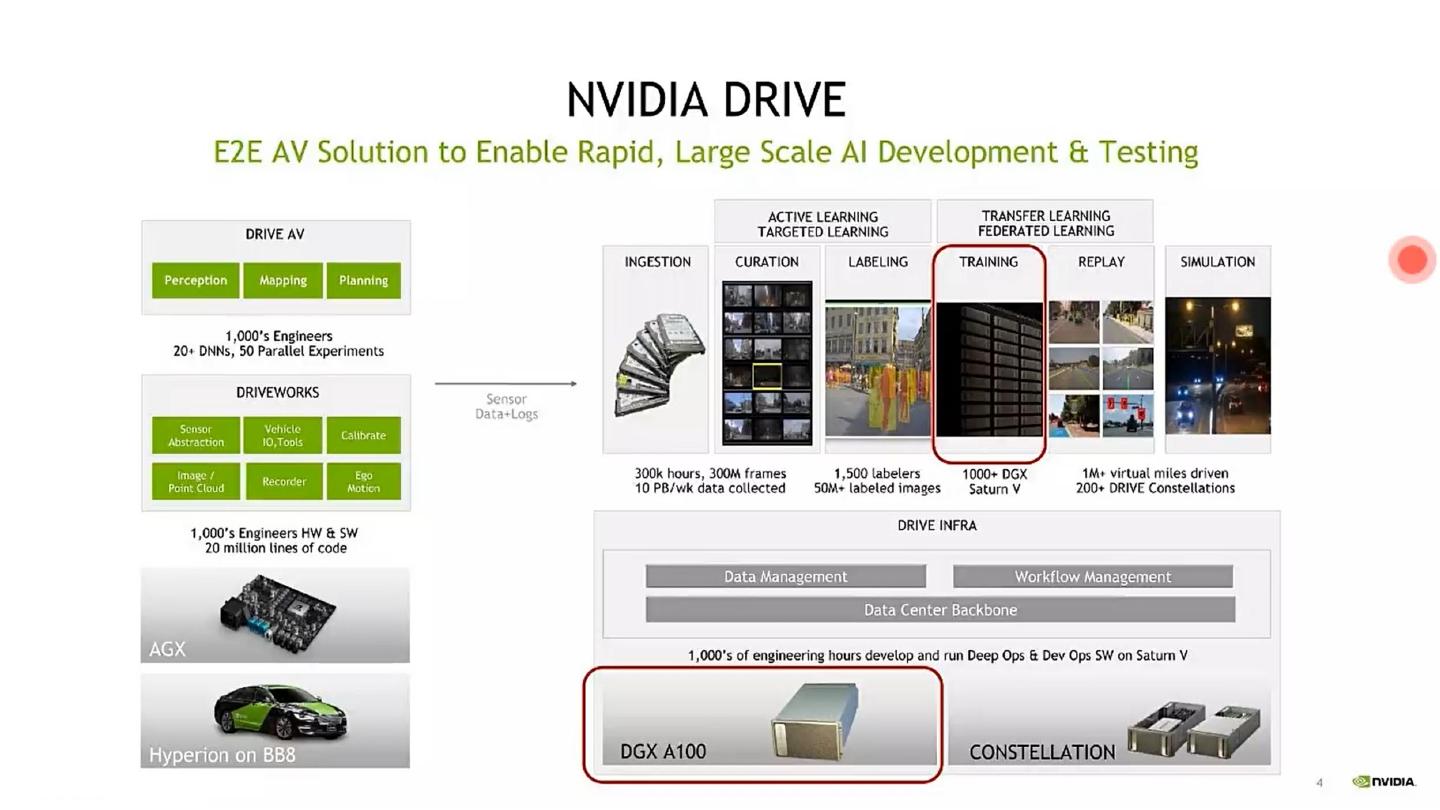

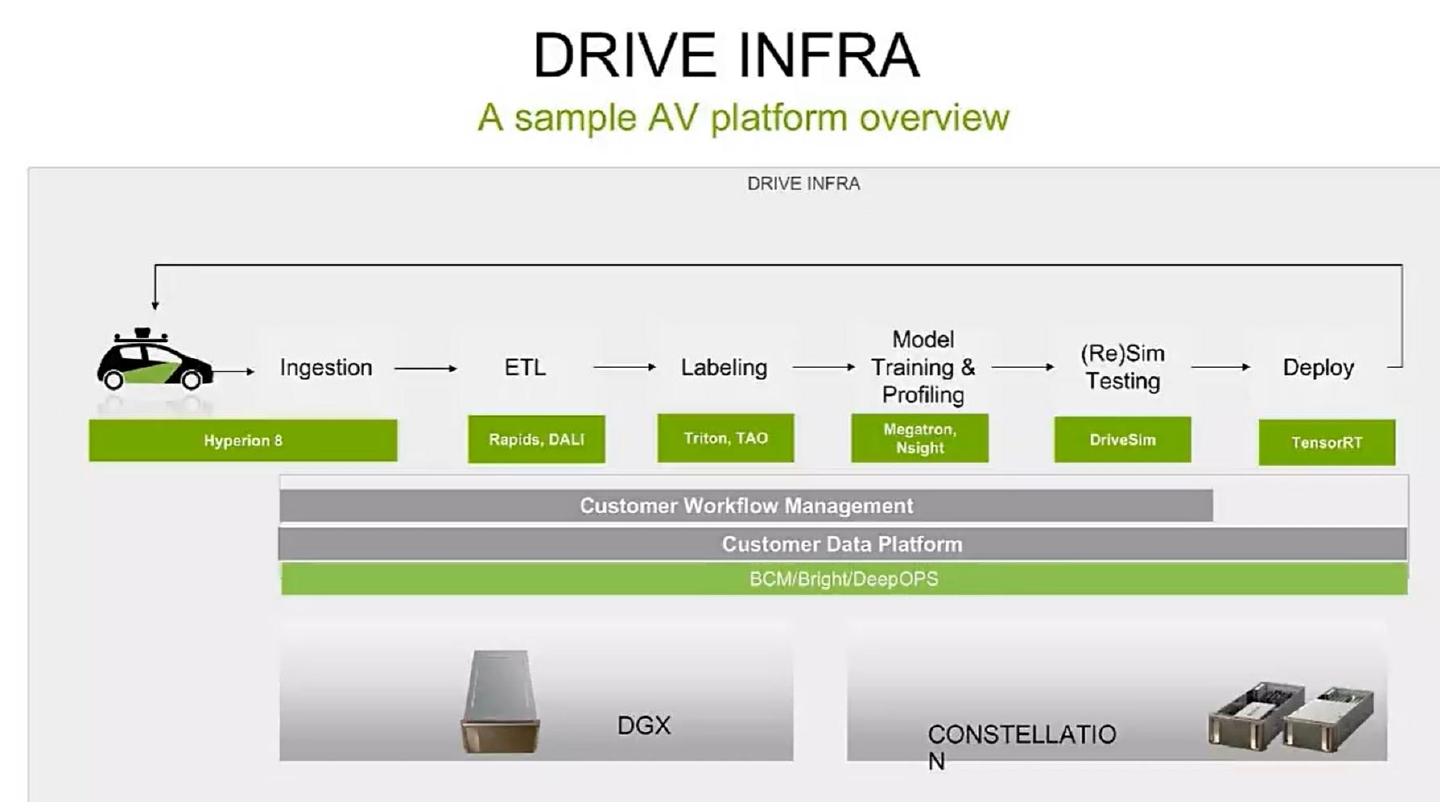

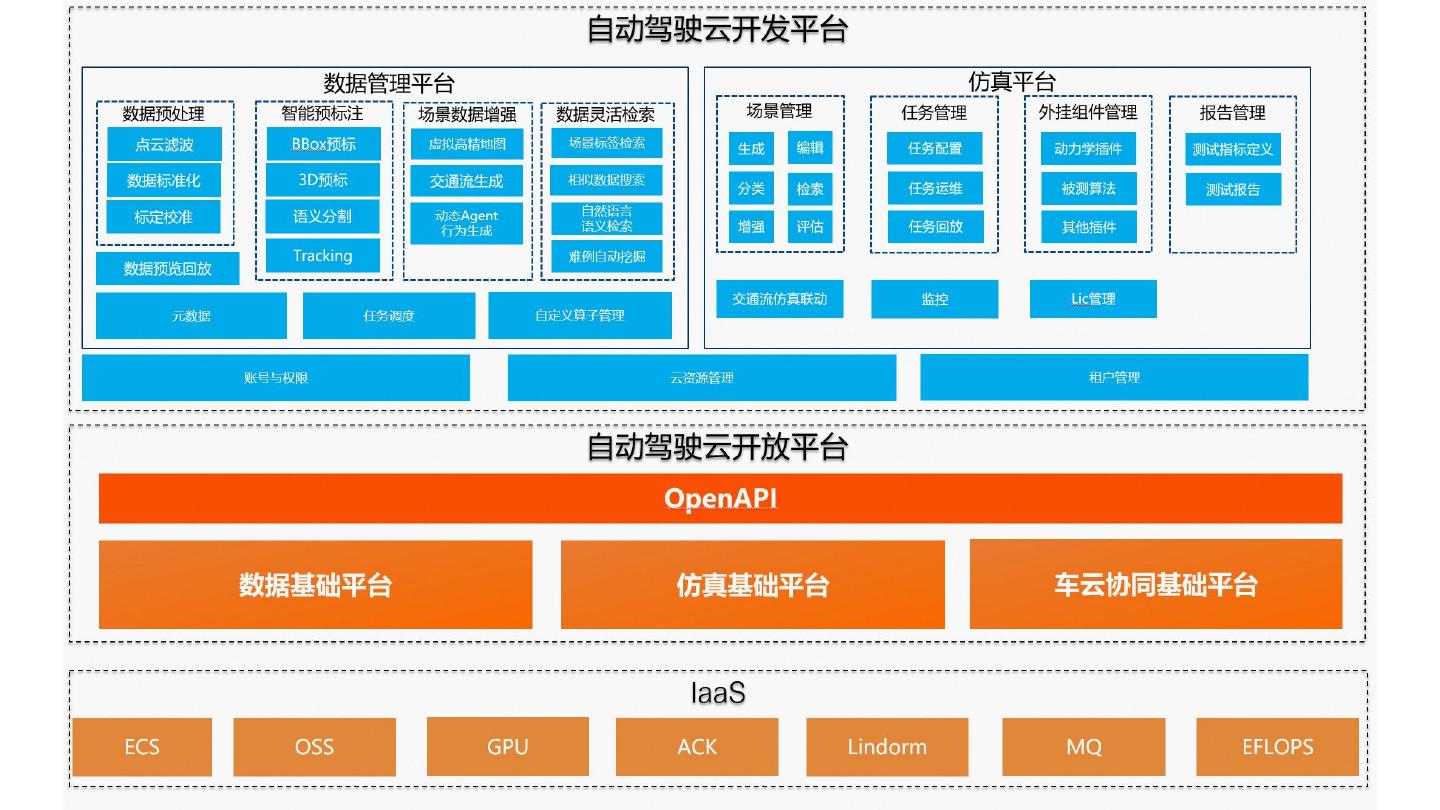

8. Smart Transportation Storage Landscape example

Segments shown: Data Center, Edge Infrastructure, Edge

- Connected car share grows to 95% in 2030 [1]
- AI model sizes growing 10X every 2 years [2]. Some offloading to edge.
- 60-100 sensors, growing 2X every 5 years [3]
- Complete trip log uploads more prevalent [4]
- Sensors generate 1.5 to 19TB per hour [1]
- 50-70% of vehicle data uploaded daily [1]
- More connections
- 5G accelerates infrastructure density
- Increasing TCO and sustainability focus
- Capacity optimized

Storage capacity (typical and advanced examples):
- 2U HDD Server [5]: ~240TB
- Edge Server [6]: ~28-80TB
- Production Vehicle [7]: ~2-4TB
- Advanced Test Vehicle [8]: ~30TB

Legacy storage will be challenged to keep pace from a capacity and performance perspective.

9. Workload Description and Key Storage Requirements

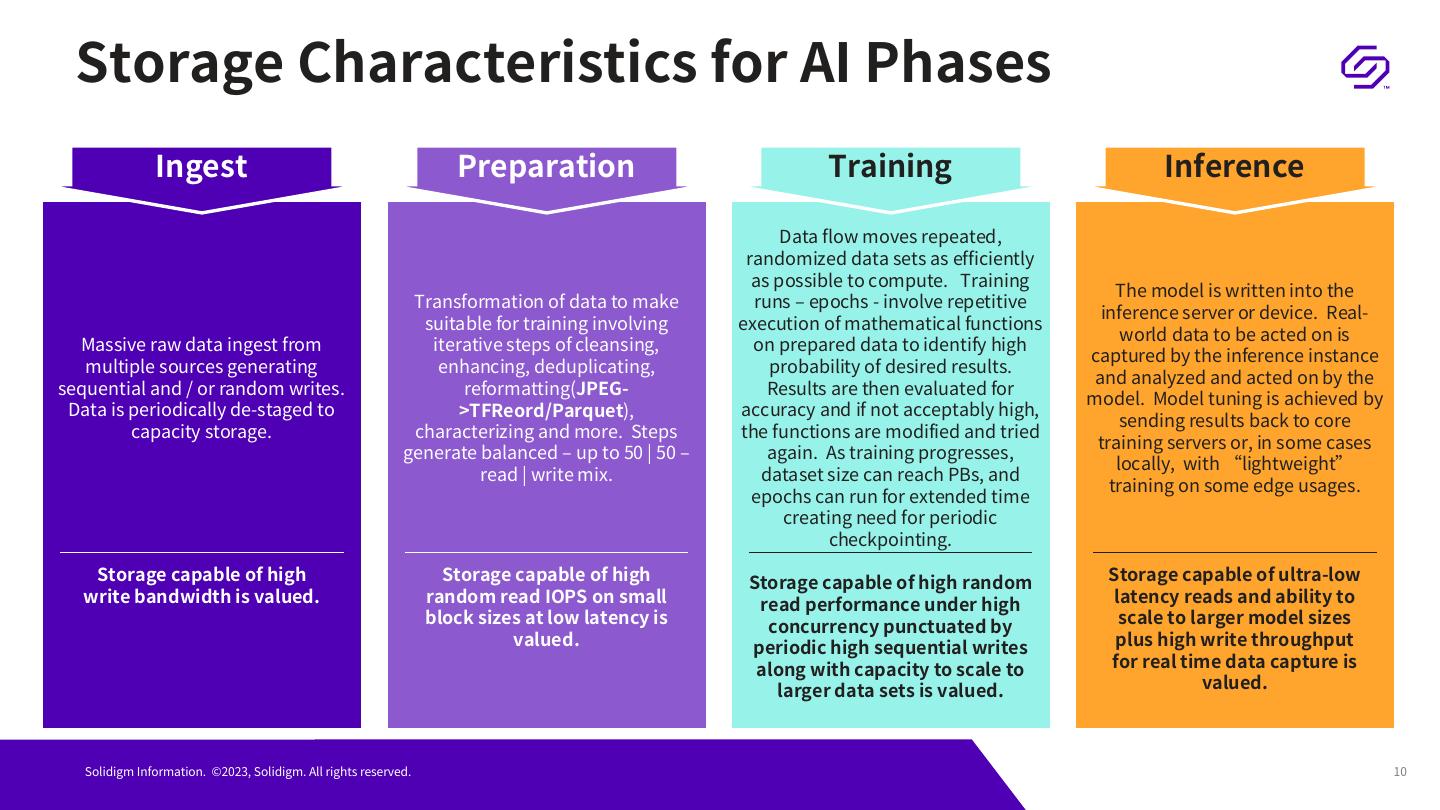

10. Storage Characteristics for AI Phases

- Ingest: Massive raw data ingest from multiple sources, generating sequential and/or random writes. Data is periodically de-staged to capacity storage.
  - Storage capable of high write bandwidth is valued.
- Preparation: Transformation of data to make it suitable for training, involving iterative steps of cleansing, enhancing, deduplicating, reformatting (JPEG -> TFRecord/Parquet), characterizing, and more. Steps generate a balanced (up to 50 | 50) read | write mix.
  - Storage capable of high random read IOPS on small block sizes at low latency is valued.
- Training: Data flow moves repeated, randomized data sets as efficiently as possible to compute. Training runs (epochs) involve repetitive execution of mathematical functions on prepared data to identify a high probability of desired results. Results are then evaluated for accuracy and, if not acceptably high, the functions are modified and tried again. As training progresses, dataset size can reach PBs, and epochs can run for extended time, creating the need for periodic checkpointing.
  - Storage capable of high random read performance under high concurrency, punctuated by periodic high sequential writes, along with capacity to scale to larger data sets is valued.
- Inference: The model is written into the inference server or device. Real-world data to be acted on is captured by the inference instance and analyzed and acted on by the model. Model tuning is achieved by sending results back to core training servers or, in some cases, locally, with "lightweight" training on some edge usages.
  - Storage capable of ultra-low latency reads and the ability to scale to larger model sizes, plus high write throughput for real-time data capture, is valued.

11. CSAL-based reference framework for AI storage workloads

12. QLC density helps you get 3X rack savings

- 8:1 drive consolidation => 3X rack savings
- QLC: 8X density, 8X write performance, 6 GB/s random read, 3X rack-level savings

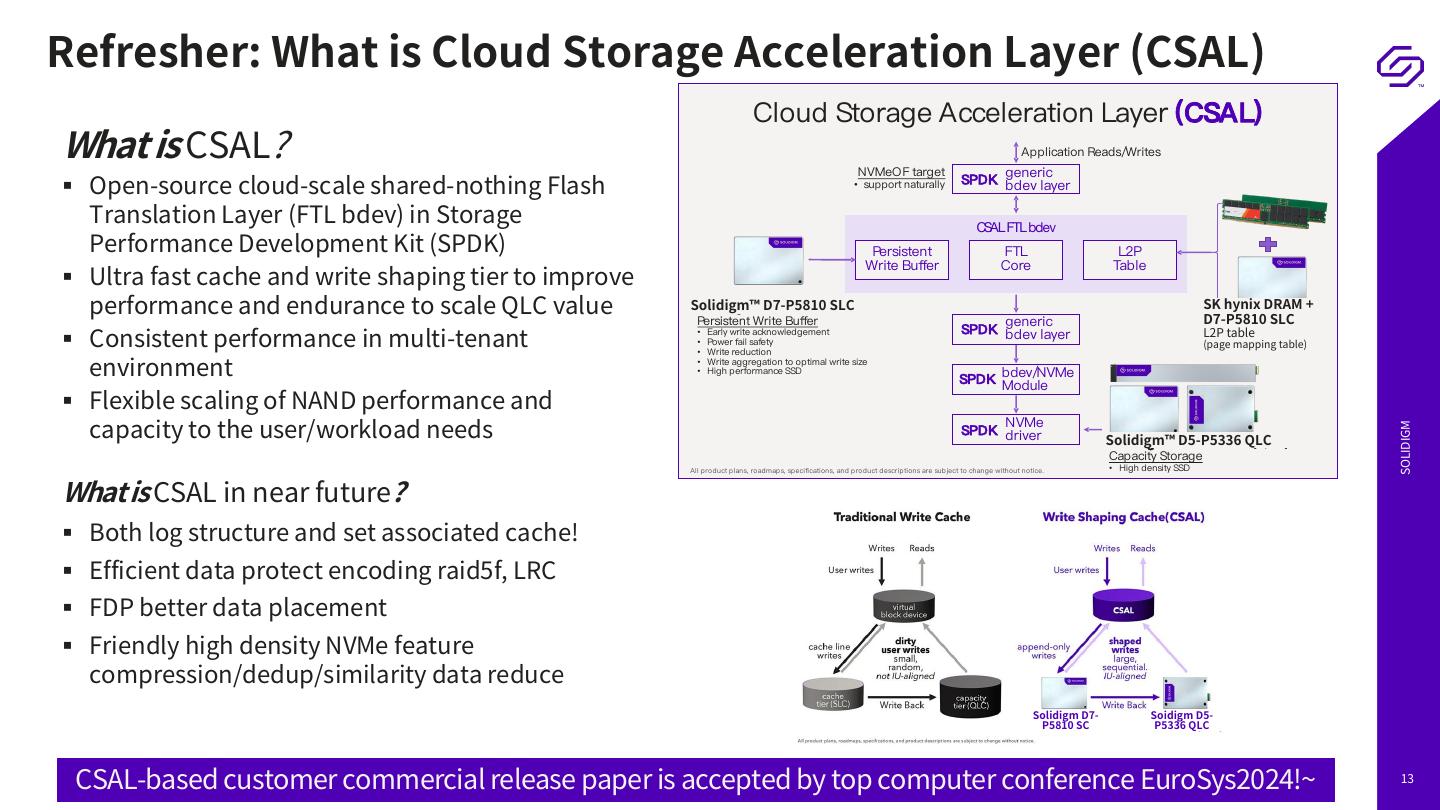

13. Refresher: What is Cloud Storage Acceleration Layer (CSAL)?

What is CSAL?
- Open-source, cloud-scale, shared-nothing Flash Translation Layer (FTL bdev) in the Storage Performance Development Kit (SPDK)
- Ultra-fast cache and write-shaping tier to improve performance and endurance to scale QLC value
- Consistent performance in multi-tenant environments
- Flexible scaling of NAND performance and capacity to the user/workload needs

Architecture diagram, top to bottom:
- Application reads/writes
- Generic SPDK bdev layer (naturally supports an NVMe-oF target)
- CSAL FTL bdev: FTL core, L2P table (page mapping table), persistent write buffer
- SPDK bdev/NVMe and SPDK NVMe driver
- Cache device: Solidigm™ D7-P5810 (1st-gen SLC), high performance SSD
- Capacity storage: Solidigm™ D5-P5336 (QLC), high density SSD

Callouts on the persistent write buffer and L2P table: early write acknowledgement, power-fail safety, paged mapping table, write reduction, write aggregation to optimal write size.

What is CSAL in the near future?
- Both log-structured and set-associative cache!
- Efficient data protection encoding: raid5f, LRC
- FDP for better data placement
- Features friendly to high-density NVMe: compression/dedup/similarity data reduction

All product plans, roadmaps, specifications, and product descriptions are subject to change without notice.

The paper on the CSAL-based customer commercial release has been accepted by the top computer systems conference EuroSys 2024!
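The write path described on this slide (early acknowledgement from a write buffer, aggregation into larger sequential writes, and a logical-to-physical mapping table) can be sketched with a toy model. This is only an illustration under stated assumptions, not SPDK's FTL bdev implementation: the class `ToyFtl`, its fields, and the `flush_blocks` threshold are hypothetical names chosen for this example, and in-memory Python lists stand in for the SLC cache device and the QLC capacity device.

```python
from dataclasses import dataclass, field


@dataclass
class ToyFtl:
    """Toy log-structured FTL: buffer writes, flush sequentially, track L2P."""

    flush_blocks: int = 4  # assumed "optimal write size", in blocks
    l2p: dict = field(default_factory=dict)            # lba -> ("buf" | "cap", index)
    write_buffer: list = field(default_factory=list)   # (lba, data) awaiting flush
    capacity_log: list = field(default_factory=list)   # append-only capacity tier

    def write(self, lba, data):
        # The write is considered acknowledged once it sits in the buffer.
        self.write_buffer.append((lba, data))
        self.l2p[lba] = ("buf", len(self.write_buffer) - 1)
        if len(self.write_buffer) >= self.flush_blocks:
            self.flush()

    def flush(self):
        # Write reduction: only the newest copy of each LBA leaves the buffer.
        latest = {}
        for lba, data in self.write_buffer:
            latest[lba] = data
        # Aggregation: surviving blocks are appended sequentially.
        for lba, data in latest.items():
            self.capacity_log.append((lba, data))
            self.l2p[lba] = ("cap", len(self.capacity_log) - 1)
        self.write_buffer.clear()

    def read(self, lba):
        where, index = self.l2p[lba]
        source = self.write_buffer if where == "buf" else self.capacity_log
        return source[index][1]


ftl = ToyFtl(flush_blocks=4)
for lba, data in [(0, "a"), (1, "b"), (0, "c"), (2, "d")]:
    ftl.write(lba, data)
print(ftl.read(0), len(ftl.capacity_log))
```

In this toy, four buffered writes that include one overwrite reach the capacity tier as three sequential blocks, which is the effect the slide labels write reduction and write aggregation. Garbage collection of stale capacity-tier entries and power-fail persistence are deliberately left out.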

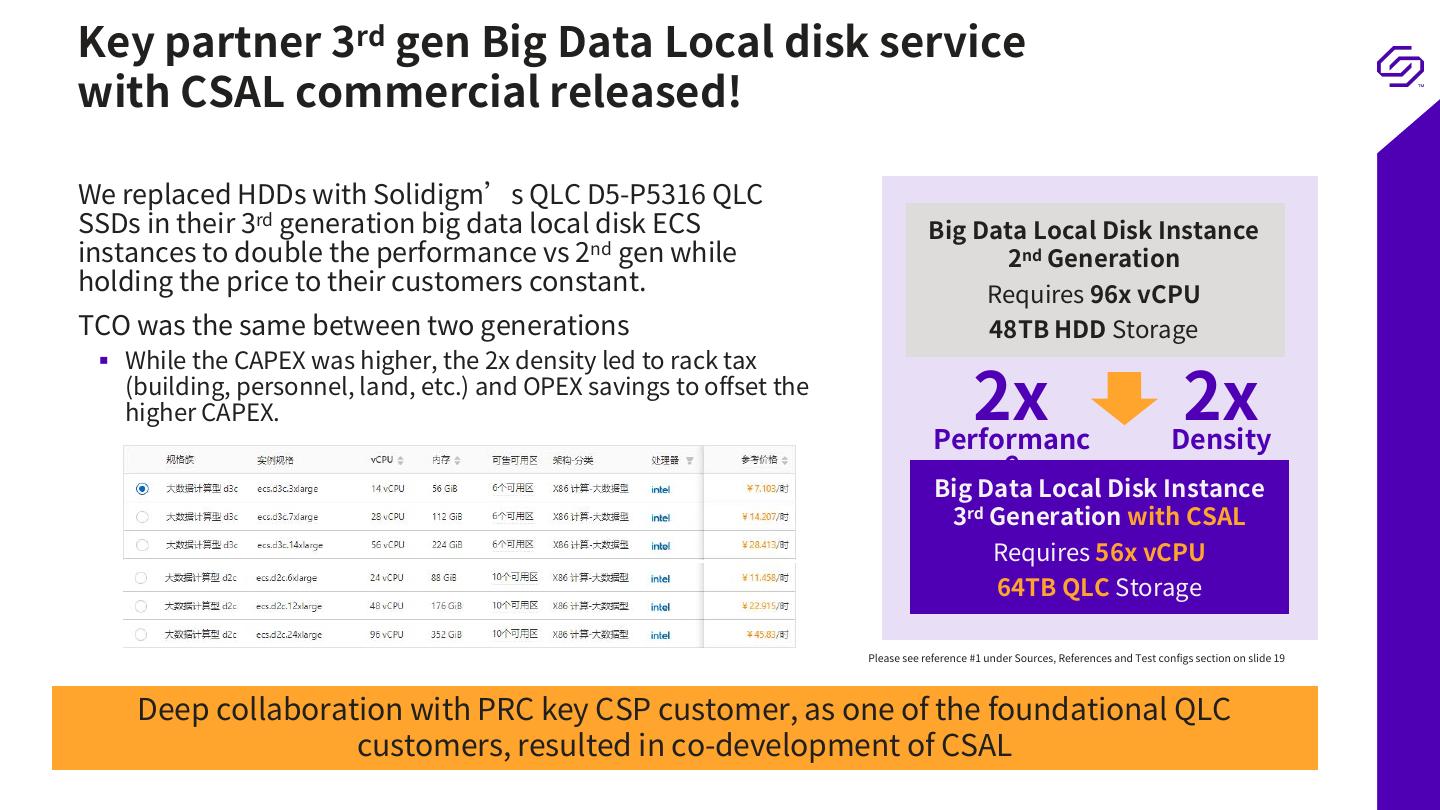

14. Key partner's 3rd gen Big Data Local Disk service with CSAL commercially released!

- We replaced HDDs with Solidigm's D5-P5316 QLC SSDs in their 3rd generation big data local disk instances to double the performance vs. 2nd gen while holding the price to their customers constant.
- TCO was the same between the two generations.
- While the CAPEX was higher, the 2x density led to rack tax (building, personnel, land, etc.) and OPEX savings that offset the higher CAPEX.

ECS Big Data Local Disk Instance comparison:
- 2nd Generation: requires 96x vCPU, 48TB HDD storage
- 3rd Generation with CSAL: requires 56x vCPU, 64TB QLC storage (2x performance, 2x density)

Please see reference #1 under the Sources, References and Test configs section on slide 19.

Deep collaboration with a key PRC CSP customer, one of the foundational QLC customers, resulted in the co-development of CSAL.

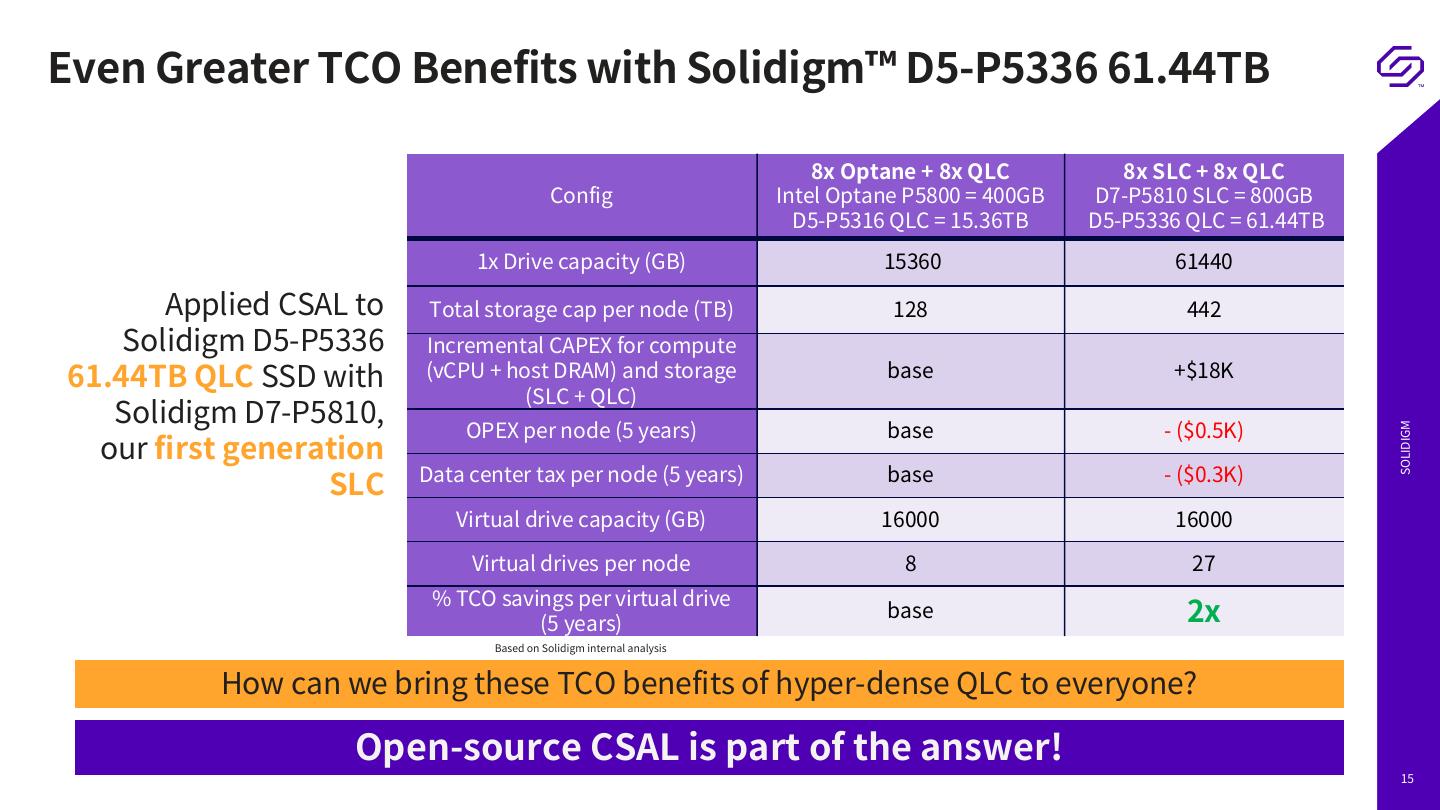

15. Even Greater TCO Benefits with Solidigm™ D5-P5336 61.44TB

Applied CSAL to the Solidigm D5-P5336 61.44TB QLC SSD with the Solidigm D7-P5810, our first-generation SLC.

| | 8x Optane + 8x QLC | 8x SLC + 8x QLC |
|---|---|---|
| Config | Intel Optane P5800 = 400GB, D5-P5316 QLC = 15.36TB | D7-P5810 SLC = 800GB, D5-P5336 QLC = 61.44TB |
| 1x drive capacity (GB) | 15360 | 61440 |
| Total storage capacity per node (TB) | 128 | 442 |
| Incremental CAPEX for compute (vCPU + host DRAM) and storage (SLC + QLC) | base | +$18K |
| OPEX per node (5 years) | base | - ($0.5K) |
| Data center tax per node (5 years) | base | - ($0.3K) |
| Virtual drive capacity (GB) | 16000 | 16000 |
| Virtual drives per node | 8 | 27 |
| % TCO savings per virtual drive (5 years) | base | 2x |

Based on Solidigm internal analysis.

How can we bring these TCO benefits of hyper-dense QLC to everyone? Open-source CSAL is part of the answer!
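The "virtual drives per node" row can be checked against the capacity rows with simple arithmetic. The slide does not say how the count was derived; the sketch below assumes it is the total node capacity divided by the 16000 GB virtual drive size, rounded down, with 1 TB taken as 1000 GB. The function name `virtual_drives_per_node` is hypothetical.

```python
def virtual_drives_per_node(total_capacity_tb, vdrive_capacity_gb=16000):
    """Whole virtual drives that fit in a node (assumes 1 TB = 1000 GB)."""
    return int(total_capacity_tb * 1000 // vdrive_capacity_gb)


optane_qlc = virtual_drives_per_node(128)  # 8x Optane + 8x D5-P5316 column
slc_qlc = virtual_drives_per_node(442)     # 8x SLC + 8x D5-P5336 column

print(optane_qlc)  # 8
print(slc_qlc)     # 27
```

Under that assumption the computed counts match the 8 and 27 shown in the table, so the density gain per node is 27/8, a little over 3x.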

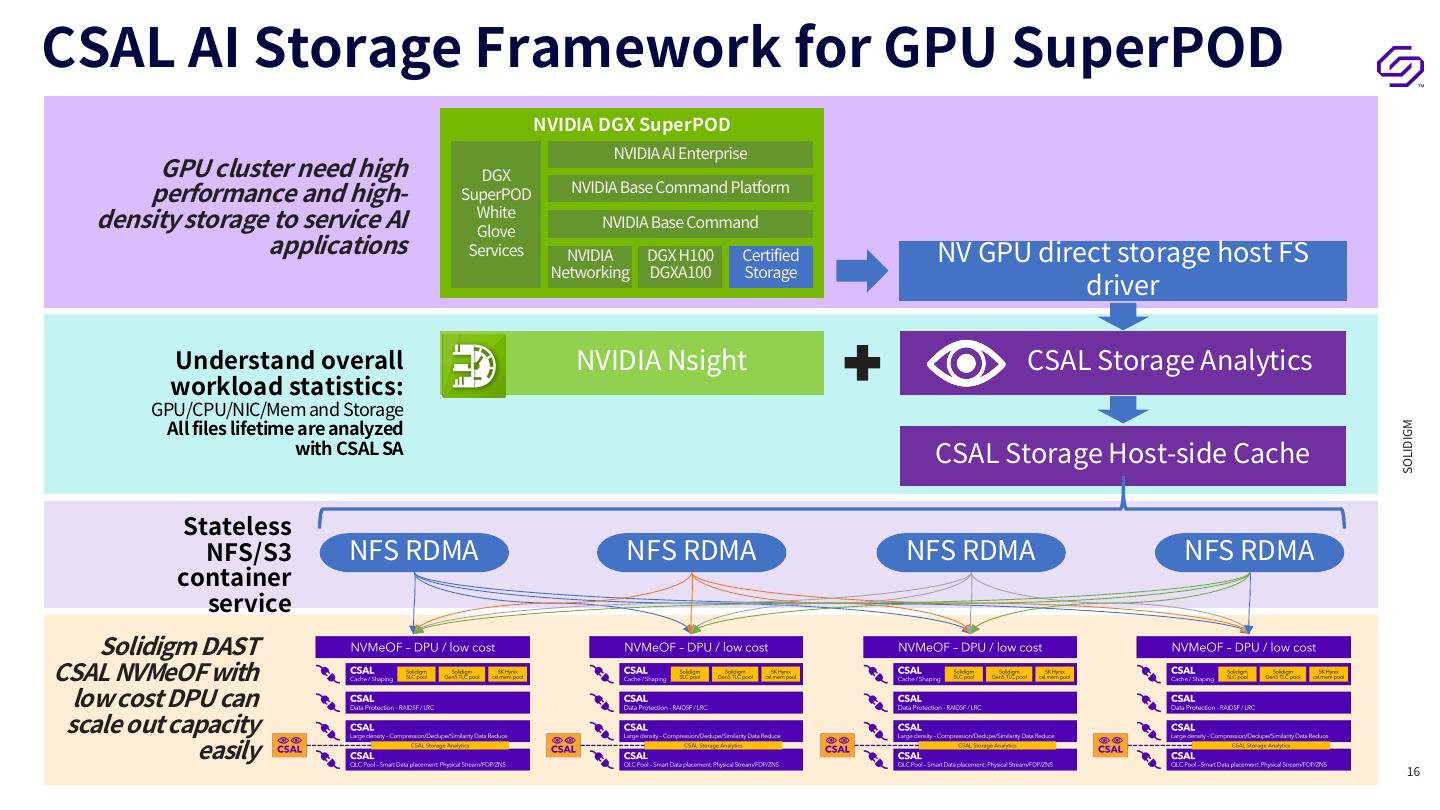

16. CSAL AI Storage Framework for GPU SuperPOD

- GPU clusters need high-performance and high-density storage to service AI applications
- Understand overall workload statistics: GPU/CPU/NIC/Mem and storage
- All file lifetimes are analyzed with CSAL SA (Storage Analytics)
- Stateless NFS/S3 container service
- CSAL NVMe-oF with a low-cost DPU can scale out capacity easily

Diagram labels: NVIDIA DGX SuperPOD (NVIDIA AI Enterprise, NVIDIA Base Command Platform, NVIDIA Base Command, White Glove Services, NVIDIA DGX H100, DGX A100, Certified Storage, Networking); NV GPUDirect Storage host FS driver; NVIDIA Nsight; CSAL Storage Analytics; CSAL Storage Host-side Cache; NFS RDMA; Solidigm DAST.

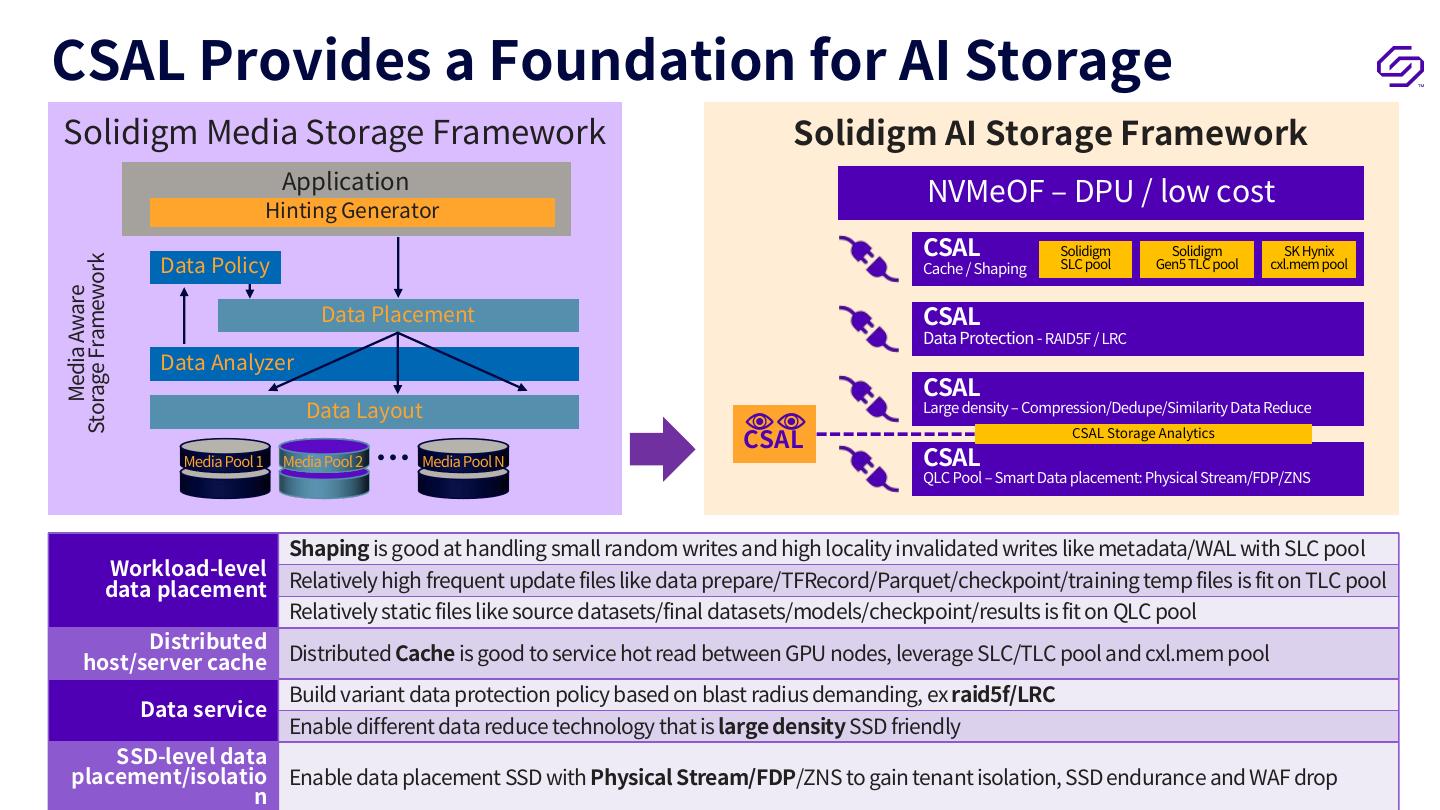

17. CSAL Provides a Foundation for AI Storage

Diagram labels: Solidigm Media Storage Framework; Solidigm AI Storage Framework; Application; NVMe-oF (DPU / low cost); Hinting Generator; Data Policy; Cache / Shaping; Data Placement; Data Protection (RAID5F / LRC); Data Analyzer; Data Layout; Compression/Dedupe/Similarity Data Reduce; CSAL Storage Analytics; media pools 1 to N (Solidigm SLC pool, Solidigm Gen5 TLC pool, SK hynix cxl.mem pool, QLC pool with smart data placement: Physical Stream/FDP/ZNS).

- Workload-level data placement
  - Shaping is good at handling small random writes and high-locality invalidated writes such as metadata/WAL, using the SLC pool
  - Relatively frequently updated files, such as data prepare/TFRecord/Parquet/checkpoint/training temp files, fit on the TLC pool
  - Relatively static files, such as source datasets/final datasets/models/checkpoint/results, fit on the QLC pool
- Distributed host/server cache
  - Distributed cache is good at serving hot reads between GPU nodes, leveraging the SLC/TLC pool and cxl.mem pool
- Data service
  - Build varied data protection policies based on blast radius demands, e.g. raid5f/LRC
  - Enable different data reduction technologies that are friendly to large-density SSDs
- SSD-level data placement/isolation
  - Enable data placement SSDs with Physical Stream/FDP/ZNS to gain tenant isolation, SSD endurance, and a WAF drop
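The workload-level placement rules on this slide amount to a lookup from data category to media pool. The sketch below is one hypothetical way to encode them. The category strings, the `Pool` enum, and `choose_pool` are illustrative names, not part of CSAL. The slide lists checkpoints under both the TLC and QLC pools, so the split into "checkpoint_active" and "checkpoint_final" is an assumption made here to keep the mapping unambiguous.

```python
from enum import Enum


class Pool(Enum):
    SLC = "SLC pool"
    TLC = "TLC pool"
    QLC = "QLC pool"


# Hypothetical category names; the pool assignments follow the slide text.
PLACEMENT = {
    # Small random writes and high-locality invalidated writes
    "metadata": Pool.SLC,
    "wal": Pool.SLC,
    # Relatively frequently updated files
    "data_prepare": Pool.TLC,
    "tfrecord": Pool.TLC,
    "parquet": Pool.TLC,
    "checkpoint_active": Pool.TLC,   # assumed: checkpoint still being rewritten
    "training_temp": Pool.TLC,
    # Relatively static files
    "source_dataset": Pool.QLC,
    "final_dataset": Pool.QLC,
    "model": Pool.QLC,
    "checkpoint_final": Pool.QLC,    # assumed: checkpoint kept for the long term
    "results": Pool.QLC,
}


def choose_pool(category):
    """Return the media pool for a data category; unknown categories raise KeyError."""
    return PLACEMENT[category]


for name in ("wal", "parquet", "model"):
    print(name, "->", choose_pool(name).value)
```

A static table like this is the simplest possible policy. The "Hinting Generator" and "Data Analyzer" boxes in the diagram suggest the real framework derives placement from observed file lifetimes rather than fixed names.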

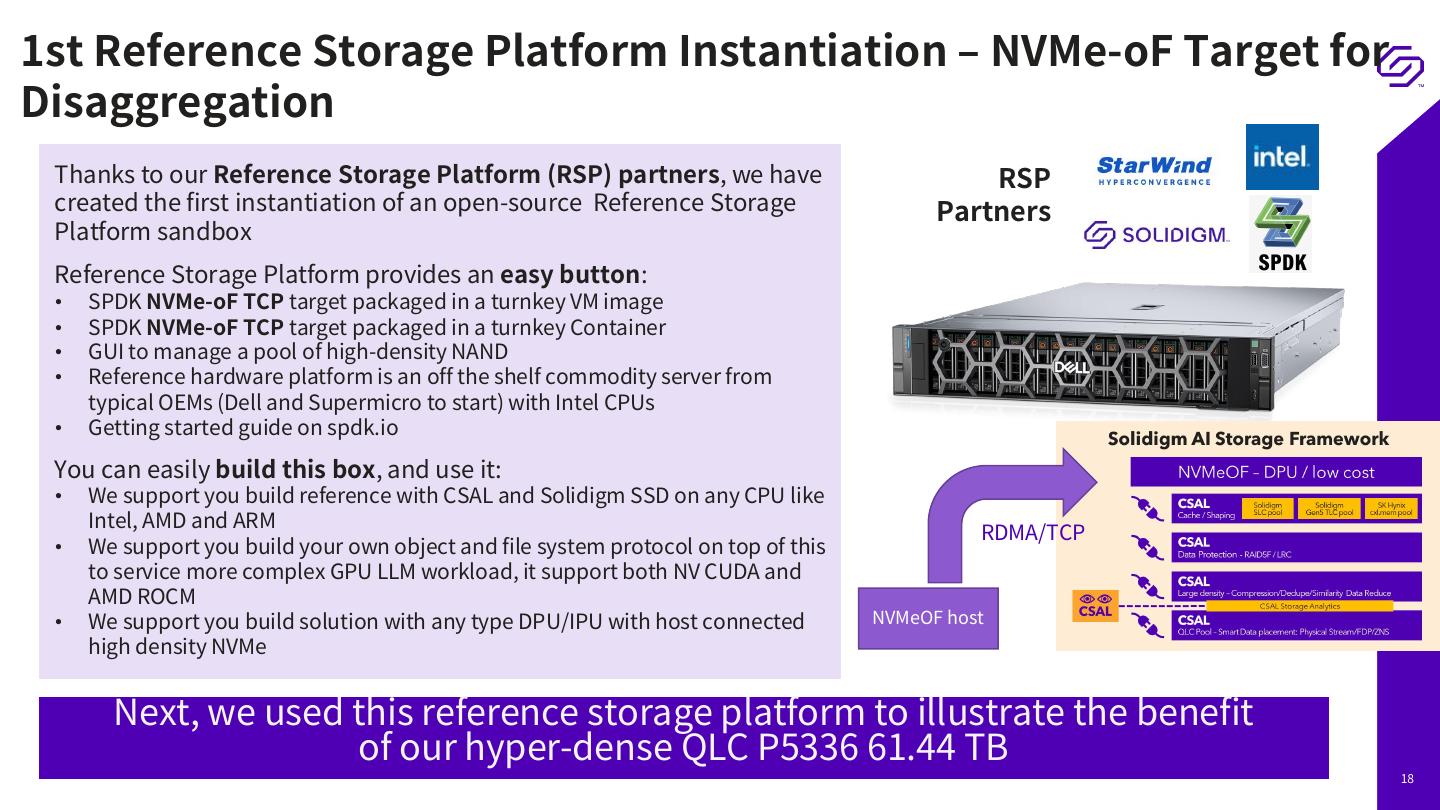

18. 1st Reference Storage Platform Instantiation: NVMe-oF Target for Disaggregation

Thanks to our Reference Storage Platform (RSP) partners, we have created the first instantiation of an open-source Reference Storage Platform sandbox.

The Reference Storage Platform provides an easy button:
- SPDK NVMe-oF TCP target packaged in a turnkey VM image
- SPDK NVMe-oF TCP target packaged in a turnkey container
- GUI to manage a pool of high-density NAND
- Reference hardware platform is an off-the-shelf commodity server from typical OEMs (Dell and Supermicro to start) with Intel CPUs
- Getting started guide on spdk.io

You can easily build this box and use it:
- We support building the reference with CSAL and Solidigm SSDs on any CPU, such as Intel, AMD, and ARM
- We support building your own object and file system protocols on top of this to serve more complex GPU LLM workloads; it supports both NVIDIA CUDA and AMD ROCm
- We support building solutions with any type of DPU/IPU, with the host connected over NVMe-oF (RDMA/TCP) to high-density NVMe

Next, we use this reference storage platform to illustrate the benefit of our hyper-dense QLC P5336 61.44 TB.

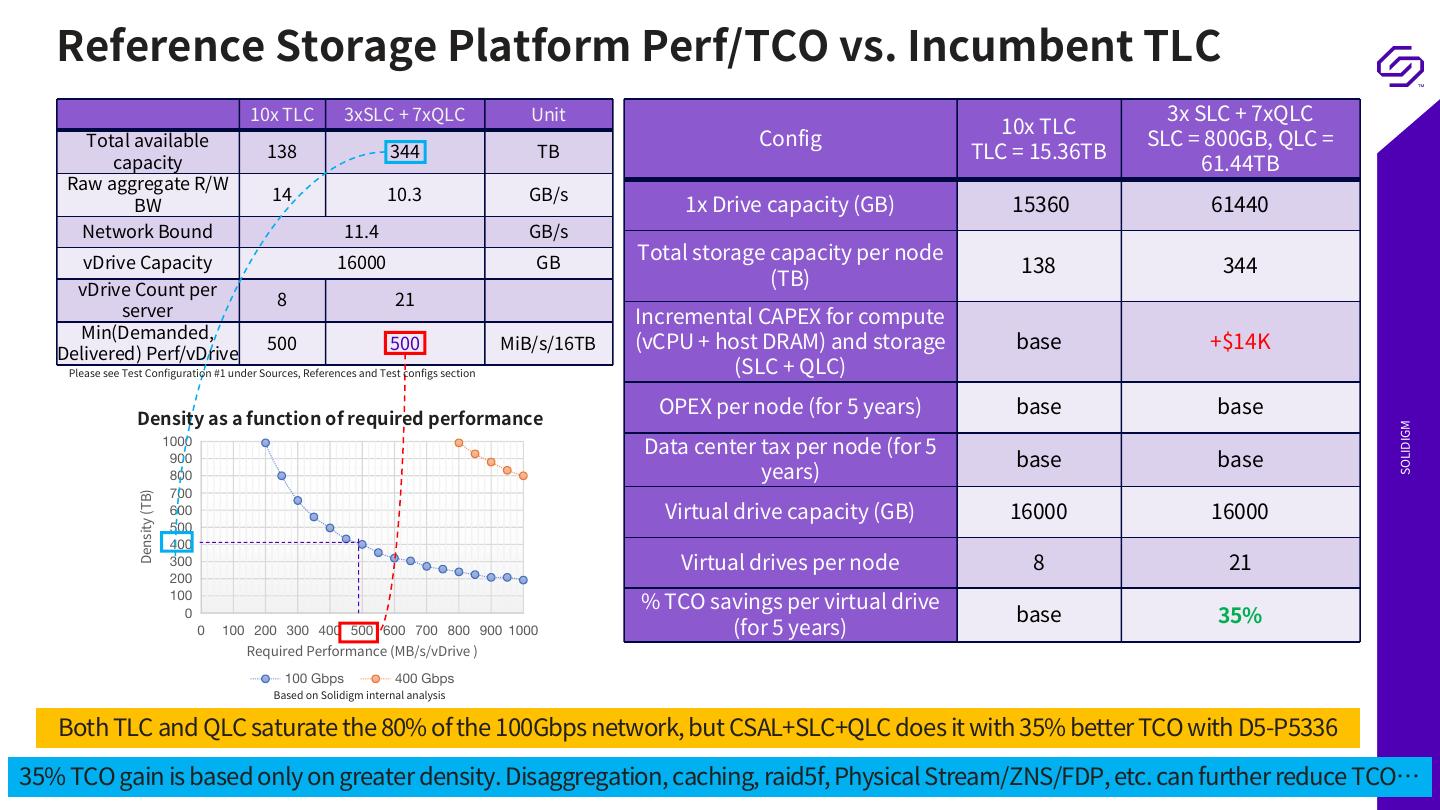

19. Reference Storage Platform Perf/TCO vs. Incumbent TLC

| | 10x TLC | 3x SLC + 7x QLC | Unit |
|---|---|---|---|
| Total available capacity | 138 | 344 | TB |
| Raw aggregate R/W BW | 14 | 10.3 | GB/s |
| vDrive count per server | 8 | 21 | |
| Min(Demanded, Delivered) perf/vDrive | 500 | 500 | MiB/s/16TB |

Network bound: 11.4 GB/s. vDrive capacity: 16000 GB.

Please see Test Configuration #1 under the Sources, References and Test configs section.

| | 10x TLC | 3x SLC + 7x QLC |
|---|---|---|
| Config | TLC = 15.36TB | SLC = 800GB, QLC = 61.44TB |
| 1x drive capacity (GB) | 15360 | 61440 |
| Total storage capacity per node (TB) | 138 | 344 |
| Incremental CAPEX for compute (vCPU + host DRAM) and storage (SLC + QLC) | base | +$14K |
| OPEX per node (for 5 years) | base | base |
| Data center tax per node (for 5 years) | base | base |
| Virtual drive capacity (GB) | 16000 | 16000 |
| Virtual drives per node | 8 | 21 |
| % TCO savings per virtual drive (for 5 years) | base | 35% |

Based on Solidigm internal analysis.

[Chart: Density as a function of required performance. X axis: Required Performance (MB/s/vDrive), 0 to 1000. Y axis: Density (TB), 0 to 1000. Series: 100 Gbps and 400 Gbps. Based on Solidigm internal analysis.]

Both TLC and QLC saturate 80% of the 100Gbps network, but CSAL+SLC+QLC does it with 35% better TCO with the D5-P5336.

The 35% TCO gain is based only on greater density. Disaggregation, caching, raid5f, Physical Stream/ZNS/FDP, etc. can further reduce TCO…
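The chart on this slide plots density against required per-vDrive performance, but the model behind it is not given. One plausible reading, sketched below purely as an assumption, is that a node can host as many 16000 GB virtual drives as both its capacity and its network bandwidth allow. The function name `hostable_vdrives` is hypothetical, the 11.4 GB/s network bound is applied to both configurations, and the raw aggregate drive bandwidth from the table is ignored for simplicity.

```python
MIB = 1024 * 1024  # bytes per MiB


def hostable_vdrives(capacity_tb, network_gb_per_s, required_mib_per_s,
                     vdrive_gb=16000):
    """vDrives per node limited by capacity and by network bandwidth (assumed model)."""
    by_capacity = int(capacity_tb * 1000 // vdrive_gb)
    by_bandwidth = int(network_gb_per_s * 1e9 // (required_mib_per_s * MIB))
    return min(by_capacity, by_bandwidth)


def density_tb(capacity_tb, network_gb_per_s, required_mib_per_s,
               vdrive_gb=16000):
    """Sellable capacity in TB at a given per-vDrive performance requirement."""
    count = hostable_vdrives(capacity_tb, network_gb_per_s,
                             required_mib_per_s, vdrive_gb)
    return count * vdrive_gb / 1000


# Capacities and network bound taken from the slide's tables.
for label, capacity in (("10x TLC", 138), ("3x SLC + 7x QLC", 344)):
    print(label, hostable_vdrives(capacity, 11.4, 500),
          density_tb(capacity, 11.4, 500))
```

At 500 MiB/s per vDrive this reading yields 8 and 21 virtual drives, which agrees with the table. At higher per-vDrive requirements the bandwidth term becomes the limit, which would explain why the chart's density curve falls as required performance rises and why a 400 Gbps network shifts it upward.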

20.

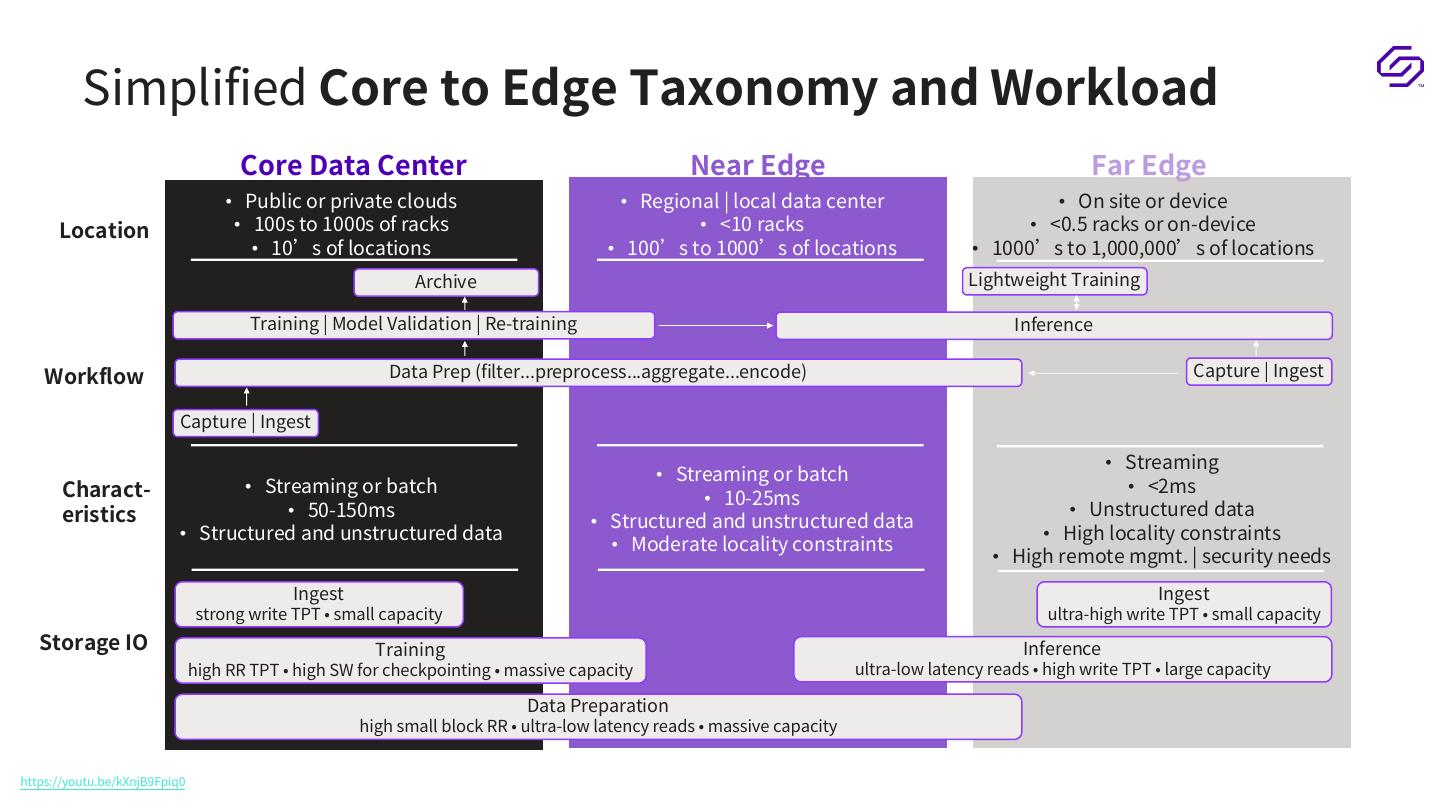

21. Simplified Core to Edge Taxonomy and Workload

| | Core Data Center | Near Edge | Far Edge |
|---|---|---|---|
| Location | Public or private clouds | Regional or local data center | On site or device |
| | 100s to 1000s of racks | <10 racks | <0.5 racks or on-device |
| | 10's of locations | 100's to 1000's of locations | 1000's to 1,000,000's of locations |

Workflow (as listed on the slide): Archive; Lightweight Training; Training | Model Validation | Re-training; Inference; Data Prep (filter...preprocess...aggregate...encode); Capture | Ingest; Capture | Ingest.

Characteristics (as listed on the slide): Streaming; Streaming or batch; Streaming or batch; <2ms; 10-25ms; 50-150ms; Unstructured data; Structured and unstructured data; Structured and unstructured data; High locality constraints; Moderate locality constraints; High remote mgmt. | security needs.

Storage IO:
- Ingest: strong write TPT, small capacity
- Ingest: ultra-high write TPT, small capacity
- Training: high RR TPT, high SW for checkpointing, massive capacity
- Inference: ultra-low latency reads, high write TPT, large capacity
- Data Preparation: high small-block RR, ultra-low latency reads, massive capacity

https://youtu.be/kXnjB9Fpiq0

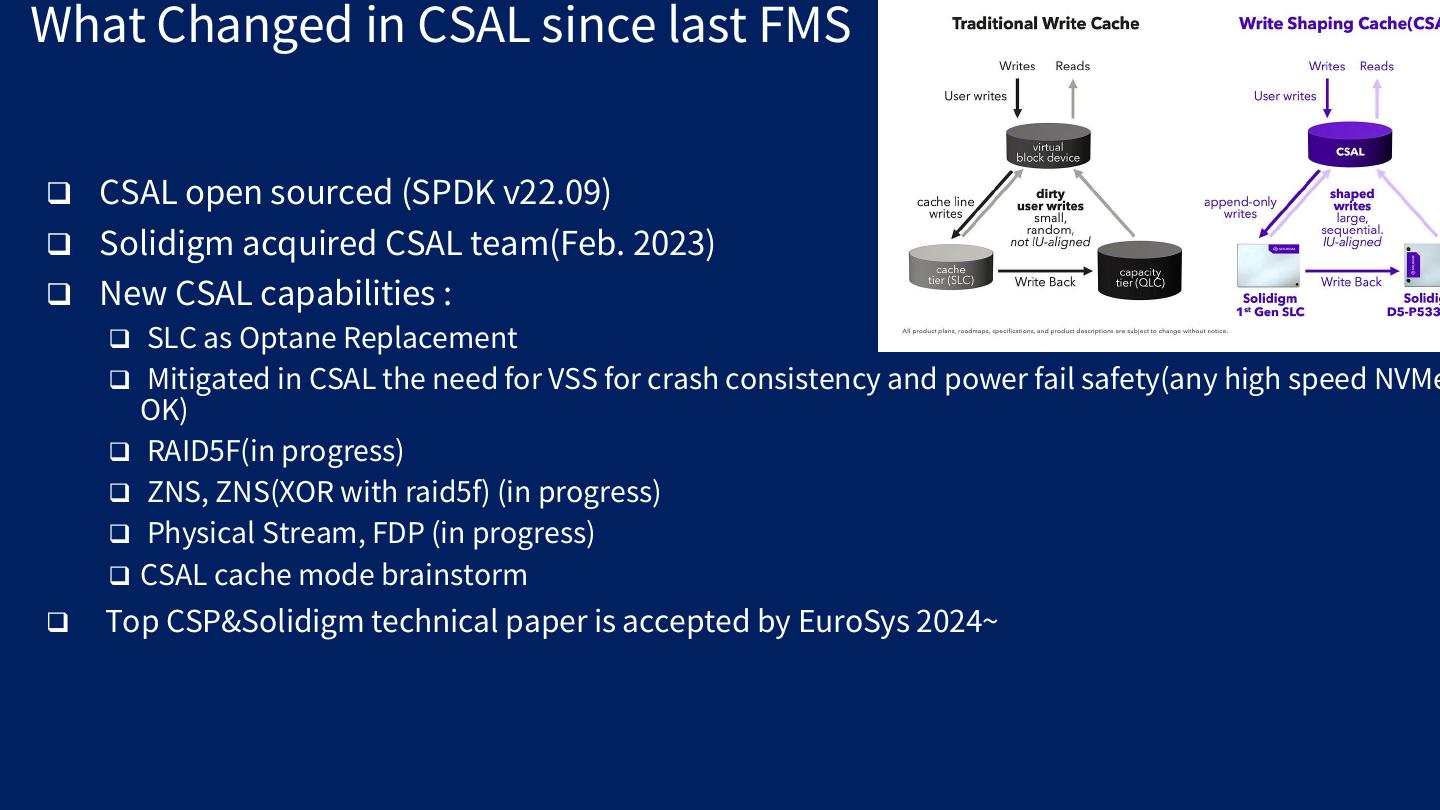

22. What Changed in CSAL since last FMS

- CSAL open sourced (SPDK v22.09)
- Solidigm acquired the CSAL team (Feb. 2023)
- New CSAL capabilities:
  - SLC as Optane replacement
  - Mitigated in CSAL the need for VSS for crash consistency and power-fail safety (any high-speed NVMe OK)
  - RAID5F (in progress)
  - ZNS, ZNS (XOR with raid5f) (in progress)
  - Physical Stream, FDP (in progress)
  - CSAL cache mode brainstorm
- Top CSP & Solidigm technical paper is accepted by EuroSys 2024

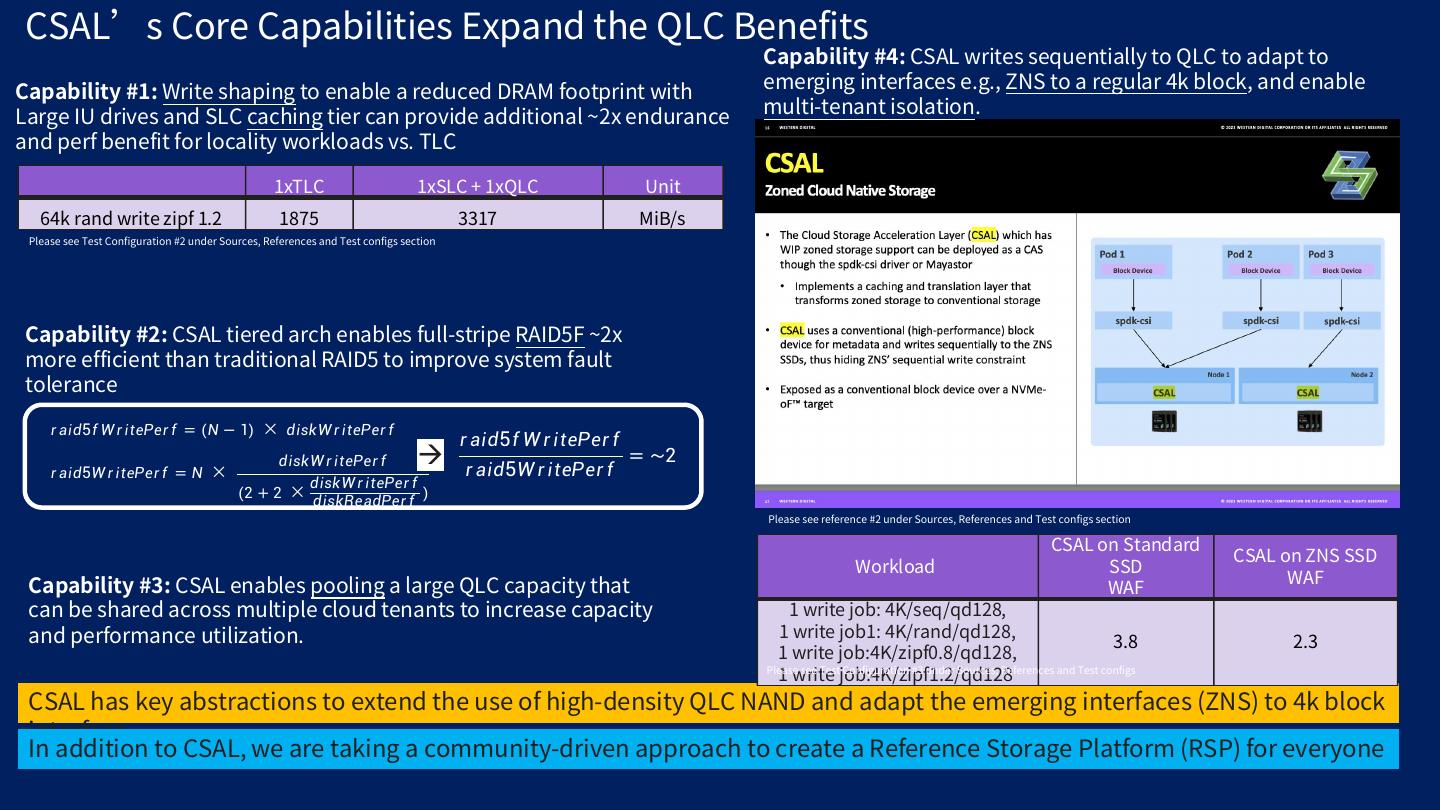

23. CSAL's Core Capabilities Expand the QLC Benefits

Capability #1: Write shaping to enable a reduced DRAM footprint with large-IU drives; the SLC caching tier can provide an additional ~2x endurance and perf benefit for locality workloads vs. TLC.

| | 1x TLC | 1x SLC + 1x QLC | Unit |
|---|---|---|---|
| 64k rand write zipf 1.2 | 1875 | 3317 | MiB/s |

Please see Test Configuration #2 under the Sources, References and Test configs section.

Capability #2: CSAL's tiered architecture enables full-stripe RAID5F, ~2x more efficient than traditional RAID5, to improve system fault tolerance.

$$\mathrm{raid5fWritePerf} = (N - 1) \times \mathrm{diskWritePerf}$$

$$\mathrm{raid5WritePerf} = \frac{N \times \mathrm{diskWritePerf}}{2 + 2 \times \dfrac{\mathrm{diskWritePerf}}{\mathrm{diskReadPerf}}}$$

$$\frac{\mathrm{raid5fWritePerf}}{\mathrm{raid5WritePerf}} \approx 2$$

where $N$ is the number of drives in the array.

Please see reference #2 under the Sources, References and Test configs section.

Capability #3: CSAL enables pooling a large QLC capacity that can be shared across multiple cloud tenants to increase capacity and performance utilization.

Capability #4: CSAL writes sequentially to QLC to adapt emerging interfaces, e.g., ZNS, to a regular 4k block interface, and to enable multi-tenant isolation.

| Workload | CSAL on Standard SSD WAF | CSAL on ZNS SSD WAF |
|---|---|---|
| 1 write job: 4K/seq/qd128, 1 write job: 4K/rand/qd128, 1 write job: 4K/zipf0.8/qd128, 1 write job: 4K/zipf1.2/qd128 | 3.8 | 2.3 |

Please see Test Configuration #3 under the Sources, References and Test configs section.

CSAL has key abstractions to extend the use of high-density QLC NAND and to adapt emerging interfaces (ZNS) to a 4k block interface.

In addition to CSAL, we are taking a community-driven approach to create a Reference Storage Platform (RSP) for everyone.
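The ~2x claim for Capability #2 can be explored numerically. The sketch below assumes the slide's formulas read as follows: full-stripe RAID5F write performance is (N - 1) times the per-drive write performance, and traditional RAID5 write performance is N times the per-drive write performance divided by (2 + 2 × write/read), reflecting two reads and two writes per small update. That reading, the function names, and the sample drive numbers are all assumptions for illustration, not measured values.

```python
def raid5f_write_perf(n_drives, disk_write):
    """Full-stripe write: one drive's worth of bandwidth goes to parity."""
    return (n_drives - 1) * disk_write


def raid5_write_perf(n_drives, disk_write, disk_read):
    """Read-modify-write: each update costs 2 writes plus 2 reads."""
    return n_drives * disk_write / (2 + 2 * disk_write / disk_read)


# Hypothetical array: 5 drives whose reads are 7x faster than their writes.
n, wr, rd = 5, 1.0, 7.0
ratio = raid5f_write_perf(n, wr) / raid5_write_perf(n, wr, rd)
print(round(ratio, 2))
```

When reads are much faster than writes, as is typical for QLC, the denominator approaches 2 and the ratio approaches 2 × (N - 1) / N, which is close to 2 for wider arrays. With equal read and write speeds the same formulas would give a larger gap, so the ~2x figure appears to depend on that read/write asymmetry.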
