
Applications of Xeon in AIOps

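In recent years AI has been used more and more widely in IT operations (Ops). Because Xeon is flexible and can also handle AI workloads, it is particularly well suited to AIOps. This talk takes an in-depth look at a case in which a major customer ran AI algorithms on Xeon and deployed them.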


1. Intel AI products and use case introduction. Bian Fengwei, December 2020.

2. Notices and Disclaimers

- Intel technologies' features and benefits depend on system configuration and may require enabled hardware, software or service activation. Performance varies depending on system configuration.
- No product or component can be absolutely secure.
- Tests document performance of components on a particular test, in specific systems. Differences in hardware, software, or configuration will affect actual performance. For more complete information about performance and benchmark results, visit http://www.intel.com/benchmarks.
- Software and workloads used in performance tests may have been optimized for performance only on Intel microprocessors. Performance tests, such as SYSmark and MobileMark, are measured using specific computer systems, components, software, operations and functions. Any change to any of those factors may cause the results to vary. You should consult other information and performance tests to assist you in fully evaluating your contemplated purchases, including the performance of that product when combined with other products. For more complete information visit http://www.intel.com/benchmarks.
- Intel® Advanced Vector Extensions (Intel® AVX) provides higher throughput to certain processor operations. Due to varying processor power characteristics, utilizing AVX instructions may cause a) some parts to operate at less than the rated frequency and b) some parts with Intel® Turbo Boost Technology 2.0 to not achieve any or maximum turbo frequencies. Performance varies depending on hardware, software, and system configuration and you can learn more at http://www.intel.com/go/turbo.
- Intel's compilers may or may not optimize to the same degree for non-Intel microprocessors for optimizations that are not unique to Intel microprocessors. These optimizations include SSE2, SSE3, and SSSE3 instruction sets and other optimizations. Intel does not guarantee the availability, functionality, or effectiveness of any optimization on microprocessors not manufactured by Intel. Microprocessor-dependent optimizations in this product are intended for use with Intel microprocessors. Certain optimizations not specific to Intel microarchitecture are reserved for Intel microprocessors. Please refer to the applicable product User and Reference Guides for more information regarding the specific instruction sets covered by this notice.
- Cost reduction scenarios described are intended as examples of how a given Intel-based product, in the specified circumstances and configurations, may affect future costs and provide cost savings. Circumstances will vary. Intel does not guarantee any costs or cost reduction.
- Intel does not control or audit third-party benchmark data or the web sites referenced in this document. You should visit the referenced web site and confirm whether referenced data are accurate.
- © Intel Corporation. Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries. Other names and brands may be claimed as the property of others.

3. Analytics & AI Everywhere

Part of every top 10 strategic technology trend for 2020.

Industries shown: Agriculture, Energy, Education, Government, Finance, Health, Industrial, Media, Retail, Smart Home, Telecom, Transport.

4. Many Approaches to Analytics & AI

No one size fits all.

- Nested scopes: AI, machine learning, deep learning
- Learning paradigms: Supervised Learning, Unsupervised Learning, Semi-Supervised Learning, Reinforcement Learning
- Techniques and applications: Regression, Classification, Clustering, Decision Trees, Data Generation, Image Processing, Speech Processing, Natural Language Processing, Recommender Systems, Adversarial Networks

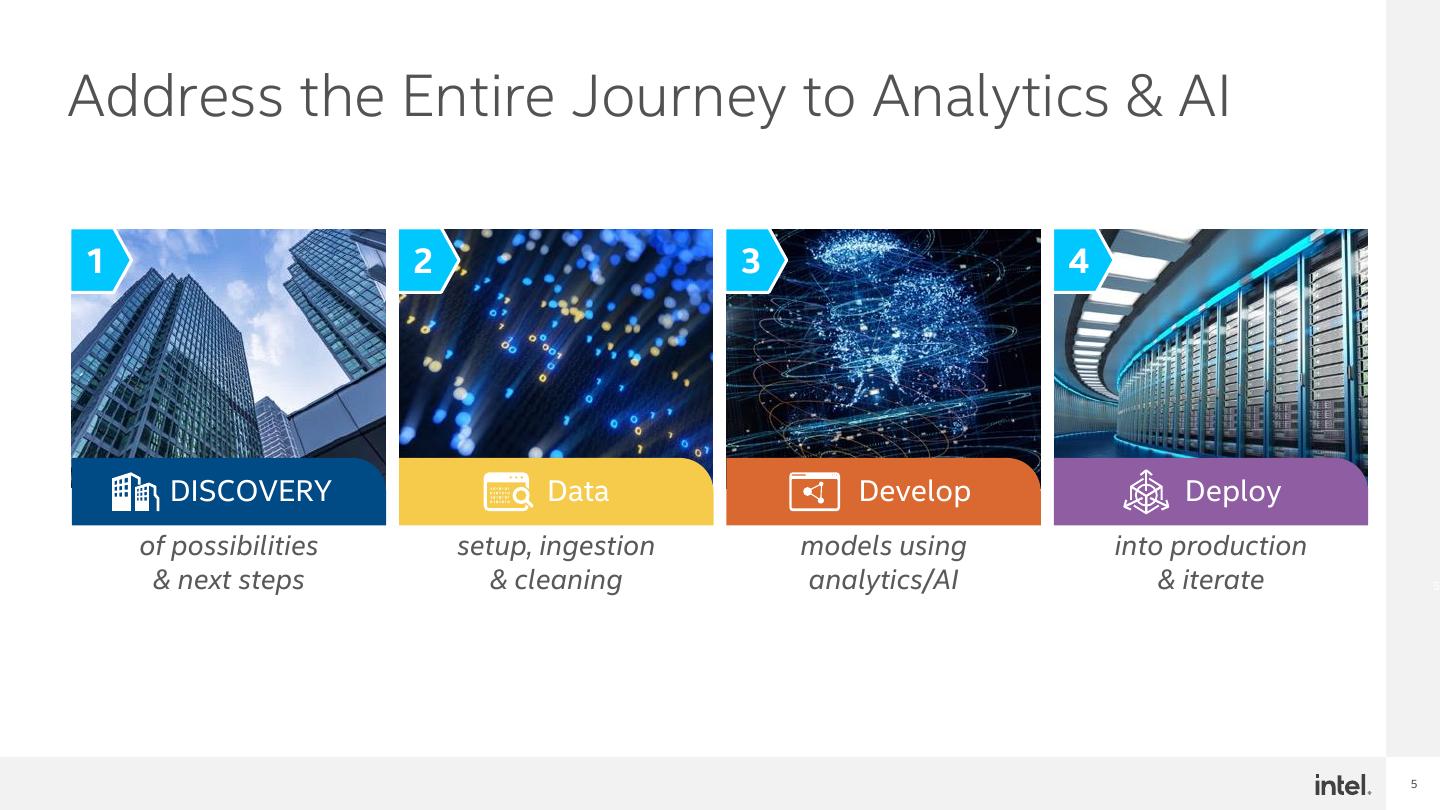

5. Address the Entire Journey to Analytics & AI

- Step 1: Discovery of possibilities & next steps
- Step 2: Data setup, ingestion & cleaning
- Step 3: Develop models using analytics/AI
- Step 4: Deploy into production & iterate

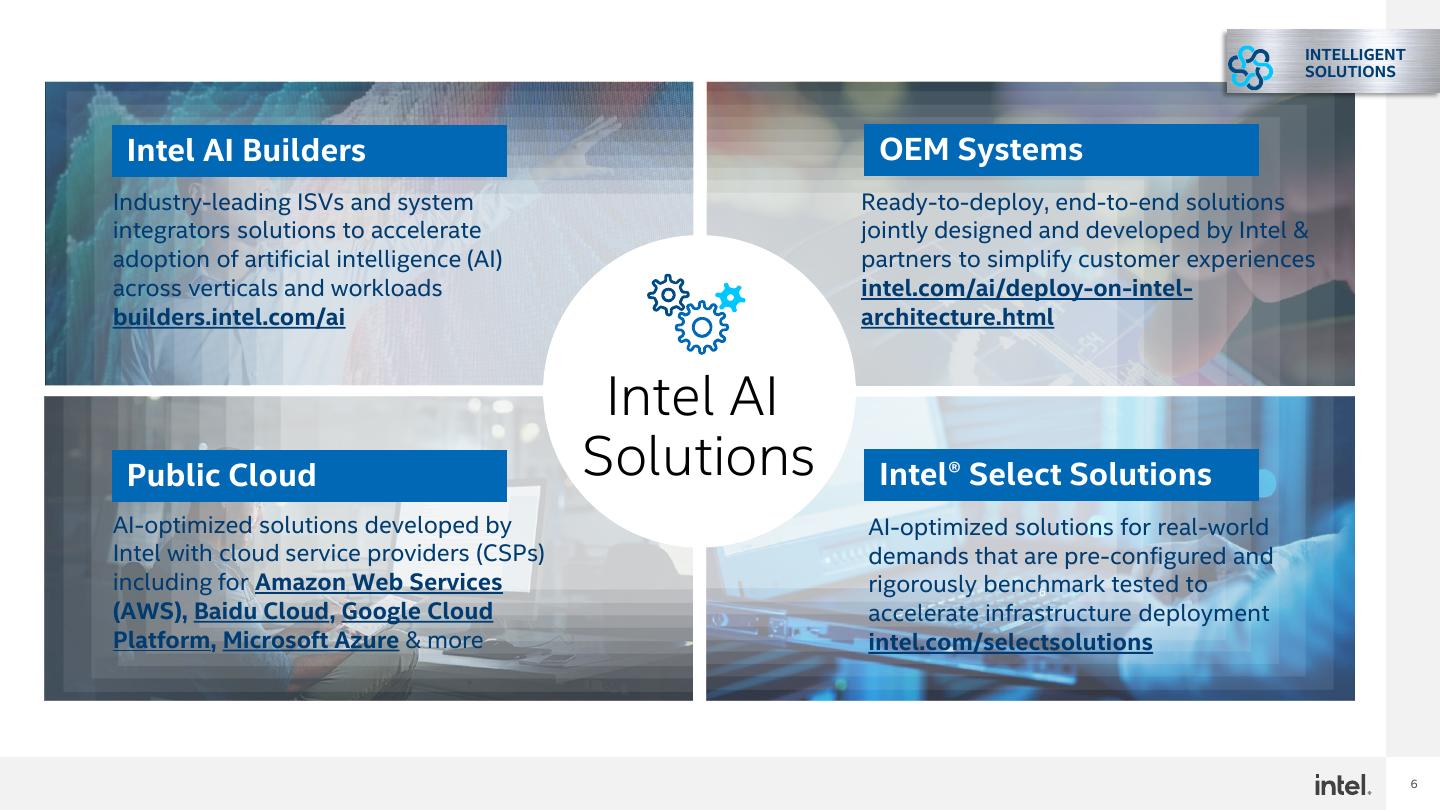

6. Intelligent Solutions

- Intel AI Builders: industry-leading ISVs and system integrators solutions to accelerate adoption of artificial intelligence (AI) across verticals and workloads. builders.intel.com/ai
- OEM Systems: ready-to-deploy, end-to-end solutions jointly designed and developed by Intel & partners to simplify customer experiences. intel.com/ai/deploy-on-intel-architecture.html
- Intel AI Public Cloud Solutions: AI-optimized solutions developed by Intel with cloud service providers (CSPs), including for Amazon Web Services (AWS), Baidu Cloud, Google Cloud Platform, Microsoft Azure & more.
- Intel® Select Solutions: AI-optimized solutions for real-world demands that are pre-configured and rigorously benchmark tested to accelerate infrastructure deployment. intel.com/selectsolutions

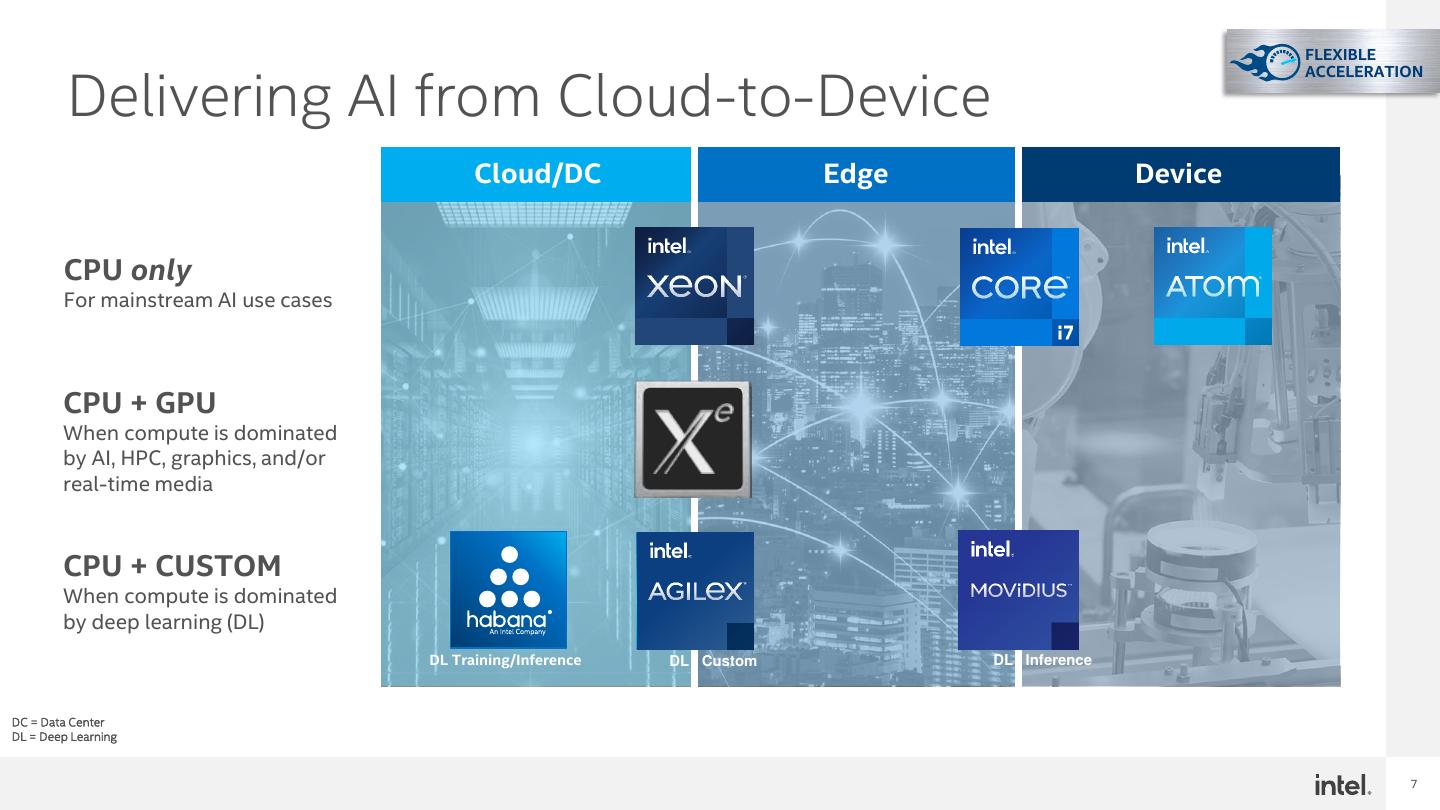

7. Flexible Acceleration: Delivering AI from Cloud-to-Device

Deployment targets: Cloud/DC, Edge, Device.

- CPU only: for mainstream AI use cases
- CPU + GPU: when compute is dominated by AI, HPC, graphics, and/or real-time media
- CPU + custom: when compute is dominated by deep learning (DL). Labels shown: DL Training/Inference, DL Custom, DL Inference

DC = Data Center, DL = Deep Learning.

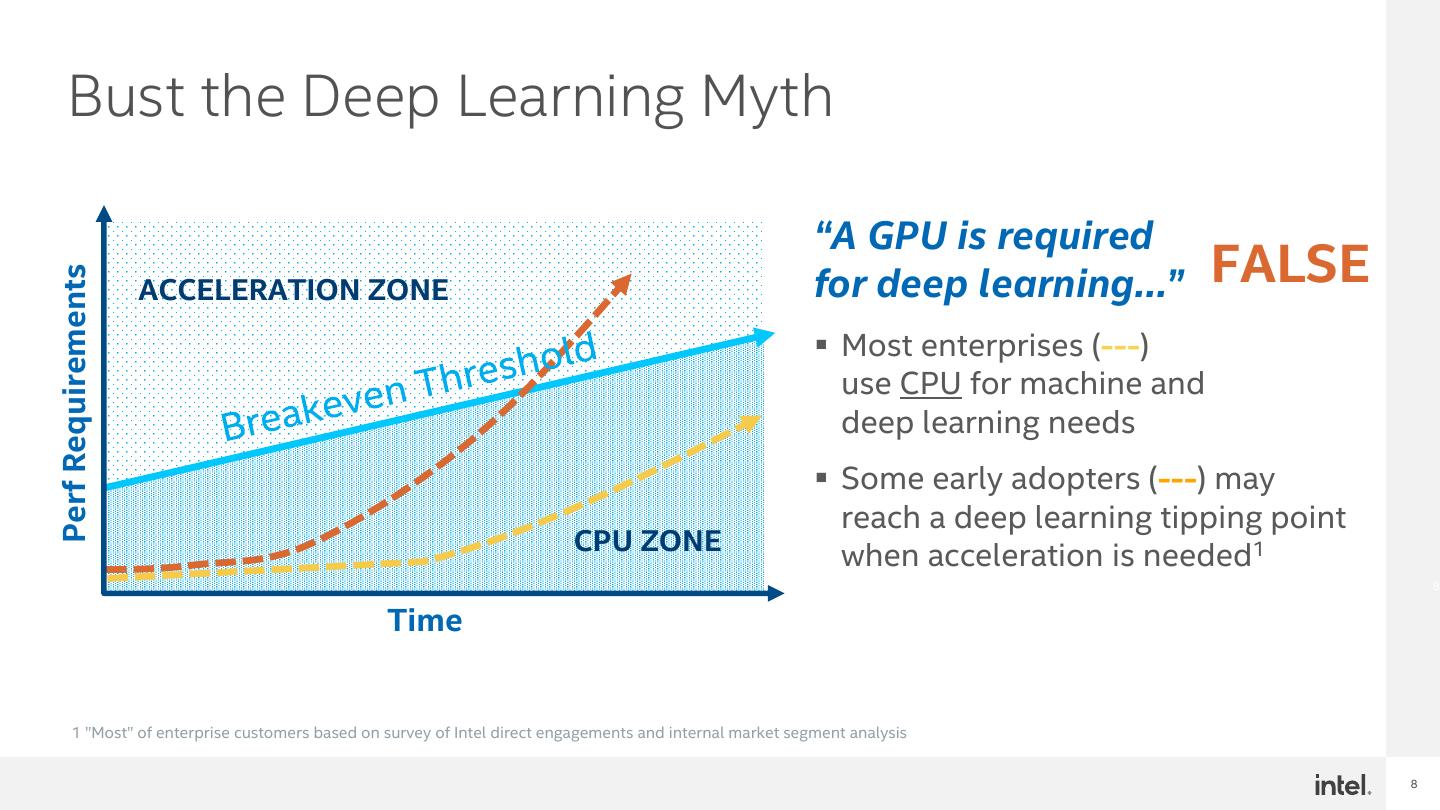

8. Bust the Deep Learning Myth

"A GPU is required for deep learning…" FALSE

- Most enterprises use CPU for machine and deep learning needs.
- Some early adopters may reach a deep learning tipping point when acceleration is needed (footnote 1).

Chart: performance requirements over time, divided into a CPU zone and an acceleration zone.

Footnote 1: "Most" of enterprise customers, based on survey of Intel direct engagements and internal market segment analysis.

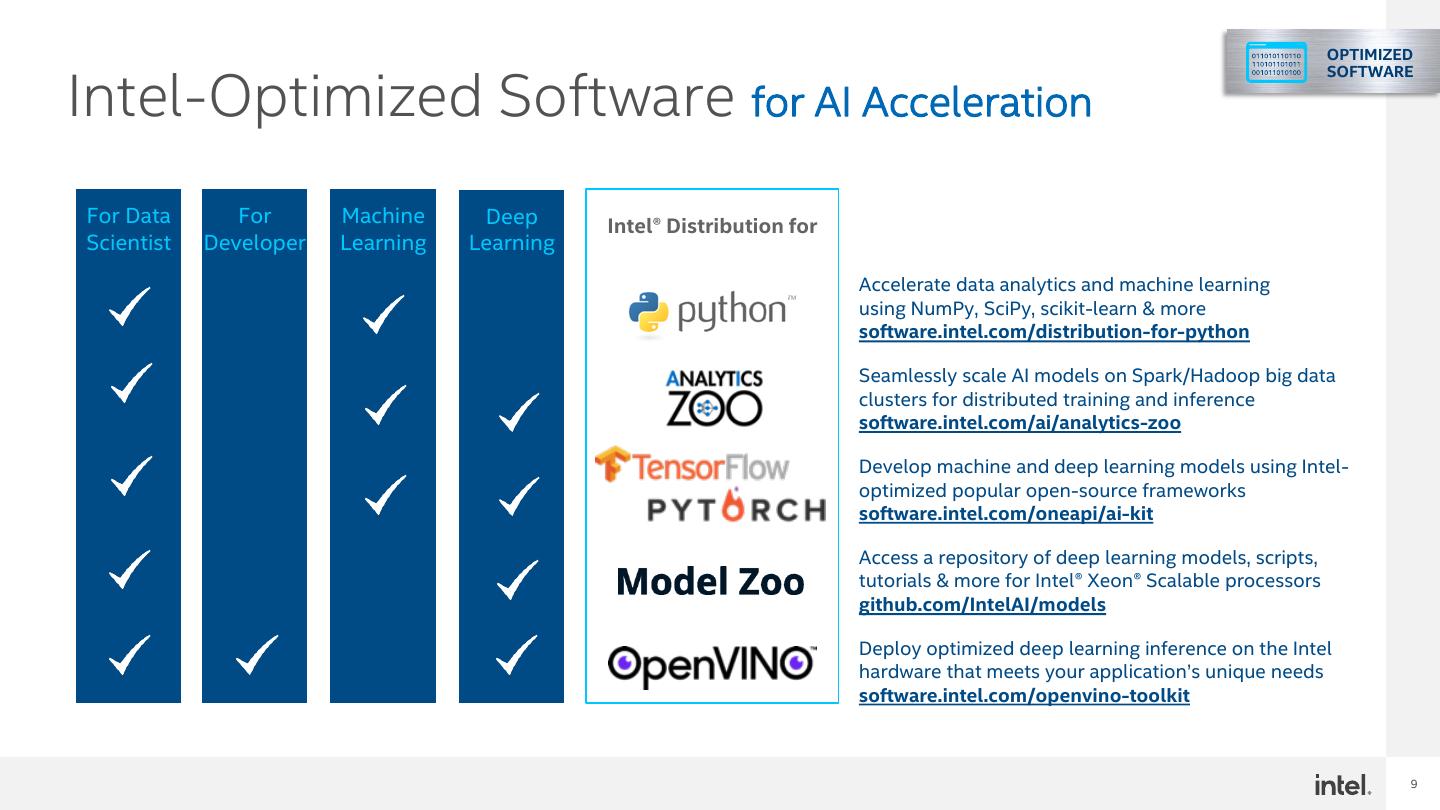

9. Optimized Software: Intel-Optimized Software for AI Acceleration

For data scientists and developers, covering machine learning and deep learning.

- Intel® Distribution for Python: accelerate data analytics and machine learning using NumPy, SciPy, scikit-learn & more. software.intel.com/distribution-for-python
- Seamlessly scale AI models on Spark/Hadoop big data clusters for distributed training and inference. software.intel.com/ai/analytics-zoo
- Develop machine and deep learning models using Intel-optimized popular open-source frameworks. software.intel.com/oneapi/ai-kit
- Access a repository of deep learning models, scripts, tutorials & more for Intel® Xeon® Scalable processors. github.com/IntelAI/models
- Deploy optimized deep learning inference on the Intel hardware that meets your application's unique needs. software.intel.com/openvino-toolkit

10. Unified APIs: Intel® oneAPI Toolkits

A single programming model to deliver cross-architecture performance.

- Intel Distribution of OpenVINO™ toolkit: deploy high-performance inference applications from device to cloud. Includes OpenCV, Intel Deep Learning Deployment Toolkit, Inference Support, Deep Learning Workbench.
- Intel AI Analytics Toolkit: develop machine and deep learning models to generate insights. Includes Intel optimization for TensorFlow, PyTorch optimized for Intel technology, Intel Distribution for Python.
- Intel oneAPI DL Framework Developer Toolkit: build deep learning frameworks or customize existing ones. Includes Intel oneAPI Collective Library, Intel oneAPI Deep Neural Network Library (oneDNN).

Architectures: CPU, GPU, FPGA, other accelerators.

*Hardware support varies by individual Intel oneAPI tool. FPGA architecture is supported only by the Intel oneAPI base toolkit. Architecture support will be expanded over time.

https://software.intel.com/oneAPI
https://software.intel.com/content/www/us/en/develop/tools/oneapi/dl-framework-developer-toolkit.html

11. CPU Infused with AI: Foundation for AI

More built-in AI acceleration & optimized topologies with each new gen.

- 2017, 1st Gen: Intel® Advanced Vector Extensions 512 (Intel AVX-512)
- 2019, 2nd Gen: Intel Deep Learning Boost (with VNNI)
- 2020, 3rd Gen: Intel Deep Learning Boost (VNNI, BF16)
- 2021, Next Gen: Intel Deep Learning Boost (AMX)

Chart: AI performance rising across generations, with optimized topologies growing from 24 to 44 to 100+, on a base of optimized libraries and frameworks.
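The BF16 format named on this slide keeps the sign bit, the 8 exponent bits, and the top 7 mantissa bits of an IEEE 754 float32. The sketch below illustrates that layout in plain Python. It is an illustration of the number format only, not Intel's hardware or library implementation; the function names are made up for this example, and NaN inputs are not handled.

```python
import struct


def float32_to_bf16_bits(x: float) -> int:
    """Return the 16-bit bfloat16 pattern for x (round to nearest even).

    Hypothetical helper for illustration; NaN inputs are not handled.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    # Add a rounding bias so that dropping the low 16 bits rounds to nearest even.
    rounding_bias = 0x7FFF + ((bits >> 16) & 1)
    return ((bits + rounding_bias) >> 16) & 0xFFFF


def bf16_bits_to_float32(b: int) -> float:
    """Expand a 16-bit bfloat16 pattern back to a float by zero-filling the low bits."""
    return struct.unpack("<f", struct.pack("<I", (b & 0xFFFF) << 16))[0]


one_bits = float32_to_bf16_bits(1.0)
pi_roundtrip = bf16_bits_to_float32(float32_to_bf16_bits(3.14159))  # 3.140625
```

The round trip keeps the float32 range but only about two to three significant decimal digits, which is the trade-off BF16 makes for deep learning workloads.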

12. AI on Xeon use cases in different domains

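13. A company's AIOps case

- Network trend: from traditional networks to SDN, which provides network automation capabilities.
- Problem: networks are growing in scale, network changes are becoming more frequent, and the internal complexity of the network is increasing.
- The network needs to support services more intelligently.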

14. Network architecture

Components shown: AIOps service center, network controller, analyzer, automation, network infrastructure.

15. Test environment

- Hardware:
  - CPU: 2 × Intel(R) Xeon(R) Gold 6240 CPU @ 2.60GHz
  - Memory: 192 GB
- Software:
  - CentOS Linux release 7.4.1708 (Core)
  - Intel Python3
  - Intel Optimized tensorflow 1.13.1
  - AI algorithm: LSTM
- Workload:
  - Training: IMDB movie review sentiment validation
  - Inference: server CPU, memory, and storage utilization validation
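The slides do not say which runtime settings were used with the Intel-optimized TensorFlow build. As a rough sketch only, the helper below derives the kind of threading settings that are commonly used as a starting point for such builds on a dual-socket machine: the OpenMP variables `OMP_NUM_THREADS`, `KMP_BLOCKTIME`, and `KMP_AFFINITY`, plus TensorFlow's intra-op and inter-op thread counts. The function name and the chosen values are assumptions, and so is the figure of 18 physical cores per socket for the Gold 6240, which is not stated on the slide.

```python
def intel_tf_thread_settings(sockets: int, cores_per_socket: int) -> dict:
    """Suggest starting-point threading settings (hypothetical helper).

    These are tuning starting points, not the settings used in the test above.
    """
    physical_cores = sockets * cores_per_socket
    return {
        "env": {
            # One OpenMP thread per physical core.
            "OMP_NUM_THREADS": str(physical_cores),
            # Milliseconds a thread waits after a parallel region before sleeping.
            "KMP_BLOCKTIME": "1",
            # Pin threads to cores to limit migration between cores.
            "KMP_AFFINITY": "granularity=fine,verbose,compact,1,0",
        },
        # Threads used inside a single operator such as a matrix multiply.
        "intra_op_parallelism_threads": physical_cores,
        # Independent operators run concurrently; one per socket is a common start.
        "inter_op_parallelism_threads": sockets,
    }


# Assumption: 2 sockets with 18 physical cores each.
settings = intel_tf_thread_settings(sockets=2, cores_per_socket=18)
```

In TensorFlow 1.x the two thread counts would typically be passed through `tf.ConfigProto`, and the environment variables exported before the process starts. The best values depend on the model and batch size, so they should be measured rather than assumed.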

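16. Test model description

- Test model: IMDB movie review sentiment validation
  - Purpose: training performance
  - Algorithm: LSTM
  - Black/white list dataset
  - The dataset contains 20,000 black samples and 30,000 white-list samples; 10,000 records are used as the test set. The labels are pos (positive) and neg (negative).
- Test model: server CPU, memory, and storage utilization validation
  - Purpose: inference performance
  - Utilization dataset
  - The dataset contains 432 records of monthly data (CPU, memory, storage) from 2016 to 2018; 20% is used as the test set and 80% as the training set.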
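The 80/20 split of the 432 utilization records can be sketched as follows. The slide does not say whether the split was chronological or random, nor how the series was framed for the LSTM, so both the chronological cut and the sliding-window framing here are assumptions, and the data is a synthetic placeholder rather than the real dataset.

```python
from typing import List, Sequence, Tuple


def chronological_split(records: Sequence[float],
                        test_fraction: float = 0.2) -> Tuple[List[float], List[float]]:
    """Keep the earliest records for training and the latest for testing."""
    n_test = round(len(records) * test_fraction)
    cut = len(records) - n_test
    return list(records[:cut]), list(records[cut:])


def make_windows(series: Sequence[float],
                 window: int) -> List[Tuple[List[float], float]]:
    """Build (inputs, target) pairs: `window` past values predict the next value."""
    return [
        (list(series[i:i + window]), series[i + window])
        for i in range(len(series) - window)
    ]


# Placeholder values standing in for 432 utilization readings.
records = [float(i % 100) for i in range(432)]
train, test = chronological_split(records)
train_pairs = make_windows(train, window=12)  # window length is an arbitrary choice
```

With 432 records this gives 346 training and 86 test records. A chronological cut avoids leaking future values into training, which matters for time series but may not match what was done in the original test.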

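17. Intel optimization results

Chart: deep learning speedup of the Intel-optimized solution on CLX6240 (higher is better).

- Models: IMDB movie review sentiment validation model (training speedup); server utilization validation model (inference speedup)
- Series: CLX6240(tensorflow_base), CLX6240(tensorflow_intel_opt)
- Data labels: 1 and 1 for the baseline; 5.9 and 3.2 for the Intel-optimized build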
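A speedup ratio of this kind is normally the baseline run time divided by the optimized run time for the same workload, with the baseline normalized to 1. The sketch below shows that arithmetic. The timings are made-up placeholders, not measurements from this test, and the slides do not state whether the chart was derived from run time or throughput.

```python
def speedup(baseline_seconds: float, optimized_seconds: float) -> float:
    """Return how many times faster the optimized run is than the baseline."""
    if baseline_seconds <= 0 or optimized_seconds <= 0:
        raise ValueError("timings must be positive")
    return baseline_seconds / optimized_seconds


# Hypothetical timings for one workload on the same machine.
baseline_time = 120.0   # seconds with the stock framework build (placeholder)
optimized_time = 40.0   # seconds with the optimized build (placeholder)
ratio = speedup(baseline_time, optimized_time)
```

When throughput is measured instead, the ratio is inverted: optimized samples per second divided by baseline samples per second.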

18. Deployment

- After deployment at a university in China, the WiFi online rate rose from 70% to over 90%.

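19. Summary

1) Intel provides end-to-end AI hardware solutions (Atom, VPU, Core, GPU, Xeon, FPGA, Habana) together with a unified software interface.
2) Xeon is a good fit for inference applications that have strict real-time requirements but do not need high concurrency.
3) Because of its flexibility, Xeon is very well suited to AIOps applications.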

20. Thank you
