2. Jason Dai (戴金权): Seamlessly Scale Big Data AI from Laptops to Cloud

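Seamlessly Scale Big Data AI from Laptops to Cloud. Jason Dai (戴金权), Intel Fellow, Chief Architect of Big Data AI, and Head of the Big Data Analytics and AI Innovation Institute.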


1. Seamlessly Scale Big Data AI from Laptops to Cloud. Jason Dai (戴金权), Intel Fellow

2. Intel's Open Source Big Data AI Project
- Domain-Specific Toolkits
  - Chronos: Time Series
  - Friesian: Recommendation System
  - PPML: Privacy Preserving Machine Learning
- End-to-End Distributed AI
  - Orca: E2E Distributed AI Pipelines (TensorFlow / PyTorch / OpenVINO / Ray)
  - DLlib: Distributed Deep Learning Library for Apache Spark
  - Nano: Integration and Abstraction of IA-specific Accelerations
- Platforms: Laptop, K8s, Apache Hadoop/Spark, Ray, Cloud

https://github.com/intel-analytics/BigDL/

- "BigDL 2.0: Seamless Scaling of AI Pipelines from Laptops to Distributed Cluster", 2022 Conference on Computer Vision and Pattern Recognition (CVPR 2022)
- "BigDL: A Distributed Deep Learning Framework for Big Data", ACM Symposium on Cloud Computing 2019 (SoCC'19)

3. Intel's Open Source Big Data AI Project
- Secure Big Data AI Framework: PPML (Privacy Preserving Machine Learning)
- Time Series and Recommendation System Frameworks: Chronos, Friesian
- Scalable, Distributed Big Data AI System: Orca (E2E Distributed AI Pipelines on TensorFlow / PyTorch / OpenVINO / Ray), DLlib (Distributed Deep Learning Library for Apache Spark), Nano (Integration and Abstraction of IA-specific Accelerations)
- Platforms: Laptop, K8s, Apache Hadoop/Spark, Ray, Cloud

4. Seamlessly scaling big data AI from laptop to cloud requires: Scalability, Performance, Security

5. Scalability / Performance / Security

6. Unified Big Data AI Infrastructure
- Traditional, segregated infrastructure: a Big Data Cluster on the Distributed Data Lake / File System / Warehouse passes Intermediate Files to a separate Deep Learning Cluster.
- Unified infrastructure (using BigDL): a single Unified Big Data AI Cluster (Driver Node plus Worker Nodes) runs directly on the Distributed Data Lake / File System / Warehouse, deployed on K8s, YARN, Ray, Standalone, or Cloud.

7. Building an End-to-End Distributed Data + AI Pipeline
- Distributed data processing: distributed Python APIs in Orca for Spark DataFrame, Ray Dataset, TensorFlow Dataset, PyTorch DataLoader, PyData (pandas, sklearn, numpy, ...) and PyImage (pillow, opencv, ...)
- ML/DL model: standard TensorFlow/PyTorch APIs for building models
- Distributed training (and inference): sklearn-style APIs for transparently distributed training and inference

```python
# `spark`, `data_source_path`, `input`, `output`, `args`, `trainingDF`,
# `batch_size` and `max_epoch` are defined elsewhere in the original example.

# 1. Distributed data processing using Spark DataFrame
raw_df = spark.read.format("csv").load(data_source_path) \
    .select("Cardholder Last Name", "Cardholder First Initial",
            "Amount", "Vendor", "Year-Month")

# 2. Building the model using standard TensorFlow APIs
import tensorflow as tf
# ... (model layers elided on the slide)
model = tf.keras.models.Model(inputs=input, outputs=output)
model.compile(optimizer='rmsprop',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])

# 3. Distributed training on Orca (Analytics Zoo package path, as in the example)
from zoo.orca.learn.tf.estimator import Estimator
est = Estimator.from_keras(model, model_dir=args.log_dir)
est.fit(data=trainingDF, batch_size=batch_size, epochs=max_epoch,
        feature_cols=['features'], label_cols=['labels'])  # ... (further arguments elided)
```

https://github.com/Mastercard/udap-analytic-zoo-examples
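The sklearn-style `fit` call above hides how training is distributed. As a rough mental model only (this is not Orca's implementation), synchronous data-parallel training splits the data into shards, lets each worker compute a gradient on its own shard, and averages the gradients before every update. The sketch below simulates this in plain Python for a one-feature linear model; `DataParallelEstimator`, `shard` and `local_gradient` are hypothetical names.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[float, float]  # (feature, label)


def shard(data: Sequence[Sample], num_workers: int) -> List[List[Sample]]:
    """Round-robin split of the dataset, one shard per simulated worker."""
    return [list(data[i::num_workers]) for i in range(num_workers)]


def local_gradient(w: float, b: float, part: List[Sample]) -> Tuple[float, float, int]:
    """Summed squared-error gradients over one shard (the work done on a worker)."""
    gw = gb = 0.0
    for x, y in part:
        err = (w * x + b) - y
        gw += 2 * err * x
        gb += 2 * err
    return gw, gb, len(part)


class DataParallelEstimator:
    """Hypothetical sklearn-style estimator; NOT the Orca Estimator API."""

    def __init__(self, num_workers: int = 4, lr: float = 0.1):
        self.num_workers = num_workers
        self.lr = lr
        self.w = 0.0
        self.b = 0.0

    def fit(self, data: Sequence[Sample], epochs: int = 1000) -> "DataParallelEstimator":
        shards = shard(data, self.num_workers)
        for _ in range(epochs):
            # Each worker computes a gradient on its own shard ...
            results = [local_gradient(self.w, self.b, s) for s in shards]
            # ... then the partial gradients are summed (an "all-reduce") and averaged.
            n = sum(r[2] for r in results)
            gw = sum(r[0] for r in results) / n
            gb = sum(r[1] for r in results) / n
            # Every replica applies the same update, so the weights stay in sync.
            self.w -= self.lr * gw
            self.b -= self.lr * gb
        return self

    def predict(self, xs: Sequence[float]) -> List[float]:
        return [self.w * x + self.b for x in xs]
```

Because the gradient is averaged over all samples, the result matches single-machine full-batch gradient descent whatever `num_workers` is. That equivalence is what lets the same `fit` call scale out without touching the model code.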

8. "AI at Scale" in Mastercard with BigDL
- Data: 100s of billions of transaction records and 2.2B users on a Hadoop cluster
- ETL and feature engineering with DataFrame/SQL, producing training data in Parquet
- Distributed training on YARN (~5 hours training) of models such as NCF and Auto Encoder (…)
- Distributed inference (100s of Python servers)

https://www.intel.com/content/www/us/en/developer/articles/technical/ai-at-scale-in-mastercard-with-bigdl0.html

9. Scalability / Performance / Security

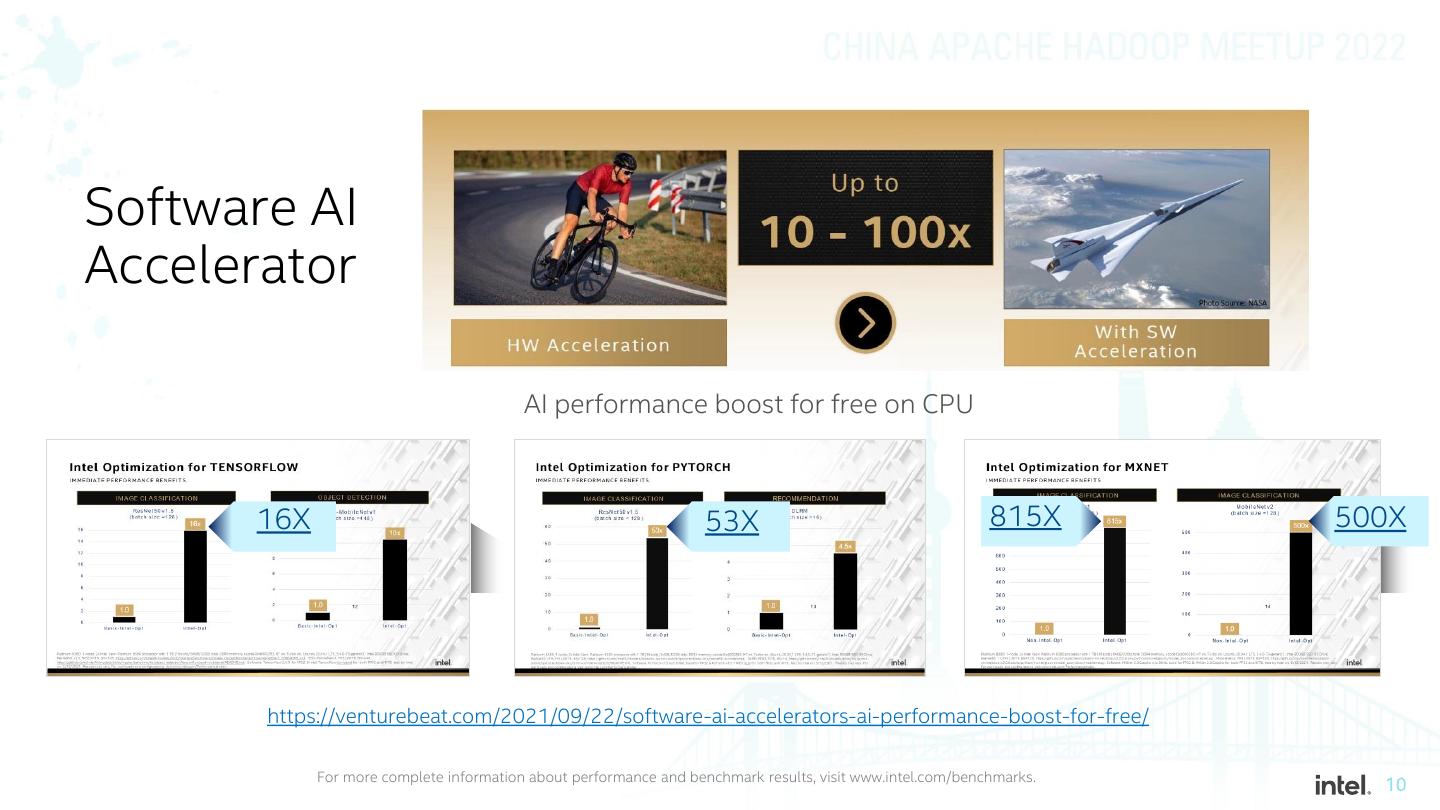

10. Software AI Accelerator: AI performance boost for free on CPU. Reported speedups: 16X, 53X, 815X, 500X.

https://venturebeat.com/2021/09/22/software-ai-accelerators-ai-performance-boost-for-free/

For more complete information about performance and benchmark results, visit www.intel.com/benchmarks.

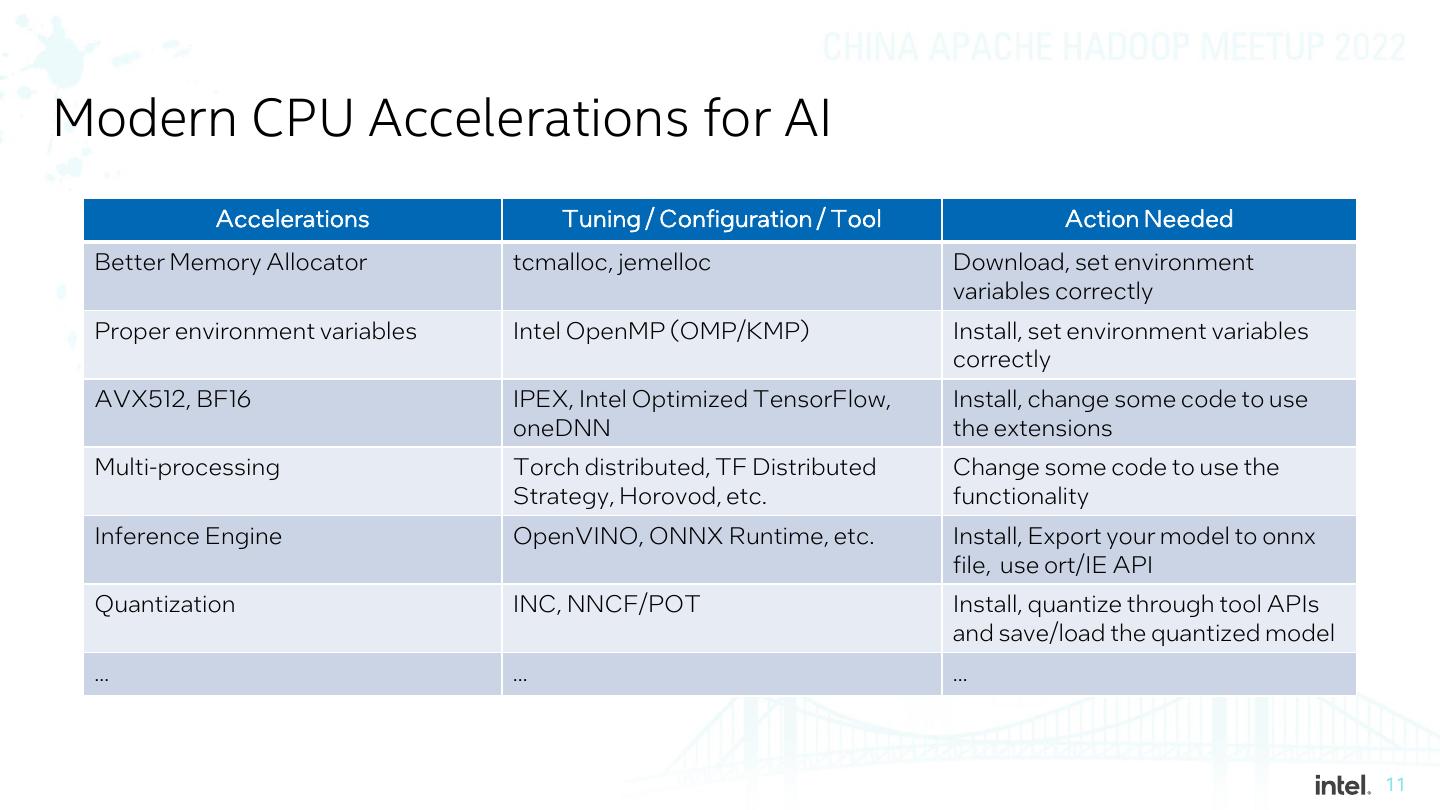

11. Modern CPU Accelerations for AI

| Acceleration | Tuning / Configuration / Tool | Action Needed |
|---|---|---|
| Better memory allocator | tcmalloc, jemalloc | Download, set environment variables correctly |
| Proper environment variables | Intel OpenMP (OMP/KMP) | Install, set environment variables correctly |
| AVX512, BF16 | IPEX, Intel Optimized TensorFlow, oneDNN | Install, change some code to use the extensions |
| Multi-processing | Torch distributed, TF Distributed Strategy, Horovod, etc. | Change some code to use the functionality |
| Inference engine | OpenVINO, ONNX Runtime, etc. | Install, export your model to an ONNX file, use the ORT/IE API |
| Quantization | INC, NNCF/POT | Install, quantize through the tool APIs, and save/load the quantized model |
| … | … | … |
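Several rows of the table come down to setting the process environment correctly before the framework starts, since the dynamic loader and the OpenMP runtime read these variables at startup. A minimal sketch, assuming a Linux host: the helper `build_cpu_env` is hypothetical, and the library paths passed to it are placeholders for wherever jemalloc/tcmalloc and Intel OpenMP are installed. `OMP_NUM_THREADS` is standard OpenMP, `KMP_AFFINITY` and `KMP_BLOCKTIME` are read by Intel OpenMP, and `LD_PRELOAD` makes the loader pick up the allocator (and OpenMP runtime) ahead of the defaults. The values are common starting points, not tuned recommendations.

```python
import os
from typing import Dict, Optional


def build_cpu_env(physical_cores: int,
                  allocator_lib: Optional[str] = None,
                  iomp_lib: Optional[str] = None,
                  base: Optional[Dict[str, str]] = None) -> Dict[str, str]:
    """Return a copy of `base` (default: os.environ) with CPU-tuning variables set."""
    env = dict(os.environ if base is None else base)

    # Preload a faster allocator and/or Intel OpenMP (paths are placeholders).
    preload = [p for p in (allocator_lib, iomp_lib) if p]
    if env.get("LD_PRELOAD"):
        preload.append(env["LD_PRELOAD"])  # keep anything already preloaded
    if preload:
        env["LD_PRELOAD"] = ":".join(preload)

    # One OpenMP thread per physical core avoids oversubscribing hyper-threads.
    env["OMP_NUM_THREADS"] = str(physical_cores)
    # Intel OpenMP: pin threads compactly to cores, let idle threads sleep quickly.
    env["KMP_AFFINITY"] = "granularity=fine,compact,1,0"
    env["KMP_BLOCKTIME"] = "1"
    return env
```

The returned dict would be passed to a fresh process, e.g. `subprocess.run(["python", "train.py"], env=build_cpu_env(16, "/path/to/libjemalloc.so"))`, where `train.py` and the path are again hypothetical. Setting the variables inside an already-running Python process is generally too late for `LD_PRELOAD`.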

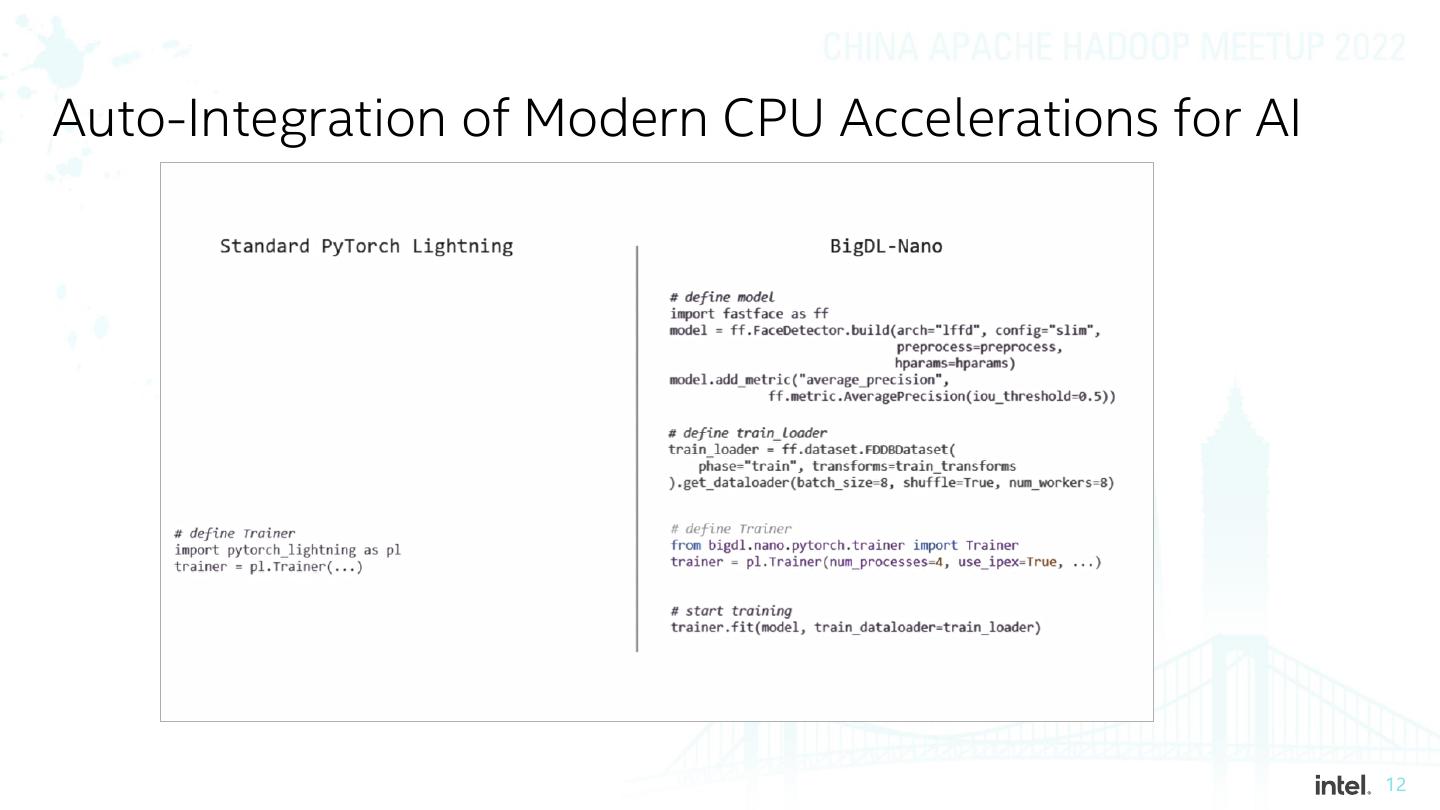

12. Auto-Integration of Modern CPU Accelerations for AI
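The point of auto-integration is that users should not have to try each row of the table by hand. One way to picture it (a hypothetical sketch, not BigDL Nano's implementation): run every candidate variant of a model on a sample input, discard any whose output drifts from the baseline beyond a tolerance, and keep the fastest of the rest. `pick_fastest` and the three stand-in models below are made up for illustration.

```python
import math
import time
from typing import Callable, Dict, List, Tuple

Model = Callable[[List[float]], List[float]]


def pick_fastest(candidates: Dict[str, Model], baseline: str,
                 sample: List[float], repeats: int = 5,
                 tol: float = 1e-3) -> Tuple[str, Dict[str, float]]:
    """Benchmark each candidate on `sample`; return the fastest accurate one."""
    reference = candidates[baseline](sample)
    timings: Dict[str, float] = {}
    for name, fn in candidates.items():
        out = fn(sample)  # warm-up run, also used for the accuracy check
        if len(out) != len(reference) or any(
                abs(a - b) > tol for a, b in zip(out, reference)):
            continue  # reject variants whose outputs drift too far
        start = time.perf_counter()
        for _ in range(repeats):
            fn(sample)
        timings[name] = (time.perf_counter() - start) / repeats
    best = min(timings, key=timings.get)
    return best, timings


# Hypothetical stand-ins for "original" and "accelerated" model variants.
def original_model(xs: List[float]) -> List[float]:
    time.sleep(0.01)  # simulate a slow, unoptimized path
    return [math.tanh(x) for x in xs]


def accelerated_model(xs: List[float]) -> List[float]:
    return [math.tanh(x) for x in xs]


def lossy_model(xs: List[float]) -> List[float]:
    # Simulates an over-aggressive optimization that loses precision.
    return [round(math.tanh(x), 1) for x in xs]
```

Called as `pick_fastest({"original": original_model, "accelerated": accelerated_model, "lossy": lossy_model}, "original", [0.1, 0.5, 1.0])`, it should reject `lossy` on accuracy and pick `accelerated` on speed. Checking accuracy before timing keeps a fast but wrong variant from ever winning.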

13. Scalability / Performance / Security

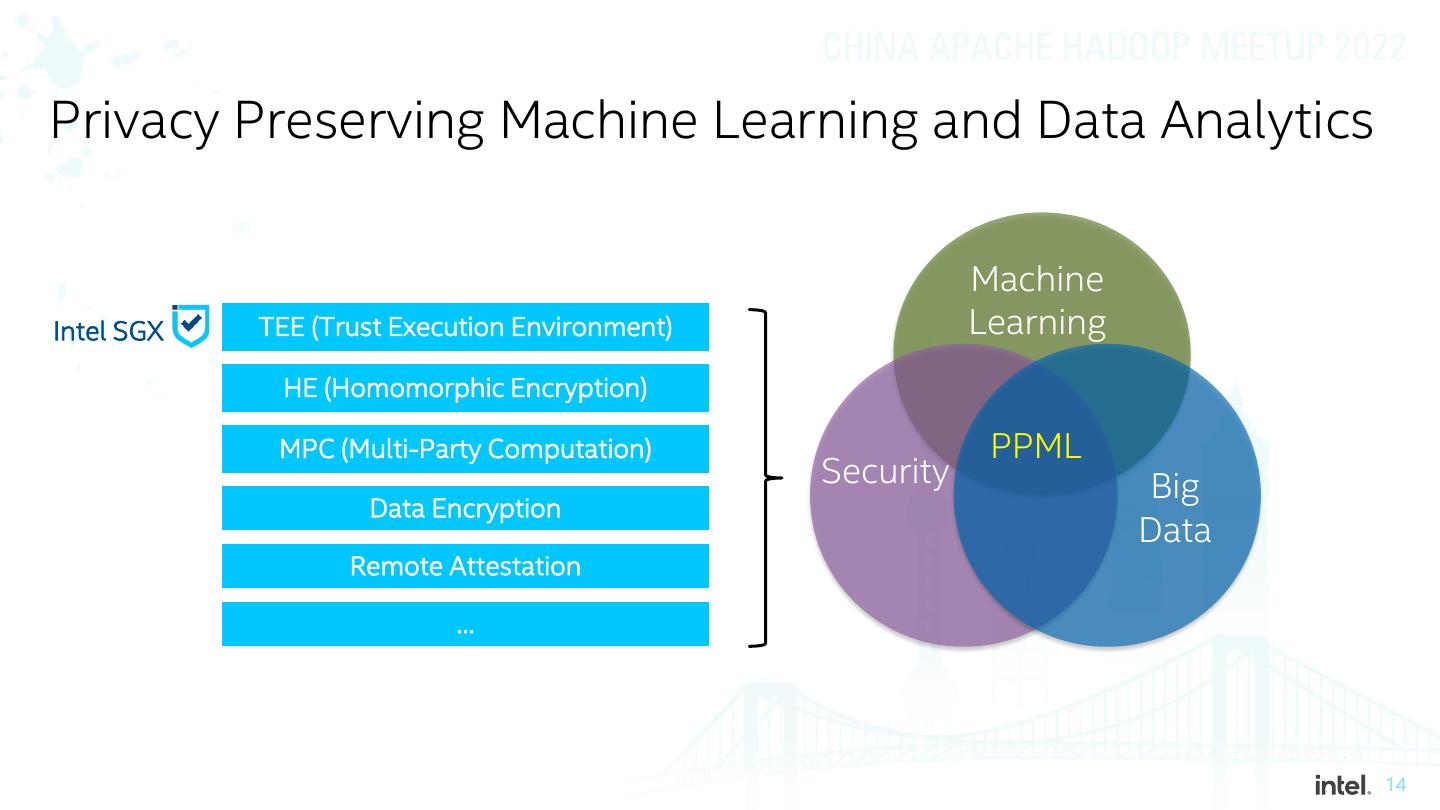

14. Privacy Preserving Machine Learning and Data Analytics

PPML (Privacy Preserving Machine Learning) brings together Machine Learning, Big Data, and Security. Techniques include:
- Intel SGX TEE (Trusted Execution Environment)
- HE (Homomorphic Encryption)
- MPC (Multi-Party Computation)
- Data Encryption
- Remote Attestation
- …
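Of the techniques listed, MPC is the easiest to show in a few lines. The sketch below is a textbook additive secret-sharing scheme over a prime field, assuming honest-but-curious parties. It is a generic illustration, not part of BigDL PPML (which builds on Intel SGX). Each party splits its private value into random shares, each share-holder adds up the shares it receives, and only the combined total reveals the sum, never an individual input. The modulus `P` and the function names are assumptions.

```python
import secrets
from typing import List

P = 2**61 - 1  # a Mersenne prime; all arithmetic is modulo P


def share(value: int, n_parties: int) -> List[int]:
    """Split `value` into n random shares that sum to `value` mod P."""
    shares = [secrets.randbelow(P) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % P)
    return shares


def secure_sum(private_values: List[int]) -> int:
    """Simulate n parties computing the sum of their private values."""
    n = len(private_values)
    # Row i holds the shares party i sends out; party j receives column j.
    all_shares = [share(v, n) for v in private_values]
    # Party j locally adds the j-th share it received from every party.
    partial_sums = [sum(row[j] for row in all_shares) % P for j in range(n)]
    # Publishing the partial sums reveals only the total.
    return sum(partial_sums) % P
```

Any n-1 shares of a value are uniformly random, so no single share-holder learns another party's input; only the final recombination exposes the aggregate.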

15. BigDL PPML: Hardware (SGX) Protected Big Data AI for Confidential Computing

Trusted Cluster Environment for Big Data AI: a Driver Node and Worker Nodes on K8s (on-prem or cloud), over a Distributed Data Lake / Storage / Warehouse.
- Standard, distributed AI applications on encrypted data
- Hardware (Intel SGX) protected computation (and memory)
- End-to-end confidential computing enabled for the entire workflow
- Provisioning and attestation of a "trusted cluster environment" on K8s (of SGX nodes)
- Secret key management through KMS for distributed data decryption/encryption
- Secure distributed compute and communication (via SGX, encryption, TLS, etc.)
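The KMS bullet is about giving each worker the keys it needs for its share of the encrypted data. As an illustration only, the sketch below swaps in HKDF (RFC 5869, built from the standard library's `hmac`) to derive a distinct key per data partition from one primary key, so a worker can receive keys for its own partitions without ever seeing the primary key. BigDL PPML's actual KMS integration is not shown here; `partition_key`, the salt, and the label format are hypothetical.

```python
import hashlib
import hmac


def hkdf_extract(salt: bytes, ikm: bytes) -> bytes:
    """HKDF-Extract (RFC 5869) with HMAC-SHA256; empty salt means 32 zero bytes."""
    return hmac.new(salt or b"\x00" * 32, ikm, hashlib.sha256).digest()


def hkdf_expand(prk: bytes, info: bytes, length: int = 32) -> bytes:
    """HKDF-Expand (RFC 5869): T(i) = HMAC(PRK, T(i-1) | info | i)."""
    if length > 255 * 32:
        raise ValueError("requested key too long")
    okm, block, counter = b"", b"", 1
    while len(okm) < length:
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


def partition_key(primary_key: bytes, dataset: str, partition: int) -> bytes:
    """Hypothetical per-partition data key, bound to dataset name and index."""
    prk = hkdf_extract(b"example-salt", primary_key)
    return hkdf_expand(prk, f"{dataset}/part-{partition:05d}".encode(), 32)
```

Deriving keys is only part of the picture: each derived key would still be used with an authenticated cipher (such as AES-GCM from a vetted library), and the primary key itself stays inside the KMS or the SGX enclave.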

16. Summary
- BigDL (https://github.com/intel-analytics/bigdl)
  - Distributed Big Data AI pipelines (seamless scaling and transparent acceleration)
  - Privacy preserving Big Data AI for confidential computing (on Intel SGX)
- Technical papers/tutorials
  - CVPR 2022 paper: https://arxiv.org/abs/2204.01715
  - CVPR 2021 tutorial: https://jason-dai.github.io/cvpr2021/
  - ACM SoCC 2019 paper: https://arxiv.org/abs/1804.05839

Please attend Sub-forum 4: Machine Learning, 15:00-15:30, "Building and Scaling Large-Scale End-to-End AI Applications with BigDL".
