- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 视频嵌入链接 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

Optimizing Delta - Parquet Data Lakes for Apache Spark

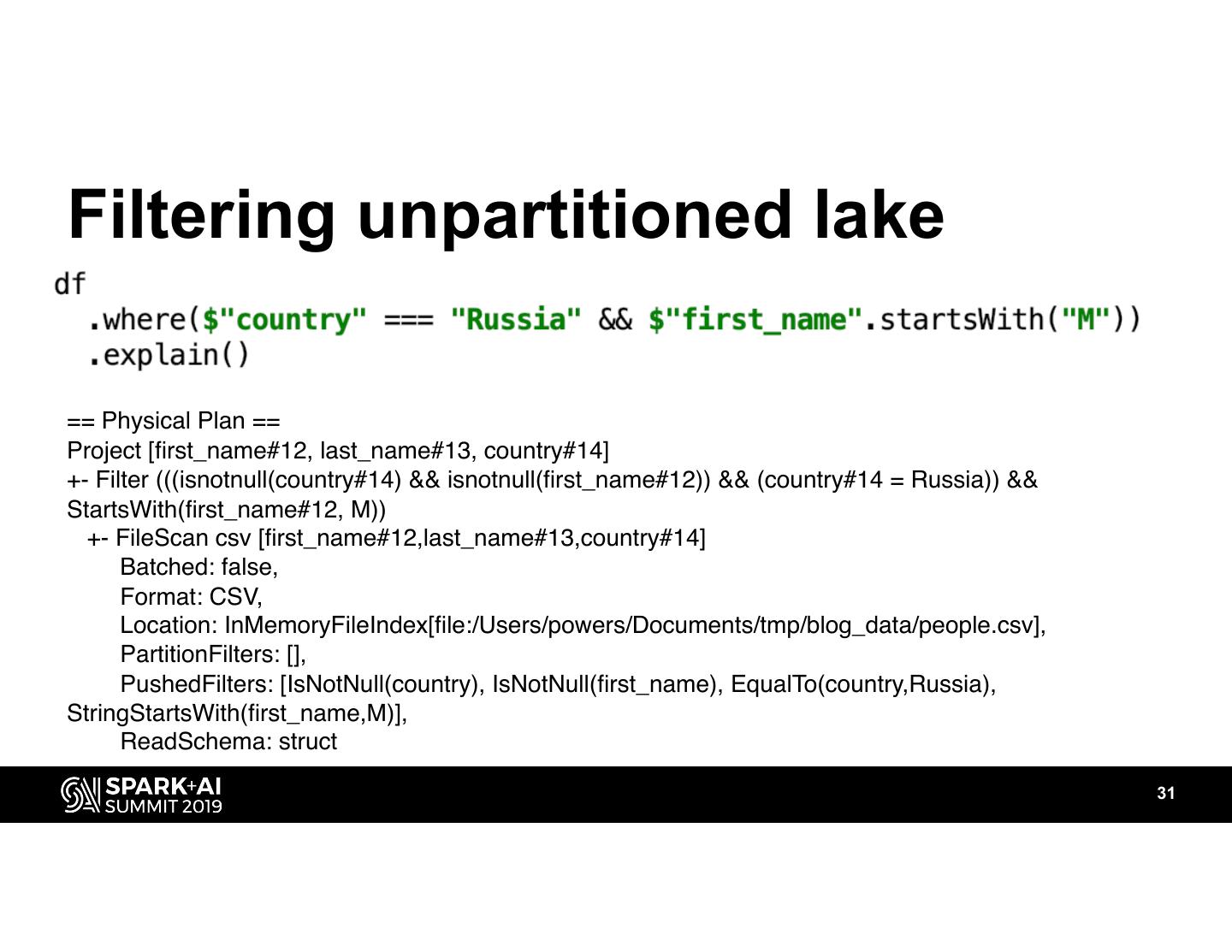

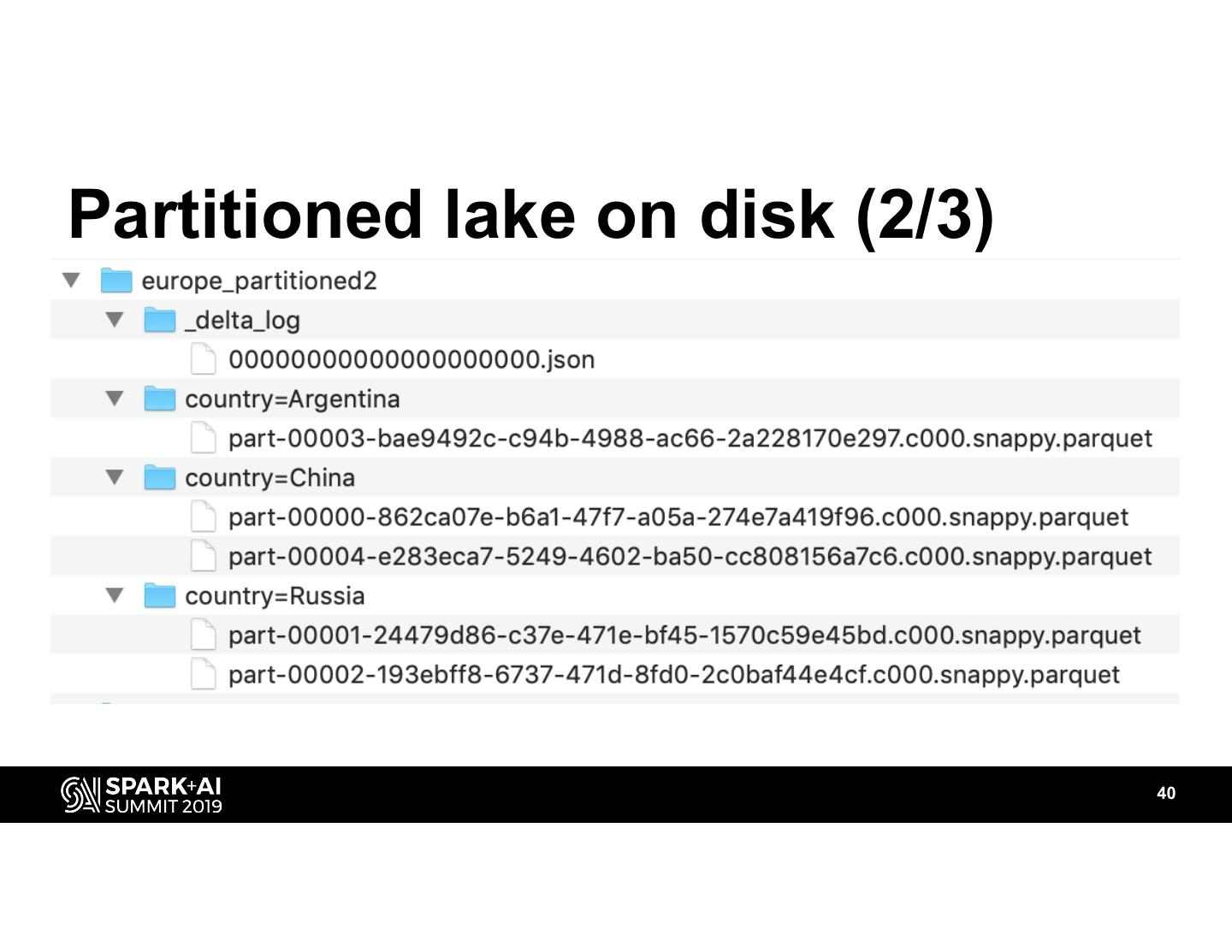

This talk will start by explaining the optimal file format, compression algorithm, and file size for plain vanilla Parquet data lakes. It discusses the small file problem and how you can compact the small files. Then we will talk about partitioning Parquet data lakes on disk and how to examine Spark physical plans when running queries on a partitioned lake.

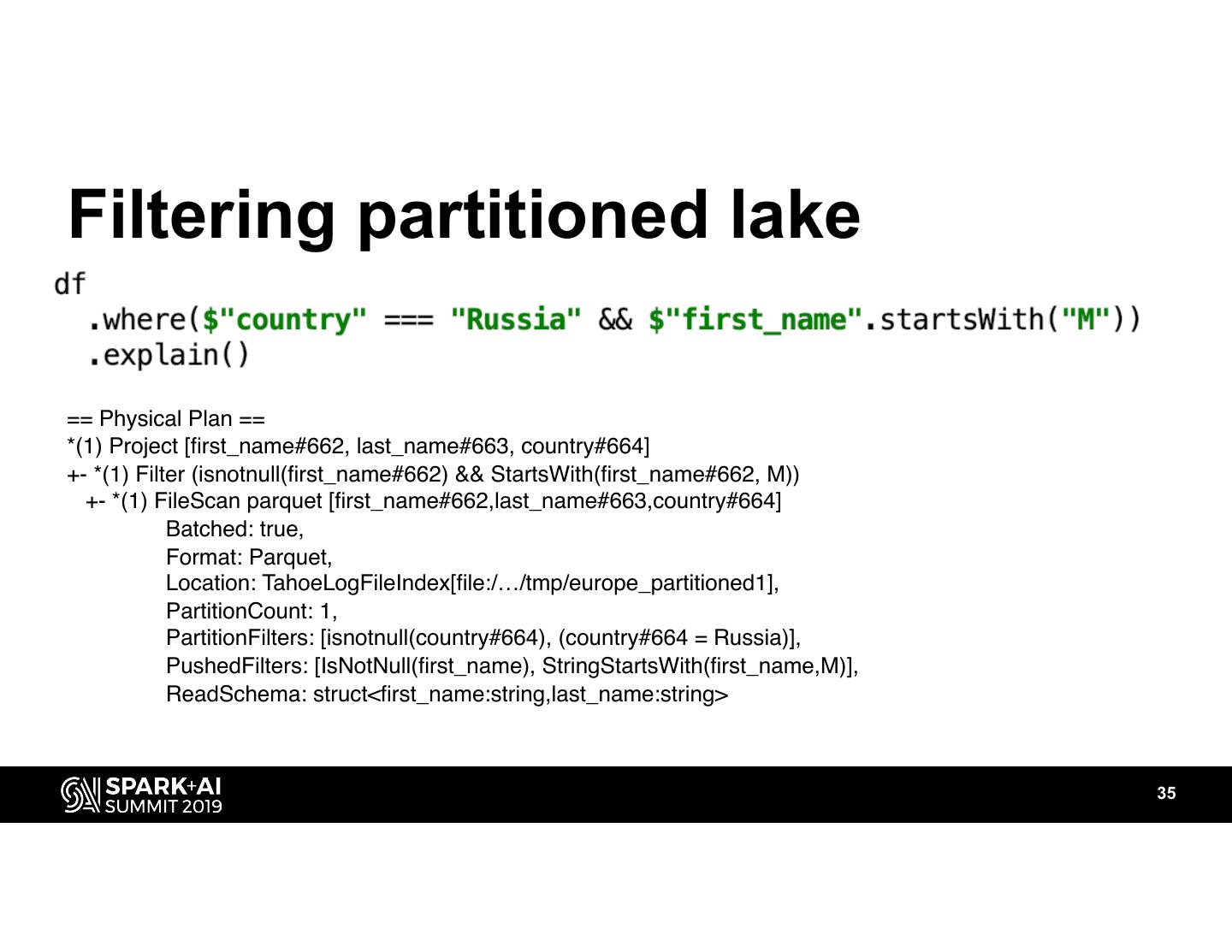

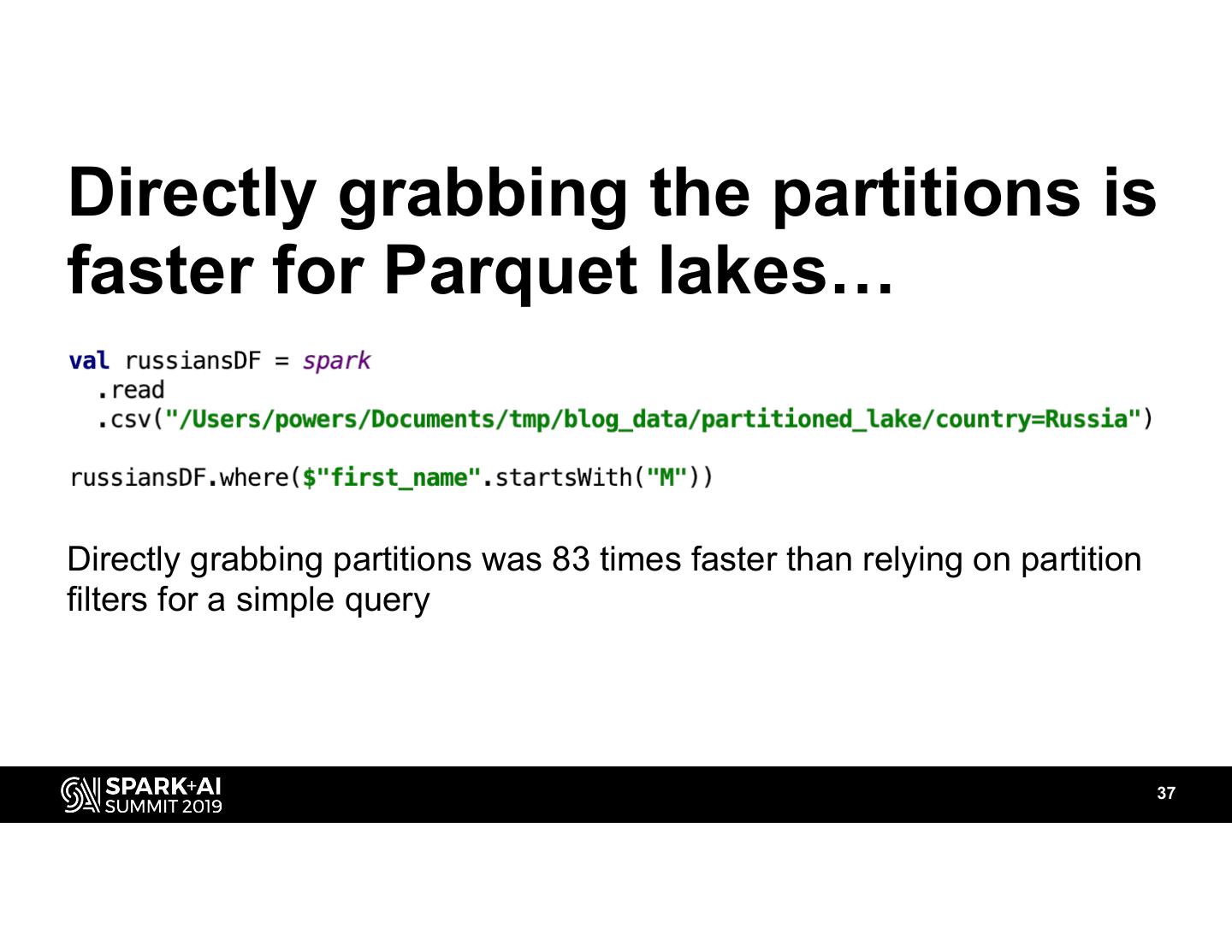

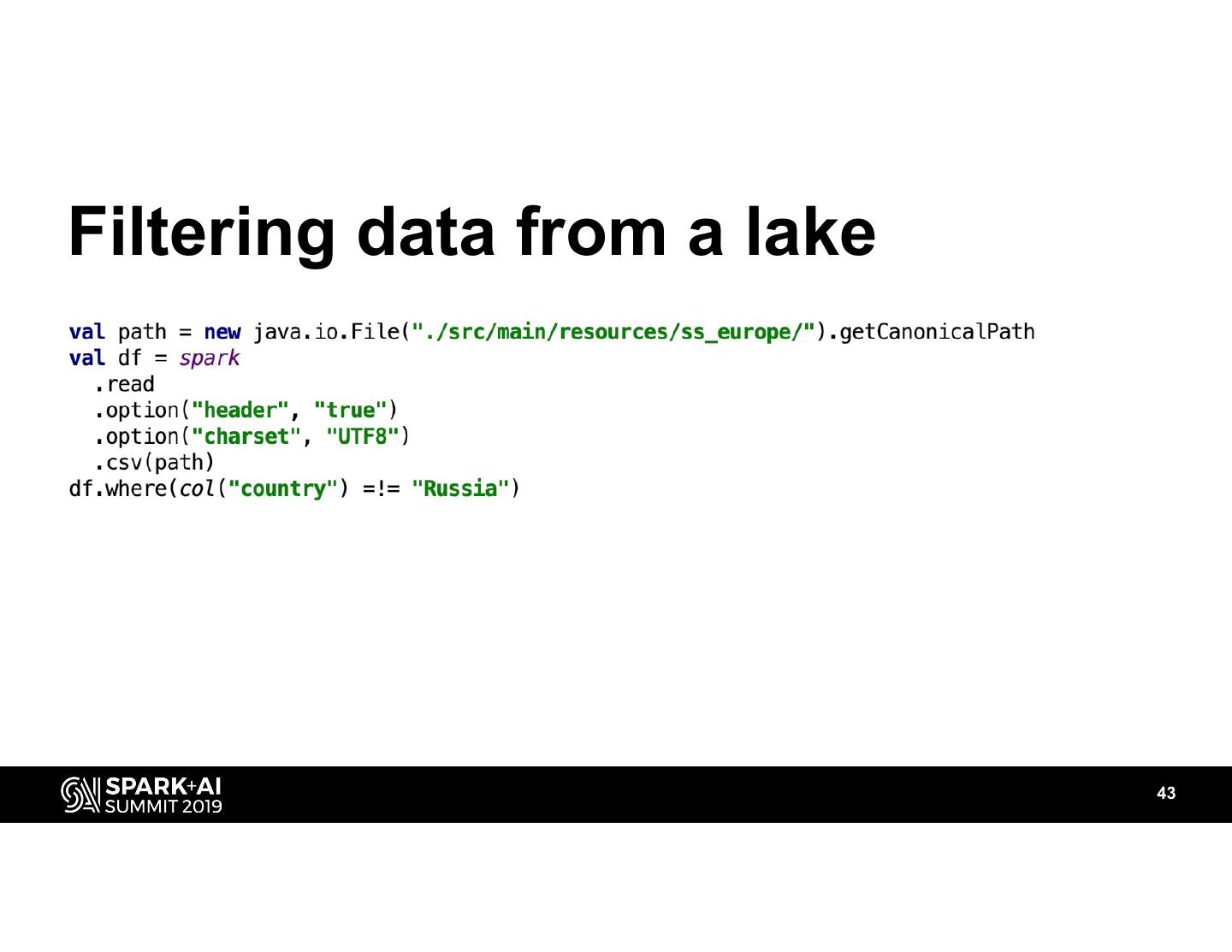

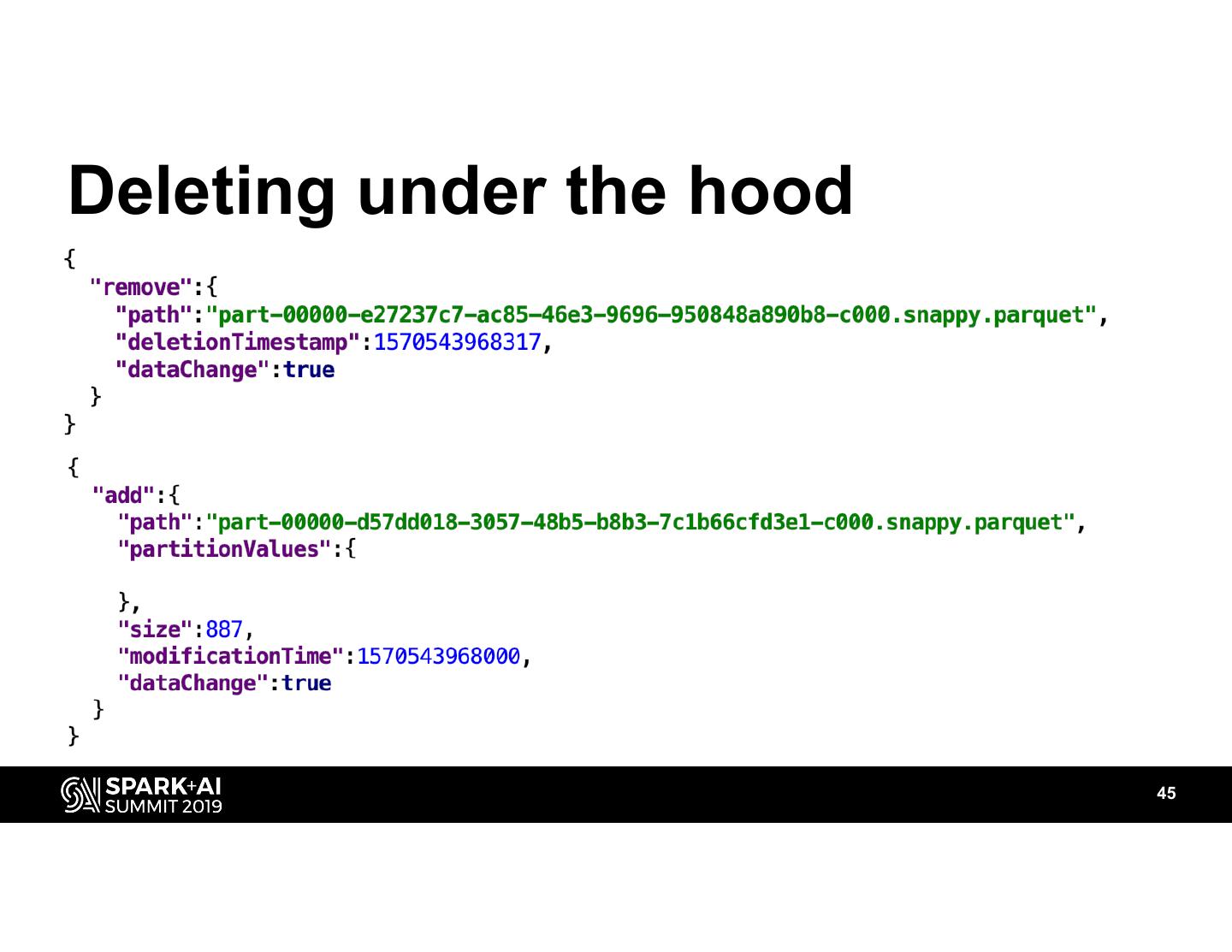

We will discuss why it’s better to avoid PartitionFilters and directly grab partitions when querying partitioned lakes. We will explain why partitioned lakes tend to have a massive small file problem and why it’s hard to compact a partitioned lake. Then we’ll move on to Delta lakes and explain how they offer cool features on top of what’s available in Parquet. We’ll start with Delta 101 best practices and then move on to compacting with the OPTIMIZE command.

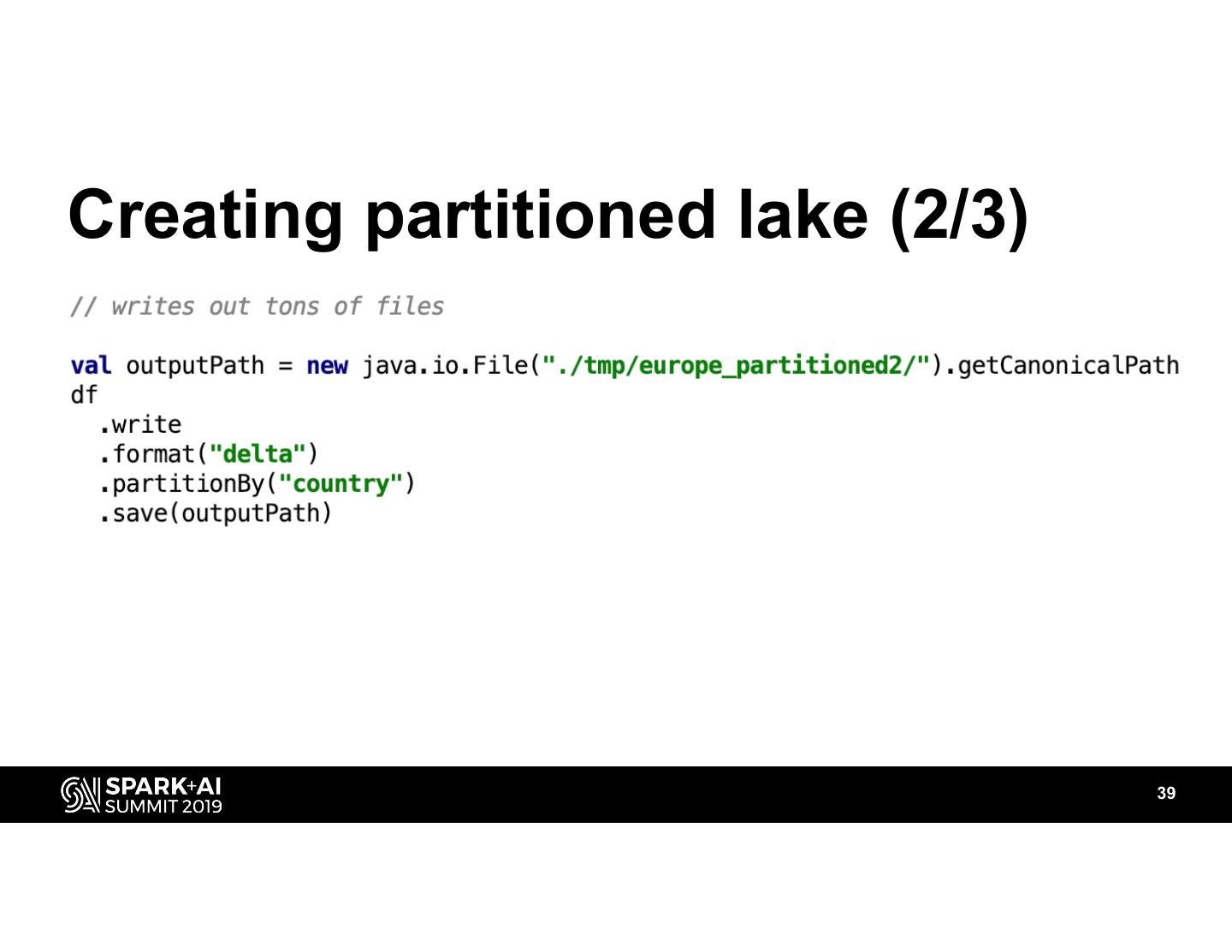

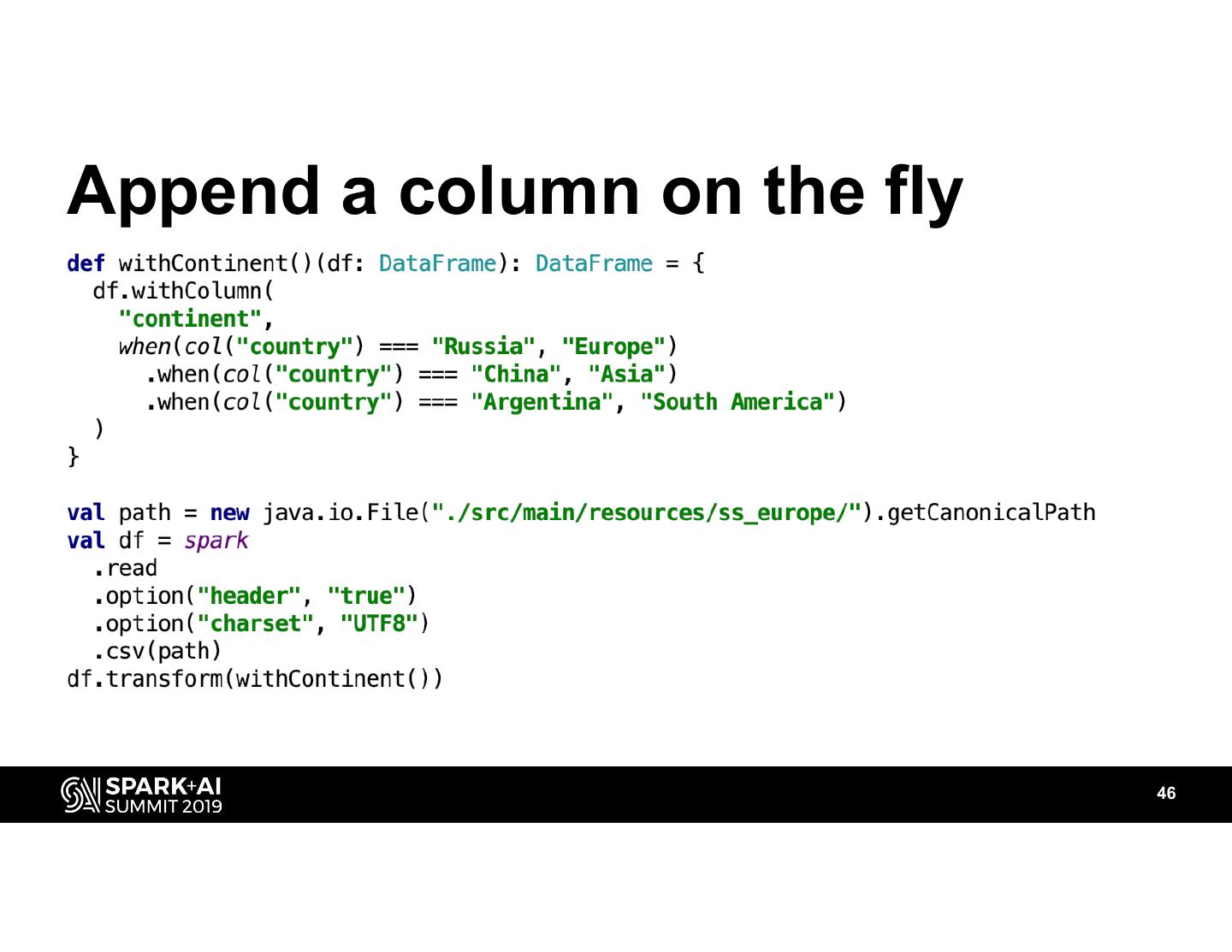

We’ll talk about creating partitioned Delta lake and how OPTIMIZE works on a partitioned lake. Then we’ll talk about ZORDER indexes and how to incrementally update lakes with a ZORDER index. We’ll finish with a discussion on adding a ZORDER index to a partitioned Delta data lake.

展开查看详情

1 .WIFI SSID:Spark+AISummit | Password: UnifiedDataAnalytics

2 .Optimizing Delta / Parquet Data Lakes Matthew Powers, Prognos Health #UnifiedDataAnalytics #SparkAISummit

3 .Agenda • Why Delta? • Delta basics and transaction log • Compacting Delta lake • Vacuuming old files • Partitioning Delta lakes • Deleting rows • Persisting transformations in columns 3

4 .About MungingData • Time travel • Compacting • Vacuuming • Update columns 4

5 .Contact me • GitHub: MrPowers • Email: matthewkevinpowers@gmail.com • Delta Slack channel • Open source hacking 5

6 .What is Delta lake? • Parquet + transaction log • Provides awesome features for free! 6

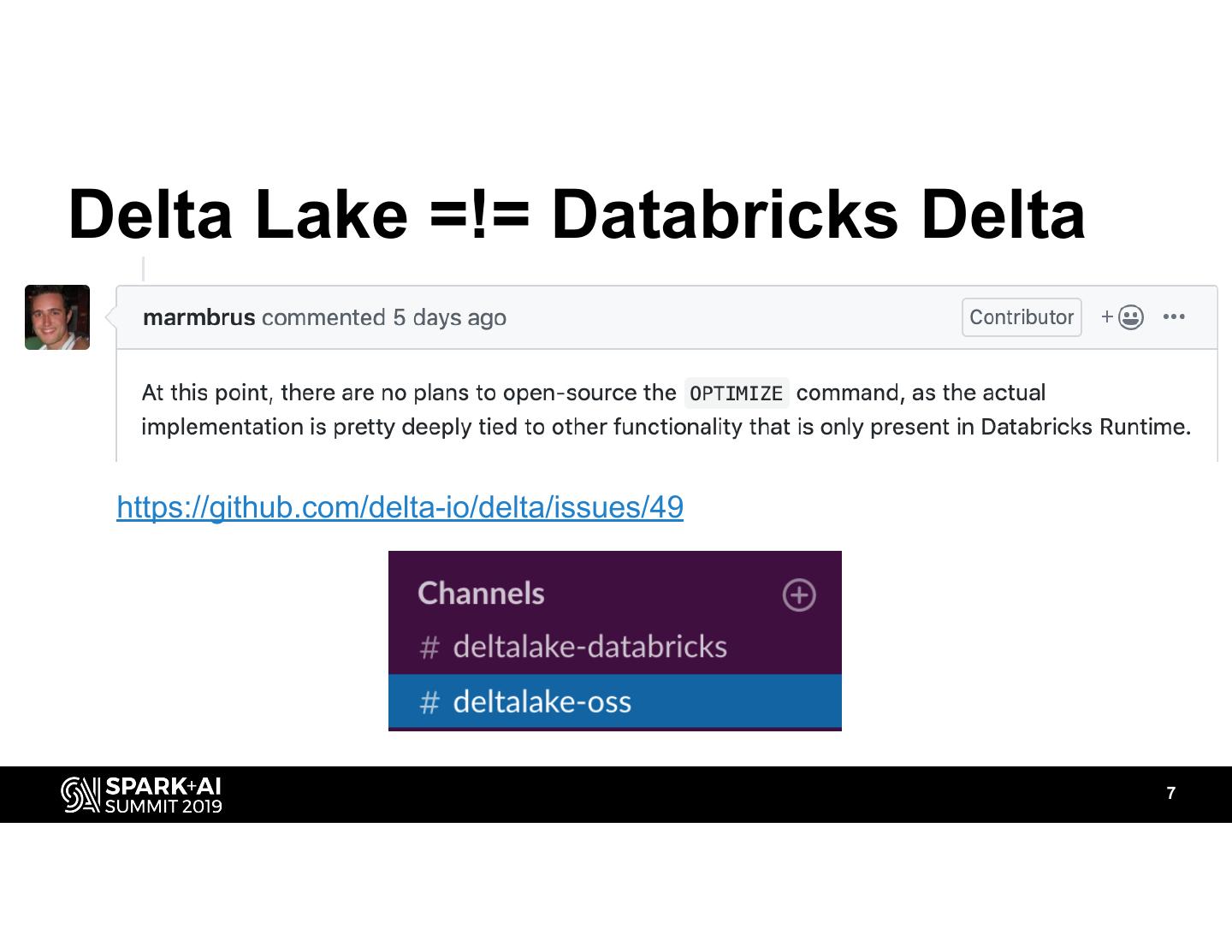

7 .Delta Lake =!= Databricks Delta https://github.com/delta-io/delta/issues/49 7

8 .TL;DR • 1 GB files • No nested directories #UnifiedDataAnalytics #SparkAISummit 8

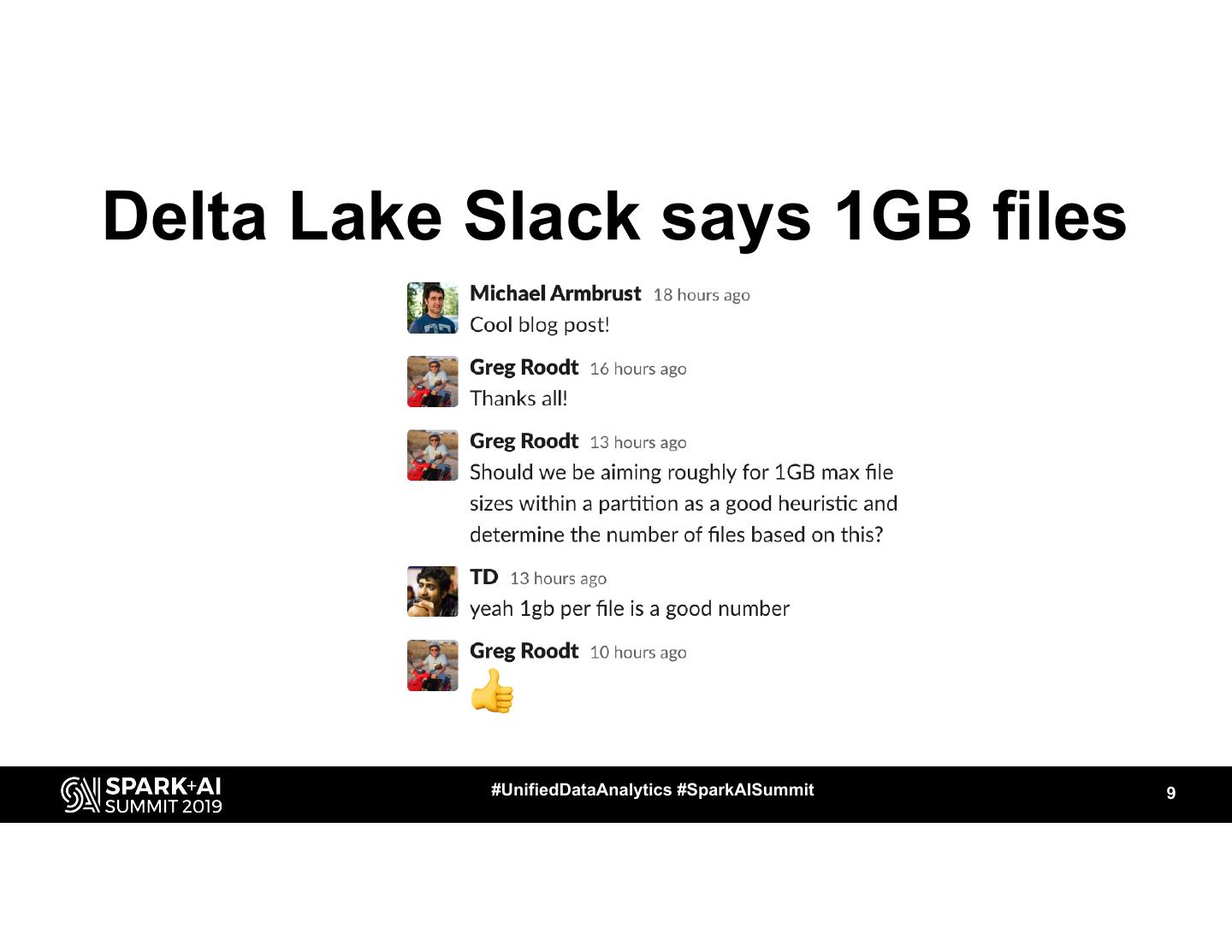

9 .Delta Lake Slack says 1GB files #UnifiedDataAnalytics #SparkAISummit 9

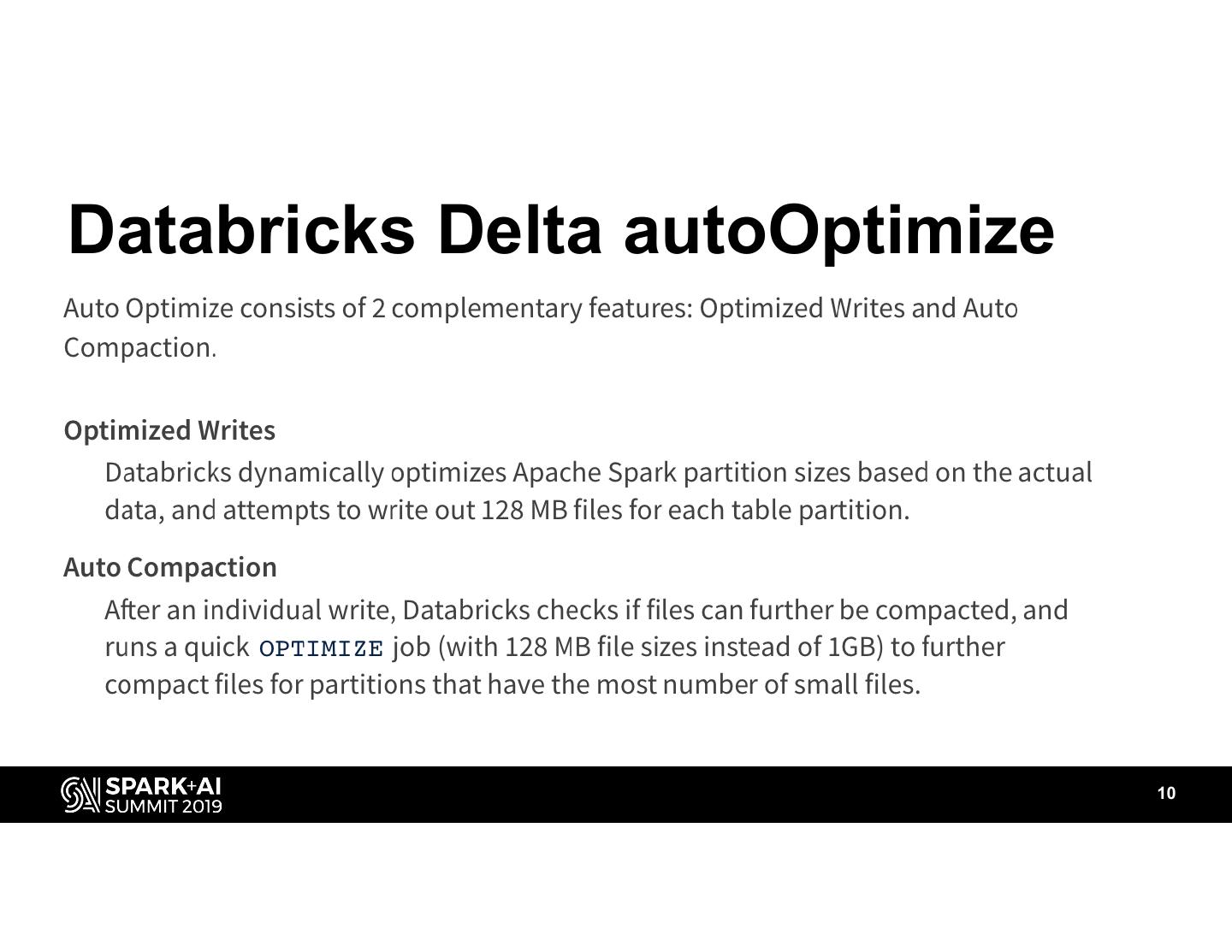

10 .Databricks Delta autoOptimize 10

11 .Why does compaction speed up lakes? • Parquet: files need to be listed before they are read. Listing is expensive in object stores. • Delta: Data is read via the transaction log. • Easier for Spark to read partitioned lakes into memory partitions. 11

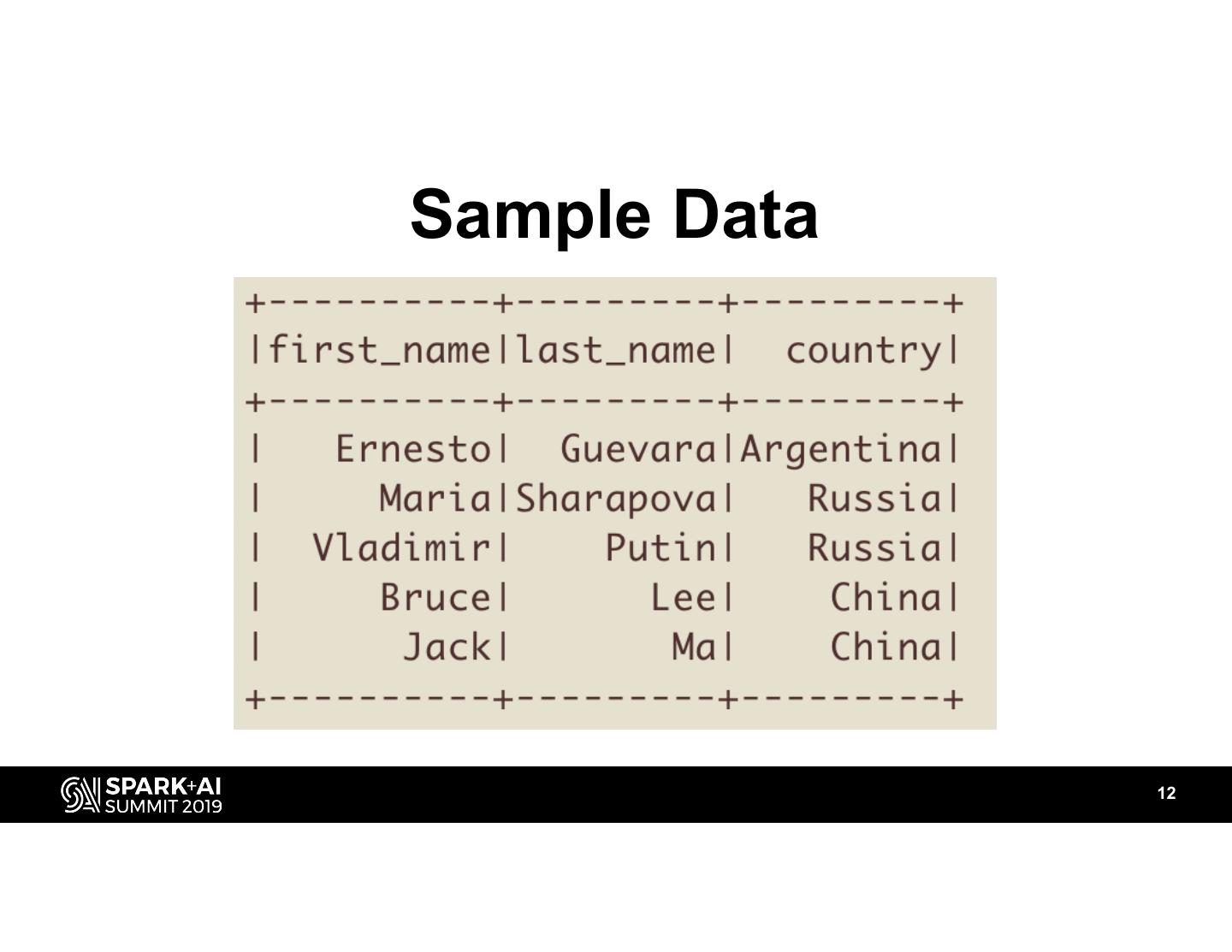

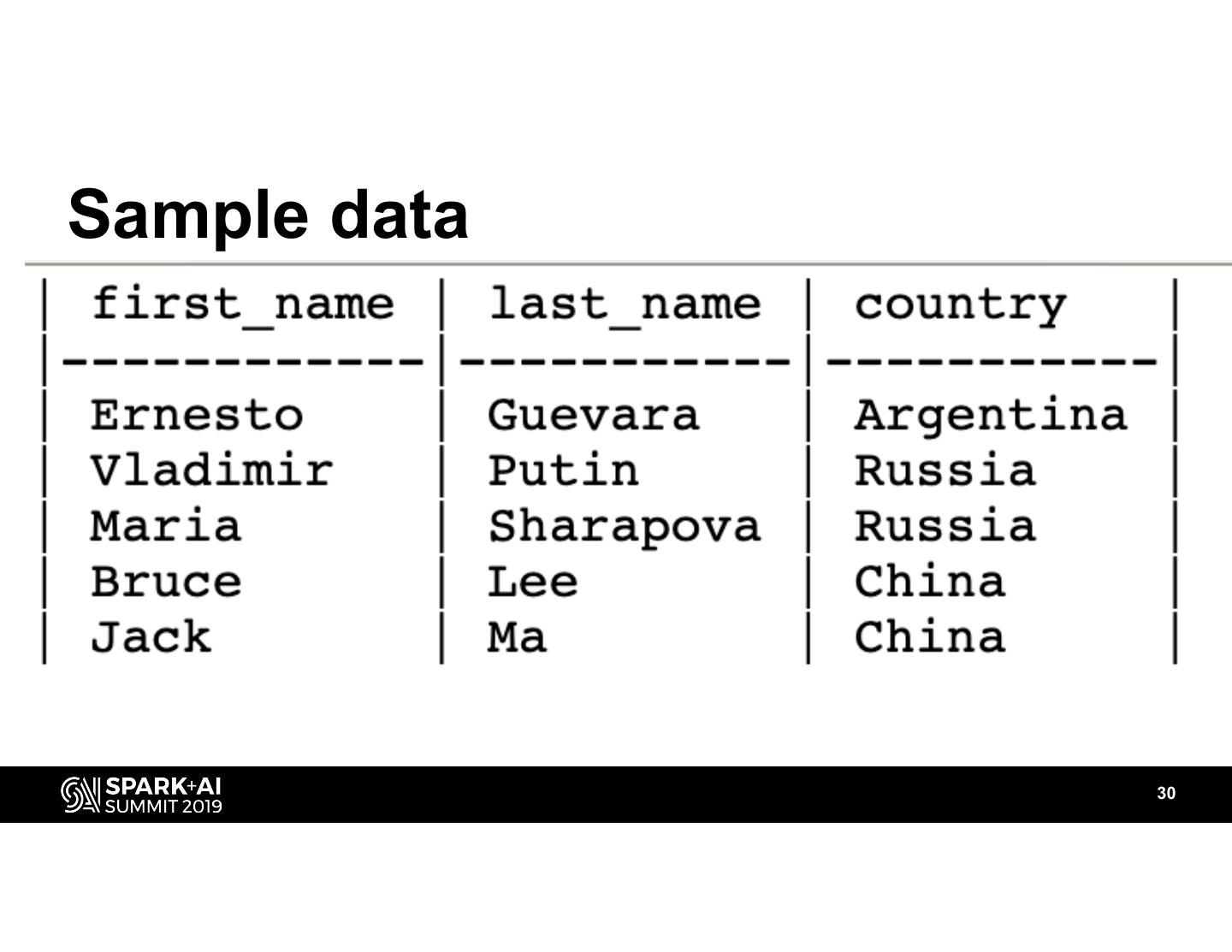

12 .Sample Data 12

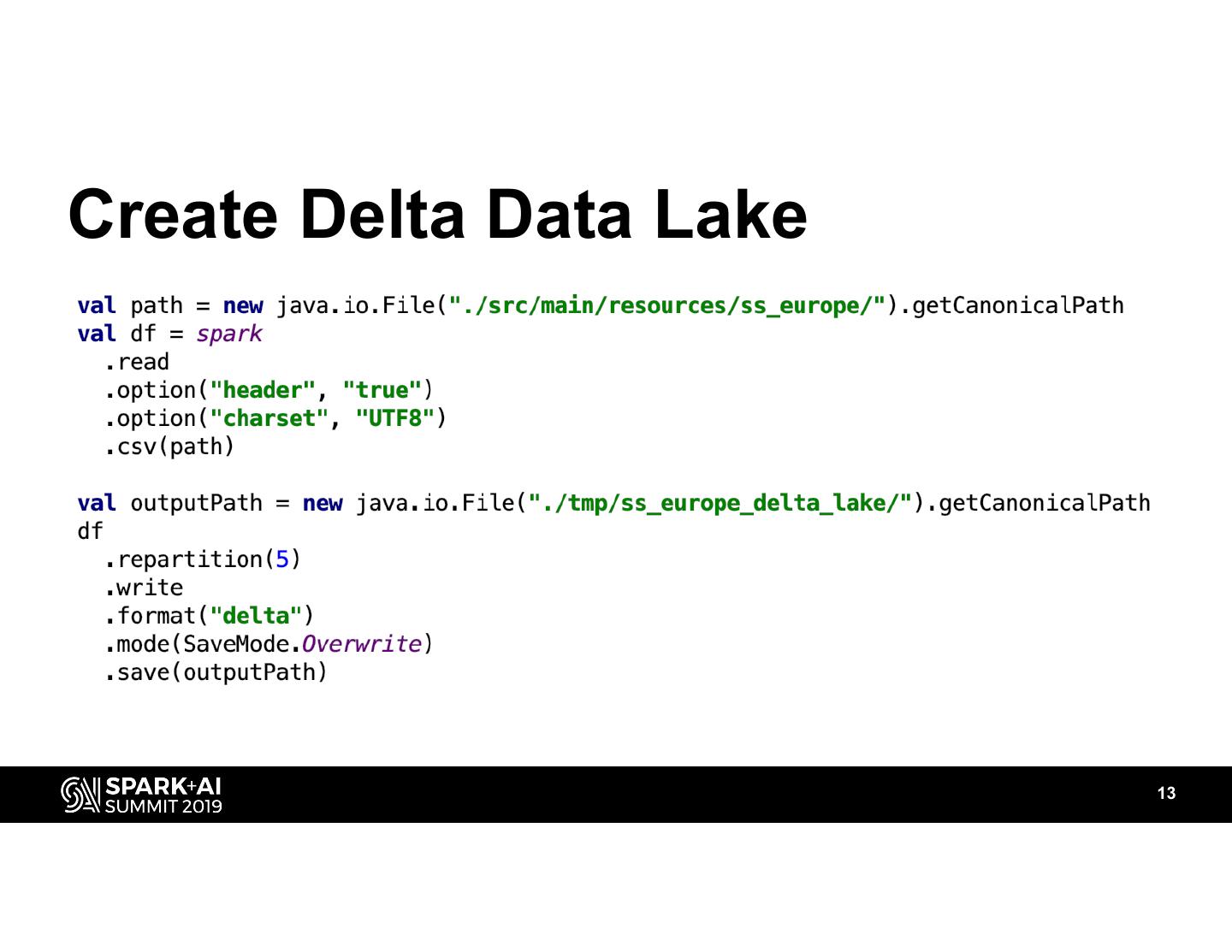

13 .Create Delta Data Lake 13

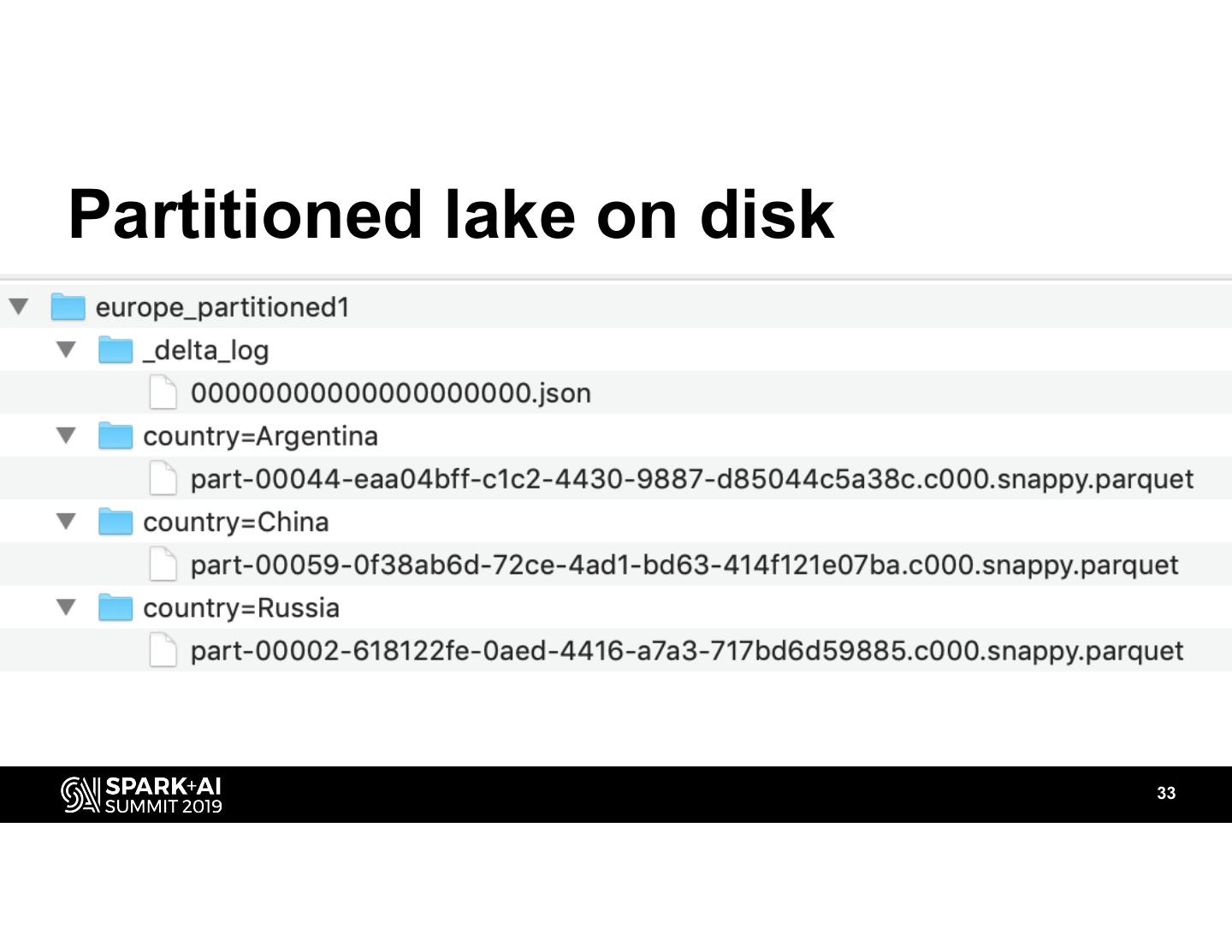

14 .Delta Lake on Disk 14

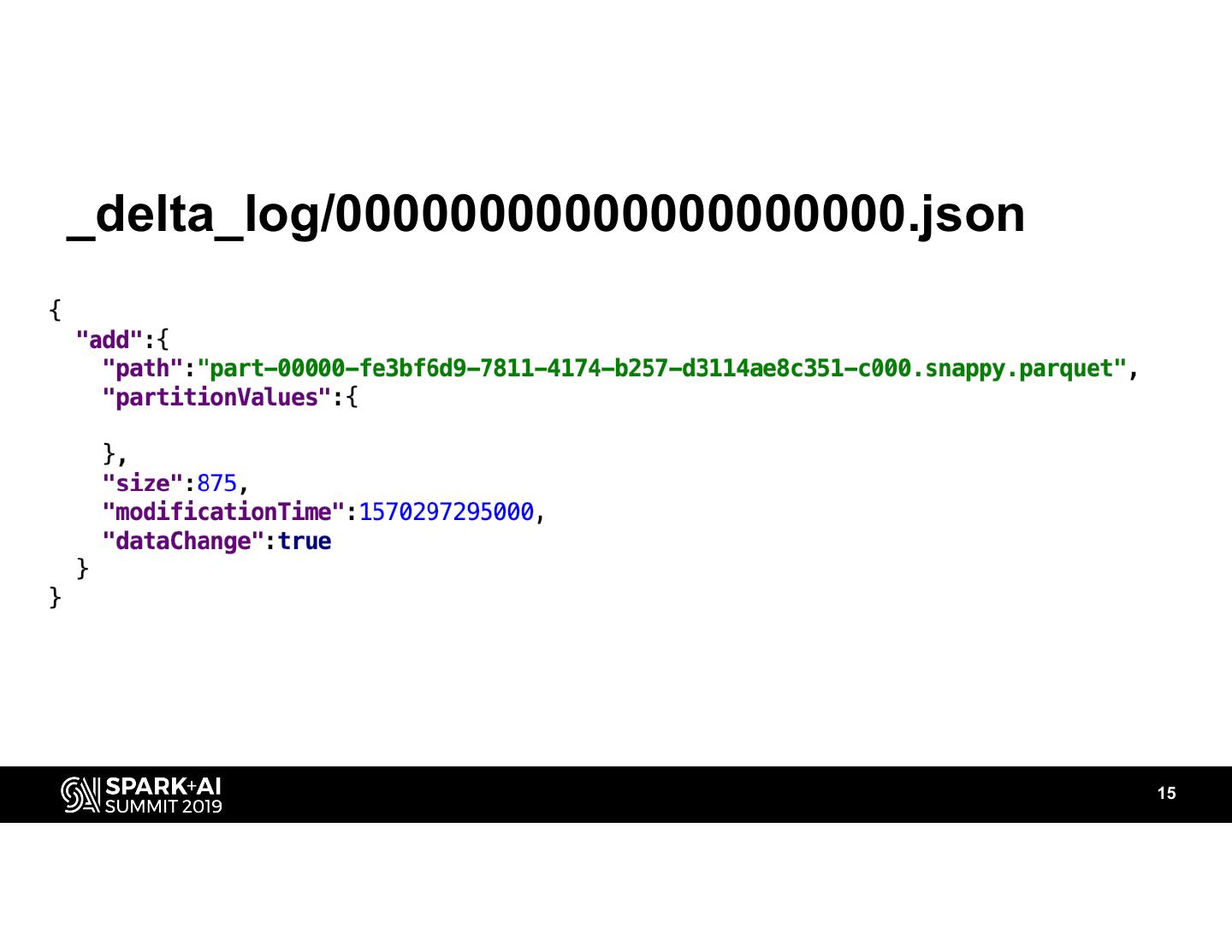

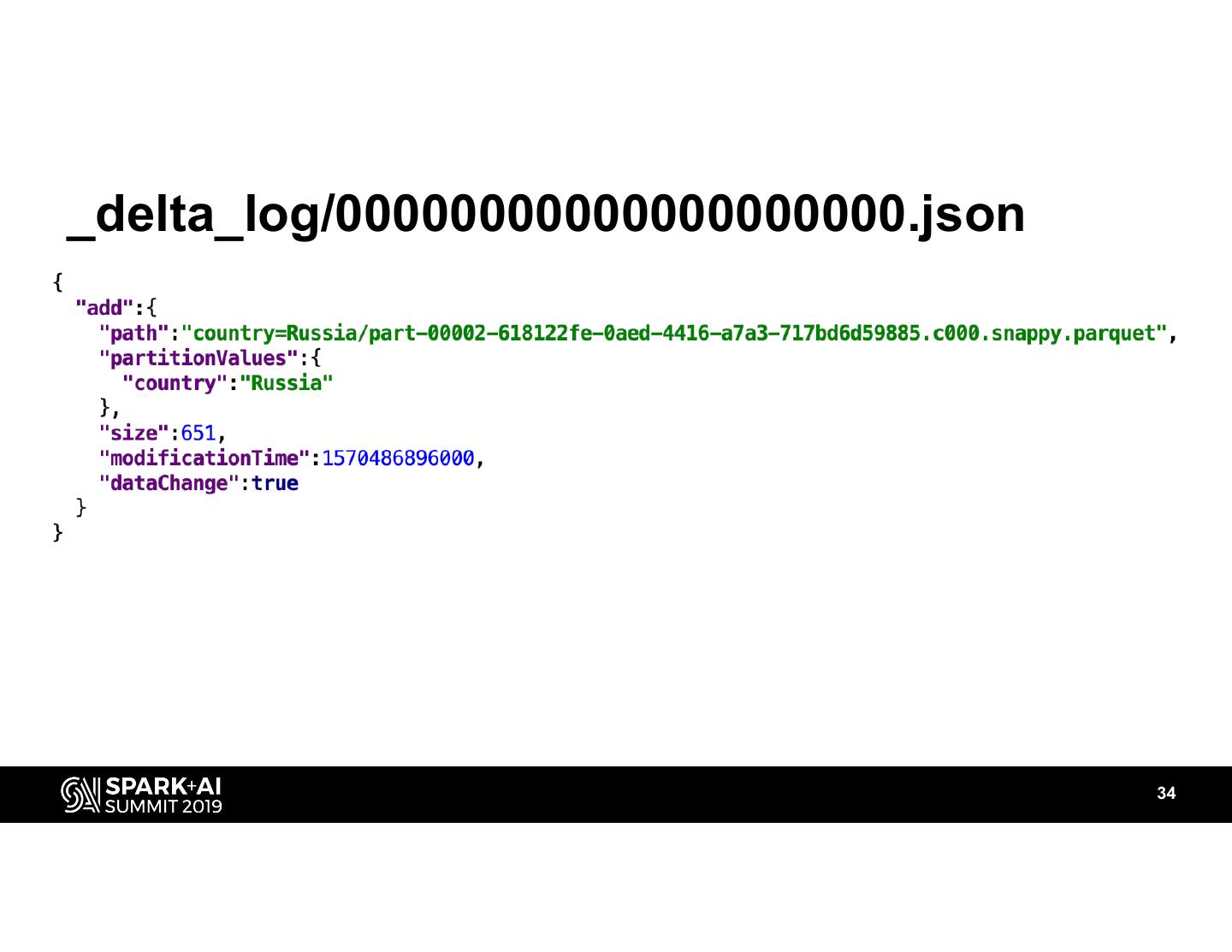

15 ._delta_log/00000000000000000000.json 15

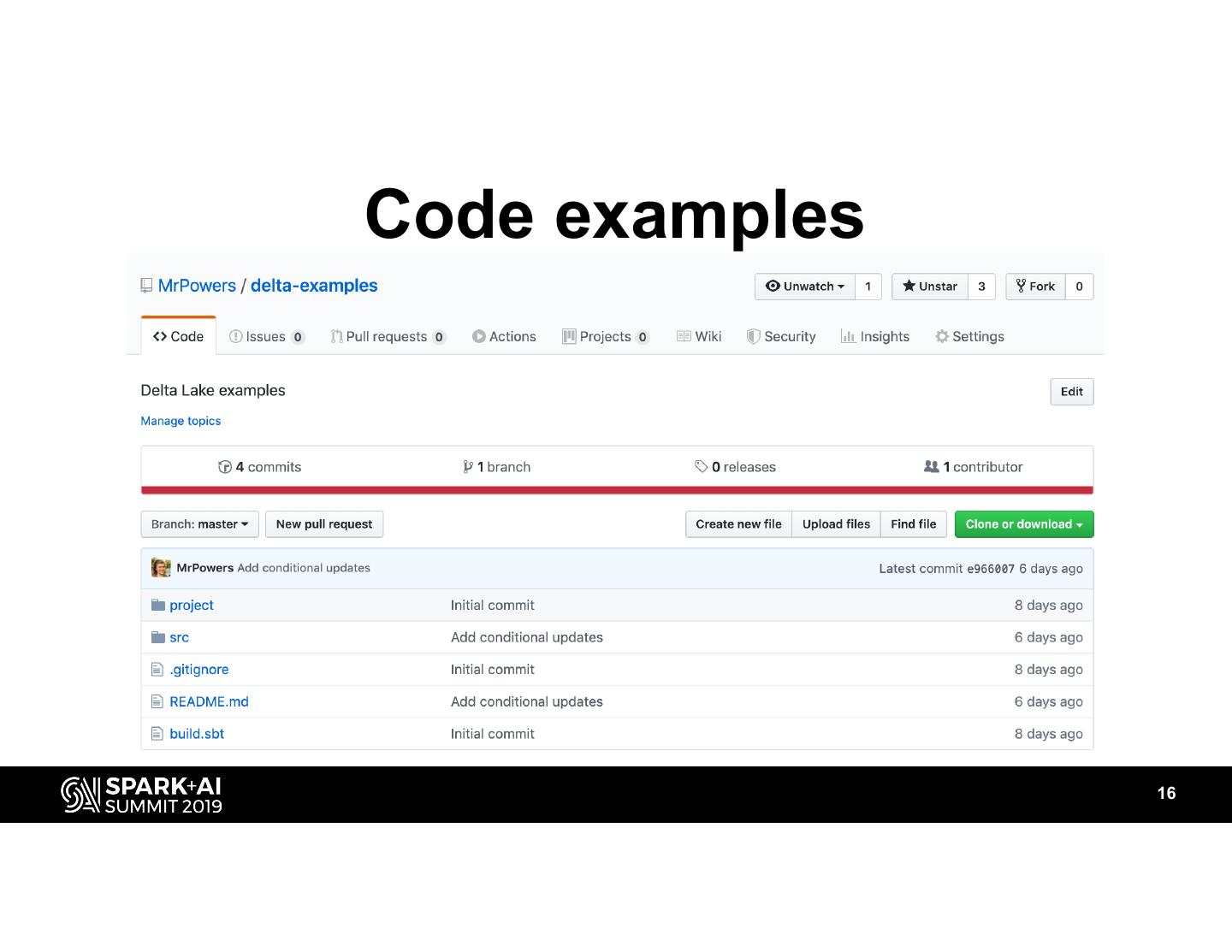

16 .Code examples 16

17 .Compact Delta Data Lake 17

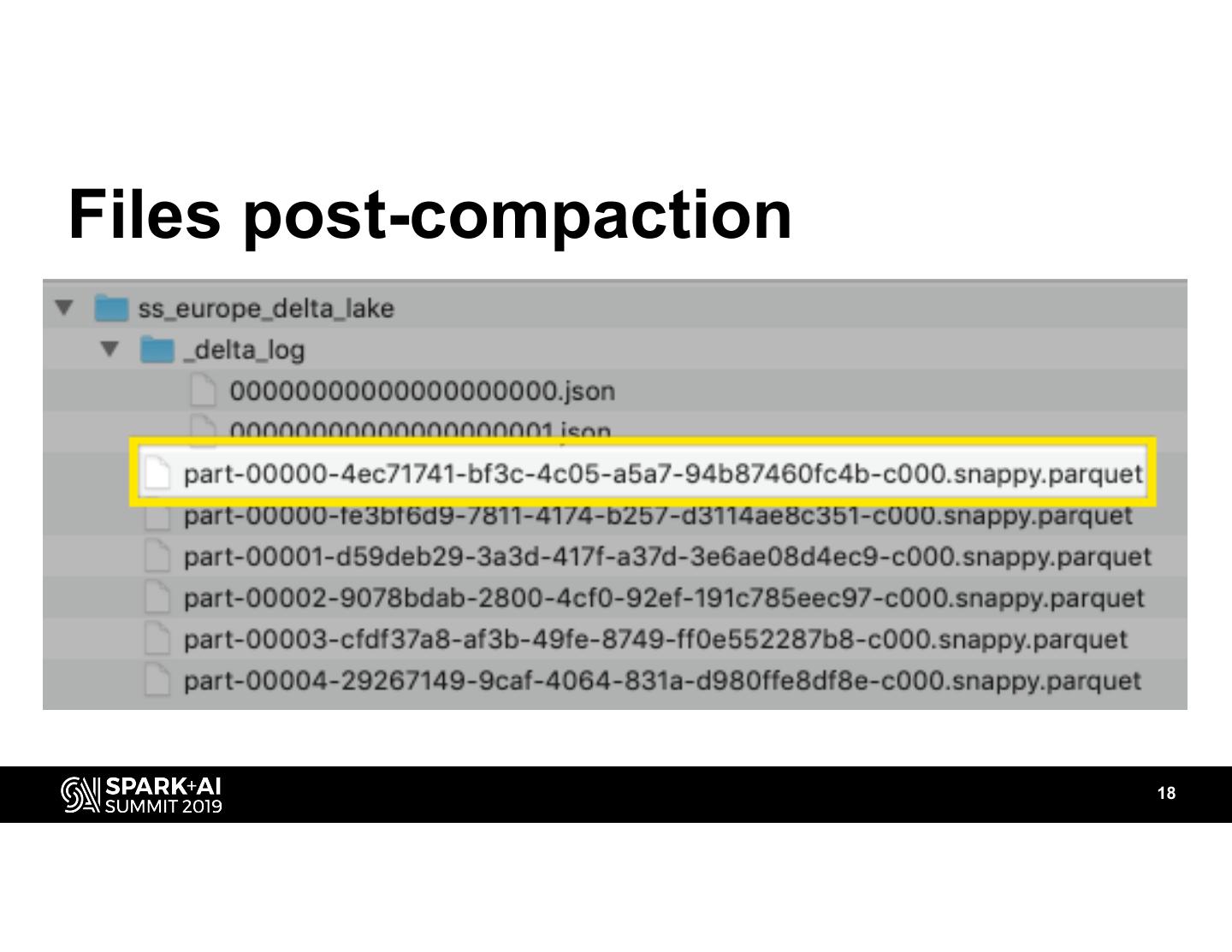

18 .Files post-compaction 18

19 ._delta_log/00000000000000000001.json 19

20 .Compacting Delta lakes without breaking downstream apps https://github.com/delta-io/delta/issues/146 20

21 .21

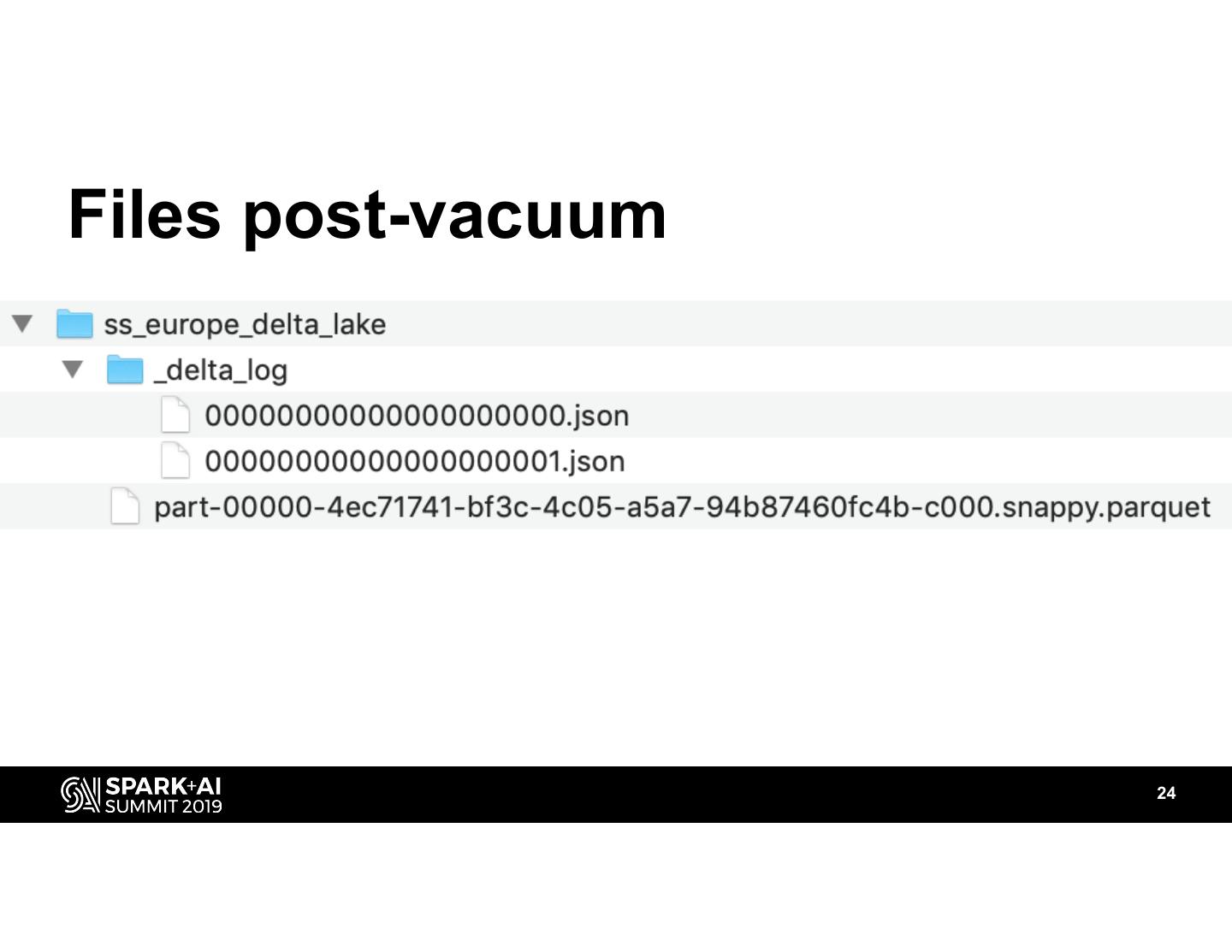

22 .Delta Lake Vacuum • Files marked for removal older than the retention period • Default retention period is 7 days • Not going to improve performance 22

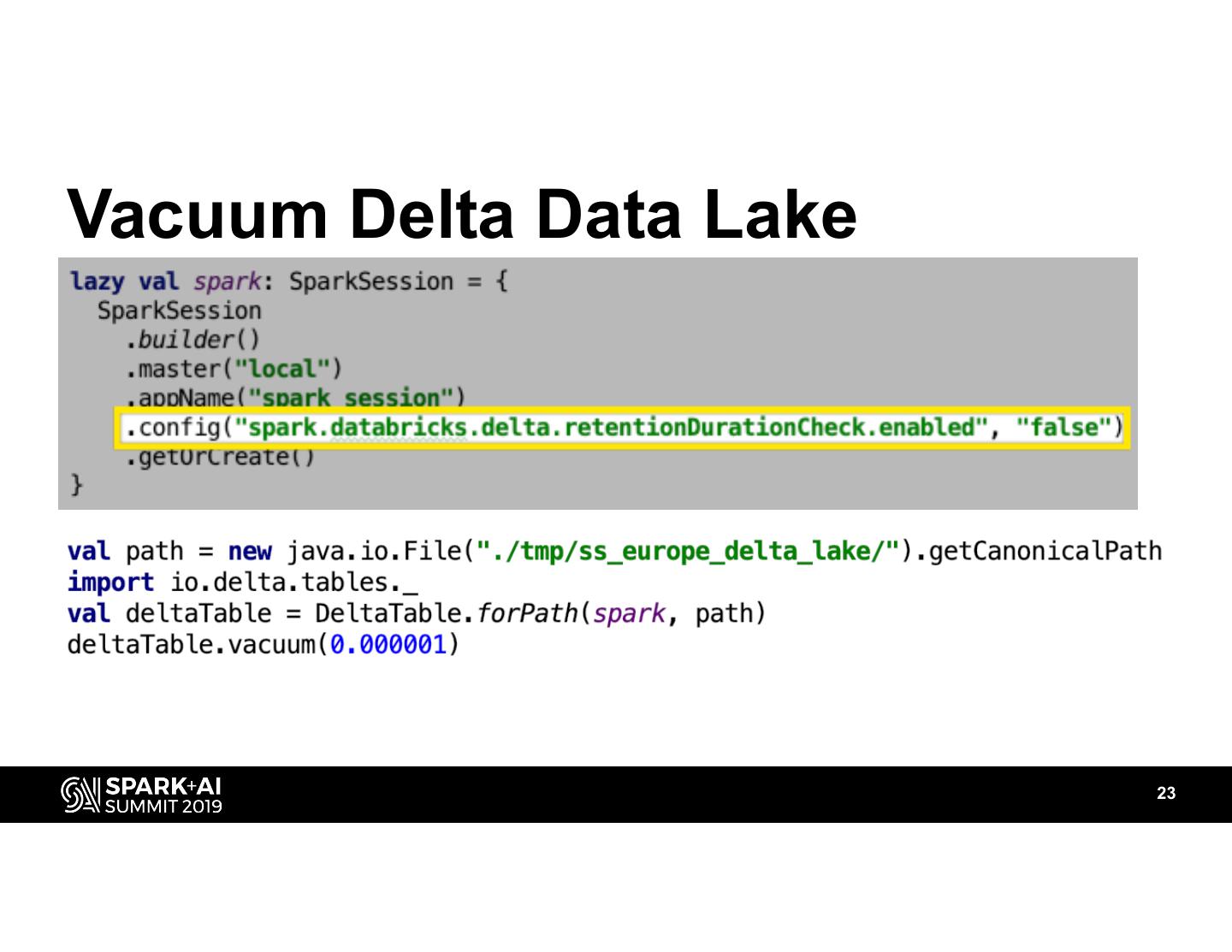

23 .Vacuum Delta Data Lake 23

24 .Files post-vacuum 24

25 .Optimal number of partitions (delta) 25

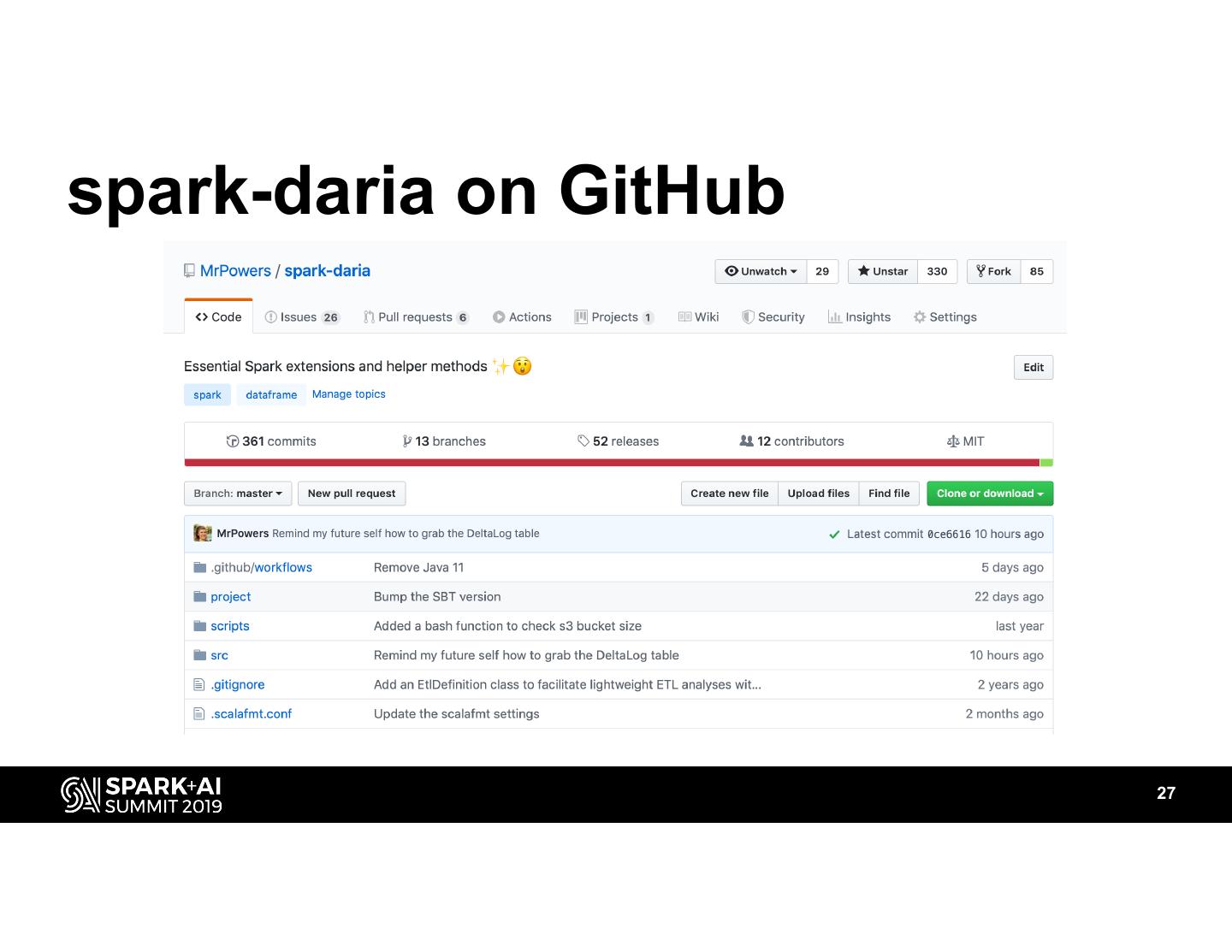

26 .spark-daria helps! 26

27 .spark-daria on GitHub 27

28 .Optimal number of partitions (parquet) https://github.com/MrPowers/spark-daria/blob/master/src/main/scala/com/github/ mrpowers/spark/daria/utils/DirHelpers.scala 28

29 .Why partition data lakes? • Data skipping • Massively improve query performance • I’ve seen queries run 50-100 times faster on partitioned lakes 29