
Deep Anomaly Detection from Research to Production Leveraging Spark and TensorFlow

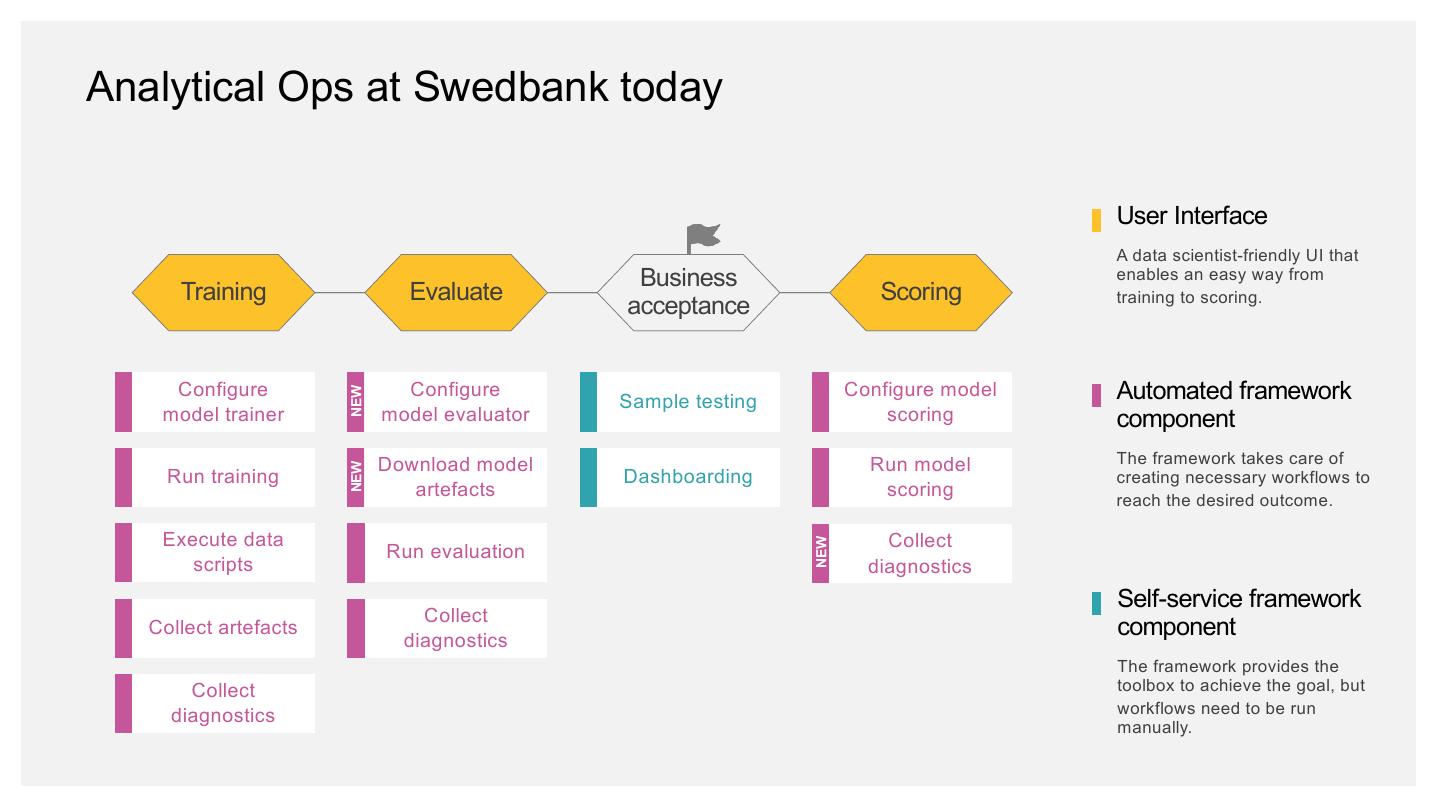

Anomaly detection has numerous applications in a wide variety of fields. In banking, as data grows ever more heterogeneous and complex, discovering deviating cases with conventional techniques and scenario definitions is becoming increasingly difficult. In our talk, we'll outline how Swedbank constructs and leverages scalable pipelines based on Spark and TensorFlow, in combination with an in-house, tailor-made platform, to develop, deploy and monitor deep anomaly detection models. In summary, this talk presents Swedbank's approach to building, unifying and scaling an end-to-end solution using large amounts of heterogeneous, imbalanced data. The talk covers the following topics: feature engineering with transactions2vec; anomaly detection and its applications in banking; deep anomaly detection methods (Deep SVDD and generative adversarial networks), with a model overview and code snippets in the TensorFlow Estimator API; and model deployment, an overview of how the puzzle pieces outlined above are put together and operationalized to create an end-to-end deployment.


1 .Deep Anomaly Detection: From Research to Production Leveraging Spark and TensorFlow
Davit Bzhalava, Shaheer Mansoor, Erik Ekerot
What's in this talk: we present our work on anomaly detection, describe the overall results and how Swedbank goes from research to production, and share what we think is important when building AI infrastructure.

2 .Introduction

3 .The leading bank in our home markets

| | Sweden | Estonia | Latvia | Lithuania |
|---|---|---|---|---|
| Population | 10.2 million | 1.3 million | 2.0 million | 2.9 million |
| Private customers | 4.0 million | 0.9 million | 0.9 million | 1.5 million |
| Corporate customers | 335 000 | 141 000 | 91 000 | 86 000 |
| Branches | 248 | 35 | 41 | 65 |
| Employees | 8 600 | 2 600 | 1 800 | 2 500 |

4 .Swedbank HQ, Stockholm

5 .Analytics & AI
- Established in 2016, consolidating disparate analytics functions across the organization.
- Today 30 colleagues.
- A mix of data scientists, business analysts, developers, engineers and project managers.
- A center of excellence, promoting a data-driven mindset in the bank.

- R&D (long term): data exploration, advanced analytics discovery and AI research.
- Business development (medium term): effective support and execution of prioritized value streams and strategic business projects.
- Delivery & Service (short term): deliver on-demand analytics and AI applications addressing immediate business requirements.

6 .Why this now?
[Figure: share of respondents with high or quite high trust in institutions, 2010 to 2019 (%): the police, universities, healthcare, the national bank, the royal house, radio & TV, the church, the parliament, unions, government, the press, large companies, the EU commission, banks, political parties. Source: Kantar SIFO, Medieakademin, Trust Barometer.]

7 .Our approach to data science

8 .A multi-lingual team: machine learning engineers, data scientists, data analysts and statisticians, working in Java, Python, R, Scala and SQL.

9 .A platform- and framework-agnostic approach: a lifecycle of collecting data, analysing, feature engineering, modelling, deploying, monitoring and acting.

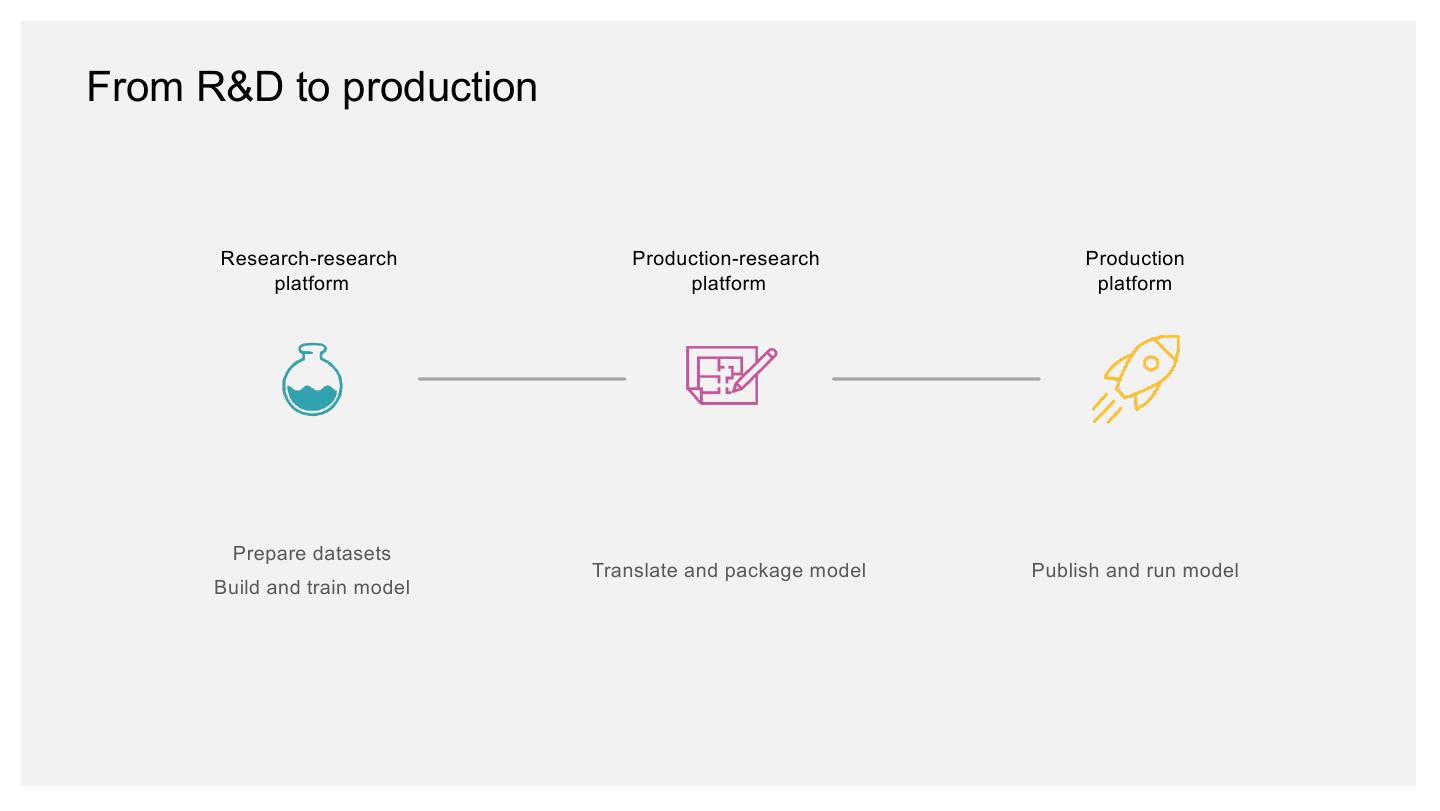

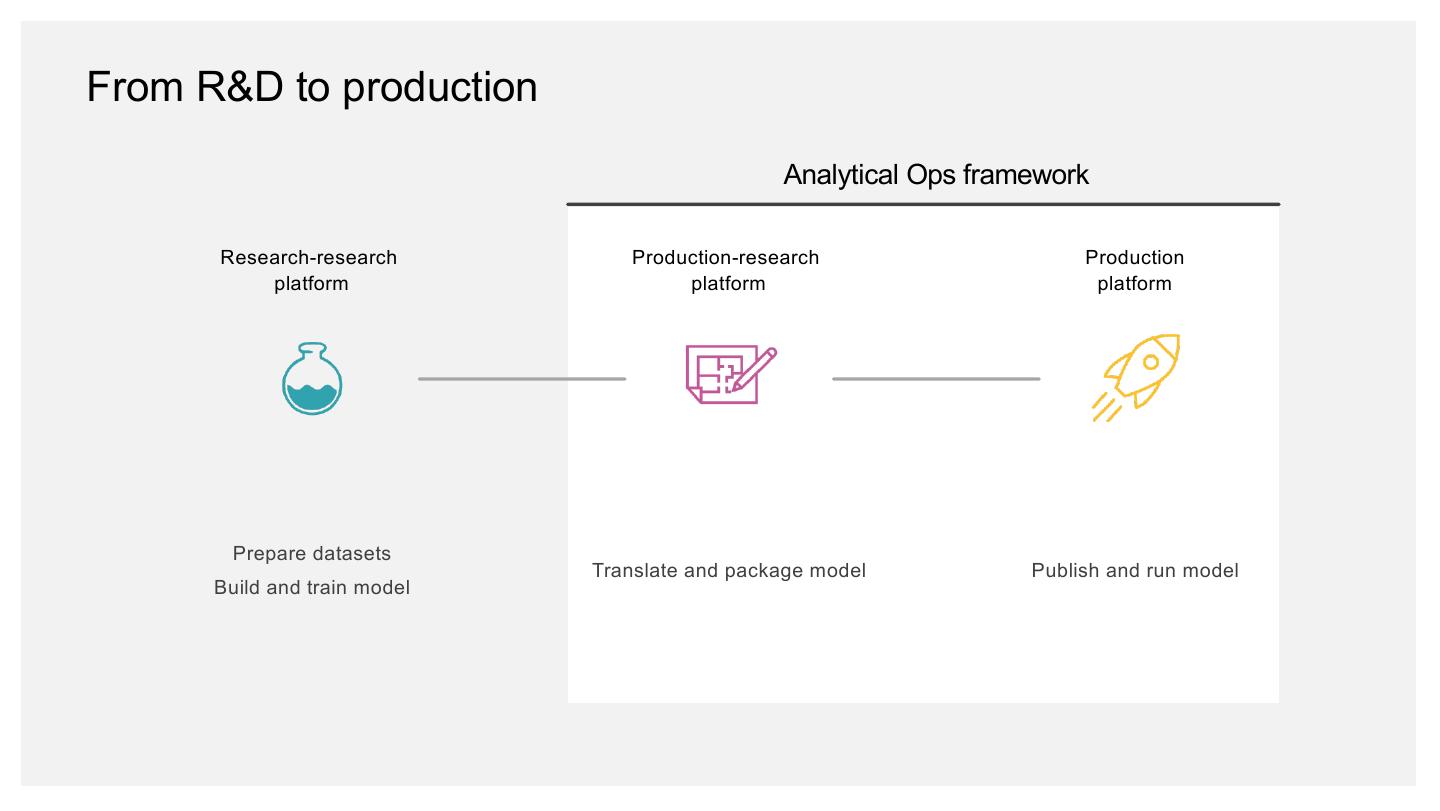

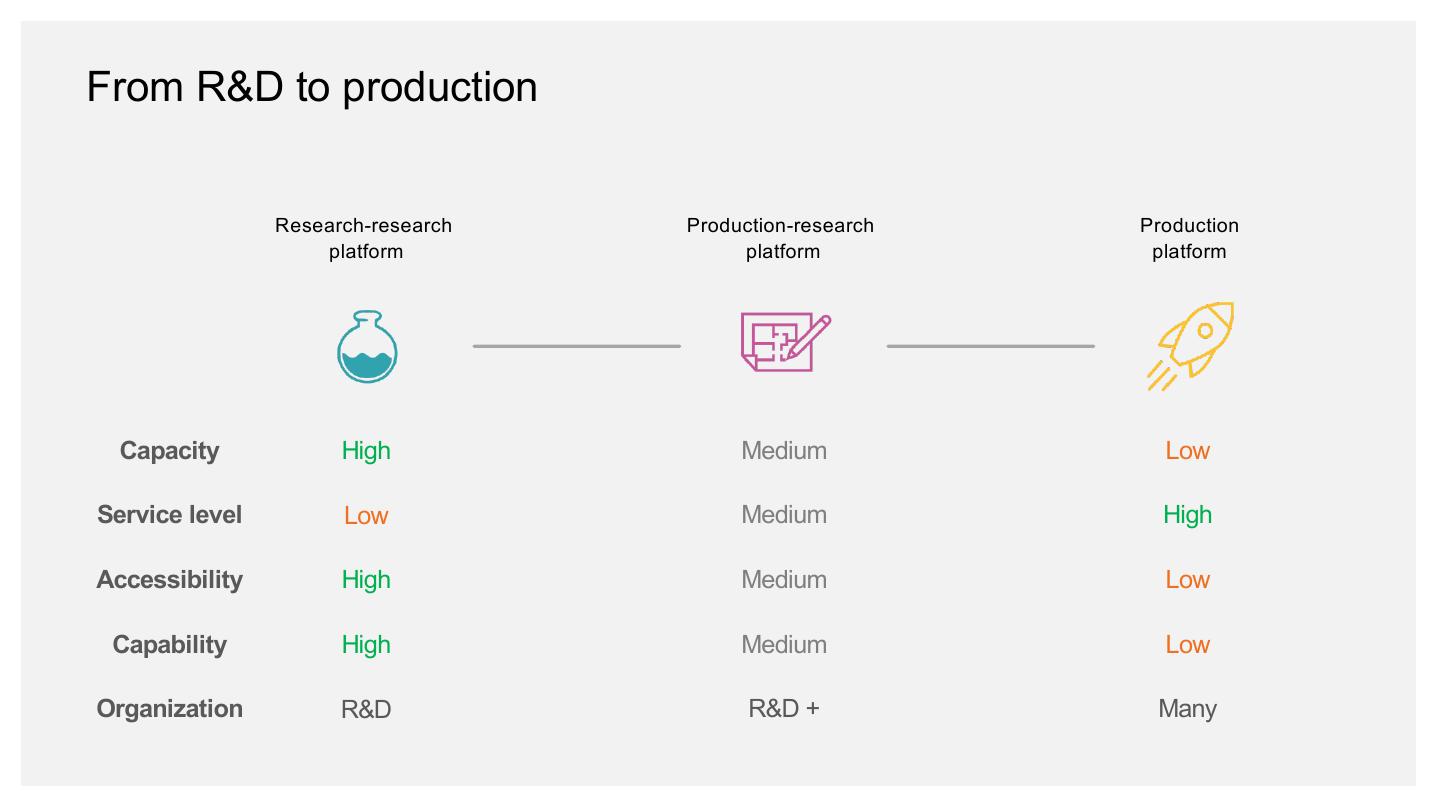

10 .From R&D to production
- Research-research platform: prepare datasets; build and train model.
- Production-research platform: translate and package model.
- Production platform: publish and run model.
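Packaging a model for the production platform usually means exporting it into a versioned layout. TensorFlow Serving (used for deployment later in the talk), for example, watches a model base path, treats numerically named subdirectories as model versions and by default serves the highest one. A minimal sketch of choosing the directory for the next published version, assuming a hypothetical base path; the helper names `list_versions` and `next_version_dir` are illustrative, not part of any library:

```python
import os
import tempfile
from typing import List


def list_versions(model_base_path: str) -> List[int]:
    """Numeric version subdirectories under the base path, sorted ascending."""
    return sorted(
        int(name)
        for name in os.listdir(model_base_path)
        if name.isdecimal() and os.path.isdir(os.path.join(model_base_path, name))
    )


def next_version_dir(model_base_path: str) -> str:
    """Directory for publishing a new model version: latest version + 1."""
    versions = list_versions(model_base_path)
    next_version = versions[-1] + 1 if versions else 1
    return os.path.join(model_base_path, str(next_version))


if __name__ == "__main__":
    # Hypothetical base path standing in for the serving platform's model directory.
    base = tempfile.mkdtemp()
    os.makedirs(os.path.join(base, "1"))
    os.makedirs(os.path.join(base, "2"))
    print(list_versions(base))                       # [1, 2]
    print(os.path.basename(next_version_dir(base)))  # 3
```

The exported SavedModel (for example via `tf.saved_model.save`) would then be written to the returned directory, and a server watching the base path would pick it up as the newest version.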


12 .Anomaly detection

13 .

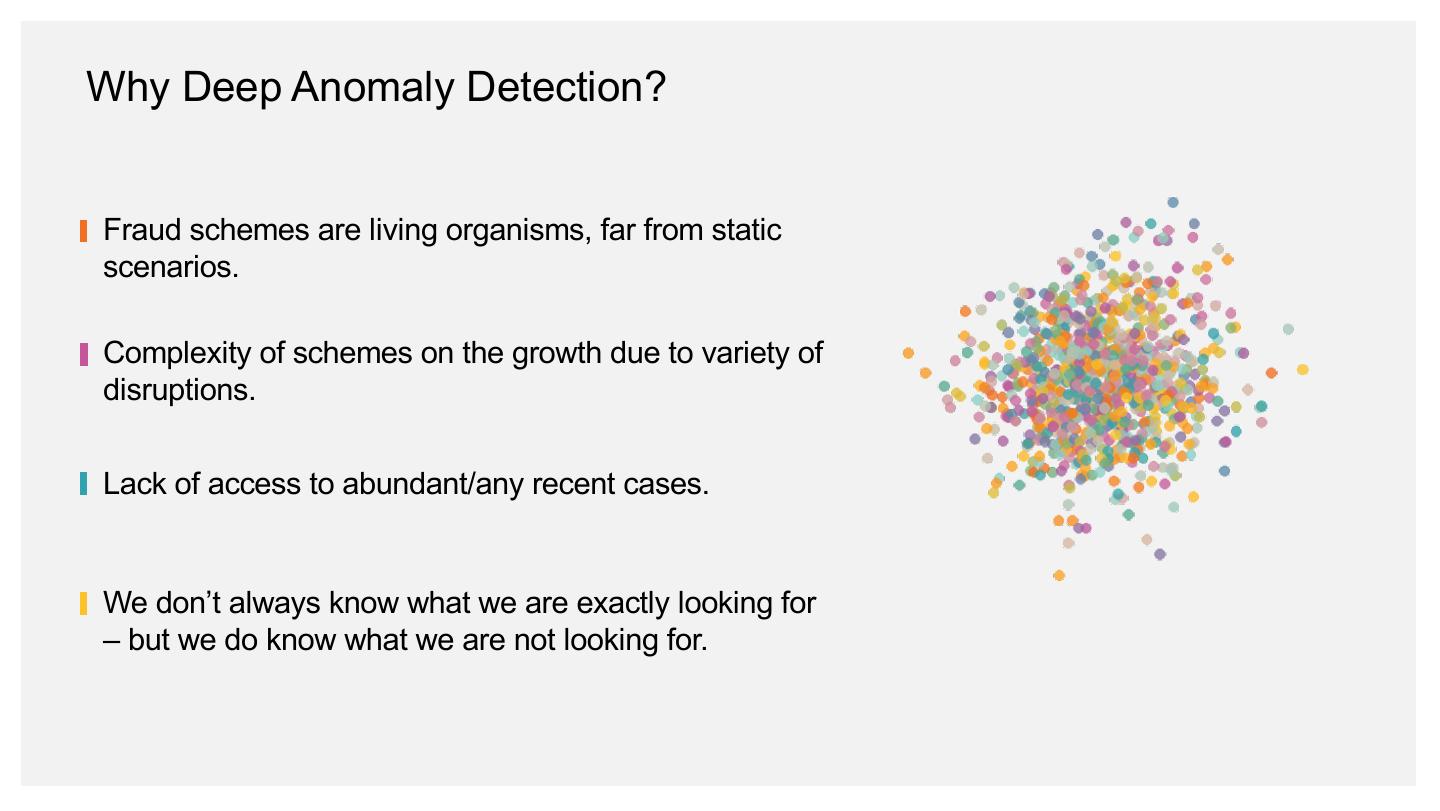

14 .Why Deep Anomaly Detection?
- Fraud schemes are living organisms, far from static scenarios.
- The complexity of schemes is growing due to a variety of disruptions.
- We lack access to abundant (or any) recent cases.
- We don't always know exactly what we are looking for, but we do know what we are not looking for.

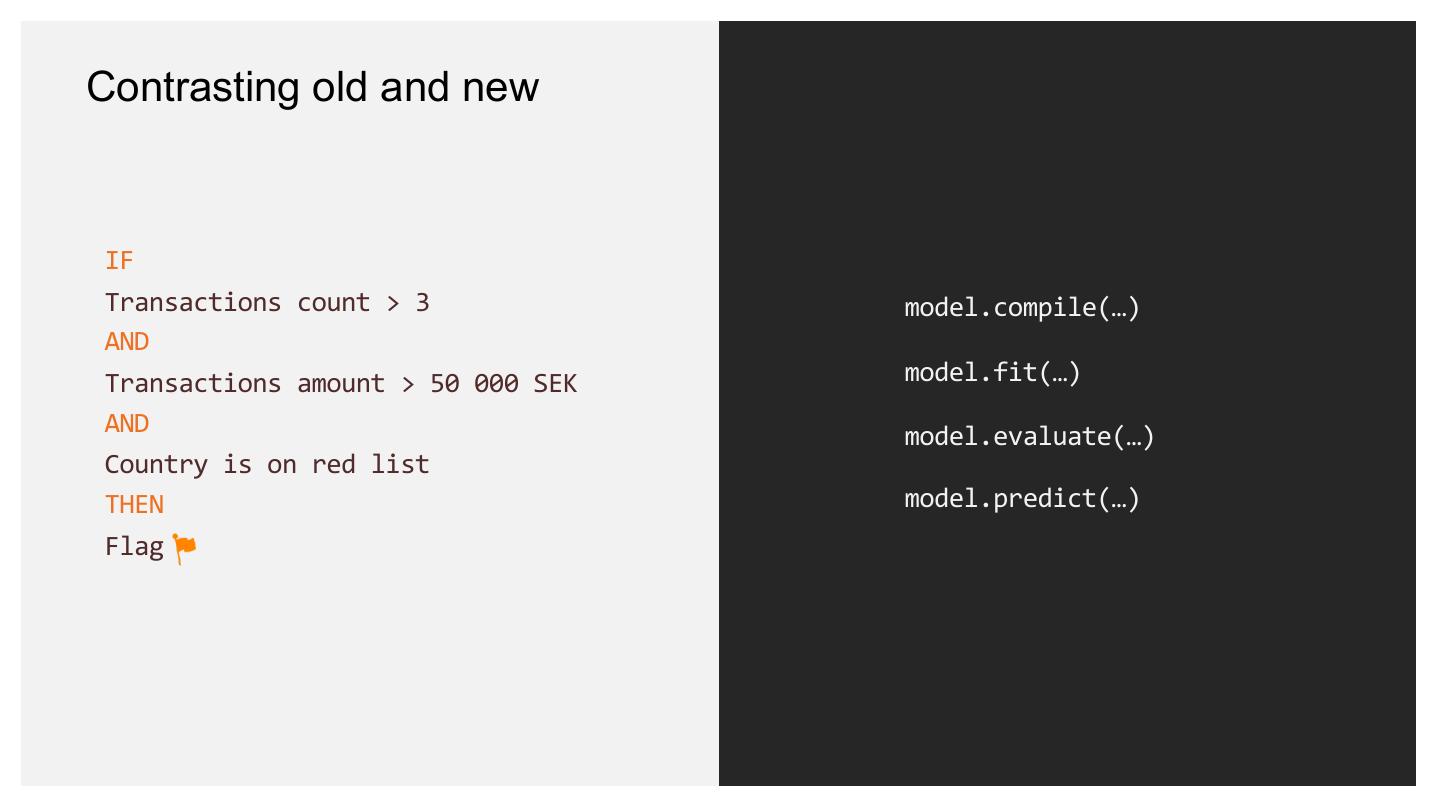
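The last point is the motivation for one-class methods such as Deep SVDD, one of the methods named in the talk's outline: learn what normal behaviour looks like, then score how far a new case falls from it. A minimal numpy sketch of Deep SVDD-style scoring, assuming a fixed random linear map `phi` as a hypothetical stand-in for the trained network and synthetic data in place of real transactions. In Deep SVDD proper, the network weights are trained to minimize the mean of this score over normal data; that training loop is omitted here.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for a trained encoder: a fixed linear map with no
# bias term (Deep SVDD omits biases so the network cannot trivially map
# every input onto the center).
W = rng.normal(size=(8, 4))


def phi(x: np.ndarray) -> np.ndarray:
    return x @ W


def fit_center(normal_x: np.ndarray) -> np.ndarray:
    # The center c is fixed as the mean embedding of the normal training data.
    return phi(normal_x).mean(axis=0)


def anomaly_score(x: np.ndarray, c: np.ndarray) -> np.ndarray:
    # Squared distance to the center: larger means further from "normal".
    return np.sum((phi(x) - c) ** 2, axis=1)


normal = rng.normal(size=(500, 8))  # synthetic "normal" transactions, 8 features
c = fit_center(normal)
outlier = np.full((1, 8), 50.0)     # synthetic case far from the normal data
print(anomaly_score(outlier, c)[0], anomaly_score(normal, c).mean())
```

Only normal data is needed to fit the center, which matches the situation described above: few or no recent fraud cases, but plenty of examples of what is not being looked for.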

15 .Contrasting old and new
- Old (rule-based): IF transactions count > 3 AND transactions amount > 50 000 SEK AND country is on red list THEN flag.
- New (model-based): `model.compile(…)`, `model.fit(…)`, `model.evaluate(…)`, `model.predict(…)`.
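The rule on this slide can be written as a plain predicate. A minimal sketch, assuming a hypothetical `CustomerActivity` record and a placeholder red list (the country codes are illustrative, not the bank's actual list). Every threshold is hand-picked, which is what the model-based column replaces with `fit` and `predict`:

```python
from dataclasses import dataclass

# Hypothetical placeholder codes; the real red list is not given in the talk.
RED_LIST = frozenset({"AA", "BB"})


@dataclass
class CustomerActivity:
    transactions_count: int      # number of transactions in the period
    transactions_amount: float   # total amount in SEK
    country: str                 # counterparty country code


def rule_flag(a: CustomerActivity, red_list=RED_LIST) -> bool:
    """IF count > 3 AND amount > 50 000 SEK AND country on red list THEN flag."""
    return (
        a.transactions_count > 3
        and a.transactions_amount > 50_000
        and a.country in red_list
    )


print(rule_flag(CustomerActivity(4, 60_000, "AA")))  # True
print(rule_flag(CustomerActivity(4, 49_999, "AA")))  # False: just under the threshold
```

A scheme that stays just under any one threshold is never flagged, one reason static scenarios struggle against the evolving fraud schemes described on the previous slide.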

16 .Feature engineering

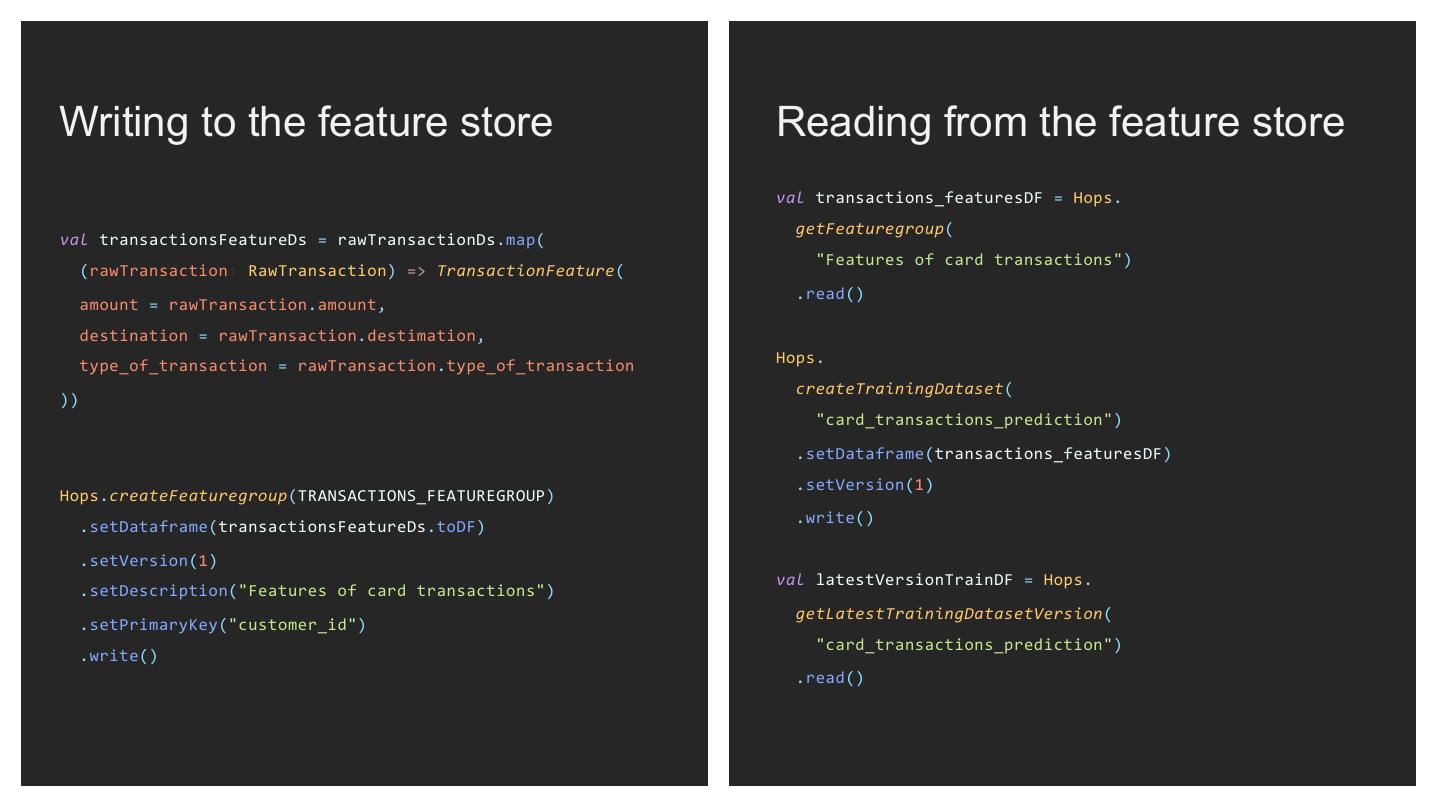

17 .Feature engineering: transactions2vec

Writing to the feature store:

```scala
// Map raw transactions to the engineered feature schema
// (Dataset.map needs an implicit Encoder, e.g. import spark.implicits._)
val transactionsFeatureDs = rawTransactionDs.map(
  (rawTransaction: RawTransaction) => TransactionFeature(
    amount = rawTransaction.amount,
    destination = rawTransaction.destination,
    type_of_transaction = rawTransaction.type_of_transaction
  ))

// Register the features as a feature group, version 1
Hops.createFeaturegroup(TRANSACTIONS_FEATUREGROUP)
  .setDataframe(transactionsFeatureDs.toDF)
  .setVersion(1)
  .setDescription("Features of card transactions")
  .setPrimaryKey("customer_id")
  .write()
```

Reading from the feature store:

```scala
// Read the feature group back as a DataFrame
val transactions_featuresDF = Hops.getFeaturegroup("Features of card transactions")
  .read()

// Materialize it as a versioned training dataset
Hops.createTrainingDataset("card_transactions_prediction")
  .setDataframe(transactions_featuresDF)
  .setVersion(1)
  .write()

// Load the latest version of the training dataset
val latestVersionTrainDF = Hops.getLatestTrainingDatasetVersion("card_transactions_prediction")
  .read()
```
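The talk does not spell out how transactions2vec works. Assuming it follows the word2vec idea its name suggests, each customer's ordered sequence of transactions is treated like a sentence, and (target, context) pairs within a window become skip-gram training examples. A minimal sketch of that pair generation; the token format built from `type_of_transaction` and `destination` is a hypothetical choice, and `transaction_token` and `skipgram_pairs` are illustrative names:

```python
from typing import List, Sequence, Tuple


def transaction_token(type_of_transaction: str, destination: str) -> str:
    # Hypothetical tokenization: one "word" per transaction.
    return f"{type_of_transaction}|{destination}"


def skipgram_pairs(sentence: Sequence[str], window: int = 2) -> List[Tuple[str, str]]:
    """All (target, context) pairs with the context at most `window` positions away."""
    pairs = []
    for i, target in enumerate(sentence):
        lo, hi = max(0, i - window), min(len(sentence), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((target, sentence[j]))
    return pairs


customer_history = [
    transaction_token("card", "grocery"),
    transaction_token("card", "fuel"),
    transaction_token("transfer", "abroad"),
]
print(skipgram_pairs(customer_history, window=1))
# [('card|grocery', 'card|fuel'), ('card|fuel', 'card|grocery'),
#  ('card|fuel', 'transfer|abroad'), ('transfer|abroad', 'card|fuel')]
```

In a Spark pipeline, the same token sequences could instead be fed to Spark ML's `Word2Vec` estimator, which generates the training pairs internally and learns one embedding vector per token.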

18 .Generative Adversarial Networks for anomaly detection

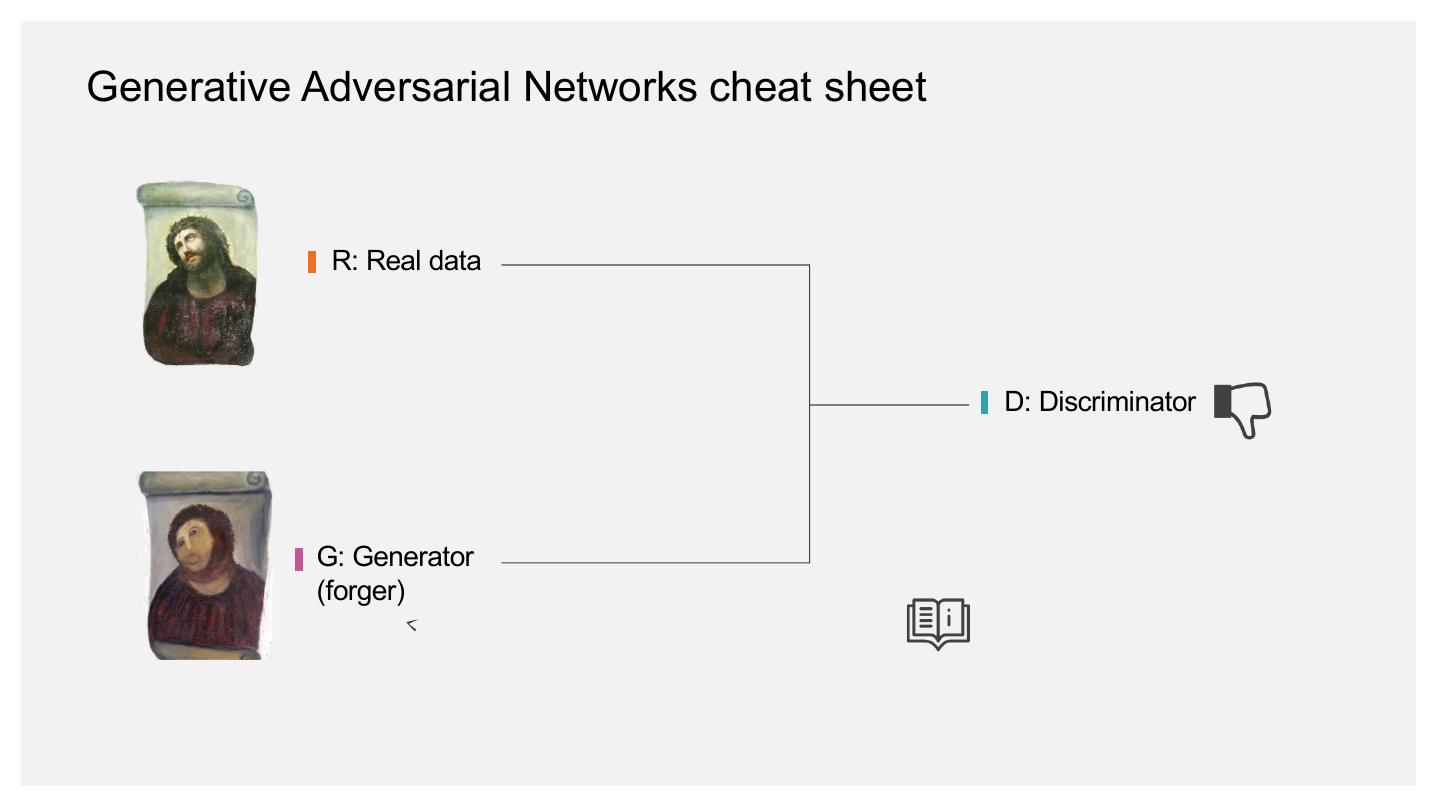

19 .Generative Adversarial Networks cheat sheet: R: real data; D: discriminator; G: generator (forger).

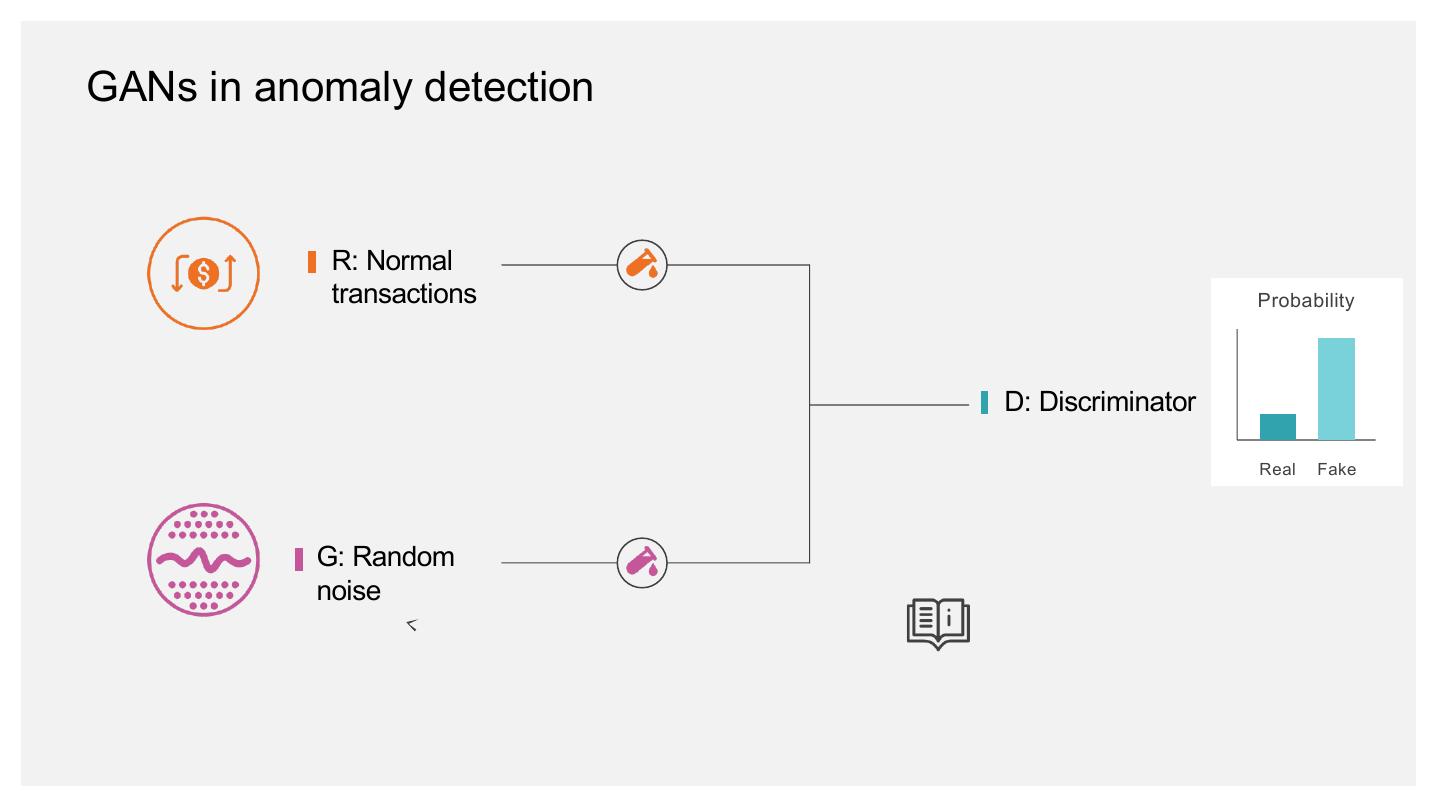
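For reference, the original GAN formulation that the cheat sheet summarizes is a two-player game in which the discriminator D maximizes, and the generator G minimizes, the value function below. Here x is drawn from the real data (R) and z is the random noise fed to G:

```latex
\min_G \max_D \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_z}\left[\log\bigl(1 - D(G(z))\bigr)\right]
```

The loss code on the estimator slides uses the common non-saturating variant for G: rather than minimizing log(1 - D(G(z))), G minimizes -log D(G(z)), which is what labelling the fake logits with ones in `g_loss` computes.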

20 .GANs in anomaly detection. R: normal transactions; G: random noise; D: discriminator, outputting the probability that its input is real or fake.

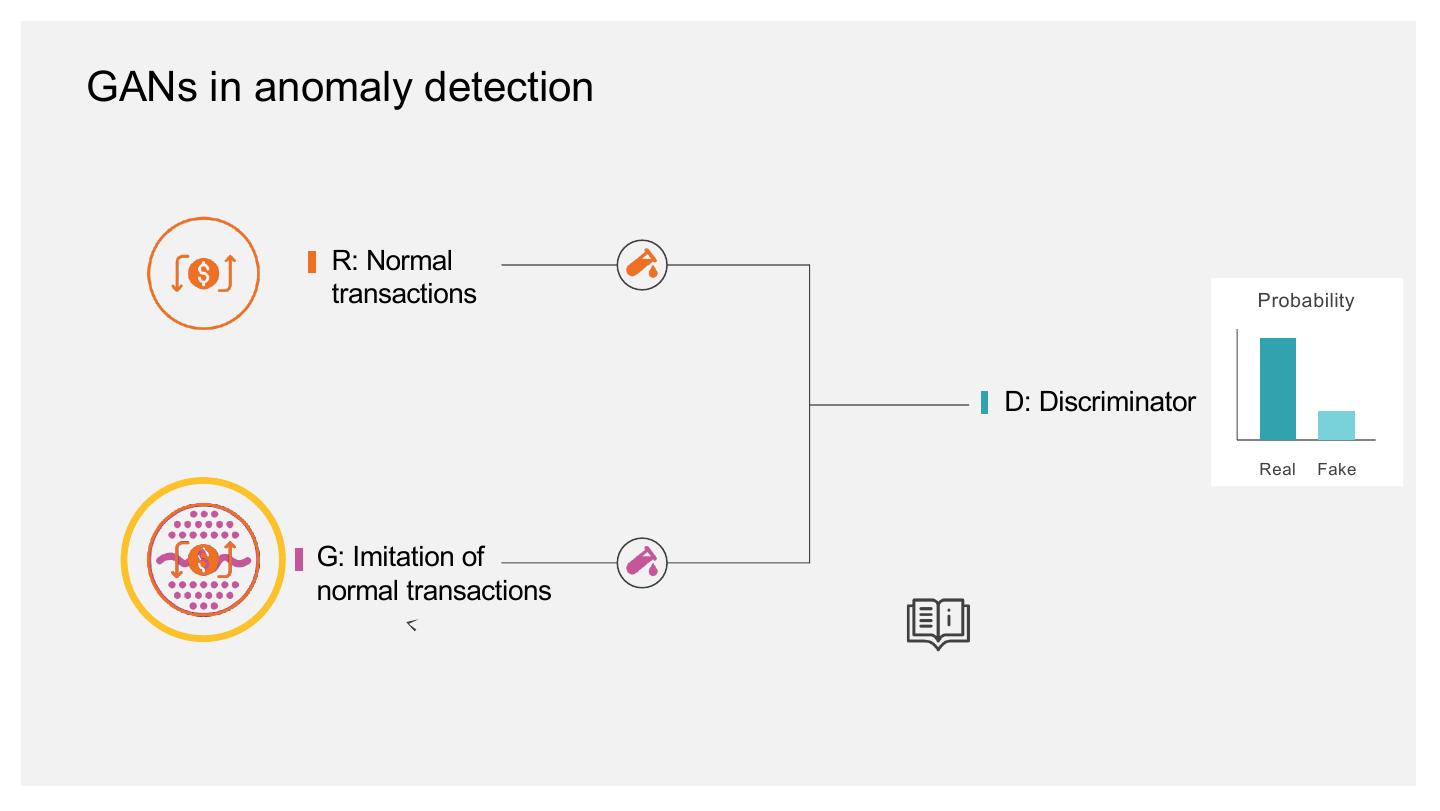

21 .GANs in anomaly detection. R: normal transactions; G: imitation of normal transactions; D: discriminator, outputting the probability that its input is real or fake.

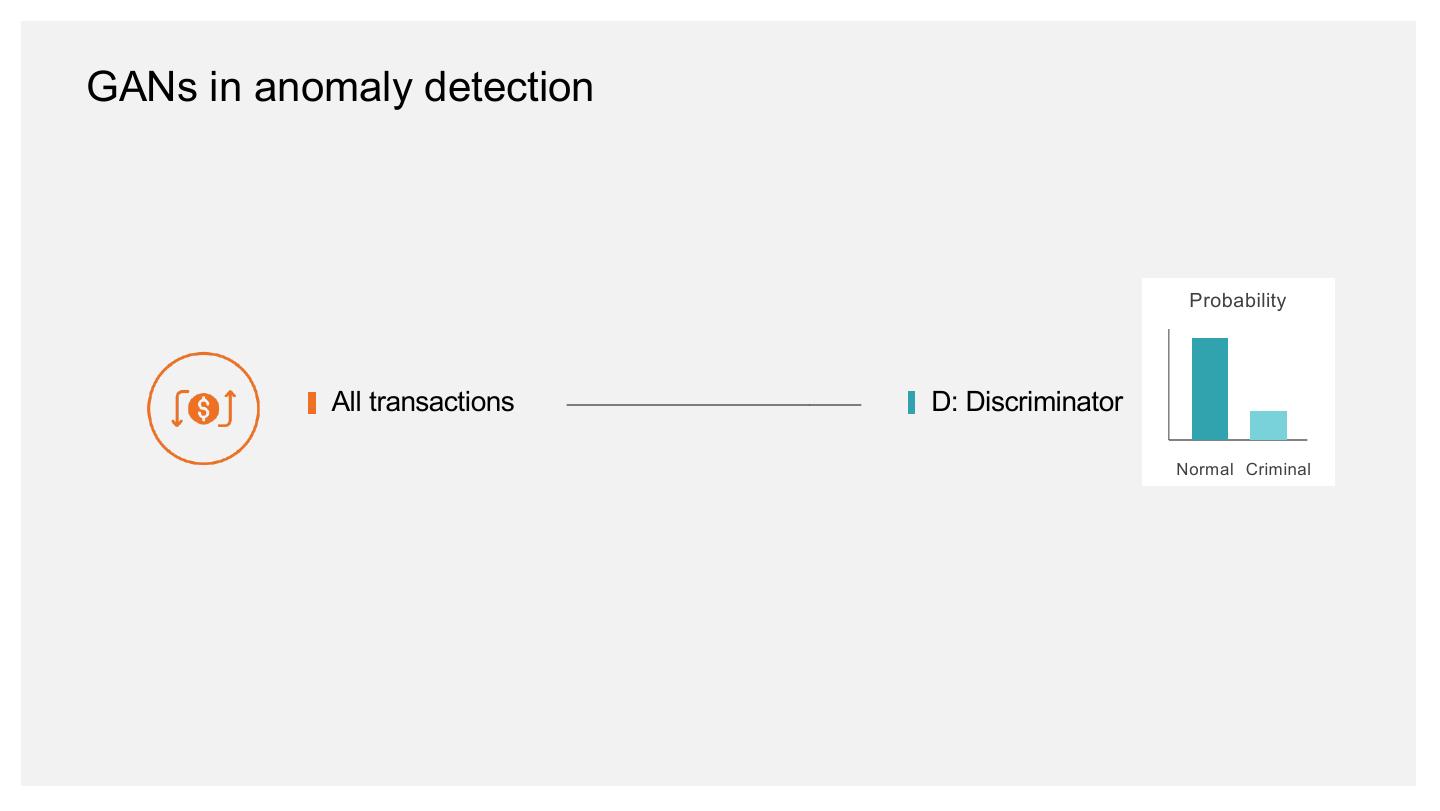

22 .GANs in anomaly detection. All transactions are fed to D, the discriminator, which outputs the probability that each transaction is normal or criminal.
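Once trained, the discriminator's output can serve directly as a score: transactions it considers unlikely to be normal get flagged. A minimal numpy sketch, assuming the discriminator returns one logit per transaction and using a hypothetical threshold of 0.5 on the anomaly score 1 - D(x); in practice the threshold would need to be chosen on validation data:

```python
import numpy as np


def sigmoid(logits: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-logits))


def flag_transactions(d_logits: np.ndarray, threshold: float = 0.5) -> np.ndarray:
    """Flag transactions the discriminator considers unlikely to be normal.

    D(x) = sigmoid(logit) is the probability of "normal"; the anomaly
    score is 1 - D(x), and scores above the threshold are flagged.
    """
    anomaly_score = 1.0 - sigmoid(d_logits)
    return anomaly_score > threshold


# Hypothetical discriminator logits for four transactions.
print(flag_transactions(np.array([3.0, 0.5, -0.5, -4.0])))
# [False False  True  True]
```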

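23 .GAN estimator API

```python
import tensorflow as tf  # TensorFlow 1.x API


def build_gan(real_input, noise):
    # generator_network / discriminator_network are defined elsewhere. In graph
    # mode the discriminator must share weights across both calls, e.g. by
    # building it in tf.variable_scope("discriminator", reuse=tf.AUTO_REUSE).
    fake_input = generator_network(noise)
    d_logit_real = discriminator_network(real_input)
    d_logit_fake = discriminator_network(fake_input)
    return d_logit_real, d_logit_fake


def gan_loss(d_logit_real, d_logit_fake):
    # Discriminator: label real samples 1 and generated samples 0
    real_loss = tf.reduce_mean(tf.nn.sigmoid_cross_entropy_with_logits(
        logits=d_logit_real, labels=tf.ones_like(d_logit_real)))
    fake_loss = tf.reduce_mean(tf.nn.sigmoid_cross_entropy_with_logits(
        logits=d_logit_fake, labels=tf.zeros_like(d_logit_fake)))
    d_loss = real_loss + fake_loss
    # Generator: make the discriminator label generated samples 1
    g_loss = tf.reduce_mean(tf.nn.sigmoid_cross_entropy_with_logits(
        logits=d_logit_fake, labels=tf.ones_like(d_logit_fake)))
    return d_loss, g_loss


def gan_optimizer(d_loss, g_loss, d_learning_rate, opt_type):
    ...  # body not shown on the slide
    return d_opt, g_opt
```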

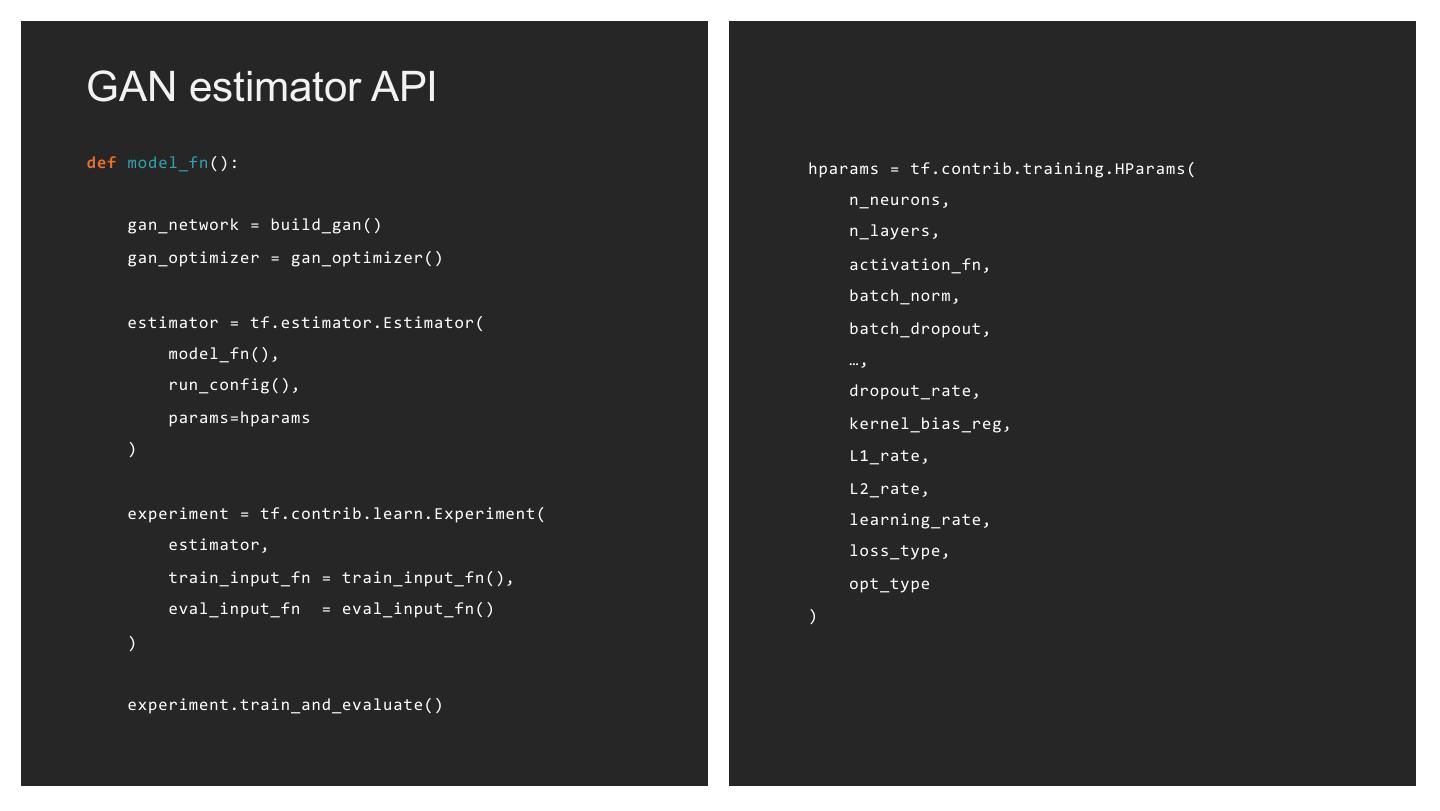
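To make the loss definitions concrete without a TensorFlow graph, here is a numpy sketch of the same computation. The TensorFlow documentation gives `tf.nn.sigmoid_cross_entropy_with_logits` as the numerically stable expression max(x, 0) - x * z + log(1 + exp(-|x|)) for logits x and labels z, which is reproduced below; the logit values in the example are made up:

```python
import numpy as np


def sigmoid_cross_entropy_with_logits(logits, labels):
    """Numerically stable form used by tf.nn.sigmoid_cross_entropy_with_logits."""
    x = np.asarray(logits, dtype=float)
    z = np.asarray(labels, dtype=float)
    return np.maximum(x, 0) - x * z + np.log1p(np.exp(-np.abs(x)))


def gan_loss(d_logit_real, d_logit_fake):
    # D should say "real" (label 1) on real data ...
    real_loss = np.mean(sigmoid_cross_entropy_with_logits(d_logit_real, np.ones_like(d_logit_real)))
    # ... and "fake" (label 0) on generated data.
    fake_loss = np.mean(sigmoid_cross_entropy_with_logits(d_logit_fake, np.zeros_like(d_logit_fake)))
    d_loss = real_loss + fake_loss
    # Non-saturating generator loss: G wants D to call its samples real.
    g_loss = np.mean(sigmoid_cross_entropy_with_logits(d_logit_fake, np.ones_like(d_logit_fake)))
    return d_loss, g_loss


# An undecided discriminator (all logits 0) pays log 2 per term.
d_loss, g_loss = gan_loss(np.zeros(4), np.zeros(4))
print(round(d_loss, 4), round(g_loss, 4))  # 1.3863 0.6931
```

The undecided case is a useful sanity check: at the theoretical equilibrium D outputs 0.5 everywhere, so the discriminator loss sits at 2 log 2.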

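26 .GAN estimator API

```python
import tensorflow as tf  # TensorFlow 1.x (tf.contrib is not available in 2.x)

# Hyperparameters passed to model_fn as `params`
# (placeholder values: the slide lists only the names)
hparams = tf.contrib.training.HParams(
    n_neurons=..., n_layers=..., activation_fn=..., batch_norm=...,
    batch_dropout=..., dropout_rate=..., kernel_bias_reg=...,
    L1_rate=..., L2_rate=..., learning_rate=..., loss_type=..., opt_type=...)


def model_fn(features, labels, mode, params):
    # The Estimator calls model_fn with (features, labels, mode, params)
    gan_network = build_gan(...)        # inputs not shown on the slide
    gan_train_ops = gan_optimizer(...)  # assigning to the name gan_optimizer here
                                        # would shadow the function (UnboundLocalError)
    ...  # returns a tf.estimator.EstimatorSpec (not shown on the slide)


estimator = tf.estimator.Estimator(
    model_fn=model_fn,  # pass the function itself, not model_fn()
    # … (further arguments elided on the slide)
    config=run_config(),
    params=hparams)

# tf.contrib.learn.Experiment is deprecated; tf.estimator.train_and_evaluate
# with a TrainSpec and an EvalSpec is the newer equivalent.
experiment = tf.contrib.learn.Experiment(
    estimator,
    train_input_fn=train_input_fn(),  # each must evaluate to an input function
    eval_input_fn=eval_input_fn())
experiment.train_and_evaluate()
```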

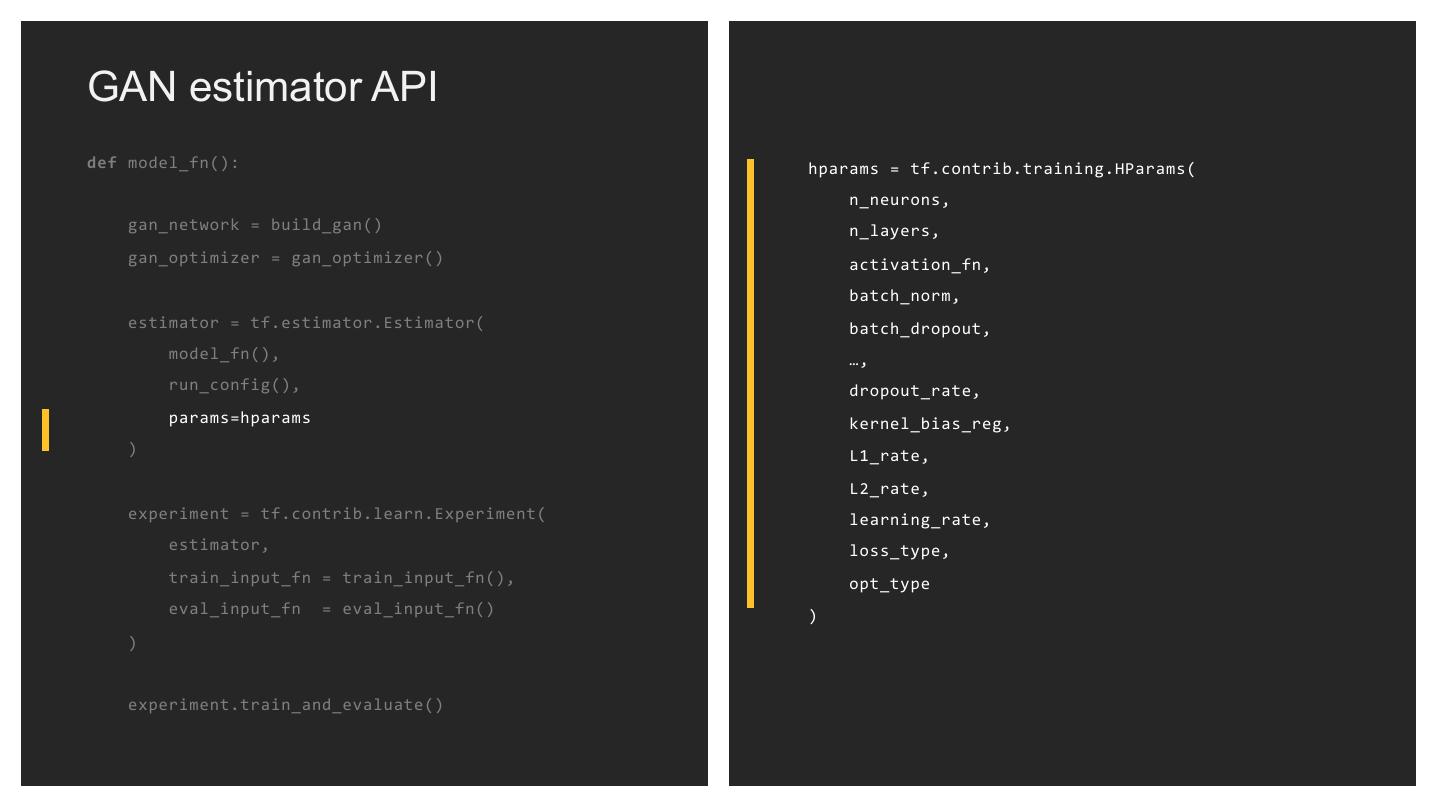
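Collecting the hyperparameters in one `HParams` object makes it easy to sweep over them. A minimal random-search sketch in plain Python; the candidate values are hypothetical, and `train_and_score` is an illustrative stand-in for building the Estimator with the sampled parameters and returning a validation metric:

```python
import random
from typing import Callable, Dict, List

# Hypothetical search space over a subset of the hyperparameter names on the slide.
SEARCH_SPACE: Dict[str, List] = {
    "n_neurons": [32, 64, 128],
    "n_layers": [2, 3, 4],
    "dropout_rate": [0.0, 0.2, 0.5],
    "learning_rate": [1e-4, 1e-3],
    "opt_type": ["adam", "sgd"],
}


def sample_params(space: Dict[str, List], rng: random.Random) -> Dict:
    # Draw one candidate value independently for every hyperparameter.
    return {name: rng.choice(values) for name, values in space.items()}


def random_search(train_and_score: Callable[[Dict], float], n_trials: int, seed: int = 0):
    """Return (best_score, best_params); higher scores are better."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        params = sample_params(SEARCH_SPACE, rng)
        score = train_and_score(params)
        if best is None or score > best[0]:
            best = (score, params)
    return best


# Toy objective for illustration only: prefers wider networks and lower dropout.
best_score, best_params = random_search(
    lambda p: p["n_neurons"] - 100 * p["dropout_rate"], n_trials=20)
print(best_score, best_params)
```

Random search is used here only because it is short; any tuner that produces a dictionary of values could feed the same `params` argument.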

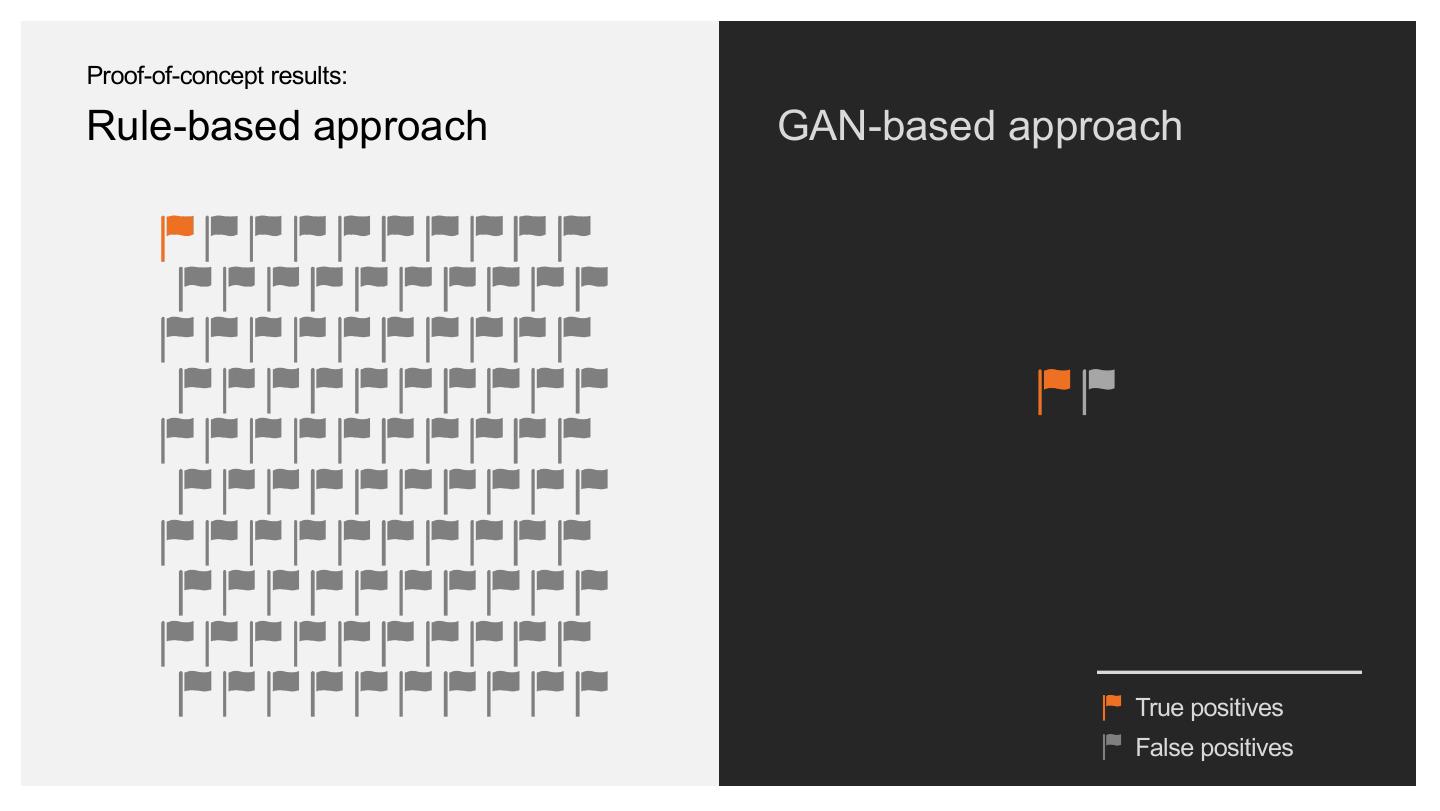

28 .Proof-of-concept results: rule-based approach vs. GAN-based approach, compared by true positives and false positives.

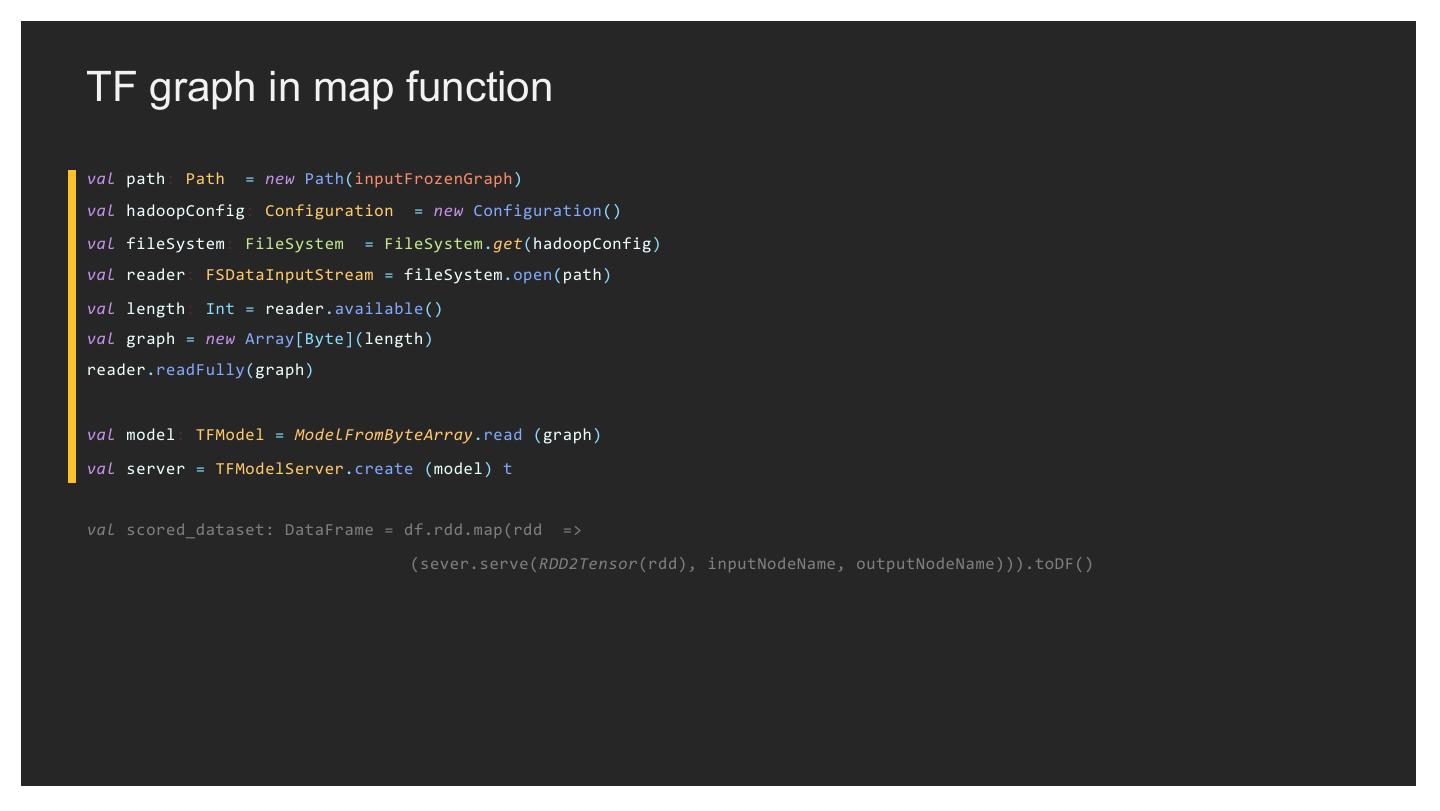

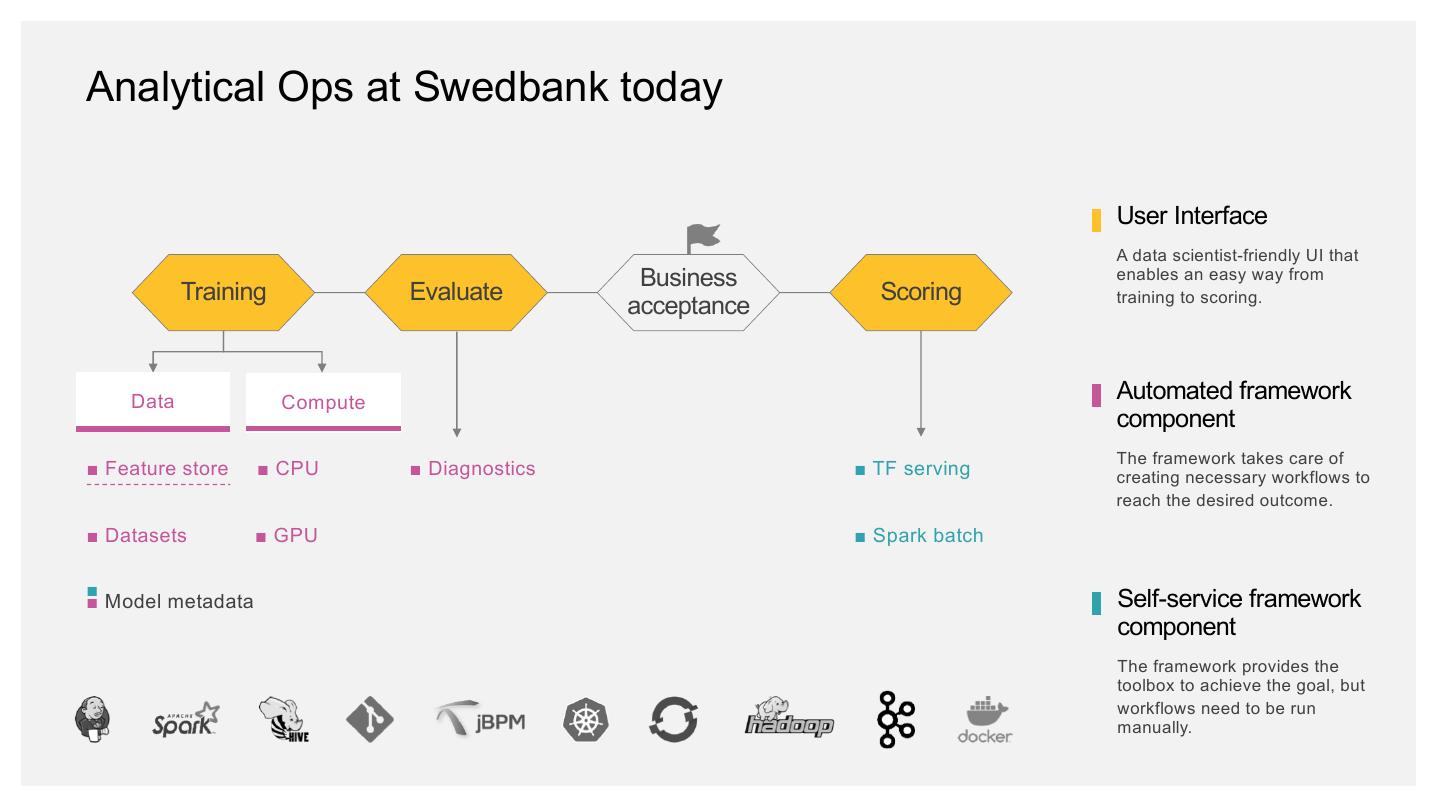
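Counts of true and false positives can be summarized as precision, TP / (TP + FP): the share of flagged cases that turn out to be real. A minimal sketch; the counts below are made up for illustration and are not the talk's results:

```python
def precision(true_positives: int, false_positives: int) -> float:
    """Share of flagged cases that are true positives."""
    flagged = true_positives + false_positives
    if flagged == 0:
        raise ValueError("no cases flagged")
    return true_positives / flagged


# Hypothetical counts, for illustration only.
rule_based = precision(true_positives=10, false_positives=990)
model_based = precision(true_positives=30, false_positives=270)
print(f"{rule_based:.1%} vs {model_based:.1%}")  # 1.0% vs 10.0%
```

Recall would additionally need the number of missed cases (false negatives), which a comparison of true and false positives alone does not show.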

29 .Model Deployment (TensorFlow Serving)
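TensorFlow Serving exposes a REST endpoint of the form `POST http://<host>:<port>/v1/models/<model_name>:predict` that takes a JSON body `{"instances": [...]}` and answers with `{"predictions": [...]}`; port 8501 is the REST port conventionally used, for example by the official Docker image. A minimal standard-library sketch of a client; the host, the model name `transaction_gan` and the feature layout are hypothetical:

```python
import json
import urllib.request
from typing import List, Sequence


def build_predict_request(host: str, model_name: str,
                          instances: Sequence[Sequence[float]],
                          port: int = 8501) -> urllib.request.Request:
    """Build (but do not send) a TensorFlow Serving REST predict request."""
    url = f"http://{host}:{port}/v1/models/{model_name}:predict"
    body = json.dumps({"instances": [list(x) for x in instances]}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST")


def predict(request: urllib.request.Request, timeout: float = 5.0) -> List:
    # The response JSON holds one prediction per instance under "predictions".
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())["predictions"]


req = build_predict_request("localhost", "transaction_gan", [[120.0, 1.0, 0.0]])
print(req.full_url)  # http://localhost:8501/v1/models/transaction_gan:predict
```

Keeping request construction separate from sending makes the payload easy to check without a running server; `predict(req)` performs the actual call.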