Accelerating TensorFlow Model Inference with the Intel Low Precision Optimization Tool (LPOT)

1 . Apply Intel® Low Precision Optimization Tool (LPOT/iLiT) to Speed Up TensorFlow Inference

Zhang, Jianyu

AI TCE IAGS

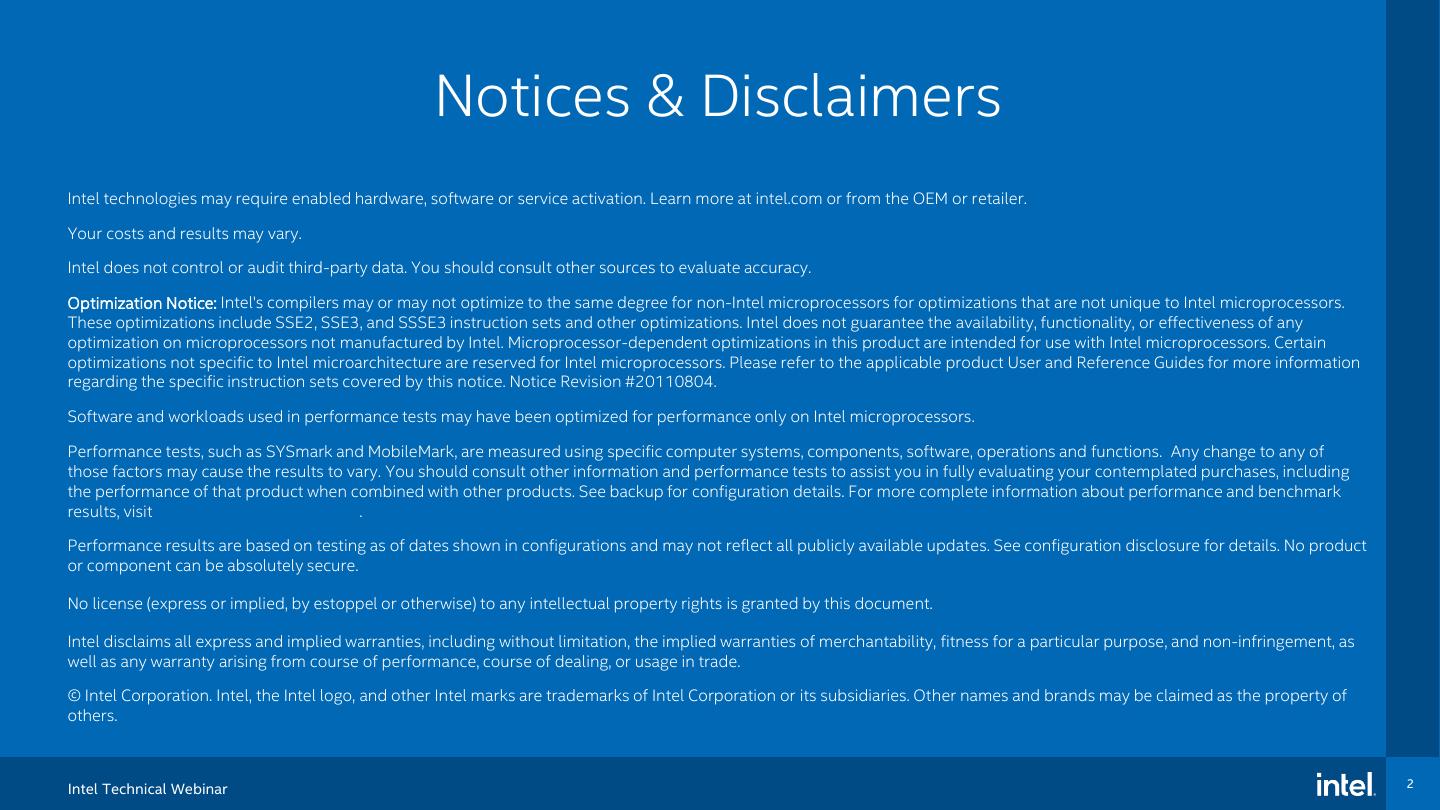

2 . Notices & Disclaimers

Intel technologies may require enabled hardware, software or service activation. Learn more at intel.com or from the OEM or retailer. Your costs and results may vary. Intel does not control or audit third-party data. You should consult other sources to evaluate accuracy.

Optimization Notice: Intel's compilers may or may not optimize to the same degree for non-Intel microprocessors for optimizations that are not unique to Intel microprocessors. These optimizations include SSE2, SSE3, and SSSE3 instruction sets and other optimizations. Intel does not guarantee the availability, functionality, or effectiveness of any optimization on microprocessors not manufactured by Intel. Microprocessor-dependent optimizations in this product are intended for use with Intel microprocessors. Certain optimizations not specific to Intel microarchitecture are reserved for Intel microprocessors. Please refer to the applicable product User and Reference Guides for more information regarding the specific instruction sets covered by this notice. Notice Revision #20110804. https://software.intel.com/en-us/articles/optimization-notice

Software and workloads used in performance tests may have been optimized for performance only on Intel microprocessors. Performance tests, such as SYSmark and MobileMark, are measured using specific computer systems, components, software, operations and functions. Any change to any of those factors may cause the results to vary. You should consult other information and performance tests to assist you in fully evaluating your contemplated purchases, including the performance of that product when combined with other products. See backup for configuration details. For more complete information about performance and benchmark results, visit www.intel.com/benchmarks. Performance results are based on testing as of dates shown in configurations and may not reflect all publicly available updates. See configuration disclosure for details.

No product or component can be absolutely secure. No license (express or implied, by estoppel or otherwise) to any intellectual property rights is granted by this document. Intel disclaims all express and implied warranties, including without limitation, the implied warranties of merchantability, fitness for a particular purpose, and non-infringement, as well as any warranty arising from course of performance, course of dealing, or usage in trade.

© Intel Corporation. Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries. Other names and brands may be claimed as the property of others.

Intel Technical Webinar

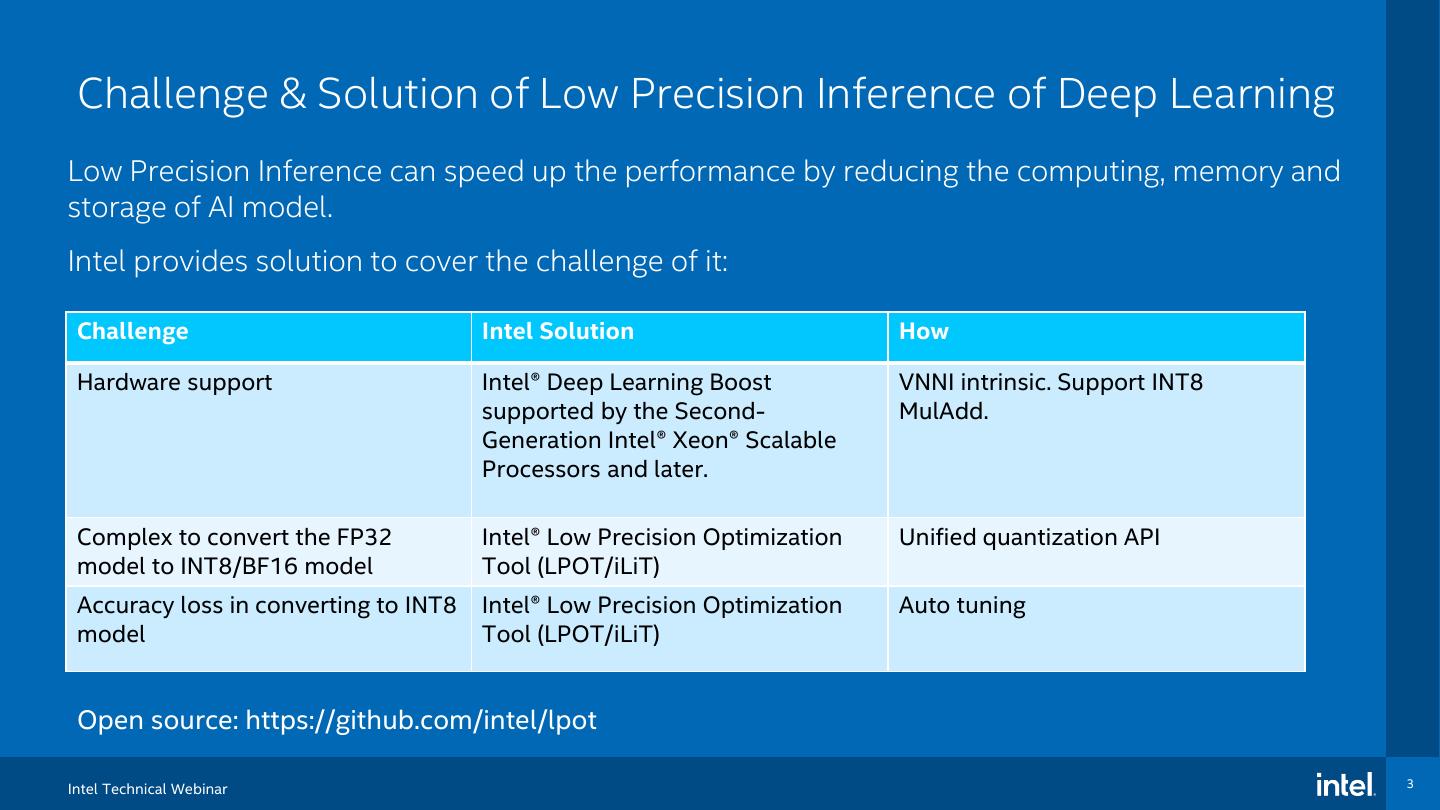

3 . Challenge & Solution of Low Precision Inference of Deep Learning

Low-precision inference speeds up an AI model by reducing its compute, memory, and storage requirements. Intel provides a solution for each challenge it raises:

| Challenge | Intel Solution | How |
| --- | --- | --- |
| Hardware support | Intel® Deep Learning Boost, supported by the Second-Generation Intel® Xeon® Scalable Processors and later | VNNI intrinsics; supports INT8 MulAdd |
| Converting the FP32 model to an INT8/BF16 model is complex | Intel® Low Precision Optimization Tool (LPOT/iLiT) | Unified quantization API |
| Accuracy loss when converting to an INT8 model | Intel® Low Precision Optimization Tool (LPOT/iLiT) | Auto-tuning |

Open source: https://github.com/intel/lpot
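To make the memory saving concrete, here is a minimal sketch of symmetric per-tensor INT8 quantization in plain Python. It is an illustration only, not LPOT's implementation: the function names `quantize_int8` and `dequantize` and the sample weights are assumptions made for this example.

```python
from array import array


def quantize_int8(values):
    """Symmetric per-tensor quantization: floats -> INT8 codes plus one scale."""
    max_abs = max((abs(v) for v in values), default=0.0)
    # Map the largest magnitude to 127; use 1.0 for an all-zero tensor.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    codes = array("b", (max(-127, min(127, round(v / scale))) for v in values))
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate floating-point values from INT8 codes."""
    return [c * scale for c in codes]


# Hypothetical weights, chosen only for illustration.
weights = [0.02, -1.27, 0.5, 0.731, -0.004]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)

fp32_bytes = array("f", weights).itemsize * len(weights)  # FP32 storage
int8_bytes = codes.itemsize * len(codes)                  # INT8 storage
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("INT8 codes:", list(codes), "scale:", scale)
print("bytes FP32 vs INT8:", fp32_bytes, int8_bytes, "max error:", max_error)
```

Storage drops by a factor of four, and the rounding error per value is bounded by half the scale. That error is the source of the accuracy loss listed in the table, which is why a tuning step is needed.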

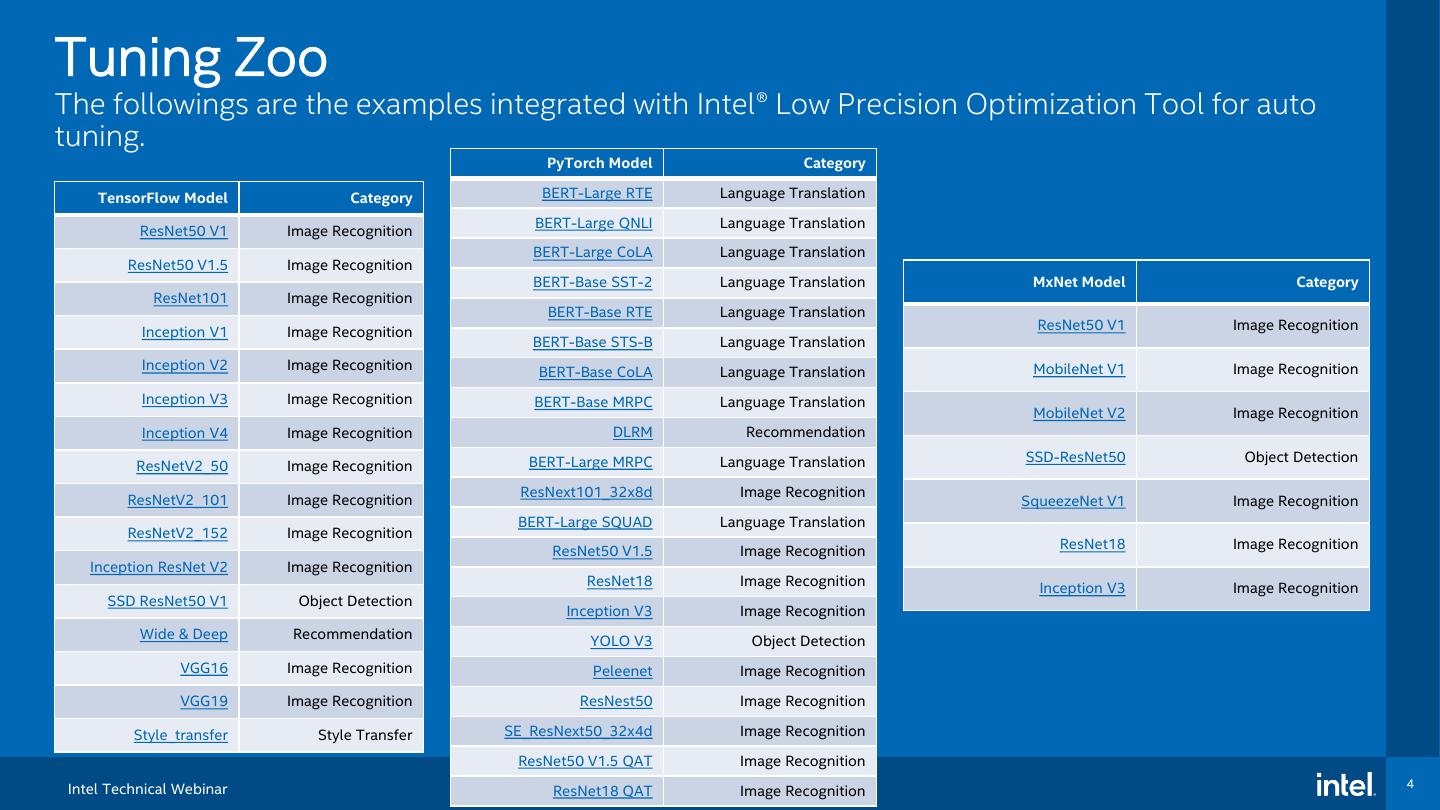

4 . Tuning Zoo

The following examples are integrated with Intel® Low Precision Optimization Tool for auto-tuning.

| TensorFlow Model | Category |
| --- | --- |
| ResNet50 V1 | Image Recognition |
| ResNet50 V1.5 | Image Recognition |
| ResNet101 | Image Recognition |
| Inception V1 | Image Recognition |
| Inception V2 | Image Recognition |
| Inception V3 | Image Recognition |
| Inception V4 | Image Recognition |
| ResNetV2_50 | Image Recognition |
| ResNetV2_101 | Image Recognition |
| ResNetV2_152 | Image Recognition |
| Inception ResNet V2 | Image Recognition |
| SSD ResNet50 V1 | Object Detection |
| Wide & Deep | Recommendation |
| VGG16 | Image Recognition |
| VGG19 | Image Recognition |
| Style_transfer | Style Transfer |

| PyTorch Model | Category |
| --- | --- |
| BERT-Large RTE | Language Translation |
| BERT-Large QNLI | Language Translation |
| BERT-Large CoLA | Language Translation |
| BERT-Base SST-2 | Language Translation |
| BERT-Base RTE | Language Translation |
| BERT-Base STS-B | Language Translation |
| BERT-Base CoLA | Language Translation |
| BERT-Base MRPC | Language Translation |
| DLRM | Recommendation |
| BERT-Large MRPC | Language Translation |
| ResNext101_32x8d | Image Recognition |
| BERT-Large SQUAD | Language Translation |
| ResNet50 V1.5 | Image Recognition |
| ResNet18 | Image Recognition |
| Inception V3 | Image Recognition |
| YOLO V3 | Object Detection |
| Peleenet | Image Recognition |
| ResNest50 | Image Recognition |
| SE_ResNext50_32x4d | Image Recognition |
| ResNet50 V1.5 QAT | Image Recognition |
| ResNet18 QAT | Image Recognition |

| MxNet Model | Category |
| --- | --- |
| ResNet50 V1 | Image Recognition |
| MobileNet V1 | Image Recognition |
| MobileNet V2 | Image Recognition |
| SSD-ResNet50 | Object Detection |
| SqueezeNet V1 | Image Recognition |
| ResNet18 | Image Recognition |
| Inception V3 | Image Recognition |

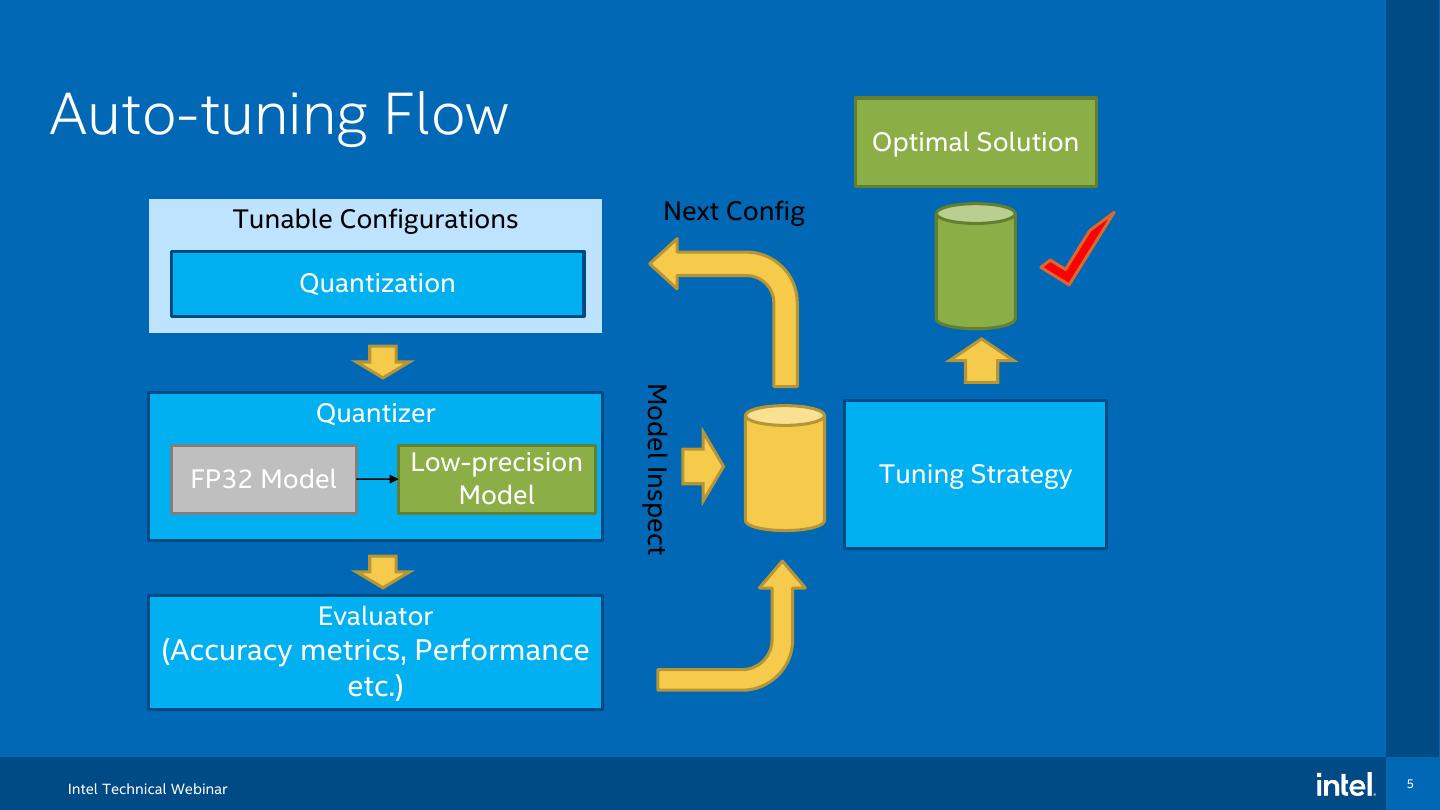

5 . Auto-tuning Flow

Diagram labels: FP32 Model, Tunable Configurations, Tuning Strategy, Next Config, Model Inspect, Quantization, Quantizer, Low-precision Model, Evaluator (accuracy metrics, performance, etc.), Optimal Solution.
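One way to read the flow is as a loop: pick the next configuration, quantize the FP32 model, evaluate the result, and stop once the accuracy goal is met. The sketch below illustrates that loop with toy stand-ins. Everything in it (`auto_tune`, `quantize_toy`, `evaluate_toy`, the `bits` setting, the 1% loss threshold) is a hypothetical assumption for illustration, not LPOT's internal code or API.

```python
def auto_tune(fp32_model, configs, quantize, evaluate, max_relative_loss=0.01):
    """Return the first (model, config) whose accuracy loss is within the goal."""
    baseline = evaluate(fp32_model)
    for config in configs:                        # tuning strategy: next config
        candidate = quantize(fp32_model, config)  # quantizer -> low-precision model
        accuracy = evaluate(candidate)            # evaluator
        if baseline - accuracy <= max_relative_loss * baseline:
            return candidate, config              # goal met: stop tuning
    return None, None                             # no config met the goal


# Toy stand-ins: the "model" is a list of weights, and "accuracy" is
# 1 minus the mean absolute deviation from the original weights.
REFERENCE = [0.9, -0.35, 0.12, 0.6]


def quantize_toy(model, config):
    levels = 2 ** (config["bits"] - 1) - 1
    scale = max(abs(v) for v in model) / levels
    return [round(v / scale) * scale for v in model]


def evaluate_toy(model):
    return 1.0 - sum(abs(a - b) for a, b in zip(REFERENCE, model)) / len(model)


# Try the most aggressive setting first, then fall back to wider ones.
tuned, best_config = auto_tune(
    REFERENCE, [{"bits": 2}, {"bits": 4}, {"bits": 8}], quantize_toy, evaluate_toy
)
print("selected config:", best_config)
```

In this toy setup the 2-bit and 4-bit candidates lose more than 1% of the baseline score, so the loop settles on the 8-bit configuration. A real tuning strategy would search a much larger configuration space.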

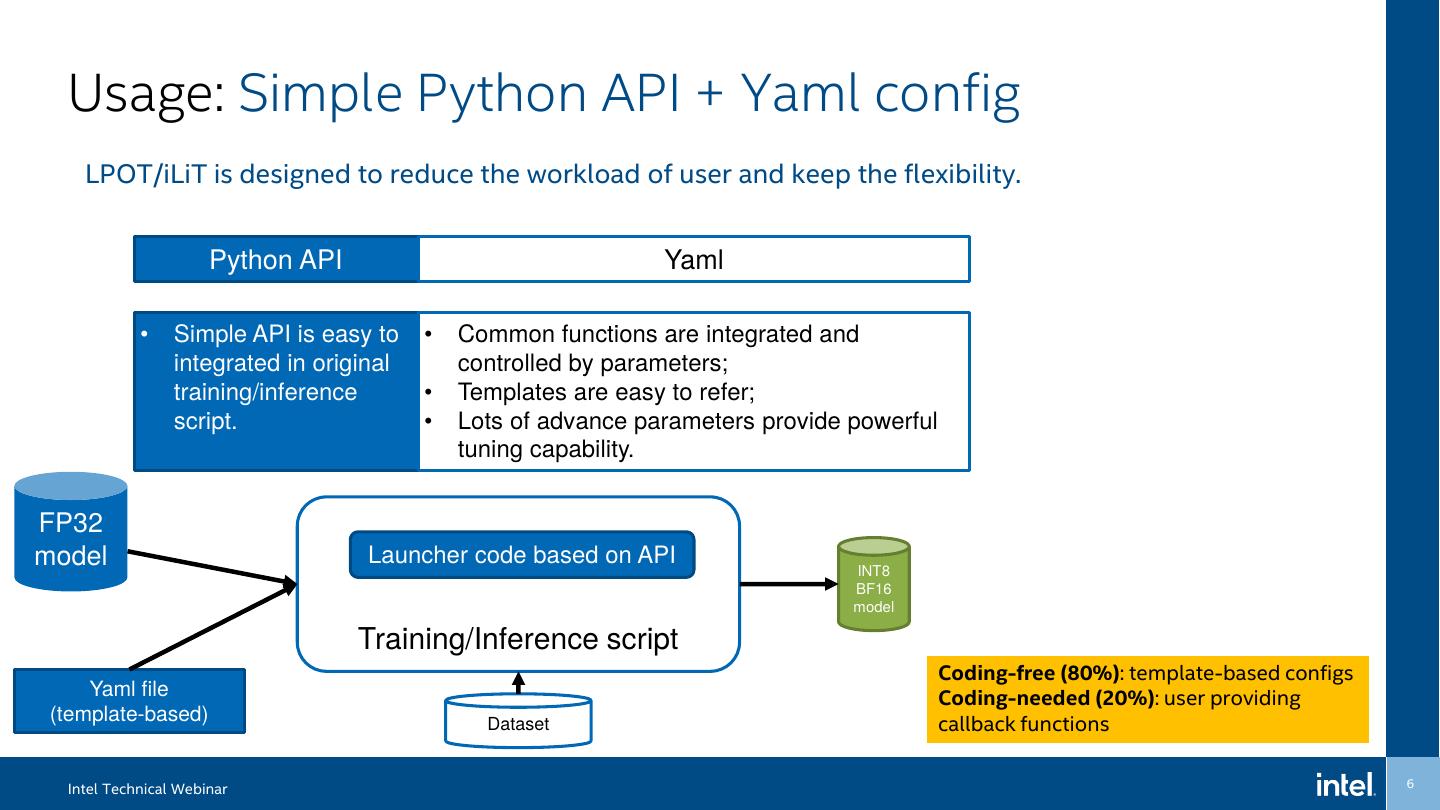

6 . Usage: Simple Python API + Yaml config

LPOT/iLiT is designed to reduce the user's workload while keeping flexibility.

Python API

- The simple API is easy to integrate into the original training/inference script.

Yaml

- Common functions are integrated and controlled by parameters.
- Templates are easy to refer to.
- Many advanced parameters provide powerful tuning capability.

Diagram labels: FP32 model, Training/Inference script, Launcher code based on API, Yaml file (template-based), Dataset, INT8/BF16 model.

- Coding-free (80%): template-based configs.
- Coding-needed (20%): user-provided callback functions.
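The "coding-needed" part typically amounts to a data source and an evaluation callback. The following is a minimal sketch of what such user-provided pieces might look like. The class `ToyDataloader`, the function `top1_accuracy`, and the convention of a `batch_size` attribute with `(inputs, labels)` batches are assumptions for illustration; how they are passed to the tool depends on the installed LPOT/iLiT version, so that call is deliberately left out.

```python
class ToyDataloader:
    """Hypothetical data source that yields (inputs, labels) batches."""

    def __init__(self, samples, labels, batch_size=2):
        if len(samples) != len(labels):
            raise ValueError("samples and labels must have the same length")
        self.samples = samples
        self.labels = labels
        self.batch_size = batch_size

    def __iter__(self):
        # Slice the data into consecutive batches; the last one may be smaller.
        for start in range(0, len(self.samples), self.batch_size):
            end = start + self.batch_size
            yield self.samples[start:end], self.labels[start:end]


def top1_accuracy(predict, dataloader):
    """Hypothetical evaluation callback: fraction of correct predictions."""
    correct = total = 0
    for inputs, labels in dataloader:
        for x, y in zip(inputs, labels):
            correct += int(predict(x) == y)
            total += 1
    return correct / total if total else 0.0


# Toy data and a threshold "model", used only to exercise the callbacks.
loader = ToyDataloader(samples=[0.1, 0.8, 0.4, 0.9, 0.3], labels=[0, 1, 0, 1, 1])
batches = list(loader)
accuracy = top1_accuracy(lambda x: int(x > 0.5), loader)
print("batches:", len(batches), "accuracy:", accuracy)
```

Keeping the dataset and metric in small callbacks like these leaves the rest of the tuning setup in the Yaml template.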

7 . Installation

▪ Install from oneAPI AI Analytics Toolkit

    source /opt/intel/oneapi/setvars.sh
    conda activate tensorflow
    cd /opt/intel/oneapi/iLiT/latest
    sudo ./install_iLiT.sh

▪ Install from source

    git clone https://github.com/intel/lpot.git
    cd lpot
    python setup.py install

▪ Install from binary

    # install from pip
    pip install lpot
    # install from conda
    conda install lpot -c intel -c conda-forge
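After any of these installation routes, a quick check from Python can confirm that the package is visible in the active environment. This is a small sketch using only the standard library; it assumes the import names match the package names `lpot` and `ilit` used in the install commands, and the helper `find_installed` is hypothetical.

```python
import importlib.util


def find_installed(candidates=("lpot", "ilit")):
    """Return the candidate module names importable in this environment."""
    return [name for name in candidates if importlib.util.find_spec(name) is not None]


installed = find_installed()
if installed:
    print("Found:", ", ".join(installed))
else:
    print("Neither lpot nor ilit is importable; revisit the installation steps.")
```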

8 . Code

Intel oneAPI Toolkit Samples: https://github.com/oneapi-src/oneAPI-samples

iLiT Sample for Tensorflow: https://github.com/oneapi-src/oneAPI-samples/tree/master/AI-and-Analytics/Getting-Started-Samples/iLiT-Sample-for-Tensorflow

9 . Prepare

PIP

Prepare Running Env:

    python -m venv lpot_demo --without-pip
    source lpot_demo/bin/activate
    wget bootstrap.pypa.io/get-pip.py
    python get-pip.py
    pip install intel-tensorflow ilit notebook matplotlib

or

    pip install intel-tensorflow lpot notebook matplotlib

Run: ./run_jupyter.sh

Stop: jupyter notebook stop

oneAPI

Prepare Running Env:

    source /opt/intel/oneapi/setvars.sh
    conda activate tensorflow
    cd /opt/intel/oneapi/iLiT/latest
    sudo ./install_iLiT.sh
    python -m pip install notebook matplotlib

Run: ./run_jupyter.sh

Stop: jupyter notebook stop

10 . Thank you