- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

Running MongoDB in Production part 2

你是一个经验丰富的MySQLDBA,需要添加MongoDB到你的技能中吗?您是否习惯于管理一个运行良好的小环境,但想知道您可能还不知道的内容?

MongoDB工作得很好,但是当它有问题时,第一个问题是“我应该去哪里解决问题?”

本教程将涵盖:

体系结构和高可用性

复制最佳实践

使用读写关注点

灾难恢复注意事项

硬件

MongoDB硬件主题

储存建议

系统CPU注意事项

网络体系结构

调整MongoDB

存储引擎

耐久性

不启用的内容

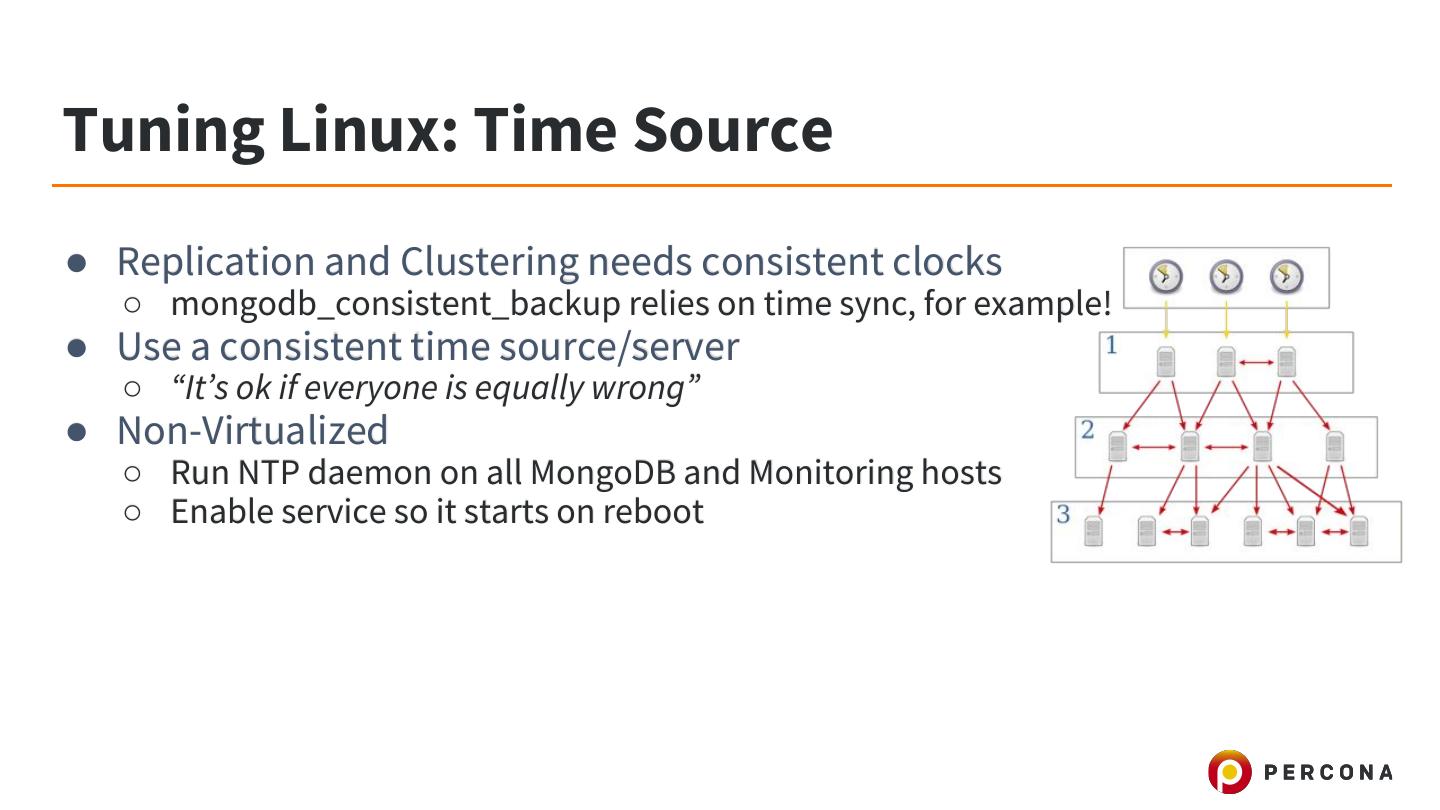

调整Linux

内核优化最佳实践

文件系统和磁盘建议

虚拟内存建议

部署最佳实践

展开查看详情

1 .Running MongoDB in Production, Part II Tim Vaillancourt Sr Technical Operations Architect, Percona Speaker Name

2 .`whoami` { name: “tim”, lastname: “vaillancourt”, employer: “percona”, techs: [ “mongodb”, “mysql”, “cassandra”, “redis”, “rabbitmq”, “solr”, “mesos” “kafka”, “couch*”, “python”, “golang” ] }

3 .Agenda ● Architecture and High-Availability ● Hardware ● Tuning MongoDB ● Tuning Linux

4 .Architecture and High-Availability

5 .High Availability ● Replication ○ Asynchronous ■ Write Concerns can provide psuedo-synchronous replication ■ Changelog based, using the “Oplog” ○ Maximum 50 members ○ Maximum 7 voting members ■ Use “vote:0” for members $gt 7 ○ Oplog ■ The “oplog.rs” capped-collection in “local” storing changes to data ■ Read by secondary members for replication ■ Written to by local node after “apply” of operation

6 .Architecture ● Datacenter Recommendations ○ Minimum of 3 x physical servers required for High-Availability ○ Ensure only 1 x member per Replica Set is on a single physical server!!! ● EC2 / Cloud Recommendations ○ Place Replica Set members in 3 Availability Zones, same region ○ Use a hidden-secondary node for Backup and Disaster Recovery in another region ○ Entire Availability Zones have been lost before!

7 .Hardware

8 .Hardware: Mainframe vs Commodity ● Databases: The Past ○ Buy some really amazing, expensive hardware ○ Buy some crazy expensive license ■ Don’t run a lot of servers due to above ○ Scale up: ■ Buy even more amazing hardware for monolithic host ■ Hardware came on a truck ○ HA: When it rains, it pours

9 .Hardware: Mainframe vs Commodity ● Databases: A New Era ○ Everything fails, nothing is precious ○ Elastic infrastructures (“The cloud”, Mesos, etc) ○ Scale up: add more cheap, commodity servers ○ HA: lots of cheap, commodity servers - still up!

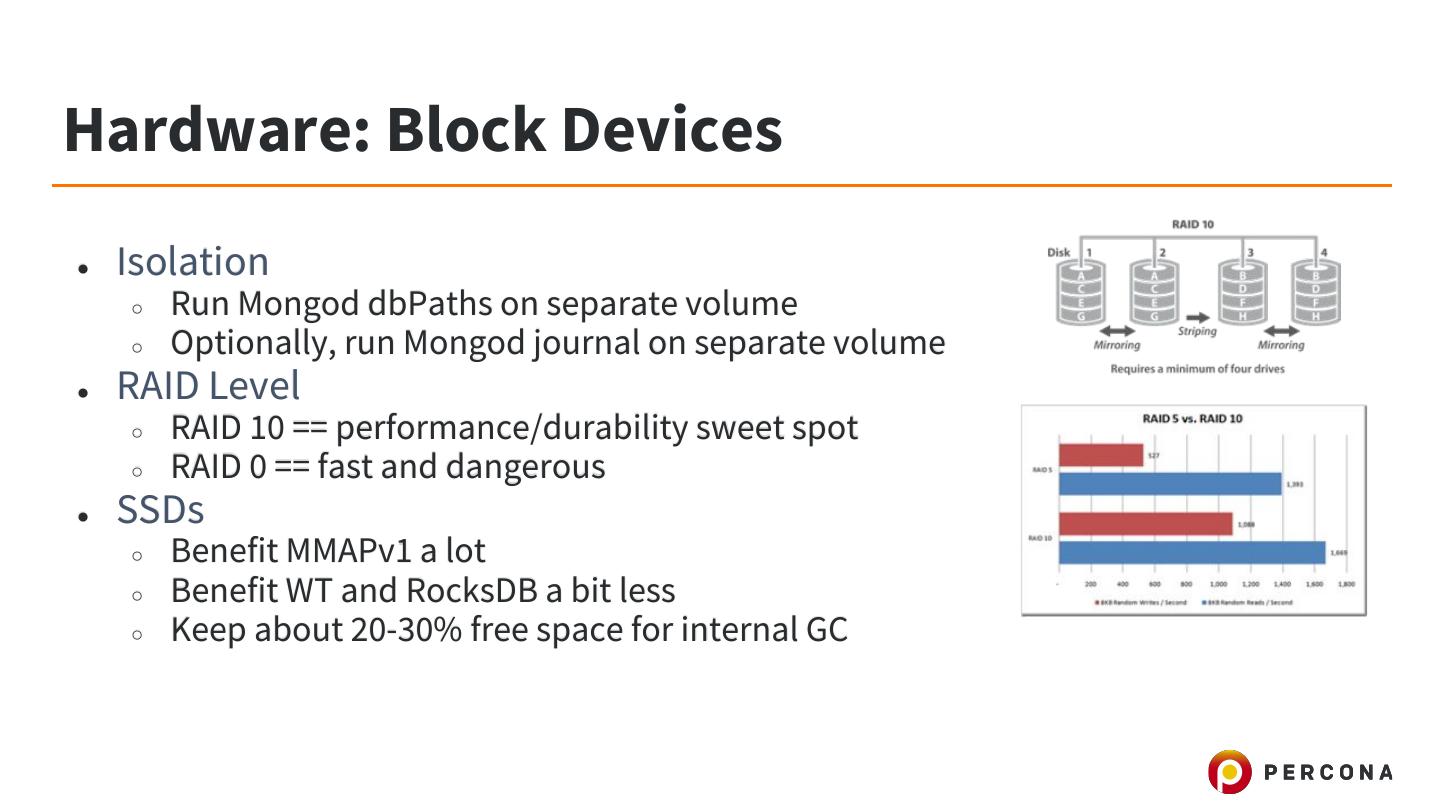

10 .Hardware: Block Devices ● Isolation ○ Run Mongod dbPaths on separate volume ○ Optionally, run Mongod journal on separate volume ● RAID Level ○ RAID 10 == performance/durability sweet spot ○ RAID 0 == fast and dangerous ● SSDs ○ Benefit MMAPv1 a lot ○ Benefit WT and RocksDB a bit less ○ Keep about 20-30% free space for internal GC

11 .Hardware: Block Devices ● EBS / NFS / iSCSI ○ Risks / Drawbacks ■ Exponentially more things to break (more on this) ■ Block device requests wrapped in TCP is extremely slow ■ You probably already paid for some fast local disks ■ More difficult (sometimes nearly-impossible) to troubleshoot ■ MongoDB doesn’t really benefit from remote storage features/flexibility ● Built-in High-Availability of data via replication ● MongoDB replication can bootstrap new members ● Strong write concerns can be specified for critical data

12 .Hardware: Block Devices ● EBS / NFS / iSCSI ○ Things to break or troubleshoot… ■ Application needs a block from disk ■ System call to kernel for block ■ Kernel frames block request in TCP ● No logic to align block sizes ■ TCP connection/handshake (if not pooled) ■ TCP packet moves across wire, routers, switches ● Ethernet is exponentially slower than SATA/SAS/SCSI ■ Storage server parses TCP to block device ■ Storage server system calls to kernel for block ■ Storage server storage driver calls RAID/storage controller ■ Block is returned (finally!)

13 .Hardware: CPUs ● Cores vs Core Speed ○ Lots of cores > faster cores (4 CPU minimum recommended) ○ Thread-per-connection Model ● CPU Frequency Scaling ○ ‘cpufreq’: a daemon for dynamic scaling of the CPU frequency ○ Terrible idea for databases or any predictability! ○ Disable or set governor to 100% frequency always, i.e mode: ‘performance’ ○ Disable any BIOS-level performance/efficiency tuneable ○ Set ENERGY_PERF_BIAS to ‘performance’ on CentOS/Red Hat

14 .Hardware: Network Infrastructure ● Datacenter Tiers ○ Network Edge ○ Public Server VLAN ■ Servers with Public NAT and/or port forwards from Network Edge ■ Examples: Proxies, Static Content, etc ■ Calls backends in Backend VLAN ○ Backend Server VLAN ■ Servers with port forwarding from Public Server VLAN (w/Source IP ACLs) ■ Optional load balancer for stateless backends ■ Examples: Webserver, Application Server/Worker, etc ■ Calls data stores in Data VLAN

15 .Hardware: Network Infrastructure ● Datacenter Tiers ○ Data VLAN ■ Servers, filers, etc with port forwarding from Backend Server VLAN (w/Source IP ACLs) ■ Examples: Databases, Queues, Filers, Caches, HDFS, etc

16 .Hardware: Network Infrastructure ● Network Fabric ○ Try to use 10GBe for low latency ○ Use Jumbo Frames for efficiency ○ Try to keep all MongoDB nodes on the same segment ■ Goal: few or no network hops between nodes ■ Check with ‘traceroute’ ● Outbound / Public Access ○ Databases don’t need to talk to the internet* ■ Store a copy of your Yum, DockerHub, etc repos locally ■ Deny any access to Public internet or have no route to it ■ Hackers will try to upload a dump of your data out of the network!!

17 .Hardware: Why So Quick? ● MongoDB allows you to scale reads and writes with more nodes ○ Single-instance performance is important, but deal-breaking ● You are the most expensive resource! ○ Not hardware anymore

18 .Tuning MongoDB

19 .Tuning MongoDB: MMAPv1 ● A kernel-level function to map file blocks to memory ● MMAPv1 syncs data to disk once per 60 seconds (default) ○ If a server with no journal crashes it can lose 1 min of data!!! ● In memory buffering of Journal ○ Synced every 30ms ‘journal’ is on a different disk ○ Or every 100ms ○ Or 1/3rd of above if change uses j:true WC

20 .Tuning MongoDB: MMAPv1 ● Fragmentation ○ Can cause serious slowdowns on scans, range queries, etc ○ WiredTiger and RocksDB have little-no fragmentation due to checkpoints / compaction

21 .Tuning MongoDB: WiredTiger ● WT syncs data to disk in a process called “Checkpointing”: ○ Every 60 seconds or >= 2GB data changes ● In-memory buffering of Journal ○ Journal buffer size 128kb ○ Synced every 50 ms (as of 3.2) ○ Or every change with Journaled write concern

22 .Tuning MongoDB: RocksDB ● Deprecated in PSDMB 3.6+ ● Level-based strategy using immutable data level files ○ Built-in Compression ○ Block and Filesystem caches ● RocksDB uses “compaction” to apply changes to data files ○ Tiered level compaction ○ Follows same logic as MMAPv1 for journal buffering

23 .Tuning MongoDB: Storage Engine Caches ● WiredTiger ○ In heap ■ 50% available system memory ■ Uncompressed WT pages ○ Filesystem Cache ■ 50% available system memory ■ Compressed pages ● RocksDB ○ Internal testing planned from Percona in the future ○ 30% in-heap cache recommended by Facebook / Parse Platform

24 .Tuning MongoDB: Durability ● storage.journal.enabled = <true/false> ○ Default since 2.0 on 64-bit builds ○ Always enable unless data is transient ○ Always enable on cluster config servers ● storage.journal.commitIntervalMs = <ms> ○ Max time between journal syncs ● storage.syncPeriodSecs = <secs> ○ Max time between data file flushes

25 .Tuning MongoDB: Don’t Enable! ● “cpu” ○ External monitoring is recommended ● “rest” ○ Will be deprecated in 3.6+ ● “smallfiles” ○ In most situations this is not necessary unless ■ You use MMAPv1, and ■ It is a Development / Test environment ■ You have 100s-1000s of databases with very little data inside (unlikely) ● Profiling mode ‘2’ ○ Unless troubleshooting an issue / intentional

26 .Tuning Linux

27 .Tuning Linux: Love your OS! ● “I can login via SSH...we’re done!” ● The database is only as fast as as the kernel ● Expect a default Linux install to be optimised for a cheap laptop, not your $$$ hardware

28 .Tuning Linux: The Linux Kernel ● Avoid Linux earlier than 3.10.x - 3.12.x ● Large improvements in parallel efficiency in 3.10+ (for Free!) ● More: https://blog.2ndquadrant.com/postgresql-vs-kernel-versions/

29 .Tuning Linux: NUMA ● A memory architecture that takes into account the locality of memory, caches and CPUs for lower latency ○ But no databases want to use it :( ● MongoDB codebase is not NUMA “aware” ○ Unbalanced memory allocations to a single zone