Building MySQL DBaaS on OpenStack with XtraDB Cluster

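We describe how we built MySQL databases on OpenStack at Paddy Power Betfair (PPB).

We also discuss the help and support Percona provided during PPB's adoption of MySQL, and how we used Percona software to build a mature DBaaS on PPB's private OpenStack cloud.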

1 .Building MySQL DBaaS on OpenStack with XtraDB Cluster

2 .Who We Are • Paddy Power Betfair is a leading international sports betting and gaming operator • FTSE 100, market cap ~£7bn • We operate six leading brands: Paddy Power, Betfair, Sportsbet, FanDuel, TVG, DRAFT • Over five million customers worldwide • We run some of the world’s most exciting online sports betting and gaming brands • We employ over 7,000 people from Los Angeles to Melbourne, via Dublin and London

3 .Where We Started • Merger of Paddy Power and Betfair • Ageing native infrastructure • Lack of cross-DC DR for MySQL • Need to reduce TTM for new database systems • S/W and H/W inconsistencies across Dev, QA and Prod

4 .Our Vision • DB as a service • Always-on, highly available, disaster-proof architecture • Rapid provisioning • Ability to quickly patch systems with little to no disruption for applications • Free up staff for more valuable work

5 .DBaaS at Paddy Power Betfair

6 .XtraDB Cluster on OpenStack…

7 .MySQL HA Options? • MySQL master-master cross-DC replication • XtraDB Cluster with an arbitrator node in the cloud or a third DC • Asymmetric cross-DC XtraDB Cluster (3-node)

8 .Why Not Master-Master Cross DC Replication? Limitations: • Handling replication lag in case of unplanned failovers • Handling split-brain scenarios • Operational overhead of keeping replication working across more than 160 environments • Conflict resolution

9 .Why Not XtraDB Cluster with Arbitrator in 3rd DC? Limitations: • Additional round-trip network latency • SST with just 2 active nodes will cause service disruption • Handling split-brain scenarios

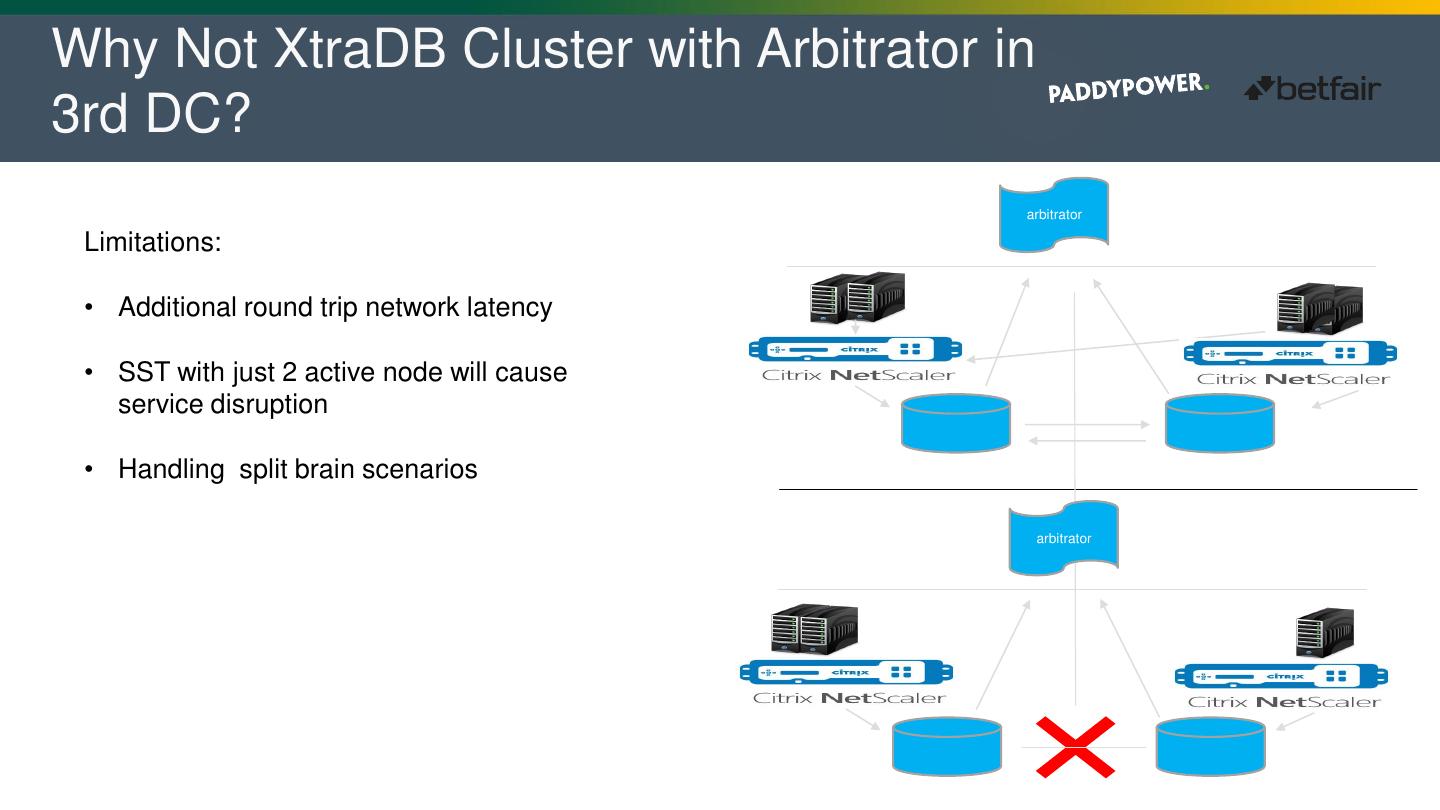

10 .Why Asymmetric Cross DC XtraDB Cluster? Limitations: • An unplanned outage of the DC that hosts the majority of the nodes
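
The limitation above follows from majority-based quorum. The sketch below is illustrative only: it assumes a hypothetical 3-node layout with two nodes in DC "A" and one in DC "B", equal node weights, and a sudden loss of a whole DC. The function names are ours, not part of any Percona tool.

```python
def has_quorum(surviving_nodes: int, total_nodes: int) -> bool:
    """Majority rule: survivors must be strictly more than half of the
    previous membership (equal node weights assumed)."""
    return surviving_nodes * 2 > total_nodes


def survives_dc_outage(layout: dict, failed_dc: str) -> bool:
    """Return True if the nodes outside `failed_dc` still form a majority."""
    total = sum(layout.values())
    surviving = total - layout[failed_dc]
    return has_quorum(surviving, total)


# Hypothetical asymmetric layout: 2 nodes in DC "A", 1 node in DC "B".
layout = {"A": 2, "B": 1}

for dc in layout:
    print(dc, "outage ->", "quorum kept" if survives_dc_outage(layout, dc) else "quorum lost")
```

Losing the minority DC leaves two of three nodes and the cluster keeps serving; losing the majority DC leaves one of three, so the surviving node cannot form a primary component without manual intervention.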

11 .Why Percona XtraDB? • Cross-DC resiliency • Transparent/seamless failover for planned maintenance • Cross-DC deployment pipeline • Improving customer experience • Fast recovery from DC outages • Less operational overhead

12 .Why XtraBackup, PMM, pt-online-schema-change? • Percona XtraBackup allows us to recover individual nodes without having to do an SST on 1 TB databases • Percona XtraBackup allows us to do point-in-time and partition-level recovery • PMM allows us to monitor XtraDB Cluster, MySQL and O/S metrics in a centralized fashion • PMM allows us to add PMM agents as part of our deployment pipeline • pt-online-schema-change lets us run schema upgrades on an OLTP platform

13 .PMM Dashboard

14 .Why NetScaler? • MaxScale and ProxySQL did not support values returned from DB procedure calls (at the time of testing) • NetScaler lets us route connections based on DB state, which works better than connection managers that only check the port state • The DB state check has helped reduce failover times from 10 seconds to 2-3 seconds • NetScaler allows us to implement read/write split rules, which we plan to use in future • We already had framework code to provision NetScalers
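
To illustrate why a DB state check is stronger than a port check, here is a minimal sketch of the routing decision. It is not the NetScaler monitor configuration, which the slides do not show. It assumes the monitor has already fetched the Galera status variables (for example with `SHOW GLOBAL STATUS LIKE 'wsrep_%'`) into a dict; the function name `node_is_routable` is hypothetical.

```python
def node_is_routable(status: dict) -> bool:
    """Decide whether a cluster node should receive traffic.

    A node can accept TCP connections on its port while it is still a
    joiner or a donor, so the check looks at the node state instead:
    - wsrep_cluster_status == "Primary": node belongs to the primary component
    - wsrep_ready == "ON": node accepts queries
    - wsrep_local_state == 4: node is Synced
    """
    return (
        status.get("wsrep_cluster_status") == "Primary"
        and status.get("wsrep_ready") == "ON"
        and str(status.get("wsrep_local_state")) == "4"
    )


# Example status snapshots (values are illustrative).
synced = {"wsrep_cluster_status": "Primary", "wsrep_ready": "ON", "wsrep_local_state": "4"}
donor = {"wsrep_cluster_status": "Primary", "wsrep_ready": "ON", "wsrep_local_state": "2"}

print(node_is_routable(synced))
print(node_is_routable(donor))
```

A port-only check would treat both nodes above as healthy, because both are listening; the state check takes the donor out of rotation.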

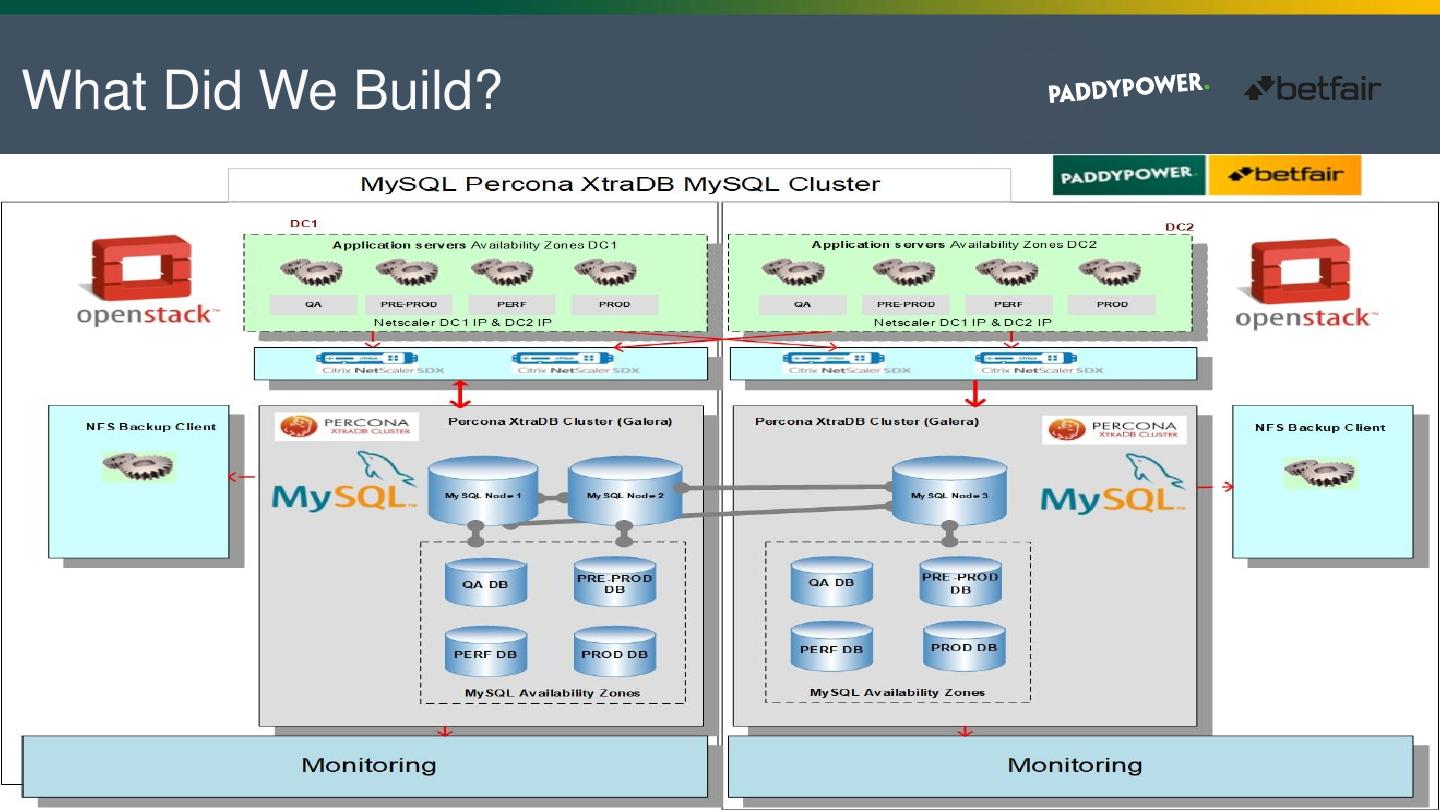

15 .What Did We Build?

16 .IaC /1 - Automation Tools Our toolset includes: • GitLab (code repository) • Artifactory (artifacts, external repos proxy) • Jenkins (CI build jobs) • GoCD (pipeline configuration and templates)

17 .IaC /2 - Ansible Framework We have a number of Git repositories that describe our infrastructure requirements. They all feed our Ansible framework, which calls APIs to provision what’s required.

18 .IaC /3 - Our repos • OpenStack VM provisioning specs • SDN (Nuage network and firewall design) • Load balancer (Citrix NetScaler VIPs, AVI GSLB) • Monitoring (Sensu, Splunk, TSDB)

19 .IaC – PPB Cloud /3a Percona XtraDB Cluster Configuration • Percona XtraDB Cluster is configured by an Ansible role included by our framework • We use Jinja2 templates • Default values for all MySQL parameters • Override values for each environment, e.g. the memory parameter is calculated dynamically as a percentage of the total memory allocated to the VM

20 .IaC – PPB Cloud /3b Percona XtraDB Cluster Configuration

21 .IaC - PPB Cloud /5 Jenkins wraps it up

22 .IaC - PPB Cloud /6 GoCD Pipelines Provisioning the desired infrastructure with the same process for each Environment (QA/Pre-Prod/Perf/Prod)

23 .CI/CD Workflow in a picture

24 .Challenges • Hosting stateful applications on PPBF OpenStack • Reducing service disruption • Hosting highly concurrent OLTP applications on XtraDB Cluster • Developing a mechanism for fast recovery from full unplanned DC outages

25 .Stateful Apps on PPBF OpenStack • A rolling update is the process we use to redeploy our environment(s); the challenge was minimizing service disruption • A rolling update deploys a new VM with the new changes and moves the DB instance onto the new VM (A/B deployments)

26 .Rolling Update Explained Volume clone

27 .Rolling Update Explained Volume snapshot

28 .Rolling Update Explained

29 .Rolling Update Explained Volume Clone