- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

MongoDB WiredTiger WriteConflicts

写冲突表示什么?

内部写冲突进程

什么时候写冲突表示有问题?

-每秒少量的写入冲突

-每秒大量的写入冲突

如果每秒看到大量的写冲突,该怎么办?

确定在mongod日志文件中引起冲突的操作

改进更新操作模式

展开查看详情

1 .MongoDB WiredTiger WriteConflicts 1

2 .Introduction Paul Agombin @pagombin 2

3 . ● The WiredTiger Storage Engine ● Identifying WriteConflicts Metrics ● Likely Symptoms ● What writeConflicts indicate Internal WriteConflicts process Overview ● ● When WriteConflicts would indicate an issue ○ Small Vs a Large Number of writeConflicts ● Things to do when we see writeConflicts ● Improving Operation patterns 3

4 . What is the WiredTiger StorageEngine? ● Introduced in MongoDB 3.0 and became the default storage engine from 3.2 ● Offers document Level Locking ● Compression for data and indexes ● Uses snapshot and checkpoints ● Memory Management 4

5 . WriteConflict Subtitle goes here. Remember Metric! to change the image! sasds A situation in which two concurrent operations, at least one of which is a write, attempt to use a resource in a way that would violate constraints imposed by a storage engine using optimistic concurrency control. MongoDB will transparently abort and retry one of the conflicting operations. ● OCC - Assumes that multiple transactions can frequently complete without interfering with each other. ● Before committing, each transaction verifies that no other transaction has modified the data it has read. ● If the check reveals conflicting modifications, the committing transaction rolls back and can be restarted. Note: This refers to storage Engine transactions, not the application tier. 5

6 . How do we identify them? These Metrics are usually reported in: - MongoDB log files 2018-10-28T15:21:06.362-0400 I COMMAND [conn5022] update bar.foo query: { _id: 1 } update: { $inc: { views: 1 } } keysExamined:1 docsExamined:1 nMatched:1 nModified:1 keyUpdates:0 writeConflicts:244 numYields:157 locks:{ Global: { acquireCount: { r: 98, w: 74 } }, Database: { acquireCount: { w: 121 } }, Collection: { acquireCount: { w: 91 } }, Metadata: { acquireCount: { w: 1 } }, oplog: { acquireCount: { w: 1 } } } 4902ms 6

7 .2018-10-28T15:40:06.362-0400 [conn24076] command foo.bar command: insert { insert: "bar", documents: [ { _id: ”1ztDIVneUv”, event_id: "1234567", type: "Concert", active: true, event_type: "social", code_id: "394289", user_type: "IOS", start_time: new Date(1530044638000), u_at: new Date(1530044829166), completed_at: new Date(1530044829166) } ], ordered: true, shardVersion: [ Timestamp 2412000|1, ObjectId('59e1044205d644ef68ca3d05') ] } ninserted:0 keyUpdates:0 writeConflicts:250 exception: E11000 duplicate key error collection: foo.bar index: event_type_1_code_id_1 dup key: { : "1234567", : "394289" } code:11000 numYields:0 reslen:459 locks:{ Global: { acquireCount: { r: 1, w: 1 } }, Database: { acquireCount: { w: 1 } }, Collection: { acquireCount: { w: 1 } } } protocol:op_command 3492ms 7

8 . The Output of Collection Stats From MongoDB 3.0 In a Sharded Cluster: mongos> db.getSiblingDB('config').shards.find({},{_id:1}).forEach(function(f){x=db.getSiblin gDB('foo').bar.stats().shards[f._id].wiredTiger.transaction; print(f._id +" has "+ JSON.stringify(x))} ) shard1 has {"update conflicts":13883} shard2 has {"update conflicts":13291} shard3 has {"update conflicts":15970} shard4 has {"update conflicts":13083} shard5 has {"update conflicts":13497} shard6 has {"update conflicts":15826} shard7 has {"update conflicts":13859} shard8 has {"update conflicts":16571} 8

9 .On a Mongod: PRIMARY> db.bar.stats().wiredTiger.transaction { "update conflicts" : 13898 } Note: Metrics reported by Collection Stats are specific to conflicting update operations. 9

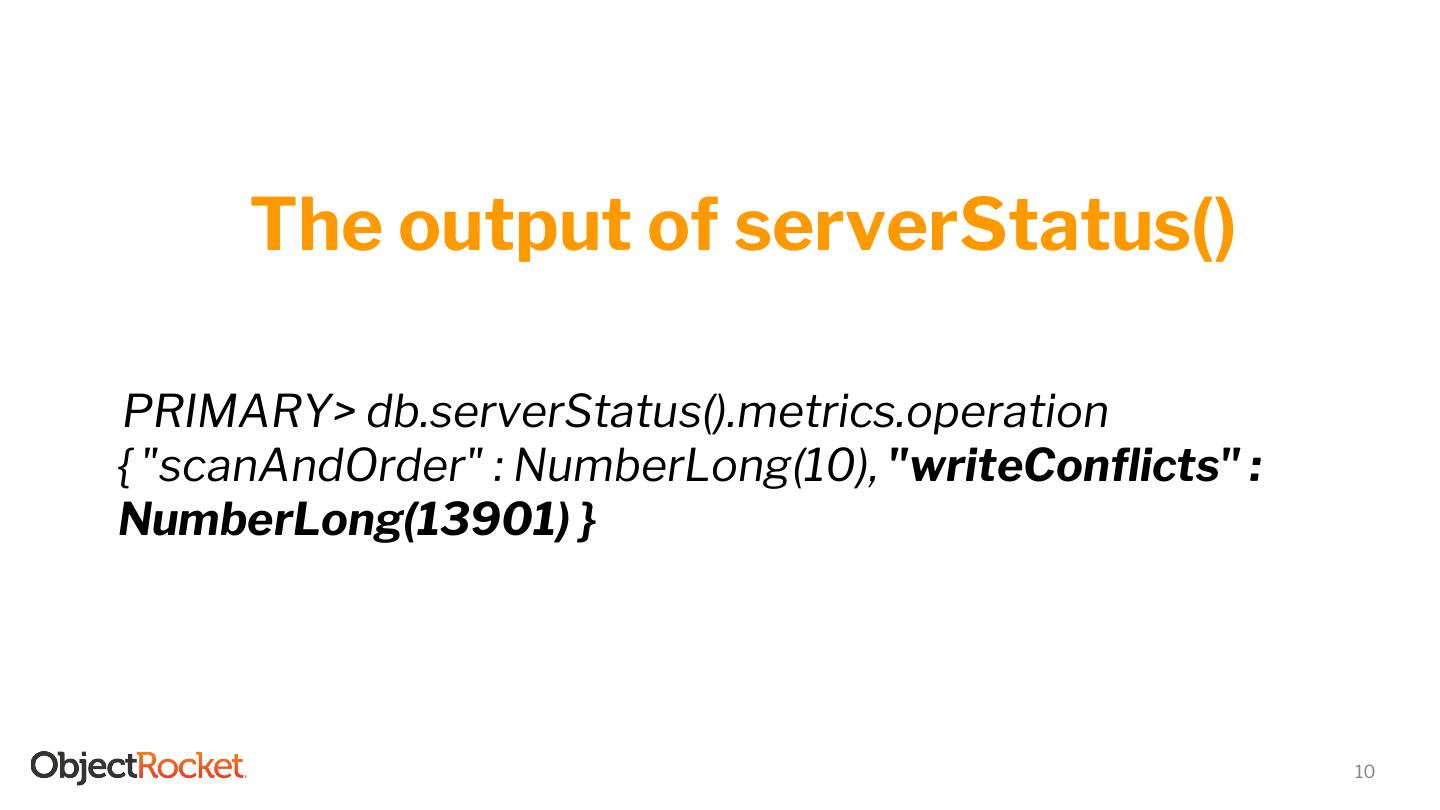

10 . The output of serverStatus() PRIMARY> db.serverStatus().metrics.operation { "scanAndOrder" : NumberLong(10), "writeConflicts" : NumberLong(13901) } 10

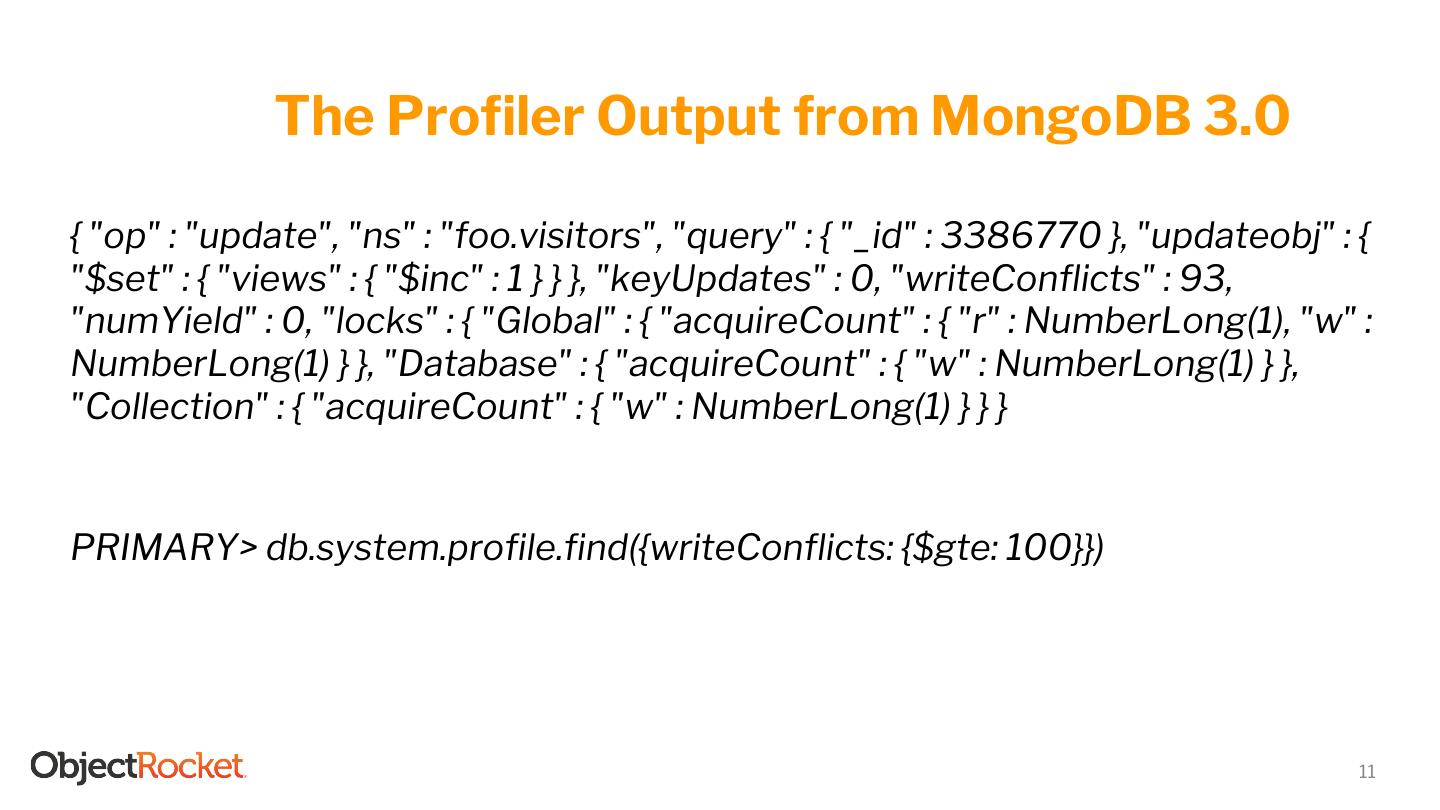

11 . The Profiler Output from MongoDB 3.0 { "op" : "update", "ns" : "foo.visitors", "query" : { "_id" : 3386770 }, "updateobj" : { "$set" : { "views" : { "$inc" : 1 } } }, "keyUpdates" : 0, "writeConflicts" : 93, "numYield" : 0, "locks" : { "Global" : { "acquireCount" : { "r" : NumberLong(1), "w" : NumberLong(1) } }, "Database" : { "acquireCount" : { "w" : NumberLong(1) } }, "Collection" : { "acquireCount" : { "w" : NumberLong(1) } } } PRIMARY> db.system.profile.find({writeConflicts: {$gte: 100}}) 11

12 .Sample JS can below can be used to track the Number of WC over sample period of time. PRIMARY> var wc_start = db.serverStatus().metrics.operation.writeConflicts; PRIMARY> sleep(60000); //1 min PRIMARY> var wc_end = db.serverStatus().metrics.operation.writeConflicts; PRIMARY> print(((wc_end - wc_start) / 60) + " write conflicts per sec on average."); Note: This metric is not displayed in the output of currentOp() 12

13 . Symptoms - Use case Socket Errors and failed heartbeats: 2018-10-30T11:32:55.334-0600 I NETWORK [conn89432] SocketException handling request, closing client connection: 9001 socket exception [SEND_ERROR] server [0.0.0.0:49202] 2018-10-30T11:32:55.348-0600 I REPL [ReplicationExecutor] Error in heartbeat request to host1.objectrocket.com:27017; HostUnreachable: short read ❏ Caused by an overloaded Primary and could cause it to become unresponsive to new request. ❏ Clients on the other side might treat this as TCP timeout, even though TCP protocol hasn’t been broken. ❏ Likely to see a spike in CPU utilization 13

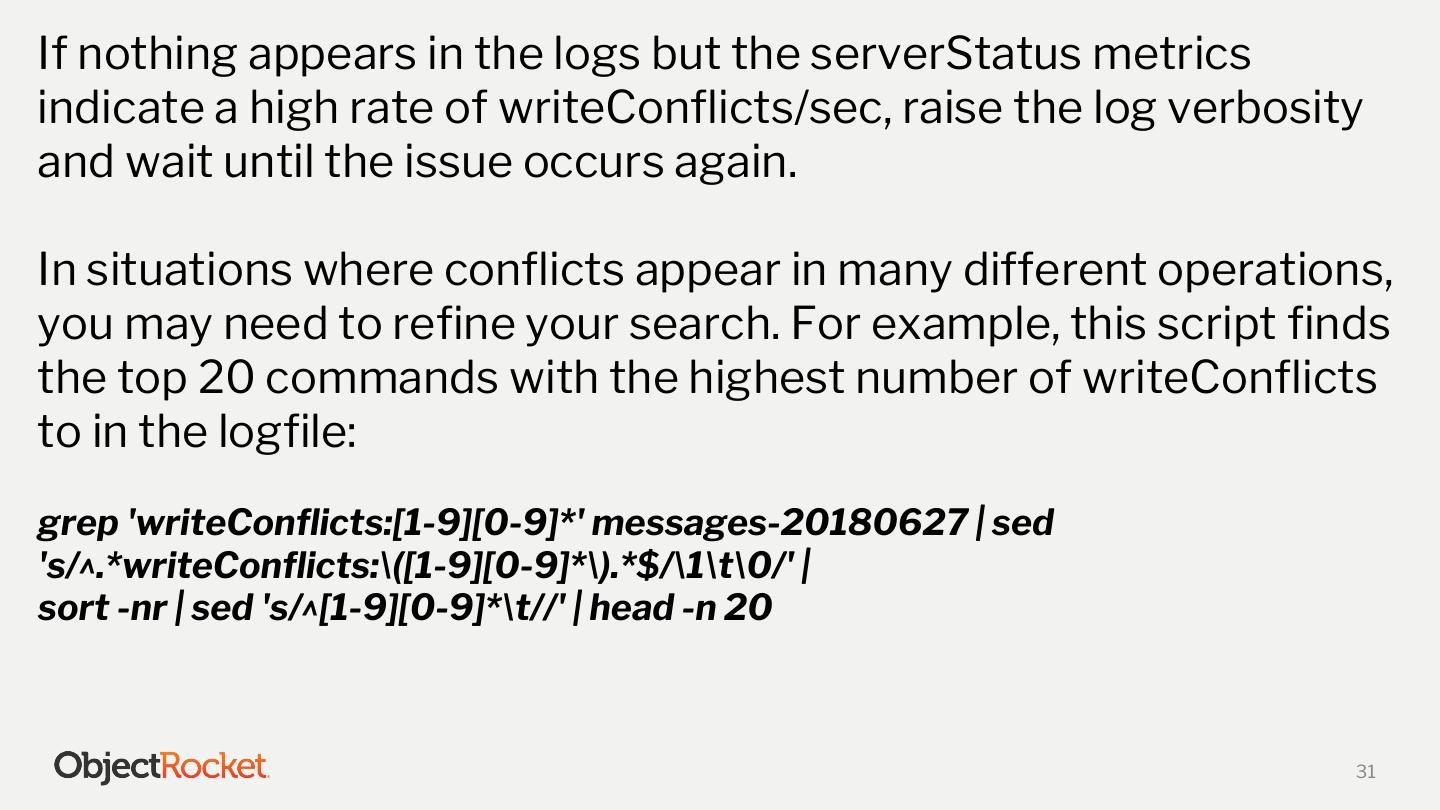

14 . Frequent Elections and OOM Under normal circumstances. - We’ll have our Primary replicating to secondaries. PRIMARY SECONDARY SECONDARY 14

15 .In the likely event of a writeConflict: - We could see what appears to be a split brain 2018-10-30T15:02:24.234-0600 I REPL [ReplicationExecutor] Member host1.objectrocket.com:27017 is now in state PRIMARY 2018-10-30T15:02:24.255-0600 I REPL [ReplicationExecutor] Member host2.objectrocket.com:27017 is now in state PRIMARY 2018-10-30T15:02:24.255-0600 W REPL [ReplicationExecutor] two remote primaries (transiently) Host1 PRIMARY Host2 Host3 PRIMARY SECONDARY 15

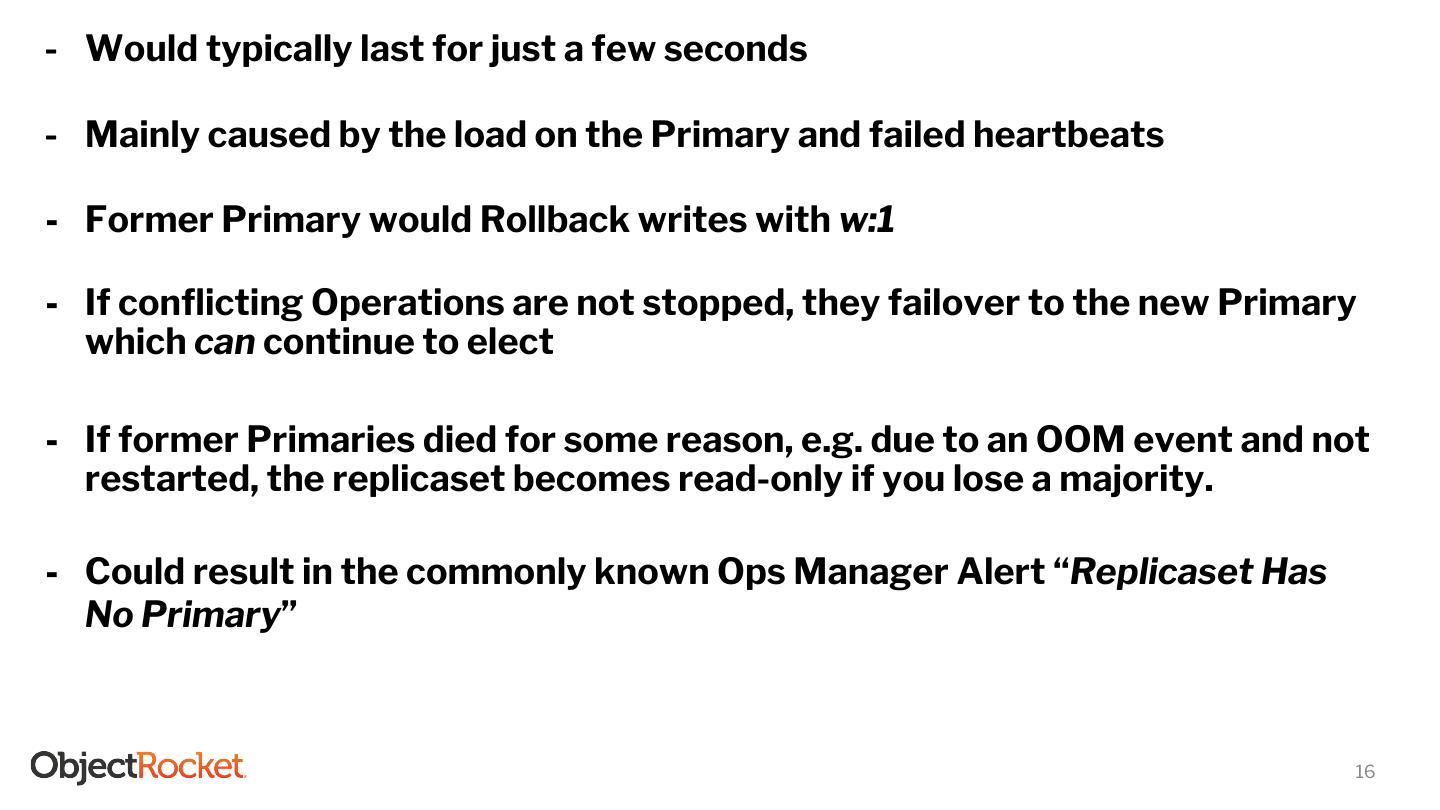

16 .- Would typically last for just a few seconds - Mainly caused by the load on the Primary and failed heartbeats - Former Primary would Rollback writes with w:1 - If conflicting Operations are not stopped, they failover to the new Primary which can continue to elect - If former Primaries died for some reason, e.g. due to an OOM event and not restarted, the replicaset becomes read-only if you lose a majority. - Could result in the commonly known Ops Manager Alert “Replicaset Has No Primary” 16

17 .- Likely Errors from Application - Depends on the Driver and the version. Timed out after 50000 ms while waiting for a server that matches {serverSelectors=[ReadPreferenceServerSelector{readPreference=primary} 17

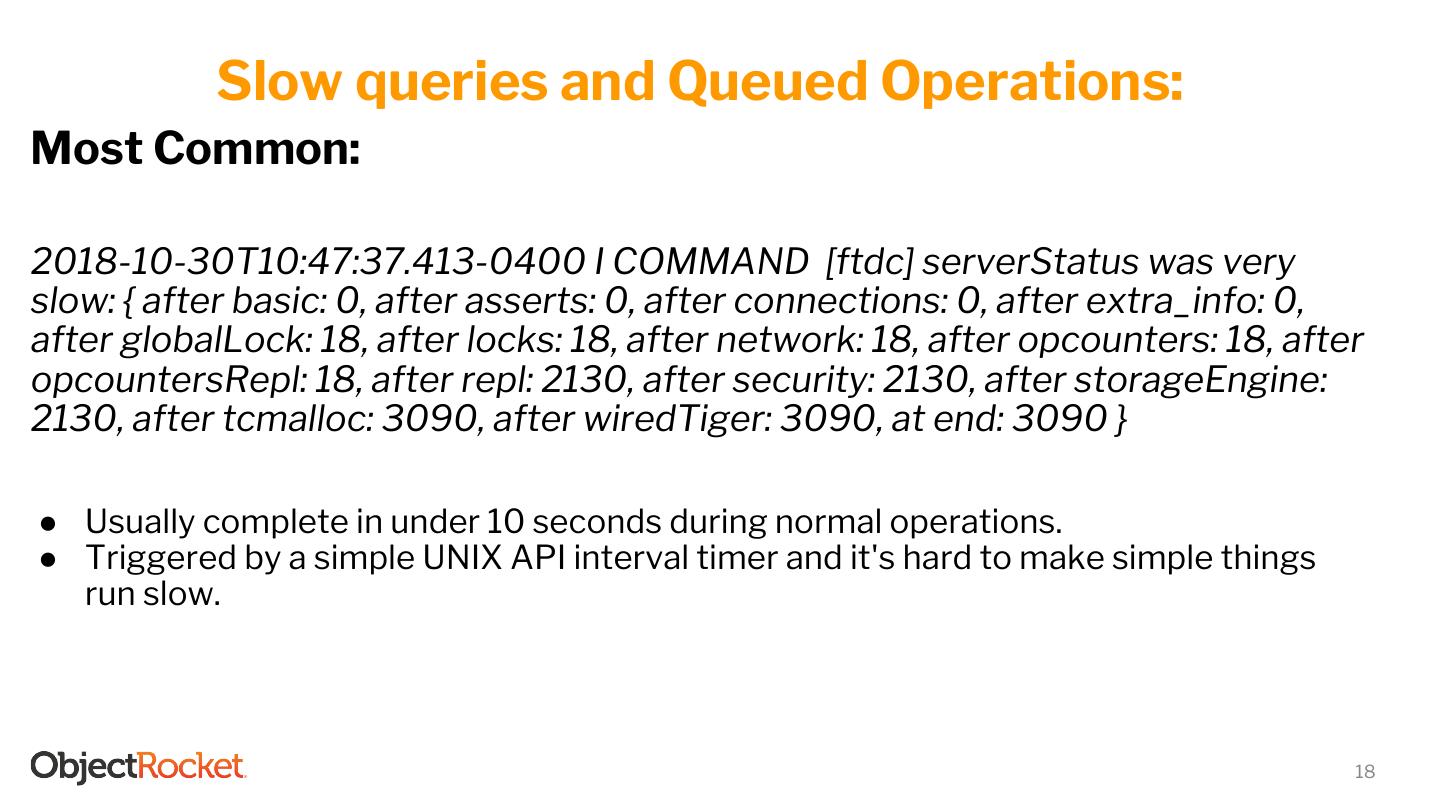

18 . Slow queries and Queued Operations: Most Common: 2018-10-30T10:47:37.413-0400 I COMMAND [ftdc] serverStatus was very slow: { after basic: 0, after asserts: 0, after connections: 0, after extra_info: 0, after globalLock: 18, after locks: 18, after network: 18, after opcounters: 18, after opcountersRepl: 18, after repl: 2130, after security: 2130, after storageEngine: 2130, after tcmalloc: 3090, after wiredTiger: 3090, at end: 3090 } ● Usually complete in under 10 seconds during normal operations. ● Triggered by a simple UNIX API interval timer and it's hard to make simple things run slow. 18

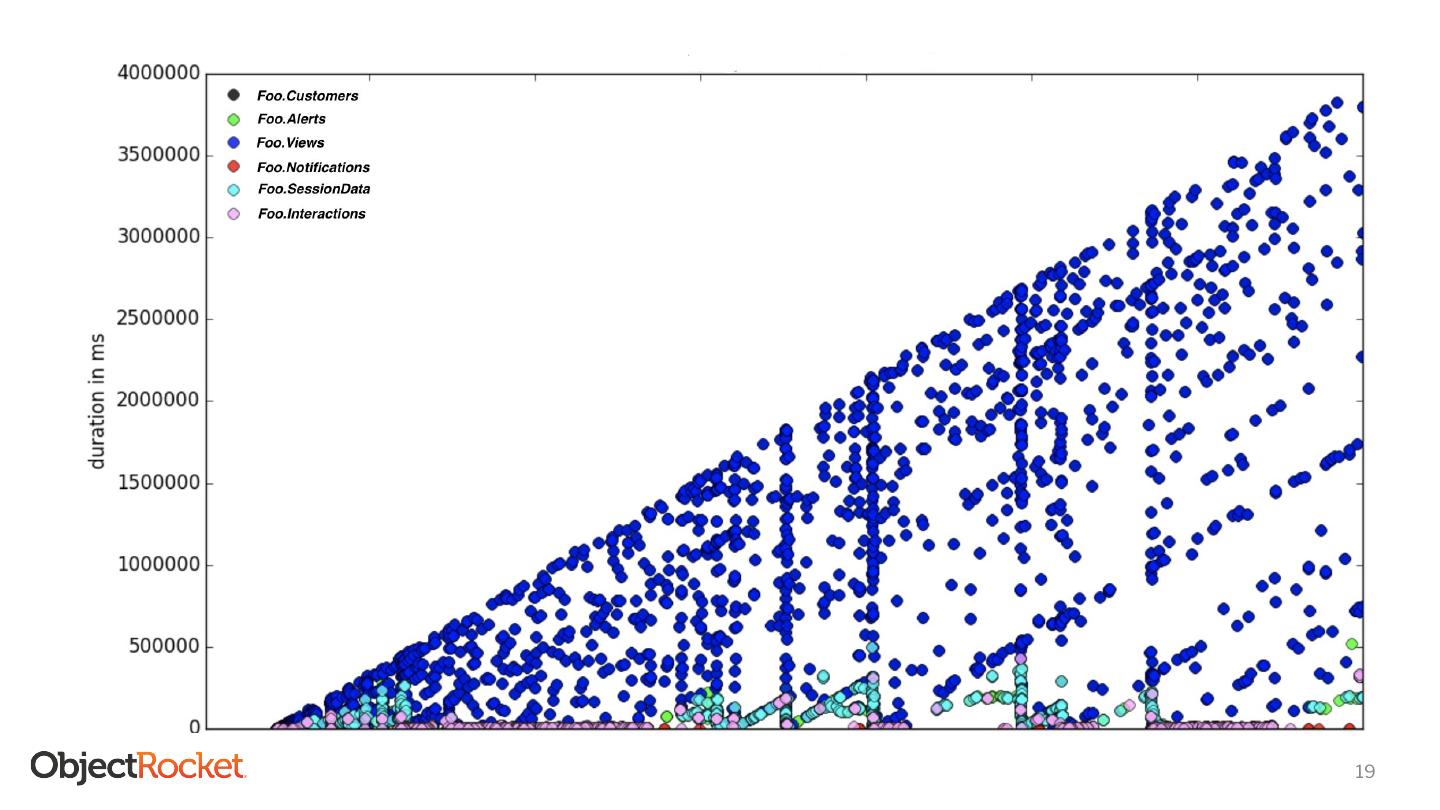

19 .19

20 . What do writeConflicts indicate? Every type of command that performs a write can encounter write conflicts, including less frequently run user and administrative commands that write to system collections such as: - Creating indexes - Dropping collections - Creating Users - Enabling Sharding But it is very rare. In practice, you are only likely to see notable writeConflicts on your busiest, user-made, non-system collections. 20

21 .A non-zero writeConflicts metric indicates that WiredTiger has detected an update that could potentially create a data concurrency violation. The update does not fail, nor is it sent back to the client with an error. Instead, the writeConflicts metric increments and the update is retried by MongoDB until it completes without a conflict. From the client perspective, the write succeeds and is otherwise normal except for increased latency. 21

22 .Because WiredTiger uses optimistic updates for document-level concurrency, writeConflicts indicates that multiple clients are trying to update exactly the same document within the same fraction of a second. This window is the time between the execution of the WiredTiger API's WT_SESSION::begin_transaction() and WT_SESSION::commit_transaction() operations. There is no fixed minimum time for these operations. It could be double or even single-digit microseconds if the amount of data to be written is small and the server has no queues for CPU or memory channels. There is no fixed maximum time either, although it is somewhat dependent on the performance of the server. 22

23 . Internal writeConflicts process ● The storage engine returns a status value of WT_ROLLBACK from the document-update function. ● The MongoDB integration layer wrapping the storage engine API throws a WriteConflictException. ● That exception is caught at a higher scope of the operation's execution thread. ● The operation's writeConflicts metric increments. ● The operation is resubmitted at increasing intervals in following cycles of WiredTiger transactions until it succeeds: 23

24 .● It is retried immediately three times. ● After 4 retries a sleep of 1ms is applied before each successive retry. ● After 10 retries the sleep period increases to 5ms. ● After 100 retries the sleep period increases to 10ms. 24

25 . When does writeConflicts indicate a problem? Small number of writeConflicts/sec! A small number of writeConflicts per second does not usually indicate a problem. For example, 10 conflicts per second does not have a performance impact on a server that processes a thousand operations per second. Data concurrency is preserved with only a small latency cost for those conflict updates, which is measured in milliseconds or even microseconds between one storage engine logical commit and the next. The difference in average server latency is negligible, and is unlikely to be noticed by a client that must also deal with network latency. 25

26 . Large number of writeConflicts/sec A large number of writeConflicts per second can create performance issues. For example, 100 updates for the same document that arrive simultaneously are much slower than 100 updates for different documents. The reasons for this difference are: The server must execute 100 commits serially, rather than 100 updates in parallel that are executed in a single or small number of commits. 26

27 .● The server executes an exponential amount of CPU work: ○ WiredTiger creates 100 MultiVersion Concurrency Control (MVCC) versions of the document, of which 1 is kept and the others are discarded. ○ 99 updates are retried. X updates, where X <= 99, reach the WiredTiger layer simultaneously. X MVCC versions of the document are created, and 1 is committed while the others are discarded. ○ The remaining updates are retried in the above fashion until all are successful. In order to commit n updates, an exponential number of uncommitted updates must be created. That exponential factor is quadratic in the worst case. The back-off timing logic in the internal retry process might reduce the number of attempted-but-discarded updates, but not if the application continues to queue more updates to the same document. 27

28 .What to do if you see a large number of writeConflicts/sec! WiredTiger write conflicts are caused by several application processes, or threads within a multi-threaded application, all competing to update the same document at the same time. To address the issue, first identify the offending operation, then improve the corresponding update logic in your application. 28

29 .Identify the operation causing conflicts in the mongod log file An operation causing conflicts records a writeConflicts:XXX field in the logs, where XXX is a number greater than 50, and likely over 100. At the default log verbosity a command is logged only if it takes longer than the slowOpThresholdMs span to execute. Write conflicts often result in slow operations, but you can lower slowOpThresholdMs to capture operations below the 100ms default, or run the db.setLogLevel(1, "command") command to log all commands in the logfile or setProfiling Level to 2 for Mongo versions > 3.0. Search the logs for operations with a high number of writeConflicts. For example, you can use grep to find operations with over 100 writeConflicts: grep 'writeConflicts:[1-9][0-9][0-9]' [pathToLogfile] 29