- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

Lock Stock and Backup: Data Guaranteed

备份在数据数字化、正常运行时间是收入的世界中至关重要。环境不再绑定到传统的数据中心,跨越多个云提供商以及大量异构环境。我们需要防弹备份和完美的恢复过程。本文旨在回答“我应该如何备份我的MySQL数据库?”通过利用关键技术、自动化技术和主要云提供商服务,提供3-2-1备份设计、最佳实践和现实解决方案。

展开查看详情

1 .Lock, Stock and Backup: Data Guaranteed Jervin Real August 16, 2017 1 / 42

2 . Agenda 1. Backup and Recovery Primer 2. Binary Log Backups 3. Logical Backups with mydumper 4. Physical Backups with xtrabackup 5. Leveraging Snapshots 6. "3-2-1" in the Cloud 7. Monitoring, Testing and Recovery 8. Encryption and Security 2 / 42

3 .Backup and Recovery Primer 3 / 42

4 . Backup and Recovery Primer Why do we take backups? Absolute, hands down, no contest, necessity. Period. Backups are the insurance you almost always never use, recovery is the ultimate test of reliability 4 / 42

5 . Backup and Recovery Primer Pillars of good backup implementation Restore/recovery time objective Restore/recovery point objective Retention period Storage Storage engines Tools and environment 5 / 42

6 . Backup and Recovery Primer Backup types we will discuss today: Binary Logs Logical Backups with mydumper Physical Backups with xtrabackup Filesystem Snapshots 6 / 42

7 .Binary Log Backups 7 / 42

8 . Binary Log Backups Its not just another type of backup ... mysqlbinlog as primary tool of choice, but you can write your own! Support from mysqlbinlog only became available from 5.6, but can be used <5.6 servers Complements other backup types for Point in Time Recovery 8 / 42

9 . Binary Log Backups Pros and Cons? There is nothing to discuss really, just Yes or No Hopefully, you choose Yes Binary log backups is uniquely important on its own. 9 / 42

10 . Binary Log Backups Why Why Not If point in time recovery is If you have rolled out a important custom solution that is faster If you need some limited in your use case i.e. auditing capabilities snapshotting a big table based on timestamp 10 / 42

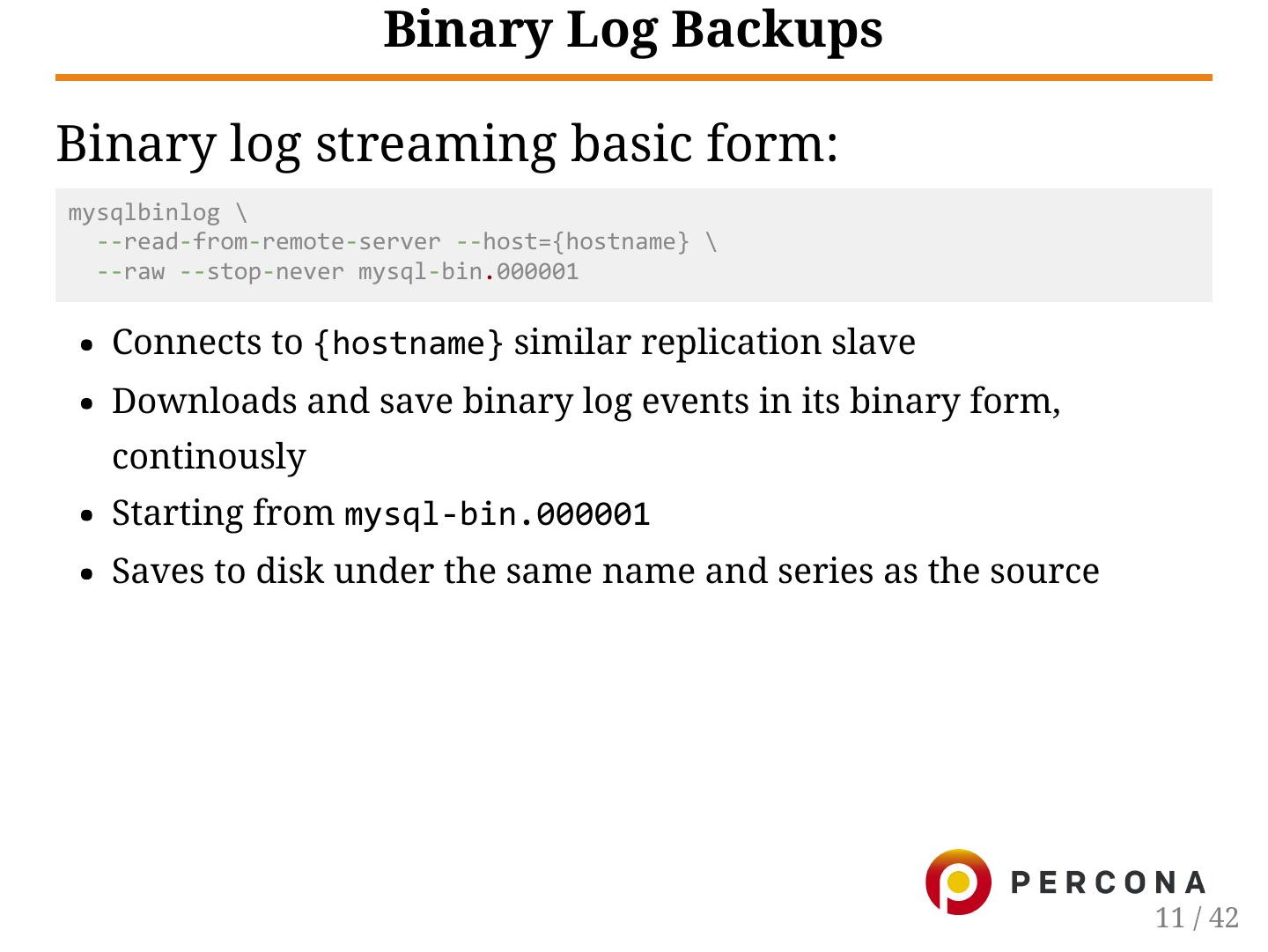

11 . Binary Log Backups Binary log streaming basic form: mysqlbinlog \ ‐‐read‐from‐remote‐server ‐‐host={hostname} \ ‐‐raw ‐‐stop‐never mysql‐bin.000001 Connects to {hostname} similar replication slave Downloads and save binary log events in its binary form, continously Starting from mysql‐bin.000001 Saves to disk under the same name and series as the source 11 / 42

12 .Logical Backups with mydumper 12 / 42

13 . Logical Backups with mydumper Distinct features of mydumper Parallel logical dump and restore (think row level) Works with Percona Server backup locks Regex based include/exclude Kill or abort on long running queries Wire compression (mysql > (protocol) mydumper > (stream) disk) Multiple levels of lock reduction SAVEPOINT (‐‐use‐savepoints) to reduce MDL locks Everything else (mostly) mysqldump does Open source and community maintained 13 / 42

14 . Logical Backups with mydumper Pros Cons Per object backup/restore, No piping/streaming, direct to highly flexible with regex disk only - means encryption Storage engine agnostic comes after Highly compressible, dump only data or even snapshots based on time Parallelization means speed advantage 14 / 42

15 . Logical Backups with mydumper mydumper mysqlpump 1. ~/.my.cnf / ‐‐defaults‐file 1. ‐‐login‐path 2. ZLIB output compression 2. ZLIB or LZ4 output 3. ‐‐regex compression 4. No HEX dumps, BLOBs could 3. SSL connections be unreadable 4. ‐‐include/‐‐exclude 5. Linux only, dump over TCP is 5. ‐‐hex‐blob possible from Windows 6. Linux/Windows source 7. ‐‐watch‐progress 6. Manual progress monitoring is tricky 15 / 42

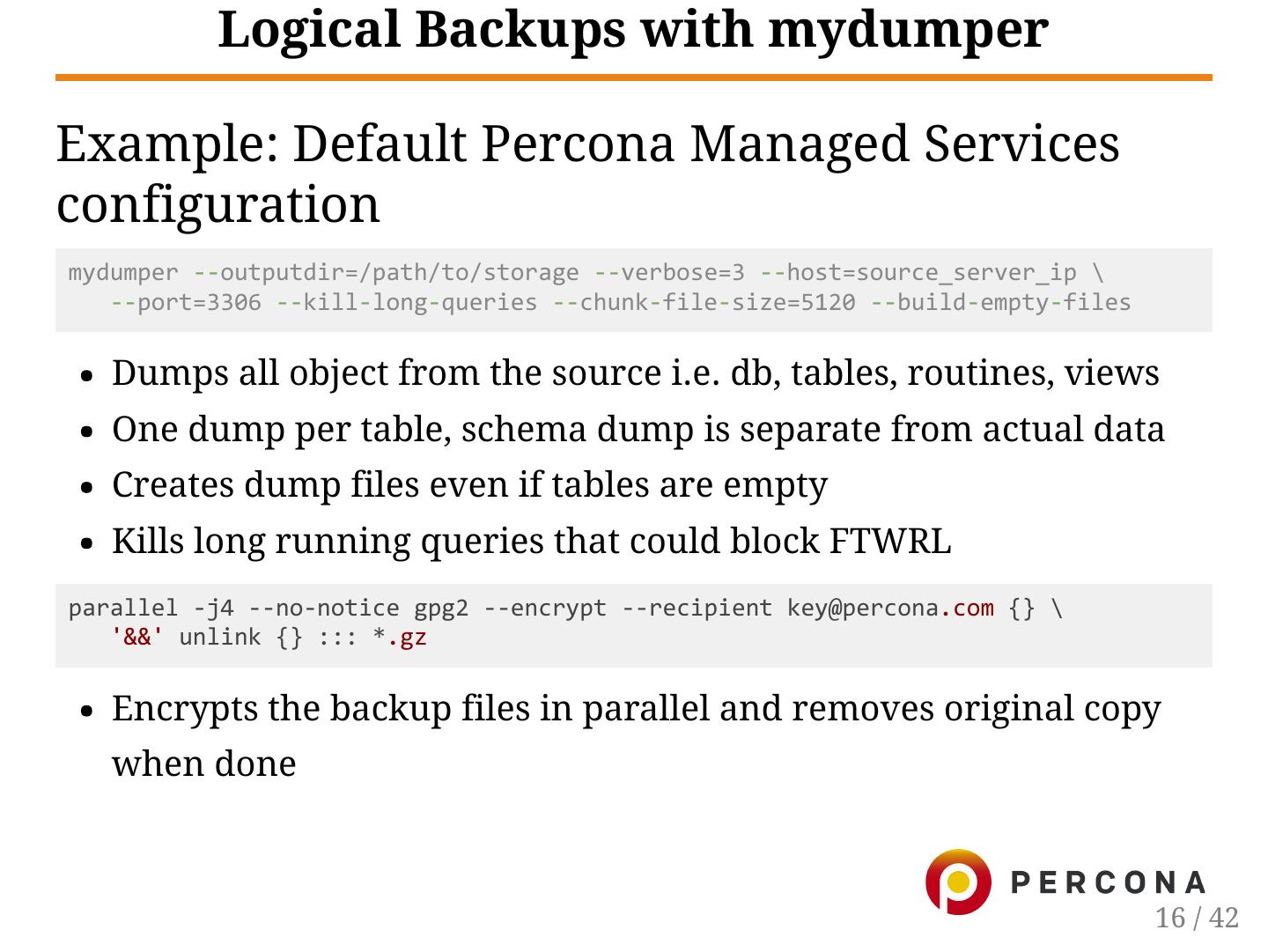

16 . Logical Backups with mydumper Example: Default Percona Managed Services configuration mydumper ‐‐outputdir=/path/to/storage ‐‐verbose=3 ‐‐host=source_server_ip \ ‐‐port=3306 ‐‐kill‐long‐queries ‐‐chunk‐file‐size=5120 ‐‐build‐empty‐files Dumps all object from the source i.e. db, tables, routines, views One dump per table, schema dump is separate from actual data Creates dump files even if tables are empty Kills long running queries that could block FTWRL parallel ‐j4 ‐‐no‐notice gpg2 ‐‐encrypt ‐‐recipient key@percona.com {} \ '&&' unlink {} ::: *.gz Encrypts the backup files in parallel and removes original copy when done 16 / 42

17 .Physical Backups with xtrabackup 17 / 42

18 . Physical Backups with xtrabackup What is xtrabackup? Open source alternative to MySQL Enterprise Backup Consistent online backup tool, highly configurable to many workloads 18 / 42

19 . Physical Backups with xtrabackup Pros Cons Typically faster, Linux-only official support parallelization options Occupies more disk space available (compression ratio depends Least intrusive - multiple on state of data on disk) levels of locking granularities Designed for InnoDB data for consistency and workload Per object backup possible Incremental backups available Compression and Encryption features 19 / 42

20 . Physical Backups with xtrabackup Example: Encryption with GPG xtrabackup ‐‐backup ‐‐stream=xbstream ‐‐target=./ | \ gpg ‐‐encrypt ‐‐recipient key@percona.com > backups.xbs.gpg Better control of encryption keys, storage is not tied to encryption No parallelization via stream, backup and encrypt separately if needed (22mins vs 8mins) https://twindb.com/encrypting-mysql-backups/ 20 / 42

21 . Physical Backups with xtrabackup Example: Stream backup to multiple destinations at once with FIFO 1. Last receiving server/slave nc ‐l 9999 | xbstream ‐x ‐C /path/to/destination 2. Receiving server/slave 1-to-N mkfifo xbfifo *nc IP_of_next_receiving_server 9999 < xbfifo & nc ‐l 9999 | tee xbfifo | xbstream ‐x ‐C /path/to/destination 3. Backup source xtrabackup ‐‐stream=xbstream ‐‐backup ‐‐target=./ \ | nc IP_of_first_receiving_server 9999 21 / 42

22 . Physical Backups with xtrabackup Example: Backup to populate a Galera/PXC node manually xtrabackup ‐‐backup ‐‐stream=xbstream ‐‐target=./ ‐‐galera‐info \ | nc IP_of_dest_galera_node 9999 Make sure the data on the destination is cleared and ready to receive on netcat port 9999 Backup will include xtrabackup_galera_info https://www.percona.com/blog/2012/08/02/avoiding-sst-when- adding-new-percona-xtradb-cluster-node/ 22 / 42

23 .Filesystem Snapshots 23 / 42

24 . Filesystem Snapshots Why consider snapshots: Mixing storage engines i.e. InnoDB + TokuDB Really large datasets, less frequency, must have at least N intervals When it is more practical solution i.e. GCE snapshots 24 / 42

25 . Filesystem Snapshots What is available commonly used? LVM ZFS/ZVOL Amazon EBS Google Cloud Engine 25 / 42

26 . Filesystem Snapshots LVM Snapshots mysql> FLUSH TABLES; ‐‐ optional, to just preflush without locking mysql> FLUSH TABLES WITH READ LOCK; shell> sudo sync shell> lvcreate ‐‐snapshot ‐‐name mysql‐nnnnnn /dev/vgname/lvname mysql> UNLOCK TABLES; shell> mount /dev/vgname/mysql‐nnnnnn /tmp‐vol shell> rsync ‐avz /tmp‐vol /path/to/storage shell> umount /tmp‐vol shell> lvremove ‐f /dev/vgname/mysql‐nnnnnn Use on non-critical nodes if possible, LVM snapshots has performance penalty Easy to write tools, mylvmbackup was once defacto tool 26 / 42

27 . Filesystem Snapshots ZFS/ZVOL Snapshots mysql> FLUSH TABLES; ‐‐ optional if excl innodb and iflatc = 1 mysql> FLUSH TABLES WITH READ LOCK; ‐‐ or if you need binlog coordinates; shell> zfs snapshot zpool/data@date mysql> UNLOCK TABLES; ‐‐ optional, see above Recommend only when running on native platform i.e. BSD/Solaris Have not seen production cases for ZFSonLinux 27 / 42

28 . Filesystem Snapshots EBS Snapshots mysql> FLUSH TABLES; ‐‐ optional mysql> FLUSH TABLES WITH READ LOCK; shell> sudo sync shell> sudo fsfreeze ‐f disk‐name shell> aws ec2 create‐snapshot shell> sudo fsfreeze ‐u disk‐name mysql> UNLOCK TABLES; Once the snapshot is taken, no additional step is required, uploads to S3 in the background 28 / 42

29 . Filesystem Snapshots GCE Snapshots mysql> FLUSH TABLES; ‐‐ optional mysql> FLUSH TABLES WITH READ LOCK; shell> sudo sync shell> sudo fsfreeze ‐f disk‐name shell> gcloud compute disks snapshot disk‐name shell> sudo fsfreeze ‐u disk‐name mysql> UNLOCK TABLES; Wait until snapshot is uploaded to GCS No performance impact on storage (as per Google doc) https://cloud.google.com/compute/docs/disks/create-snapshots 29 / 42