Solving Everyday Data Problems with FoundationDB

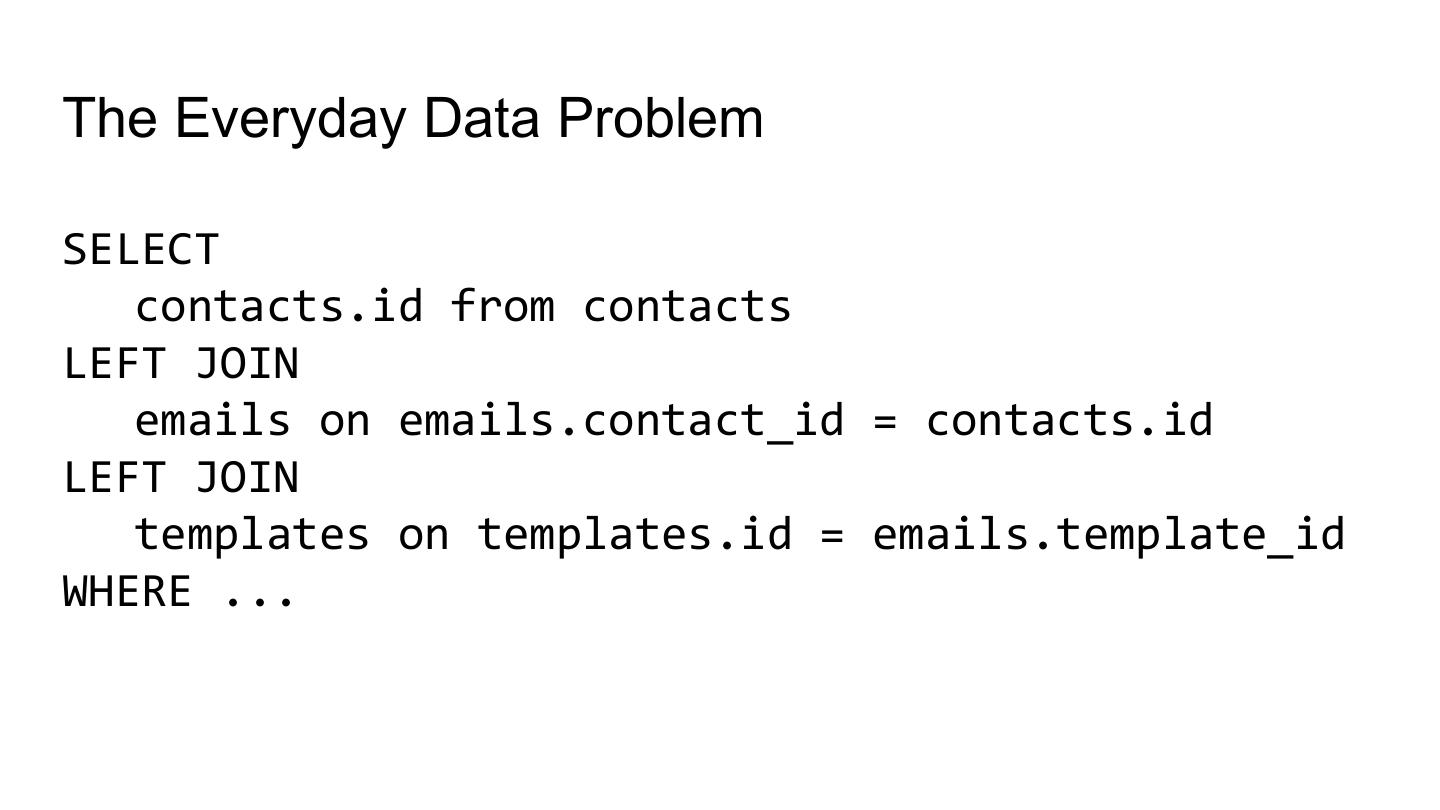

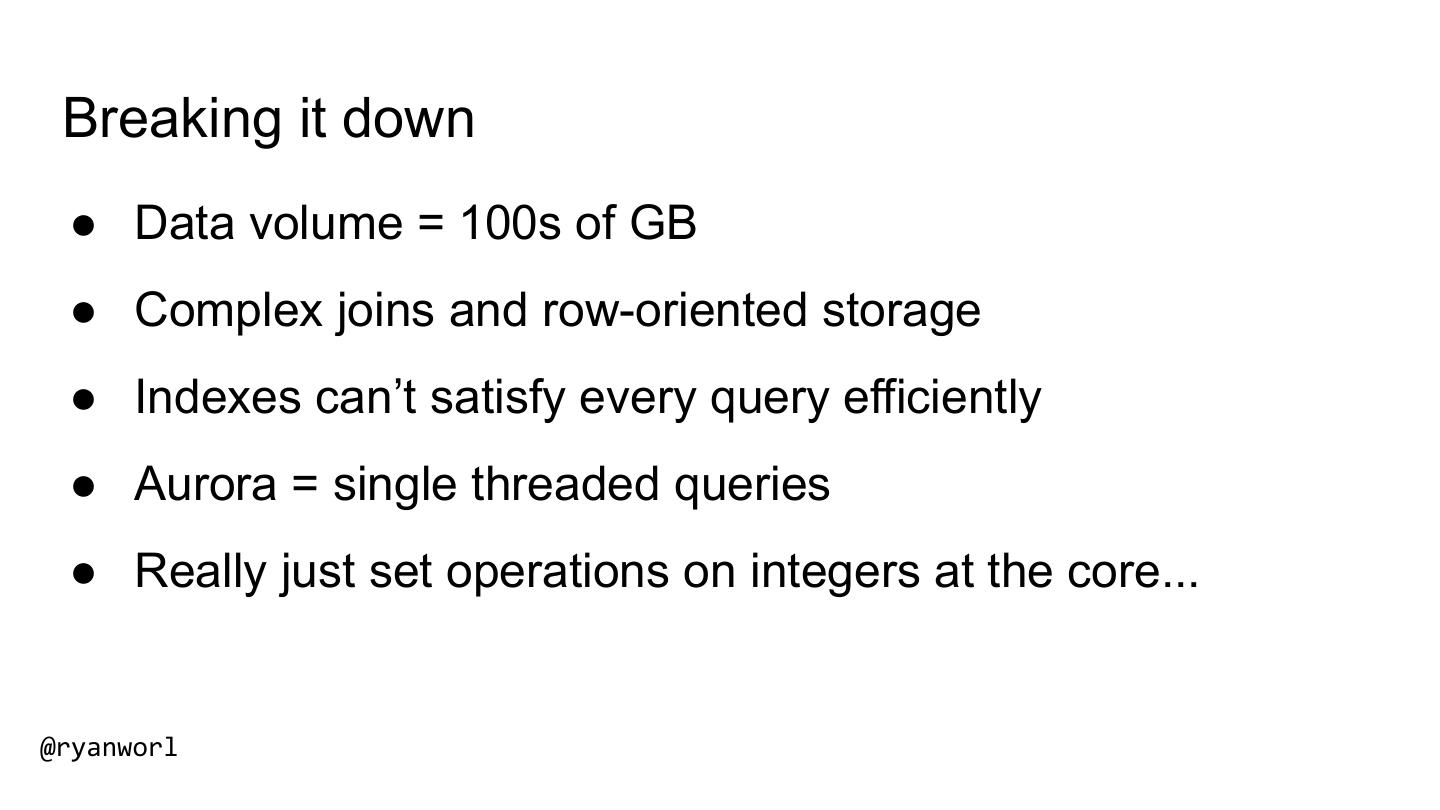

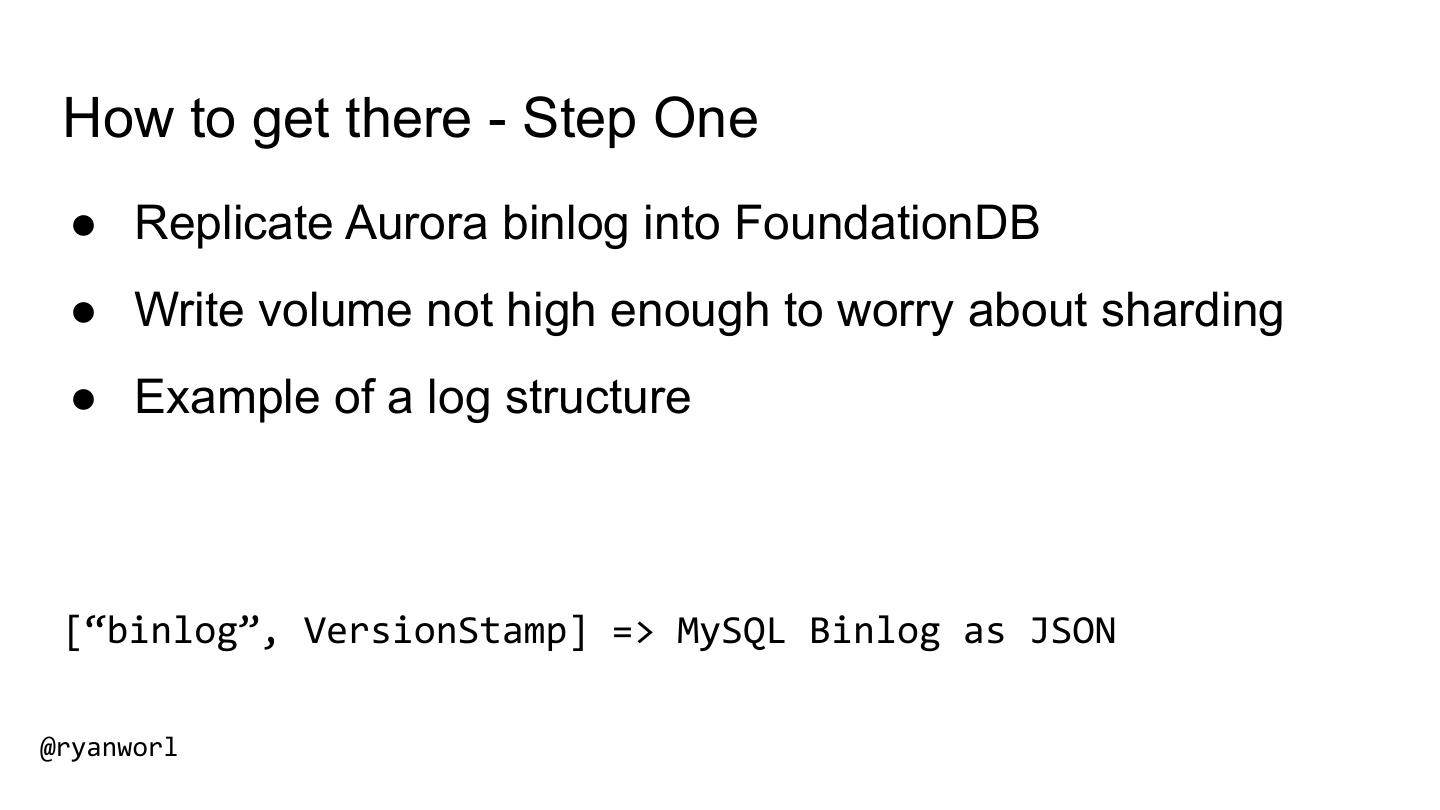

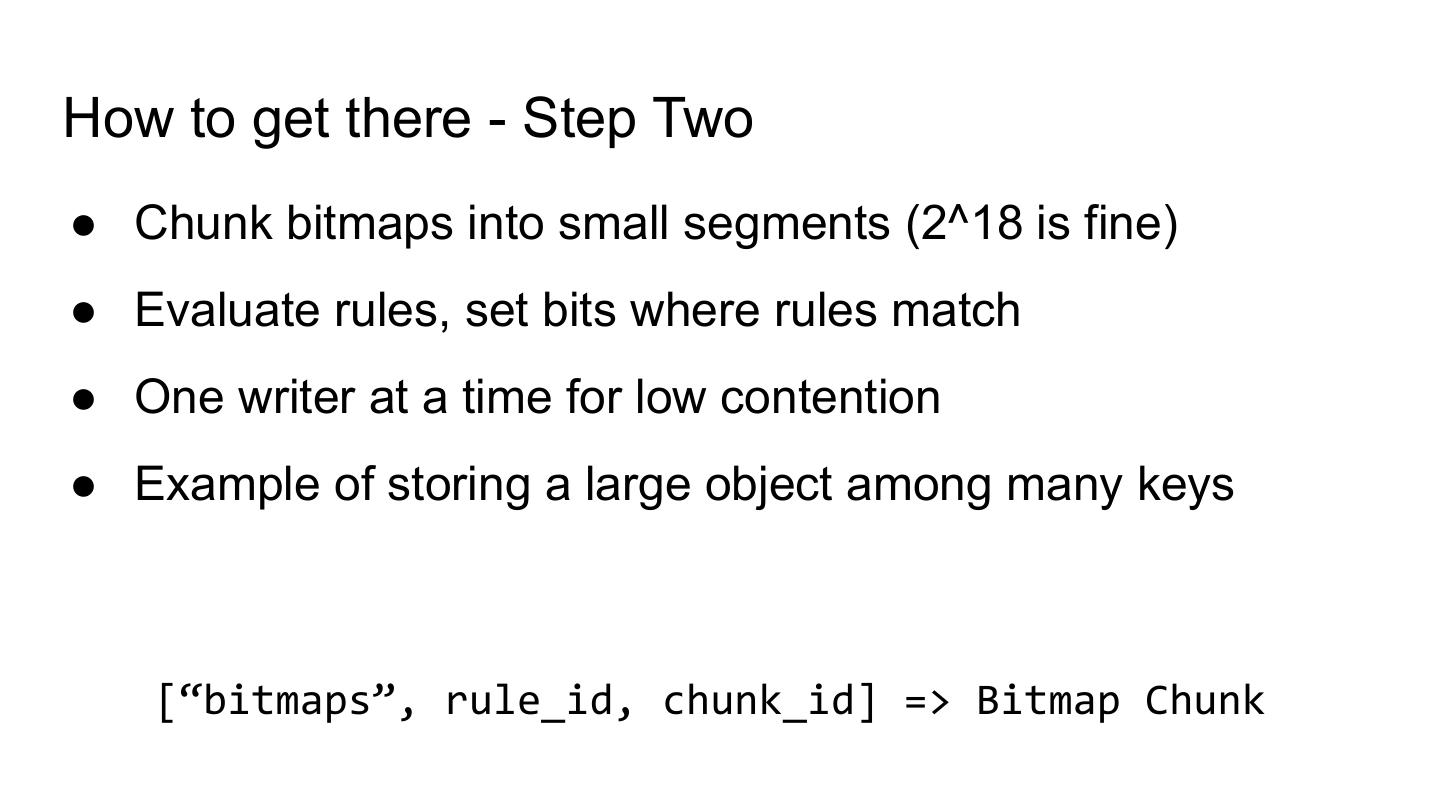

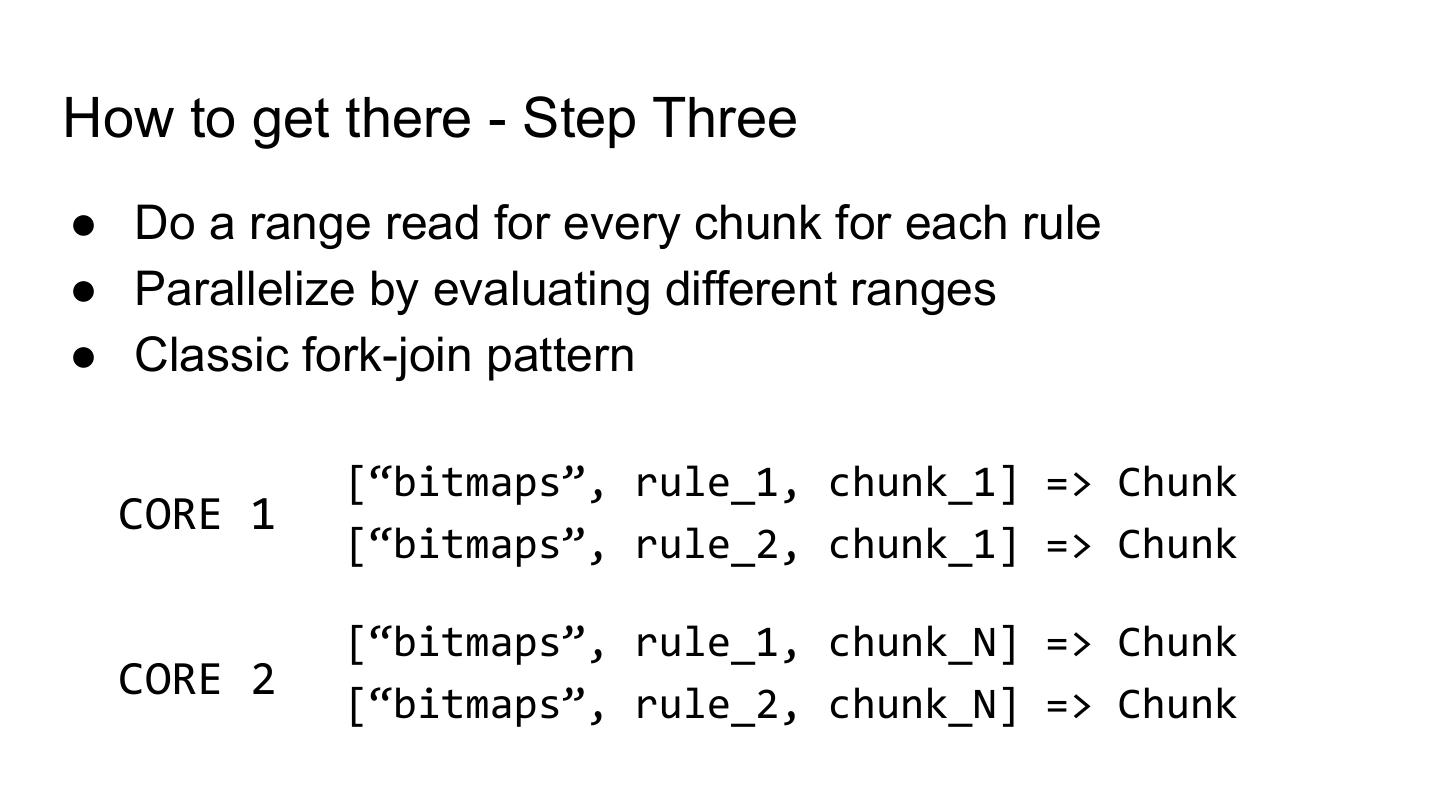

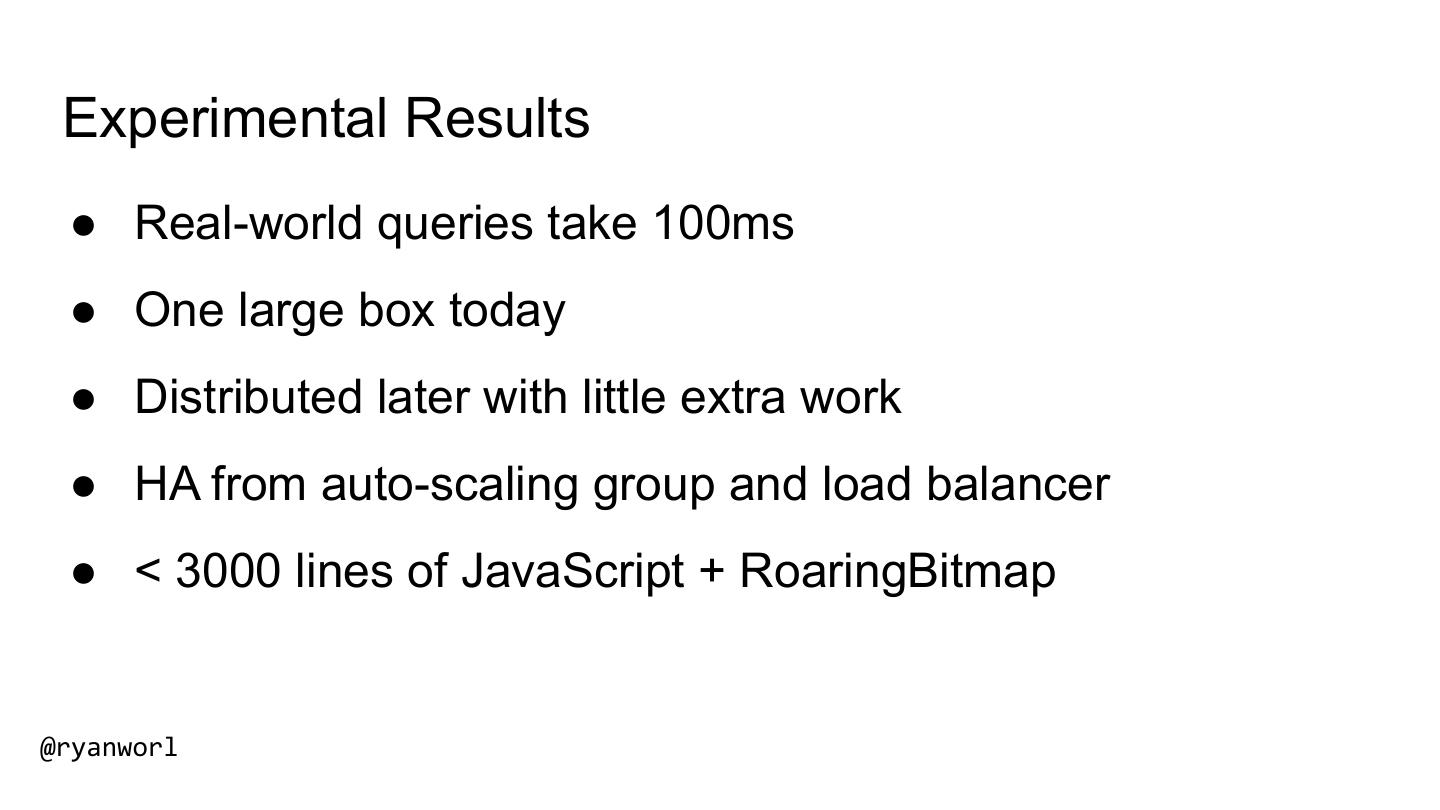

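Ryan demonstrates how to apply FoundationDB to real, present-day business problems, and how to map common infrastructure components such as logs, tables, and indexes onto a single cohesive system inside FoundationDB. His example applies these techniques to a problem ClickFunnels faced in mid-2018, which required scanning millions of end-user data points for tens of thousands of customers every hour. With a custom bitmap index built on FoundationDB, queries that previously could not complete at all now take milliseconds, which makes new use cases possible.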
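The abstract does not describe ClickFunnels' actual index layout. The sketch below is only a rough illustration of the general bitmap-index idea, and its class and attribute names are hypothetical. It keeps one bitset per attribute value, with bit i set when end user i has that value, so a multi-attribute filter becomes a bitwise AND instead of a row-by-row scan. Persisting each bitmap (or fixed-size chunks of one) as FoundationDB values is left out.

```python
from __future__ import annotations

from collections import defaultdict


class BitmapIndex:
    """Toy bitmap index: one Python int per (attribute, value), used as a bitset."""

    def __init__(self) -> None:
        self.bitmaps: defaultdict[tuple[str, str], int] = defaultdict(int)

    def add(self, user_id: int, attr: str, value: str) -> None:
        # Set bit `user_id` in the bitmap for this attribute value.
        self.bitmaps[(attr, value)] |= 1 << user_id

    def query_and(self, first: tuple[str, str], *rest: tuple[str, str]) -> list[int]:
        # Intersect the bitmaps with bitwise AND instead of scanning every row.
        result = self.bitmaps.get(first, 0)
        for cond in rest:
            result &= self.bitmaps.get(cond, 0)
        # Decode the set bits back into user ids, lowest first.
        ids = []
        while result:
            lowest = result & -result
            ids.append(lowest.bit_length() - 1)
            result ^= lowest
        return ids


idx = BitmapIndex()
idx.add(1, "country", "US")
idx.add(1, "plan", "pro")
idx.add(2, "country", "US")
idx.add(5, "country", "US")
idx.add(5, "plan", "pro")
print(idx.query_and(("country", "US"), ("plan", "pro")))  # [1, 5]
```

The cost of a query depends on bitmap size rather than on the number of matching rows. This is presumably why a bitmap layout suits "scan everything every hour" workloads.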


1. Solving Everyday Data Problems with FoundationDB ● Ryan Worl (ryantworl@gmail.com), Consultant

2. About Me ● Independent software engineer ● Today’s real example is from ClickFunnels ● > 70,000 customers, > 1.8B of payments processed ● Billions of rows of OLTP data (Amazon Aurora MySQL) ● Ryan Worl ● @ryanworl on Twitter ● ryantworl@gmail.com

3. Agenda ● How FoundationDB Works ● “Everyday” data problems ● Why FoundationDB can be the solution ● ClickFunnels’ recent data problem ● FoundationDB for YOUR data problems

4. Coordinators elect & heartbeat the Cluster Controller (Paxos) ● Coordinators store core cluster state, used like ZooKeeper ● All processes register themselves with the Cluster Controller (diagram step 1: Coordinators, Cluster Controller)

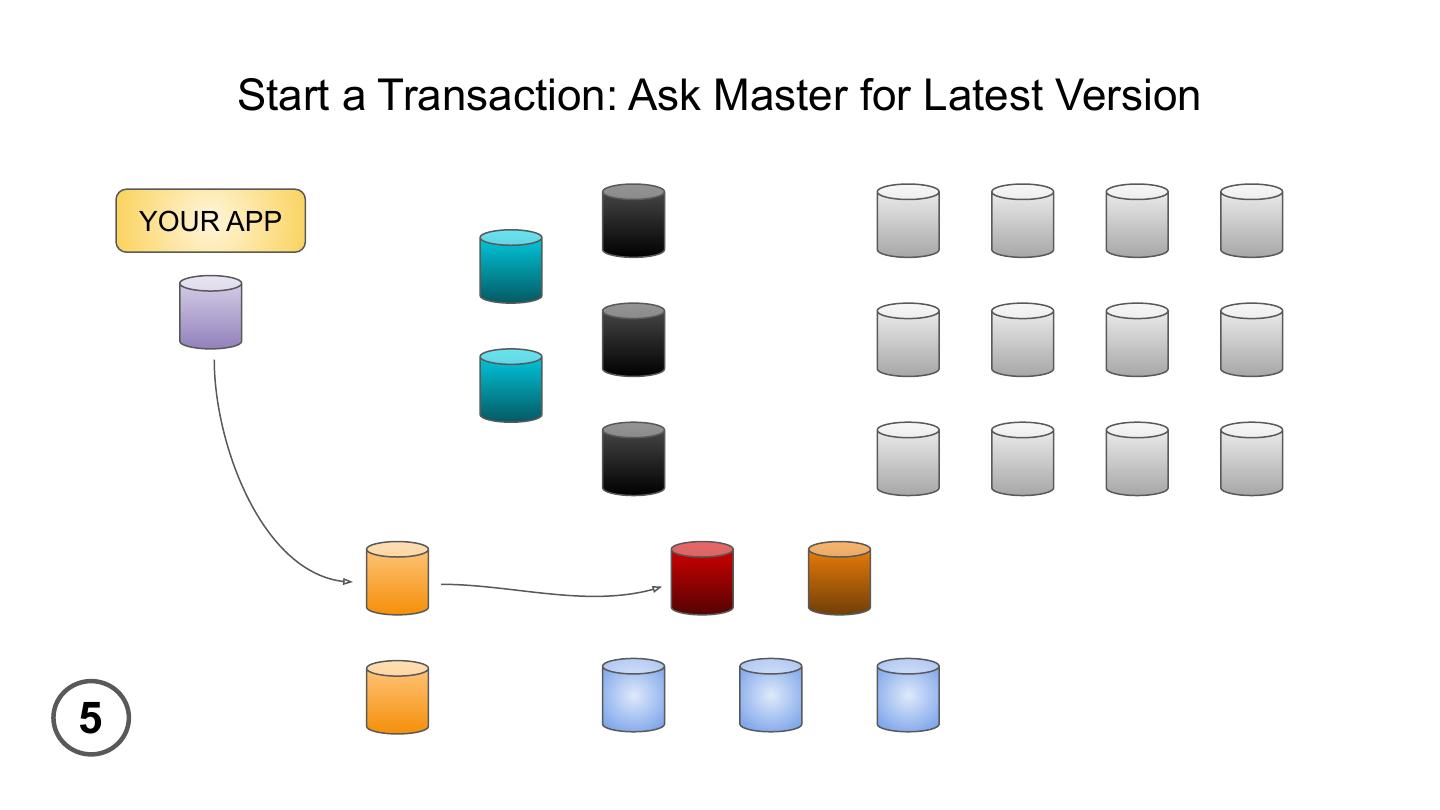

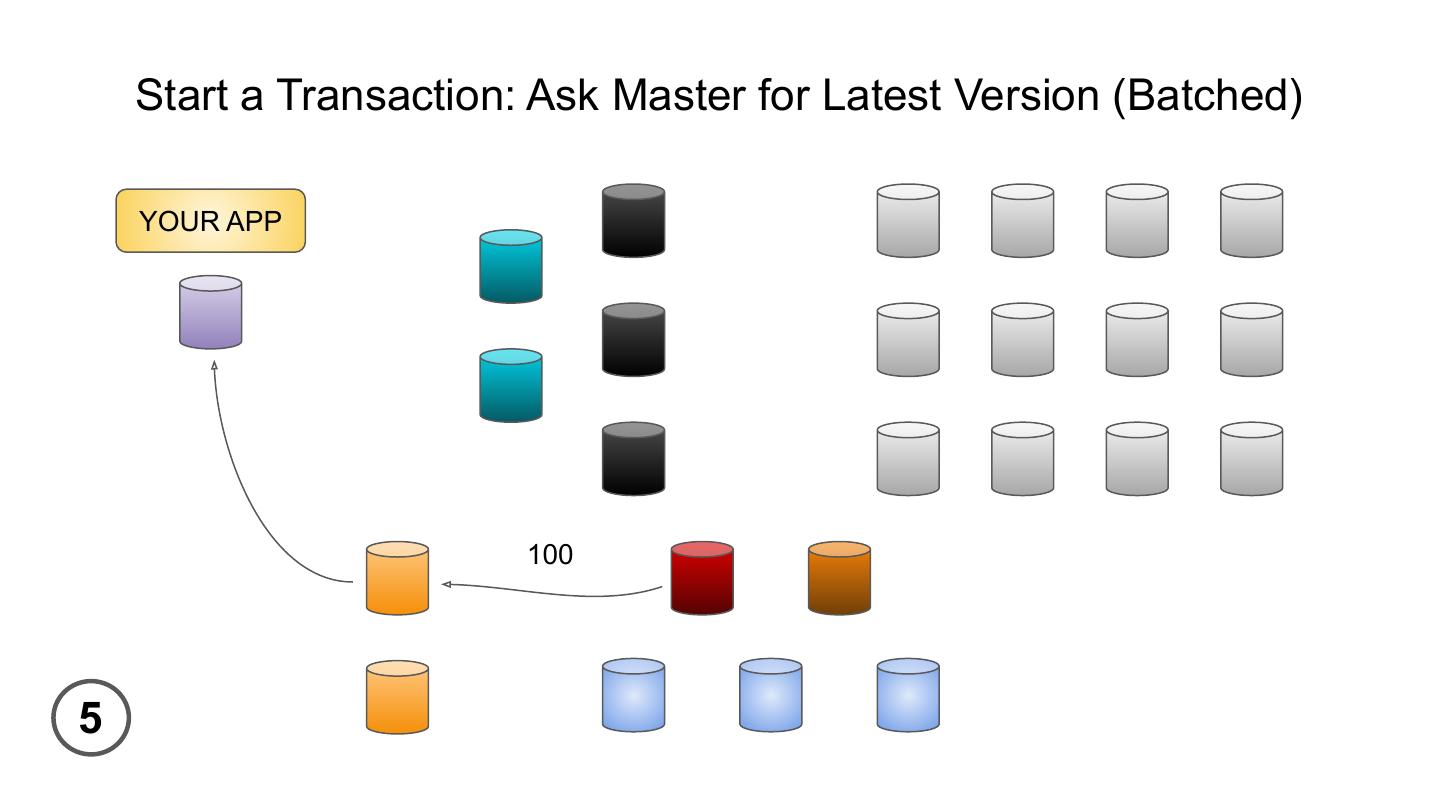

5. Cluster Controller (CC) assigns Master Role (diagram step 2)

6-9. CC assigns TLog, Proxy, Resolver, and Storage Roles (diagram step 3; the slides add Proxy, Resolver, TLog, and Storage in turn)

10. On Start: Your App Connects and Asks CC for Topology (diagram step 4)

11. Client Library Asks a Proxy for the Key Range to Storage Mapping (diagram step 4)

12. Data Distribution Runs on Master, Key Map Stored in Database (diagram step 4; the map sits under the `\xff` system key prefix)
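Once the client has fetched the key range to storage mapping, it can route a read locally. The sketch below assumes a hypothetical cached map whose split points echo the 00 / 55 / AF / FF labels in the diagrams; the storage team names are made up. A binary search over the sorted range boundaries finds the shard that owns a key. It models the idea only and is not the client library's real data structure.

```python
import bisect

# Hypothetical cached shard map: shard i covers [BOUNDARIES[i], BOUNDARIES[i + 1]),
# and the last shard runs up to the \xff system keyspace.
BOUNDARIES = [b"", b"\x55", b"\xaf"]
TEAMS = [["ss1", "ss2", "ss3"], ["ss2", "ss4", "ss5"], ["ss4", "ss5", "ss6"]]


def storage_team_for(key: bytes) -> list:
    """Return the storage servers the client should read `key` from."""
    if key.startswith(b"\xff"):
        raise ValueError("keys starting with \\xff are system keys in this toy model")
    # bisect_right finds the last boundary <= key, i.e. the owning shard.
    shard = bisect.bisect_right(BOUNDARIES, key) - 1
    return TEAMS[shard]


print(storage_team_for(b"apple"))  # ['ss2', 'ss4', 'ss5']
```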

13. Start a Transaction: Ask Master for Latest Version (diagram step 5)

14. Start a Transaction: Ask Master for Latest Version (Batched) (diagram step 5)

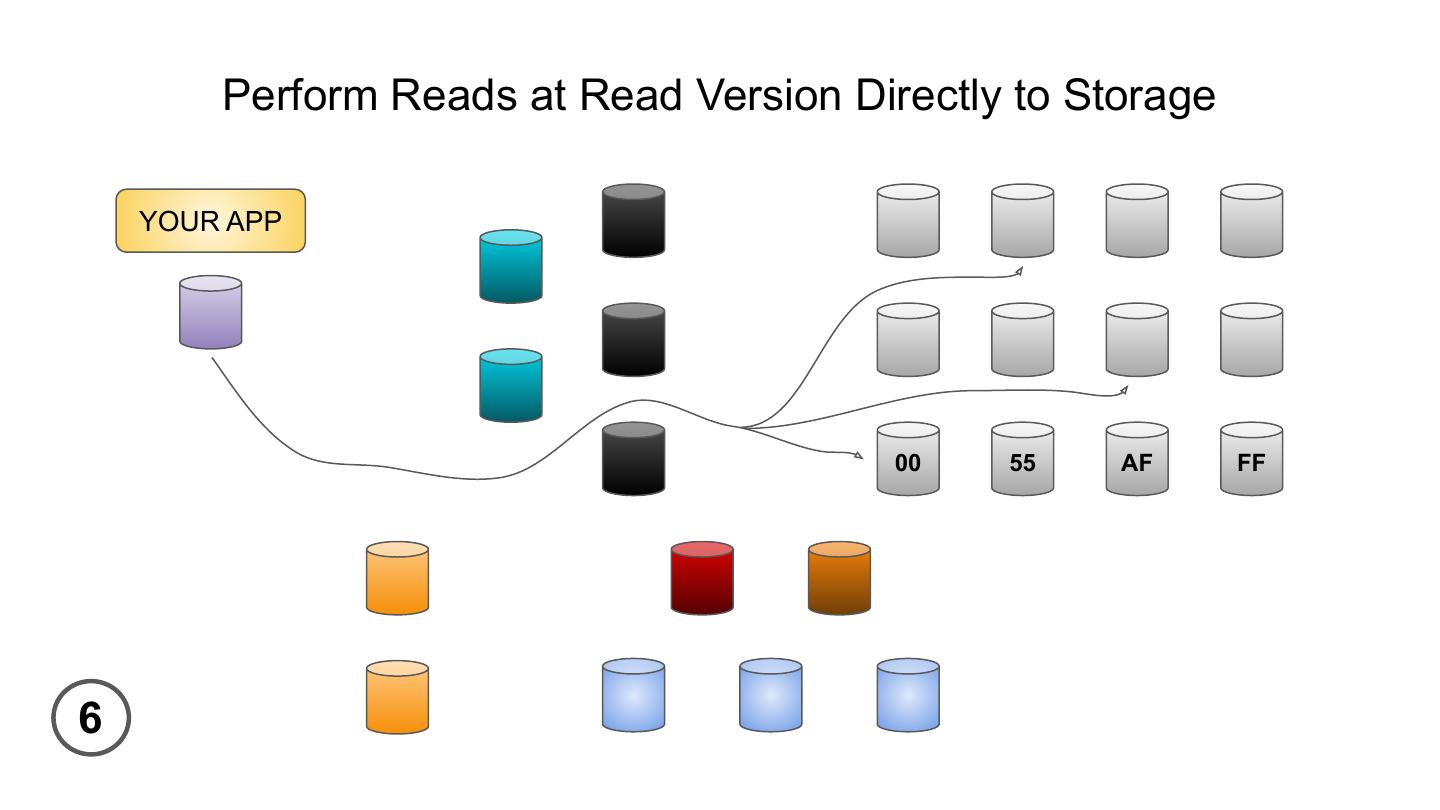
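Batching read-version requests can be modeled as answering a whole queue of "begin transaction" calls with one round trip. Sharing one version across the batch is safe because each transaction only needs a version at least as new as every commit acknowledged before it started. `ToyMaster` and `start_transactions` are hypothetical names for illustration. Real FoundationDB does this batching inside its server roles, not in user code.

```python
class ToyMaster:
    """Stands in for the role that knows the latest committed version."""

    def __init__(self, version: int) -> None:
        self.version = version
        self.calls = 0

    def latest_version(self) -> int:
        self.calls += 1
        return self.version


def start_transactions(master: ToyMaster, pending: int) -> list:
    """Answer `pending` queued begin-transaction requests with one master call."""
    read_version = master.latest_version()
    # Every transaction in the batch shares the same read version.
    return [read_version] * pending


master = ToyMaster(version=42)
versions = start_transactions(master, pending=100)
print(len(versions), set(versions), master.calls)  # 100 {42} 1
```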

15. Perform Reads at Read Version Directly to Storage (diagram step 6: key ranges split at 00, 55, AF, FF)
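Reading "at a read version" means a storage server returns the newest value written at or before that version, even if newer writes have arrived since. The toy multi-version store below shows that lookup. Real storage servers only retain a short window of recent versions, which this sketch ignores, and the class name is hypothetical.

```python
from __future__ import annotations

import bisect
from collections import defaultdict


class ToyStorage:
    """Keeps several versions per key so reads can target a past version."""

    def __init__(self) -> None:
        self.versions: defaultdict[bytes, list[int]] = defaultdict(list)
        self.values: defaultdict[bytes, list[bytes | None]] = defaultdict(list)

    def apply(self, version: int, key: bytes, value: bytes | None) -> None:
        # Mutations arrive in increasing version order; None marks a clear.
        self.versions[key].append(version)
        self.values[key].append(value)

    def read(self, key: bytes, read_version: int) -> bytes | None:
        # Newest value written at or before read_version.
        history = self.versions.get(key, [])
        i = bisect.bisect_right(history, read_version) - 1
        return self.values[key][i] if i >= 0 else None


store = ToyStorage()
store.apply(10, b"k", b"old")
store.apply(20, b"k", b"new")
print(store.read(b"k", 15), store.read(b"k", 25))  # b'old' b'new'
```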

16. Consequences ● All replicas participate in reads ● The client load-balances across replicas ● The system stays alive as long as at least one replica of each range survives @ryanworl
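The consequences above can be sketched as a client that tries a shard's replicas in random order and skips dead ones. Random order spreads load over every replica, and a read still succeeds while any single replica is up. The function name and the in-memory replica dictionaries are hypothetical. The real client's load balancing also considers latency, which this ignores.

```python
from __future__ import annotations

import random


def load_balanced_read(replicas: dict[str, dict[bytes, bytes]], down: set[str],
                       key: bytes, rng: random.Random) -> tuple[str, bytes | None]:
    """Read `key` from any live replica of its shard, chosen in random order."""
    order = sorted(replicas)
    rng.shuffle(order)  # spread load across all replicas
    for name in order:
        if name in down:
            continue  # a real client would see an error or timeout and retry elsewhere
        return name, replicas[name].get(key)
    raise RuntimeError("every replica of this key range is unavailable")


shard = {name: {b"k": b"v"} for name in ("ss1", "ss2", "ss3")}
rng = random.Random(0)
print(load_balanced_read(shard, {"ss1", "ss2"}, b"k", rng))  # ('ss3', b'v')
```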

17. Buffer Writes Locally Until Commit (diagram step 7)

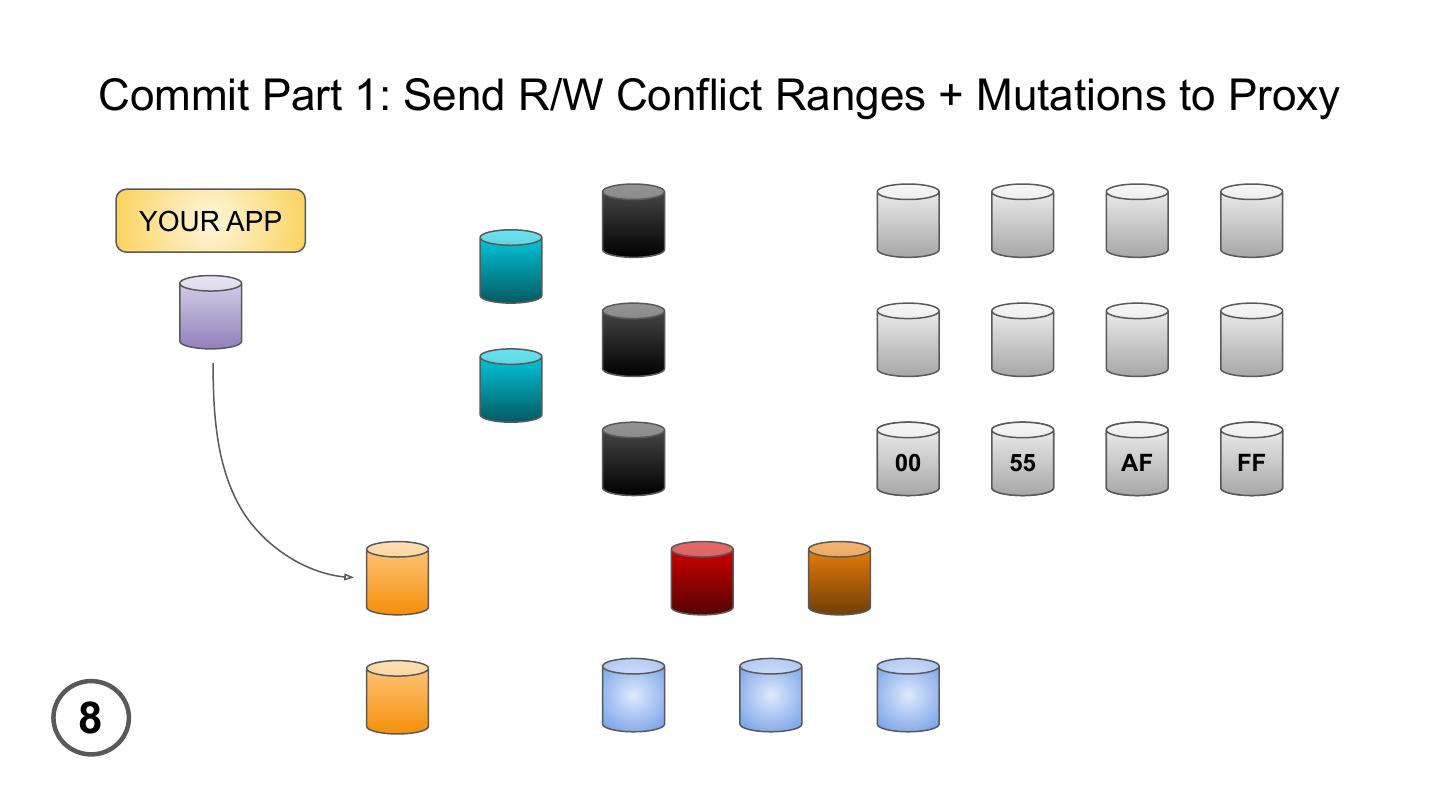
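Buffering writes locally means that, until commit, the cluster sees only reads. The transaction keeps its pending sets and clears in memory, answers reads of its own writes from that buffer, and records which keys it fetched from storage so they can later be sent as read conflict ranges. The class below is a hypothetical toy, not the FoundationDB `Transaction` API. It also only records keys it actually fetched from storage, a simplification.

```python
from __future__ import annotations

from typing import Callable, Optional


class ToyTransaction:
    """Buffers writes on the client; nothing reaches the cluster before commit."""

    def __init__(self, read_version: int,
                 storage_read: Callable[[bytes, int], Optional[bytes]]) -> None:
        self.read_version = read_version
        self._storage_read = storage_read
        self.writes: dict[bytes, Optional[bytes]] = {}  # None marks a clear
        self.read_keys: set[bytes] = set()  # later sent as read conflict ranges

    def get(self, key: bytes) -> Optional[bytes]:
        if key in self.writes:
            return self.writes[key]  # read-your-writes, served from the buffer
        self.read_keys.add(key)
        return self._storage_read(key, self.read_version)

    def set(self, key: bytes, value: bytes) -> None:
        self.writes[key] = value

    def clear(self, key: bytes) -> None:
        self.writes[key] = None


cluster = {b"a": b"1"}
txn = ToyTransaction(7, lambda key, version: cluster.get(key))
txn.set(b"b", b"2")
print(txn.get(b"a"), txn.get(b"b"), cluster.get(b"b"))  # b'1' b'2' None
```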

18. Commit Part 1: Send R/W Conflict Ranges + Mutations to Proxy (diagram step 8)

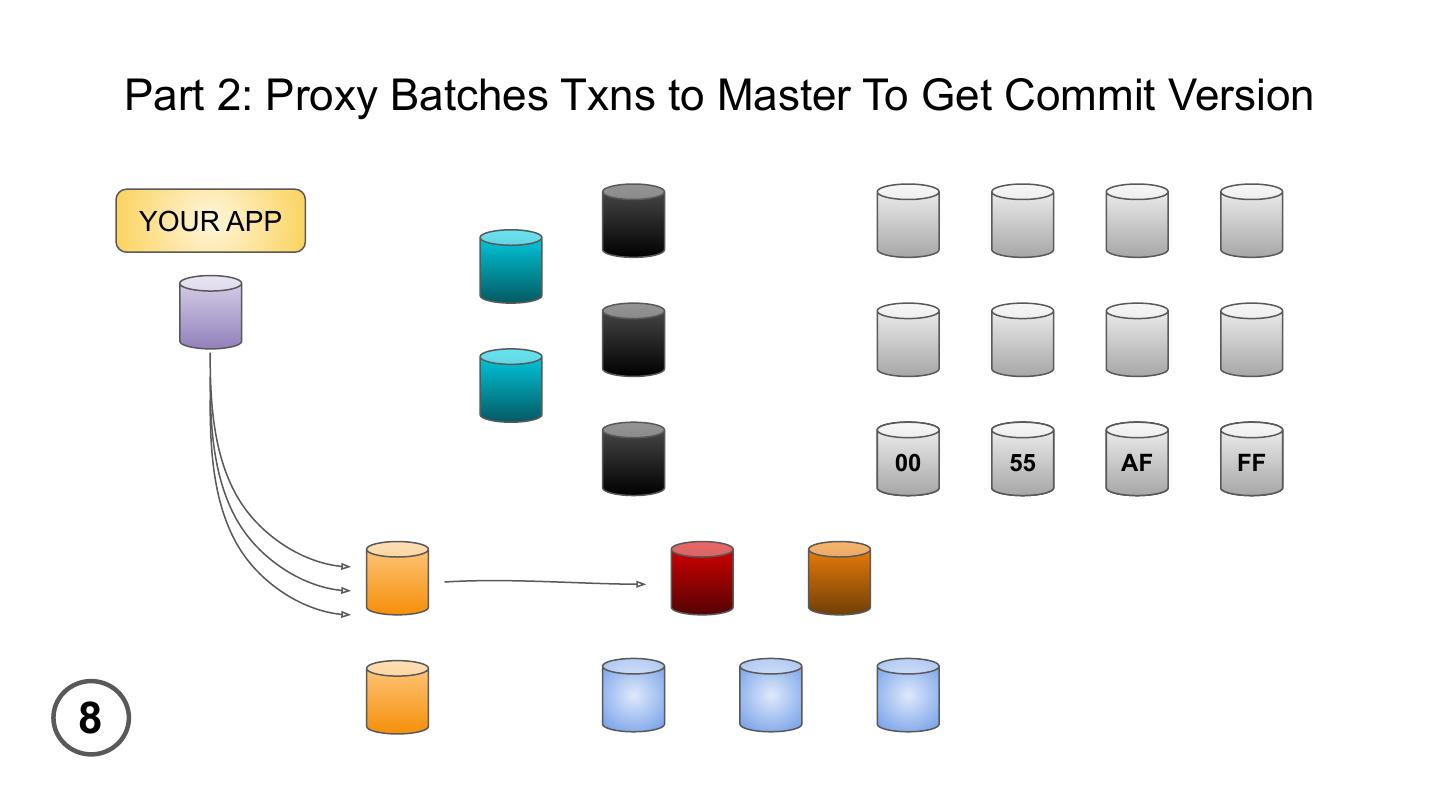

19. Part 2: Proxy Batches Txns to Master to Get Commit Version (diagram step 8)

20. Consequences ● Master is not a throughput bottleneck ● Intelligent batching keeps the Master's workload small ● Conflict ranges and mutations are never sent to the Master

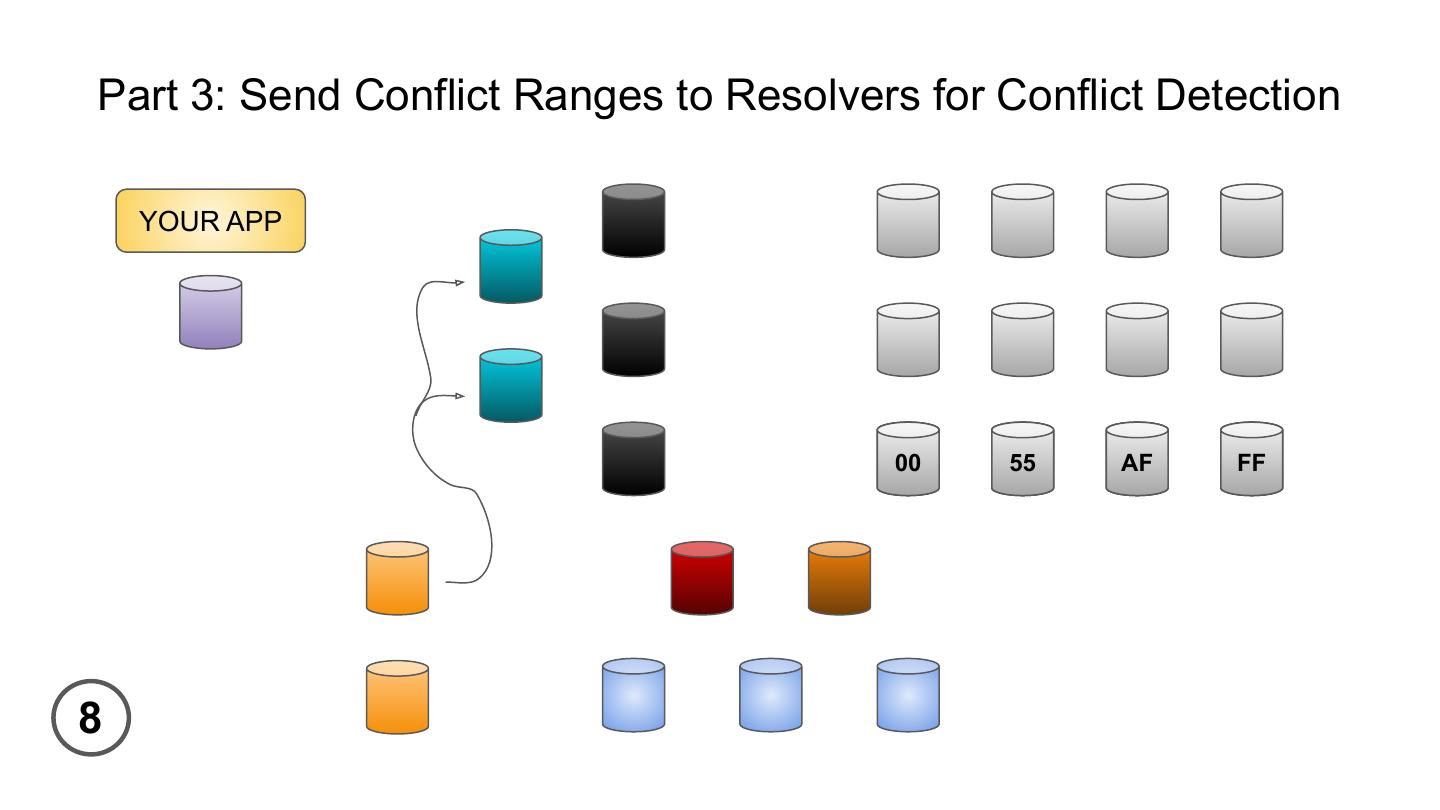
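The point that the Master only hands out versions can be modeled with a proxy that makes one version request per batch of transactions. Every transaction in the batch gets the same commit version, and the conflict ranges and mutations never leave the proxy. The names are hypothetical, and the toy master simply increments by one, unlike the real one.

```python
class ToyCommitMaster:
    """Hands out one new commit version per proxy batch (toy: +1 each time)."""

    def __init__(self) -> None:
        self.last_version = 0
        self.requests = 0

    def next_commit_version(self) -> int:
        self.requests += 1
        self.last_version += 1
        return self.last_version


def proxy_commit_batch(master: ToyCommitMaster, batch: list) -> list:
    """Tag every transaction in the batch with the same commit version.

    Only this version request goes to the master; the batch's conflict ranges
    and mutations stay with the proxy for the resolver and TLog steps.
    """
    version = master.next_commit_version()
    return [(version, txn) for txn in batch]


master = ToyCommitMaster()
print(proxy_commit_batch(master, ["t1", "t2", "t3"]))  # [(1, 't1'), (1, 't2'), (1, 't3')]
print(master.requests)  # 1
```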

21. Part 3: Send Conflict Ranges to Resolvers for Conflict Detection (diagram step 8)

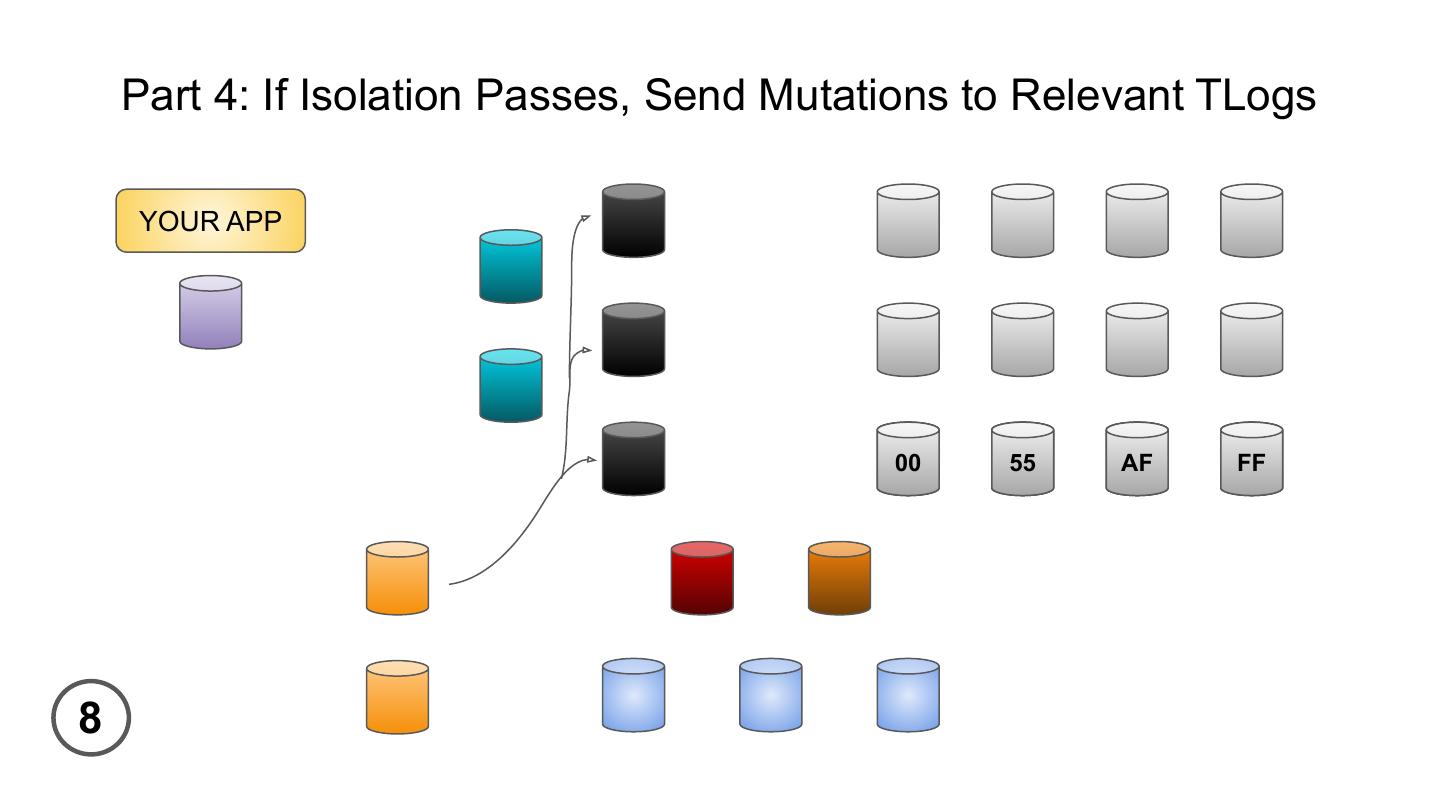
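Conflict detection is optimistic concurrency control. A transaction fails if any key it read was written by a committed transaction with a version newer than its read version. Transactions earlier in the same batch count as having committed first. The resolver below is a simplified sketch on point keys, whereas real resolvers work on key ranges and keep only a bounded window of history. All names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class TxnSummary:
    read_version: int
    read_keys: set[bytes]
    write_keys: set[bytes]


class ToyResolver:
    """Point-key optimistic conflict check (real resolvers work on key ranges)."""

    def __init__(self) -> None:
        self.last_write: dict[bytes, int] = {}  # key -> version of newest committed write

    def resolve(self, commit_version: int, batch: list[TxnSummary]) -> list[bool]:
        verdicts = []
        for txn in batch:  # batch order matters: earlier txns count as committed first
            conflict = any(self.last_write.get(k, -1) > txn.read_version
                           for k in txn.read_keys)
            verdicts.append(not conflict)
            if not conflict:
                # Only writes of transactions that pass can cause later conflicts.
                for k in txn.write_keys:
                    self.last_write[k] = commit_version
        return verdicts


r = ToyResolver()
a = TxnSummary(read_version=10, read_keys={b"x"}, write_keys={b"y"})
b = TxnSummary(read_version=10, read_keys={b"y"}, write_keys={b"z"})
print(r.resolve(20, [a, b]))  # [True, False]
```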

22. Part 4: If Isolation Passes, Send Mutations to Relevant TLogs (diagram step 8)

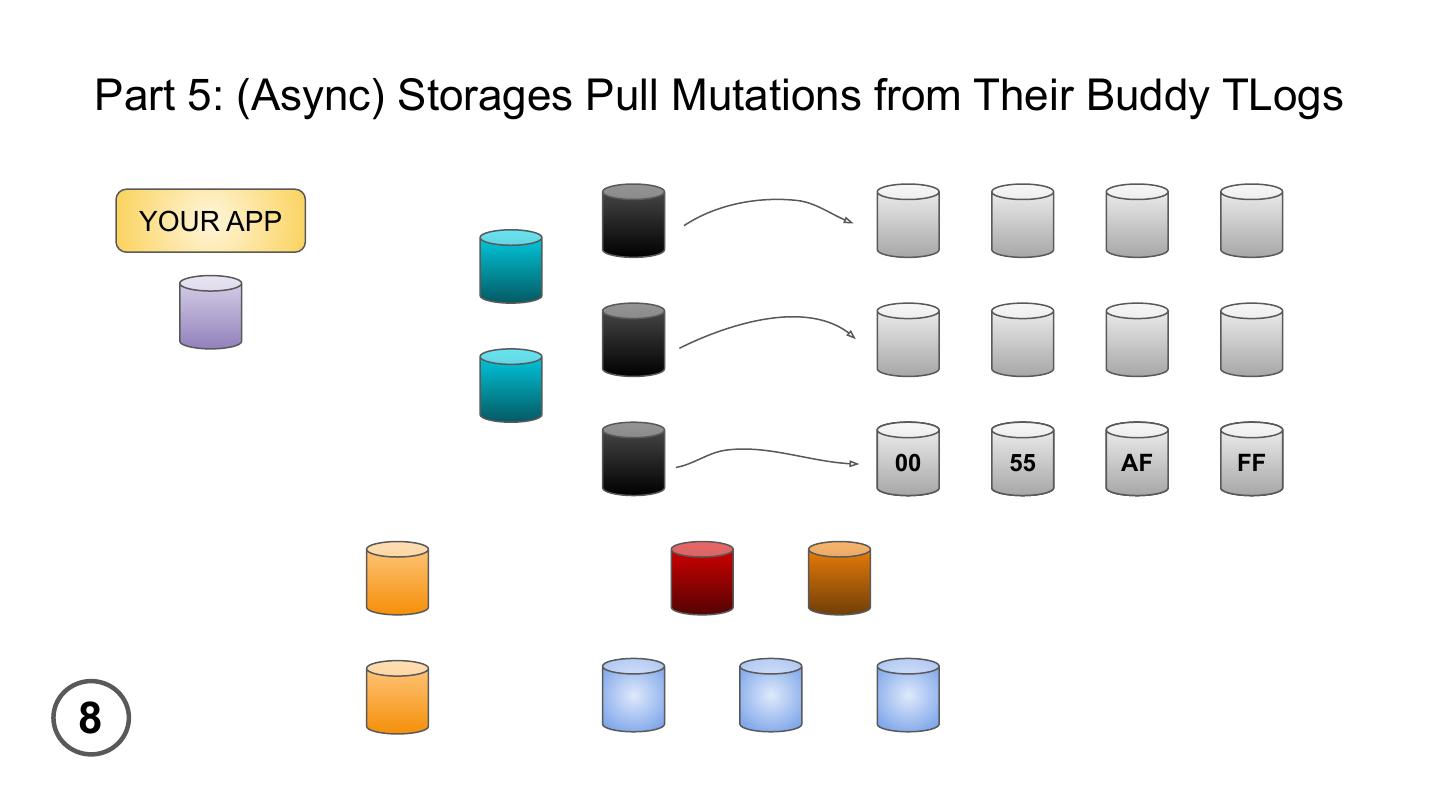

23. Part 5: (Async) Storage Servers Pull Mutations from Their Buddy TLogs (diagram step 8)

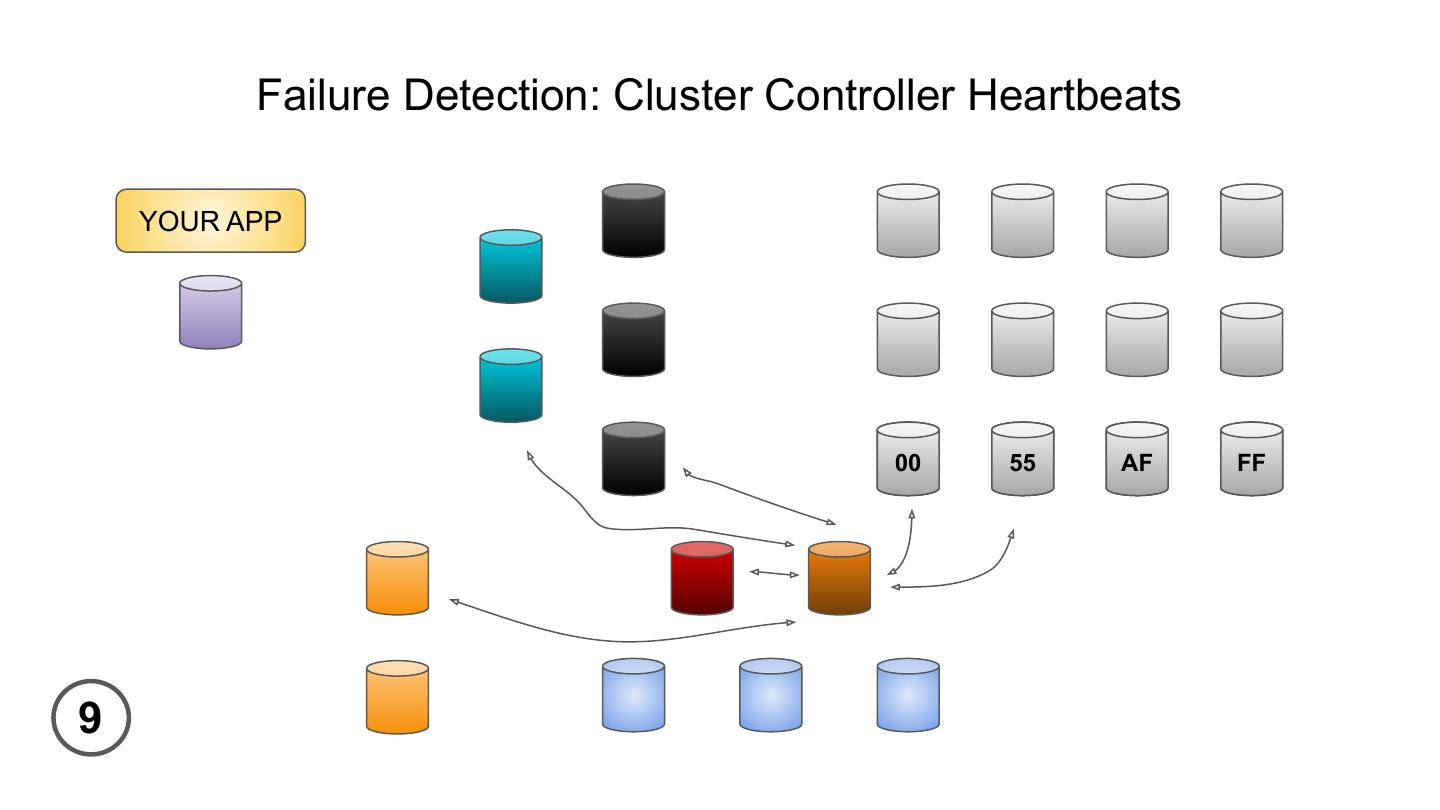
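Parts 4 and 5 can be pictured as an append-only log where each mutation carries a tag naming the storage server that owns its key. Each storage server repeatedly pulls entries for its own tag that are newer than the last version it applied. This is a minimal sketch. Durability, multiple TLogs, and log trimming are omitted, and the class names are hypothetical.

```python
from __future__ import annotations


class ToyTLog:
    """Append-only log; each mutation is tagged with the storage server that owns it."""

    def __init__(self) -> None:
        self.entries: list[tuple[int, str, tuple[bytes, bytes]]] = []

    def push(self, version: int, tag: str, key: bytes, value: bytes) -> None:
        # Once pushed (and, in reality, made durable), the commit can be acknowledged.
        self.entries.append((version, tag, (key, value)))

    def peek(self, tag: str, after_version: int) -> list:
        return [(v, m) for v, t, m in self.entries if t == tag and v > after_version]


class ToyStorageServer:
    def __init__(self, tag: str, tlog: ToyTLog) -> None:
        self.tag = tag
        self.tlog = tlog
        self.version = 0  # newest version applied locally
        self.data: dict[bytes, bytes] = {}

    def pull(self) -> None:
        # Asynchronous in reality, so storage lags the TLogs slightly.
        for version, (key, value) in self.tlog.peek(self.tag, self.version):
            self.data[key] = value
            self.version = version


log = ToyTLog()
log.push(5, "ss1", b"a", b"1")
log.push(5, "ss2", b"q", b"9")
log.push(8, "ss1", b"a", b"2")
ss1 = ToyStorageServer("ss1", log)
print(ss1.data)  # {}
ss1.pull()
print(ss1.data, ss1.version)  # {b'a': b'2'} 8
```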

24. Failure Detection: Cluster Controller Heartbeats (diagram step 9)

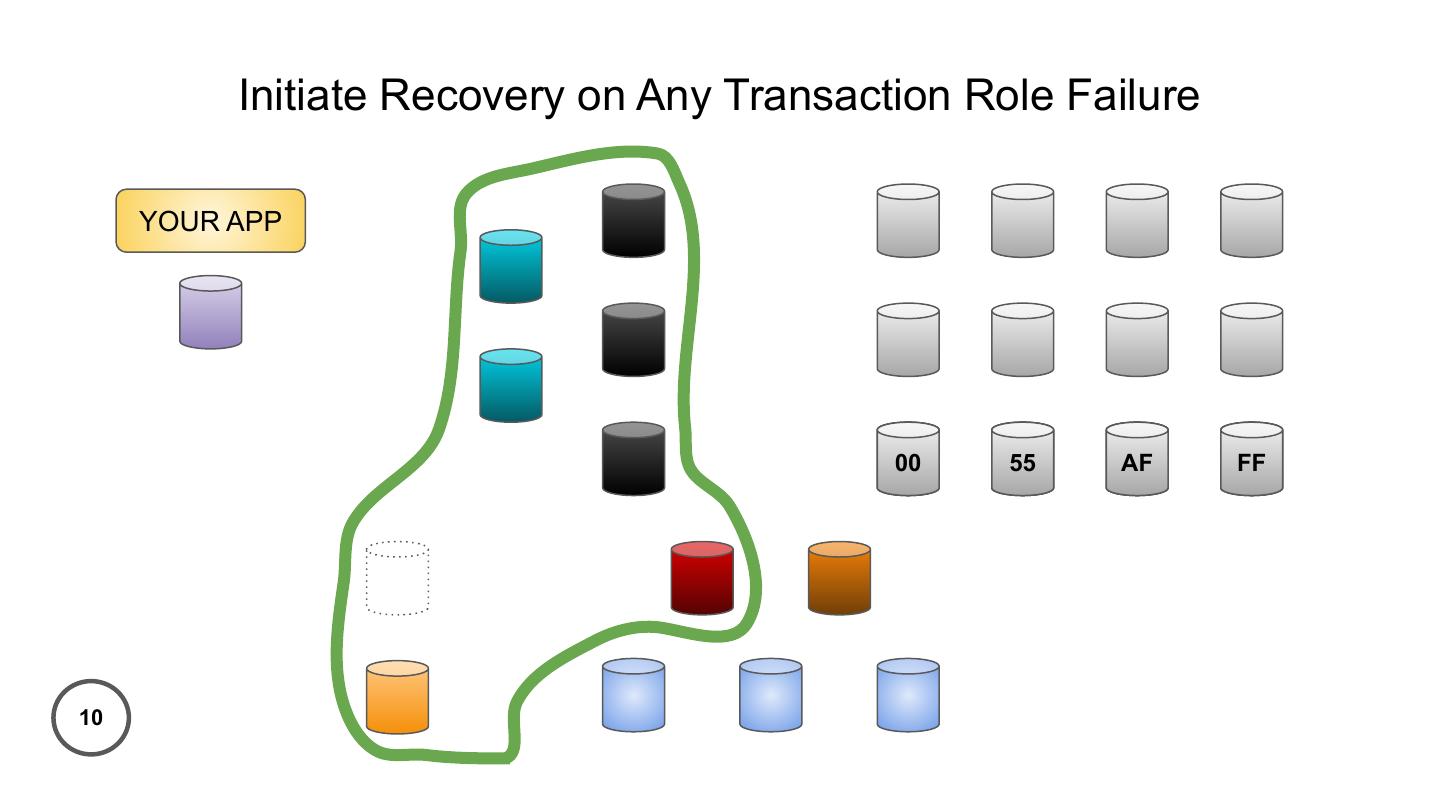
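Heartbeat-based failure detection reduces to remembering when each process last checked in and flagging those silent for longer than a timeout. The sketch passes timestamps explicitly so it is deterministic. The timeout value and process names are hypothetical, not FoundationDB's actual settings.

```python
from __future__ import annotations


class ToyFailureMonitor:
    """Marks a process failed when its last heartbeat is older than `timeout`."""

    def __init__(self, timeout: float) -> None:
        self.timeout = timeout
        self.last_seen: dict[str, float] = {}

    def heartbeat(self, process: str, now: float) -> None:
        self.last_seen[process] = now

    def failed(self, now: float) -> list[str]:
        return sorted(p for p, seen in self.last_seen.items()
                      if now - seen > self.timeout)


monitor = ToyFailureMonitor(timeout=1.0)
monitor.heartbeat("proxy-1", now=0.0)
monitor.heartbeat("tlog-1", now=0.0)
monitor.heartbeat("proxy-1", now=1.5)
print(monitor.failed(now=2.0))  # ['tlog-1']
```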

25. Initiate Recovery on Any Transaction Role Failure (diagram step 10)

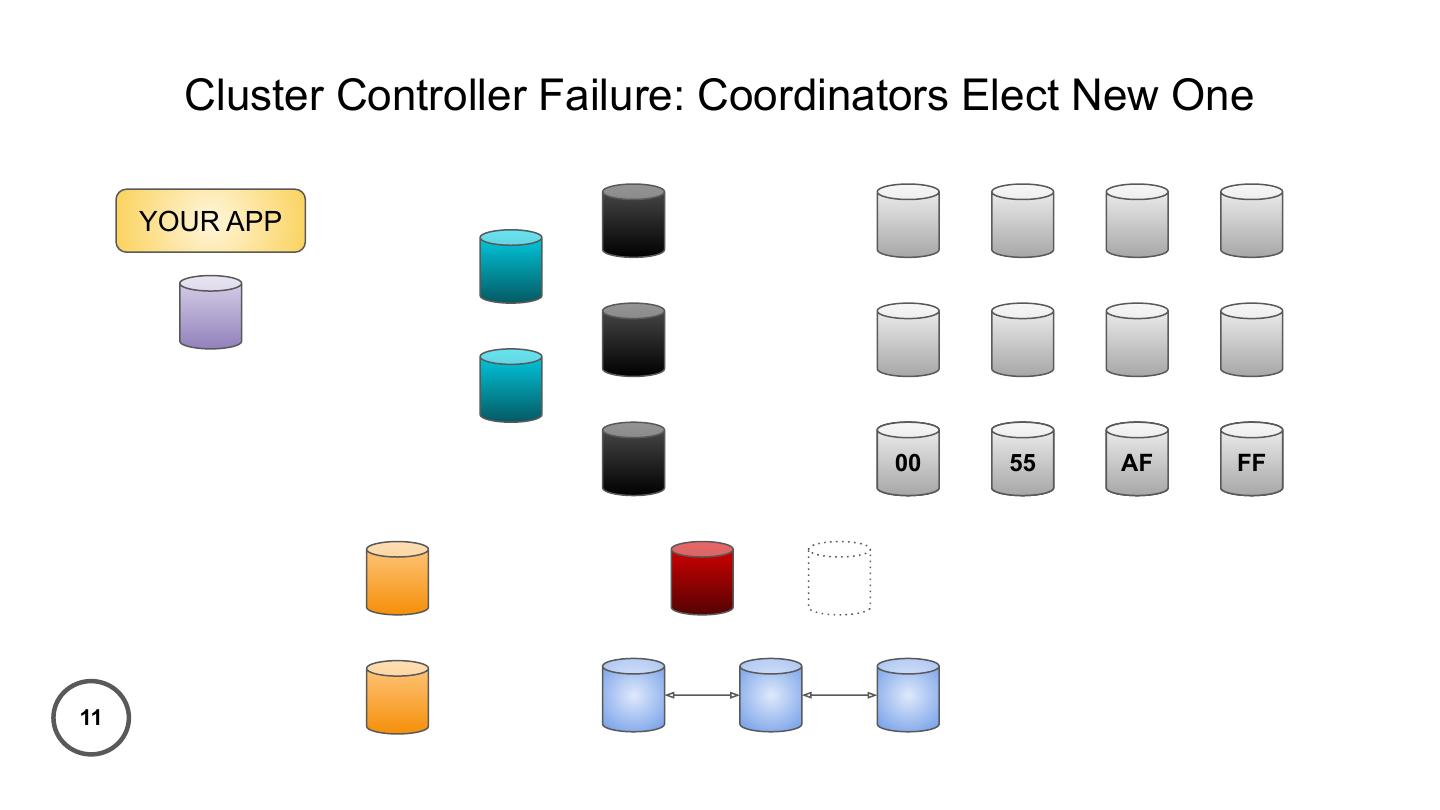

26. Cluster Controller Failure: Coordinators Elect a New One (diagram step 11)

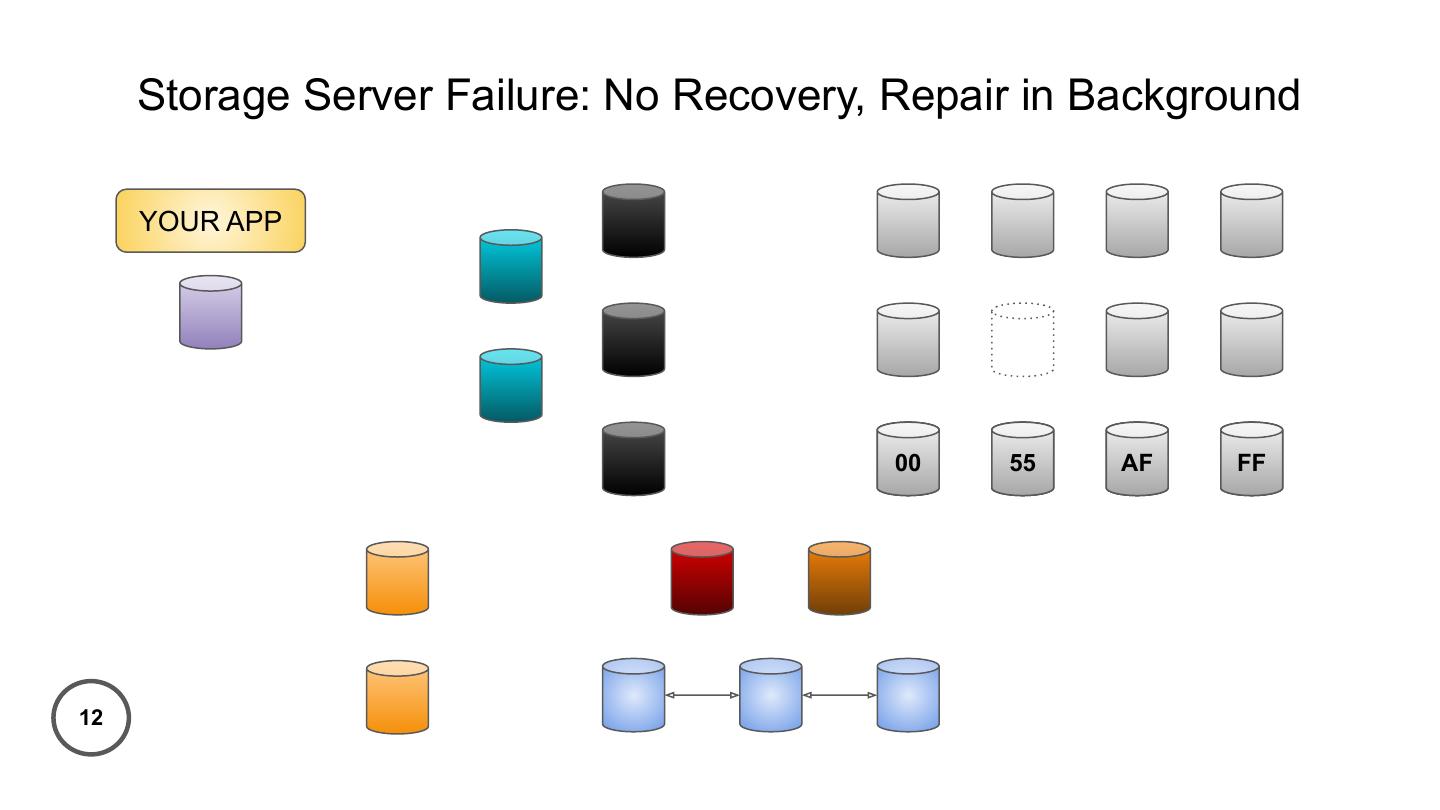

27. Storage Server Failure: No Recovery, Repair in Background (diagram step 12)
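"Repair in background" means that after a storage server fails, each shard it held runs with fewer replicas until data distribution copies it elsewhere. The sketch below only plans which servers should receive new copies, using naive first-fit placement. Real data distribution also weighs fault domains and load, and the shard and server names are hypothetical.

```python
from __future__ import annotations


def repair_plan(shards: dict[str, list[str]], failed: set[str],
                servers: list[str], replicas: int = 3) -> dict[str, list[str]]:
    """For each under-replicated shard, pick healthy servers to copy it to.

    Naive placement: first eligible servers in list order. Real data
    distribution also weighs fault domains and load, which this ignores.
    """
    plan = {}
    for shard, team in shards.items():
        survivors = [s for s in team if s not in failed]
        missing = replicas - len(survivors)
        if missing <= 0 or not survivors:
            continue  # healthy, or no surviving copy to repair from
        candidates = [s for s in servers if s not in failed and s not in survivors]
        plan[shard] = candidates[:missing]
    return plan


shards = {"[00,55)": ["ss1", "ss2", "ss3"],
          "[55,AF)": ["ss2", "ss4", "ss5"],
          "[AF,FF)": ["ss4", "ss5", "ss6"]}
servers = ["ss1", "ss2", "ss3", "ss4", "ss5", "ss6", "ss7"]
print(repair_plan(shards, {"ss2"}, servers))
# {'[00,55)': ['ss4'], '[55,AF)': ['ss1']}
```

Transactions keep committing throughout because reads of an affected shard are served by its surviving replicas.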

28. Status Quo ● Most apps start uncomplicated ● One database, one queue ● … five years later, a dozen data systems

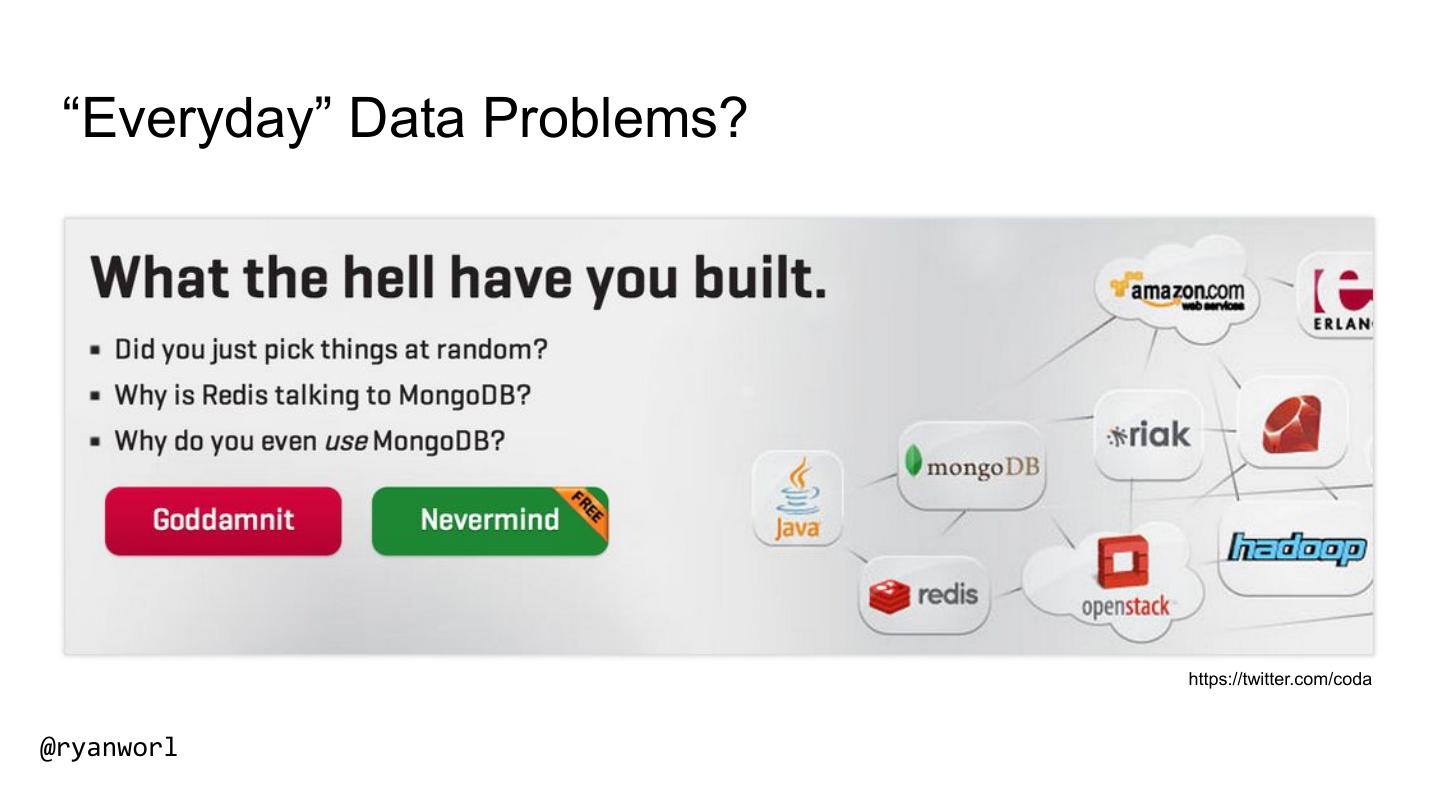

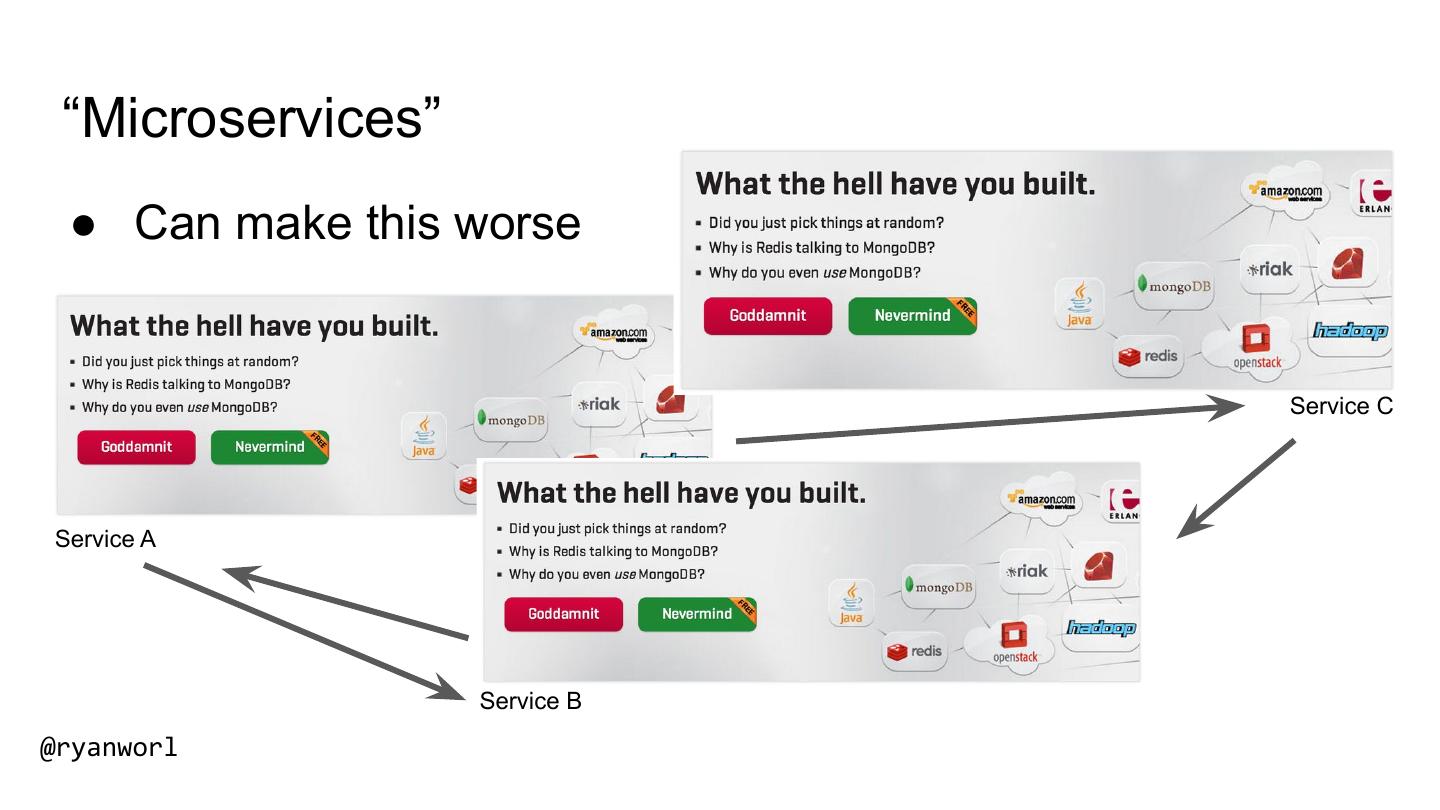

29. “Everyday” Data Problems? https://twitter.com/coda