
Application Managed Flash


1. Application-Managed Flash

Sungjin Lee, Ming Liu, Sangwoo Jun, Shuotao Xu, Jihong Kim† and Arvind

Massachusetts Institute of Technology; †Seoul National University

14th USENIX Conference on File and Storage Technologies, February 22-25, 2016

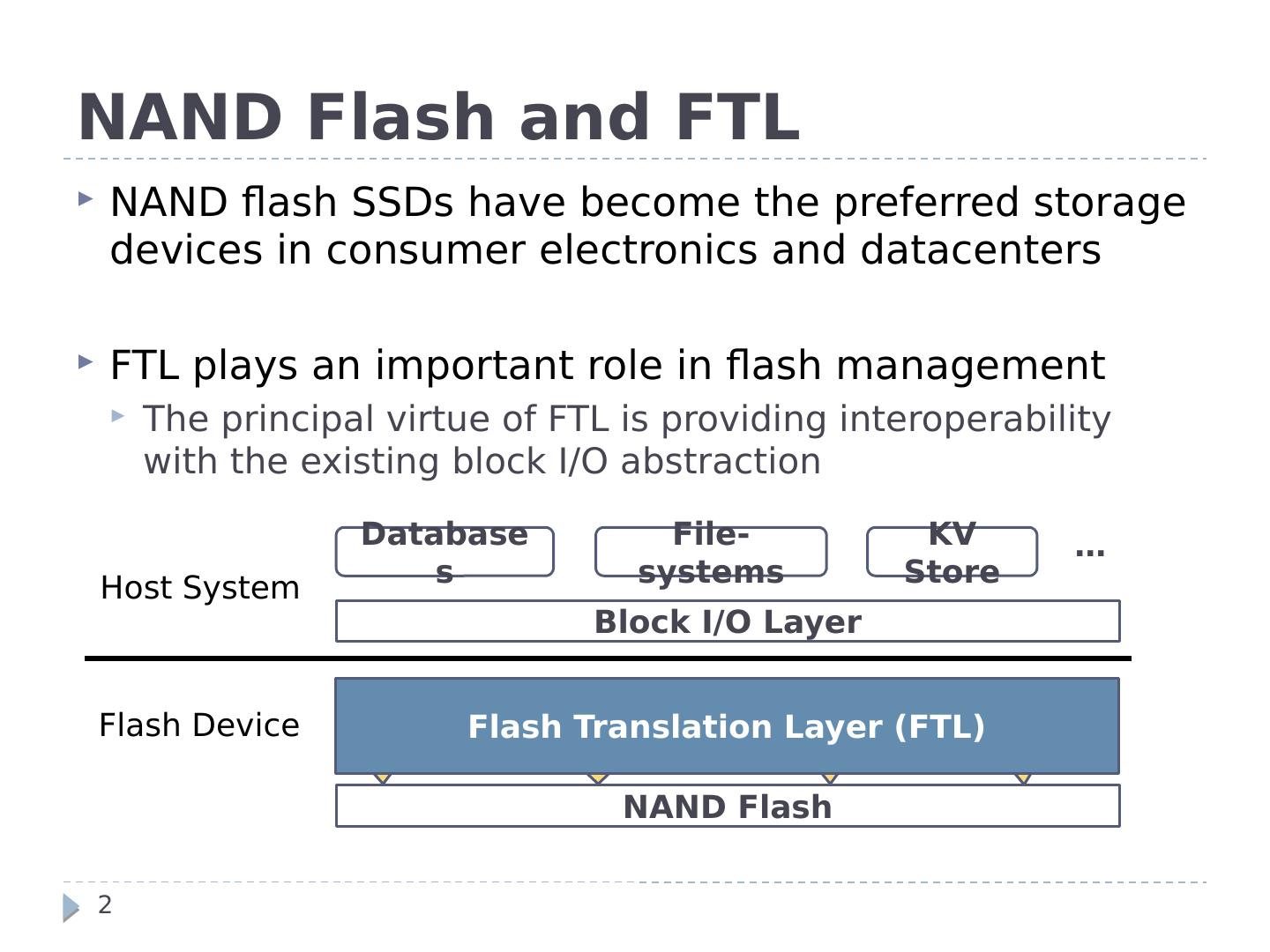

2. NAND Flash and FTL

- NAND flash SSDs have become the preferred storage devices in consumer electronics and datacenters.
- The flash translation layer (FTL) plays an important role in flash management: it deals with NAND's constraints, namely the overwriting restriction, limited P/E cycles, bad blocks, and asymmetric I/O operations.
- The principal virtue of the FTL is that it provides interoperability with the existing block I/O abstraction.

[Figure: host applications (databases, file systems, KV stores, …) sit on the block I/O layer of the host system; the flash device runs the FTL on top of NAND flash.]

3. FTL Is a Complex Piece of Software

The FTL runs complicated firmware algorithms to avoid in-place updates and to manage unreliable NAND substrates: address remapping, garbage collection, I/O scheduling, and wear-leveling and bad-block management. As a result, it:

- requires significant hardware resources (e.g., 4 CPUs and 1-4 GB of DRAM);
- incurs extra I/Os for flash management (e.g., GC);
- badly affects the behavior of host applications.
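To make address remapping concrete, here is a toy page-level FTL in Python. It is a minimal sketch, not real firmware: the class `PageLevelFTL` and its methods are hypothetical, and garbage collection, wear-leveling, and bad-block handling are left out. It shows how an update is redirected to a fresh physical page, leaving the old copy obsolete for GC to reclaim later.

```python
class PageLevelFTL:
    """Toy page-level FTL (hypothetical): out-of-place updates through a
    logical-to-physical page map. No GC, wear-leveling, or bad blocks."""

    def __init__(self, num_blocks: int, pages_per_block: int = 2):
        self.num_pages = num_blocks * pages_per_block
        self.l2p = {}                          # logical page -> physical page
        self.valid = [False] * self.num_pages  # is each physical page still live?
        self.next_free = 0                     # next never-written physical page

    def write(self, lpn: int) -> int:
        """Write logical page `lpn` out of place; return the physical page used."""
        if self.next_free >= self.num_pages:
            raise RuntimeError("no free pages left: a real FTL would run GC here")
        old = self.l2p.get(lpn)
        if old is not None:
            self.valid[old] = False            # NAND cannot overwrite: old copy goes stale
        ppn = self.next_free
        self.next_free += 1
        self.l2p[lpn] = ppn
        self.valid[ppn] = True
        return ppn

    def read(self, lpn: int) -> int:
        """Return the physical page currently holding logical page `lpn`."""
        return self.l2p[lpn]

    def obsolete_pages(self) -> int:
        return self.next_free - sum(self.valid)


ftl = PageLevelFTL(num_blocks=4)
for lpn in (0, 1, 2):
    ftl.write(lpn)
ftl.write(1)                              # update logical page 1
print(ftl.read(1), ftl.obsolete_pages())  # prints: 3 1
```

Every obsolete page left behind this way is work that a later garbage-collection pass must pay for.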

4. ...But Its Functionality Is Mostly Useless

Many host applications (databases, file systems, KV stores, …) manage the underlying storage in a log-like manner, mostly avoiding in-place updates. These log-structured applications already perform object-to-storage remapping, versioning and cleaning, and I/O scheduling, while the FTL below them repeats similar work: address remapping, garbage collection, I/O scheduling, and wear-leveling and bad-block management.

This duplicate management not only (1) incurs serious performance penalties but also (2) wastes hardware resources.

5. Which Applications?

Examples that manage storage in a log-like manner span LSM-trees, file systems, key-value stores, databases, and storage virtualization: F2FS, WAFL, Btrfs, NILFS, RethinkDB, LevelDB, RocksDB, FlexVol, BlueSky, LogBase, Hyder, SpriteLFS, BigTable, MongoDB, Cassandra, and HDFS.

6. Question: What if we removed the FTL from storage devices and allowed host applications to manage NAND flash directly?

7. Application-Managed Flash (AMF)

Compared with the conventional stack, AMF splits the FTL's work between the host and the device:

1. The device runs only essential device-management algorithms in a light-weight FTL: it manages unreliable NAND flash and hides the internal storage architecture.
2. The host runs almost all of the complicated algorithms, reusing the ones log-structured applications already have (object-to-storage remapping, versioning and cleaning, I/O scheduling) to manage storage devices.
3. A new block I/O abstraction, AMF I/O, provided by the AMF block I/O layer, enables this separation of roles between the host and the device.

8. AMF Block I/O Abstraction (AMF I/O)

- AMF I/O is similar to a conventional block I/O interface: it exposes a linear array of fixed-size sectors (e.g., 4 KB) accessed with existing I/O primitives (e.g., READ and WRITE).
- Host applications see the same kind of logical layout as before, which minimizes changes to existing host applications.

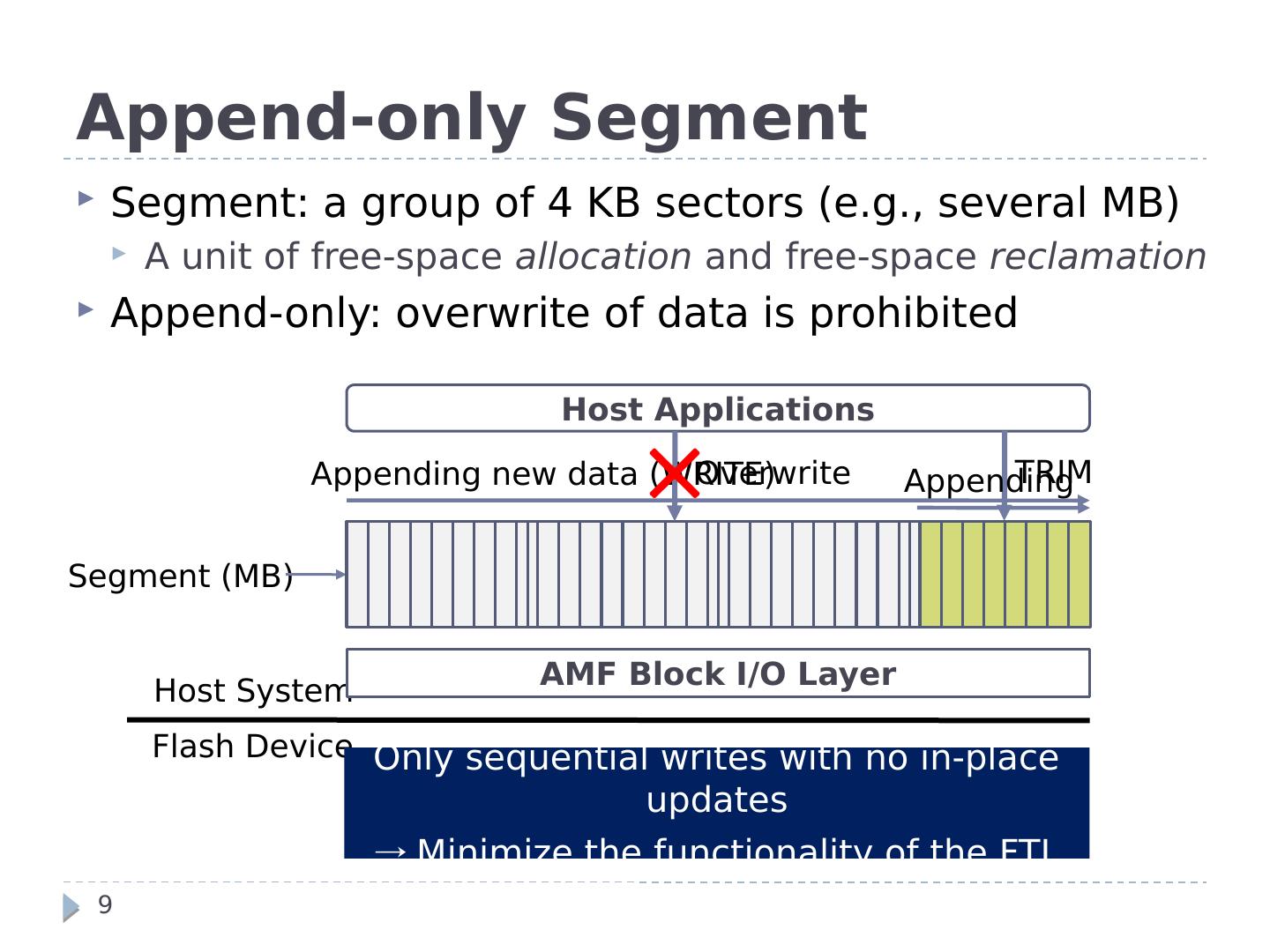
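The logical layout can be modeled as a plain sector array. This is a minimal in-memory sketch; `LinearSectorDevice` and its methods are hypothetical names, not the AMF block I/O layer's actual API. It captures only what the slide states: fixed 4 KB sectors, addressed linearly and accessed with READ and WRITE.

```python
SECTOR_SIZE = 4096  # fixed-size 4 KB sectors


class LinearSectorDevice:
    """Hypothetical in-memory model of a linear array of fixed-size sectors."""

    def __init__(self, num_sectors: int):
        self.num_sectors = num_sectors
        self.sectors = {}  # sector number -> bytes; unwritten sectors are absent

    def _check(self, sector: int) -> None:
        if not 0 <= sector < self.num_sectors:
            raise IndexError(f"sector {sector} is out of range")

    def write(self, sector: int, data: bytes) -> None:
        """WRITE exactly one sector."""
        self._check(sector)
        if len(data) != SECTOR_SIZE:
            raise ValueError("a write must cover exactly one sector")
        self.sectors[sector] = bytes(data)

    def read(self, sector: int) -> bytes:
        """READ one sector; never-written sectors read back as zeros here."""
        self._check(sector)
        return self.sectors.get(sector, bytes(SECTOR_SIZE))


dev = LinearSectorDevice(num_sectors=8)
dev.write(3, b"x" * SECTOR_SIZE)
first_byte = dev.read(3)[:1]
```

Because the addressing and primitives match a conventional block device, existing applications can keep issuing the same kinds of requests; the append-only segment rule on the next slide adds the constraint.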

9. Append-only Segment

- Segment: a group of 4 KB sectors (e.g., several MB). It is the unit of free-space allocation and free-space reclamation.
- Append-only: overwriting data is prohibited. New data is appended to a segment with WRITE, and space is reclaimed with TRIM.
- Allowing only sequential writes with no in-place updates minimizes the functionality the FTL must provide.
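The append-only rule can be sketched as a per-segment write pointer. This is a hypothetical model, not AMF's implementation: `AppendOnlySegments` is an invented name, and the default of 1,024 sectors (4 MB) per segment is an assumption, since the slide only says "several MB". A write is accepted only at the segment's current write pointer, so overwrites and gaps are both rejected, and TRIM frees a whole segment at once.

```python
SECTORS_PER_SEGMENT = 1024  # assumed: 1,024 x 4 KB = 4 MB segments


class AppendOnlySegments:
    """Hypothetical model of append-only segments over a linear sector space."""

    def __init__(self, num_segments: int, sectors_per_segment: int = SECTORS_PER_SEGMENT):
        self.sectors_per_segment = sectors_per_segment
        self.write_ptr = [0] * num_segments  # next writable offset per segment
        self.data = {}                       # sector number -> payload

    def locate(self, sector: int) -> tuple:
        """Map a linear sector number to (segment, offset within segment)."""
        return divmod(sector, self.sectors_per_segment)

    def write(self, sector: int, payload: bytes) -> None:
        seg, off = self.locate(sector)
        if off != self.write_ptr[seg]:
            # off < write_ptr would overwrite data; off > write_ptr would leave a gap.
            # A full segment has write_ptr == sectors_per_segment, so it rejects all writes.
            raise PermissionError(f"non-sequential write: segment {seg}, offset {off}")
        self.data[sector] = payload
        self.write_ptr[seg] += 1

    def read(self, sector: int) -> bytes:
        return self.data[sector]

    def trim(self, seg: int) -> None:
        """Reclaim an entire segment; it can then be refilled from offset 0."""
        base = seg * self.sectors_per_segment
        for s in range(base, base + self.write_ptr[seg]):
            self.data.pop(s, None)
        self.write_ptr[seg] = 0


dev = AppendOnlySegments(num_segments=2, sectors_per_segment=4)
dev.write(0, b"A")
dev.write(1, b"B")
try:
    dev.write(0, b"A2")              # in-place update
except PermissionError as err:
    print("rejected:", err)
dev.trim(0)                          # free segment 0 as a whole
dev.write(0, b"A2")                  # appending from offset 0 is allowed again
```

Keeping a single write pointer per segment is what lets the device avoid per-page bookkeeping: within a segment, everything below the pointer is written and everything above it is free.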

10. Case Study with AMF

The candidates are the same as on slide 5: LSM-trees, file systems, key-value stores, databases, and storage virtualization. This talk presents the file-system case study.

11. Case Study with File System

- Host: the AMF Log-structured File System (ALFS), based on F2FS, performs object-to-storage remapping, versioning and cleaning, and I/O scheduling, and talks to the device through the AMF block I/O layer.
- Device: the AMF Flash Translation Layer (AFTL) performs only segment-level address remapping plus wear-leveling and bad-block management on NAND flash.
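Because the file system writes segments sequentially and reclaims them whole, the device-side map can be kept per segment. The sketch below is hypothetical, not the AFTL source: it maps each logical segment to a physical segment, uses least-erased-first allocation as a stand-in wear-leveling policy, and skips segments marked bad. Erasure on TRIM is modeled only as a counter.

```python
class SegmentMappedFTL:
    """Hypothetical segment-level FTL: one map entry per segment, with a simple
    least-erased-first allocation (wear-leveling) and bad-segment avoidance."""

    def __init__(self, num_physical: int, bad=()):
        self.erase_count = [0] * num_physical
        self.bad = set(bad)
        self.free = {p for p in range(num_physical) if p not in self.bad}
        self.l2p = {}  # logical segment -> physical segment

    def physical_for_write(self, lseg: int) -> int:
        """Return the physical segment backing `lseg`, allocating one if needed."""
        if lseg not in self.l2p:
            if not self.free:
                raise RuntimeError("no free physical segment")
            # Assumed wear-leveling policy: least-erased free segment, lowest id on ties.
            p = min(self.free, key=lambda s: (self.erase_count[s], s))
            self.free.remove(p)
            self.l2p[lseg] = p
        return self.l2p[lseg]

    def trim(self, lseg: int) -> None:
        """Segment-level TRIM: erase the physical segment and make it free again."""
        p = self.l2p.pop(lseg)
        self.erase_count[p] += 1
        self.free.add(p)


aftl = SegmentMappedFTL(num_physical=4, bad={1})
print(aftl.physical_for_write(10), aftl.physical_for_write(11))  # 0 2 (segment 1 is bad)
aftl.trim(10)
print(aftl.physical_for_write(12))  # 3: segment 0 now has a higher erase count
```

With one entry per segment rather than per page, such a map can be far smaller than a page-level one.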

12. AMF Log-structured File System (ALFS)

ALFS is based on the F2FS file system. How did we modify F2FS for ALFS?

- Eliminate in-place updates: F2FS overwrites check-point and inode-map blocks.
- Change the TRIM policy: F2FS issues TRIM to individual sectors.

How much new code was added? 1,300 lines.

[Figure: a comparison of source-code lines of F2FS and ALFS]
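One way to picture eliminating in-place updates for check-points: instead of rewriting a fixed check-point block, append a new versioned record each time and let recovery pick the newest intact one. This is a hypothetical sketch under that assumption, not the ALFS code; `CheckpointLog`, the version field, and the CRC-32 check are illustrative choices.

```python
import zlib
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class CheckpointRecord:
    version: int
    payload: bytes
    crc: int  # CRC-32 of payload, used to detect a torn (partially written) record


class CheckpointLog:
    """Hypothetical append-only check-point area: records are never overwritten."""

    def __init__(self, capacity: int):
        self.capacity = capacity  # records that fit in the check-point segment
        self.records: List[CheckpointRecord] = []

    def append(self, payload: bytes) -> int:
        if len(self.records) >= self.capacity:
            raise RuntimeError("check-point segment full: clean and TRIM it first")
        version = self.records[-1].version + 1 if self.records else 1
        self.records.append(CheckpointRecord(version, payload, zlib.crc32(payload)))
        return version

    def latest(self) -> Optional[CheckpointRecord]:
        """Recovery: the newest record whose checksum still matches wins."""
        intact = [r for r in self.records if zlib.crc32(r.payload) == r.crc]
        return max(intact, key=lambda r: r.version, default=None)


log = CheckpointLog(capacity=4)
log.append(b"cp-1")
log.append(b"cp-2")
print(log.latest().version, len(log.records))  # 2 2: the old check-point is kept, not overwritten
```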

13. How Conventional LFS (F2FS) Works

[Figure: an LFS running on a page-level FTL (PFTL). The LFS layout consists of a check-point segment, an inode-map segment, and data segments 0-2; in flash, each block holds 2 pages.]

14. How Conventional LFS (F2FS) Works

The LFS writes data pages A, B, C, D, and E, then writes a new version of B along with F and G, which leaves the old B invalid. The check-point (CP) and inode-map (IM #0) blocks, however, are overwritten in place.

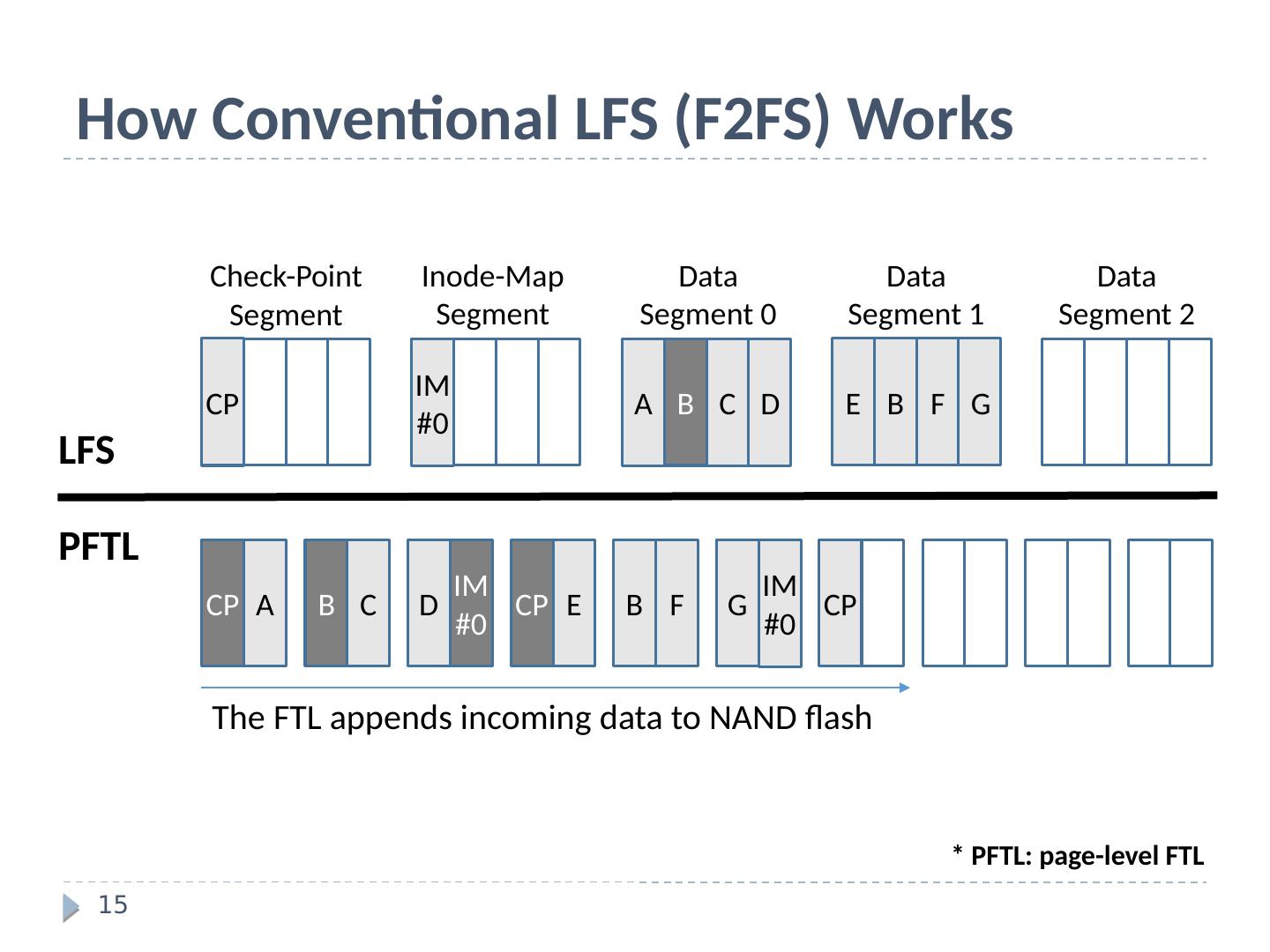

15. How Conventional LFS (F2FS) Works

The PFTL appends all incoming data to NAND flash, including the rewritten CP and IM #0 blocks, whose previous copies become obsolete.

16. How Conventional LFS (F2FS) Works

The FTL triggers garbage collection and relocates the valid pages A, C, D, and E: 4 page copies and 4 block erasures.
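FTL garbage collection can be sketched with greedy victim selection: reclaim the blocks with the fewest valid pages, copy those pages elsewhere, and erase the blocks. This is a generic policy assumed for illustration; the slide does not say which victim-selection policy the PFTL uses, and the function name and block layout are hypothetical. Each relocated page costs one copy and each victim one erasure, which is how the costs on this slide are counted.

```python
def greedy_gc(blocks: dict, victims: int):
    """Reclaim `victims` blocks, choosing those with the fewest valid pages.

    `blocks` maps block id -> list of pages; a page is a logical name if valid
    or None if obsolete. Returns (pages to rewrite, page copies, block erasures).
    Writing the relocated pages into free blocks is left out of this sketch.
    """
    def valid_count(b):
        return sum(p is not None for p in blocks[b])

    order = sorted(blocks, key=lambda b: (valid_count(b), b))
    relocated, erasures = [], 0
    for b in order[:victims]:
        relocated.extend(p for p in blocks[b] if p is not None)  # one copy per valid page
        blocks[b] = []                                           # erased, free for reuse
        erasures += 1
    return relocated, len(relocated), erasures


flash = {0: ["A", None], 1: ["C", "D"], 2: [None, None], 3: ["E", None]}
print(greedy_gc(flash, victims=2))  # (['A'], 1, 2)
```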

17. How Conventional LFS (F2FS) Works

The LFS then triggers its own garbage collection: it copies the valid pages A, C, and D to a new location and issues TRIM for their old locations, at a cost of 3 page copies.

18. How ALFS Works

[Figure: ALFS running on AFTL, with the same layout (check-point segment, inode-map segment, data segments 0-2); each segment consists of 2 flash blocks.]

19. How ALFS Works

ALFS appends the check-point (CP) and inode-map (IM #0) blocks to their segments instead of overwriting them, just like the data pages. With no in-place updates, no obsolete pages are created for the device to collect, so GC in the FTL is not necessary.

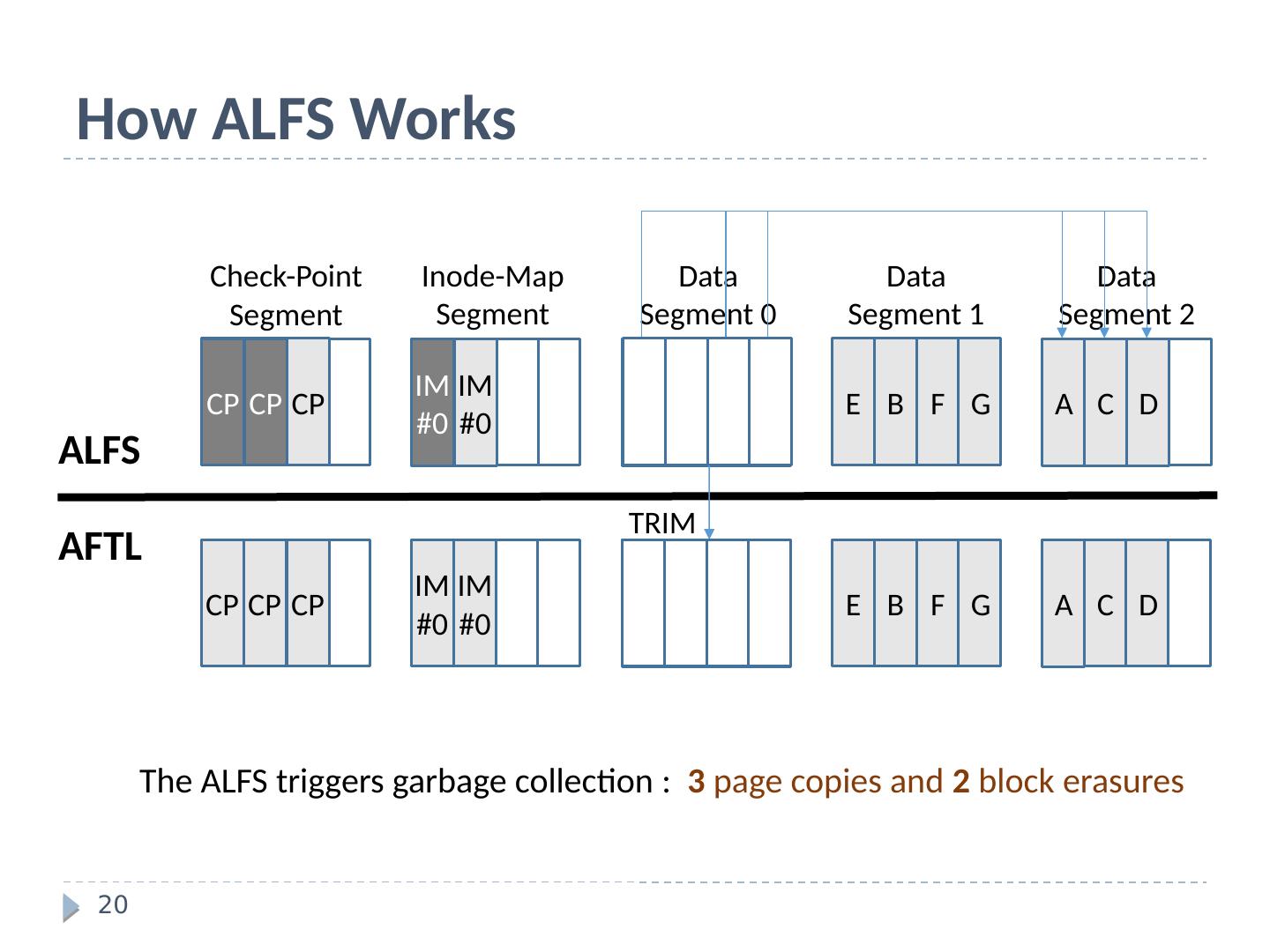

20. How ALFS Works

When ALFS triggers garbage collection, it copies the valid pages A, C, and D out of data segment 0 and TRIMs the whole segment, so the AFTL can erase its flash blocks: 3 page copies and 2 block erasures.

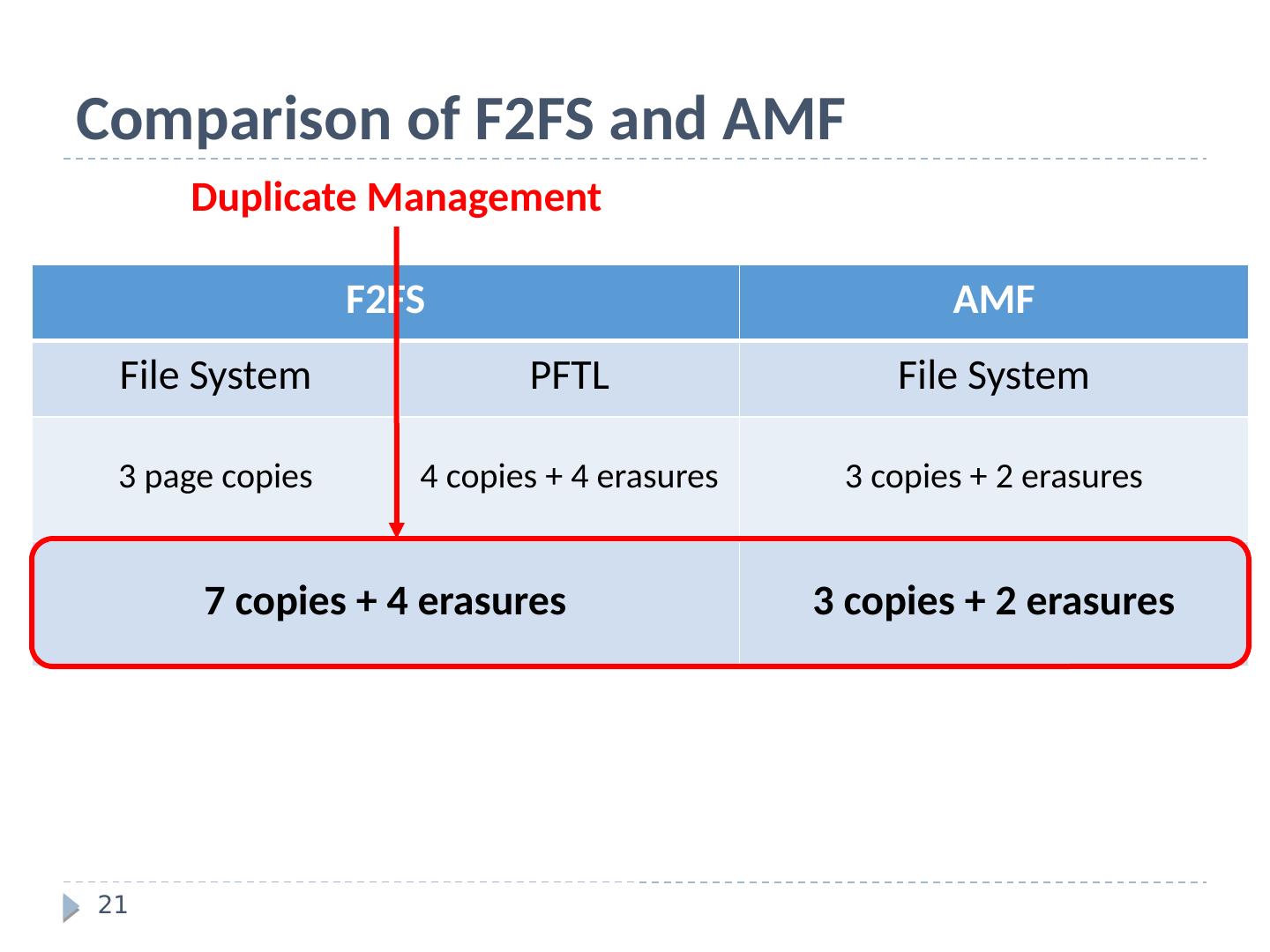
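The ALFS cleaning step can be sketched like the FTL case, except that the victim is a whole segment and one segment-level TRIM lets the device erase every flash block in it. This hypothetical sketch assumes the layout from the walk-through: data segment 0 spans 2 flash blocks holding A, B, C, D, with B obsolete after its update. It reproduces the 3 page copies and 2 block erasures on the slide; the function name is illustrative.

```python
def clean_segment(victim):
    """Clean one segment on AMF (hypothetical sketch).

    `victim` is the segment's list of flash blocks; each block is a list of
    pages, a logical name if valid or None if obsolete. Valid pages are
    re-appended by the file system (one copy each); the segment is then
    TRIMmed as a unit, so the device erases all of its blocks without
    copying any pages itself.
    """
    survivors = [p for block in victim for p in block if p is not None]
    erasures = len(victim)  # segment-level TRIM -> every block in it is erased
    return survivors, len(survivors), erasures


data_segment_0 = [["A", None], ["C", "D"]]  # B was rewritten in data segment 1
print(clean_segment(data_segment_0))  # (['A', 'C', 'D'], 3, 2)
```

On the conventional stack, the same cleanup is paid twice: once by the LFS and again by the PFTL's own garbage collection.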

21. Comparison of F2FS and AMF

| Layer | F2FS | AMF |
|---|---|---|
| File system | 3 page copies | 3 copies + 2 erasures |
| FTL | 4 copies + 4 erasures (PFTL) | - |
| Total | 7 copies + 4 erasures | 3 copies + 2 erasures |

The extra cost in F2FS comes from duplicate management.

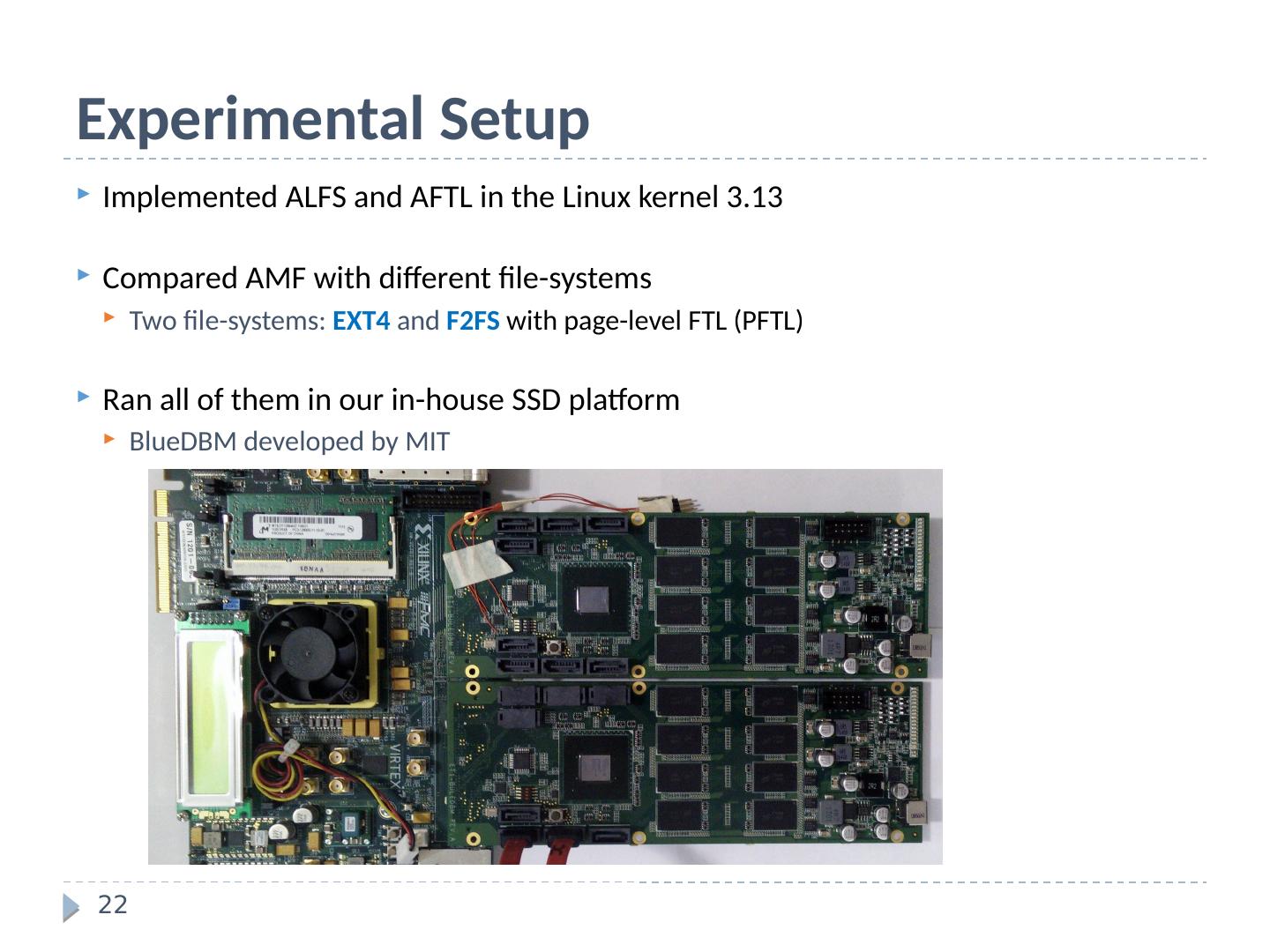

22. Experimental Setup

- Implemented ALFS and AFTL in Linux kernel 3.13.
- Compared AMF with two file systems, EXT4 and F2FS, each running on a page-level FTL (PFTL).
- Ran all of them on BlueDBM, our in-house SSD platform developed at MIT.

23. Performance with FIO

- For random writes, AMF shows better throughput.
- F2FS is badly affected by the duplicate management problem.

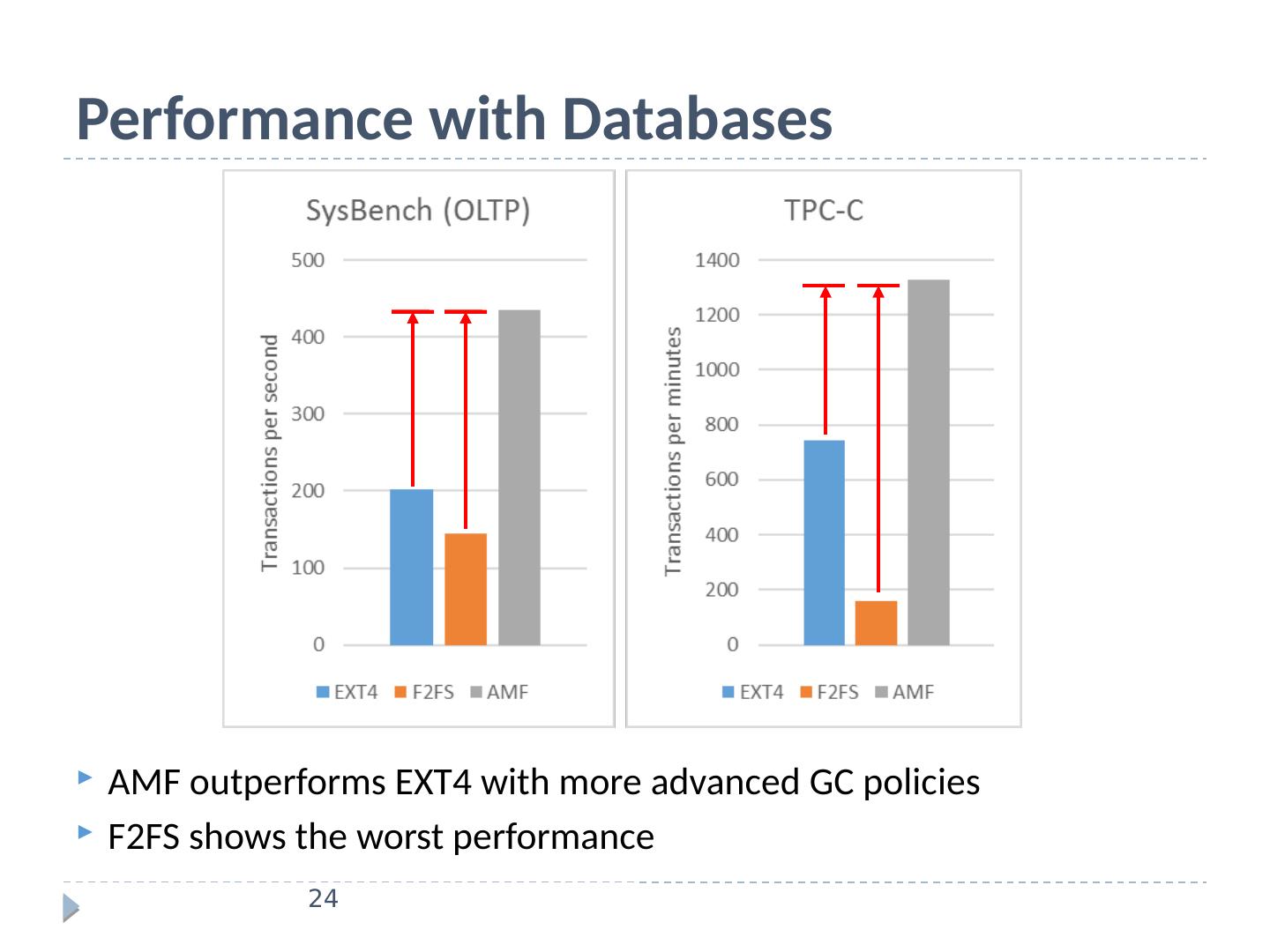

24. Performance with Databases

- AMF, with its more advanced GC policies, outperforms EXT4.
- F2FS shows the worst performance.

25. Erasure Counts

On average, AMF achieves 6% and 37% longer lifetimes than EXT4 and F2FS, respectively.

26. Resource (DRAM & CPU)

FTL mapping table size:

| SSD capacity | Block-level FTL | Hybrid FTL | Page-level FTL | AMF |
|---|---|---|---|---|
| 512 GB | 4 MB | 96 MB | 512 MB | 4 MB |
| 1 TB | 8 MB | 186 MB | 1 GB | 8 MB |

[Figure: host CPU usage]
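The page-level and block-level rows follow from simple arithmetic: table size = (capacity / mapping unit) × entry size. The sketch below assumes 4-byte entries, binary units (GB read as GiB), a 4 KB mapping unit for the page-level FTL, and a 512 KB unit for the block-level FTL and AMF. These parameters are assumptions chosen because they reproduce the table, not values stated on the slide. The hybrid FTL row depends on its log-block configuration and is not modeled. The same numbers give the 128X DRAM reduction quoted in the conclusion.

```python
KiB, MiB, GiB = 1 << 10, 1 << 20, 1 << 30
ENTRY_BYTES = 4  # assumed size of one mapping entry


def mapping_table_bytes(capacity: int, unit: int, entry_bytes: int = ENTRY_BYTES) -> int:
    """One entry per mapping unit (page, block, or segment-sized chunk)."""
    return (capacity // unit) * entry_bytes


for capacity in (512 * GiB, 1024 * GiB):
    page_level = mapping_table_bytes(capacity, 4 * KiB)   # assumed 4 KB pages
    coarse = mapping_table_bytes(capacity, 512 * KiB)     # assumed 512 KB unit
    print(f"{capacity // GiB} GB: page-level {page_level // MiB} MB, "
          f"block-level/AMF {coarse // MiB} MB, ratio {page_level // coarse}x")
```

Both capacities give a ratio of 128x, because the ratio depends only on the two mapping-unit sizes (512 KB / 4 KB), not on the capacity.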

27. Conclusion

- We proposed the Application-Managed Flash (AMF) architecture. AMF is based on a new block I/O interface, called AMF I/O, which exposes flash storage as append-only segments.
- Based on AMF I/O, we implemented a new FTL scheme (AFTL) and a new file system (ALFS) in the Linux kernel and evaluated them on our in-house SSD prototype.
- Our results show that AMF reduces the DRAM in the flash controller by 128X and improves performance by 80%.

Future work: we are doing case studies with key-value stores, database systems, and storage virtualization platforms.

28. Source Code

All of the software and hardware is being developed under the GPL license. Please refer to our Git repositories:

- Hardware platform: https://github.com/sangwoojun/bluedbm.git
- FTL: https://github.com/chamdoo/bdbm_drv.git
- File system: https://bitbucket.org/chamdoo/risa-f2fs

Thank you!