Introduction to TensorFlow

1 . TensorFlow in Practice, by Donghwi Cha, with the help of engineering

2 . Summary

- An introduction to TensorFlow
- Goal
  - Get used to the terms
  - Learn the basics of its structure
  - Catch up on the basic pillars of TensorFlow
- This document
  - Based on web searches and the TensorFlow documentation (www.tensorflow.org)

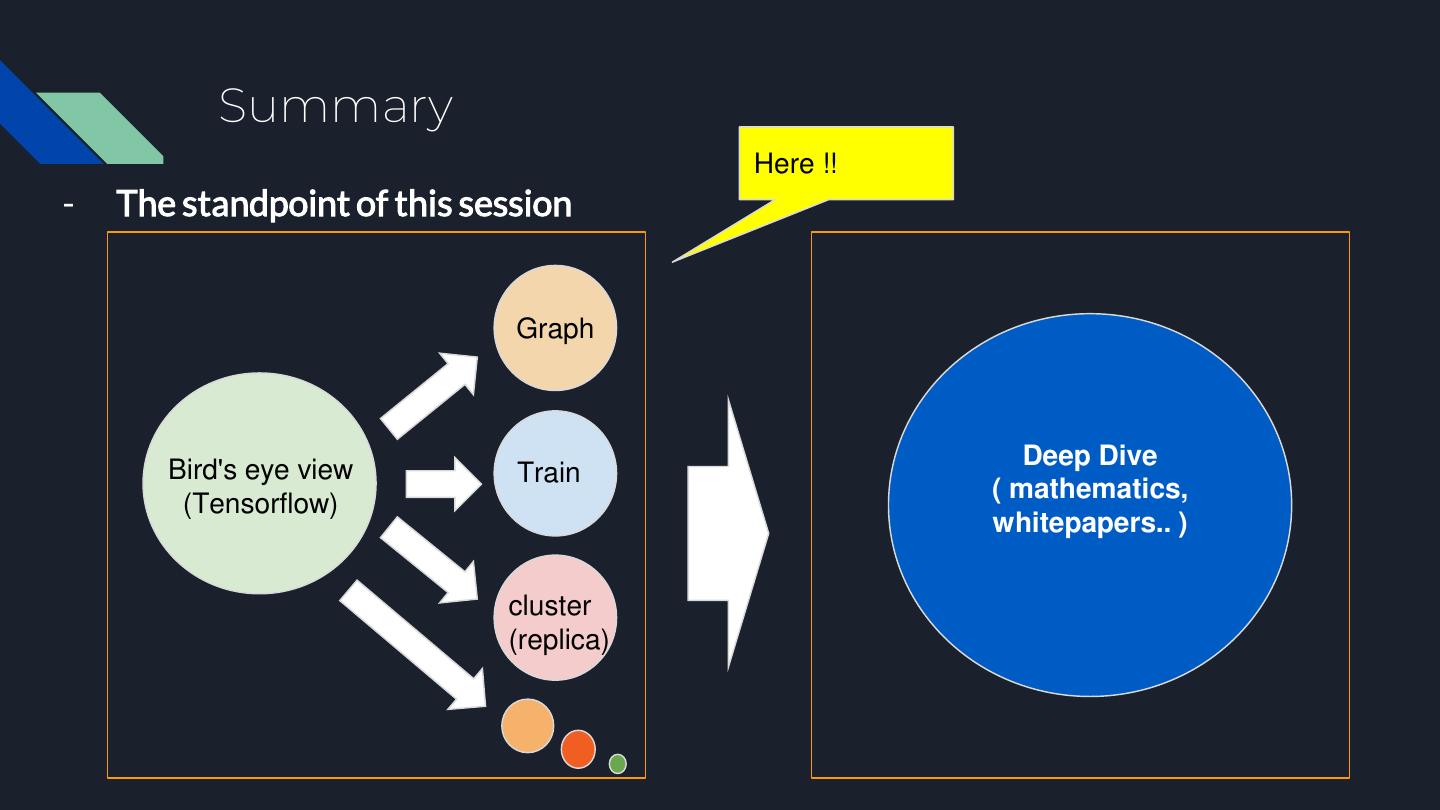

3 . Summary: the standpoint of this session

[Diagram labels: Graph, Train, cluster (replica); a scale running from a bird's-eye view to a deep dive (mathematics, TensorFlow whitepapers, ...); a "Here !!" marker shows where this session stands.]

4 . Short intro

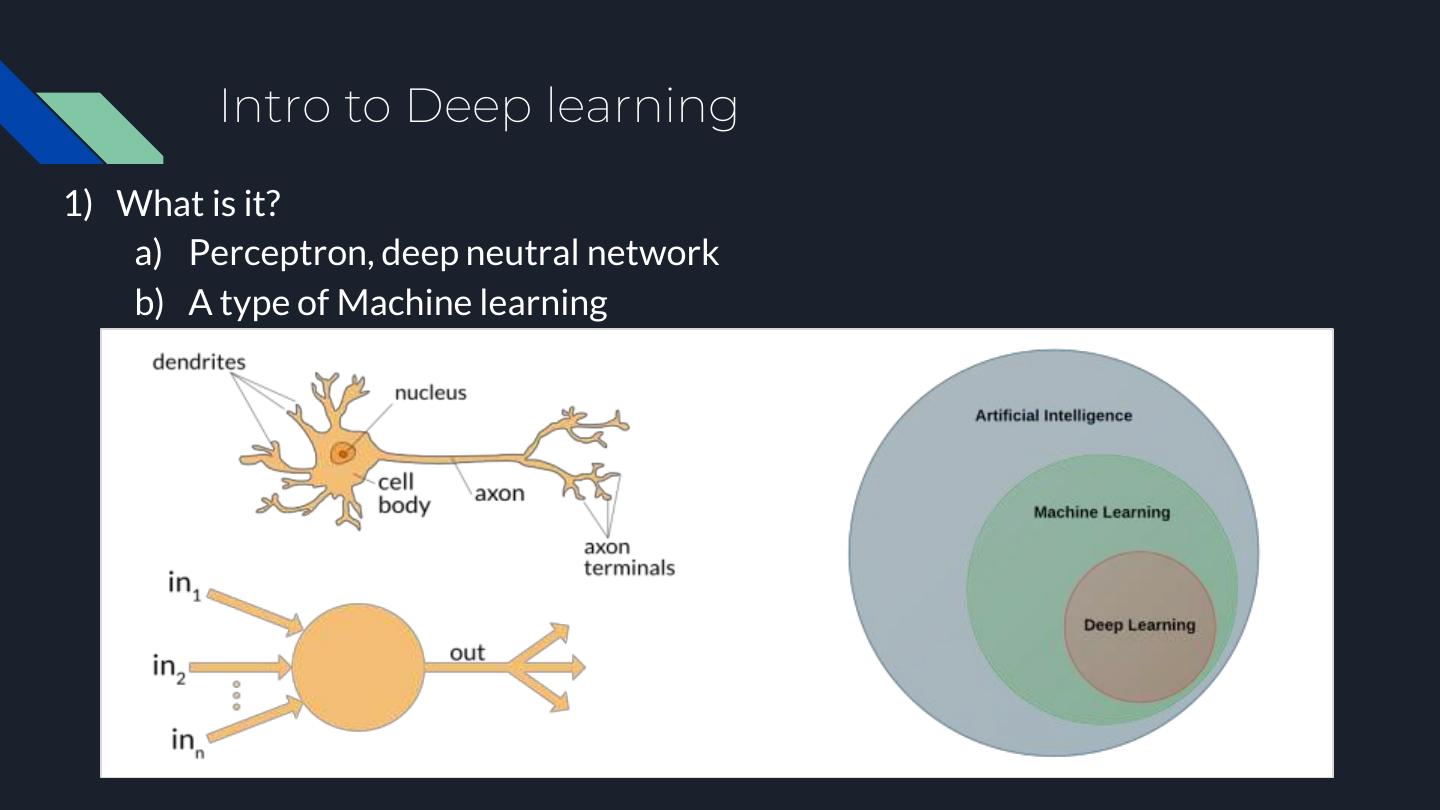

5 . Intro to deep learning

1) What is it?
   a) Perceptron, deep neural network
   b) A type of machine learning

6 . TensorFlow as a computation graph engine

7 .

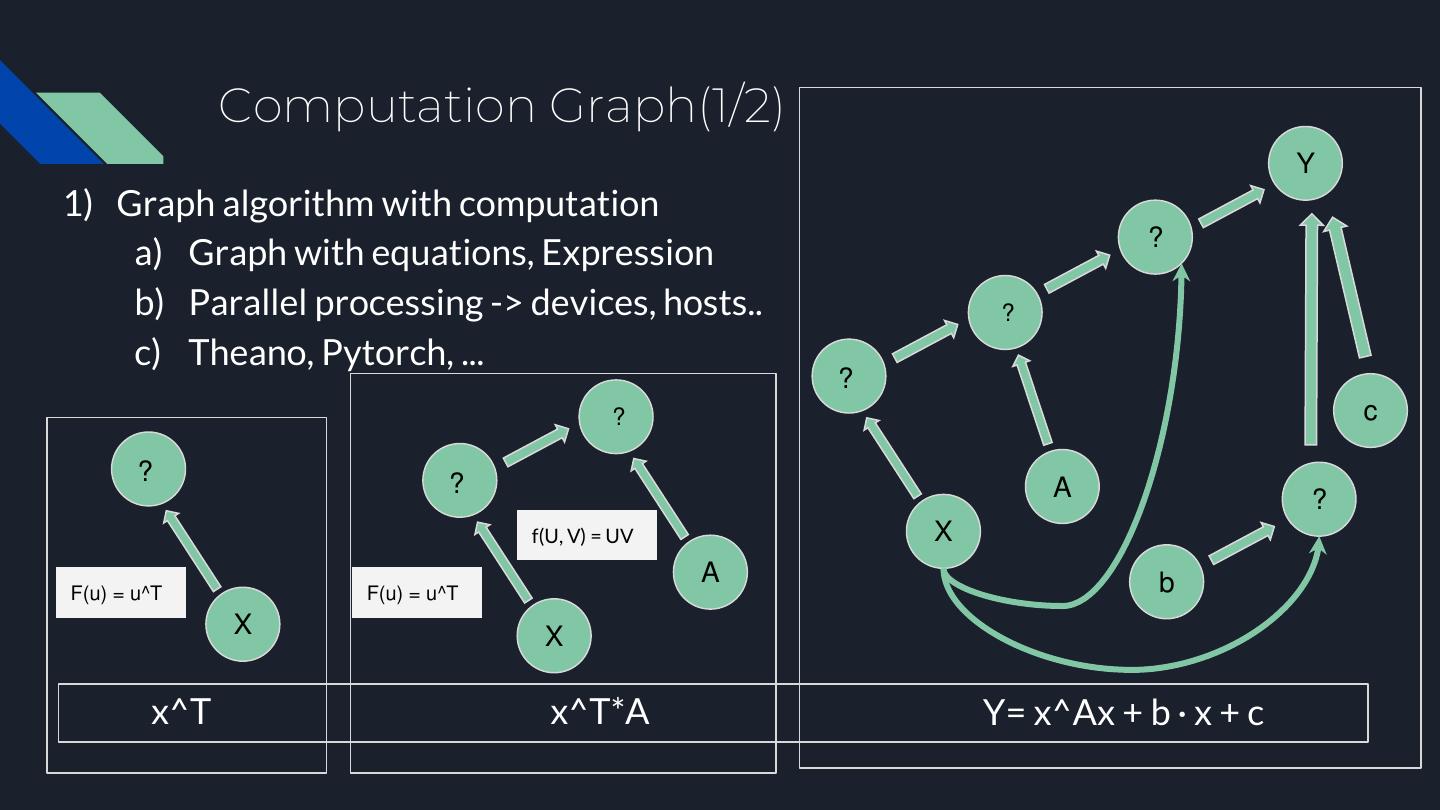

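8 . Computation Graph (1/2)

1) A graph algorithm with computation?
   a) A graph built from equations and expressions
   b) Parallel processing across devices, hosts, ...
   c) Also used by Theano, PyTorch, ...

[Diagram: computation graph for $Y = x^{T} A x + b \cdot x + c$. The inputs x, A, b, and c feed the nodes $F(u) = u^{T}$ (producing $x^{T}$) and $f(U, V) = UV$ (producing $x^{T} A$), which combine into Y.]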
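The expression on this slide can be evaluated as a small graph of nodes. The following is a minimal sketch in plain Python, not TensorFlow code: the `Node` class, the helper functions, and the input values are all made up for illustration.

```python
class Node:
    """One vertex of the graph: an operation plus the nodes feeding it."""

    def __init__(self, op, *inputs):
        self.op = op
        self.inputs = inputs

    def evaluate(self):
        # Evaluate the input nodes first, then apply this node's operation.
        return self.op(*(node.evaluate() for node in self.inputs))


def constant(value):
    """Leaf node that simply returns a fixed value."""
    return Node(lambda: value)


def dot(u, v):
    """Inner product of two vectors."""
    return sum(ui * vi for ui, vi in zip(u, v))


def vec_mat(u, m):
    """Row vector times matrix: (u^T M)_j = sum_i u_i * M[i][j]."""
    return [sum(u[i] * m[i][j] for i in range(len(u))) for j in range(len(m[0]))]


# Illustrative inputs (assumed values, not taken from the slides).
x = constant([1.0, 2.0])
A = constant([[1.0, 0.0], [0.0, 3.0]])
b = constant([0.5, -1.0])
c = constant(4.0)

xT_A = Node(vec_mat, x, A)        # x^T A
quadratic = Node(dot, xT_A, x)    # x^T A x
linear = Node(dot, b, x)          # b . x
Y = Node(lambda q, l, k: q + l + k, quadratic, linear, c)

print(Y.evaluate())  # 15.5
```

Nothing is computed while the graph is being built; the arithmetic happens only inside `evaluate()`, which mirrors the define-then-run style described later for sessions.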

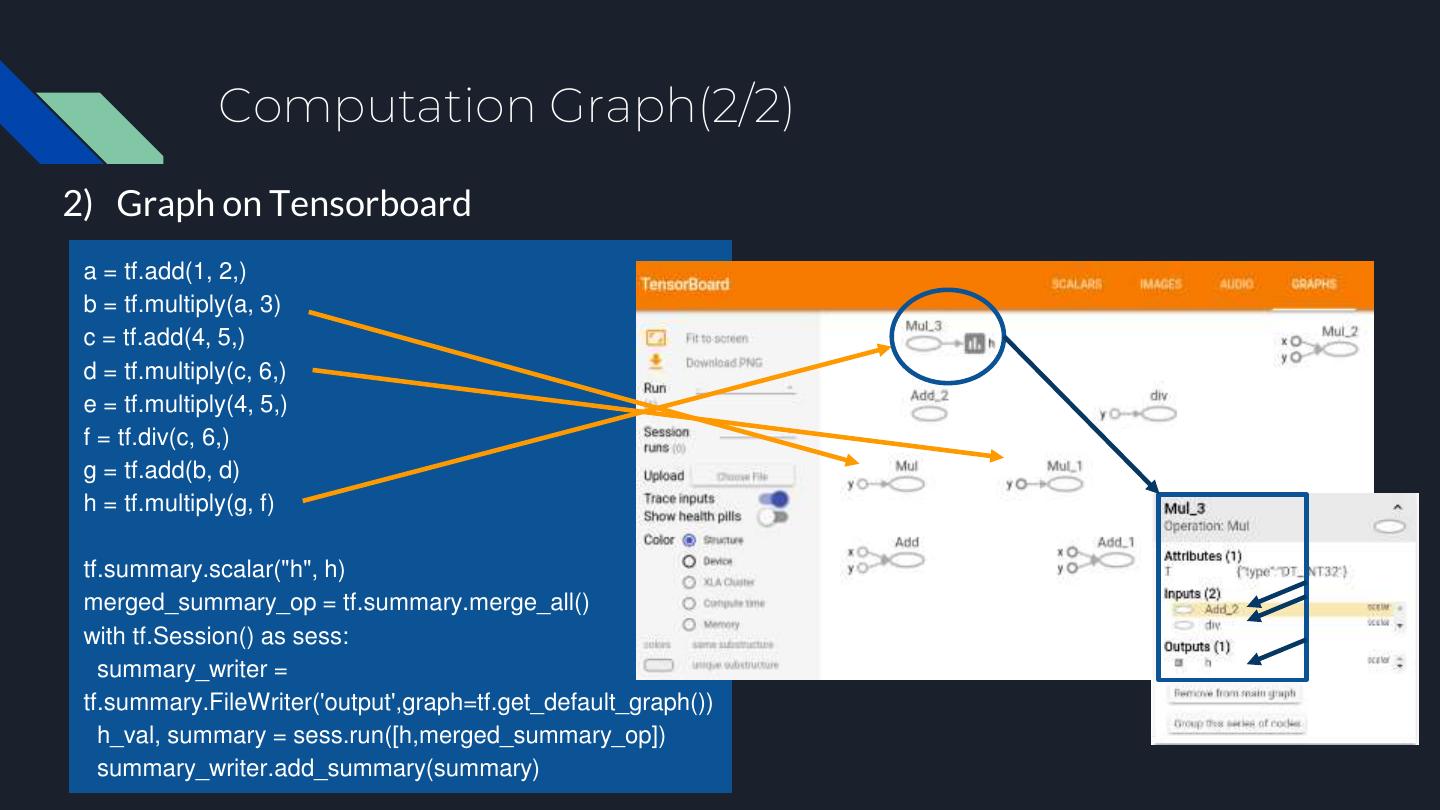

9 . Computation Graph (2/2)

2) Graph on TensorBoard

Example:

    import tensorflow as tf  # TensorFlow 1.x API

    a = tf.add(1, 2)
    b = tf.multiply(a, 3)
    c = tf.add(4, 5)
    d = tf.multiply(c, 6)
    e = tf.multiply(4, 5)  # not used by h, but still appears in the graph
    f = tf.div(c, 6)
    g = tf.add(b, d)
    h = tf.multiply(g, f)

    tf.summary.scalar("h", h)
    merged_summary_op = tf.summary.merge_all()

    with tf.Session() as sess:
        # Write the default graph so that TensorBoard can display it.
        summary_writer = tf.summary.FileWriter('output', graph=tf.get_default_graph())
        h_val, summary = sess.run([h, merged_summary_op])
        summary_writer.add_summary(summary)
        summary_writer.close()

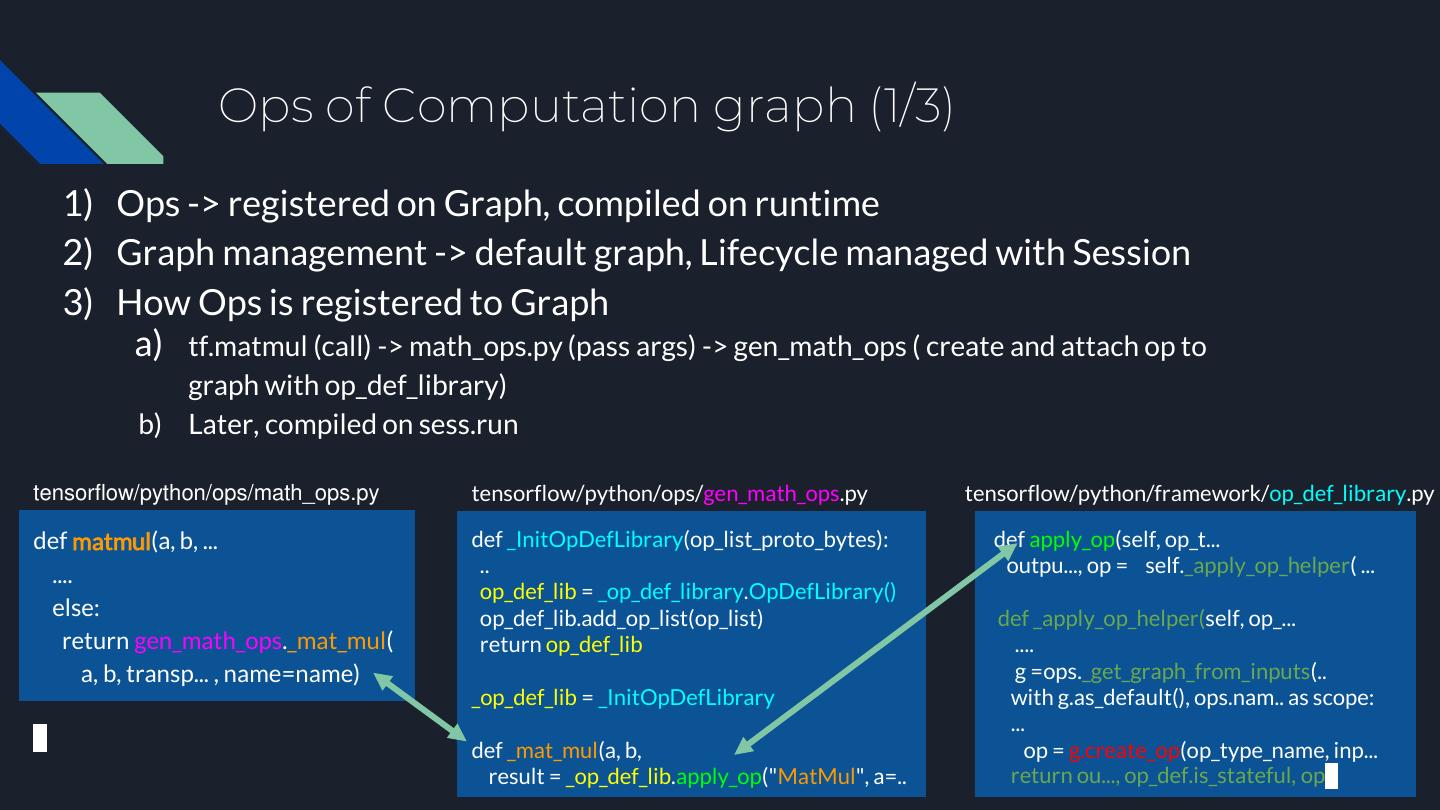

10 . Ops of Computation graph (1/3)

1) Ops are registered on the Graph and compiled at run time
2) Graph management: there is a default graph, and its lifecycle is managed with the Session
3) How an Op is registered to the Graph
   a) tf.matmul (call) -> math_ops.py (passes the arguments) -> gen_math_ops (creates the op and attaches it to the graph with op_def_library)
   b) Later, compiled on sess.run

tensorflow/python/ops/math_ops.py

    def matmul(a, b, ...
        ....
        return gen_math_ops._mat_mul(
            a, b, transp... , name=name)

tensorflow/python/ops/gen_math_ops.py

    def _InitOpDefLibrary(op_list_proto_bytes):
        ..
        op_def_lib = _op_def_library.OpDefLibrary()
        op_def_lib.add_op_list(op_list)
        return op_def_lib

    _op_def_lib = _InitOpDefLibrary ....

    def _mat_mul(a, b, ...
        result = _op_def_lib.apply_op("MatMul", a=..

tensorflow/python/framework/op_def_library.py

    def apply_op(self, op_t...
        outpu..., op = self._apply_op_helper( ...
        else:
            ...

    def _apply_op_helper(self, op_...
        g = ops._get_graph_from_inputs(..
        with g.as_default(), ops.nam.. as scope:
            ...
            op = g.create_op(op_type_name, inp...
            return ou..., op_def.is_stateful, op
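The registration step can be pictured with a toy model. This is a hedged sketch in plain Python, not TensorFlow's implementation: `Graph`, `create_op`, `get_default_graph`, and `run` here are hypothetical stand-ins that only borrow the names used on the slide.

```python
class Graph:
    """Hypothetical stand-in for a graph: just an ordered list of ops."""

    def __init__(self):
        self.ops = []

    def create_op(self, op_type, inputs, compute):
        op = {"type": op_type, "inputs": inputs, "compute": compute}
        self.ops.append(op)  # registration happens here, not execution
        return op


_default_graph = Graph()


def get_default_graph():
    return _default_graph


def constant(value):
    return get_default_graph().create_op("Const", [], lambda: value)


def add(a, b):
    return get_default_graph().create_op("Add", [a, b], lambda x, y: x + y)


def multiply(a, b):
    return get_default_graph().create_op("Mul", [a, b], lambda x, y: x * y)


def run(op):
    """Evaluate an op by first evaluating the ops it depends on."""
    args = [run(dep) for dep in op["inputs"]]
    return op["compute"](*args)


a = add(constant(1), constant(2))
b = multiply(a, constant(3))

registered = [op["type"] for op in get_default_graph().ops]
print(registered)  # the ops exist in the graph before anything is computed
print(run(b))      # values are produced only when run() is called
```

Calling `add` or `multiply` only appends a record to the default graph, which is the toy counterpart of a Python wrapper attaching an op to the graph before `sess.run` executes it.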

11 . Ops of Computation graph (2/3)

2) Kernel
   a) Kernels for 200 standard operations
   b) Sources of operation kernels in TensorFlow
      i) Eigen::Tensor
      ii) cuDNN
   c) Ops in gen_X modules: generated from C++
      i) Example
         (1) /usr/local/lib/python2.7/dist-packages/tensorflow/python/ops/gen_math_ops.py
3) Further..
   a) Static graph vs. dynamic graph (TensorFlow Fold, PyTorch, ...)

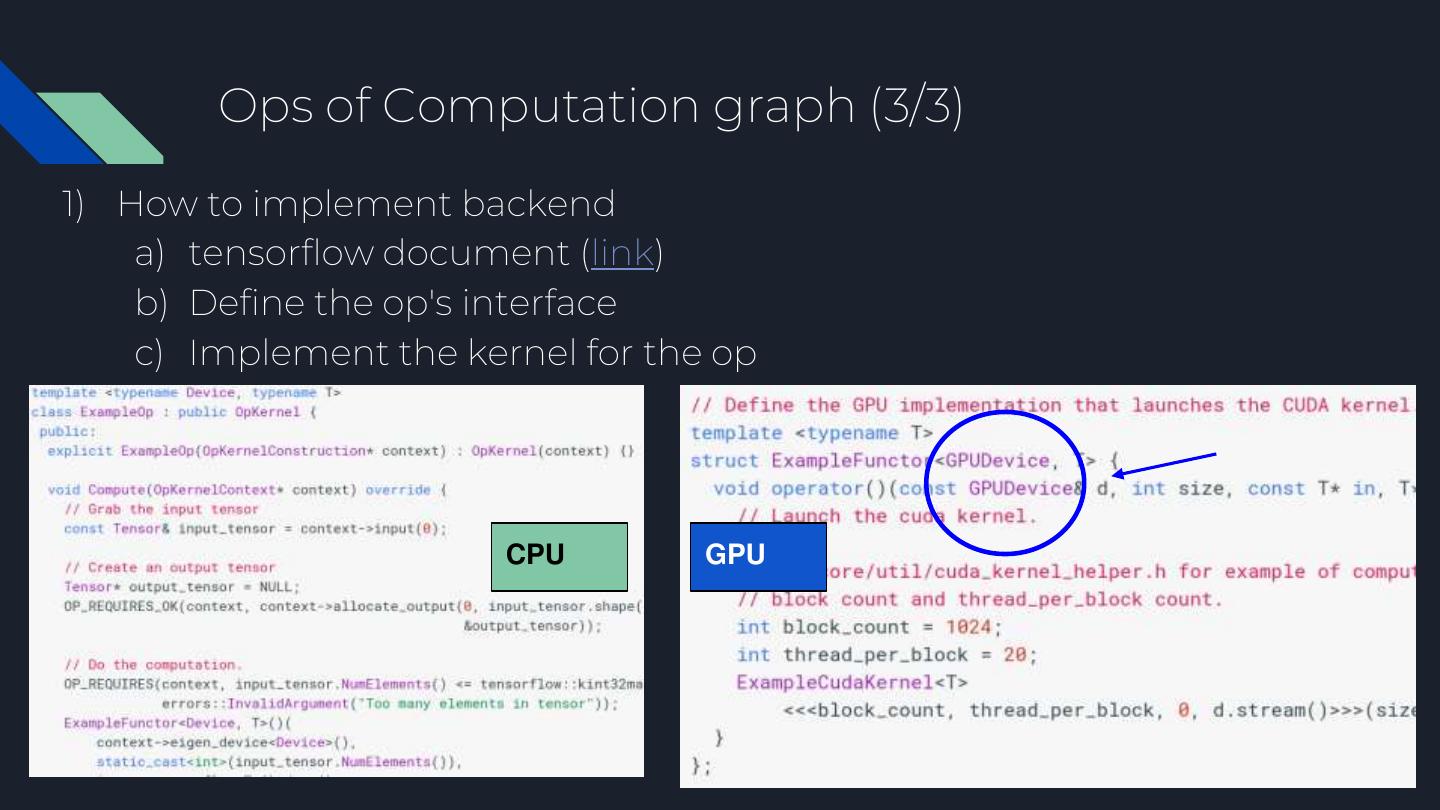

12 . Ops of Computation graph (3/3)

1) How to implement the backend
   a) TensorFlow documentation (link)
   b) Define the op's interface
   c) Implement the kernel for the op (CPU, GPU)

13 . Protocol Buffer of Graph

1) Protocol Buffer
   a) A serialization format for structured data
      i) JSON-like, but based on a binary stream, so messages are small
      ii) Made by Google
   b) Graph
      i) A protocol buffer object in which the Ops are registered
   c) Graph deserialization
      i) Can be used to serve a web service
      ii) freeze_graph (a tool)
          (1) tensorflow/python/tools/freeze_graph.py
      iii) export (a class)
          (1) saved_model_builder

14 . Tensor and tf.Tensor

1) Tensor
   a) A generalization of vectors and scalars
   b) Flows on the computation graph (ops) => TensorFlow
2) tf.Tensor
   a) tf.Tensor works like a pointer: a handle to an op's output that holds no value by itself
   b) tf.Tensor is the return type of Ops
   c) The values are calculated by the Ops' kernel implementations
3) tf.Tensor and NumPy array
   a) Both represent array values
   b) Conversion
      i) tf.Tensor -> NumPy array: supported (evaluating a tensor returns a NumPy array)
      ii) Reverse -> tf.convert_to_tensor (constant)
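To make "generalization of vectors and scalars" concrete, the sketch below computes the shape and rank of plain nested Python lists. It is only an illustration: `shape` and `rank` are hypothetical helpers, and the code assumes the nested lists are rectangular.

```python
def shape(value):
    """Return the dimensions of a rectangular nested list as a tuple."""
    dims = []
    while isinstance(value, list):
        dims.append(len(value))
        if not value:
            break  # an empty list has no first element to descend into
        value = value[0]
    return tuple(dims)


def rank(value):
    """Number of dimensions: 0 for a scalar, 1 for a vector, 2 for a matrix."""
    return len(shape(value))


scalar = 3.0
vector = [1.0, 2.0, 3.0]
matrix = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]

for name, value in [("scalar", scalar), ("vector", vector), ("matrix", matrix)]:
    print(name, "rank", rank(value), "shape", shape(value))
```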

15 . Session (1/3): how TensorFlow code is built and executed

1) Scope: Define and Run
   a) Define the Graph (prepare objects)
   b) Compute the Graph with a Session
2) Session object
   a) Context at run time
   b) tf.Operation
      i) tf.Variable().initializer
      ii) Matmul, Softmax
   c) Multiple arguments
   d) Eval method with the default session
      i) "default sess" or "y.eval(session=ss)"

Example:

    import tensorflow as tf  # TensorFlow 1.x API

    # Define the graph.
    x = tf.constant([[37.0, -23.0], [1.0, 4.0]])
    w = tf.Variable(tf.random_uniform([2, 2]))
    y = tf.matmul(x, w)
    output = tf.nn.softmax(y)
    init_op = w.initializer

    # Run the graph in a session.
    with tf.Session() as sess:
        print("-- sess.run: 1 arg --")
        sess.run(init_op)
        print(sess.run(output))

        print("-- sess.run: 2 args --")
        y_val, output_val = sess.run([y, output])
        print(y_val)
        print(output_val)

        print("-- Y Eval -- ")
        print(y.eval())
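For readers without a TensorFlow 1.x install, the arithmetic that this graph performs can be sketched in plain Python. This is an illustration only: the slide initializes `w` randomly, so the fixed `w` below is an assumed stand-in, and `matmul` and `softmax` are hand-written helpers rather than library calls.

```python
import math


def matmul(a, b):
    """Matrix product of two nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def softmax(rows):
    """Row-wise softmax; subtracting the row maximum avoids overflow."""
    result = []
    for row in rows:
        shift = max(row)
        exps = [math.exp(v - shift) for v in row]
        total = sum(exps)
        result.append([e / total for e in exps])
    return result


x = [[37.0, -23.0], [1.0, 4.0]]   # same constant as on the slide
w = [[0.1, 0.2], [0.3, 0.4]]      # assumed fixed weights instead of random ones

y = matmul(x, w)
output = softmax(y)

print(y)
print(output)  # each row sums to 1
```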

16 . Session (2/3)

3) Session class
   a) python/client/session.py module
   b) Assigned devices, Graph, run(), close()

[Class diagram: class SessionInterface(object) -> class BaseSession(SessionInterface) -> class Session(BaseSession)]

- SessionInterface: graph property and run(self, fetches, ...); the set of actions that a Session instance will do
- BaseSession: __init__(self, targe.. (set up graph, set up config, create session); list_devices(self) (return device list); graph property (return graph info); run(self,... (run based on the argument array type)
- Session: __enter__(self) and __exit__(self, ...) (context management)

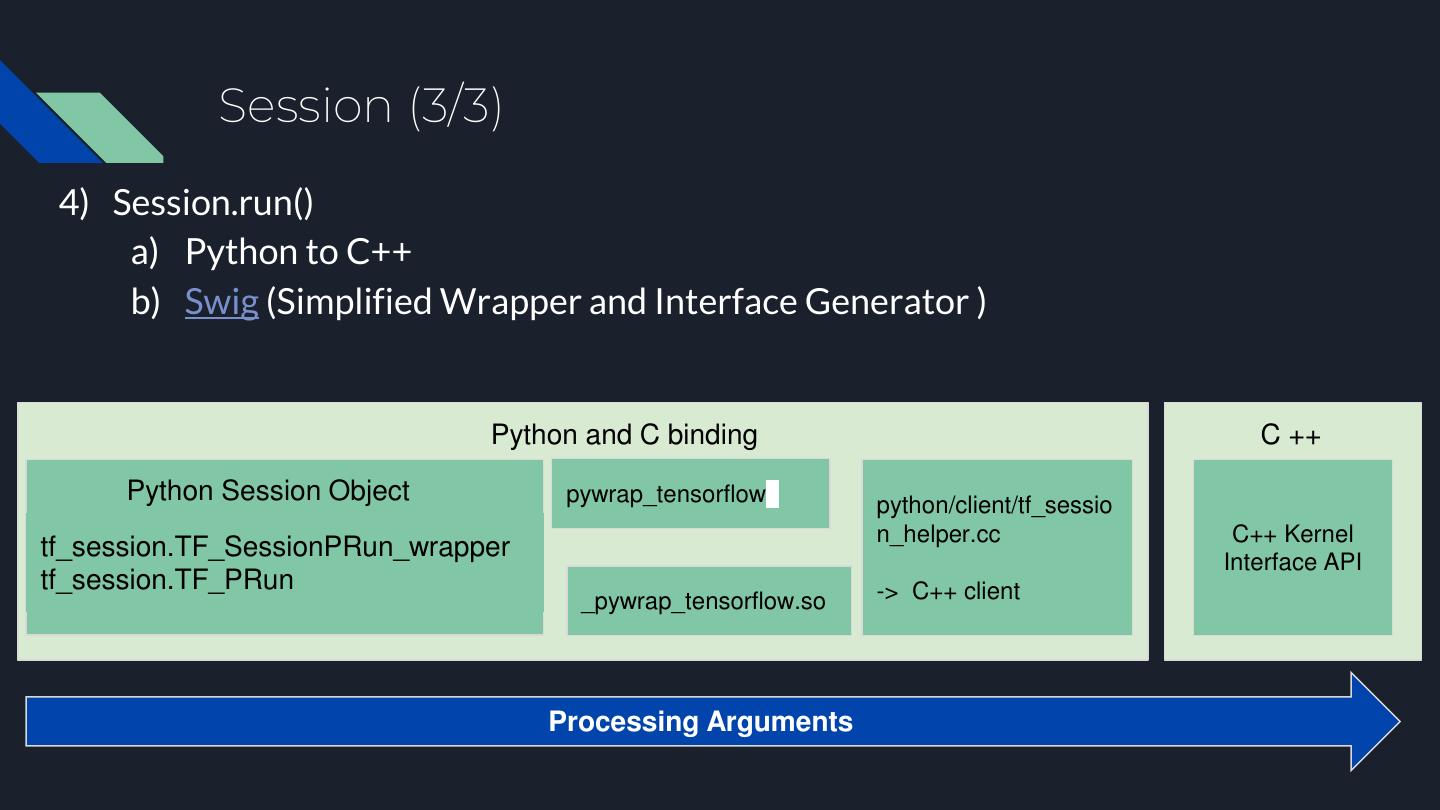

17 . Session (3/3)

4) Session.run()
   a) Python to C++
   b) SWIG (Simplified Wrapper and Interface Generator): Python and C binding

[Diagram: the path from Python to C++. Labels: Python Session object; pywrap_tensorflow (_pywrap_tensorflow.so, interface API); python/client/tf_session_helper.cc (processing arguments); tf_session.TF_SessionPRun_wrapper; tf_session.TF_PRun -> C++ client; C++ kernel]

18 . Training

19 .

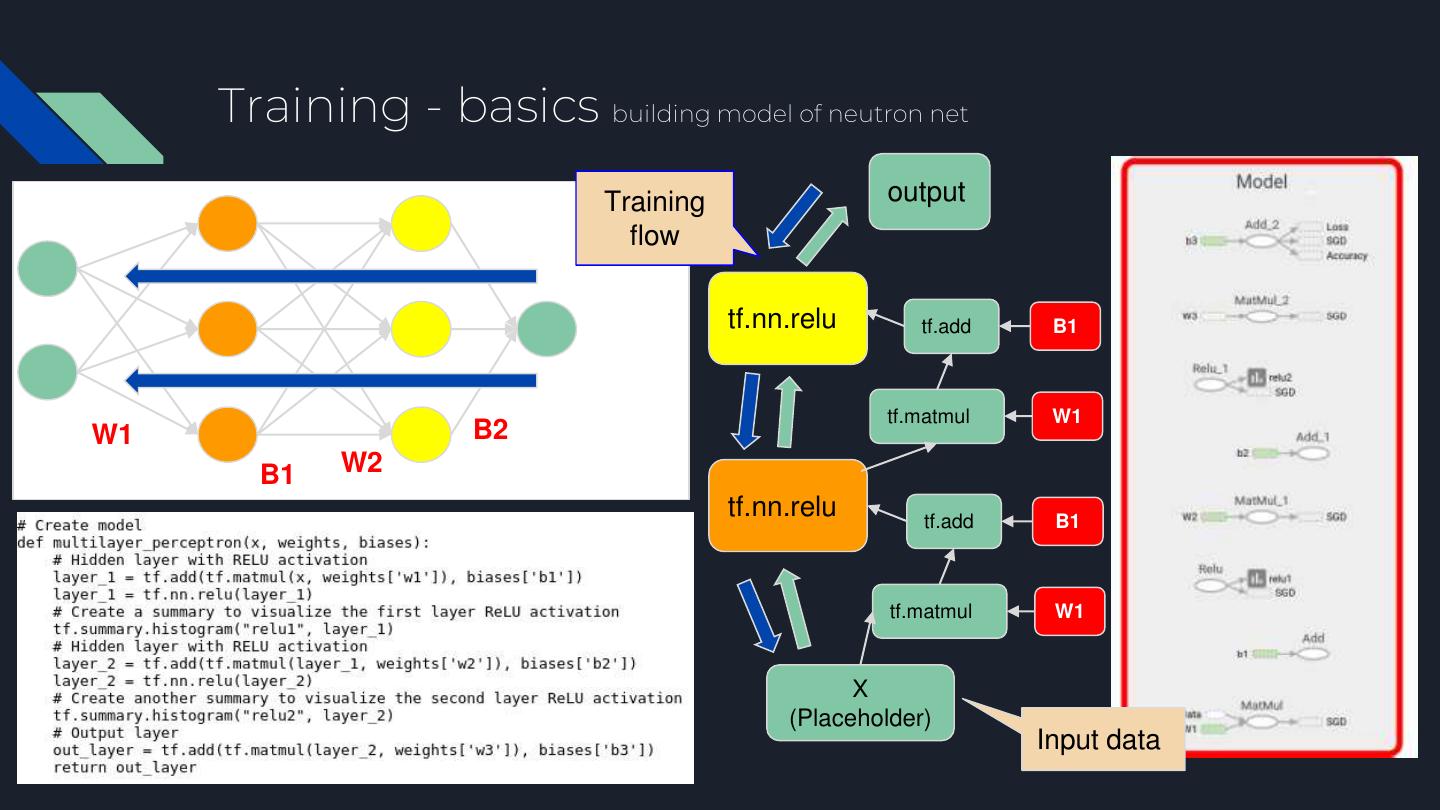

20 . Training - basics: building a model of a neural net

[Diagram: training output flow, read from input to output. X (placeholder, input data) -> tf.matmul with W1 -> tf.add with B1 -> tf.nn.relu -> tf.matmul with W2 -> tf.add with B2 -> tf.nn.relu]
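The layer chain in this diagram (matmul, then add, then relu, applied twice) can be sketched without TensorFlow. The snippet below is a plain-Python illustration under assumed shapes and weights; `matmul`, `add_bias`, and `relu` are hand-written helpers, and none of the numbers come from the slides.

```python
def matmul(a, b):
    """Matrix product of two nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def add_bias(rows, bias):
    """Add the bias vector to every row."""
    return [[v + bj for v, bj in zip(row, bias)] for row in rows]


def relu(rows):
    """Element-wise max(0, v)."""
    return [[max(0.0, v) for v in row] for row in rows]


# Assumed toy values: one input example with two features.
X = [[1.0, -2.0]]
W1 = [[1.0, 0.0, -1.0],
      [0.5, 1.0, 2.0]]
B1 = [1.0, 1.0, 1.0]
W2 = [[2.0, -1.0],
      [1.0, 1.0],
      [0.0, 3.0]]
B2 = [0.5, 0.5]

hidden = relu(add_bias(matmul(X, W1), B1))       # first layer
output = relu(add_bias(matmul(hidden, W2), B2))  # second layer

print(hidden)  # [[1.0, 0.0, 0.0]]
print(output)  # [[2.5, 0.0]]
```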

21 . Training - basics

1) Example: multi-layer network, sample MNIST code
   https://raw.githubusercontent.com/aymericdamien/TensorFlow-Examples/master/examples/4_Utils/tensorboard_advanced.py

Output:

    Epoch: 0001 cost= 54.984976748
    Epoch: 0002 cost= 12.827661085
    ...
    Epoch: 0024 cost= 0.237979749
    Epoch: 0025 cost= 0.212254504
    Optimization Finished!
    Accuracy: 0.9347

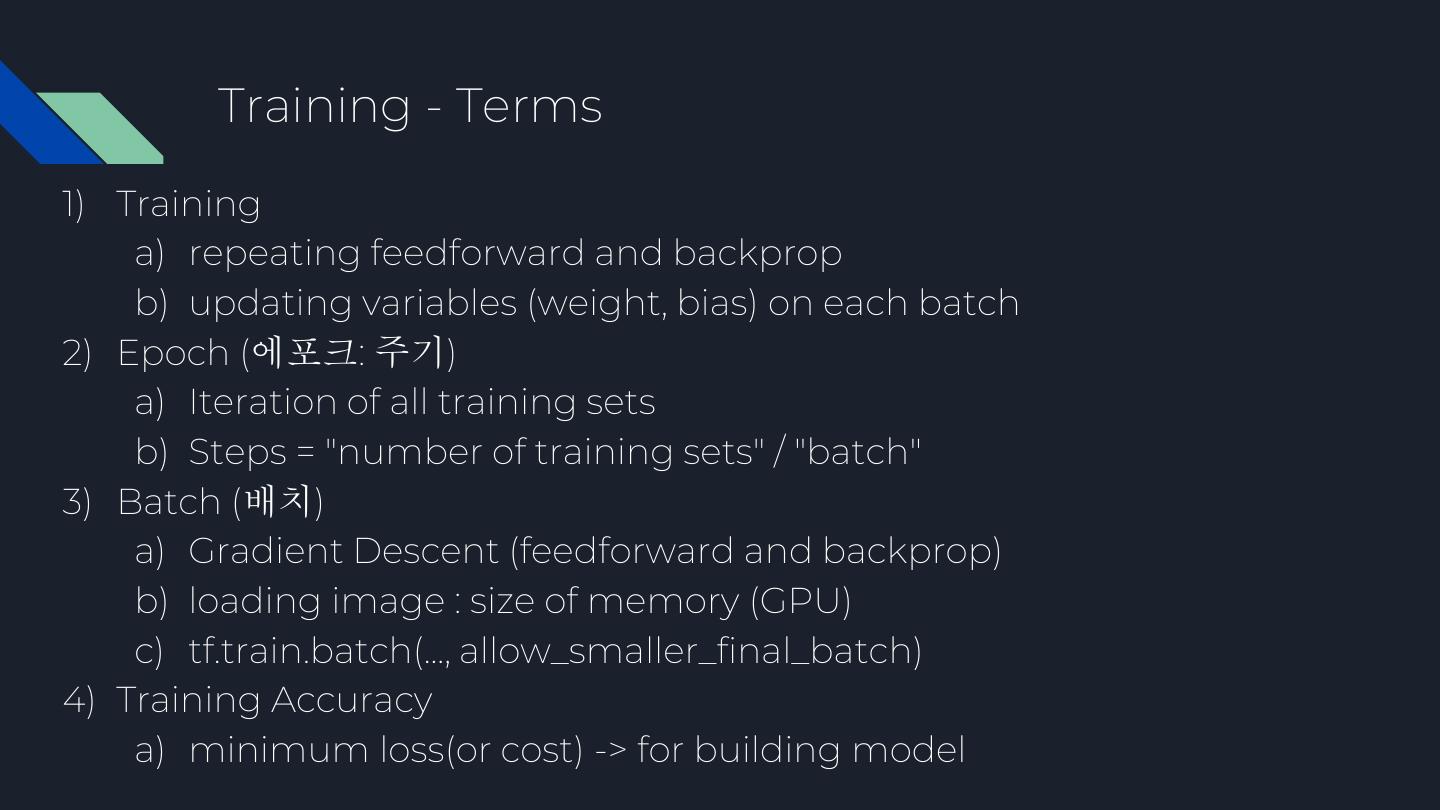

22 . Training - Terms

1) Training
   a) Repeating feedforward and backprop
   b) Updating variables (weights, biases) on each batch
2) Epoch (one cycle)
   a) One iteration over the whole training set
   b) Steps per epoch = "number of training examples" / "batch size"
3) Batch
   a) The unit of one gradient descent step (feedforward and backprop)
   b) Loading images: the batch size is limited by the (GPU) memory size
   c) tf.train.batch(..., allow_smaller_final_batch)
4) Training accuracy
   a) Minimum loss (or cost) -> the target when building the model
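The relation between epochs, steps, and batches is simple arithmetic. The sketch below uses a hypothetical helper, `steps_per_epoch`; its flag only borrows the name of the `allow_smaller_final_batch` argument mentioned above to show how a leftover partial batch changes the count, and the example sizes are assumed.

```python
def steps_per_epoch(num_examples, batch_size, allow_smaller_final_batch=False):
    """Number of batches needed to see every example once."""
    full_batches = num_examples // batch_size
    has_leftover = num_examples % batch_size != 0
    if allow_smaller_final_batch and has_leftover:
        return full_batches + 1  # the last batch is smaller than batch_size
    return full_batches          # leftover examples are not used in this pass


print(steps_per_epoch(55000, 100))                                  # 550
print(steps_per_epoch(1050, 100))                                   # 10
print(steps_per_epoch(1050, 100, allow_smaller_final_batch=True))   # 11
```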

23 . Train - Back Propagation

1) Creating the train Op
   a) Loss Op
   b) Optimizer
2) Loss Op
   a) The difference between the ideal (true) value and the predicted one
   b) Any op can be used for the calculation
3) Optimizer
   a) A type of gradient descent
4) Run on a session
   a) Pass the ops over as an array
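The pairing of a loss and an optimizer can be shown on a one-parameter model. This is a hedged sketch in plain Python, not TensorFlow's optimizer: the model y = w * x, the mean squared error loss, the data, and the learning rate are all assumptions chosen for illustration.

```python
def mean_squared_error(w, xs, ys):
    """Loss: average squared difference between prediction and true value."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def gradient(w, xs, ys):
    """Derivative of the loss with respect to w."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


# Assumed toy data generated by y = 2 * x.
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]

w = 0.0
learning_rate = 0.05
losses = []

for step in range(100):
    losses.append(mean_squared_error(w, xs, ys))
    # One optimizer step: move w against the gradient of the loss.
    w -= learning_rate * gradient(w, xs, ys)

print(w)                      # close to 2.0
print(losses[0], losses[-1])  # the loss shrinks as training proceeds
```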

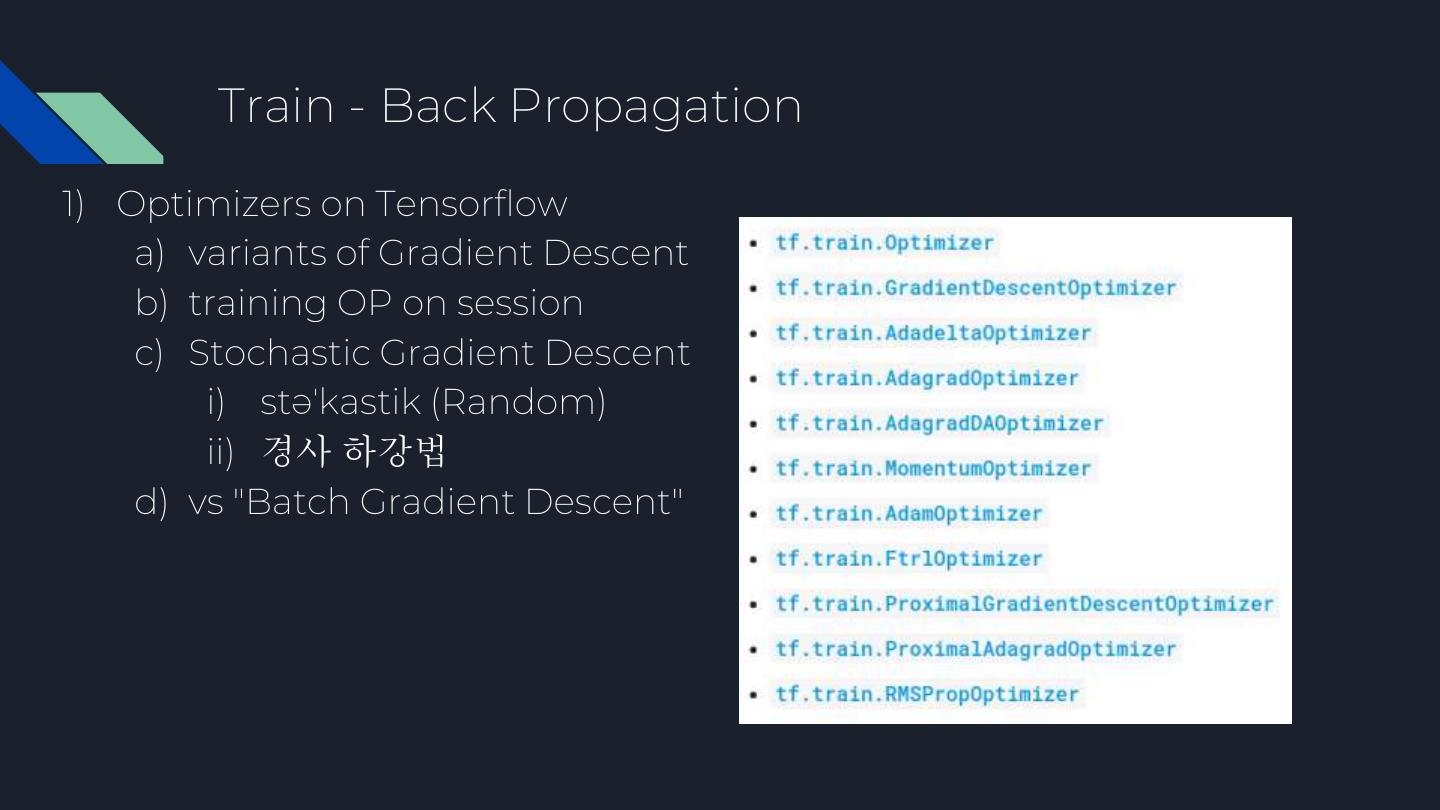

24 . Train - Back Propagation

1) Optimizers in TensorFlow
   a) Variants of gradient descent
   b) Run as the training Op on a session
   c) Stochastic Gradient Descent
      i) "Stochastic" (pronounced stəˈkastik) means random
      ii) A gradient descent method
   d) vs. "Batch Gradient Descent"
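The contrast between batch and stochastic gradient descent comes down to how many examples feed each update. The sketch below is a plain-Python illustration under assumed data and hyperparameters; `fit` is a hypothetical helper that performs batch gradient descent when `batch_size` equals the dataset size and mini-batch stochastic gradient descent otherwise.

```python
import random


def fit(xs, ys, batch_size, epochs=300, learning_rate=0.1, seed=0):
    """Fit y = w * x + b by gradient descent on mean squared error."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # random order is what makes it "stochastic"
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            errors = [(w * xs[i] + b - ys[i], xs[i]) for i in batch]
            grad_w = sum(2 * err * x for err, x in errors) / len(batch)
            grad_b = sum(2 * err for err, _ in errors) / len(batch)
            w -= learning_rate * grad_w
            b -= learning_rate * grad_b
    return w, b


# Assumed toy data generated by y = 3 * x + 1.
xs = [i / 10.0 for i in range(20)]
ys = [3.0 * x + 1.0 for x in xs]

w_batch, b_batch = fit(xs, ys, batch_size=len(xs))  # one update per epoch
w_sgd, b_sgd = fit(xs, ys, batch_size=4)            # five updates per epoch

print(w_batch, b_batch)
print(w_sgd, b_sgd)
```

Both runs approach w = 3 and b = 1 on this noise-free data; the mini-batch run simply makes more, cheaper updates per pass over the data.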

25 . Run - Inference without training

1) Meaning of inference
   a) Strictly: the inference layer of the model
   b) Usually: running the model without training
2) How to
   a) Load the graph and predict
   b) Estimator.predict

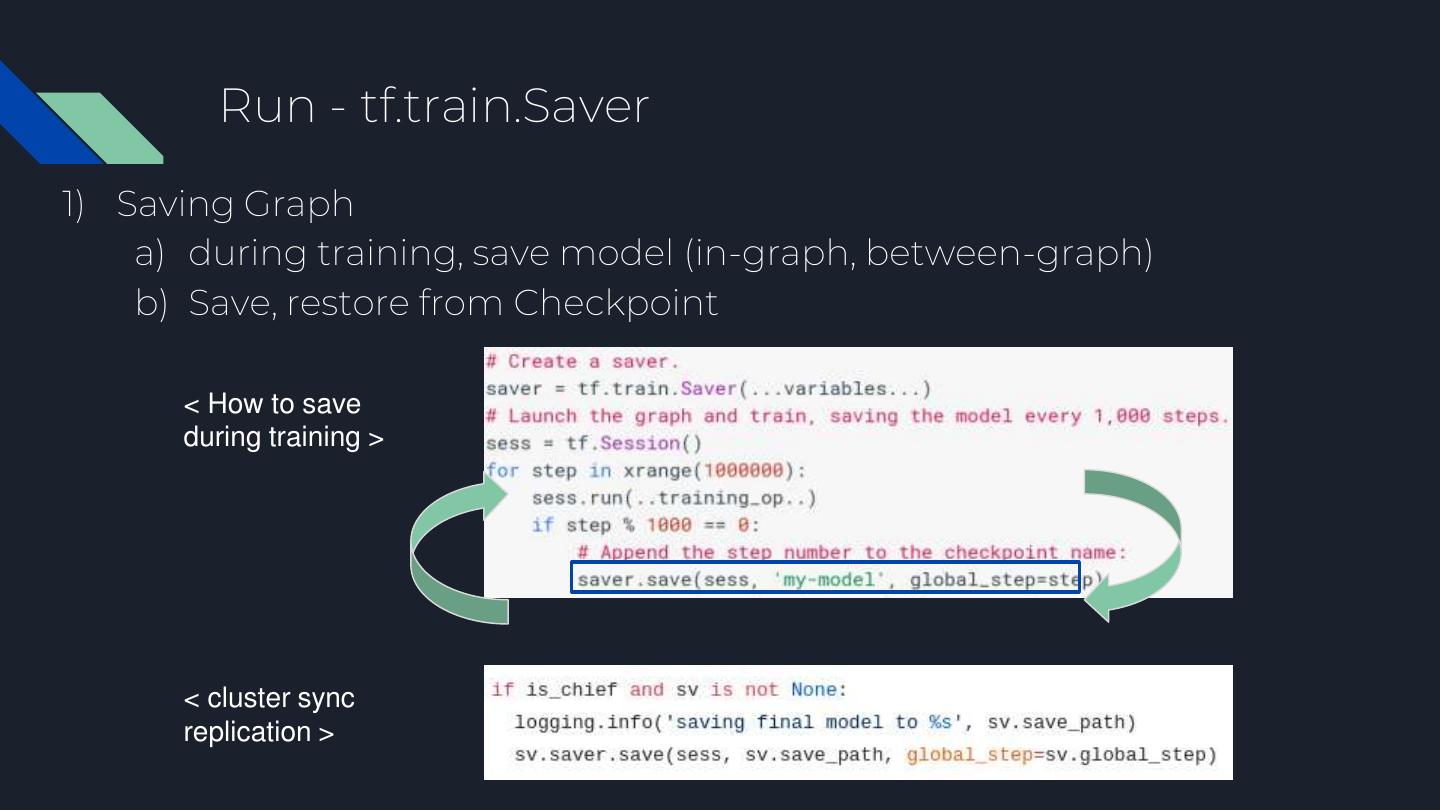

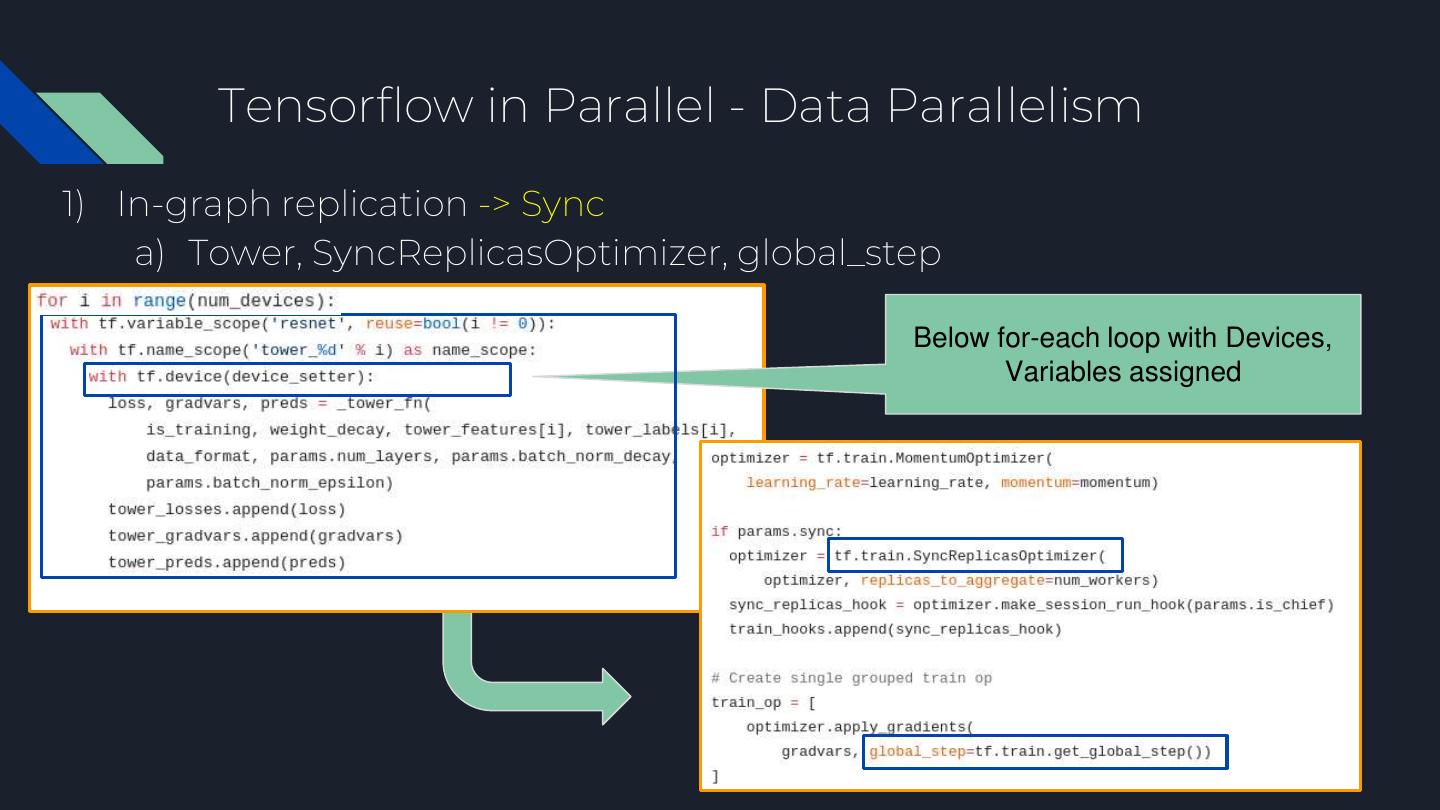

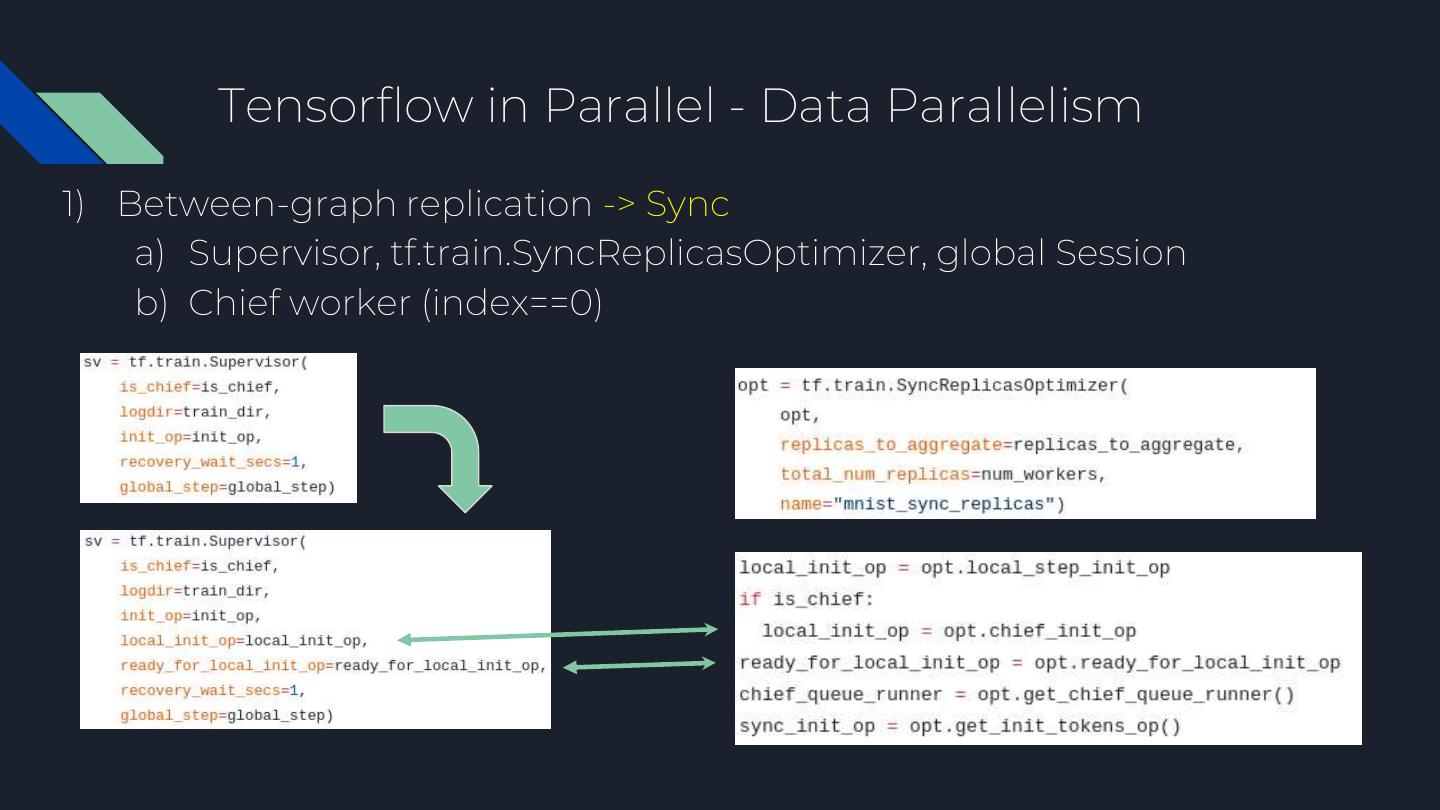

26 . Run - tf.train.Saver

1) Saving the Graph
   a) During training, save the model (in-graph, between-graph)
   b) Save to and restore from a checkpoint

[Figure captions: "How to save during training", "cluster sync replication"]

27 . Training example

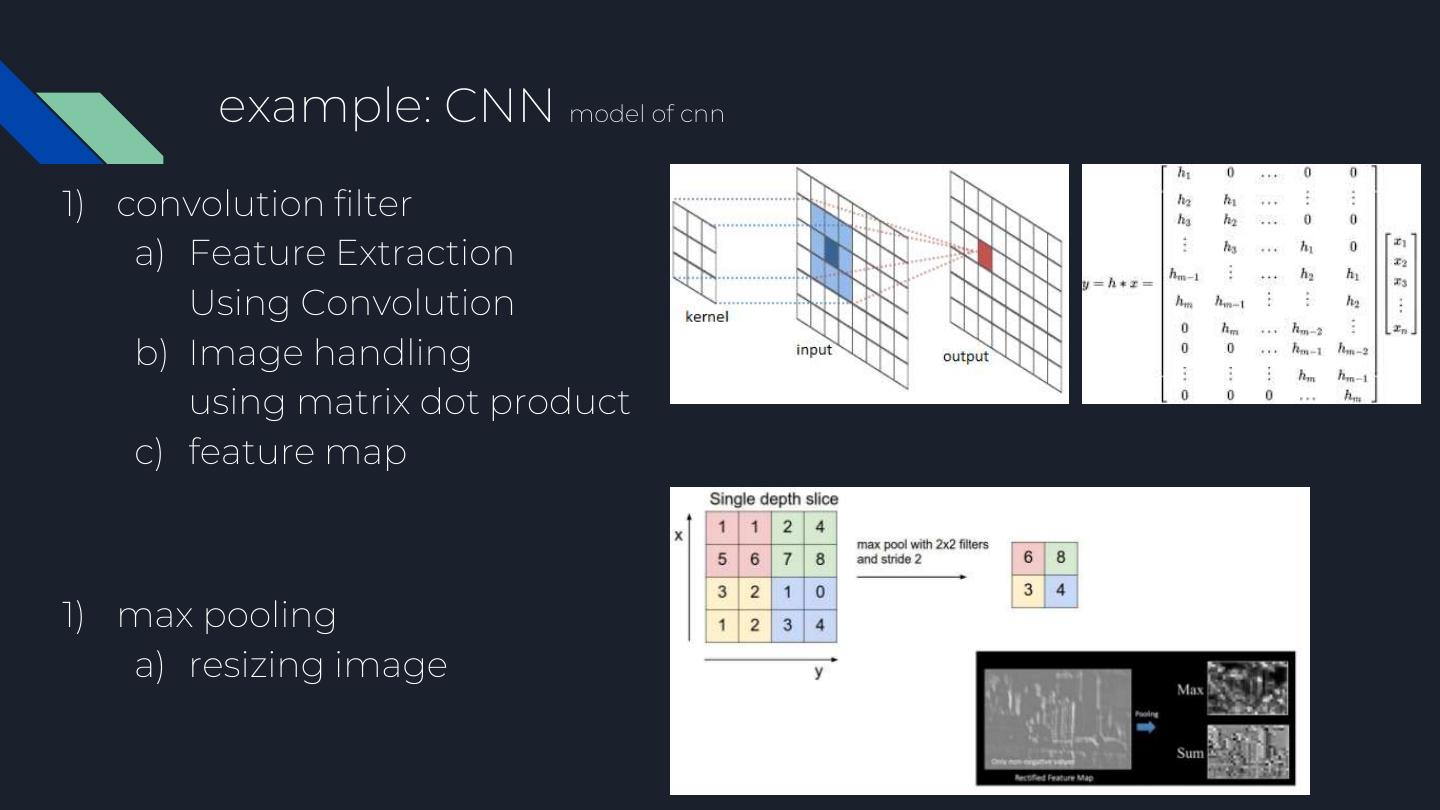

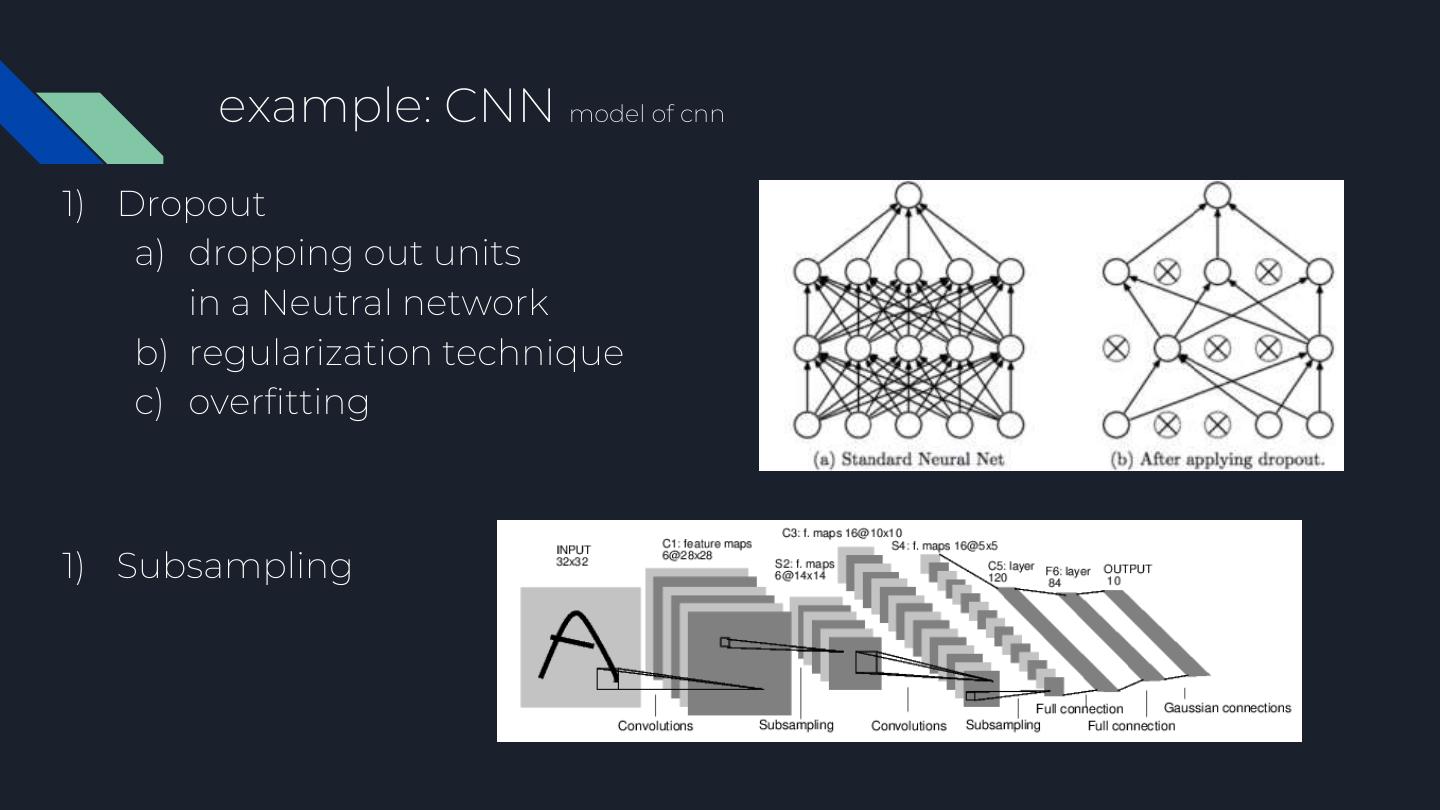

28 . Example: CNN, model of a CNN

1) Convolution filter
   a) Feature extraction using convolution
   b) Image handling using the matrix dot product
   c) Feature map
2) Max pooling
   a) Resizing (downsampling) the image
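The two steps on this slide can be sketched on a tiny image. The code below is a plain-Python illustration with assumed values: `convolve2d` slides the filter without padding and without flipping it (the cross-correlation form that CNN layers commonly call convolution), and `max_pool` uses non-overlapping 2x2 windows.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image; each output is a dot product."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[i + di][j + dj] * kernel[di][dj]
                 for di in range(kh) for dj in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


def max_pool(feature_map, size=2):
    """Keep the maximum of each non-overlapping size x size window."""
    return [[max(feature_map[i + di][j + dj]
                 for di in range(size) for dj in range(size))
             for j in range(0, len(feature_map[0]) - size + 1, size)]
            for i in range(0, len(feature_map) - size + 1, size)]


# Assumed 5x5 image and 2x2 filter, chosen only for illustration.
image = [[1, 2, 3, 0, 1],
         [0, 1, 2, 3, 0],
         [1, 0, 1, 2, 3],
         [2, 1, 0, 1, 2],
         [3, 2, 1, 0, 1]]
kernel = [[1, 0],
          [0, 1]]

feature_map = convolve2d(image, kernel)  # 4x4 feature map
pooled = max_pool(feature_map)           # 2x2 after pooling

print(feature_map)  # [[2, 4, 6, 0], [0, 2, 4, 6], [2, 0, 2, 4], [4, 2, 0, 2]]
print(pooled)       # [[4, 6], [4, 4]]
```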

29 . Example: CNN, model of a CNN

1) Dropout
   a) Dropping out units in a neural network
   b) A regularization technique
   c) Used against overfitting
2) Subsampling
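Dropout can be sketched in a few lines. The following is a plain-Python illustration of the "inverted dropout" formulation, which is one common way to implement it and is an assumption here rather than something the slides specify: each unit is kept with probability `keep_prob`, and kept units are scaled by 1 / keep_prob so the expected activation is unchanged. The `dropout` function and its parameters are hypothetical.

```python
import random


def dropout(activations, keep_prob, training=True, rng=None):
    """Randomly zero units during training; pass them through otherwise."""
    if not training:
        return list(activations)  # no units are dropped at inference time
    rng = rng or random.Random()
    return [a / keep_prob if rng.random() < keep_prob else 0.0
            for a in activations]


activations = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
keep_prob = 0.5

dropped = dropout(activations, keep_prob, rng=random.Random(42))
unchanged = dropout(activations, keep_prob, training=False)

print(dropped)    # each entry is either 0.0 or the activation divided by keep_prob
print(unchanged)  # identical to the input
```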