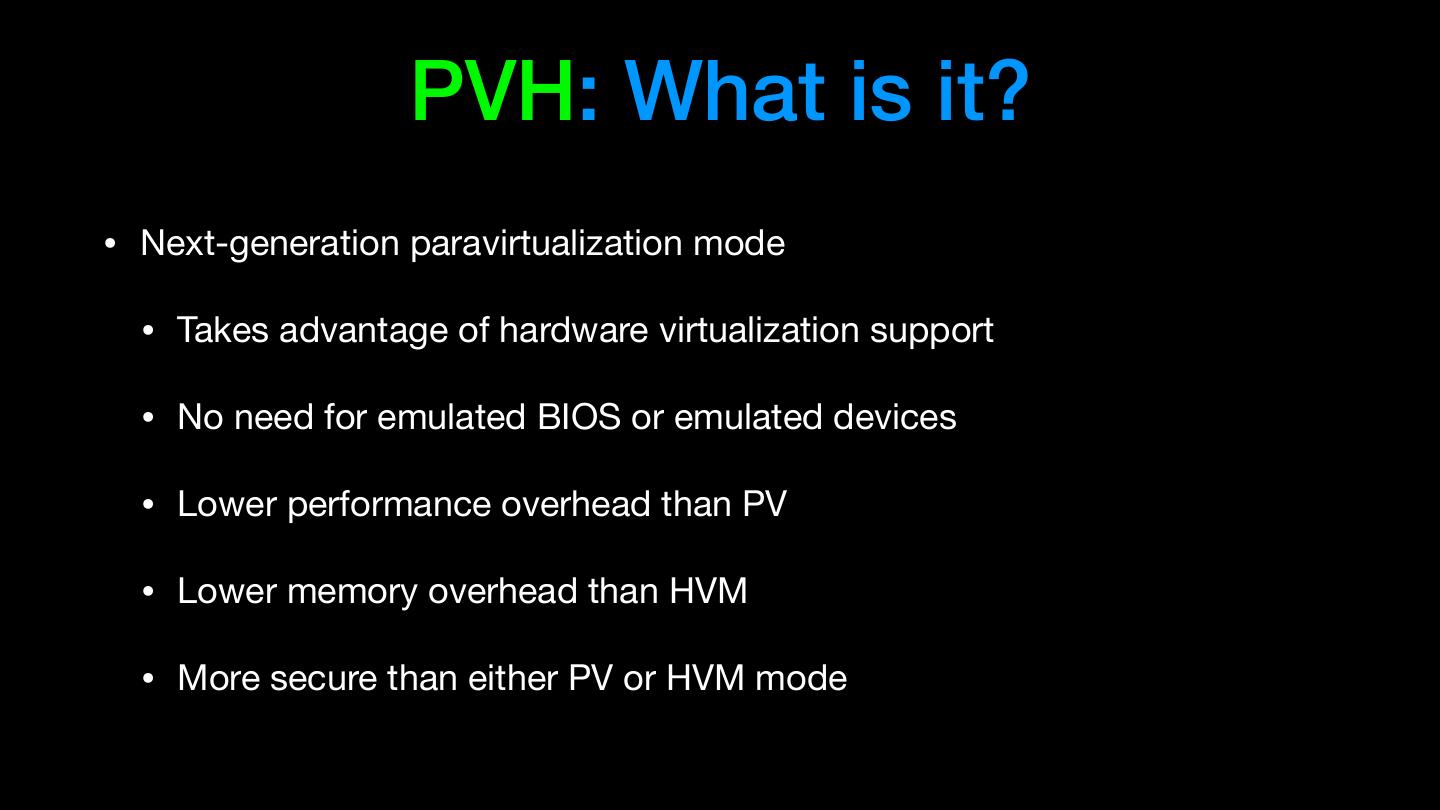

Xen Project: After 15 years, What's Next?


1 . Xen on x86, 15 years later: Recent development, future direction

2 . QEMU Deprivileging • PVShim • Panopticon • Large guests (288 vcpus) • NVDIMM • PVH Guests • VM Introspection / Memaccess • PVCalls • PV IOMMU • ACPI Memory Hotplug • PVH dom0 • Posted Interrupts • KConfig • Sub-page protection • Hypervisor Multiplexing

3 . Talk approach • Highlight some key features • Recently finished • In progress • Cool Idea: Should be possible, nobody committed to working on it yet • Highlight how these work together to create interesting theme

4 .• PVH (with PVH dom0) • KConfig • … to disable PV • PVshim • Windows in PVH

5 . PVH: Finally here • Full PVH DomU support in Xen 4.10, Linux 4.15 • First backwards-compatibility hack • Experimental PVH Dom0 support in Xen 4.11

6 . PVH: What is it? • Next-generation paravirtualization mode • Takes advantage of hardware virtualization support • No need for emulated BIOS or emulated devices • Lower performance overhead than PV • Lower memory overhead than HVM • More secure than either PV or HVM mode

7 .• PVH (with PVH dom0) • KConfig • … to disable PV • PVshim • Windows in PVH

8 . KConfig • KConfig for Xen allows… • Users to produce smaller / more secure binaries • Makes it easier to merge experimental functionality • KConfig option to disable PV entirely

9 .• PVH • KConfig • … to disable PV • PVshim • Windows in PVH

10 . PVShim • Some older kernels can only run in PV mode • Expect to run in ring 1, ask a hypervisor to perform privileged actions • “Shim”: A build of Xen designed to allow an unmodified PV guest to run in PVH mode • type='pvh' / pvshim=1 (Diagram: a PV-only kernel (ring 1) on top of the “Shim” hypervisor (ring 0), both inside a PVH guest running on Xen)
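The two settings named on the PVShim slide belong in an xl guest configuration file. As a minimal sketch, the Python helper below renders such a file. Only `type` and `pvshim` come from the slide. The helper name `render_pvshim_config`, the guest name, the kernel path, and the memory and vCPU values are hypothetical placeholders.

```python
def render_pvshim_config(name, kernel, memory_mb=512, vcpus=1):
    """Return xl.cfg text for a PV-only kernel booted inside a PVH container.

    Only `type` and `pvshim` are taken from the slide; every other value
    is an illustrative placeholder supplied by the caller.
    """
    lines = [
        f'name = "{name}"',
        'type = "pvh"',          # guest container type, from the slide
        'pvshim = 1',            # run the PV kernel on top of the shim, from the slide
        f'kernel = "{kernel}"',  # placeholder path to the unmodified PV kernel
        f'memory = {memory_mb}',
        f'vcpus = {vcpus}',
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical guest name and kernel path, for illustration only.
    print(render_pvshim_config("legacy-pv", "/path/to/pv-kernel"), end="")
```

The rendered text could be saved to a file and passed to `xl create`. A real guest would also need disk and network entries, which are omitted here.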

11 .• PVH • KConfig • … to disable PV • PVshim • Windows in PVH (Callout: No-PV Hypervisors)

12 .• PVH • KConfig • … to disable PV • PVshim • Windows in PVH

13 . Windows in PVH • Windows EFI should be able to do • OVMF (Virtual EFI implementation) already has: PVH support; Xen PV disk, network support • Only need PV Framebuffer support…?

14 .• PVH • KConfig • … to disable PV • PVshim • Windows in PVH (Callout: One guest type to rule them all)

15 .Is PV mode obsolete then?

16 .• KConfig: No HVM • PV 9pfs • PVCalls • rkt Stage 1 • Hypervisor Multiplexing

17 . Containers: Passing through “host” OS resources (Diagram: four containers on a Linux container host, sharing the host filesystem and host ports)

18 . Containers: Passing through “host” OS resources • Allows file-based difference tracking rather than block-based • Allows easier inspection of container state from host OS • Allows setting up multiple isolated services without needing to mess around with multiple IP addresses (Diagram: four containers on a Linux host, sharing the host filesystem)

19 .• KConfig: No HVM • PV 9pfs • PVCalls • rkt Stage 1 • Hypervisor Multiplexing

20 . PV 9pfs • Allows dom0 to expose files directly to guests (Diagram: 9pfs frontends in the guests connect to a 9pfs backend in Dom0, which serves the host filesystem; all run on Xen)

21 .• KConfig: No HVM • PV 9pfs • PVCalls • “Stage 1 Xen” • Hypervisor Multiplexing

22 . PV Calls • Pass through specific system calls • socket() • listen() • accept() • read() • write() (Diagram: PVCalls frontends in the guests connect to a PVCalls backend in Dom0, which uses the host ports; all run on Xen)
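The calls listed on the PV Calls slide are the ones an ordinary network service makes. The sketch below is plain Python socket code on the loopback interface, not the PVCalls protocol or any Xen API. It only marks where each listed call occurs in a small uppercase-echo service. The function names `serve_once` and `demo` are hypothetical, and `bind()` is included because a listening socket needs it, although the slide does not list it.

```python
import socket
import threading


def serve_once(server_sock):
    """Handle a single client: read a request and write back the uppercased reply."""
    conn, _addr = server_sock.accept()   # accept()
    with conn:
        data = conn.recv(1024)           # read()
        conn.sendall(data.upper())       # write()


def demo():
    """Run the service once against a local client and return the reply bytes."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)  # socket()
    server.bind(("127.0.0.1", 0))        # port 0: let the OS choose a free port
    server.listen(1)                     # listen()
    port = server.getsockname()[1]

    worker = threading.Thread(target=serve_once, args=(server,))
    worker.start()

    # The client side of the exchange, also over loopback.
    with socket.create_connection(("127.0.0.1", port)) as client:
        client.sendall(b"hello")
        reply = client.recv(1024)

    worker.join()
    server.close()
    return reply


if __name__ == "__main__":
    print(demo())
```

With PVCalls, as the slide describes it, calls of this kind are passed from the frontend in the guest to the backend in Dom0, so the service appears on a host port instead of needing its own IP address.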

23 .• KConfig: No HVM • PV 9pfs • PVCalls • “Stage 1 Xen” • Hypervisor Multiplexing

24 . rkt Stage 1 • rkt: “Container abstraction” part of CoreOS • Running rkt containers (part of CoreOS) under Xen

25 .• KConfig: No HVM • PV 9pfs • PVCalls • rkt Stage 1 • Hypervisor Multiplexing (Callout: Xen as full Container Host)

26 .• KConfig: No HVM • PV 9pfs • PVCalls • “Stage 1 Xen” • Hypervisor Multiplexing

27 . Hypervisor multiplexing • Xen can run in an HVM guest /without nested HVM support/ • PV protocols use xenbus + hypercalls • At the moment, Linux code assumes only one xenbus / hypervisor • Host PV drivers • OR Guest PV drivers • Multiplexing: Allow both (Diagram: L1 Xen and L1 Dom0 run inside an HVM guest on top of L0 Xen and L0 Dom0, with frontend/backend driver pairs at both levels)

28 .• KConfig: No HVM • PV 9pfs • PVCalls • “Stage 1 Xen” • Hypervisor Multiplexing (Callout: Xen as Cloud-ready Container Host)

29 . QEMU Deprivileging • PVShim • Panopticon • Large guests (288 vcpus) • NVDIMM • PVH Guests • VM Introspection / Memaccess • PVCalls • PV IOMMU • ACPI Memory Hotplug • PVH dom0 • Posted Interrupts • KConfig • Sub-page protection • Hypervisor Multiplexing