
Tool Detection and Operative Skill Assessment in Surgical Videos Using Region-Based Convolutional Neural Networks

Amy Jin, Serena Yeung, Jeffrey Jopling, Jonathan Krause, Dan Azagury, Arnold Milstein, and Li Fei-Fei
Stanford University, Stanford, CA 94305
{ajin,serena,jkrause,feifeili}@cs.stanford.edu
{jjopling,dazagury,amilstein}@stanford.edu

Abstract

Five billion people in the world lack access to quality surgical care. Surgeon skill varies dramatically, and many surgical patients suffer complications and avoidable harm. Improving surgical training and feedback will help to reduce the rate of complications, half of which have been shown to be preventable. Doing so requires assessing operative skill, a process that is currently manual, time-consuming, subjective, and dependent on experts. In this work, we introduce an approach that automatically assesses surgeon performance by tracking and analyzing tool movements in surgical videos with region-based convolutional neural networks. To study this problem, we also introduce a new dataset, m2cai16-tool-locations, which extends the m2cai16-tool dataset with spatial bounds of tools. While previous methods have addressed tool presence detection, ours is the first to not only detect the presence of surgical tools but also spatially localize them in real-world surgical videos. We show that our method effectively detects the spatial bounds of tools and significantly outperforms existing methods on tool presence detection. We further demonstrate the ability of our method to assess surgical quality through analysis of tool usage patterns, movement range, and economy of motion.

1 Introduction

According to the World Health Organization, major procedures carry a global mortality rate of 0.5-5%, and up to 25% of surgical patients suffer complications [1].
Many of these complications are caused by poor individual and team performance, in which inadequate training and feedback play an important role [2, 3, 4]. These outcomes can be improved: studies show that half of all adverse surgical events are preventable. A major obstacle is that surgeons currently lack individualized, objective feedback on their surgical technique. Real-time automated analysis of surgical video offers a way to assess surgical skill objectively and efficiently. In this work, we address the task of spatial tool detection, that is, localizing each surgical tool with a bounding box in every frame, which to our knowledge has not been studied previously. To study this task, we introduce a new dataset, m2cai16-tool-locations, which extends the m2cai16-tool dataset with spatial bounds of tools. We develop an approach that leverages region-based convolutional neural networks (R-CNNs) to perform spatial detection of tools, and show that our method both effectively detects the spatial bounds of tools and significantly outperforms previous work on detecting tool presence. Finally, we demonstrate that our spatial detections in these real-world surgical videos enable efficient assessment of surgical quality through analysis of tool usage patterns, movement range, and economy of motion.

31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA.

Figure 1: Top: the seven tools and their annotation counts in m2cai16-tool-locations. Bottom: example frames with their spatial tool annotations. Bounding box color corresponds to tool identity.

2 Related Work

Early work on surgical tool detection, categorization, and tracking includes approaches based on radio frequency identification (RFID) tags [5]; segmentation, contour processing, and 3D modelling [6]; and the Viola-Jones detection framework [7]. More recently, deep learning approaches based on convolutional neural networks have shown impressive performance on computer vision tasks [8], and works including [9, 10, 11, 12] leverage deep learning architectures to achieve state-of-the-art performance on surgical tool presence detection and phase recognition. Our work builds on these directions and uses region-based convolutional neural networks [13] to detect the spatial bounds of tools, enabling richer analysis of surgical quality.

3 Dataset

The task of spatial tool detection requires a dataset annotated with the spatial bounds of tools. However, to the best of our knowledge, no such dataset currently exists for real-world laparoscopic surgical videos. We therefore collect and introduce a new dataset, m2cai16-tool-locations, which extends the m2cai16-tool dataset [14] with spatial annotations of tools.

m2cai16-tool consists of 15 videos of cholecystectomy procedures recorded at 25 fps. The dataset contains 23,000 frames labeled with binary annotations indicating the presence or absence of seven surgical tools: grasper, bipolar, hook, scissors, clip applier, irrigator, and specimen bag. In m2cai16-tool-locations, we label 2,532 of these frames with the coordinates of bounding boxes around the tools. We use 50%, 30%, and 20% of these frames for the training, validation, and test splits, respectively.

4 Approach

Our approach for spatial detection of surgical tools is based on Faster R-CNN [13], a region-based convolutional neural network.
The input is a video frame, and the output is the set of bounding box coordinates around any of the seven surgical instruments shown in Fig. 1. This output allows us to perform qualitative and quantitative analyses of tool movements, from tracking tool usage patterns to evaluating economy of motion.

The base network is a VGG-16 convolutional neural network [15], which extracts powerful visual features. On top of this network is a region proposal network (RPN) that shares convolutional features with the object detection network. For each input image, the RPN generates region proposals likely to contain an object; features are pooled over these regions and passed to a final network that classifies each region and refines its bounding box. We pre-train the network on the ImageNet dataset [8], where a large amount of data is available for learning general visual features, and then fine-tune it on our m2cai16-tool-locations dataset, where a smaller amount of data is labeled with the surgical tools of interest.

To train the RPN, we assign a binary objectness label to each anchor at each sliding-window position of the feature map. An anchor receives a positive label if its Intersection over Union (IoU) with a ground-truth box is greater than 0.8; if no anchor exceeds this threshold for a given ground-truth box, the anchor or anchors with the highest IoU for that box are labeled positive instead. Anchors whose IoU is less than 0.3 for all ground-truth boxes receive a negative label. We fine-tune the VGG-16 network with stochastic gradient descent and modify its classification layer to output softmax probabilities over the 7 tools.
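
The anchor-labeling rule above can be illustrated in plain Python. This is a minimal sketch, not the authors' implementation: it assumes boxes given as (x1, y1, x2, y2) pixel coordinates, the names `iou` and `label_anchors` are hypothetical, and the thresholds default to the 0.8 and 0.3 values stated in the text. A real Faster R-CNN pipeline vectorizes this over thousands of anchors per image.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def label_anchors(anchors: Sequence[Box], gt_boxes: Sequence[Box],
                  pos_thresh: float = 0.8, neg_thresh: float = 0.3) -> List[int]:
    """Objectness label per anchor: 1 = object, 0 = background, -1 = ignored."""
    # ious[i][g] is the IoU between anchor i and ground-truth box g.
    ious = [[iou(a, g) for g in gt_boxes] for a in anchors]
    labels = []
    for row in ious:
        best = max(row, default=0.0)
        if best > pos_thresh:
            labels.append(1)   # strong overlap with some ground-truth box
        elif best < neg_thresh:
            labels.append(0)   # low overlap with every ground-truth box
        else:
            labels.append(-1)  # in between: excluded from the RPN loss
    # Fallback: a ground-truth box that no anchor covers above pos_thresh
    # gives a positive label to its highest-IoU anchor(s).
    for g in range(len(gt_boxes)):
        col = [row[g] for row in ious]
        best = max(col, default=0.0)
        if 0.0 < best <= pos_thresh:
            for i, v in enumerate(col):
                if v == best:
                    labels[i] = 1
    return labels
```

For example, with anchors `[(0, 0, 10, 10), (5, 5, 15, 15), (20, 20, 30, 30)]` and a single ground-truth box `(0, 0, 10, 14)`, no anchor exceeds 0.8 (the best IoU is about 0.71), so the fallback marks the first anchor positive and the result is `[1, 0, 0]`.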

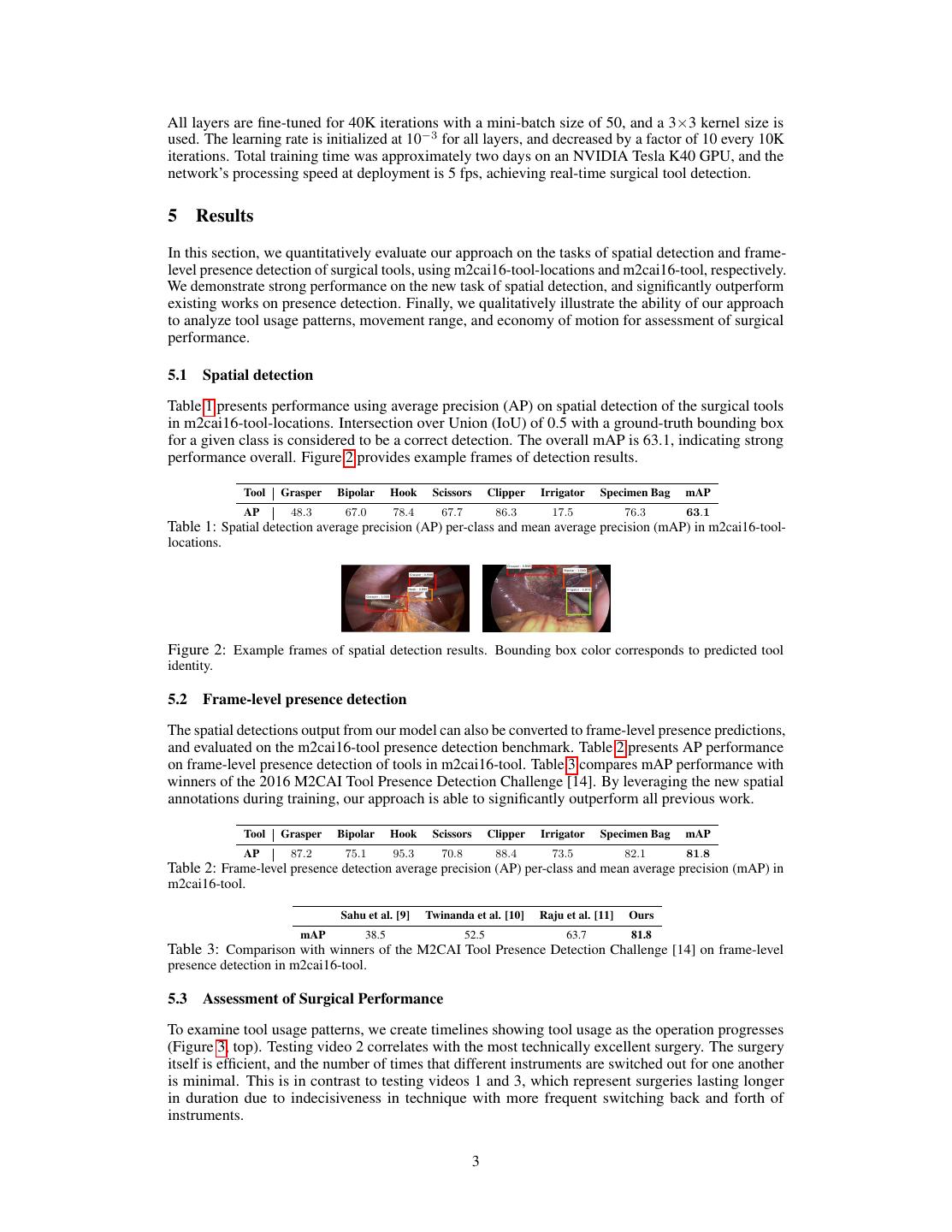

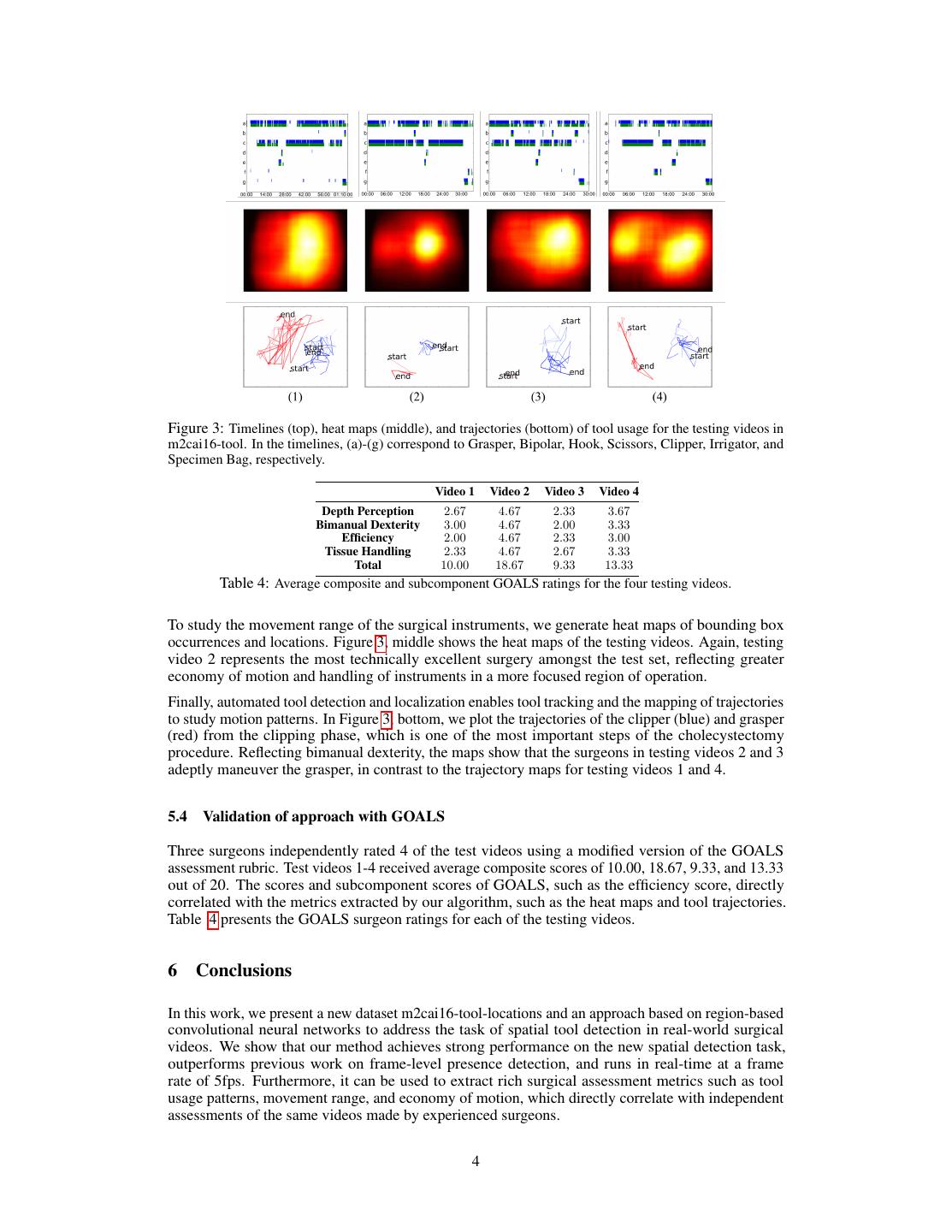

All layers are fine-tuned for 40K iterations with a mini-batch size of 50, and a 3×3 kernel size is used. The learning rate is initialized at 10⁻³ for all layers and decreased by a factor of 10 every 10K iterations. Total training time was approximately two days on an NVIDIA Tesla K40 GPU, and at deployment the network processes frames at 5 fps, achieving real-time surgical tool detection.

5 Results

In this section, we quantitatively evaluate our approach on two tasks: spatial detection of surgical tools, using m2cai16-tool-locations, and frame-level presence detection, using m2cai16-tool. We demonstrate strong performance on the new task of spatial detection and significantly outperform existing work on presence detection. Finally, we qualitatively illustrate the ability of our approach to analyze tool usage patterns, movement range, and economy of motion for assessment of surgical performance.

5.1 Spatial detection

Table 1 reports average precision (AP) for spatial detection of each surgical tool in m2cai16-tool-locations. A detection is counted as correct if its IoU with a ground-truth bounding box of the same class is at least 0.5. The overall mAP is 63.1, indicating strong performance overall; per-class AP ranges from 17.5 for the irrigator to 86.3 for the clipper. Figure 2 shows example frames of detection results.

| Tool | Grasper | Bipolar | Hook | Scissors | Clipper | Irrigator | Specimen Bag | mAP |
|---|---|---|---|---|---|---|---|---|
| AP | 48.3 | 67.0 | 78.4 | 67.7 | 86.3 | 17.5 | 76.3 | 63.1 |

Table 1: Spatial detection average precision (AP) per class and mean average precision (mAP) in m2cai16-tool-locations.

Figure 2: Example frames of spatial detection results. Bounding box color corresponds to predicted tool identity.

5.2 Frame-level presence detection

The spatial detections output by our model can also be converted to frame-level presence predictions and evaluated on the m2cai16-tool presence detection benchmark. Table 2 presents AP for frame-level presence detection of tools in m2cai16-tool.
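
The text does not specify how spatial detections are turned into frame-level presence predictions. The sketch below assumes one simple choice: a tool's presence score in a frame is the confidence of its highest-scoring detection, or 0 if it was not detected. Per-tool AP is then computed over frames as non-interpolated average precision. The function names are hypothetical, and the challenge's official evaluation may differ in details such as tie handling or interpolation.

```python
from typing import Dict, Sequence, Tuple

# One detection in a frame: (tool name, confidence). Boxes are not needed here.
Detection = Tuple[str, float]


def presence_scores(dets: Sequence[Detection], tools: Sequence[str]) -> Dict[str, float]:
    """Assumed rule: a tool's presence score is its most confident detection, 0 if none."""
    scores = {tool: 0.0 for tool in tools}
    for tool, conf in dets:
        scores[tool] = max(scores[tool], conf)
    return scores


def average_precision(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Non-interpolated AP: mean precision at the rank of each positive frame."""
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    hits, precisions = 0, []
    for rank, (_, label) in enumerate(ranked, start=1):
        if label:
            hits += 1
            precisions.append(hits / rank)
    return sum(precisions) / len(precisions) if precisions else 0.0


def frame_level_map(frames: Sequence[Sequence[Detection]],
                    truth: Sequence[Dict[str, int]],
                    tools: Sequence[str]) -> Tuple[Dict[str, float], float]:
    """Per-tool AP over all frames, plus their mean (mAP)."""
    per_frame = [presence_scores(dets, tools) for dets in frames]
    aps = {t: average_precision([s[t] for s in per_frame], [y[t] for y in truth])
           for t in tools}
    return aps, sum(aps.values()) / len(aps)
```

On three toy frames with detections `[("grasper", 0.9), ("hook", 0.4)]`, `[("hook", 0.8)]`, and `[("grasper", 0.3), ("hook", 0.6)]`, where the hook is truly absent from the third frame, the grasper gets AP 1.0 and the hook about 0.833, because its false detection in the third frame outranks a true one.
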
Table 3 compares mAP with the winners of the 2016 M2CAI Tool Presence Detection Challenge [14]. By leveraging the new spatial annotations during training, our approach significantly outperforms all previous work.

| Tool | Grasper | Bipolar | Hook | Scissors | Clipper | Irrigator | Specimen Bag | mAP |
|---|---|---|---|---|---|---|---|---|
| AP | 87.2 | 75.1 | 95.3 | 70.8 | 88.4 | 73.5 | 82.1 | 81.8 |

Table 2: Frame-level presence detection average precision (AP) per class and mean average precision (mAP) in m2cai16-tool.

| Method | mAP |
|---|---|
| Sahu et al. [9] | 38.5 |
| Twinanda et al. [10] | 52.5 |
| Raju et al. [11] | 63.7 |
| Ours | 81.8 |

Table 3: Comparison with winners of the M2CAI Tool Presence Detection Challenge [14] on frame-level presence detection in m2cai16-tool.

5.3 Assessment of Surgical Performance

To examine tool usage patterns, we create timelines showing tool usage as the operation progresses (Figure 3, top). Testing video 2 corresponds to the most technically excellent surgery: the operation is efficient, and instruments are switched out for one another a minimal number of times. In contrast, testing videos 1 and 3 show surgeries that last longer due to indecisiveness in technique, with more frequent switching back and forth between instruments.
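
The timelines can also be summarized numerically. As a hypothetical sketch, assuming per-frame 0/1 presence flags for each tool (for example, thresholded presence scores), the code below counts how many separate usage segments each tool has; a higher total suggests more switching back and forth between instruments. The `min_gap` parameter is an assumption not described in the text: it bridges short gaps so that momentary missed detections are not counted as switches.

```python
from typing import Dict, List, Sequence, Tuple


def usage_segments(present: Sequence[int], min_gap: int = 0) -> List[Tuple[int, int]]:
    """Inclusive (start, end) frame ranges where a tool is present.

    Runs separated by at most `min_gap` absent frames are merged into one segment.
    """
    segments: List[Tuple[int, int]] = []
    for i, flag in enumerate(present):
        if not flag:
            continue
        if segments and i - segments[-1][1] - 1 <= min_gap:
            segments[-1] = (segments[-1][0], i)  # continue the current segment
        else:
            segments.append((i, i))  # tool (re)enters the field of view
    return segments


def count_tool_entries(timeline: Dict[str, Sequence[int]], min_gap: int = 0) -> Dict[str, int]:
    """Number of separate usage segments per tool; more segments means more swapping."""
    return {tool: len(usage_segments(flags, min_gap)) for tool, flags in timeline.items()}
```

For a six-frame timeline `{"grasper": [1, 1, 1, 1, 1, 1], "hook": [0, 1, 1, 0, 1, 1], "clipper": [0, 0, 0, 1, 0, 0]}`, the hook has two segments; with `min_gap=1` its one-frame gap is bridged and it counts as a single segment.
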

Figure 3: Timelines (top), heat maps (middle), and trajectories (bottom) of tool usage for the testing videos in m2cai16-tool. In the timelines, (a)-(g) correspond to Grasper, Bipolar, Hook, Scissors, Clipper, Irrigator, and Specimen Bag, respectively.

| GOALS component | Video 1 | Video 2 | Video 3 | Video 4 |
|---|---|---|---|---|
| Depth Perception | 2.67 | 4.67 | 2.33 | 3.67 |
| Bimanual Dexterity | 3.00 | 4.67 | 2.00 | 3.33 |
| Efficiency | 2.00 | 4.67 | 2.33 | 3.00 |
| Tissue Handling | 2.33 | 4.67 | 2.67 | 3.33 |
| Total | 10.00 | 18.67 | 9.33 | 13.33 |

Table 4: Average composite and subcomponent GOALS ratings for the four testing videos.

To study the movement range of the surgical instruments, we generate heat maps of bounding box occurrences and locations; Figure 3 (middle) shows the heat maps for the testing videos. Again, testing video 2 represents the most technically excellent surgery in the test set, reflecting greater economy of motion and handling of instruments within a more focused region of operation.

Finally, automated tool detection and localization enable tool tracking and the mapping of trajectories to study motion patterns. In Figure 3 (bottom), we plot the trajectories of the clipper (blue) and grasper (red) during the clipping phase, one of the most important steps of the cholecystectomy procedure. Reflecting bimanual dexterity, the maps show that the surgeons in testing videos 2 and 3 adeptly maneuver the grasper, in contrast to the trajectory maps for testing videos 1 and 4.

5.4 Validation of approach with GOALS

Three surgeons independently rated 4 of the test videos using a modified version of the GOALS assessment rubric. Test videos 1-4 received average composite scores of 10.00, 18.67, 9.33, and 13.33 out of 20. The composite and subcomponent GOALS scores, such as the efficiency score, directly correlated with the metrics extracted by our algorithm, such as the heat maps and tool trajectories. Table 4 presents the GOALS surgeon ratings for each of the testing videos.
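
The heat maps and tool trajectories that the GOALS ratings were compared against can be derived directly from per-frame bounding boxes. The sketch below is a hypothetical illustration, not the authors' code. It assumes one box per frame for a single tool, in temporal order and in (x1, y1, x2, y2) pixel coordinates. It builds a coarse occupancy heat map by counting which grid-cell centers each box covers, and it measures economy of motion as the total path length of the box center. The grid size `cell` is an arbitrary choice.

```python
import math
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def box_heatmap(boxes: Sequence[Box], width: int, height: int, cell: int = 32) -> List[List[int]]:
    """For each grid cell, count how many boxes cover the cell's center."""
    rows, cols = math.ceil(height / cell), math.ceil(width / cell)
    grid = [[0] * cols for _ in range(rows)]
    for x1, y1, x2, y2 in boxes:
        for r in range(rows):
            cy = (r + 0.5) * cell  # vertical center of this row of cells
            if not (y1 <= cy <= y2):
                continue
            for c in range(cols):
                cx = (c + 0.5) * cell  # horizontal center of this cell
                if x1 <= cx <= x2:
                    grid[r][c] += 1
    return grid


def path_length(boxes: Sequence[Box]) -> float:
    """Total distance travelled by the box center across consecutive frames."""
    centers = [((x1 + x2) / 2, (y1 + y2) / 2) for x1, y1, x2, y2 in boxes]
    return sum(math.dist(p, q) for p, q in zip(centers, centers[1:]))
```

For three boxes `(0, 0, 64, 64)`, `(32, 0, 96, 64)`, `(32, 40, 96, 104)`, the center moves 32 px right and then 40 px down, so `path_length` returns 72.0. A longer procedure accumulates more path length regardless of skill, so comparing videos this way is most meaningful within a fixed phase, such as the clipping phase used for the trajectory plots.
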

6 Conclusions

In this work, we present a new dataset, m2cai16-tool-locations, and an approach based on region-based convolutional neural networks to address the task of spatial tool detection in real-world surgical videos. We show that our method achieves strong performance on the new spatial detection task, outperforms previous work on frame-level presence detection, and runs in real time at 5 fps. Furthermore, it can be used to extract rich surgical assessment metrics, such as tool usage patterns, movement range, and economy of motion, which directly correlate with independent assessments of the same videos by experienced surgeons.

References

[1] T. Weiser, S. Regenbogen, K. Thompson, et al. An estimation of the global volume of surgery: a modelling strategy based on available data. The Lancet, 372(9633):139–144, 2008.

[2] A. Gawande, E. Thomas, M. Zinner, and T. Brennan. The incidence and nature of surgical adverse events in Colorado and Utah in 1992. Surgery, 126(1):66–75, 1999.

[3] M. Healey, S. Shackford, T. Osler, F. Rogers, and E. Burns. Complications in surgical patients. Archives of Surgery, 137(5):611–618, 2002.

[4] A. Kable, R. Gibberd, and A. Spigelman. Adverse events in surgical patients in Australia. International Journal for Quality in Health Care, 14(4):269–276, 2002.

[5] M. Kranzfelder, A. Schneider, A. Fiolka, et al. Real-time instrument detection in minimally invasive surgery using radiofrequency identification technology. Journal of Surgical Research, 185(2):704–710, 2013.

[6] S. Speidel, J. Benzko, S. Krappe, et al. Automatic classification of minimally invasive instruments based on endoscopic image sequences. In SPIE Medical Imaging, pages 72610A–72610A. International Society for Optics and Photonics, 2009.

[7] F. Lalys, L. Riffaud, D. Bouget, and P. Jannin. A framework for the recognition of high-level surgical tasks from video images for cataract surgeries. IEEE Transactions on Biomedical Engineering, 59(4):966–976, 2012.

[8] O. Russakovsky, J. Deng, H. Su, J. Krause, S. Satheesh, S. Ma, Z. Huang, A. Karpathy, A. Khosla, M. Bernstein, et al. ImageNet large scale visual recognition challenge. International Journal of Computer Vision, 115(3):211–252, 2015.

[9] M. Sahu, A. Mukhopadhyay, A. Szengel, and S. Zachow. Tool and phase recognition using contextual CNN features. 2016.

[10] A. Twinanda, D. Mutter, J. Marescaux, M. de Mathelin, and N. Padoy. Single- and multi-task architectures for tool presence detection challenge at M2CAI 2016. arXiv preprint arXiv:1610.08851, 2016.

[11] A. Raju, S. Wang, and J. Huang. M2CAI surgical tool detection challenge report. 2016.

[12] A. Twinanda, S. Shehata, D. Mutter, J. Marescaux, M. de Mathelin, and N. Padoy. EndoNet: A deep architecture for recognition tasks on laparoscopic videos. IEEE Transactions on Medical Imaging, 36(1):86–97, 2016.

[13] S. Ren, K. He, R. Girshick, and J. Sun. Faster R-CNN: Towards real-time object detection with region proposal networks. In Advances in Neural Information Processing Systems, pages 91–99, 2015.

[14] Tool presence detection challenge results. http://camma.u-strasbg.fr/m2cai2016/index.php/tool-presence-detection-challenge-results.

[15] K. Simonyan and A. Zisserman. Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556, 2014.