Device Assignment with Nested Guests and DPDK

1. Device Assignment with Nested Guests and DPDK
Peter Xu <peterx@redhat.com>
Red Hat Virtualization Team

2. Agenda
- Background
- Problems
  - Unsafe userspace device drivers
  - Nested device assignments
- Solution
- Status updates, known issues

3. Background

4. Background
- What is this talk about?
  - DMA of assigned devices (nothing about configuration spaces, IRQs, MMIOs...), and...
  - vIOMMU!
- What is vIOMMU?
  - An IOMMU is an “MMU for I/O”
  - A vIOMMU is the emulated IOMMU in the guest
- What is device assignment?
  - Always the fastest way to do I/O in the guest! (at least when there is no vIOMMU in the guest...)

5. QEMU, Device Assignment & vIOMMU
- QEMU has had device assignment since 2012
- QEMU has had vIOMMU emulation (VT-d) since 2014
- Emulated devices are supported by vIOMMU
  - They use QEMU’s memory API for DMA
  - DMA happens with QEMU’s awareness
  - Either fully emulated or para-virtualized (vhost is special!)
- Assigned devices are not supported by vIOMMU
  - They bypass QEMU’s memory API for DMA
  - DMA happens without QEMU’s awareness
  - Need to talk to the host IOMMU for that
- Why bother?

6. The Problems (Why?)

7. Problem 1: Userspace Drivers
- More userspace drivers!
  - DPDK/SPDK use PMDs (poll mode drivers) to drive devices
- Userspace processes are not trusted
  - Processes can try to access any memory
  - The kernel protects against malicious memory access using the MMU (at least until Meltdown and Spectre…)
- Userspace device drivers are not trusted either!
  - Userspace drivers control devices, bypassing the MMU
  - Need to protect the system on the device’s side

8. Userspace Drivers: MMU Protection
[Diagram: Processes A and B access memory through the MMU and are allowed; Process C's illegal access is denied by the MMU. Memory is safe.]

9. Userspace Drivers: Bypass MMU Protection
[Diagram: Processes A and B are allowed through the MMU; Process C makes an illegal access through a device, which bypasses the MMU. Memory is unsafe.]

10. Userspace Drivers: MMU and IOMMU
[Diagram: Processes A and B are allowed through the MMU; Process C's illegal access through a device is denied by the IOMMU. Memory is safe.]
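
The protection in the last diagram can be sketched as a per-device allow-list of pages. This is only a toy model to illustrate the idea: the `Iommu` class, the device name `nic0`, and the addresses are all made up for illustration and do not correspond to any real API.

```python
PAGE_SIZE = 4096


class Iommu:
    """Toy IOMMU: a device may only DMA to pages explicitly mapped for it."""

    def __init__(self):
        # device name -> set of page frame numbers the device may touch
        self.allowed = {}

    def map_page(self, device, addr):
        """Allow `device` to access the page containing `addr`."""
        self.allowed.setdefault(device, set()).add(addr // PAGE_SIZE)

    def check_dma(self, device, addr):
        """Return True if the DMA is permitted, False if it must be denied."""
        return addr // PAGE_SIZE in self.allowed.get(device, set())


iommu = Iommu()
iommu.map_page("nic0", 0x10000)  # a buffer the userspace driver legitimately owns

dma_ok = iommu.check_dma("nic0", 0x10008)       # inside the mapped page
dma_denied = iommu.check_dma("nic0", 0x200000)  # never mapped, so it is refused
```

The MMU does the same job for CPU accesses made by the process itself; the IOMMU is needed because a device programmed by an untrusted driver issues DMA that never goes through the MMU.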

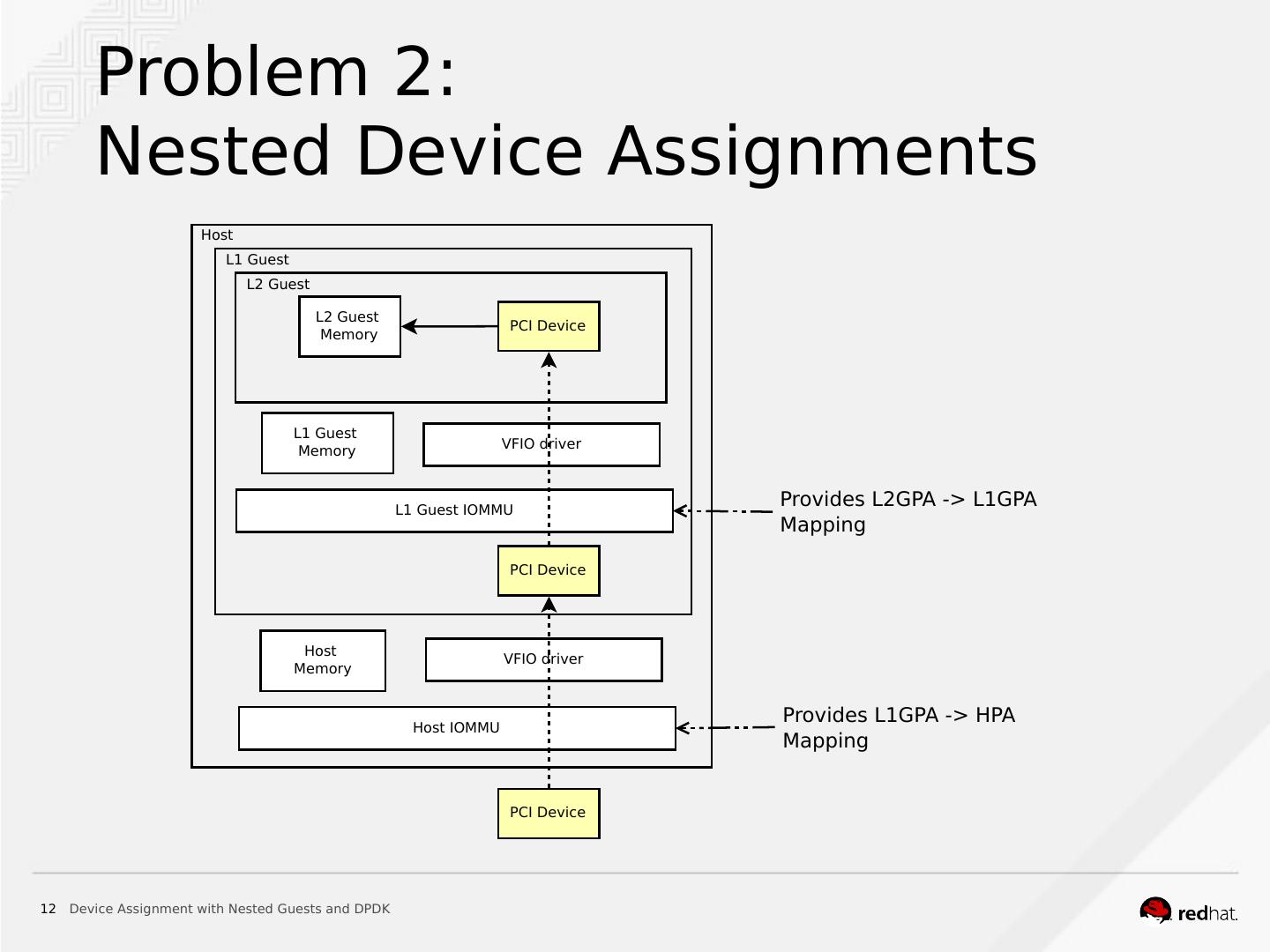

11. Problem 2: Nested Device Assignments
- Terms:
  - HPA: Host Physical Address
  - LnGPA: nth-level Guest Physical Address
- How device assignment works
  - Maps L1GPA → HPA (the L1 guest is unaware of this)
- Can device assignment be nested?
  - What we want in the end: L2GPA → HPA
  - What we have already: L1GPA → HPA
  - Can’t do this without an IOMMU in the L1 guest (L2GPA → L1GPA)!

12. Problem 2: Nested Device Assignments
[Diagram: the L2 guest runs inside the L1 guest, which runs on the host; each level shows a PCI device and memory. The L1 guest VFIO driver uses the L1 guest IOMMU, which provides the L2GPA -> L1GPA mapping. The host VFIO driver uses the host IOMMU, which provides the L1GPA -> HPA mapping.]
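
The two mappings chain together: L2GPA → L1GPA comes from the IOMMU in the L1 guest, and L1GPA → HPA from the host IOMMU. A minimal sketch of that composition, assuming page-granular tables stored as plain dictionaries (the helper names and page numbers are invented for illustration):

```python
PAGE_SIZE = 4096


def translate(table, addr):
    """Translate `addr` through a page-granular table {src_pfn: dst_pfn}."""
    pfn, offset = divmod(addr, PAGE_SIZE)
    return table[pfn] * PAGE_SIZE + offset  # KeyError means "not mapped"


def compose(inner, outer):
    """Combine inner-then-outer tables, e.g. (L2GPA->L1GPA) with (L1GPA->HPA)."""
    return {src: outer[mid] for src, mid in inner.items() if mid in outer}


l2gpa_to_l1gpa = {0x10: 0x80, 0x11: 0x95}    # provided by the L1 guest IOMMU
l1gpa_to_hpa = {0x80: 0x1234, 0x95: 0x4321}  # provided by the host IOMMU

l2gpa_to_hpa = compose(l2gpa_to_l1gpa, l1gpa_to_hpa)
hpa = translate(l2gpa_to_hpa, 0x10 * PAGE_SIZE + 0x2A)
```

Without the first table there is nothing to compose, which is why nesting cannot work unless the L1 guest has an IOMMU.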

13. Summary of Problems
- Unsafe userspace device drivers:
  - Need an IOMMU in the L1 guest to protect the L1 guest kernel from malicious/buggy userspace drivers
- Nested device assignments:
  - Need an IOMMU in the L1 guest to provide the L2GPA to L1GPA mapping, which is finally translated to HPA
- We want device assignment to work under vIOMMU in the guests

14. The Solution (How?)

15. Guest DMA for Emulated Devices, no vIOMMU
[Diagram: inside QEMU, the guest (vCPU, guest memory), an emulated device (e1000/virtio), and the Memory Core API, connected by the numbered steps below.]
- (1) IO request
- (2) Allocate DMA buffer
- (3) DMA request (GPA)
- (4) Memory access (GPA)

16. Guest DMA for Emulated Devices, with vIOMMU
[Diagram: inside QEMU, the guest (vCPU, guest memory), an emulated device (e1000/virtio), the vIOMMU, and the Memory Core API, connected by the numbered steps below.]
- (1) IO request
- (2) Allocate DMA buffer, set up device page table (IOVA->GPA)
- (3) DMA request (IOVA)
- (4) Page translation request (IOVA)
- (5) Look up device page table (IOVA->GPA)
- (6) Get translation result (GPA)
- (7) Complete translation request (GPA)
- (8) Memory access (GPA)
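
The eight steps for an emulated device can be modeled in a few lines. This is a hedged sketch, not QEMU's implementation: `VIommu`, `GuestMemory`, and `dma_write` are hypothetical names, and the write is assumed to stay within a single page.

```python
PAGE_SIZE = 4096


class VIommu:
    """Toy vIOMMU holding the guest-programmed device page table."""

    def __init__(self):
        self.page_table = {}  # IOVA pfn -> GPA pfn, filled in by the guest (step 2)

    def translate(self, iova):
        """Steps 4 to 7: look up the device page table and return the GPA."""
        pfn, offset = divmod(iova, PAGE_SIZE)
        if pfn not in self.page_table:
            raise PermissionError(f"no IOVA->GPA mapping for {iova:#x}")
        return self.page_table[pfn] * PAGE_SIZE + offset


class GuestMemory:
    """Stand-in for the memory API that every emulated-device DMA goes through."""

    def __init__(self, size):
        self.ram = bytearray(size)

    def dma_write(self, viommu, iova, data):
        """Step 3 (DMA request with IOVA) through step 8 (access at GPA)."""
        gpa = viommu.translate(iova)
        self.ram[gpa:gpa + len(data)] = data
        return gpa


viommu = VIommu()
viommu.page_table[0x5] = 0x2  # guest maps IOVA page 5 to GPA page 2
memory = GuestMemory(16 * PAGE_SIZE)
gpa = memory.dma_write(viommu, 0x5010, b"pkt")
```

Because every access passes through `dma_write`, the translation can happen lazily at DMA time. Assigned devices do not pass through this path, which is what the following slides address.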

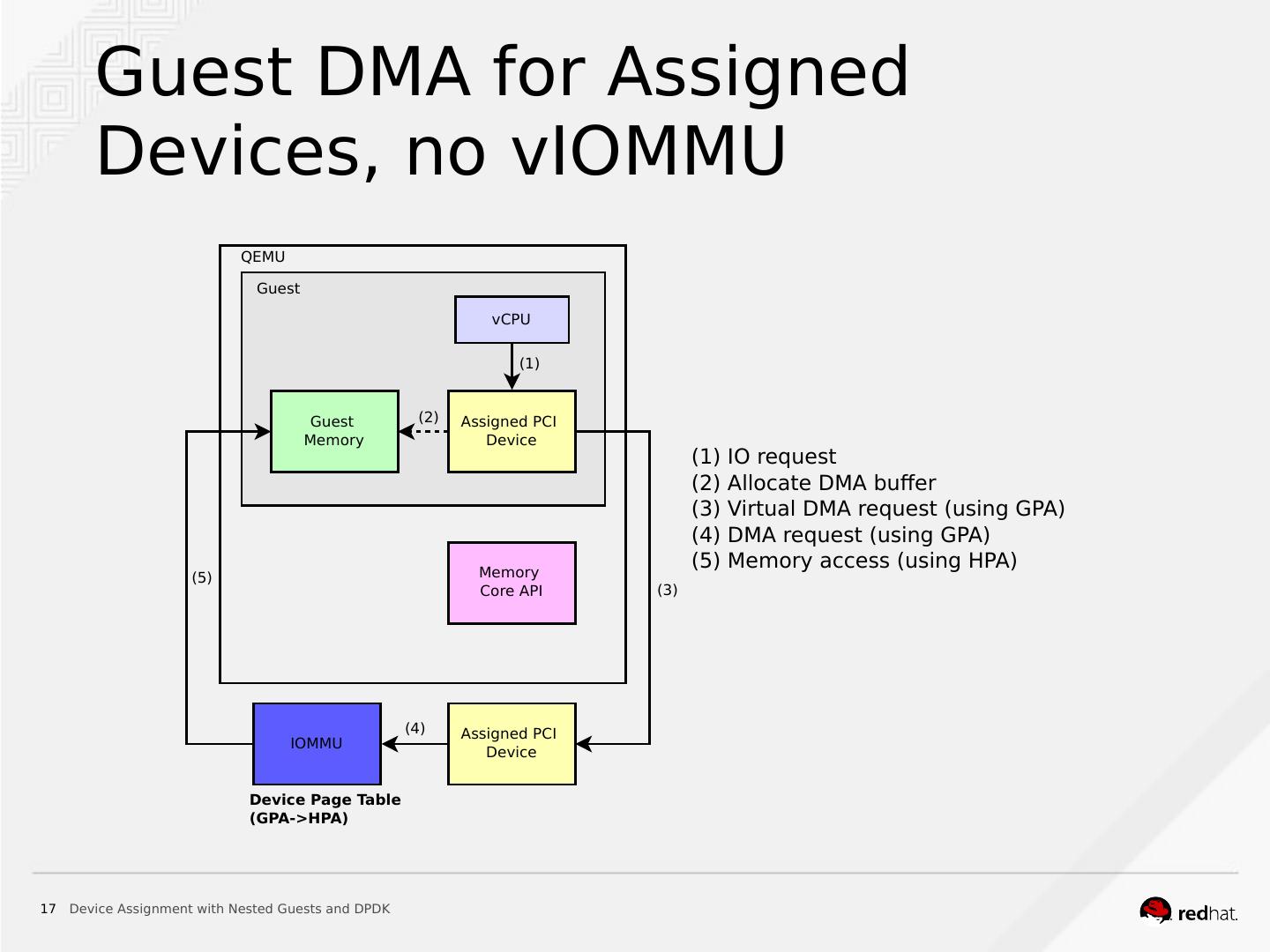

17. Guest DMA for Assigned Devices, no vIOMMU
[Diagram: QEMU contains the guest (vCPU, guest memory, assigned PCI device) and the Memory Core API; outside QEMU are the assigned PCI device and the IOMMU holding the device page table (GPA->HPA), connected by the numbered steps below.]
- (1) IO request
- (2) Allocate DMA buffer
- (3) Virtual DMA request (using GPA)
- (4) DMA request (using GPA)
- (5) Memory access (using HPA)

18. Guest DMA for Assigned Devices, with vIOMMU
[Diagram: QEMU contains the guest (vCPU, guest memory, assigned PCI device), the vIOMMU, and the Memory Core API; outside QEMU are the assigned PCI device and the IOMMU holding the device shadow page table (IOVA->HPA), connected by the numbered steps below.]
- (1) IO request
- (2) Allocate DMA buffer, set up device page table (IOVA->GPA)
- (3) Send MAP notification
- (4) Sync shadow page table (IOVA->HPA)
- (5) Sync complete
- (6) MAP notification complete
- (7) Virtual DMA request (using IOVA)
- (8) DMA request (using IOVA)
- (9) Memory access (using HPA)
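
For assigned devices the translation has to be in place before the DMA happens, so the vIOMMU pushes each mapping into the host IOMMU's shadow table when it is notified. The following is a simplified model of steps 3 to 9 under that assumption; the class and method names are illustrative only and are not QEMU or VFIO interfaces.

```python
PAGE_SIZE = 4096


class HostIommu:
    """Toy hardware IOMMU holding the shadow page table (IOVA pfn -> HPA pfn)."""

    def __init__(self):
        self.shadow = {}

    def dma(self, iova):
        """Steps 8 and 9: the device issues DMA with an IOVA, memory sees an HPA."""
        pfn, offset = divmod(iova, PAGE_SIZE)
        if pfn not in self.shadow:
            raise PermissionError(f"DMA fault at IOVA {iova:#x}")
        return self.shadow[pfn] * PAGE_SIZE + offset


class VIommu:
    """Toy vIOMMU that mirrors guest mappings into the host IOMMU."""

    def __init__(self, gpa_to_hpa, host_iommu):
        self.gpa_to_hpa = gpa_to_hpa  # where guest pages live in host memory
        self.host_iommu = host_iommu

    def map_notify(self, iova_pfn, gpa_pfn):
        """Steps 3 to 6: combine IOVA->GPA with GPA->HPA and install IOVA->HPA."""
        self.host_iommu.shadow[iova_pfn] = self.gpa_to_hpa[gpa_pfn]

    def unmap_notify(self, iova_pfn):
        """Remove the shadow entry when the guest tears the mapping down."""
        self.host_iommu.shadow.pop(iova_pfn, None)


host_iommu = HostIommu()
viommu = VIommu({0x2: 0x900}, host_iommu)
viommu.map_notify(iova_pfn=0x5, gpa_pfn=0x2)
hpa = host_iommu.dma(0x5010)
```

Note that QEMU is only involved in `map_notify` and `unmap_notify`; the `dma` call stands for hardware acting on its own, which is why mappings are slow but I/O is fast.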

19. IOMMU Shadow Page Table
Hardware IOMMU page tables without/with a vIOMMU in the guests (GPA→HPA is the original page table; IOVA→HPA is the shadow page table)
[Diagram: two two-level page table walks. Without vIOMMU (GPA->HPA): the device page table root pointer is indexed by GPA[31:22], the next level by GPA[21:12], and GPA[11:0] is the offset into the data page; table entries hold HPAs. With vIOMMU (IOVA->HPA): the device shadow page table root pointer is walked the same way using IOVA[31:22], IOVA[21:12], and IOVA[11:0].]
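
The 10/10/12 bit split shown in the diagram can be written out as a small two-level walk. This follows the simplified 32-bit layout of the slide rather than the real VT-d page table format, and the table contents are made-up values.

```python
def split(addr):
    """Split a 32-bit address into (level-1 index, level-2 index, page offset)."""
    return (addr >> 22) & 0x3FF, (addr >> 12) & 0x3FF, addr & 0xFFF


def walk(root, addr):
    """Walk a two-level table; leaf entries hold the HPA of a 4 KiB data page."""
    l1_index, l2_index, offset = split(addr)
    return root[l1_index][l2_index] + offset


# The walk is identical with and without a vIOMMU; only the meaning of the
# input address changes (GPA for the original table, IOVA for the shadow one).
shadow_root = {1: {3: 0x7F000}}  # hypothetical IOVA->HPA shadow table

indices = split(0x00403ABC)
hpa = walk(shadow_root, 0x00403ABC)
```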

20. Shadow Page Synchronization
- General solution:
  - Write-protect the whole device page table?
- Actual solution:
  - VT-d caching-mode: any page entry update requires explicit invalidation of caches (VT-d spec chapter 6.1)
  - Intel-only solution; PV-like, but also applies to hardware (is there real hardware that declares caching-mode?)
- Maybe it could be nicer if…?
  - Each invalidation can be marked as MAP or UNMAP
  - Invalidation range can be strict for MAPs

21. Shadow Page Table: MMU vs. IOMMU

| Type | MMU | IOMMU |
| --- | --- | --- |
| Target | Processor memory accesses | Device memory accesses (DMA) |
| Trigger mode (shadow sync) | #PF (Page Faults) | Caching mode (PV?) |
| Code path (shadow sync) | Short (KVM only) | Long (We’ll see...) |
| Page table formats | 32-bits, 64-bits, PAE... | 64-bits only |
| Need previous state? | No | Yes (cares more about page changes[1]) |
| Page faults? | Yes | No (not yet?) |

[1]: Converts new/deleted pages into MAP/UNMAP notifications sent downwards. A fun fact is that we can’t really “modify” an IOMMU page table entry, since there is normally no modify API along the way (please refer to VFIO_IOMMU_[UN]MAP_DMA in the VFIO API, or iommu_[un]map() in the kernel API).
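
Footnote [1] is the reason the IOMMU side needs the previous state: an invalidation does not say what changed, so the new page table has to be compared with the old one to produce MAP and UNMAP notifications. A minimal sketch of that comparison, assuming tables are flat dictionaries of page numbers (the function name and values are illustrative):

```python
def diff_to_notifications(previous, current):
    """Turn two snapshots of {iova_pfn: gpa_pfn} into MAP/UNMAP events."""
    events = []
    # Pages that disappeared are unmapped.
    for iova in sorted(previous.keys() - current.keys()):
        events.append(("UNMAP", iova, previous[iova]))
    # There is no "modify" operation, so a changed entry is UNMAP then MAP.
    for iova in sorted(previous.keys() & current.keys()):
        if previous[iova] != current[iova]:
            events.append(("UNMAP", iova, previous[iova]))
            events.append(("MAP", iova, current[iova]))
    # Pages that appeared are mapped.
    for iova in sorted(current.keys() - previous.keys()):
        events.append(("MAP", iova, current[iova]))
    return events


previous = {1: 10, 2: 20, 3: 30}
current = {2: 20, 3: 33, 4: 40}
events = diff_to_notifications(previous, current)
```

An MMU shadow page table can be rebuilt on demand from page faults, so it has no need for this bookkeeping.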

22. Some Facts...
- Emulated devices vs. assigned devices
  - Emulated: quick mappings, slow IOs
  - Assigned: slow mappings, quick IOs
- Performance (assigned devices + vIOMMU, 10 Gbps NIC)
  - Kernel drivers are slow (>80% degradation)
  - DPDK drivers are as fast as without a vIOMMU
  - Performance in both L1 and L2 guests is close to line speed
  - What matters: whether the mapping is static
- Long code path on shadow page synchronization
  - Reduce context switches? “Yet-Another vhost(-iommu)”?
  - “How long?” Please see the next slide...
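
Why static mappings matter can be illustrated by counting how many shadow synchronizations each style triggers. This counts notifications only and says nothing about measured throughput; the per-packet map/unmap pattern for kernel drivers is a simplifying assumption, and the packet and pool sizes are arbitrary.

```python
class SyncCounter:
    """Counts how often the shadow page table would have to be synchronized."""

    def __init__(self):
        self.syncs = 0

    def map(self, iova_pfn):
        self.syncs += 1  # every MAP notification travels the long code path

    def unmap(self, iova_pfn):
        self.syncs += 1  # and so does every UNMAP


def static_mapping(counter, packets, pool_pages):
    """DPDK-like: map a buffer pool once, then reuse it for every packet."""
    for page in range(pool_pages):
        counter.map(page)
    for _ in range(packets):
        pass  # buffers are already mapped, no notifications needed


def dynamic_mapping(counter, packets):
    """Kernel-driver-like (assumed): map and unmap a buffer for each packet."""
    for page in range(packets):
        counter.map(page)
        counter.unmap(page)


static_counter, dynamic_counter = SyncCounter(), SyncCounter()
static_mapping(static_counter, packets=1000, pool_pages=64)
dynamic_mapping(dynamic_counter, packets=1000)
```

In this model the static case pays a fixed setup cost, while the dynamic case pays on every packet.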

23. “How Long?” (Example: when L2 guest maps one page)
[Diagram: the components involved, by layer. L2 guest: IOMMU driver. QEMU (L2 instance): vIOMMU. L1 kernel: VFIO, KVM, IOMMU driver. QEMU (L1 instance): vIOMMU. Host kernel: VFIO, KVM, IOMMU driver. Host IOMMU.]

24. Status Update

25. Status and Update
- QEMU
  - QEMU 2.12 provided initial support for device assignment with vIOMMU; QEMU 2.13 (3.0) contains some important bug fixes
  - Please use QEMU 2.13 (3.0) or newer
- Linux
  - Linux v4.18 contains a very critical bug fix: 87684fd997a6
  - Please use v4.18-rc1 or newer
- For more information about VT-d emulation in QEMU, please refer to:
  - https://wiki.qemu.org/Features/VT-d
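
As a rough starting point, a guest with a vIOMMU and an assigned device might be launched with arguments along these lines. The slides do not give a command line, so treat this as an assumption based on the commonly documented `intel-iommu` device options (`intremap`, `caching-mode`) and check it against the wiki page above. The host PCI address is a placeholder, and memory, disk, and CPU options are omitted.

```python
def qemu_args(host_bdf):
    """Build a partial QEMU argument list for vIOMMU plus one assigned device."""
    return [
        "qemu-system-x86_64",
        # A split irqchip is needed when interrupt remapping is enabled.
        "-machine", "q35,accel=kvm,kernel-irqchip=split",
        # caching-mode=on makes the guest send explicit invalidations, which is
        # what shadow page synchronization relies on. The vIOMMU is listed
        # before the assigned device.
        "-device", "intel-iommu,intremap=on,caching-mode=on",
        "-device", f"vfio-pci,host={host_bdf}",
    ]


args = qemu_args("0000:01:00.0")  # placeholder host PCI address
command_line = " ".join(args)
```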

26. Known Issues
- Extremely bad performance for dynamically mapped DMA
  - >80% performance drop for kernel drivers
  - DPDK applications are not affected
- Limitation on assigning multiple functions that share a single IOMMU group on the host (when a vIOMMU exists)
  - Currently only a single function can be assigned if multiple functions share the same IOMMU group on the host

27. THANK YOU
- plus.google.com/+RedHat
- facebook.com/redhatinc
- linkedin.com/company/red-hat
- twitter.com/RedHat
- youtube.com/user/RedHatVideos