Processes(1), IPC, Scheduling Parallelism and POSIX Threads

CS194-24 Advanced Operating Systems Structures and Implementation
Lecture 6: Processes (cont'd), IPC, Scheduling Parallelism and POSIX Threads
February 9th, 2014
Prof. John Kubiatowicz
http://inst.eecs.berkeley.edu/~cs194-24

Goals for Today
• Processes, Scheduling (First Take)
• Interprocess Communication
• Multithreading
• Posix support for threads
Interactive is important! Ask Questions!
Note: Some slides and/or pictures in the following are adapted from slides ©2013
2/9/14 Kubiatowicz CS194-24 ©UCB Fall 2014 Lec 6.2

Recall: example of fork(): Web Server
Serial Version:
```c
#include <stdlib.h>      /* exit() */
#include <sys/socket.h>  /* accept() */
#include <sys/wait.h>    /* waitpid(), WNOHANG */
#include <unistd.h>      /* fork(), close() */

/* Helpers left undefined on the slide */
int listen_for_clients(void);
void handle_client_request(int client_fd);

int main() {
  int listen_fd = listen_for_clients();
  while (1) {
    int client_fd = accept(listen_fd, NULL, NULL);
    handle_client_request(client_fd);
    close(client_fd);
  }
}
```
Process Per Request (same headers and helpers):
```c
int main() {
  int status;
  int listen_fd = listen_for_clients();
  while (1) {
    int client_fd = accept(listen_fd, NULL, NULL);
    if (fork() == 0) {
      handle_client_request(client_fd);
      close(client_fd);  // Close FD in child when done
      exit(0);
    } else {
      close(client_fd);  // Close FD in parent
      // Let exited children rest in peace!
      while (waitpid(-1, &status, WNOHANG) > 0);
    }
  }
}
```

Recall: How does fork() actually work in Linux?
• Semantics are that fork() duplicates a lot from the parent:
  – Memory space, File Descriptors, Security Context
• How to make this cheap?
  – Copy on Write!
  – Instead of copying memory, copy page-tables
• Another option: vfork()
  – Same effect as fork(), but page tables not copied
  – Instead, child executes in parent's address space
  – Parent blocked until child calls "exec()" or exits
  – Child not allowed to write to the address space
[Figure: page sharing immediately after fork, and after Process-1 writes Page C]
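The fork() semantics above can be observed from user space: after fork(), parent and child behave as if each owns a private copy of memory, even though Linux shares the pages copy-on-write until one side writes. A minimal sketch, assuming a Linux/POSIX system; `fork_cow_demo` and `counter` are illustrative names, and the child's view is passed back through its exit status only to keep the example short.

```c
#include <assert.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

static int counter = 100;  /* page shared copy-on-write by parent and child after fork() */

/* Forks once. The child increments its copy of counter and reports the new
 * value through its exit status (0..255); the parent then reports the value
 * of its own copy. Returns 0 on success, -1 on error. */
int fork_cow_demo(int *child_value, int *parent_value) {
    assert(child_value && parent_value);
    pid_t pid = fork();
    if (pid < 0)
        return -1;
    if (pid == 0) {
        counter += 1;           /* write fault: the child gets its own copy of the page */
        _exit(counter & 0xFF);  /* exit status carries the child's view back */
    }
    int status;
    if (waitpid(pid, &status, 0) != pid || !WIFEXITED(status))
        return -1;
    *child_value = WEXITSTATUS(status);
    *parent_value = counter;    /* unchanged: the child's write went to its private copy */
    return 0;
}
```

Calling `fork_cow_demo(&c, &p)` leaves `c == 101` and `p == 100`: the child saw its own write, the parent did not.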

Some preliminary notes on Kernel memory
• Many OS implementations (including Linux) map parts of kernel into every address space
  – Allows kernel to see into client processes
    » Transferring data
    » Examining state
• In Linux, Kernel memory not generally visible to user
  – Exception: special VDSO facility that maps kernel code into user space to aid in system calls (and to provide certain actual system calls such as gettimeofday())
• Every physical page described by a "page" structure
  – Collected together in lower physical memory
  – Can be accessed in kernel virtual space
  – Linked together in various "LRU" lists
• For 32-bit virtual memory architectures:
  – When physical memory < 896MB
    » All physical memory mapped at 0xC0000000
  – When physical memory >= 896MB
    » Not all physical memory mapped in kernel space all the time
    » Can be temporarily mapped at kernel virtual addresses above the 896MB direct map (above 0xF8000000)
• For 64-bit virtual memory architectures:
  – All physical memory mapped above 0xFFFF800000000000

Linux Virtual memory map
[Figure: Linux virtual memory map]
• 32-Bit Virtual Address Space:
  – 0x00000000 to 0xC0000000: User Addresses (3GB total)
  – 0xC0000000 to 0xFFFFFFFF: Kernel Addresses (1GB), of which 896MB maps physical addresses
• 64-Bit Virtual Address Space:
  – 0x0000000000000000 to 0x00007FFFFFFFFFFF: User Addresses (128TiB)
  – "Canonical Hole": empty space
  – 0xFFFF800000000000 to 0xFFFFFFFFFFFFFFFF: Kernel Addresses (128TiB), of which 64TiB maps physical addresses

Physical Memory in Linux
• Memory Zones: physical memory categories
  – ZONE_DMA: < 16MB memory, DMAable on ISA bus
  – ZONE_NORMAL: 16MB to 896MB (mapped at 0xC0000000)
  – ZONE_HIGHMEM: Everything else (> 896MB)
• Each zone has 1 freelist, 2 LRU lists (Active/Inactive)
  – Will discuss when we talk about memory paging
• Many different types of allocation
  – SLAB allocators, per-page allocators, mapped/unmapped
• Many different types of allocated memory:
  – Anonymous memory (not backed by a file, heap/stack)
  – Mapped memory (backed by a file)
• Allocation priorities
  – Is blocking allowed/etc.

Kmalloc/Kfree: The "easy interface" to memory
• Simplest kernel interface to manage memory: kmalloc()/kfree()
  – Allocate chunk of memory in kernel's address space (will be physically contiguous and virtually contiguous):
    void *kmalloc(size_t size, gfp_t flags);
  – Example usage:
```c
struct dog *p;

p = kmalloc(sizeof(struct dog), GFP_KERNEL);
if (!p) {
        /* Handle error! (e.g., return -ENOMEM) */
}
```
  – Free memory: void kfree(const void *ptr);
  – Important restrictions!
    » Must call with memory previously allocated through kmalloc() interface!!!
    » Must not free memory twice!
• Alternative: vmalloc()/vfree()
  – Will be only contiguous in virtual address space
  – No guarantee contiguous in physical RAM
    » However, typically only devices care about the difference
  – vmalloc() may be slower than kmalloc(), since kernel must deal with memory mappings
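The 32-bit zone boundaries and the direct mapping at 0xC0000000 described above reduce to simple address arithmetic. The sketch below models them in user space. It assumes the classic 32-bit x86 3GB/1GB split with the slide's 16MB and 896MB boundaries; the names (`zone_of`, `direct_map_va`, `direct_map_pa`, the `MODEL_*` constants) are hypothetical and are not kernel APIs (the kernel's own equivalents are macros such as __va()/__pa(), whose constants depend on configuration).

```c
#include <assert.h>
#include <stdint.h>

/* Illustrative constants for a classic 32-bit x86 layout with a 3GB/1GB split */
#define MODEL_PAGE_OFFSET  0xC0000000u   /* start of the kernel's virtual range */
#define MODEL_DMA_LIMIT    (16u << 20)   /* 16MB: end of ZONE_DMA */
#define MODEL_LOWMEM_LIMIT (896u << 20)  /* 896MB: end of permanently mapped memory */

enum zone_model { MODEL_ZONE_DMA, MODEL_ZONE_NORMAL, MODEL_ZONE_HIGHMEM };

/* Which zone a physical address falls in, per the boundaries on the slide. */
enum zone_model zone_of(uint64_t phys) {
    if (phys < MODEL_DMA_LIMIT)
        return MODEL_ZONE_DMA;
    if (phys < MODEL_LOWMEM_LIMIT)
        return MODEL_ZONE_NORMAL;
    return MODEL_ZONE_HIGHMEM;
}

/* Kernel virtual address of a low-memory (ZONE_DMA or ZONE_NORMAL) physical
 * address: the direct map is a fixed offset. High memory has no permanent
 * mapping, so callers must not pass it here. */
uint32_t direct_map_va(uint64_t phys) {
    assert(zone_of(phys) != MODEL_ZONE_HIGHMEM);
    return MODEL_PAGE_OFFSET + (uint32_t)phys;
}

/* Inverse of direct_map_va(): physical address behind a direct-mapped kernel address. */
uint64_t direct_map_pa(uint32_t va) {
    assert(va >= MODEL_PAGE_OFFSET && va - MODEL_PAGE_OFFSET < MODEL_LOWMEM_LIMIT);
    return va - MODEL_PAGE_OFFSET;
}
```

Under these assumptions the last directly mapped byte (physical 896MB - 1) lands at virtual 0xF7FFFFFF, which leaves only the top 128MB of the kernel's 1GB for temporary mappings of high memory.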

Allocation flags
• Possible allocation type flags:
  – GFP_ATOMIC: Allocation is high-priority and must never sleep. Use in interrupt handlers, top halves, while holding locks, or at any other time the caller cannot sleep
  – GFP_NOWAIT: Like GFP_ATOMIC, except call will not fall back on emergency memory pools. Increases the likelihood of failure
  – GFP_NOIO: Allocation can block but must not initiate disk I/O.
  – GFP_NOFS: Can block, and can initiate disk I/O, but will not initiate filesystem ops.
  – GFP_KERNEL: Normal allocation, might block. Use in process context when safe to sleep. This should be the default choice
  – GFP_USER: Normal allocation for processes
  – GFP_HIGHMEM: Allocation from ZONE_HIGHMEM
  – GFP_DMA: Allocation from ZONE_DMA. Use in combination with a previous flag

More sophisticated memory allocation (more later)
• One (core) mechanism for requesting pages; everything else is built on top of this mechanism:
  – Allocate a contiguous group of 2^order pages (2^order × PAGE_SIZE bytes) given the specified mask:
    struct page *alloc_pages(gfp_t gfp_mask, unsigned int order)
  – Allocate one page:
    struct page *alloc_page(gfp_t gfp_mask)
  – Convert page to logical address (assuming mapped):
    void *page_address(struct page *page)
• Also routines for freeing pages
• Zone allocator uses "buddy" allocator that tries to keep memory unfragmented
• Allocation routines pick from proper zone, given flags

Administrivia
• Groups posted on Website and in Redmine
  – See "Group Membership" link
  – Problems with infrastructure? Let us know!
• Piazza Usage:
  – Excessive posts may not be useful
  – Before asking someone:
    » Try Google or "man"
    » Read the code!
    » Can get function prototypes by looking at header files
• Developing FAQ – please tell us about problems
• Design Document
  – What is in Design Document?
  – BDD => "No Design Document"!?

Multiple Processes Collaborate on a Task
[Figure: Proc 1, Proc 2, and Proc 3 collaborating on a task]
• (Relatively) High Creation/memory Overhead
• (Relatively) High Context-Switch Overhead
• Need Communication mechanism:
  – Separate address spaces isolate processes
  – Shared-Memory Mapping
    » Accomplished by mapping addresses to common DRAM
    » Read and Write through memory
  – Message Passing
    » send() and receive() messages
    » Works across network
  – Pipes, Sockets, Signals, Synchronization primitives, ….
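alloc_pages() takes an order rather than a byte count, and the buddy allocator pairs each free block with a "buddy" of the same order. The sketch below shows the two pieces of arithmetic involved: the smallest order whose 2^order pages cover a request, and the index of a block's buddy (flip bit `order` of the page index). It is a simplified model, not the kernel's code (the kernel provides get_order() for the first computation); the 4096-byte page size and all names here are assumptions for illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define MODEL_PAGE_SIZE ((size_t)4096)  /* assumed page size */

/* Smallest order such that 2^order pages (MODEL_PAGE_SIZE << order bytes) cover size. */
unsigned int order_for_size(size_t size) {
    assert(size > 0 && size <= (SIZE_MAX >> 1));  /* keeps the doubling below from overflowing */
    unsigned int order = 0;
    size_t block = MODEL_PAGE_SIZE;
    while (block < size) {
        block <<= 1;  /* each order step doubles the block */
        order++;
    }
    return order;
}

/* First page index of the buddy of the 2^order-page block starting at page_index. */
size_t buddy_index(size_t page_index, unsigned int order) {
    size_t span = (size_t)1 << order;
    assert((page_index & (span - 1)) == 0);  /* buddy blocks are aligned to their size */
    return page_index ^ span;                /* flip the bit that separates the two buddies */
}

/* First page index of the order+1 block formed when a block and its buddy merge. */
size_t merged_index(size_t page_index, unsigned int order) {
    return page_index & ~((size_t)1 << order);
}
```

For example, a 12KB request needs order 2 (four pages, 16KB), and the order-2 blocks starting at pages 8 and 12 are buddies that merge back into the order-3 block starting at page 8. Keeping blocks size-aligned is what makes the buddy computable with a single XOR.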

Message queues
• What are they?
  – Similar to FIFO pipes, except that a tag (type) is matched when reading/writing
    » Allows cutting in line (I am only interested in a particular type of message)
    » Equivalent to merging multiple FIFO pipes into one
• Creating a message queue:
  – int msgget(key_t key, int msgflg);
  – Key can be any large number. But to avoid using conflicting keys in different programs, use ftok() (the key master).
    » key_t ftok(const char *path, int id);
      • path must point to an existing file that the process can stat()
      • id: project ID; only the low-order 8 bits are used
• Message queue operations:
```c
int msgget(key_t key, int msgflg);
int msgctl(int msqid, int cmd, struct msqid_ds *buf);
int msgsnd(int msqid, const void *msgp, size_t msgsz, int msgflg);
ssize_t msgrcv(int msqid, void *msgp, size_t msgsz, long msgtyp, int msgflg);
```
• Performance advantage is no longer there in newer systems (compared with pipe)

Shared Memory Communication
[Figure: virtual address spaces of Prog 1 and Prog 2 (Code, Data, Heap, Stack), each mapping a common Shared region]
• Communication occurs by "simply" reading/writing to shared address page
  – Really low overhead communication
  – Introduces complex synchronization problems

Shared Memory
Common chunk of read/write memory among processes
[Figure: a shared memory segment (addresses 0 to MAX) identified by a unique key; created once, then attached via a pointer by Proc. 1 through Proc. 5]

Creating Shared Memory
```c
// Create new segment
int shmget(key_t key, size_t size, int shmflg);

// Example:
key_t key;
int shmid;

key = ftok("<somefile>", 'A');
shmid = shmget(key, 1024, 0644 | IPC_CREAT);
```
• Special key: IPC_PRIVATE (create new segment)
• Flags:
  – IPC_CREAT (create new segment)
  – IPC_EXCL (fail if segment with key already exists)
  – Lower 9 bits: permissions used on the new segment
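The type matching that lets a reader "cut in line" can be seen with the message queue calls listed above. A minimal sketch, assuming a Linux system with System V IPC available; it uses IPC_PRIVATE instead of an ftok() key so no file is needed, and `tagged_msg`/`msgq_type_demo` are hypothetical names. A message of type 1 is queued first, yet msgrcv() with msgtyp = 2 returns the type-2 message.

```c
#include <assert.h>
#include <string.h>
#include <sys/ipc.h>
#include <sys/msg.h>

/* Layout expected by msgsnd()/msgrcv(): a long type (> 0) first, then the payload. */
struct tagged_msg {
    long mtype;
    char text[32];
};

/* Queues a type-1 message, then a type-2 message, and receives only type 2.
 * Copies the received text into out. Returns 0 on success, -1 on error. */
int msgq_type_demo(char *out, size_t outlen) {
    assert(out && outlen > 0);
    int qid = msgget(IPC_PRIVATE, IPC_CREAT | 0600);  /* private queue, no ftok() key */
    if (qid < 0)
        return -1;

    struct tagged_msg a = { .mtype = 1 }, b = { .mtype = 2 }, r;
    strcpy(a.text, "routine");
    strcpy(b.text, "urgent");

    int rc = -1;
    /* msgsz counts only the payload, not the mtype field */
    if (msgsnd(qid, &a, sizeof a.text, 0) == 0 &&
        msgsnd(qid, &b, sizeof b.text, 0) == 0 &&
        msgrcv(qid, &r, sizeof r.text, 2, 0) >= 0) {  /* type 2 only, though type 1 came first */
        strncpy(out, r.text, outlen - 1);
        out[outlen - 1] = '\0';
        rc = 0;
    }
    msgctl(qid, IPC_RMID, NULL);  /* queues outlive processes, so remove explicitly */
    return rc;
}
```

Passing msgtyp = 0 instead would return the oldest message ("routine"), which is the plain FIFO behavior of a pipe.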

Attach and Detach Shared Memory
```c
// Attach
void *shmat(int shmid, const void *shmaddr, int shmflg);
// Detach
int shmdt(const void *shmaddr);

// Example:
key_t key;
int shmid;
char *data;

key = ftok("<somefile>", 'A');
shmid = shmget(key, 1024, 0644);
data = shmat(shmid, (void *)0, 0);  // returns (void *)-1 on failure
shmdt(data);
```
• Flags: SHM_RDONLY, SHM_REMAP

Recall: CPU Scheduling
• Earlier, we talked about the life-cycle of a thread
  – Active threads work their way from Ready queue to Running to various waiting queues
• Question: How is the OS to decide which of several tasks to take off a queue?
  – Obvious queue to worry about is ready queue
  – Others can be scheduled as well, however
• Scheduling: deciding which threads are given access to resources from moment to moment

Scheduling Assumptions
• CPU scheduling was a big area of research in the early 70's
• Many implicit assumptions for CPU scheduling:
  – One program per user
  – One thread per program
  – Programs are independent
• Clearly, these are unrealistic but they simplify the problem so it can be solved
  – For instance: is "fair" about fairness among users or among programs?
    » If I run one compilation job and you run five, you get five times as much CPU on many operating systems
• The high-level goal: Dole out CPU time to optimize some desired parameters of system
[Figure: CPU time handed out over time to USER1, USER2, USER3, USER1, USER2]

Assumption: CPU Bursts
[Figure: distribution of CPU burst durations, weighted toward small bursts]
• Execution model: programs alternate between bursts of CPU and I/O
  – Program typically uses the CPU for some period of time, then does I/O, then uses CPU again
  – Each scheduling decision is about which job to give to the CPU for use by its next CPU burst
  – With timeslicing, thread may be forced to give up CPU before finishing current CPU burst
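The shmget()/shmat()/shmdt() calls on the attach/detach slide can be combined with fork() to pass data between processes. A minimal sketch, assuming Linux System V shared memory; it uses IPC_PRIVATE rather than an ftok() key, `shm_fork_demo` is a hypothetical name, and the parent's waitpid() is the only synchronization, which is enough here because the child writes once and exits.

```c
#include <assert.h>
#include <string.h>
#include <sys/ipc.h>
#include <sys/shm.h>
#include <sys/wait.h>
#include <unistd.h>

/* Creates a private 1024-byte segment, lets a forked child write a string
 * into it, and copies what the parent then sees into out.
 * Returns 0 on success, -1 on error. */
int shm_fork_demo(char *out, size_t outlen) {
    assert(out && outlen > 0);
    int shmid = shmget(IPC_PRIVATE, 1024, IPC_CREAT | 0600);
    if (shmid < 0)
        return -1;
    char *data = shmat(shmid, NULL, 0);  /* let the kernel choose the address */
    if (data == (char *)-1) {            /* shmat() reports failure as (void *)-1, not NULL */
        shmctl(shmid, IPC_RMID, NULL);
        return -1;
    }
    data[0] = '\0';

    pid_t pid = fork();                  /* the child inherits the attachment */
    if (pid == 0) {
        strcpy(data, "hello from child"); /* lands in the shared segment, not a private copy */
        shmdt(data);
        _exit(0);
    }
    int rc = -1;
    if (pid > 0 && waitpid(pid, NULL, 0) == pid) {
        strncpy(out, data, outlen - 1);
        out[outlen - 1] = '\0';
        rc = 0;
    }
    shmdt(data);
    shmctl(shmid, IPC_RMID, NULL);       /* destroy the segment once all users detach */
    return rc;
}
```

Unlike ordinary heap memory, which fork() makes copy-on-write, the attached segment stays shared, so the parent reads "hello from child". With concurrent writers, real synchronization would be needed.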

Scheduling Policy Goals/Criteria
• Minimize Response Time
  – Minimize elapsed time to do an operation (or job)
  – Response time is what the user sees:
    » Time to echo a keystroke in editor
    » Time to compile a program
    » Real-time Tasks: Must meet deadlines imposed by World
• Maximize Throughput
  – Maximize operations (or jobs) per second
  – Throughput related to response time, but not identical:
    » Minimizing response time will lead to more context switching than if you only maximized throughput
  – Two parts to maximizing throughput
    » Minimize overhead (for example, context-switching)
    » Efficient use of resources (CPU, disk, memory, etc.)
• Fairness
  – Share CPU among users in some equitable way
  – Fairness is not minimizing average response time:
    » Better average response time by making system less fair

Example: First-Come, First-Served (FCFS) Scheduling
• First-Come, First-Served (FCFS)
  – Also "First In, First Out" (FIFO) or "Run until done"
    » In early systems, FCFS meant one program scheduled until done (including I/O)
    » Now, means keep CPU until thread blocks
• Example: burst times P1 = 24, P2 = 3, P3 = 3
  – Suppose processes arrive in the order: P1, P2, P3
  – Gantt chart for the schedule: P1 runs 0 to 24, P2 runs 24 to 27, P3 runs 27 to 30
  – Waiting time for P1 = 0; P2 = 24; P3 = 27
  – Average waiting time: (0 + 24 + 27)/3 = 17
  – Average completion time: (24 + 27 + 30)/3 = 27
• Convoy effect: short process behind long process

Real-Time Scheduling Priorities
• Efficiency is important but predictability is essential
  – In RTS, performance guarantees are:
    » Task- and/or class-centric
    » Often ensured a priori
  – In conventional systems, performance is:
    » System oriented and often throughput oriented
    » Post-processing (… wait and see …)
  – Real-time is about enforcing predictability, and does not equal fast computing!!!
• Typical metrics:
  – Guarantee miss ratio = 0 (hard real-time)
  – Guarantee Probability(missed deadline) < X% (firm real-time)
  – Minimize miss ratio / maximize completion ratio (firm real-time)
  – Minimize overall tardiness; maximize overall usefulness (soft real-time)
• EDF (Earliest Deadline First), LLF (Least Laxity First), RMS (Rate-Monotonic Scheduling), DM (Deadline Monotonic Scheduling)

Recall: Modern Technique: SMT/Hyperthreading
• Hardware technique
  – Exploit natural properties of superscalar processors to provide illusion of multiple processors
  – Higher utilization of processor resources
• Can schedule each thread as if it were a separate CPU
  – However, not linear speedup!
  – If have multiprocessor, should schedule each processor first
• Scheduling Heuristics?
  – Maximize pipeline throughput
  – Possibly include thread priority
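The FCFS averages in the example above follow from a single pass over the bursts in arrival order. A minimal sketch, assuming all jobs arrive at time 0 and run to completion; `fcfs_averages` is a hypothetical name.

```c
#include <assert.h>
#include <stddef.h>

/* FCFS: jobs run to completion in arrival order (all assumed to arrive at time 0).
 * Computes the average waiting time and average completion time. */
void fcfs_averages(const int *burst, size_t n, double *avg_wait, double *avg_completion) {
    assert(burst && n > 0);
    long clock = 0, total_wait = 0, total_completion = 0;
    for (size_t i = 0; i < n; i++) {
        total_wait += clock;        /* job i waits for every job ahead of it */
        clock += burst[i];
        total_completion += clock;  /* job i finishes when its burst ends */
    }
    *avg_wait = (double)total_wait / n;
    *avg_completion = (double)total_completion / n;
}
```

With the slide's order (24, 3, 3) this yields 17 and 27. If P2 and P3 arrive first (3, 3, 24), the waits become 0, 3, 6 and the completions 3, 6, 30, so the averages drop to 3 and 13, which quantifies the convoy effect.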

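EDF, the first real-time policy listed on the Real-Time Scheduling Priorities slide, always dispatches the ready task whose absolute deadline is nearest. A minimal sketch of the selection step only, using a hypothetical `rt_task` struct and a linear scan; it does not cover admission control or preemption.

```c
#include <assert.h>
#include <stddef.h>

struct rt_task {
    int id;
    long deadline;  /* absolute deadline, in arbitrary time units */
    int ready;      /* nonzero if the task is runnable */
};

/* Earliest Deadline First: index of the ready task with the smallest deadline,
 * or -1 if none is ready. Ties go to the lower index. */
int edf_pick(const struct rt_task *tasks, size_t n) {
    assert(tasks || n == 0);
    int best = -1;
    for (size_t i = 0; i < n; i++) {
        if (!tasks[i].ready)
            continue;
        if (best < 0 || tasks[i].deadline < tasks[best].deadline)
            best = (int)i;
    }
    return best;
}
```

A scheduler that runs this check often would keep ready tasks in a priority queue ordered by deadline rather than scanning the whole array each time.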
Kernel versus User-Mode threads
• We have been talking about Kernel threads (/tasks)
  – Native threads supported directly by the kernel
  – Every thread can run or block independently
  – One process may have several threads waiting on different things
• Downside of kernel threads for many OSes: a bit expensive
  – Need to make a crossing into kernel mode to schedule
  – Linux does make creation of threads pretty inexpensive
• Even lighter weight option: User Threads
  – User program provides scheduler and thread package
  – May have several user threads per kernel thread
  – User threads may be scheduled non-preemptively relative to each other (only switch on yield())
• Downside of user threads:
  – When one thread blocks on I/O, all threads block
  – Kernel cannot adjust scheduling among all threads
  – Option: Scheduler Activations
    » Have kernel inform user level when thread blocks…

Threading models mentioned by Silberschatz book
[Figure: Simple One-to-One, Many-to-One, and Many-to-Many threading models]

Thread Level Parallelism (TLP)
• In modern processors, Instruction Level Parallelism (ILP) exploits implicit parallel operations within a loop or straight-line code segment
• Thread Level Parallelism (TLP) is explicitly represented by the use of multiple threads of execution that are inherently parallel
  – Threads can be on a single processor
  – Or, on multiple processors
• Concurrency vs Parallelism
  – Concurrency is when two tasks can start, run, and complete in overlapping time periods. It doesn't necessarily mean they'll ever both be running at the same instant.
    » For instance, multitasking on a single-threaded machine.
  – Parallelism is when tasks literally run at the same time, e.g., on a multicore processor.
• Goal: Use multiple instruction streams to improve
  – Throughput of computers that run many programs
  – Execution time of multi-threaded programs

Multiprocessing vs Multiprogramming
• Remember Definitions:
  – Multiprocessing: Multiple CPUs
  – Multiprogramming: Multiple Jobs or Processes
  – Multithreading: Multiple threads per Process
• What does it mean to run two threads "concurrently"?
  – Scheduler is free to run threads in any order and interleaving: FIFO, Random, …
  – Dispatcher can choose to run each thread to completion or time-slice in big chunks or small chunks
[Figure: under multiprocessing, threads A, B, C run at the same time on separate CPUs; under multiprogramming, they are interleaved on one CPU (A B C A B C B)]
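Thread level parallelism with POSIX threads can be sketched by splitting one computation across several threads that share no mutable state. A minimal example, assuming a POSIX system (older glibc may need `-pthread` at link time); `parallel_sum`, `sum_job`, and the `MAX_THREADS` cap are hypothetical. Each thread writes only its own `sum_job`, and pthread_join() makes that result safe for the main thread to read.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

#define MAX_THREADS 16

struct sum_job {
    const long *data;
    size_t begin, end;  /* half-open range [begin, end) */
    long result;
};

/* Thread body: sums its own slice; no state is shared with other threads. */
static void *sum_slice(void *arg) {
    struct sum_job *job = arg;
    long s = 0;
    for (size_t i = job->begin; i < job->end; i++)
        s += job->data[i];
    job->result = s;
    return NULL;
}

/* Splits data[0..n) across nthreads POSIX threads and combines the partial sums.
 * Returns 0 on success, -1 if a thread could not be created. */
int parallel_sum(const long *data, size_t n, int nthreads, long *total) {
    assert(nthreads > 0 && nthreads <= MAX_THREADS);
    pthread_t tid[MAX_THREADS];
    struct sum_job jobs[MAX_THREADS];
    int started = 0, rc = 0;
    for (int t = 0; t < nthreads; t++) {
        jobs[t] = (struct sum_job){ data, n * t / nthreads, n * (t + 1) / nthreads, 0 };
        if (pthread_create(&tid[t], NULL, sum_slice, &jobs[t]) != 0) {
            rc = -1;
            break;
        }
        started++;
    }
    *total = 0;
    for (int t = 0; t < started; t++) {
        pthread_join(tid[t], NULL);  /* after join, jobs[t].result is safe to read */
        *total += jobs[t].result;
    }
    return rc;
}
```

Whether the threads actually run in parallel or are merely interleaved on one CPU is up to the scheduler; the result is the same either way because the threads are independent.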

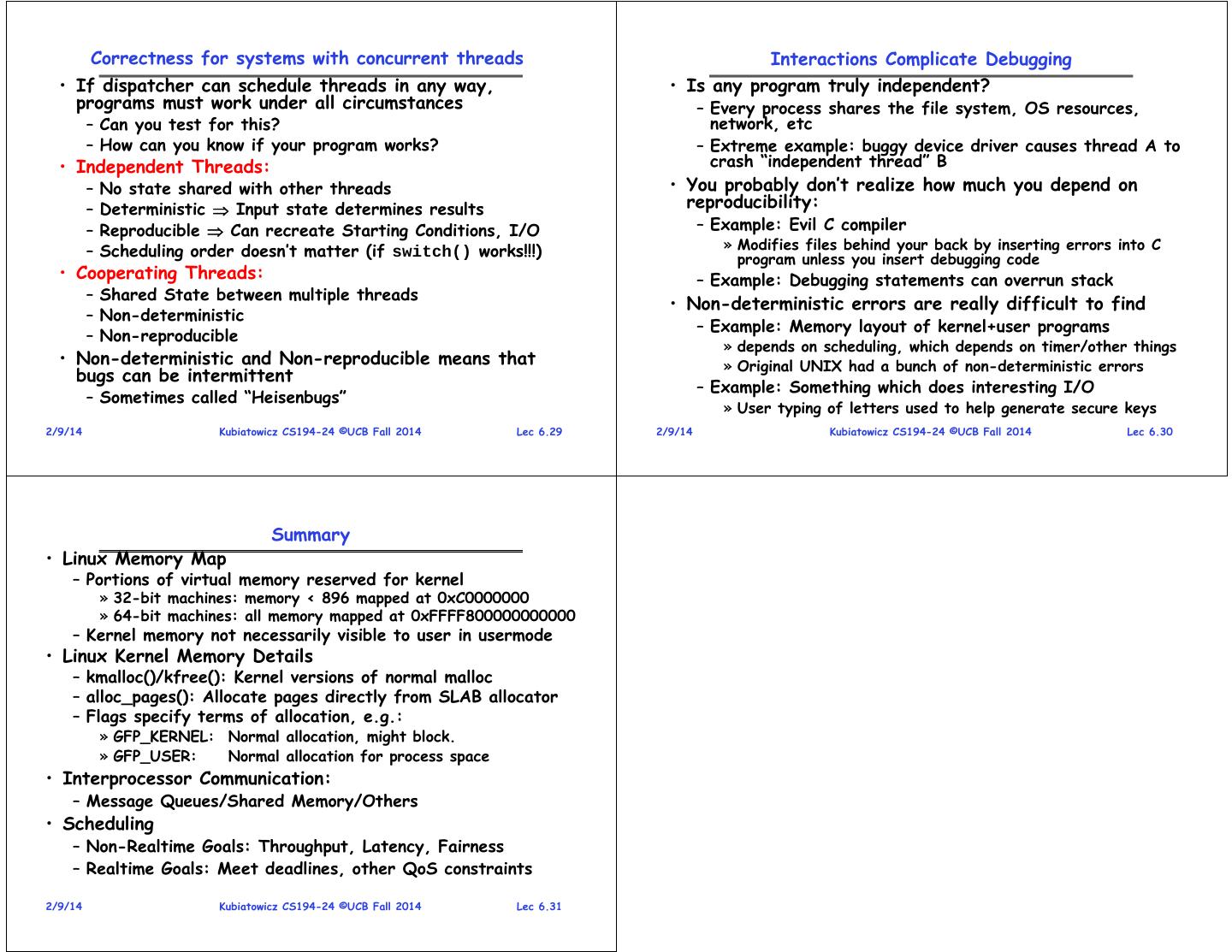

Correctness for systems with concurrent threads
• If dispatcher can schedule threads in any way, programs must work under all circumstances
  – Can you test for this?
  – How can you know if your program works?
• Independent Threads:
  – No state shared with other threads
  – Deterministic: input state determines results
  – Reproducible: can recreate starting conditions, I/O
  – Scheduling order doesn't matter (if switch() works!!!)
• Cooperating Threads:
  – Shared State between multiple threads
  – Non-deterministic
  – Non-reproducible
• Non-deterministic and Non-reproducible means that bugs can be intermittent
  – Sometimes called "Heisenbugs"

Interactions Complicate Debugging
• Is any program truly independent?
  – Every process shares the file system, OS resources, network, etc.
  – Extreme example: buggy device driver causes thread A to crash "independent thread" B
• You probably don't realize how much you depend on reproducibility:
  – Example: Evil C compiler
    » Modifies files behind your back by inserting errors into C program unless you insert debugging code
  – Example: Debugging statements can overrun stack
• Non-deterministic errors are really difficult to find
  – Example: Memory layout of kernel+user programs
    » Depends on scheduling, which depends on timer/other things
    » Original UNIX had a bunch of non-deterministic errors
  – Example: Something which does interesting I/O
    » User typing of letters used to help generate secure keys

Summary
• Linux Memory Map
  – Portions of virtual memory reserved for kernel
    » 32-bit machines: memory < 896MB mapped at 0xC0000000
    » 64-bit machines: all memory mapped at 0xFFFF800000000000
  – Kernel memory not necessarily visible to user in usermode
• Linux Kernel Memory Details
  – kmalloc()/kfree(): Kernel versions of normal malloc
  – alloc_pages(): Allocate pages directly from the zone (buddy) allocator
  – Flags specify terms of allocation, e.g.:
    » GFP_KERNEL: Normal allocation, might block.
    » GFP_USER: Normal allocation for process space
• Interprocess Communication:
  – Message Queues/Shared Memory/Others
• Scheduling
  – Non-Realtime Goals: Throughput, Latency, Fairness
  – Realtime Goals: Meet deadlines, other QoS constraints
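To make the cooperating-threads case concrete: when several threads update one shared counter, an unsynchronized `value++` is a read-modify-write whose outcome depends on interleaving, which is exactly the kind of intermittent bug described above. The sketch below protects the update with a pthread mutex so the final count is deterministic. It assumes a POSIX system; `shared_counter`, `locked_count`, and `MAX_WORKERS` are hypothetical names.

```c
#include <assert.h>
#include <pthread.h>

#define MAX_WORKERS 16

struct shared_counter {
    pthread_mutex_t lock;
    long value;
    long iterations;  /* increments performed by each thread */
};

static void *increment_worker(void *arg) {
    struct shared_counter *c = arg;
    for (long i = 0; i < c->iterations; i++) {
        pthread_mutex_lock(&c->lock);  /* without the lock, concurrent value++ can lose updates */
        c->value++;
        pthread_mutex_unlock(&c->lock);
    }
    return NULL;
}

/* Runs nthreads workers that each add `iterations` to one shared counter.
 * Returns the final value, or -1 if not every thread could be started. */
long locked_count(int nthreads, long iterations) {
    assert(nthreads > 0 && nthreads <= MAX_WORKERS);
    struct shared_counter c = { .value = 0, .iterations = iterations };
    pthread_t tid[MAX_WORKERS];
    int started = 0;
    pthread_mutex_init(&c.lock, NULL);
    for (int t = 0; t < nthreads; t++) {
        if (pthread_create(&tid[t], NULL, increment_worker, &c) != 0)
            break;
        started++;
    }
    for (int t = 0; t < started; t++)
        pthread_join(tid[t], NULL);
    pthread_mutex_destroy(&c.lock);
    return started == nthreads ? c.value : -1;
}
```

With the lock, `locked_count(4, 100000)` always returns 400000. Removing the lock/unlock pair can make the result vary from run to run, and the bug may not show up at all on a lightly loaded or single-CPU machine.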