Queueing Theory (continued) Networks

CS194-24
Advanced Operating Systems Structures and Implementation
Lecture 22: Queueing Theory (continued), Networks
April 23rd, 2014
Prof. John Kubiatowicz
http://inst.eecs.berkeley.edu/~cs194-24

4/23/14 Kubiatowicz CS194-24 ©UCB Fall 2014

Goals for Today
• Queueing Theory (Con't)
• Network Drivers

Interactive is important! Ask Questions!

Note: Some slides and/or pictures in the following are adapted from slides ©2013

Recall: Queueing Behavior
• Performance of disk drive/file system
– Metrics: Response Time, Throughput
– Contributing factors to latency:
» Software paths (can be loosely modeled by a queue)
» Hardware controller
» Physical disk media
• Queuing behavior:
– Leads to big increases of latency as utilization approaches 100%

[Figure: Response Time (ms), 0 to 300, plotted against Throughput (Utilization) (% total BW), 0% to 100%]

Recall: Use of random distributions
• Server spends variable time with customers
– Mean (Average): $m_1 = \sum p(T)\,T$
– Variance: $\sigma^2 = \sum p(T)\,(T - m_1)^2 = \sum p(T)\,T^2 - m_1^2 = E(T^2) - m_1^2$
– Squared coefficient of variance: $C = \sigma^2 / m_1^2$. Aggregate description of the distribution.

[Figure: distribution of service times with the mean ($m_1$) marked, and a memoryless distribution]

• Important values of C:
– No variance or deterministic ⇒ C = 0
– "memoryless" or exponential ⇒ C = 1
» Past tells nothing about future
» Many complex systems (or aggregates) well described as memoryless
– Disk response times: C ≈ 1.5 (majority seeks < avg)
• Mean Residual Wait Time, $m_1(z)$:
– Mean time must wait for server to complete current task
– Can derive $m_1(z) = \tfrac12 m_1 (1 + C)$
» Not just $\tfrac12 m_1$ because that doesn't capture variance
– C = 0 ⇒ $m_1(z) = \tfrac12 m_1$; C = 1 ⇒ $m_1(z) = m_1$
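The factor ½(1 + C) in the mean residual wait time is stated without derivation. One way to see it, assuming the standard residual-life result $m_1(z) = E(T^2)/(2 m_1)$ (an assumption here, not shown on the slide), is to substitute the variance identity $\sigma^2 = E(T^2) - m_1^2$ given above:

```latex
\begin{align*}
m_1(z) &= \frac{E(T^2)}{2\,m_1}
        = \frac{\sigma^2 + m_1^2}{2\,m_1}
        = \tfrac12\, m_1 \left(1 + \frac{\sigma^2}{m_1^2}\right)
        = \tfrac12\, m_1\,(1 + C)
\end{align*}
```

Setting C = 0 (deterministic service) gives ½m1, and C = 1 (memoryless service) gives m1, which agrees with the two special cases listed above.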

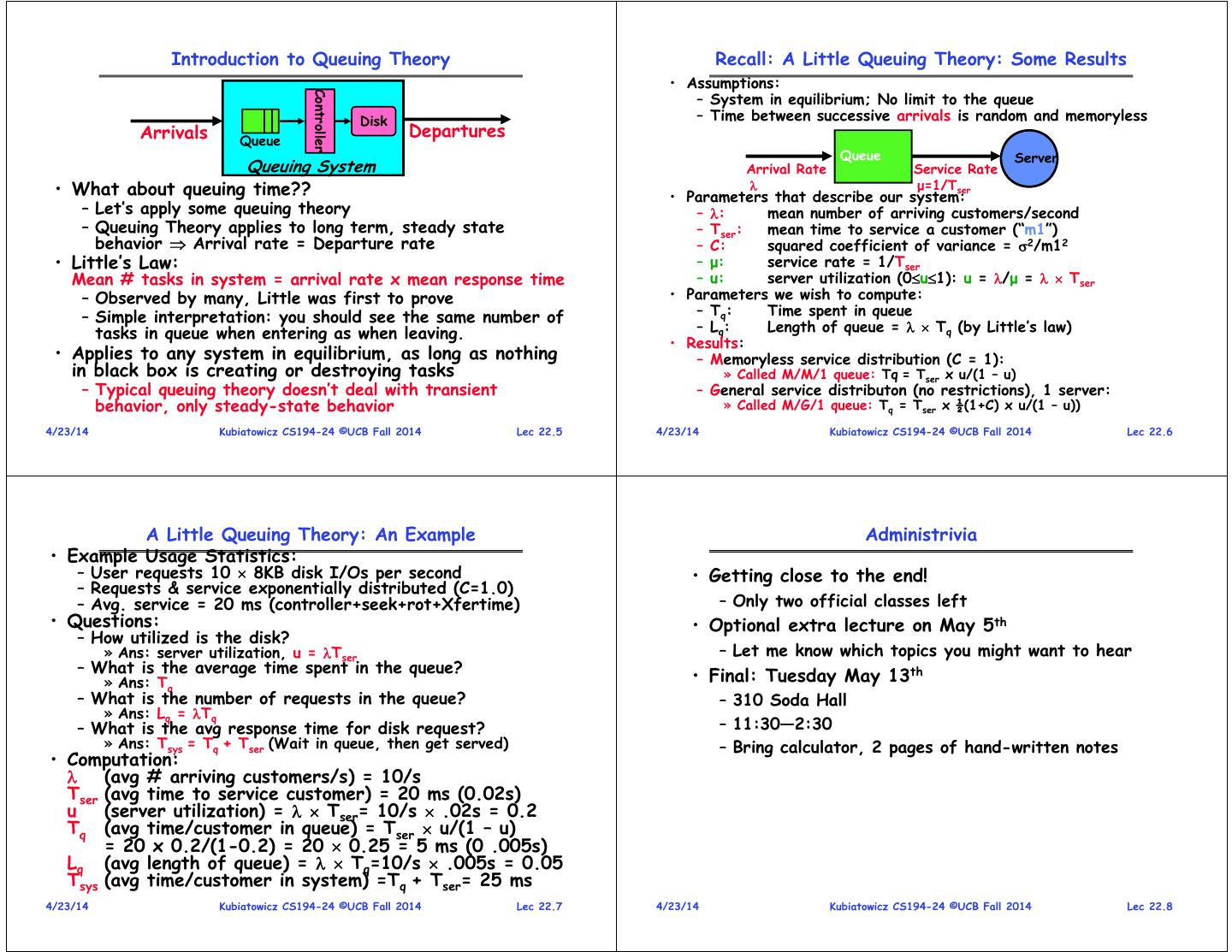

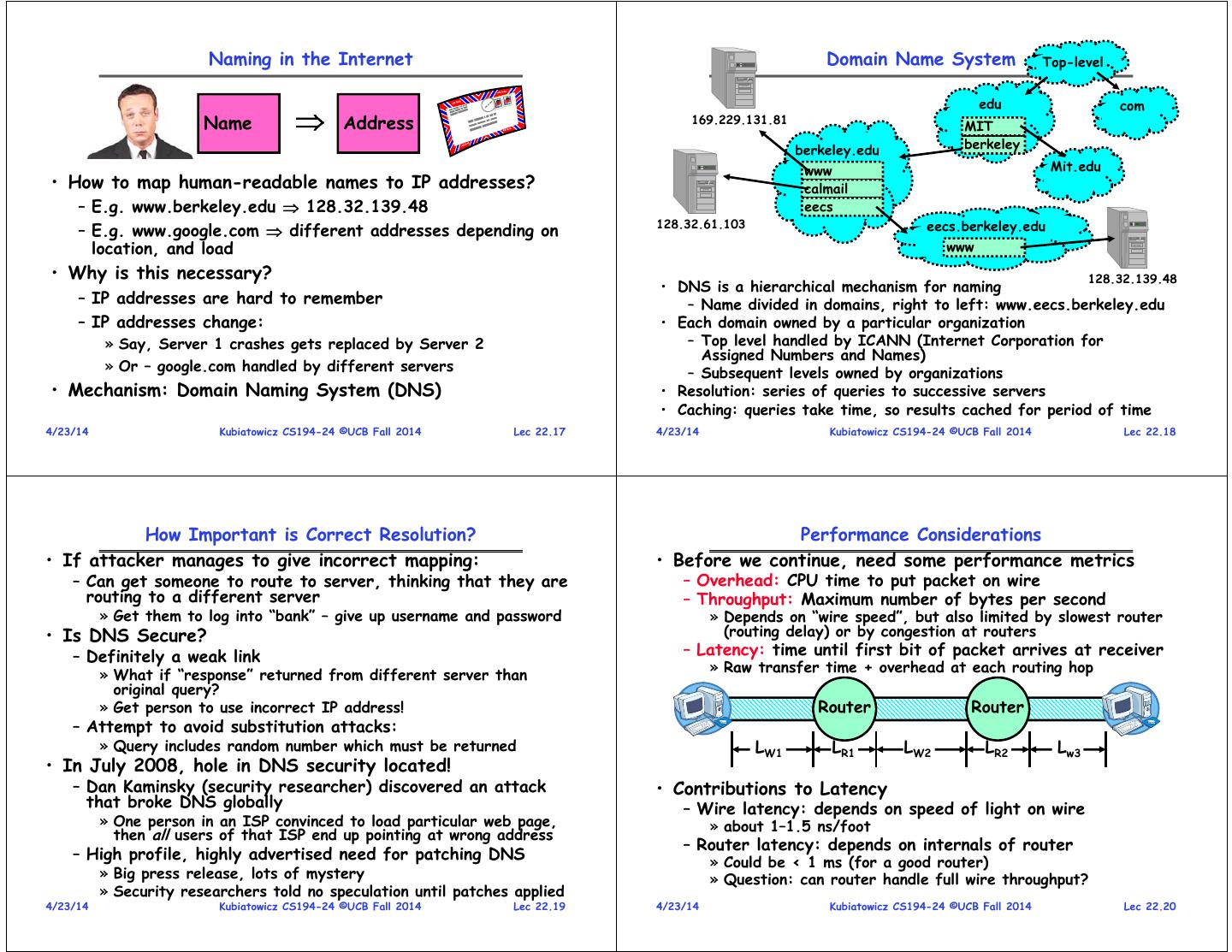

Introduction to Queuing Theory

[Figure: Arrivals → Queue → Server (Controller, Disk) → Departures; the queue and server together form the Queuing System]

• What about queuing time??
– Let's apply some queuing theory
– Queuing Theory applies to long term, steady state behavior ⇒ Arrival rate = Departure rate
• Little's Law: Mean # tasks in system = arrival rate x mean response time
– Observed by many, Little was first to prove
– Simple interpretation: you should see the same number of tasks in queue when entering as when leaving.
• Applies to any system in equilibrium, as long as nothing in black box is creating or destroying tasks
– Typical queuing theory doesn't deal with transient behavior, only steady-state behavior

Recall: A Little Queuing Theory: Some Results
• Assumptions:
– System in equilibrium; No limit to the queue
– Time between successive arrivals is random and memoryless

[Figure: Queue → Server, with Arrival Rate $\lambda$ and Service Rate $\mu = 1/T_{ser}$]

• Parameters that describe our system:
– $\lambda$: mean number of arriving customers/second
– $T_{ser}$: mean time to service a customer ("$m_1$")
– $C$: squared coefficient of variance = $\sigma^2 / m_1^2$
– $\mu$: service rate = $1/T_{ser}$
– $u$: server utilization ($0 \le u \le 1$): $u = \lambda/\mu = \lambda \times T_{ser}$
• Parameters we wish to compute:
– $T_q$: Time spent in queue
– $L_q$: Length of queue = $\lambda \times T_q$ (by Little's law)
• Results:
– Memoryless service distribution (C = 1):
» Called M/M/1 queue: $T_q = T_{ser} \times u/(1 - u)$
– General service distribution (no restrictions), 1 server:
» Called M/G/1 queue: $T_q = T_{ser} \times \tfrac12 (1 + C) \times u/(1 - u)$

A Little Queuing Theory: An Example
• Example Usage Statistics:
– User requests 10 8KB disk I/Os per second
– Requests & service exponentially distributed (C=1.0)
– Avg. service = 20 ms (controller+seek+rot+Xfertime)
• Questions:
– How utilized is the disk?
» Ans: server utilization, $u = \lambda \times T_{ser}$
– What is the average time spent in the queue?
» Ans: $T_q$
– What is the number of requests in the queue?
» Ans: $L_q = \lambda \times T_q$
– What is the avg response time for disk request?
» Ans: $T_{sys} = T_q + T_{ser}$ (Wait in queue, then get served)
• Computation:
– $\lambda$ (avg # arriving customers/s) = 10/s
– $T_{ser}$ (avg time to service customer) = 20 ms (0.02 s)
– $u$ (server utilization) = $\lambda \times T_{ser}$ = 10/s × 0.02 s = 0.2
– $T_q$ (avg time/customer in queue) = $T_{ser} \times u/(1 - u)$ = 20 × 0.2/(1 − 0.2) = 20 × 0.25 = 5 ms (0.005 s)
– $L_q$ (avg length of queue) = $\lambda \times T_q$ = 10/s × 0.005 s = 0.05
– $T_{sys}$ (avg time/customer in system) = $T_q + T_{ser}$ = 25 ms

Administrivia
• Getting close to the end!
– Only two official classes left
• Optional extra lecture on May 5th
– Let me know which topics you might want to hear
• Final: Tuesday May 13th
– 310 Soda Hall
– 11:30 to 2:30
– Bring calculator, 2 pages of hand-written notes
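The disk example above can be checked mechanically. The following is a minimal Java sketch of the M/G/1 result and Little's law as stated on the slides; the class and method names (`QueueingExample`, `queueTime`, and so on) are illustrative choices, not part of the lecture.

```java
/** Steady-state single-server queue formulas from the slides (illustrative names). */
final class QueueingExample {
    /** Utilization u = lambda * Tser (arrival rate times mean service time). */
    static double utilization(double lambda, double tSer) {
        return lambda * tSer;
    }

    /**
     * M/G/1 queueing time: Tq = Tser * 1/2 * (1 + C) * u / (1 - u).
     * With C = 1 this reduces to the M/M/1 result Tq = Tser * u / (1 - u).
     */
    static double queueTime(double lambda, double tSer, double c) {
        double u = utilization(lambda, tSer);
        if (u < 0.0 || u >= 1.0) {
            throw new IllegalArgumentException("steady state requires 0 <= u < 1");
        }
        return tSer * 0.5 * (1.0 + c) * u / (1.0 - u);
    }

    /** Little's law applied to the queue: Lq = lambda * Tq. */
    static double queueLength(double lambda, double tQ) {
        return lambda * tQ;
    }

    public static void main(String[] args) {
        double lambda = 10.0;   // requests per second
        double tSer = 0.020;    // 20 ms mean service time
        double c = 1.0;         // exponential (memoryless) service
        double tQ = queueTime(lambda, tSer, c);
        System.out.printf("u=%.2f Tq=%.1f ms Lq=%.3f Tsys=%.1f ms%n",
                utilization(lambda, tSer), tQ * 1000.0,
                queueLength(lambda, tQ), (tQ + tSer) * 1000.0);
    }
}
```

Raising `lambda` toward 50/s (u approaching 1) makes `queueTime` grow without bound, which is the latency blow-up the queueing-behavior slide describes.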

Networking

[Figure: subnet1, subnet2, and subnet3 joined by routers, with a transcontinental link and connections to other subnets]

• Networking is different from all other facilities in the kernel:
– Events come from outside rather than just from user or kernel
– Security breaches can be initiated by people anywhere
– Interfaces are queue-based and mediated by transport protocols

The Internet Protocol: "IP"
• The Internet is a large network of computers spread across the globe
– According to the Internet Systems Consortium, there were over 681 million computers as of July 2009
– In principle, every host can speak with every other one under the right circumstances
• IP Packet: a network packet on the internet
• IP Address: a 32-bit integer used as the destination of an IP packet
– Often written as four dot-separated integers, with each integer from 0 to 255 (thus representing 8x4=32 bits)
– Example CS file server is: 169.229.60.83 ≡ 0xA9E53C53
• Internet Host: a computer connected to the Internet
– Host has one or more IP addresses used for routing
» Some of these may be private and unavailable for routing
– Not every computer has a unique IP address
» Groups of machines may share a single IP address
» In this case, machines have private addresses behind a "Network Address Translation" (NAT) gateway

Point-to-point networks

[Figure: hosts attached to a Switch, which connects through a Router to the Internet]

• Point-to-point network: a network in which every physical wire is connected to only two computers
• Switch: a bridge that transforms a shared-bus (broadcast) configuration into a point-to-point network.
• Hub: a multiport device that acts like a repeater broadcasting from each input to every output
• Router: a device that acts as a junction between two networks to transfer data packets among them.

Address Subnets
• Subnet: A network connecting a set of hosts with related destination addresses
• With IP, all the addresses in subnet are related by a prefix of bits
– Mask: The number of matching prefix bits
» Expressed as a single value (e.g., 24) or a set of ones in a 32-bit value (e.g., 255.255.255.0)
• A subnet is identified by 32-bit value, with the bits which differ set to zero, followed by a slash and a mask
– Example: 128.32.131.0/24 designates a subnet in which all the addresses look like 128.32.131.XX
– Same subnet: 128.32.131.0/255.255.255.0
• Difference between subnet and complete network range
– Subnet is always a subset of address range
– Once, subnet meant single physical broadcast wire; now, less clear exactly what it means (virtualized by switches)
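The subnet notation above (a 32-bit value plus a prefix length or mask) translates directly into bit operations. Here is a small Java sketch, with hypothetical helper names (`parseIpv4`, `maskFromPrefix`, `inSubnet`), that tests whether an address falls inside a subnet such as 128.32.131.0/24:

```java
/** Dotted-quad parsing and subnet membership (illustrative helper names). */
final class SubnetMatch {
    /** Parses "a.b.c.d" into a 32-bit value held in an int (bit pattern, not sign, matters). */
    static int parseIpv4(String dotted) {
        String[] parts = dotted.split("\\.");
        if (parts.length != 4) {
            throw new IllegalArgumentException("not a dotted quad: " + dotted);
        }
        int value = 0;
        for (String part : parts) {
            int octet = Integer.parseInt(part);
            if (octet < 0 || octet > 255) {
                throw new IllegalArgumentException("octet out of range: " + dotted);
            }
            value = (value << 8) | octet;   // shift in 8 bits per octet
        }
        return value;
    }

    /** Converts a prefix length such as 24 into a mask such as 255.255.255.0. */
    static int maskFromPrefix(int prefixLen) {
        if (prefixLen < 0 || prefixLen > 32) {
            throw new IllegalArgumentException("prefix length must be 0..32");
        }
        // Java masks shift counts to 5 bits, so a shift by 32 must be special-cased.
        return prefixLen == 0 ? 0 : -1 << (32 - prefixLen);
    }

    /** True when the address and the subnet agree on the first prefixLen bits. */
    static boolean inSubnet(String address, String subnet, int prefixLen) {
        int mask = maskFromPrefix(prefixLen);
        return (parseIpv4(address) & mask) == (parseIpv4(subnet) & mask);
    }

    public static void main(String[] args) {
        System.out.println(Integer.toHexString(parseIpv4("169.229.60.83")));
        System.out.println(inSubnet("128.32.131.77", "128.32.131.0", 24));
        System.out.println(inSubnet("128.32.132.77", "128.32.131.0", 24));
    }
}
```

The two forms of the mask on the slide are the same number: `maskFromPrefix(24)` and `parseIpv4("255.255.255.0")` produce identical bit patterns.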

Address Ranges in IP
• IP address space divided into prefix-delimited ranges:
– Class A: NN.0.0.0/8
» NN is 1–126 (126 of these networks)
» 16,777,214 IP addresses per network
» 10.xx.yy.zz is private
» 127.xx.yy.zz is loopback
– Class B: NN.MM.0.0/16
» NN is 128–191, MM is 0-255 (16,384 of these networks)
» 65,534 IP addresses per network
» 172.[16-31].xx.yy are private
– Class C: NN.MM.LL.0/24
» NN is 192–223, MM and LL 0-255 (2,097,152 of these networks)
» 254 IP addresses per network
» 192.168.xx.yy are private
• Address ranges are often owned by organizations
– Can be further divided into subnets

Simple Network Terminology
• Local-Area Network (LAN) – designed to cover small geographical area
– Multi-access bus, ring, or star network
– Speed 10 – 10000 Megabits/second
– Broadcast is fast and cheap
– In small organization, a LAN could consist of a single subnet. In large organizations (like UC Berkeley), a LAN contains many subnets
• Wide-Area Network (WAN) – links geographically separated sites
– Point-to-point connections over long-haul lines (often leased from a phone company)
– Speed 1.544 – 45 Megabits/second
– Broadcast usually requires multiple messages

Routing
• Routing: the process of forwarding packets hop-by-hop through routers to reach their destination
– Need more than just a destination address!
» Need a path
– Post Office Analogy:
» Destination address on each letter is not sufficient to get it to the destination
» To get a letter from here to Florida, must route to local post office, sorted and sent on plane to somewhere in Florida, be routed to post office, sorted and sent with carrier who knows where street and house is…
• Routing mechanism: prefix based using routing tables
– Each router does table lookup to decide which link to use to get packet closer to destination
– Don't need 4 billion entries in table: routing is by subnet
– Could packets be sent in a loop? Yes, if tables incorrect
• Routing table contains:
– Destination address range → output link closer to destination
– Default entry (for subnets without explicit entries)

Setting up Routing Tables
• How do you set up routing tables?
– Internet has no centralized state!
» No single machine knows entire topology
» Topology constantly changing (faults, reconfiguration, etc)
– Need dynamic algorithm that acquires routing tables
» Ideally, have one entry per subnet or portion of address
» Could have "default" routes that send packets for unknown subnets to a different router that has more information
• Possible algorithm for acquiring routing table: OSPF (Open Shortest Path First)
– Routing table has "cost" for each entry
» Includes number of hops to destination, congestion, etc.
» Entries for unknown subnets have infinite cost
– Neighbors periodically exchange routing tables
» If neighbor knows cheaper route to a subnet, replace your entry with neighbor's entry (+1 for hop to neighbor)
• In reality:
– Internet has networks of many different scales
– Different algorithms run at different scales
» E.g. BGP at large scale, OSPF locally, …
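The neighbor-exchange rule above (adopt a neighbor's entry when its cost plus one hop beats yours, treating unknown subnets as infinite cost) can be sketched in a few lines of Java. This is only an illustration of that single update step with hop count as the cost; the `Route` type and `merge` method are hypothetical names, and the sketch does not implement OSPF, BGP, or any real routing daemon.

```java
import java.util.HashMap;
import java.util.Map;

/** One table-exchange step between a router and a neighbor (illustrative only). */
final class RoutingTableExchange {
    /** A routing-table entry: the neighbor to forward to and the cost in hops. */
    static final class Route {
        final String nextHop;
        final int cost;

        Route(String nextHop, int cost) {
            this.nextHop = nextHop;
            this.cost = cost;
        }
    }

    /**
     * Merges a neighbor's table into ours. For each subnet the neighbor knows,
     * the cost through that neighbor is its cost + 1 (the hop to reach it).
     * A subnet missing from our table counts as infinite cost, so it is always adopted.
     * Returns true if any entry changed.
     */
    static boolean merge(Map<String, Route> mine, String neighbor, Map<String, Route> theirs) {
        boolean changed = false;
        for (Map.Entry<String, Route> entry : theirs.entrySet()) {
            int costViaNeighbor = entry.getValue().cost + 1;
            Route current = mine.get(entry.getKey());
            if (current == null || costViaNeighbor < current.cost) {
                mine.put(entry.getKey(), new Route(neighbor, costViaNeighbor));
                changed = true;
            }
        }
        return changed;
    }

    public static void main(String[] args) {
        Map<String, Route> mine = new HashMap<>();
        mine.put("128.32.131.0/24", new Route("direct", 0));
        mine.put("10.0.0.0/8", new Route("B", 5));

        Map<String, Route> fromC = new HashMap<>();
        fromC.put("10.0.0.0/8", new Route("X", 2));
        fromC.put("169.229.0.0/16", new Route("Y", 1));

        merge(mine, "C", fromC);
        for (Map.Entry<String, Route> e : mine.entrySet()) {
            System.out.println(e.getKey() + " via " + e.getValue().nextHop
                    + " cost " + e.getValue().cost);
        }
    }
}
```

Repeating `merge` with an unchanged neighbor table returns false, which is how such an exchange settles once no cheaper routes remain.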

Naming in the Internet

[Figure: Name → Address]

• How to map human-readable names to IP addresses?
– E.g. www.berkeley.edu → 128.32.139.48
– E.g. www.google.com → different addresses depending on location, and load
• Why is this necessary?
– IP addresses are hard to remember
– IP addresses change:
» Say, Server 1 crashes gets replaced by Server 2
» Or – google.com handled by different servers
• Mechanism: Domain Naming System (DNS)

Domain Name System

[Figure: DNS hierarchy. Top-level domains edu and com; below edu are berkeley.edu and Mit.edu; below berkeley.edu are www, calmail, and eecs.berkeley.edu (with its own www). Addresses shown in the figure: 169.229.131.81, 128.32.61.103, 128.32.139.48]

• DNS is a hierarchical mechanism for naming
– Name divided in domains, right to left: www.eecs.berkeley.edu
• Each domain owned by a particular organization
– Top level handled by ICANN (Internet Corporation for Assigned Names and Numbers)
– Subsequent levels owned by organizations
• Resolution: series of queries to successive servers
• Caching: queries take time, so results cached for period of time

How Important is Correct Resolution?
• If attacker manages to give incorrect mapping:
– Can get someone to route to server, thinking that they are routing to a different server
» Get them to log into "bank" – give up username and password
• Is DNS Secure?
– Definitely a weak link
» What if "response" returned from different server than original query?
» Get person to use incorrect IP address!
– Attempt to avoid substitution attacks:
» Query includes random number which must be returned
• In July 2008, hole in DNS security located!
– Dan Kaminsky (security researcher) discovered an attack that broke DNS globally
» One person in an ISP convinced to load particular web page, then all users of that ISP end up pointing at wrong address
– High profile, highly advertised need for patching DNS
» Big press release, lots of mystery
» Security researchers told no speculation until patches applied

Performance Considerations
• Before we continue, need some performance metrics
– Overhead: CPU time to put packet on wire
– Throughput: Maximum number of bytes per second
» Depends on "wire speed", but also limited by slowest router (routing delay) or by congestion at routers
– Latency: time until first bit of packet arrives at receiver
» Raw transfer time + overhead at each routing hop

[Figure: path through two routers with wire latencies LW1, LW2, LW3 and router latencies LR1, LR2]

• Contributions to Latency
– Wire latency: depends on speed of light on wire
» about 1–1.5 ns/foot
– Router latency: depends on internals of router
» Could be < 1 ms (for a good router)
» Question: can router handle full wire throughput?

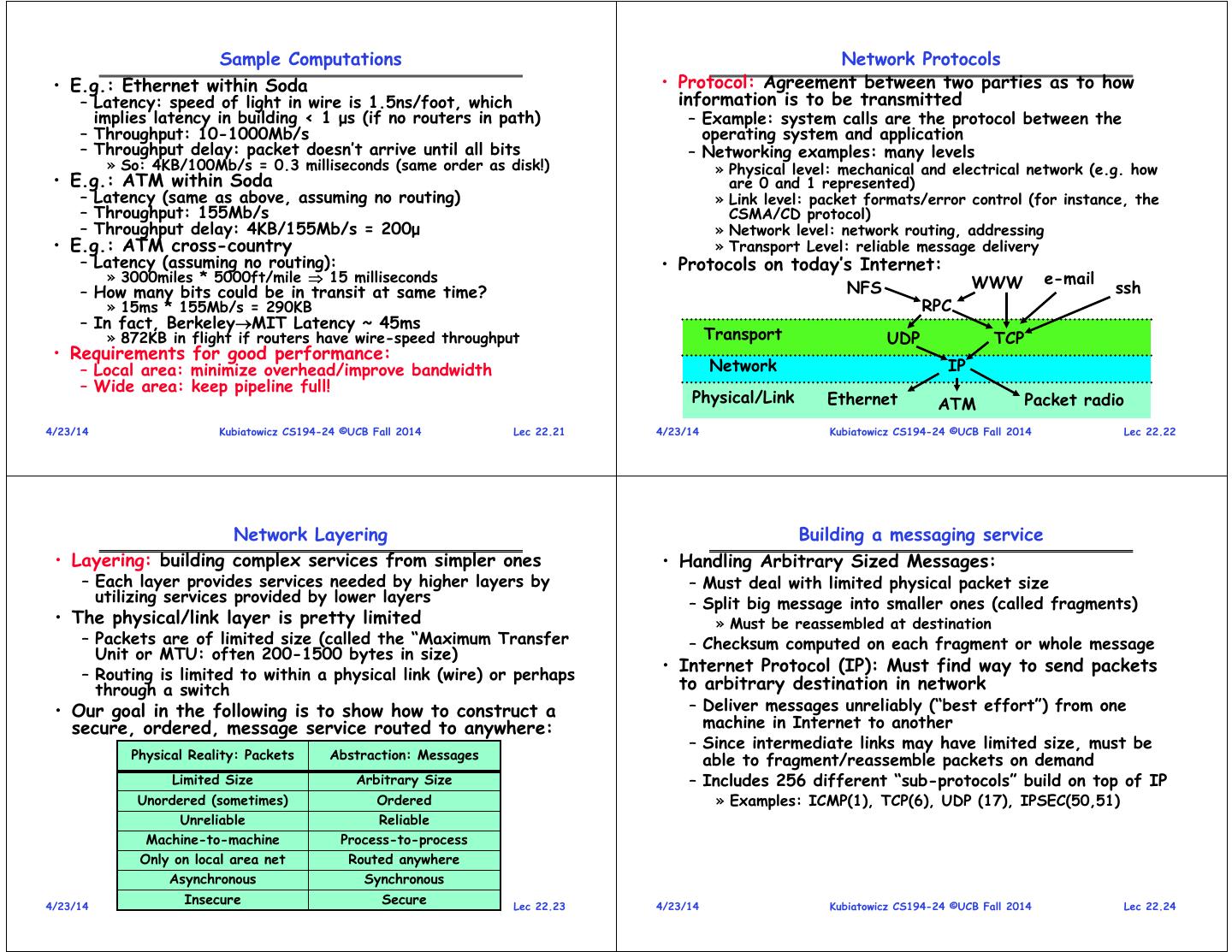

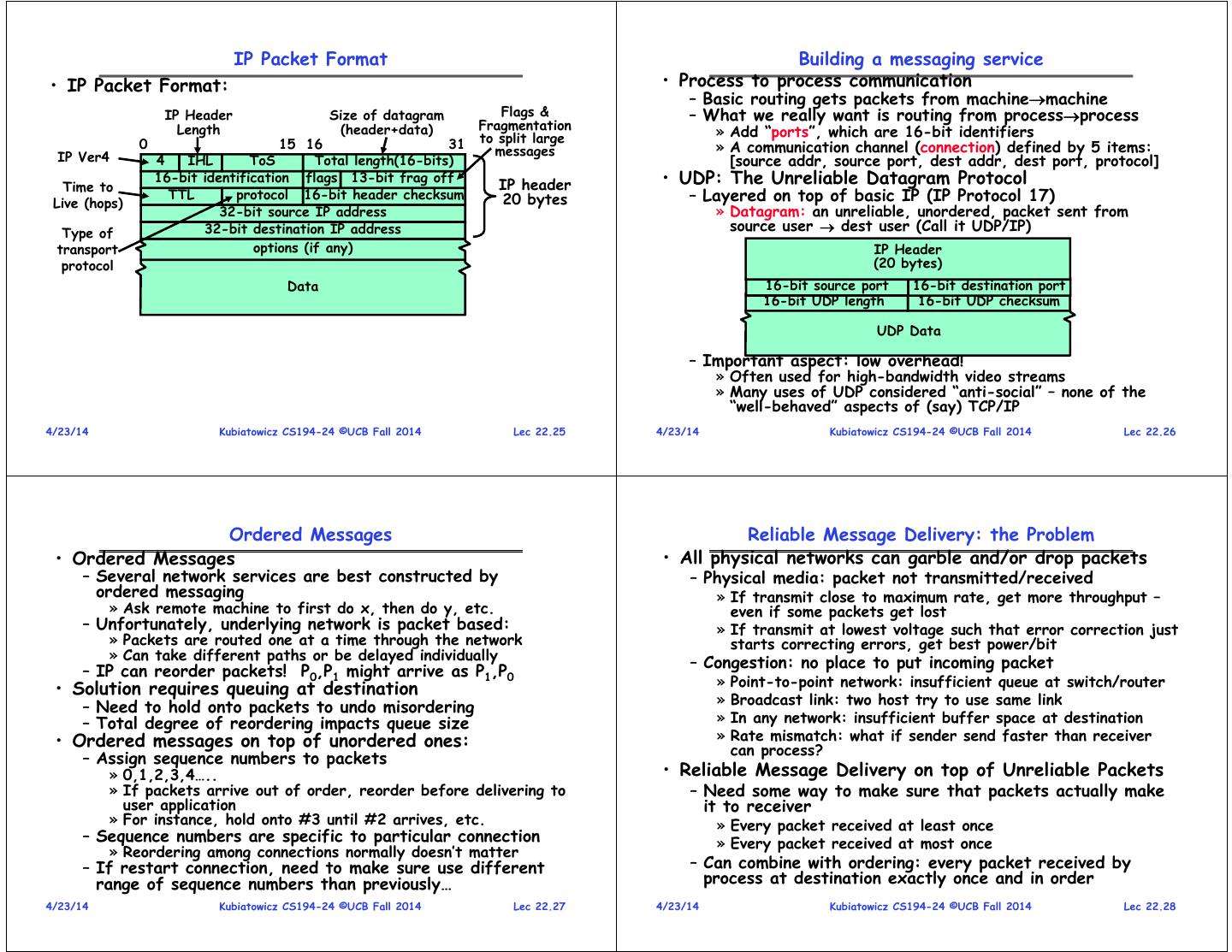

Sample Computations
• E.g.: Ethernet within Soda
– Latency: speed of light in wire is 1.5ns/foot, which implies latency in building < 1 μs (if no routers in path)
– Throughput: 10-1000Mb/s
– Throughput delay: packet doesn't arrive until all bits
» So: 4KB/100Mb/s = 0.3 milliseconds (same order as disk!)
• E.g.: ATM within Soda
– Latency (same as above, assuming no routing)
– Throughput: 155Mb/s
– Throughput delay: 4KB/155Mb/s = 200 μs
• E.g.: ATM cross-country
– Latency (assuming no routing):
» 3000 miles × 5000 ft/mile ⇒ 15 milliseconds
– How many bits could be in transit at same time?
» 15ms × 155Mb/s = 290KB
– In fact, Berkeley→MIT Latency ~ 45ms
» 872KB in flight if routers have wire-speed throughput
• Requirements for good performance:
– Local area: minimize overhead/improve bandwidth
– Wide area: keep pipeline full!

Network Protocols
• Protocol: Agreement between two parties as to how information is to be transmitted
– Example: system calls are the protocol between the operating system and application
– Networking examples: many levels
» Physical level: mechanical and electrical network (e.g. how are 0 and 1 represented)
» Link level: packet formats/error control (for instance, the CSMA/CD protocol)
» Network level: network routing, addressing
» Transport Level: reliable message delivery
• Protocols on today's Internet:

| Layer | Protocols |
|---|---|
| Application | WWW, e-mail, NFS, ssh, RPC |
| Transport | UDP, TCP |
| Network | IP |
| Physical/Link | Ethernet, ATM, Packet radio |

Network Layering
• Layering: building complex services from simpler ones
– Each layer provides services needed by higher layers by utilizing services provided by lower layers
• The physical/link layer is pretty limited
– Packets are of limited size (called the "Maximum Transmission Unit" or MTU: often 200-1500 bytes in size)
– Routing is limited to within a physical link (wire) or perhaps through a switch
• Our goal in the following is to show how to construct a secure, ordered, message service routed to anywhere:

| Physical Reality: Packets | Abstraction: Messages |
|---|---|
| Limited Size | Arbitrary Size |
| Unordered (sometimes) | Ordered |
| Unreliable | Reliable |
| Machine-to-machine | Process-to-process |
| Only on local area net | Routed anywhere |
| Asynchronous | Synchronous |
| Insecure | Secure |

Building a messaging service
• Handling Arbitrary Sized Messages:
– Must deal with limited physical packet size
– Split big message into smaller ones (called fragments)
» Must be reassembled at destination
– Checksum computed on each fragment or whole message
• Internet Protocol (IP): Must find way to send packets to arbitrary destination in network
– Deliver messages unreliably ("best effort") from one machine in Internet to another
– Since intermediate links may have limited size, must be able to fragment/reassemble packets on demand
– Includes 256 different "sub-protocols" built on top of IP
» Examples: ICMP(1), TCP(6), UDP (17), IPSEC(50,51)
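The sample computations on this page boil down to two formulas: transmit time is packet size divided by throughput, and data in flight is latency times throughput. A short Java sketch follows; the names are hypothetical, and it assumes "4KB" means 4096 bytes and "Mb/s" means 10^6 bits per second, which the slides do not spell out.

```java
/** Throughput delay and data-in-flight arithmetic (illustrative names and unit assumptions). */
final class LinkMath {
    /** Seconds needed to push every bit of a packet onto the wire. */
    static double transmitSeconds(long packetBytes, double bitsPerSecond) {
        return packetBytes * 8.0 / bitsPerSecond;
    }

    /** Bytes that fit "in the pipe" at once: latency times throughput, converted to bytes. */
    static double bytesInFlight(double latencySeconds, double bitsPerSecond) {
        return latencySeconds * bitsPerSecond / 8.0;
    }

    public static void main(String[] args) {
        double ethernet = 100e6;  // 100 Mb/s, assuming 1 Mb = 10^6 bits
        double atm = 155e6;       // 155 Mb/s
        long packet = 4096;       // "4KB", assumed to be 4096 bytes

        System.out.printf("Ethernet transmit: %.3f ms%n", transmitSeconds(packet, ethernet) * 1e3);
        System.out.printf("ATM transmit:      %.0f us%n", transmitSeconds(packet, atm) * 1e6);
        System.out.printf("In flight at 15 ms: %.0f bytes%n", bytesInFlight(0.015, atm));
        System.out.printf("In flight at 45 ms: %.0f bytes%n", bytesInFlight(0.045, atm));
    }
}
```

Under these assumptions the results land close to the rounded figures on the slide (roughly 0.3 ms, 200 μs, 290 KB, and 872 KB), which is why a wide-area sender needs hundreds of kilobytes outstanding to keep the pipeline full.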

IP Packet Format
• IP Packet Format (each row is 32 bits wide, bits 0 to 31; the IP header is 20 bytes without options):

| Bits 0–15 | Bits 16–31 |
|---|---|
| 4-bit version (IP Ver4), 4-bit IHL (header length), ToS | Total length, 16 bits (size of datagram: header+data) |
| 16-bit identification | flags, 13-bit frag off (flags & fragmentation to split large messages) |
| TTL (time to live, in hops), protocol (type of transport protocol) | 16-bit header checksum |
| 32-bit source IP address (spans both columns) | |
| 32-bit destination IP address (spans both columns) | |
| options (if any) | |
| Data | |

Building a messaging service
• Process to process communication
– Basic routing gets packets from machine→machine
– What we really want is routing from process→process
» Add "ports", which are 16-bit identifiers
» A communication channel (connection) defined by 5 items: [source addr, source port, dest addr, dest port, protocol]
• UDP: The User Datagram Protocol, an unreliable datagram service
– Layered on top of basic IP (IP Protocol 17)
» Datagram: an unreliable, unordered, packet sent from source user → dest user (Call it UDP/IP)

| UDP/IP packet layout |
|---|
| IP Header (20 bytes) |
| 16-bit source port, 16-bit destination port |
| 16-bit UDP length, 16-bit UDP checksum |
| UDP Data |

– Important aspect: low overhead!
» Often used for high-bandwidth video streams
» Many uses of UDP considered "anti-social" – none of the "well-behaved" aspects of (say) TCP/IP

Ordered Messages
• Ordered Messages
– Several network services are best constructed by ordered messaging
» Ask remote machine to first do x, then do y, etc.
– Unfortunately, underlying network is packet based:
» Packets are routed one at a time through the network
» Can take different paths or be delayed individually
– IP can reorder packets! P0,P1 might arrive as P1,P0
• Solution requires queuing at destination
– Need to hold onto packets to undo misordering
– Total degree of reordering impacts queue size
• Ordered messages on top of unordered ones:
– Assign sequence numbers to packets
» 0,1,2,3,4…..
» If packets arrive out of order, reorder before delivering to user application
» For instance, hold onto #3 until #2 arrives, etc.
– Sequence numbers are specific to particular connection
» Reordering among connections normally doesn't matter
– If restart connection, need to make sure use different range of sequence numbers than previously…

Reliable Message Delivery: the Problem
• All physical networks can garble and/or drop packets
– Physical media: packet not transmitted/received
» If transmit close to maximum rate, get more throughput – even if some packets get lost
» If transmit at lowest voltage such that error correction just starts correcting errors, get best power/bit
– Congestion: no place to put incoming packet
» Point-to-point network: insufficient queue at switch/router
» Broadcast link: two hosts try to use same link
» In any network: insufficient buffer space at destination
» Rate mismatch: what if sender sends faster than receiver can process?
• Reliable Message Delivery on top of Unreliable Packets
– Need some way to make sure that packets actually make it to receiver
» Every packet received at least once
» Every packet received at most once
– Can combine with ordering: every packet received by process at destination exactly once and in order
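The "hold onto #3 until #2 arrives" idea from the Ordered Messages slide can be sketched as a small reorder buffer. This Java sketch is a minimal illustration for a single connection; the class name `ReorderBuffer` and its `receive` method are hypothetical, and it ignores sequence-number wraparound and buffer limits.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Delivers packets to the application in sequence order (illustrative sketch). */
final class ReorderBuffer {
    /** Packets that arrived early, keyed by sequence number. */
    private final Map<Integer, String> pending = new HashMap<>();
    /** The next sequence number the application is waiting for. */
    private int nextExpected;

    ReorderBuffer(int firstSequenceNumber) {
        this.nextExpected = firstSequenceNumber;
    }

    /**
     * Accepts one packet and returns every payload that can now be delivered in order.
     * The list is empty when the packet is a duplicate or arrived ahead of a gap.
     */
    List<String> receive(int seq, String payload) {
        List<String> deliverable = new ArrayList<>();
        if (seq < nextExpected || pending.containsKey(seq)) {
            return deliverable;  // already delivered or already buffered: drop the duplicate
        }
        pending.put(seq, payload);
        // Drain the run of consecutive sequence numbers starting at nextExpected.
        while (pending.containsKey(nextExpected)) {
            deliverable.add(pending.remove(nextExpected));
            nextExpected++;
        }
        return deliverable;
    }

    public static void main(String[] args) {
        ReorderBuffer buffer = new ReorderBuffer(0);
        System.out.println(buffer.receive(0, "P0"));
        System.out.println(buffer.receive(1, "P1"));
        System.out.println(buffer.receive(3, "P3"));  // held: #2 is missing
        System.out.println(buffer.receive(2, "P2"));  // releases #2 and #3 together
    }
}
```

The size of `pending` grows with how far packets can arrive ahead of a gap, which is the "total degree of reordering impacts queue size" point.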

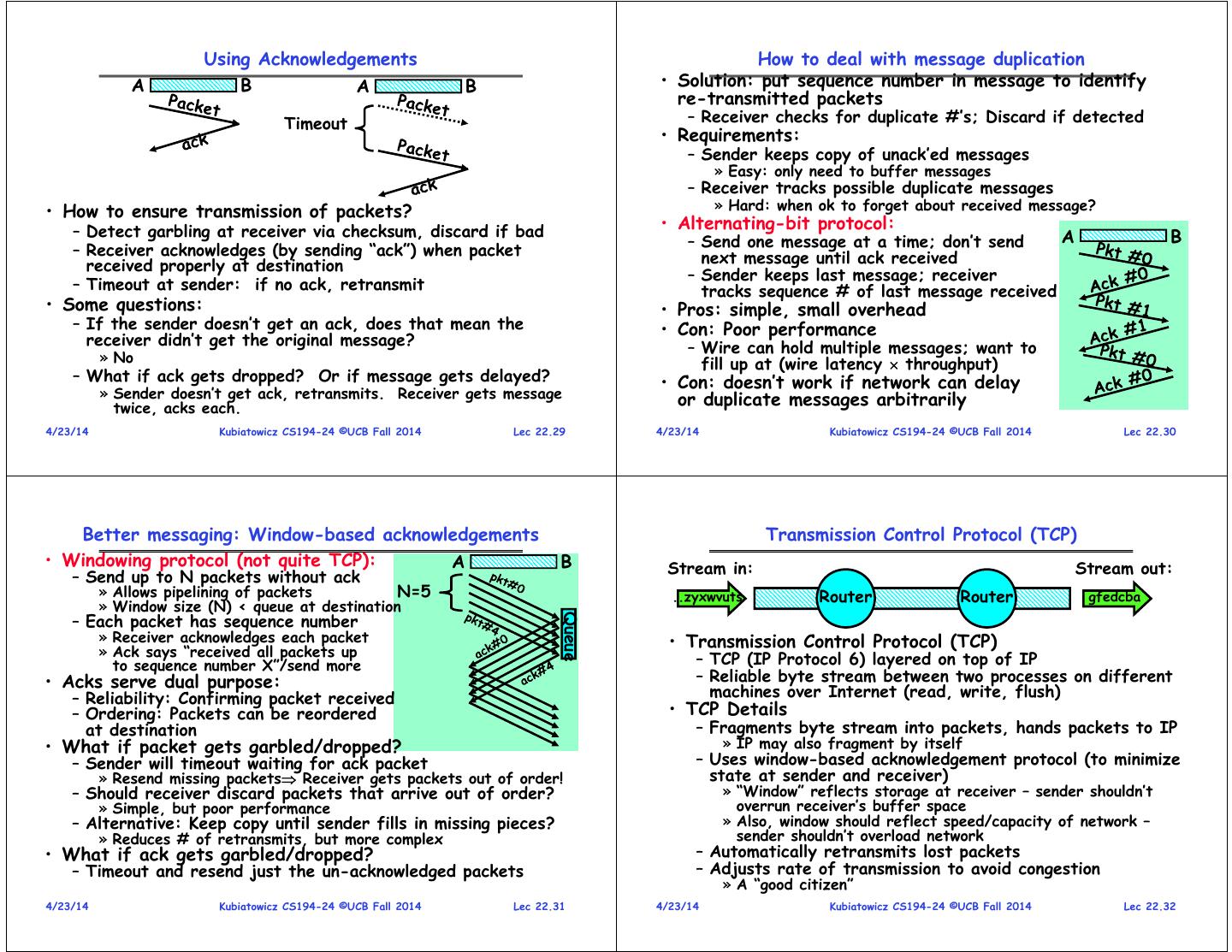

Using Acknowledgements

[Figure: two message exchanges between A and B; in the second, A's timeout expires and it retransmits]

• How to ensure transmission of packets?
– Detect garbling at receiver via checksum, discard if bad
– Receiver acknowledges (by sending "ack") when packet received properly at destination
– Timeout at sender: if no ack, retransmit
• Some questions:
– If the sender doesn't get an ack, does that mean the receiver didn't get the original message?
» No
– What if ack gets dropped? Or if message gets delayed?
» Sender doesn't get ack, retransmits. Receiver gets message twice, acks each.

How to deal with message duplication
• Solution: put sequence number in message to identify re-transmitted packets
– Receiver checks for duplicate #'s; Discard if detected
• Requirements:
– Sender keeps copy of unack'ed messages
» Easy: only need to buffer messages
– Receiver tracks possible duplicate messages
» Hard: when ok to forget about received message?
• Alternating-bit protocol:
– Send one message at a time; don't send next message until ack received
– Sender keeps last message; receiver tracks sequence # of last message received
• Pros: simple, small overhead
• Con: Poor performance
– Wire can hold multiple messages; want to fill up at (wire latency × throughput)
• Con: doesn't work if network can delay or duplicate messages arbitrarily

Better messaging: Window-based acknowledgements

[Figure: A sends a window of N=5 packets to B, which holds them in a queue]

• Windowing protocol (not quite TCP):
– Send up to N packets without ack
» Allows pipelining of packets
» Window size (N) < queue at destination
– Each packet has sequence number
» Receiver acknowledges each packet
» Ack says "received all packets up to sequence number X"/send more
• Acks serve dual purpose:
– Reliability: Confirming packet received
– Ordering: Packets can be reordered at destination
• What if packet gets garbled/dropped?
– Sender will timeout waiting for ack packet
» Resend missing packets ⇒ Receiver gets packets out of order!
– Should receiver discard packets that arrive out of order?
» Simple, but poor performance
– Alternative: Keep copy until sender fills in missing pieces?
» Reduces # of retransmits, but more complex
• What if ack gets garbled/dropped?
– Timeout and resend just the un-acknowledged packets

Transmission Control Protocol (TCP)

[Figure: Stream in (..zyxwvuts) → Router → Router → Stream out (gfedcba)]

• Transmission Control Protocol (TCP)
– TCP (IP Protocol 6) layered on top of IP
– Reliable byte stream between two processes on different machines over Internet (read, write, flush)
• TCP Details
– Fragments byte stream into packets, hands packets to IP
» IP may also fragment by itself
– Uses window-based acknowledgement protocol (to minimize state at sender and receiver)
» "Window" reflects storage at receiver – sender shouldn't overrun receiver's buffer space
» Also, window should reflect speed/capacity of network – sender shouldn't overload network
– Automatically retransmits lost packets
– Adjusts rate of transmission to avoid congestion
» A "good citizen"
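The receiver side of the alternating-bit protocol described above needs only one bit of state: the sequence bit it expects next. The following Java sketch (hypothetical class `AlternatingBitReceiver`) shows how a retransmission caused by a lost ack is acknowledged again but not delivered twice. It assumes, as the slide's caveat requires, that the network does not delay or duplicate messages arbitrarily.

```java
/** Receiver half of an alternating-bit protocol (illustrative sketch). */
final class AlternatingBitReceiver {
    /** Sequence bit (0 or 1) of the next new message. */
    private int expectedBit = 0;
    /** Everything handed to the application so far, in order. */
    private final StringBuilder delivered = new StringBuilder();

    /**
     * Handles one arriving message and returns the bit to send back in the ack.
     * A message carrying the expected bit is new: deliver it and flip the bit.
     * A message carrying the other bit is a retransmission of the previous one,
     * so it is acknowledged again (the earlier ack was probably lost) but not delivered.
     */
    int onMessage(int bit, String payload) {
        if (bit != 0 && bit != 1) {
            throw new IllegalArgumentException("sequence bit must be 0 or 1");
        }
        if (bit == expectedBit) {
            delivered.append(payload);
            expectedBit ^= 1;
        }
        return bit;
    }

    String delivered() {
        return delivered.toString();
    }

    public static void main(String[] args) {
        AlternatingBitReceiver receiver = new AlternatingBitReceiver();
        receiver.onMessage(0, "A");
        receiver.onMessage(0, "A");  // sender timed out and retransmitted
        receiver.onMessage(1, "B");
        System.out.println(receiver.delivered());
    }
}
```

Because the sender waits for each ack before sending the next message, only one message is ever on the wire, which is the poor-performance drawback that motivates the window-based protocol.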

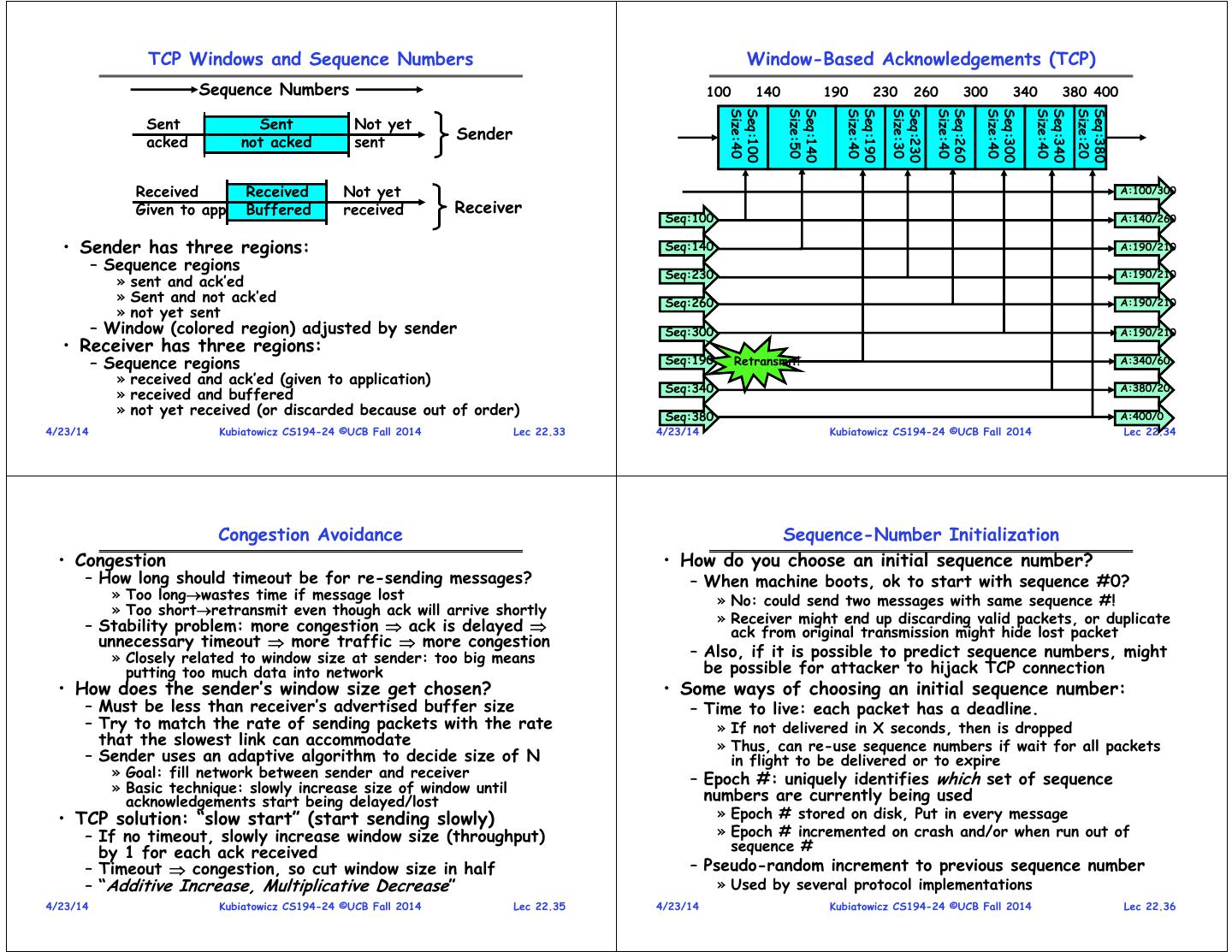

TCP Windows and Sequence Numbers

[Figure: the sequence-number space as seen by each side. Sender: sent and acked | sent, not acked | not yet sent. Receiver: received and given to app | received and buffered | not yet received]

• Sender has three regions:
– Sequence regions
» sent and ack'ed
» sent and not ack'ed
» not yet sent
– Window (colored region) adjusted by sender
• Receiver has three regions:
– Sequence regions
» received and ack'ed (given to application)
» received and buffered
» not yet received (or discarded because out of order)

Window-Based Acknowledgements (TCP)

Segments in the byte stream (sequence numbers 100 to 400):

| Seq | 100 | 140 | 190 | 230 | 260 | 300 | 340 | 380 |
|---|---|---|---|---|---|---|---|---|
| Size | 40 | 50 | 40 | 30 | 40 | 40 | 40 | 20 |

Exchange (acks are written A:next expected sequence number/remaining window; the receiver starts at A:100/300):

| Segment arriving at receiver | Ack returned |
|---|---|
| Seq:100 | A:140/260 |
| Seq:140 | A:190/210 |
| Seq:230 | A:190/210 |
| Seq:260 | A:190/210 |
| Seq:300 | A:190/210 |
| Seq:190 (Retransmit!) | A:340/60 |
| Seq:340 | A:380/20 |
| Seq:380 | A:400/0 |

Congestion Avoidance
• Congestion
– How long should timeout be for re-sending messages?
» Too long ⇒ wastes time if message lost
» Too short ⇒ retransmit even though ack will arrive shortly
– Stability problem: more congestion ⇒ ack is delayed ⇒ unnecessary timeout ⇒ more traffic ⇒ more congestion
» Closely related to window size at sender: too big means putting too much data into network
• How does the sender's window size get chosen?
– Must be less than receiver's advertised buffer size
– Try to match the rate of sending packets with the rate that the slowest link can accommodate
– Sender uses an adaptive algorithm to decide size of N
» Goal: fill network between sender and receiver
» Basic technique: slowly increase size of window until acknowledgements start being delayed/lost
• TCP solution: "slow start" (start sending slowly)
– If no timeout, slowly increase window size (throughput) by 1 for each ack received
– Timeout ⇒ congestion, so cut window size in half
– "Additive Increase, Multiplicative Decrease"

Sequence-Number Initialization
• How do you choose an initial sequence number?
– When machine boots, ok to start with sequence #0?
» No: could send two messages with same sequence #!
» Receiver might end up discarding valid packets, or duplicate ack from original transmission might hide lost packet
– Also, if it is possible to predict sequence numbers, might be possible for attacker to hijack TCP connection
• Some ways of choosing an initial sequence number:
– Time to live: each packet has a deadline.
» If not delivered in X seconds, then is dropped
» Thus, can re-use sequence numbers if wait for all packets in flight to be delivered or to expire
– Epoch #: uniquely identifies which set of sequence numbers are currently being used
» Epoch # stored on disk, Put in every message
» Epoch # incremented on crash and/or when run out of sequence #
– Pseudo-random increment to previous sequence number
» Used by several protocol implementations
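The ack sequence in the Window-Based Acknowledgements exchange on this page (A:140/260, A:190/210 repeated while Seq:190 is missing, then A:340/60 after the retransmit) can be reproduced with a small cumulative-ack receiver. The Java sketch below is a simplified model, not TCP itself: the class name is hypothetical, and it assumes a 300-byte receive buffer starting at sequence number 100 from which the application reads nothing during the trace, so the advertised window is simply the buffer end minus the next expected byte.

```java
import java.util.TreeMap;

/** Cumulative-ack receiver that buffers out-of-order segments (simplified model, not real TCP). */
final class CumulativeAckReceiver {
    /** Out-of-order segments waiting for a gap to fill: start sequence number -> size. */
    private final TreeMap<Integer, Integer> buffered = new TreeMap<>();
    /** First byte not yet received in order. */
    private int nextExpected;
    /** One past the last sequence number the buffer can hold (assumed fixed for the trace). */
    private final int bufferEnd;

    CumulativeAckReceiver(int firstSeq, int bufferBytes) {
        this.nextExpected = firstSeq;
        this.bufferEnd = firstSeq + bufferBytes;
    }

    /** Accepts a segment and returns the ack as "A:<next expected>/<remaining window>". */
    String receive(int seq, int size) {
        // Keep only segments that are not stale and that fit in the buffer.
        if (seq >= nextExpected && seq + size <= bufferEnd) {
            buffered.put(seq, size);
        }
        // Advance across every segment that is now contiguous with what was received.
        Integer length;
        while ((length = buffered.remove(nextExpected)) != null) {
            nextExpected += length;
        }
        return "A:" + nextExpected + "/" + (bufferEnd - nextExpected);
    }

    public static void main(String[] args) {
        CumulativeAckReceiver receiver = new CumulativeAckReceiver(100, 300);
        // Arrival order from the slide: Seq:190 is lost, then retransmitted after Seq:300.
        int[][] arrivals = {
            {100, 40}, {140, 50}, {230, 30}, {260, 40},
            {300, 40}, {190, 40}, {340, 40}, {380, 20}
        };
        for (int[] segment : arrivals) {
            System.out.println("Seq:" + segment[0] + " -> " + receiver.receive(segment[0], segment[1]));
        }
    }
}
```

The repeated A:190/210 acks are what tell the sender that the segment starting at 190 is the missing piece, while the buffered segments spare it from resending 230 through 300.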

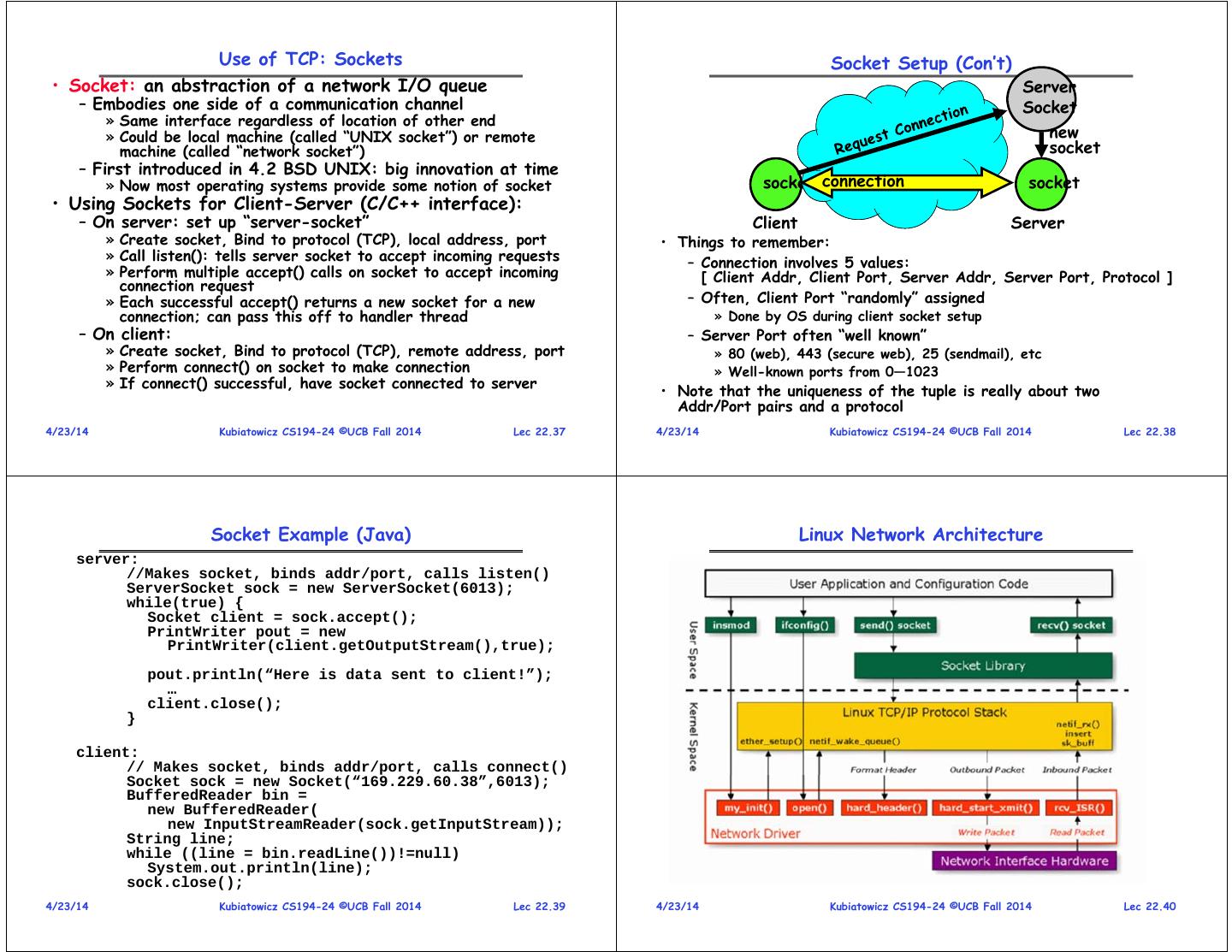

Use of TCP: Sockets
• Socket: an abstraction of a network I/O queue
– Embodies one side of a communication channel
» Same interface regardless of location of other end
» Could be local machine (called "UNIX socket") or remote machine (called "network socket")
– First introduced in 4.2 BSD UNIX: big innovation at time
» Now most operating systems provide some notion of socket
• Using Sockets for Client-Server (C/C++ interface):
– On server: set up "server-socket"
» Create socket, Bind to protocol (TCP), local address, port
» Call listen(): tells server socket to accept incoming requests
» Perform multiple accept() calls on socket to accept incoming connection request
» Each successful accept() returns a new socket for a new connection; can pass this off to handler thread
– On client:
» Create socket, Bind to protocol (TCP), remote address, port
» Perform connect() on socket to make connection
» If connect() successful, have socket connected to server

Socket Setup (Con't)

[Figure: a client socket connects to the server socket; the server creates a new socket for the connection]

• Things to remember:
– Connection involves 5 values: [ Client Addr, Client Port, Server Addr, Server Port, Protocol ]
– Often, Client Port "randomly" assigned
» Done by OS during client socket setup
– Server Port often "well known"
» 80 (web), 443 (secure web), 25 (sendmail), etc
» Well-known ports from 0 to 1023
• Note that the uniqueness of the tuple is really about two Addr/Port pairs and a protocol

Socket Example (Java)

    import java.io.*;
    import java.net.*;

    // server: makes socket, binds addr/port, calls listen()
    ServerSocket sock = new ServerSocket(6013);
    while (true) {
        Socket client = sock.accept();   // blocks until a client connects
        PrintWriter pout = new PrintWriter(client.getOutputStream(), true);
        pout.println("Here is data sent to client!");
        // …
        client.close();
    }

    // client: makes socket, binds addr/port, calls connect()
    Socket sock = new Socket("169.229.60.38", 6013);
    BufferedReader bin = new BufferedReader(
        new InputStreamReader(sock.getInputStream()));
    String line;
    while ((line = bin.readLine()) != null)   // read until the server closes
        System.out.println(line);
    sock.close();

Linux Network Architecture

[Figure: Linux network architecture diagram, not reproduced in this transcript]

Network Details: sk_buff structure
• Socket Buffers: sk_buff structure
– The I/O buffers of sockets are lists of sk_buff
» Pointers to such structures usually called "skb"
– Complex structures with lots of manipulation routines
– Packet is linked list of sk_buff structures

Headers, Fragments, and All That
• The "linear region":
– Space from skb->head to skb->end
– Actual data from skb->data to skb->tail
– Header pointers point to parts of packet
• The fragments (in skb_shared_info):
– Right after skb->end, each fragment has pointer to pages, start of data, and length

Copies, manipulation, etc
• Lots of sk_buff manipulation functions for:
– removing and adding headers, merging data, pulling it up into linear region
– Copying/cloning sk_buff structures

Network Processing Contexts

[Figure: network processing contexts diagram, not reproduced in this transcript]
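The four pointers of the linear region are easiest to follow as index arithmetic. The Java class below is a toy model only: it is not kernel code, and its methods merely imitate what the Linux helpers `skb_reserve`, `skb_put`, `skb_push`, and `skb_pull` do to the `data` and `tail` pointers, with `head` and `end` marking the allocated buffer. The class name and the example sizes are assumptions made for illustration.

```java
/** Toy model of the sk_buff linear region using integer offsets (not kernel code). */
final class SkbModel {
    final int head = 0;   // start of the allocated buffer
    final int end;        // end of the allocated buffer
    int data;             // start of the valid packet bytes
    int tail;             // end of the valid packet bytes

    SkbModel(int size) {
        this.end = size;
        this.data = head;
        this.tail = head;
    }

    /** Like skb_reserve: leave headroom in an empty buffer for headers added later. */
    void reserve(int len) {
        if (tail != data || tail + len > end) throw new IllegalStateException("cannot reserve");
        data += len;
        tail += len;
    }

    /** Like skb_put: extend the data area at the tail (append payload). */
    void put(int len) {
        if (tail + len > end) throw new IllegalStateException("no tailroom");
        tail += len;
    }

    /** Like skb_push: extend the data area at the front (prepend a header). */
    void push(int len) {
        if (data - len < head) throw new IllegalStateException("no headroom");
        data -= len;
    }

    /** Like skb_pull: shrink the data area at the front (strip a header). */
    void pull(int len) {
        if (data + len > tail) throw new IllegalStateException("not enough data");
        data += len;
    }

    int length()   { return tail - data; }
    int headroom() { return data - head; }
    int tailroom() { return end - tail; }

    public static void main(String[] args) {
        SkbModel skb = new SkbModel(128);
        skb.reserve(40);   // room for headers
        skb.put(60);       // 60 bytes of payload
        skb.push(8);       // prepend an 8-byte UDP header
        skb.push(20);      // prepend a 20-byte IP header
        System.out.println("len=" + skb.length() + " headroom=" + skb.headroom()
                + " tailroom=" + skb.tailroom());
    }
}
```

On transmit, each layer pushes its header in front of the payload; on receive, each layer pulls its header off before handing the packet up, all without copying the payload bytes.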

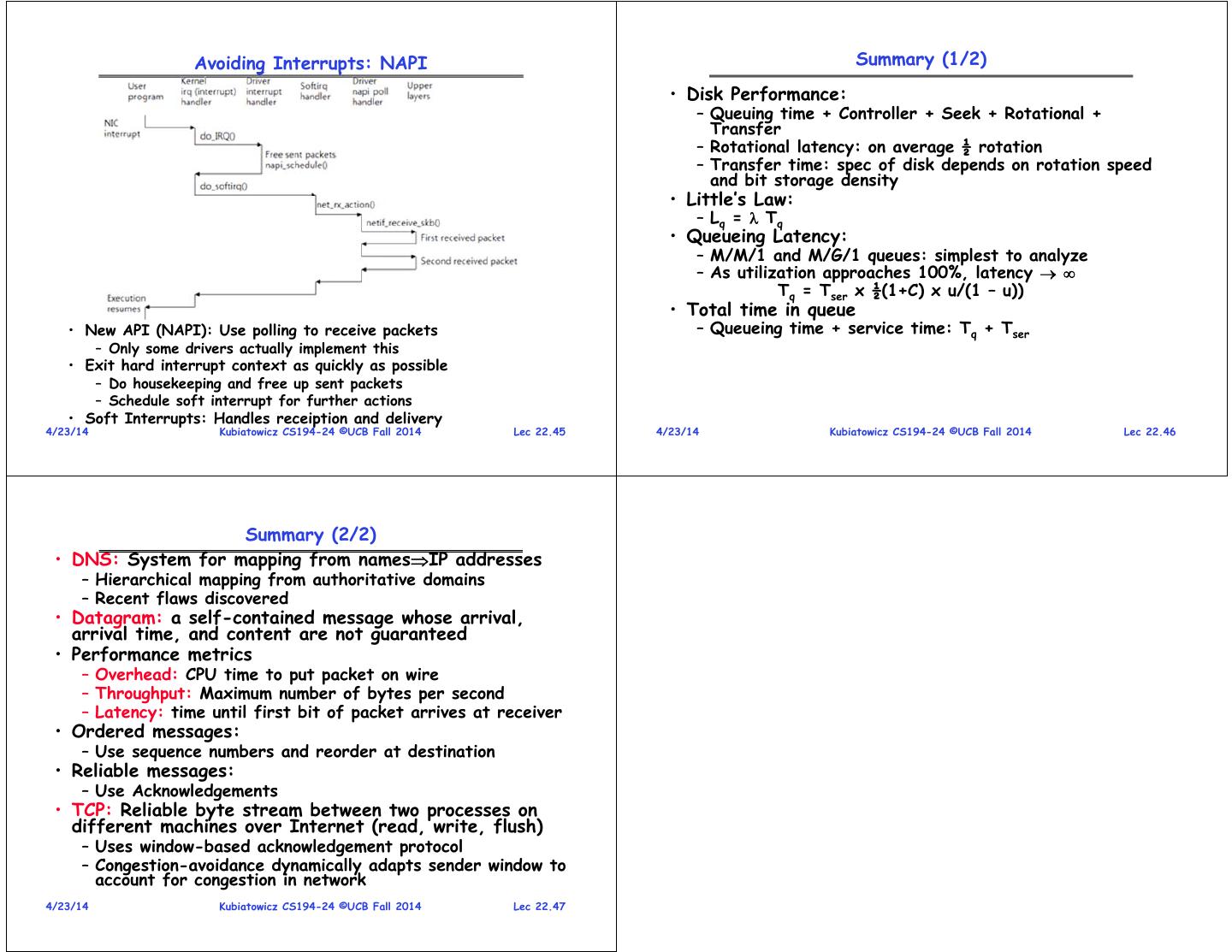

Avoiding Interrupts: NAPI
• New API (NAPI): Use polling to receive packets
– Only some drivers actually implement this
• Exit hard interrupt context as quickly as possible
– Do housekeeping and free up sent packets
– Schedule soft interrupt for further actions
• Soft Interrupts: Handle reception and delivery

Summary (1/2)
• Disk Performance:
– Queuing time + Controller + Seek + Rotational + Transfer
– Rotational latency: on average ½ rotation
– Transfer time: spec of disk depends on rotation speed and bit storage density
• Little's Law:
– $L_q = \lambda \times T_q$
• Queueing Latency:
– M/M/1 and M/G/1 queues: simplest to analyze
– As utilization approaches 100%, latency → ∞: $T_q = T_{ser} \times \tfrac12 (1 + C) \times u/(1 - u)$
• Total time in system
– Queueing time + service time: $T_q + T_{ser}$

Summary (2/2)
• DNS: System for mapping from names → IP addresses
– Hierarchical mapping from authoritative domains
– Recent flaws discovered
• Datagram: a self-contained message whose arrival, arrival time, and content are not guaranteed
• Performance metrics
– Overhead: CPU time to put packet on wire
– Throughput: Maximum number of bytes per second
– Latency: time until first bit of packet arrives at receiver
• Ordered messages:
– Use sequence numbers and reorder at destination
• Reliable messages:
– Use Acknowledgements
• TCP: Reliable byte stream between two processes on different machines over Internet (read, write, flush)
– Uses window-based acknowledgement protocol
– Congestion-avoidance dynamically adapts sender window to account for congestion in network