
Scheduling: Real-Time Scheduling


CS194-24 Advanced Operating Systems Structures and Implementation
Lecture 17: Scheduling (Con’t), Real-Time Scheduling
April 2nd, 2014
Prof. John Kubiatowicz
http://inst.eecs.berkeley.edu/~cs194-24

Goals for Today

- Scheduling (Con’t)
- Realtime Scheduling

Interactive is important! Ask Questions!

Note: Some slides and/or pictures in the following are adapted from slides ©2013

4/2/14 Kubiatowicz CS194-24 ©UCB Fall 2014 Lec 17.2

Recall: Multi-Level Feedback Scheduling

(Figure: long-running compute tasks demoted to low priority.)

- Another method for exploiting past behavior
  - First used in CTSS
  - Multiple queues, each with different priority
    - Higher priority queues often considered “foreground” tasks
  - Each queue has its own scheduling algorithm
    - e.g. foreground: RR, background: FCFS
    - Sometimes multiple RR priorities with quantum increasing exponentially (highest: 1ms, next: 2ms, next: 4ms, etc.)
- Adjust each job’s priority as follows (details vary)
  - Job starts in highest priority queue
  - If timeout expires, drop one level
  - If timeout doesn’t expire, push up one level (or to top)

Case Study: Linux O(1) Scheduler

(Figure: priority range 0 to 139; Kernel/Realtime Tasks occupy 0 to 99, User Tasks occupy 100 to 139.)

- Priority-based scheduler: 140 priorities
  - 40 for “user tasks” (set by “nice”), 100 for “Realtime/Kernel”
  - Lower priority value means higher priority (for nice values)
  - Lower priority value means lower priority (for realtime values)
  - All algorithms O(1)
    - Timeslices/priorities/interactivity credits all computed when job finishes time slice
    - 140-bit bit mask indicates presence or absence of job at given priority level
- Two separate priority queues: the “active queue” and the “expired queue”
  - All tasks in the active queue use up their timeslices and get placed on the expired queue, after which queues swapped
  - However, “interactive tasks” get special dispensation
    - To try to maintain interactivity
    - Placed back into active queue, unless some other task has been starved for too long
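The bit-mask lookup and the active/expired swap described for the O(1) scheduler can be sketched in a few lines of Python. This is an illustrative model only, not kernel code: the class names `PrioArray` and `O1RunQueue` are hypothetical, timeslice accounting and the interactive-task dispensation are omitted, and index 0 is assumed to be the highest internal priority.

```python
from collections import deque

NUM_PRIOS = 140  # 100 realtime/kernel levels + 40 "nice" levels


class PrioArray:
    """One priority array: a bitmap plus one FIFO queue per priority level."""

    def __init__(self):
        self.bitmap = 0  # bit p is set when queues[p] is non-empty
        self.queues = [deque() for _ in range(NUM_PRIOS)]

    def enqueue(self, task, prio):
        self.queues[prio].append(task)
        self.bitmap |= 1 << prio

    def dequeue_highest(self):
        if self.bitmap == 0:
            return None
        # Isolate the lowest set bit: lowest index = highest priority here.
        prio = (self.bitmap & -self.bitmap).bit_length() - 1
        task = self.queues[prio].popleft()
        if not self.queues[prio]:
            self.bitmap &= ~(1 << prio)
        return task, prio


class O1RunQueue:
    """Active and expired arrays; swapped when the active array drains."""

    def __init__(self):
        self.active = PrioArray()
        self.expired = PrioArray()

    def pick_next(self):
        if self.active.bitmap == 0:
            self.active, self.expired = self.expired, self.active
        return self.active.dequeue_highest()

    def timeslice_expired(self, task, prio):
        self.expired.enqueue(task, prio)
```

Picking a task never scans the queues: the cost is one find-first-set operation on a fixed-width bitmap plus a dequeue, which is where the O(1) bound comes from.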

O(1) Scheduler Continued

- Heuristics
  - User-task priority adjusted ±5 based on heuristics
    - p->sleep_avg = sleep_time - run_time
    - Higher sleep_avg means the task is more I/O bound and gets more reward (and vice versa)
  - Interactive Credit
    - Earned when a task sleeps for a “long” time
    - Spent when a task runs for a “long” time
    - IC is used to provide hysteresis to avoid changing interactivity for temporary changes in behavior
- Real-Time Tasks
  - Always preempt non-RT tasks
  - No dynamic adjustment of priorities
  - Scheduling schemes:
    - SCHED_FIFO: preempts other tasks, no timeslice limit
    - SCHED_RR: preempts normal tasks, RR scheduling amongst tasks of same priority

What about Linux “Real-Time Priorities” (0-99)?

- Real-Time Tasks: Strict Priority Scheme
  - No dynamic adjustment of priorities (i.e. no heuristics)
  - Scheduling schemes (actually POSIX.1b):
    - SCHED_FIFO: preempts other tasks, no timeslice limit
    - SCHED_RR: preempts normal tasks, RR scheduling amongst tasks of same priority
- With N processors:
  - Always run N highest priority tasks that are runnable
  - Rebalancing task on every transition:
    - Where to place a task optimally on wakeup?
    - What to do with a lower-priority task when it wakes up but is on a runqueue running a task of higher priority?
    - What to do with a low-priority task when a higher-priority task on the same runqueue wakes up and preempts it?
    - What to do when a task lowers its priority and causes a previously lower-priority task to have the higher priority?
  - Optimized implementation with global bit vectors to quickly identify where to place tasks
- More on this later…

Linux Completely Fair Scheduler (CFS)

- First appeared in 2.6.23, modified in 2.6.24
- “CFS doesn't track sleeping time and doesn't use heuristics to identify interactive tasks; it just makes sure every process gets a fair share of CPU within a set amount of time given the number of runnable processes on the CPU.”
- Inspired by Networking “Fair Queueing”
  - Each process given their fair share of resources
  - Models an “ideal multitasking processor” in which N processes execute simultaneously as if they truly got 1/N of the processor
    - Tries to give each process an equal fraction of the processor
  - Priorities reflected by weights such that increasing a task’s priority by 1 always gives the same fractional increase in CPU time, regardless of current priority

CFS (Continued)

- Idea: track amount of “virtual time” received by each process when it is executing
  - Take real execution time, scale by weighting factor
    - Real time is divided by the task's weight; a lower-priority task has a smaller weight, so its virtual time advances faster
    - Actually: multiply by sum of all weights / current weight
  - Keep virtual time advancing at same rate
- Targeted latency ($T_L$): period of time after which all processes get to run at least a little
  - Each process runs with quantum $(W_p / \sum_i W_i) \times T_L$
  - Never smaller than “minimum granularity”
- Use of Red-Black tree to hold all runnable processes as sorted on vruntime variable
  - O(log n) time to perform insertions/deletions
    - Cache the item at far left (item with earliest vruntime)
  - When ready to schedule, grab version with smallest vruntime (which will be item at the far left).
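The quantum rule for CFS (a weight-proportional share of the targeted latency, floored at the minimum granularity) can be checked numerically. The sketch below is an assumption-laden illustration: `nice_to_weight` approximates the nice-to-weight mapping as 1024 / 1.25^nice rather than using the kernel's lookup table, and both function names are hypothetical.

```python
def nice_to_weight(nice):
    """Approximate weight for a nice value (assumed rule: 1024 / 1.25**nice)."""
    return 1024 / (1.25 ** nice)


def cfs_quanta(nices, target_latency_ms=20.0, min_granularity_ms=1.0):
    """Quantum per task: its weight share of the targeted latency,
    never smaller than the minimum granularity."""
    weights = [nice_to_weight(n) for n in nices]
    total = sum(weights)
    return [max(min_granularity_ms, target_latency_ms * w / total)
            for w in weights]


if __name__ == "__main__":
    print(cfs_quanta([0, 0]))        # two equal-priority CPU-bound tasks
    print(cfs_quanta([0, 5]))        # tasks separated by a nice value of 5
    print(cfs_quanta([0] * 40)[:3])  # 40 tasks hit the minimum granularity
```

With a 20 ms targeted latency, two equal tasks get 10 ms each, a nice difference of 5 gives roughly 15 ms versus 5 ms, and 40 equal tasks would be entitled to only 0.5 ms each, so the 1 ms floor applies and the full rotation takes longer than the target.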

CFS Examples

- Suppose Targeted latency = 20ms, Minimum Granularity = 1ms
- Two CPU bound tasks with same priorities
  - Both switch with 10ms
- Two CPU bound tasks separated by nice value of 5
  - One task gets 5ms, another gets 15ms
- 40 tasks: each gets 1ms (no longer totally fair)
- One CPU bound task, one interactive task, same priority
  - While interactive task sleeps, CPU bound task runs and increments vruntime
  - When interactive task wakes up, runs immediately, since it is behind on vruntime
- Group scheduling facilities (2.6.24)
  - Can give fair fractions to groups (like a user or other mechanism for grouping processes)
  - So, two users, one starts 1 process, other starts 40, each will get 50% of CPU

Characteristics of a RTS

- Extreme reliability and safety
  - Embedded systems typically control the environment in which they operate
  - Failure to control can result in loss of life, damage to environment or economic loss
- Guaranteed response times
  - We need to be able to predict with confidence the worst case response times for systems
  - Efficiency is important but predictability is essential
    - In RTS, performance guarantees are:
      - Task- and/or class centric
      - Often ensured a priori
    - In conventional systems, performance is:
      - System oriented and often throughput oriented
      - Post-processing (… wait and see …)
- Soft Real-Time
  - Attempt to meet deadlines with high probability
  - Important for multimedia applications
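Returning to the CFS example with one CPU-bound and one interactive task: the reason the interactive task runs immediately on wakeup is simply that it sorts first by vruntime. The sketch below uses a binary heap from `heapq` as a stand-in for the red-black tree (an assumption made for brevity), and the names `CfsRunQueue` and `run_for` are hypothetical. It also ignores the adjustment a real scheduler applies to the vruntime of a long-sleeping task.

```python
import heapq


class CfsRunQueue:
    """Runnable tasks ordered by vruntime (heap used in place of an RB tree)."""

    def __init__(self):
        self._heap = []  # entries are (vruntime, task_name)

    def enqueue(self, name, vruntime):
        heapq.heappush(self._heap, (vruntime, name))

    def pick_next(self):
        # Smallest vruntime first: the "leftmost" item of the tree.
        return heapq.heappop(self._heap)


def run_for(rq, ms, weight=1024):
    """Run the leftmost task for `ms` of real time, charge it virtual time
    scaled by 1024 / weight, and put it back on the queue."""
    vruntime, name = rq.pick_next()
    rq.enqueue(name, vruntime + ms * 1024 / weight)
    return name


if __name__ == "__main__":
    rq = CfsRunQueue()
    rq.enqueue("cpu_bound", 0.0)
    for _ in range(3):            # interactive task is asleep, not queued
        run_for(rq, 10)
    rq.enqueue("interactive", 0.0)  # wakes up with its old, small vruntime
    print(rq.pick_next())
```

After three 10 ms slices the CPU-bound task has a vruntime of 30, so the freshly woken interactive task, still at 0, is the next one picked.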

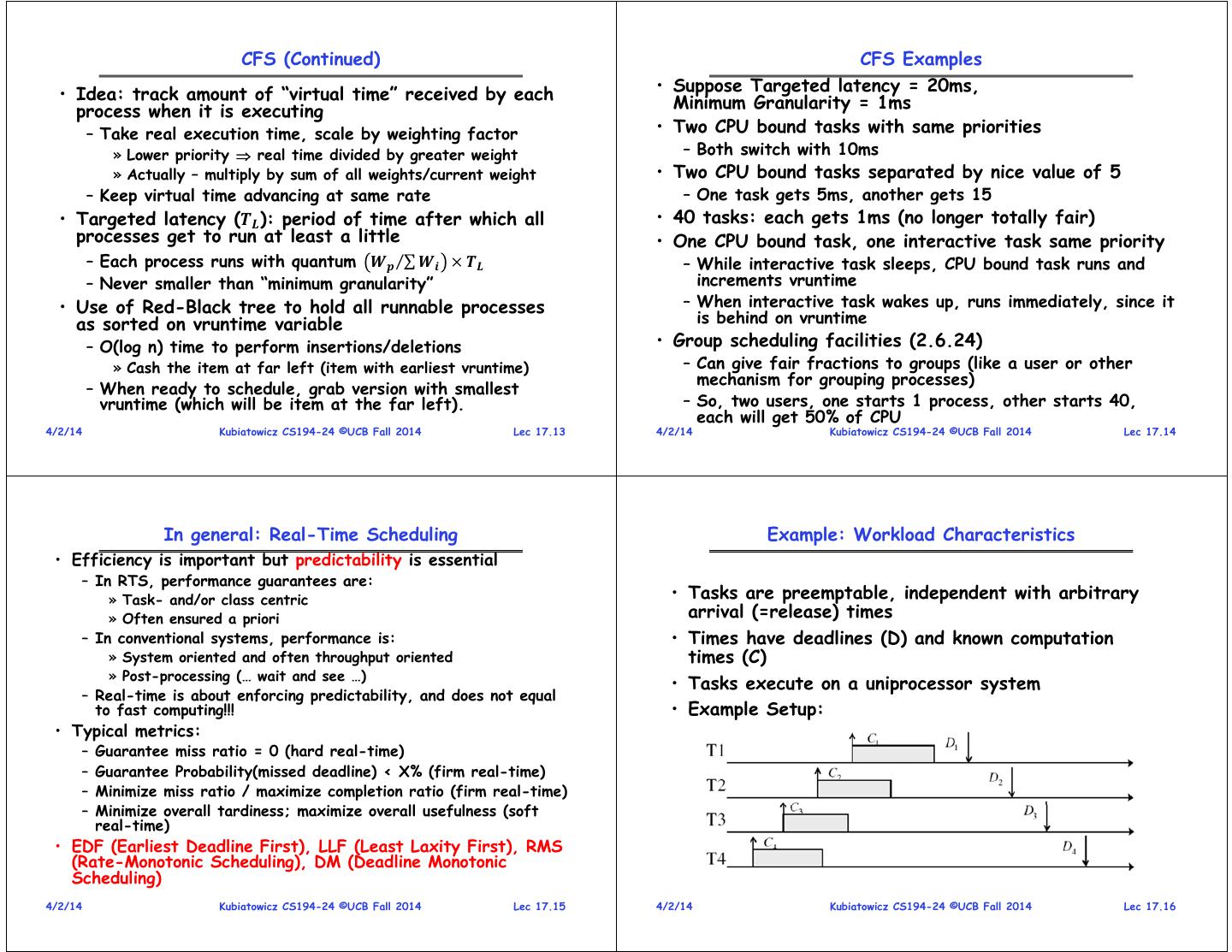

In general: Real-Time Scheduling

- Efficiency is important but predictability is essential
  - In RTS, performance guarantees are:
    - Task- and/or class centric
    - Often ensured a priori
  - In conventional systems, performance is:
    - System oriented and often throughput oriented
    - Post-processing (… wait and see …)
  - Real-time is about enforcing predictability, and is not the same as fast computing!!!
- Typical metrics:
  - Guarantee miss ratio = 0 (hard real-time)
  - Guarantee Probability(missed deadline) < X% (firm real-time)
  - Minimize miss ratio / maximize completion ratio (firm real-time)
  - Minimize overall tardiness; maximize overall usefulness (soft real-time)
- EDF (Earliest Deadline First), LLF (Least Laxity First), RMS (Rate-Monotonic Scheduling), DM (Deadline Monotonic Scheduling)

Example: Workload Characteristics

- Tasks are preemptable, independent, with arbitrary arrival (= release) times
- Tasks have deadlines (D) and known computation times (C)
- Tasks execute on a uniprocessor system
- Example Setup: (figure)

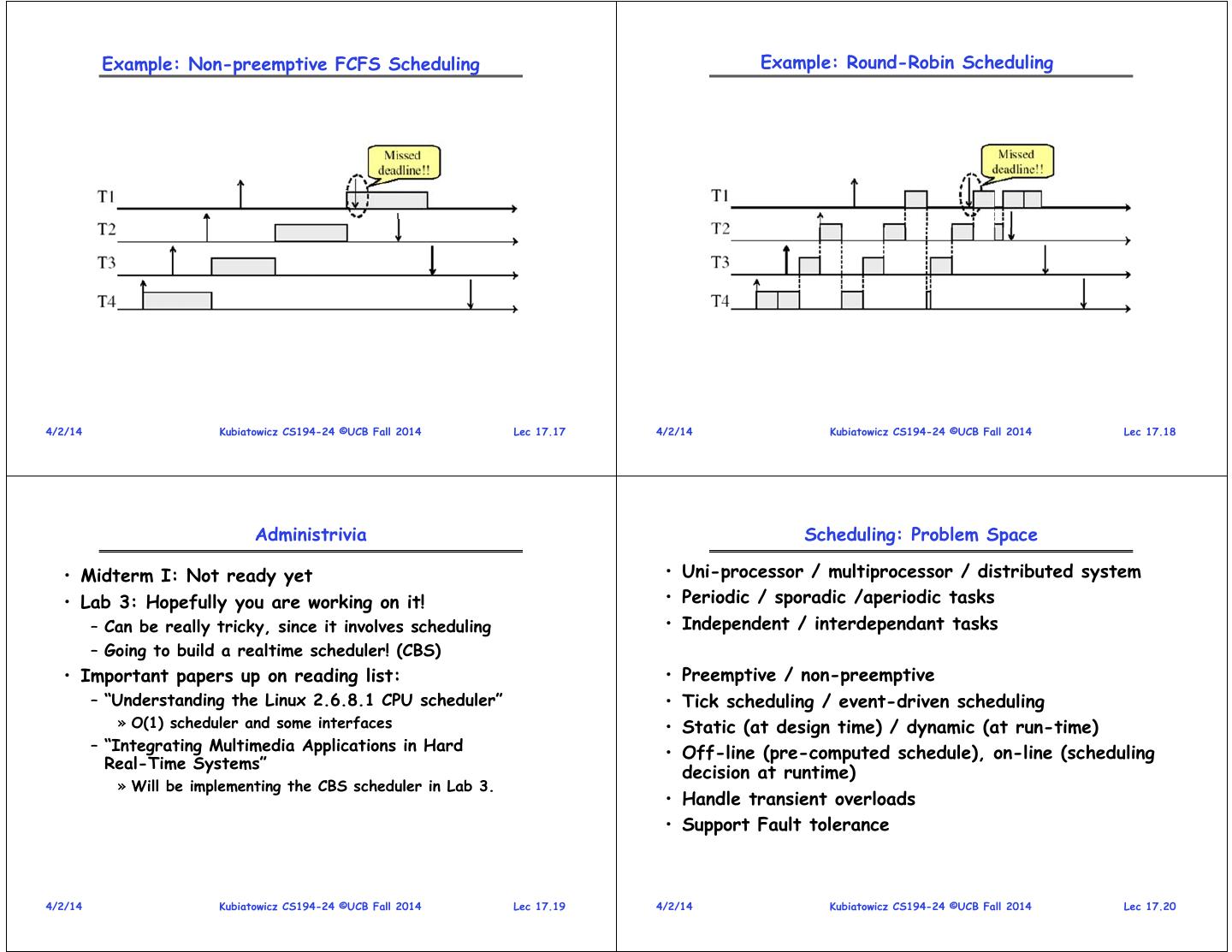

Example: Non-preemptive FCFS Scheduling

(Figure only.)

Example: Round-Robin Scheduling

(Figure only.)

Administrivia

- Midterm I: Not ready yet
- Lab 3: Hopefully you are working on it!
  - Can be really tricky, since it involves scheduling
  - Going to build a realtime scheduler! (CBS)
- Important papers up on reading list:
  - “Understanding the Linux 2.6.8.1 CPU scheduler”
    - O(1) scheduler and some interfaces
  - “Integrating Multimedia Applications in Hard Real-Time Systems”
    - Will be implementing the CBS scheduler in Lab 3.

Scheduling: Problem Space

- Uni-processor / multiprocessor / distributed system
- Periodic / sporadic / aperiodic tasks
- Independent / interdependent tasks
- Preemptive / non-preemptive
- Tick scheduling / event-driven scheduling
- Static (at design time) / dynamic (at run-time)
- Off-line (pre-computed schedule) / on-line (scheduling decision at runtime)
- Handle transient overloads
- Support fault tolerance

Task Assignment and Scheduling

- Cyclic executive scheduling (later)
- Cooperative scheduling
  - Scheduler relies on the current process to give up the CPU before it can start the execution of another process
- A static priority-driven scheduler can preempt the current process to start a new process. Priorities are set pre-execution
  - E.g., Rate-monotonic scheduling (RMS), Deadline Monotonic scheduling (DM)
- A dynamic priority-driven scheduler can assign, and possibly also redefine, process priorities at run-time.
  - E.g., Earliest Deadline First (EDF), Least Laxity First (LLF)

Simple Process Model

- Fixed set of processes (tasks)
- Processes are periodic, with known periods
- Processes are independent of each other
- System overheads, context switches etc., are ignored (zero cost)
- Processes have a deadline equal to their period
  - i.e., each process must complete before its next release
- Processes have fixed worst-case execution time (WCET)

Performance Metrics

- Completion ratio / miss ratio
- Maximize total usefulness value (weighted sum)
- Maximize value of a task
- Minimize lateness
- Minimize error (imprecise tasks)
- Feasibility (all tasks meet their deadlines)

Scheduling Approaches (Hard RTS)

- Off-line scheduling / analysis (static analysis + static scheduling)
  - All tasks, times and priorities given a priori (before system startup)
  - Time-driven; schedule computed and hardcoded (before system startup)
  - E.g., Cyclic Executives
  - May be combined with static or dynamic scheduling approaches
- Fixed priority scheduling (static analysis + dynamic scheduling)
  - All tasks, times and priorities given a priori (before system startup)
  - Priority-driven, dynamic(!) scheduling
    - The schedule is constructed by the OS scheduler at run time
  - For hard / safety critical systems
  - E.g., RMA/RMS (Rate Monotonic Analysis / Rate Monotonic Scheduling)
- Dynamic priority scheduling
  - Task times may or may not be known
  - Assigns priorities based on the current state of the system
  - For hard / best effort systems
  - E.g., Least Completion Time (LCT), Earliest Deadline First (EDF), Least Slack Time (LST)

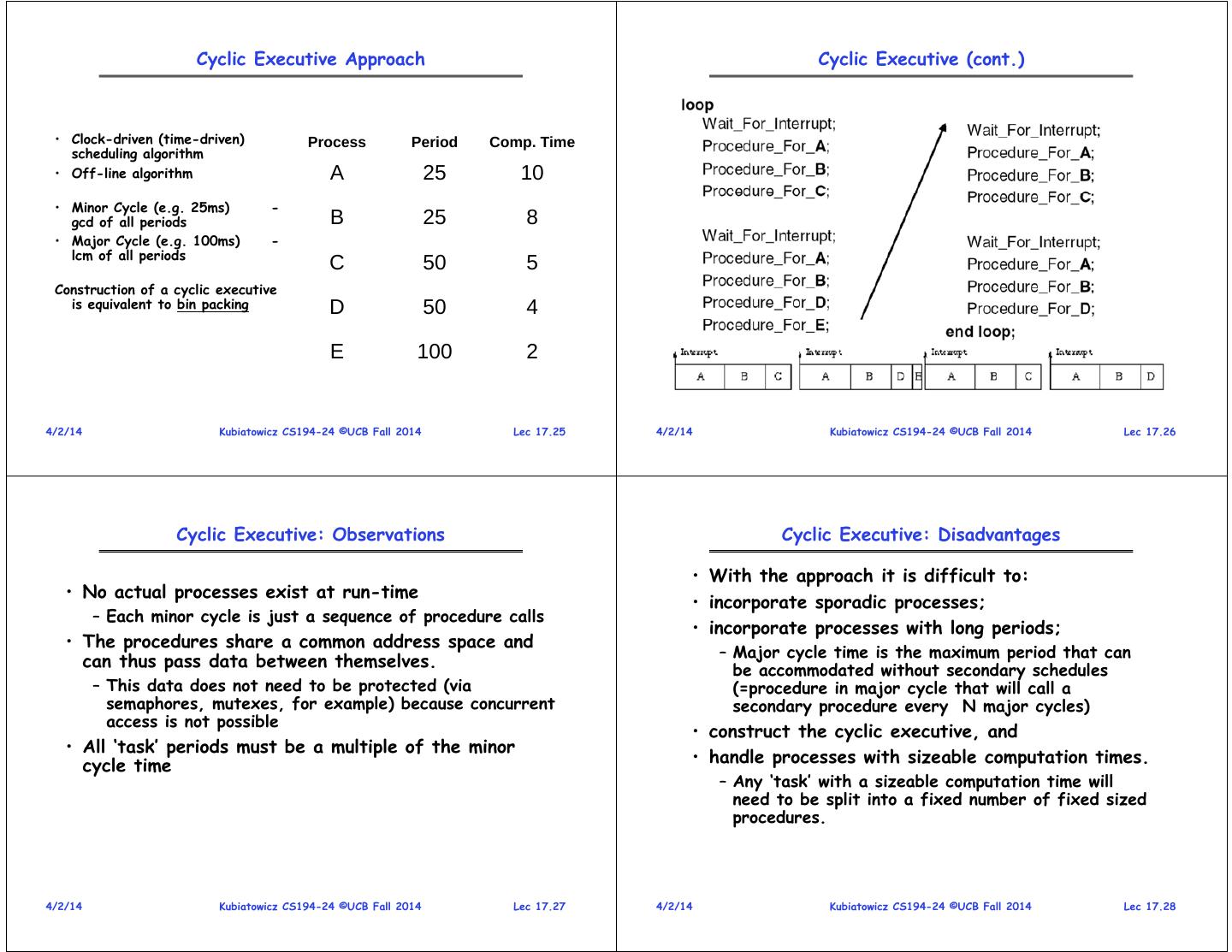

Cyclic Executive Approach

- Clock-driven (time-driven) scheduling algorithm
- Off-line algorithm
- Minor Cycle (e.g. 25ms): gcd of all periods
- Major Cycle (e.g. 100ms): lcm of all periods

Construction of a cyclic executive is equivalent to bin packing.

| Process | Period | Comp. Time |
|---------|--------|------------|
| A       | 25     | 10         |
| B       | 25     | 8          |
| C       | 50     | 5          |
| D       | 50     | 4          |
| E       | 100    | 2          |

Cyclic Executive (cont.)

(Figure only.)

Cyclic Executive: Observations

- No actual processes exist at run-time
  - Each minor cycle is just a sequence of procedure calls
- The procedures share a common address space and can thus pass data between themselves.
  - This data does not need to be protected (via semaphores or mutexes, for example) because concurrent access is not possible
- All ‘task’ periods must be a multiple of the minor cycle time

Cyclic Executive: Disadvantages

- With the approach it is difficult to:
  - incorporate sporadic processes;
  - incorporate processes with long periods;
    - Major cycle time is the maximum period that can be accommodated without secondary schedules (= procedure in major cycle that will call a secondary procedure every N major cycles)
  - construct the cyclic executive, and
  - handle processes with sizeable computation times.
    - Any ‘task’ with a sizeable computation time will need to be split into a fixed number of fixed sized procedures.
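As a rough illustration of the construction step, the sketch below computes the minor and major cycles for the A to E task table and packs the procedure calls into minor cycles. The greedy least-loaded placement is an assumption chosen for brevity; it is a heuristic, not an optimal bin-packing algorithm, and `build_cyclic_executive` is a hypothetical name.

```python
from functools import reduce
from math import gcd


def build_cyclic_executive(tasks):
    """tasks maps name -> (period, computation_time), all in ms.

    Returns (minor_cycle, major_cycle, frames), where frames[i] lists the
    procedures called in minor cycle i.
    """
    periods = [period for period, _ in tasks.values()]
    minor = reduce(gcd, periods)                              # gcd of periods
    major = reduce(lambda a, b: a * b // gcd(a, b), periods)  # lcm of periods
    n_frames = major // minor
    frames = [[] for _ in range(n_frames)]
    load = [0] * n_frames

    for name, (period, cost) in tasks.items():
        span = period // minor  # one instance per `span` consecutive frames
        for start in range(0, n_frames, span):
            # Greedy heuristic: use the least-loaded frame in the window.
            slot = min(range(start, start + span), key=lambda f: load[f])
            if load[slot] + cost > minor:
                raise ValueError(f"no room for {name} in frames "
                                 f"{start}..{start + span - 1}")
            frames[slot].append(name)
            load[slot] += cost
    return minor, major, frames


if __name__ == "__main__":
    table = {"A": (25, 10), "B": (25, 8), "C": (50, 5),
             "D": (50, 4), "E": (100, 2)}
    print(build_cyclic_executive(table))
```

For the table above this yields a 25 ms minor cycle, a 100 ms major cycle, and four frames with loads of 23, 24, 23 and 22 ms, so every frame fits within the minor cycle.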

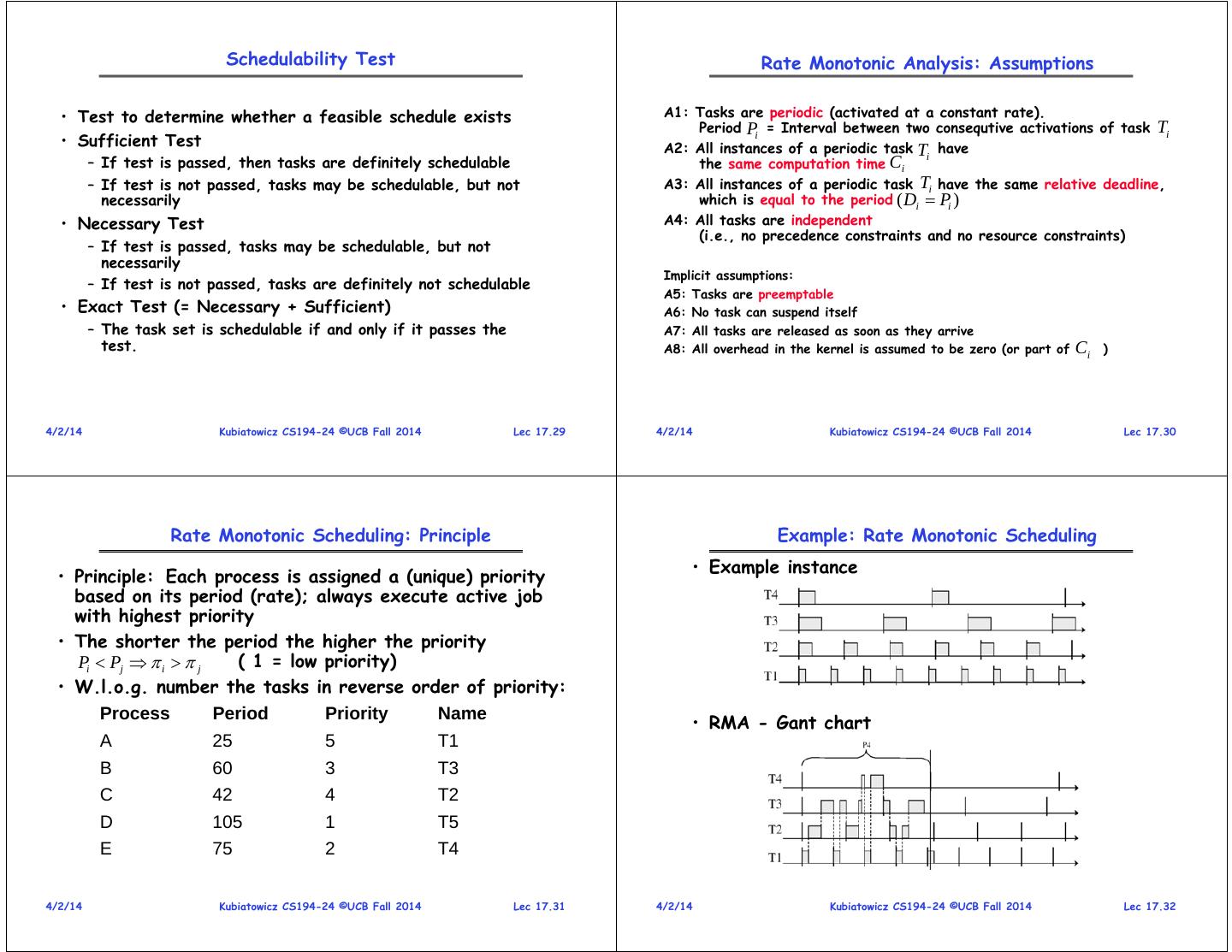

Schedulability Test

- Test to determine whether a feasible schedule exists
- Sufficient Test
  - If test is passed, then tasks are definitely schedulable
  - If test is not passed, tasks may be schedulable, but not necessarily
- Necessary Test
  - If test is passed, tasks may be schedulable, but not necessarily
  - If test is not passed, tasks are definitely not schedulable
- Exact Test (= Necessary + Sufficient)
  - The task set is schedulable if and only if it passes the test.

Rate Monotonic Analysis: Assumptions

- A1: Tasks are periodic (activated at a constant rate). Period $P_i$ = interval between two consecutive activations of task $T_i$
- A2: All instances of a periodic task $T_i$ have the same computation time $C_i$
- A3: All instances of a periodic task $T_i$ have the same relative deadline, which is equal to the period ($D_i = P_i$)
- A4: All tasks are independent (i.e., no precedence constraints and no resource constraints)

Implicit assumptions:

- A5: Tasks are preemptable
- A6: No task can suspend itself
- A7: All tasks are released as soon as they arrive
- A8: All overhead in the kernel is assumed to be zero (or part of $C_i$)

Rate Monotonic Scheduling: Principle

- Principle: Each process is assigned a (unique) priority based on its period (rate); always execute active job with highest priority
- The shorter the period the higher the priority: $P_i < P_j \Rightarrow \pi_i > \pi_j$ (1 = low priority)
- W.l.o.g. number the tasks in reverse order of priority:

| Process | Period | Priority | Name |
|---------|--------|----------|------|
| A       | 25     | 5        | T1   |
| B       | 60     | 3        | T3   |
| C       | 42     | 4        | T2   |
| D       | 105    | 1        | T5   |
| E       | 75     | 2        | T4   |

Example: Rate Monotonic Scheduling

- Example instance
- RMA: Gantt chart (figure)
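The rate-monotonic assignment rule is just a sort by period. A minimal sketch, assuming the convention from the table (priority 1 is lowest, and tasks are named T1, T2, … from highest to lowest priority); `rate_monotonic_priorities` is a hypothetical helper name.

```python
def rate_monotonic_priorities(periods):
    """periods maps process name -> period.

    Returns name -> (priority, task_label): the shortest period gets the
    highest priority number, and labels T1, T2, ... run from highest to
    lowest priority.
    """
    ordered = sorted(periods, key=periods.get)  # shortest period first
    n = len(ordered)
    return {name: (n - rank, f"T{rank + 1}")
            for rank, name in enumerate(ordered)}


if __name__ == "__main__":
    print(rate_monotonic_priorities(
        {"A": 25, "B": 60, "C": 42, "D": 105, "E": 75}))
```

Applied to periods 25, 60, 42, 105 and 75, this reproduces the Priority and Name columns of the table.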

Example: Rate Monotonic Scheduling

Task set: $T_1 = (4,1)$, $T_2 = (5,2)$, $T_3 = (7,2)$

(Figure: rate-monotonic timeline for $T_1$, $T_2$, $T_3$ over time 0 to 15, annotated with the response time of job $J_{3,1}$ and “Deadline Miss!”.)

Definition: Utilization

- $T_i = (P_i, C_i)$, where $P_i$ = period and $C_i$ = processing time
- $U_i = \dfrac{C_i}{P_i}$ is the utilization of task $T_i$
- Example: $U_2 = \dfrac{2}{5} = 0.4$

(Figure: timeline for $T_1 = (4,1)$, $T_2 = (5,2)$, $T_3 = (7,2)$ over time 0 to 15.)

RMS: Schedulability Test

Theorem (Utilization-based Schedulability Test): A periodic task set $T_1, T_2, \ldots, T_n$ with $D_i = P_i$, $1 \le i \le n$, is schedulable by the rate monotonic scheduling algorithm if:

$$\sum_{i=1}^{n} \frac{C_i}{P_i} \le n\left(2^{1/n} - 1\right), \quad n = 1, 2, \ldots$$

$$n\left(2^{1/n} - 1\right) \to \ln 2 \quad \text{for } n \to \infty$$

This schedulability test is “sufficient”:

- For harmonic periods ($T_j$ evenly divides $T_i$), the utilization bound is 100%

RMS Example

- Our set of tasks from the previous example: $T_1 = (4,1)$, $T_2 = (5,2)$, $T_3 = (7,2)$

$$\frac{C_1}{P_1} = \frac{1}{4} = 0.25, \quad \frac{C_2}{P_2} = \frac{2}{5} = 0.4, \quad \frac{C_3}{P_3} = \frac{2}{7} = 0.286$$

- The schedulability test requires:

$$\sum_{i=1}^{n} \frac{C_i}{P_i} \le n\left(2^{1/n} - 1\right), \quad n = 1, 2, \ldots$$

- Hence, we get:

$$\sum_{i=1}^{3} \frac{C_i}{P_i} = 0.936 > 3\left(2^{1/3} - 1\right) = 0.780$$

Does not satisfy schedulability condition!
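The utilization-based test is easy to evaluate mechanically. The sketch below applies it to the task set $T_1 = (4,1)$, $T_2 = (5,2)$, $T_3 = (7,2)$; the function name `rm_utilization_test` and the `(period, computation_time)` tuple layout are choices made here for illustration.

```python
def rm_utilization_test(tasks):
    """tasks is a list of (period, computation_time) pairs.

    Returns (utilization, bound, passes). Because the test is only
    sufficient, passes == False does not by itself prove that the
    task set is unschedulable under rate-monotonic priorities.
    """
    n = len(tasks)
    utilization = sum(cost / period for period, cost in tasks)
    bound = n * (2 ** (1 / n) - 1)
    return utilization, bound, utilization <= bound


if __name__ == "__main__":
    u, bound, ok = rm_utilization_test([(4, 1), (5, 2), (7, 2)])
    print(f"U = {u:.3f}, bound = {bound:.3f}, passes = {ok}")
```

For this task set the utilization is about 0.936 against a bound of about 0.780, so the sufficient condition fails.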

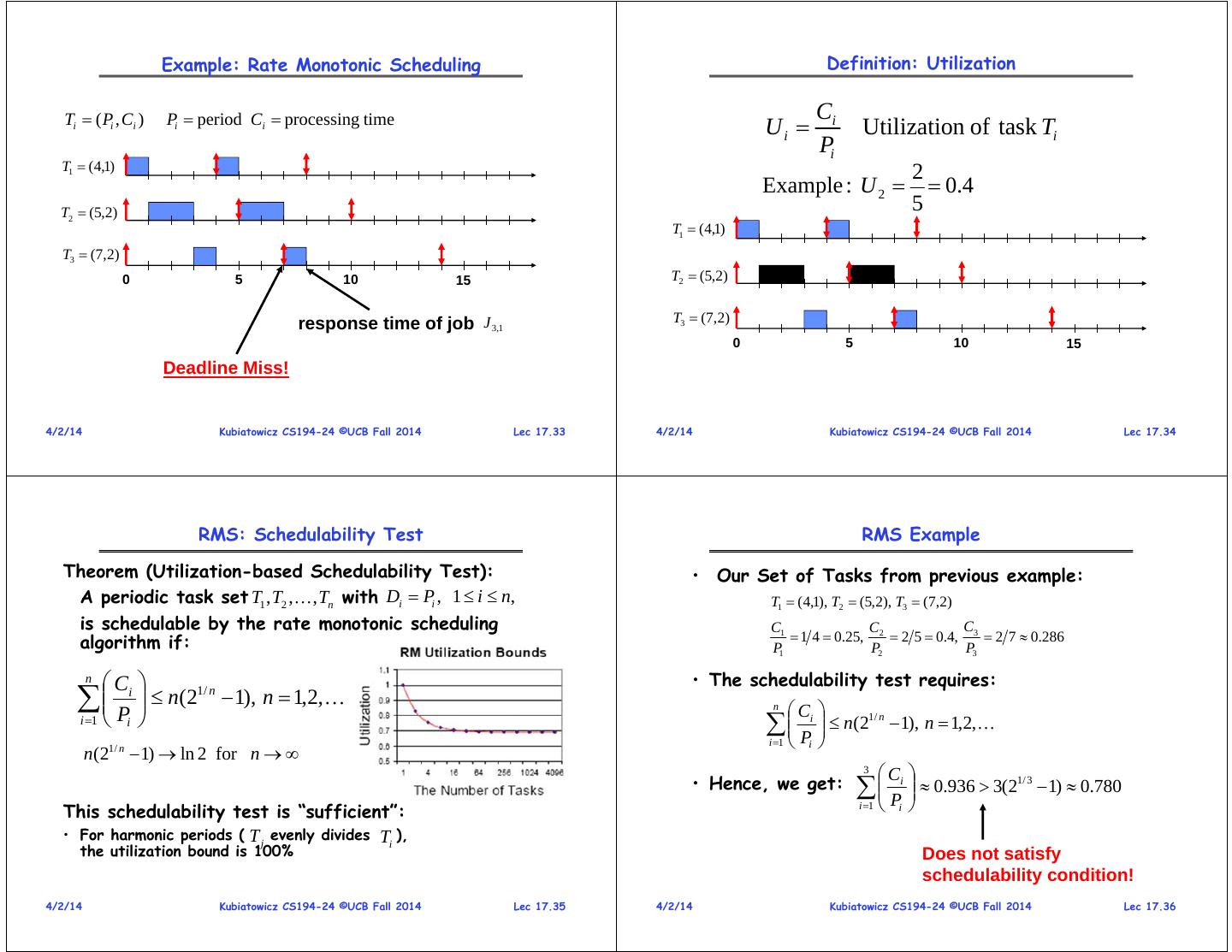

EDF: Assumptions

- A1: Tasks are periodic or aperiodic. Period $P_i$ = interval between two consecutive activations of task $T_i$
- A2: All instances of periodic task $T_i$ have the same computation time $C_i$
- A3: All instances of periodic task $T_i$ have the same relative deadline, which is equal to the period ($D_i = P_i$)
- A4: All tasks are independent (i.e., no precedence constraints and no resource constraints)

Implicit assumptions:

- A5: Tasks are preemptable
- A6: No task can suspend itself
- A7: All tasks are released as soon as they arrive
- A8: All overhead in the kernel is assumed to be zero (or part of $C_i$)

EDF Scheduling: Principle

- Preemptive priority-based dynamic scheduling
- Each task is assigned a (current) priority based on how close the absolute deadline is.
- The scheduler always schedules the active task with the closest absolute deadline.

(Figure: EDF timeline for $T_1 = (4,1)$, $T_2 = (5,2)$, $T_3 = (7,2)$ over time 0 to 15.)

EDF: Schedulability Test

Theorem (Utilization-based Schedulability Test): A task set $T_1, T_2, \ldots, T_n$ with $D_i = P_i$ is schedulable by the earliest deadline first (EDF) scheduling algorithm if

$$\sum_{i=1}^{n} \frac{C_i}{D_i} \le 1$$

Exact schedulability test (necessary + sufficient)

Proof: [Liu and Layland, 1973]

EDF Optimality

EDF Properties

- EDF is optimal with respect to feasibility (i.e., schedulability)
- EDF is optimal with respect to minimizing the maximum lateness
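To see the difference between the two policies on the task set $T_1 = (4,1)$, $T_2 = (5,2)$, $T_3 = (7,2)$, a small unit-time simulation is enough. The sketch below is a simplified model, not a real scheduler: time advances in whole units, all tasks release at time 0, a job that misses its deadline is recorded and dropped, and the function name `simulate` and the 140-unit horizon (the lcm of the three periods) are choices made here.

```python
def simulate(tasks, horizon, policy):
    """Unit-time preemptive simulation of periodic tasks with D_i = P_i.

    tasks is a list of (period, computation_time); policy is "RM" or "EDF".
    Returns a list of (task_index, deadline) for every missed deadline.
    """
    jobs = []    # unfinished jobs only
    misses = []
    for t in range(horizon + 1):
        # Any unfinished job whose deadline has arrived has missed it.
        for job in jobs:
            if job["deadline"] <= t:
                misses.append((job["task"], job["deadline"]))
        jobs = [job for job in jobs if job["deadline"] > t]
        if t == horizon:
            break
        # Release a new job of every task whose period starts now.
        for i, (period, cost) in enumerate(tasks):
            if t % period == 0:
                jobs.append({"task": i, "deadline": t + period, "left": cost})
        if jobs:
            if policy == "RM":   # static priority: shortest period first
                job = min(jobs, key=lambda j: (tasks[j["task"]][0], j["task"]))
            else:                # EDF: closest absolute deadline first
                job = min(jobs, key=lambda j: (j["deadline"], j["task"]))
            job["left"] -= 1
            if job["left"] == 0:
                jobs.remove(job)
    return misses


if __name__ == "__main__":
    task_set = [(4, 1), (5, 2), (7, 2)]
    print("RM first miss:", simulate(task_set, 140, "RM")[0])
    print("EDF misses:", simulate(task_set, 140, "EDF"))
```

Under rate-monotonic priorities the first job of $T_3$ still has one unit of work left at time 7, which is the deadline miss in the earlier example; under EDF the same task set, with utilization of about 0.936, runs the full hyperperiod without a miss.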

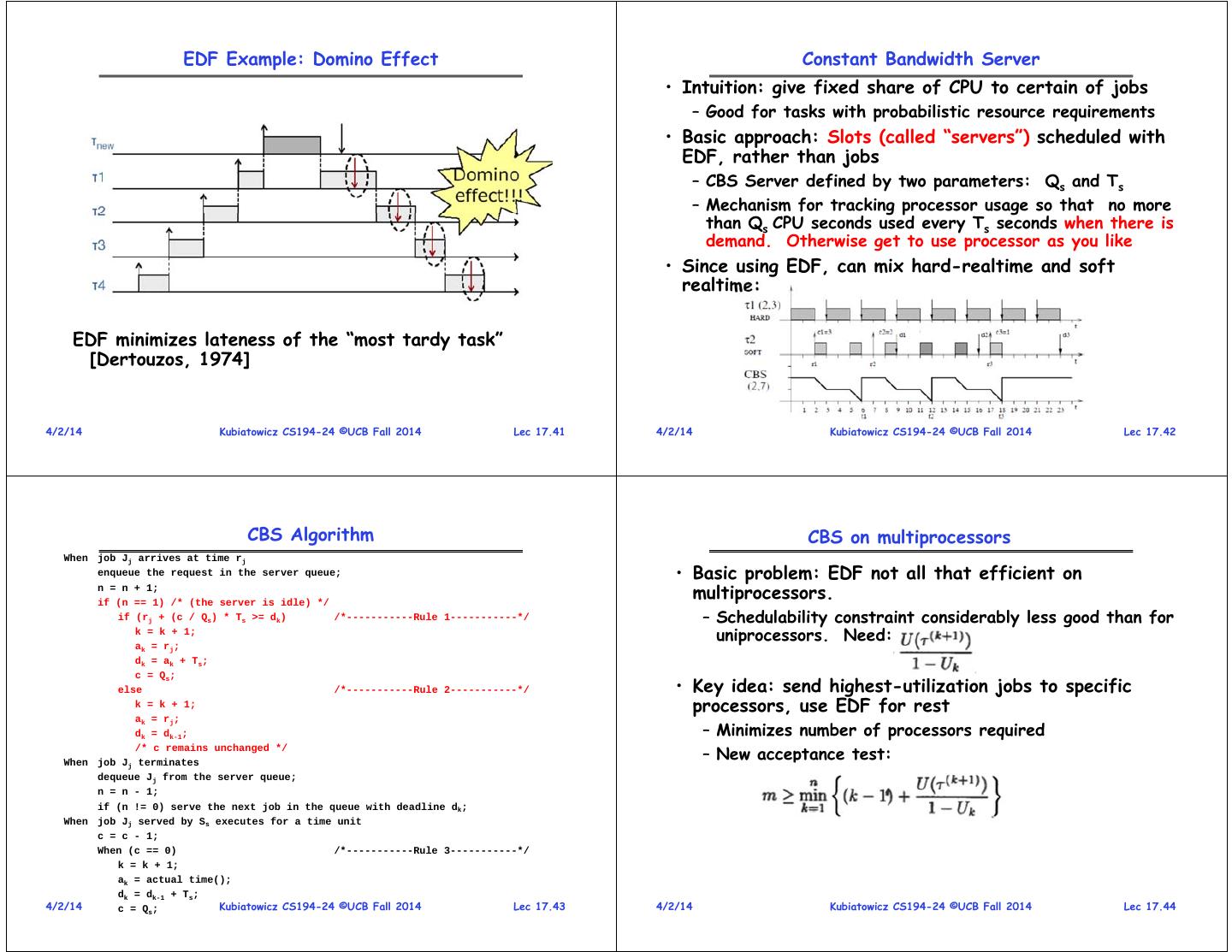

EDF Example: Domino Effect

(Figure only.)

EDF minimizes lateness of the “most tardy task” [Dertouzos, 1974]

Constant Bandwidth Server

- Intuition: give a fixed share of CPU to certain jobs
  - Good for tasks with probabilistic resource requirements
- Basic approach: Slots (called “servers”) scheduled with EDF, rather than jobs
  - CBS Server defined by two parameters: $Q_s$ and $T_s$
  - Mechanism for tracking processor usage so that no more than $Q_s$ CPU seconds are used every $T_s$ seconds when there is demand. Otherwise get to use processor as you like
- Since using EDF, can mix hard-realtime and soft realtime

CBS Algorithm

    When job J_j arrives at time r_j
        enqueue the request in the server queue;
        n = n + 1;
        if (n == 1) /* (the server is idle) */
            if (r_j + (c / Q_s) * T_s >= d_k)
                /*-----------Rule 1-----------*/
                k = k + 1;
                a_k = r_j;
                d_k = a_k + T_s;
                c = Q_s;
            else
                /*-----------Rule 2-----------*/
                k = k + 1;
                a_k = r_j;
                d_k = d_{k-1};
                /* c remains unchanged */

    When job J_j terminates
        dequeue J_j from the server queue;
        n = n - 1;
        if (n != 0) serve the next job in the queue with deadline d_k;

    When job J_j served by S_s executes for a time unit
        c = c - 1;

    When (c == 0)
        /*-----------Rule 3-----------*/
        k = k + 1;
        a_k = actual_time();
        d_k = d_{k-1} + T_s;
        c = Q_s;

CBS on multiprocessors

- Basic problem: EDF not all that efficient on multiprocessors.
  - Schedulability constraint considerably less good than for uniprocessors. Need: (equation not recoverable)
- Key idea: send highest-utilization jobs to specific processors, use EDF for rest
  - Minimizes number of processors required
  - New acceptance test: (equation not recoverable)
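The three CBS rules can be traced with a small bookkeeping class. This sketch covers only the budget and deadline updates of a single server; it does not include the EDF scheduler that chooses among servers. The class name `CBSServer`, its method names, and the initial state (budget 0, deadline 0) are assumptions made for illustration.

```python
from collections import deque


class CBSServer:
    """Budget/deadline bookkeeping for one Constant Bandwidth Server."""

    def __init__(self, budget, period):
        self.Qs = budget       # maximum budget per server period
        self.Ts = period       # server period
        self.c = 0             # current budget (assumed initial value)
        self.d = 0             # current server deadline (assumed initial value)
        self.queue = deque()   # pending jobs, served FIFO

    def job_arrives(self, job, now):
        self.queue.append(job)
        if len(self.queue) == 1:  # the server was idle
            if now + (self.c / self.Qs) * self.Ts >= self.d:
                # Rule 1: old deadline is unusable; start a fresh period.
                self.d = now + self.Ts
                self.c = self.Qs
            # Rule 2 (else): keep the current deadline and remaining budget.

    def job_terminates(self):
        self.queue.popleft()

    def run_one_unit(self):
        self.c -= 1
        if self.c == 0:
            # Rule 3: budget exhausted; postpone deadline and recharge.
            self.d += self.Ts
            self.c = self.Qs


if __name__ == "__main__":
    s = CBSServer(budget=2, period=5)
    s.job_arrives("J1", now=0)   # Rule 1: d = 5, c = 2
    s.run_one_unit()
    s.run_one_unit()             # Rule 3: d = 10, c = 2
    print(s.d, s.c)
```

Postponing the deadline on budget exhaustion, rather than suspending the server, is what lets an overrunning job keep running at lower EDF priority without taking more than $Q_s / T_s$ of the processor from other servers.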

How Realtime is Vanilla Linux?

- Priority scheduling is an important part of realtime scheduling, so that part is good
  - No schedulability test
  - No dynamic rearrangement of priorities
- Example: RMS
  - Set priorities based on frequencies
  - Works for static set, but might need to rearrange (change) all priorities when new task arrives
- Example: EDF, CBS
  - Continuously changing priorities based on deadlines
  - Would require a *lot* of work with vanilla Linux support (with every change, would need to walk through all processes and alter their priorities)

Summary

- Scheduling: selecting a waiting process from the ready queue and allocating the CPU to it
- Linux O(1) Scheduler: Priority Scheduling with dynamic Priority boost/retraction
  - All operations O(1)
  - Fairly complex heuristics to perform dynamic priority alterations
  - Every task gets at least a little chance to run
- Linux CFS Scheduler: Fair fraction of CPU
  - Only one RB tree, not multiple priority queues
  - Approximates an “ideal” multitasking processor
- Realtime Schedulers: RMS, EDF, CBS
  - All attempting to provide guaranteed behavior by meeting deadlines. Requires analysis of compute time
  - Realtime tasks defined by tuple of compute time and period
  - Schedulability test: is it possible to meet deadlines with proposed set of processes?
- Fair Sharing: How to define a user’s fair share?
  - Especially with more than one resource?