Running Vitess on Kubernetes at Massive Scale: JD.com Case Study

1. Running Vitess on Kubernetes at Massive Scale: JD.com case study

2. About PlanetScale

Founded in early 2018 to help operationalize Vitess

- Jiten Vaidya (CEO, managed teams that operationalized Vitess at YouTube)
- Sugu Sugumaran (CTO, Vitess community leader)

Offerings

- Open Source Vitess Support
- Custom Vitess Development
- Kubernetes Deployment Manager
- Cross-cloud DBaaS

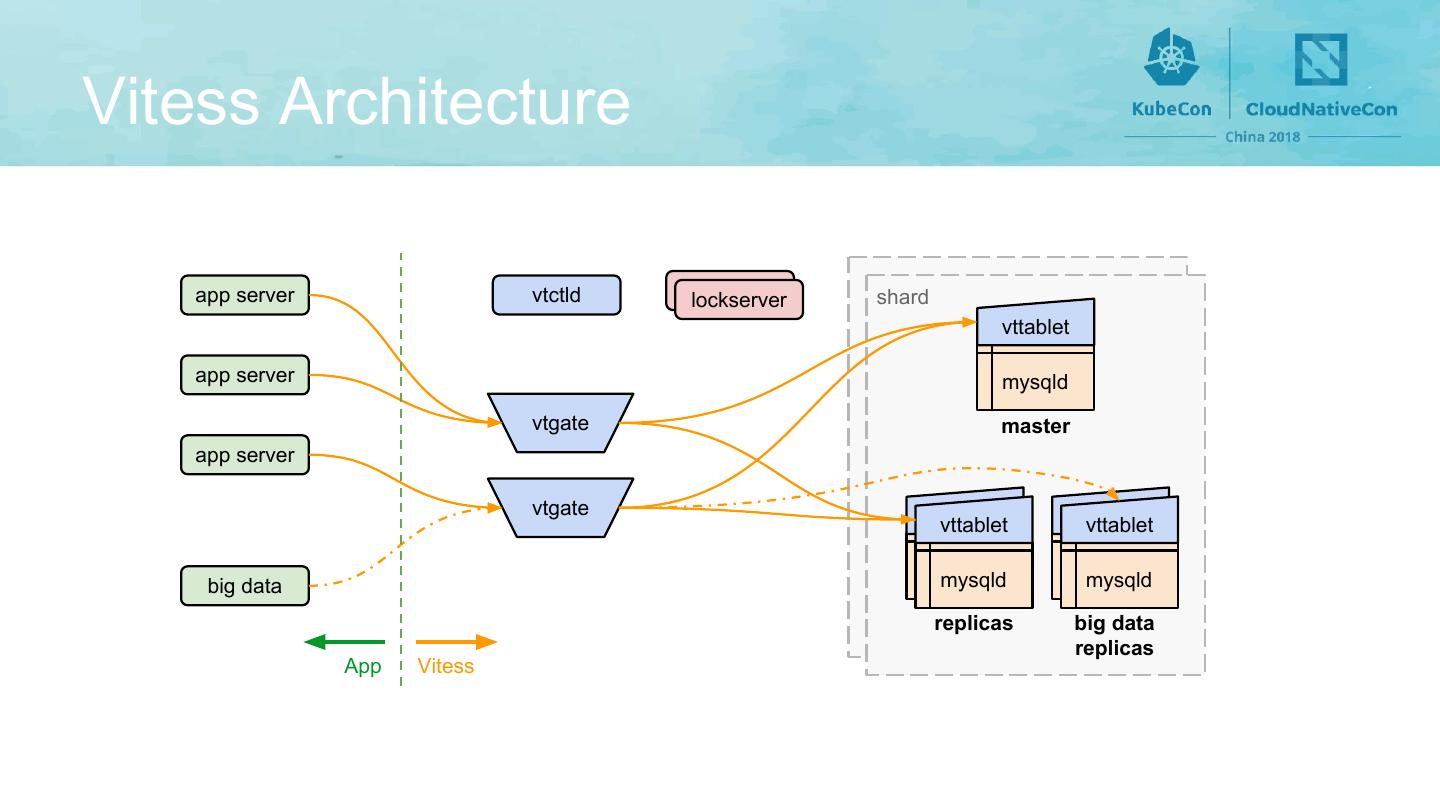

3. Vitess Architecture

(Architecture diagram. Labels: app server, vtgate, vtctld, lockserver, shard, vttablet, mysqld, master, replicas, big data replicas.)

4. Vtgate in Kubernetes

- Stateless proxy
- Accepts connections as a MySQL-compatible server
- Contains GRPC endpoint and Web UI
- Computes target shards
- Sends queries to vttablets for targeted shards
- Receives, collates and serves response to application
- Vtgates can be created as load increases
- Start n vtgates as a Replica Set
- For co-located workloads, start one vtgate per node and expose it with a ClusterIP
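The "computes target shards" step can be illustrated with a minimal sketch. It assumes range-based shard names such as `-80` and `80-`, where each name is a half-open range of hex-encoded keyspace IDs and an empty bound means unbounded. The function names and the MD5-based hash are stand-ins chosen for illustration; they are not the hash functions Vitess vindexes use.

```python
import hashlib
from typing import List


def keyspace_id(sharding_key: str) -> bytes:
    # Stand-in hash (assumption): first 8 bytes of MD5, not a real Vitess vindex.
    return hashlib.md5(sharding_key.encode()).digest()[:8]


def shard_contains(shard: str, kid: bytes) -> bool:
    # "40-80" covers keyspace IDs in [0x40, 0x80); an empty bound is unbounded.
    start_hex, end_hex = shard.split("-")
    start, end = bytes.fromhex(start_hex), bytes.fromhex(end_hex)
    if start and kid < start:
        return False
    if end and kid >= end:
        return False
    return True


def target_shard(shards: List[str], sharding_key: str) -> str:
    # Return the single shard whose range contains the key's keyspace ID.
    kid = keyspace_id(sharding_key)
    for shard in shards:
        if shard_contains(shard, kid):
            return shard
    raise LookupError("shard ranges do not cover the keyspace ID")


if __name__ == "__main__":
    print(target_shard(["-80", "80-"], "user-42"))
```

Because the ranges partition the keyspace ID space, a single-key query maps to exactly one shard, while a query without a sharding key has to be sent to every shard and the results collated.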

5. Vtctld in Kubernetes

- Vitess Control Plane
- Serves a Web UI
  - Operational commands
  - Status
  - Topology browser
- Serves an API over GRPC
  - Used by vtctlclient tool
- Supports resharding workflows
- Start one or two vtctld processes per cell
- Start them as a Deployment
- Expose them behind a Service
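A rough sketch of the "Deployment behind a Service" arrangement, written as Python dictionaries that mirror the Kubernetes manifest structure. The image name, label keys, and port numbers are placeholders assumed for illustration, and no vtctld command-line flags are shown.

```python
import json
from typing import Dict, Tuple

# Assumed port numbers for illustration only.
WEB_PORT = 15000
GRPC_PORT = 15999


def vtctld_manifests(cell: str, replicas: int = 2) -> Tuple[Dict, Dict]:
    # Labels tie the Deployment's pods to the Service selector.
    labels = {"app": "vitess", "component": "vtctld", "cell": cell}
    ports = [
        {"name": "web", "containerPort": WEB_PORT},
        {"name": "grpc", "containerPort": GRPC_PORT},
    ]
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": f"vtctld-{cell}", "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": "vtctld",
                            # Placeholder image reference (hypothetical).
                            "image": "example.invalid/vitess/vtctld:placeholder",
                            "ports": ports,
                        }
                    ]
                },
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": f"vtctld-{cell}", "labels": labels},
        "spec": {
            "selector": labels,
            "ports": [
                {"name": "web", "port": WEB_PORT, "targetPort": WEB_PORT},
                {"name": "grpc", "port": GRPC_PORT, "targetPort": GRPC_PORT},
            ],
        },
    }
    return deployment, service


if __name__ == "__main__":
    for manifest in vtctld_manifests("cell1"):
        print(json.dumps(manifest, indent=2))
```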

6. lockserver (etcd) in Kubernetes

- Knits the Vitess cluster together
- Backing store for metadata
  - Service discovery
  - Topology
  - VSchema
- Not used for query serving
- Needed for any change in topology
  - Add a keyspace
  - Add a shard to keyspace
  - Add a tablet to a shard
  - Change master for a shard
- One global cluster
- One cluster per cell (optional)
- Use etcd-operator to spin up a cluster
- Expose etcd cluster behind a Service

7. Tablet (vttablet + mysqld) in Kubernetes

- Vitess Tablet is a combination of a mysqld instance and a corresponding vttablet process
- Each tablet requires a unique id in Vitess cluster
- Tablets can be of type: master, replica, rdonly
- Tablets of type “replica” can be promoted to master and should have low replica lag
- 2 containers in the same pod
- Communicate over Unix socket
- Unix socket created in Shared Volume
- Local Persistent Volume for data
- One master, 2 replicas with semi-sync replication enabled for high availability
- Replicas should not be co-located with other members of shard (Anti-Affinity)
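The pod layout described above could look roughly like the following, again as a Python dictionary mirroring a Kubernetes Pod manifest. This is a sketch under assumptions: image names, mount paths, label keys, and the claim name are hypothetical. Kubernetes label values must start with an alphanumeric character, so the shard label is assumed to be a sanitized form such as `x-80` rather than the raw shard name `-80`.

```python
from typing import Dict


def tablet_pod(keyspace: str, shard_label: str, uid: int) -> Dict:
    # Labels shared by every tablet of the same shard; used by the anti-affinity rule.
    shard_labels = {"keyspace": keyspace, "shard": shard_label}
    # Shared volume in which mysqld creates its Unix socket (path is an assumption).
    socket_mount = {"name": "mysql-socket", "mountPath": "/vt/socket"}
    data_mount = {"name": "data", "mountPath": "/vt/data"}
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {
            "name": f"vttablet-{uid}",
            "labels": {**shard_labels, "tablet-uid": str(uid)},
        },
        "spec": {
            "affinity": {
                "podAntiAffinity": {
                    # Never schedule two tablets of one shard on the same node.
                    "requiredDuringSchedulingIgnoredDuringExecution": [
                        {
                            "labelSelector": {"matchLabels": shard_labels},
                            "topologyKey": "kubernetes.io/hostname",
                        }
                    ]
                }
            },
            "containers": [
                {
                    "name": "vttablet",
                    "image": "example.invalid/vitess/vttablet:placeholder",
                    "volumeMounts": [socket_mount],
                },
                {
                    "name": "mysqld",
                    "image": "example.invalid/mysql:placeholder",
                    "volumeMounts": [socket_mount, data_mount],
                },
            ],
            "volumes": [
                {"name": "mysql-socket", "emptyDir": {}},
                # Claim assumed to be bound to a local PersistentVolume.
                {"name": "data", "persistentVolumeClaim": {"claimName": f"data-vttablet-{uid}"}},
            ],
        },
    }
```

A required (rather than preferred) anti-affinity rule is used here so that losing one node can take out at most one member of a shard; a preferred rule would be the softer alternative when nodes are scarce.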

8. Authentication/Secrets management

- What secrets are needed?
  - Application -> Vtgate authentication
  - Vttablet -> mysqld authentication for the various roles that Vitess supports (app, dba, replication, filtered replication, etc.)
  - TLS certs and keys for GRPC traffic over TLS (optional)
  - TLS certs and keys for binary logs over TLS (optional)
  - TLS certs and keys for client authorization and authentication over TLS (optional)
- Use Kubernetes Secrets and mount them in pods
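As an illustration of "use Kubernetes Secrets and mount them in pods", the sketch below builds a Secret manifest and attaches it to a pod spec as a read-only volume. The secret name, file names, and mount path are hypothetical, and the credential value is a dummy.

```python
import base64
from typing import Dict


def secret_manifest(name: str, values: Dict[str, str]) -> Dict:
    # Kubernetes stores Secret data as base64-encoded strings.
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": {k: base64.b64encode(v.encode()).decode() for k, v in values.items()},
    }


def mount_secret(pod_spec: Dict, secret_name: str, mount_path: str) -> Dict:
    # Add the Secret as a volume and mount it read-only into every container.
    pod_spec.setdefault("volumes", []).append(
        {"name": secret_name, "secret": {"secretName": secret_name}}
    )
    for container in pod_spec.get("containers", []):
        container.setdefault("volumeMounts", []).append(
            {"name": secret_name, "mountPath": mount_path, "readOnly": True}
        )
    return pod_spec


if __name__ == "__main__":
    # Dummy credentials for illustration only.
    secret = secret_manifest("mysql-creds", {"dba-password": "not-a-real-password"})
    spec = mount_secret({"containers": [{"name": "vttablet"}]}, "mysql-creds", "/vt/secrets")
    print(secret["data"], spec["volumes"])
```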

9. High Availability

- Planned reparent
  - Coordinated via lockserver
  - Existing transactions are allowed to complete
  - New transactions are buffered by vtgate
  - New master is made writable
  - Replicas are made slaves of the new master
  - Query serving is resumed
- Unplanned reparent
  - Orchestrator
  - TabletExternallyReparented
- Resharding
  - No interruption to query traffic during resharding
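The planned-reparent sequence above can be walked through with a toy simulation. It is a sketch of the ordering of steps only: lockserver coordination is omitted, the class and function names are invented for illustration, and demoting the old master to a replica is an assumption not stated on the slide.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ShardState:
    master: str
    replicas: List[str]
    in_flight: List[str] = field(default_factory=list)  # transactions already open
    buffered: List[str] = field(default_factory=list)   # held back during reparent
    served: List[str] = field(default_factory=list)     # "txn@tablet" records
    reparenting: bool = False

    def begin(self, txn: str) -> None:
        # New transactions are buffered while a reparent is in progress.
        if self.reparenting:
            self.buffered.append(txn)
        else:
            self.served.append(f"{txn}@{self.master}")


def planned_reparent(shard: ShardState, new_master: str, arriving: List[str]) -> None:
    if new_master not in shard.replicas:
        raise ValueError("new master must be an existing replica")
    shard.reparenting = True
    # 1. Existing transactions are allowed to complete on the old master.
    for txn in shard.in_flight:
        shard.served.append(f"{txn}@{shard.master}")
    shard.in_flight.clear()
    # 2. Transactions arriving meanwhile are buffered.
    for txn in arriving:
        shard.begin(txn)
    # 3. The new master is made writable; the others replicate from it.
    old_master = shard.master
    shard.master = new_master
    shard.replicas = [r for r in shard.replicas if r != new_master] + [old_master]
    # 4. Query serving resumes and the buffer drains to the new master.
    shard.reparenting = False
    pending, shard.buffered = shard.buffered, []
    for txn in pending:
        shard.begin(txn)
```

For example, with `t1` open on `tablet-100` and `t2` arriving during the switch to `tablet-101`, `t1` finishes on the old master and `t2` is served by the new one once it is writable.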

10. Supporting multiple cells

- A Vitess cell is the equivalent of a failure domain (e.g. AWS availability zones or regions)
- Not necessarily the same as a Kubernetes failure domain
- Choice to use a global lockserver cluster OR one lockserver cluster per cell
- Global lockserver cluster is typically outside of Kubernetes
- Expose lockserver behind a service definition
- If using etcd, use etcd-operator to start a per-cell cluster

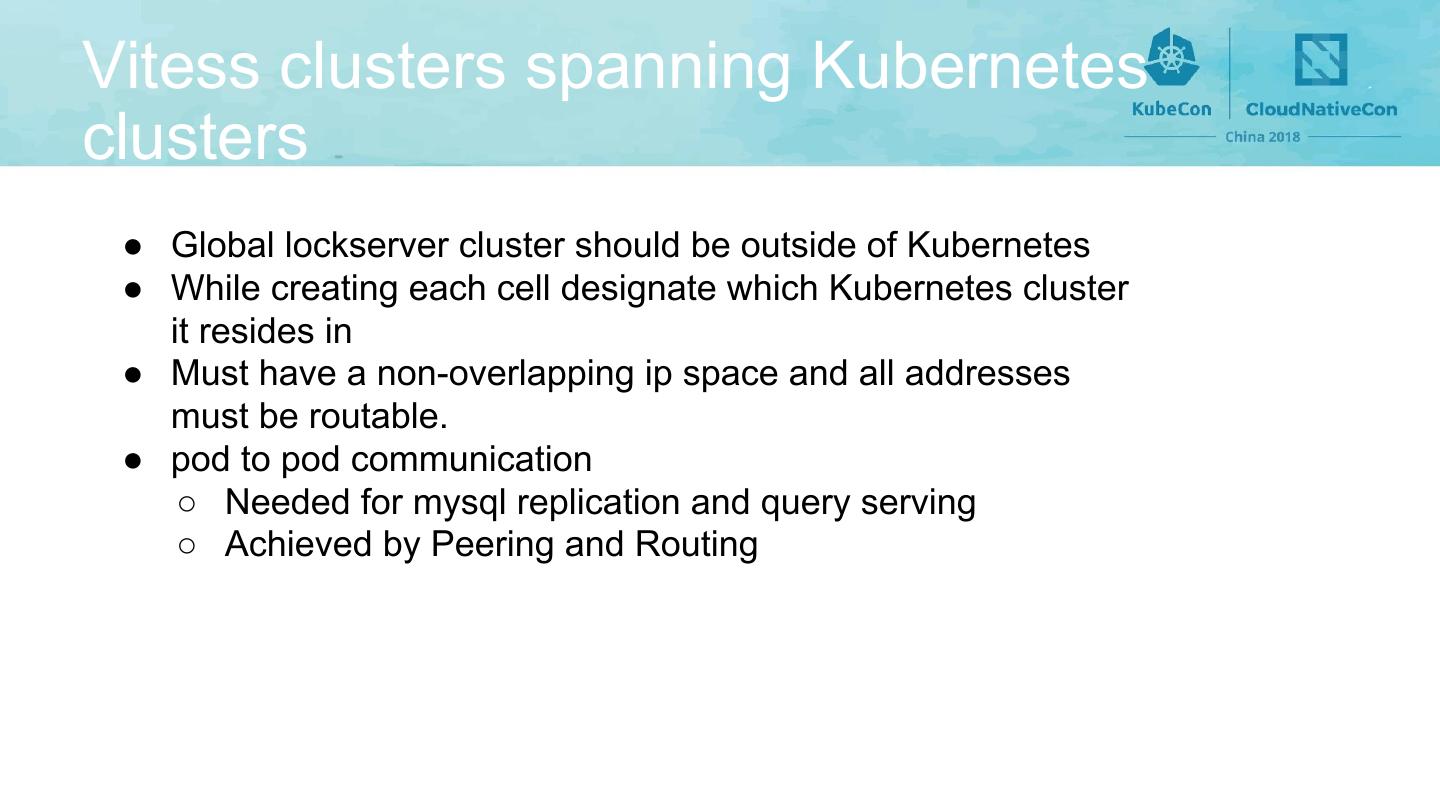

11. Vitess clusters spanning Kubernetes clusters

- Global lockserver cluster should be outside of Kubernetes
- While creating each cell, designate which Kubernetes cluster it resides in
- The Kubernetes clusters must have non-overlapping IP space, and all addresses must be routable
- Pod-to-pod communication
  - Needed for MySQL replication and query serving
  - Achieved by peering and routing
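The non-overlapping IP space requirement is easy to check up front. A minimal sketch using the standard-library `ipaddress` module follows; the cell names and CIDR blocks are made-up examples.

```python
import ipaddress
from itertools import combinations
from typing import Dict, List, Tuple


def overlapping_cells(pod_cidrs: Dict[str, str]) -> List[Tuple[str, str]]:
    # Parse each cell's pod CIDR and report every pair of cells whose ranges overlap.
    nets = {cell: ipaddress.ip_network(cidr) for cell, cidr in pod_cidrs.items()}
    return [
        (a, b)
        for a, b in combinations(sorted(nets), 2)
        if nets[a].overlaps(nets[b])
    ]


if __name__ == "__main__":
    # Hypothetical per-cluster pod ranges.
    cidrs = {"cell1": "10.0.0.0/16", "cell2": "10.1.0.0/16", "cell3": "10.1.128.0/17"}
    print(overlapping_cells(cidrs))
```

An empty result means pods in different clusters can be addressed unambiguously, which is a precondition for the peering and routing setup.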

12. About JD.COM

- One of the two biggest e-commerce companies in China
- The largest Chinese retailer
- 300 million active users
- First Chinese internet company to make the Fortune Global 500
- Largest e-commerce logistics infrastructure in China
  - Covering 99% of population
- Strategic Partnerships
  - Tencent
  - Walmart
  - Google

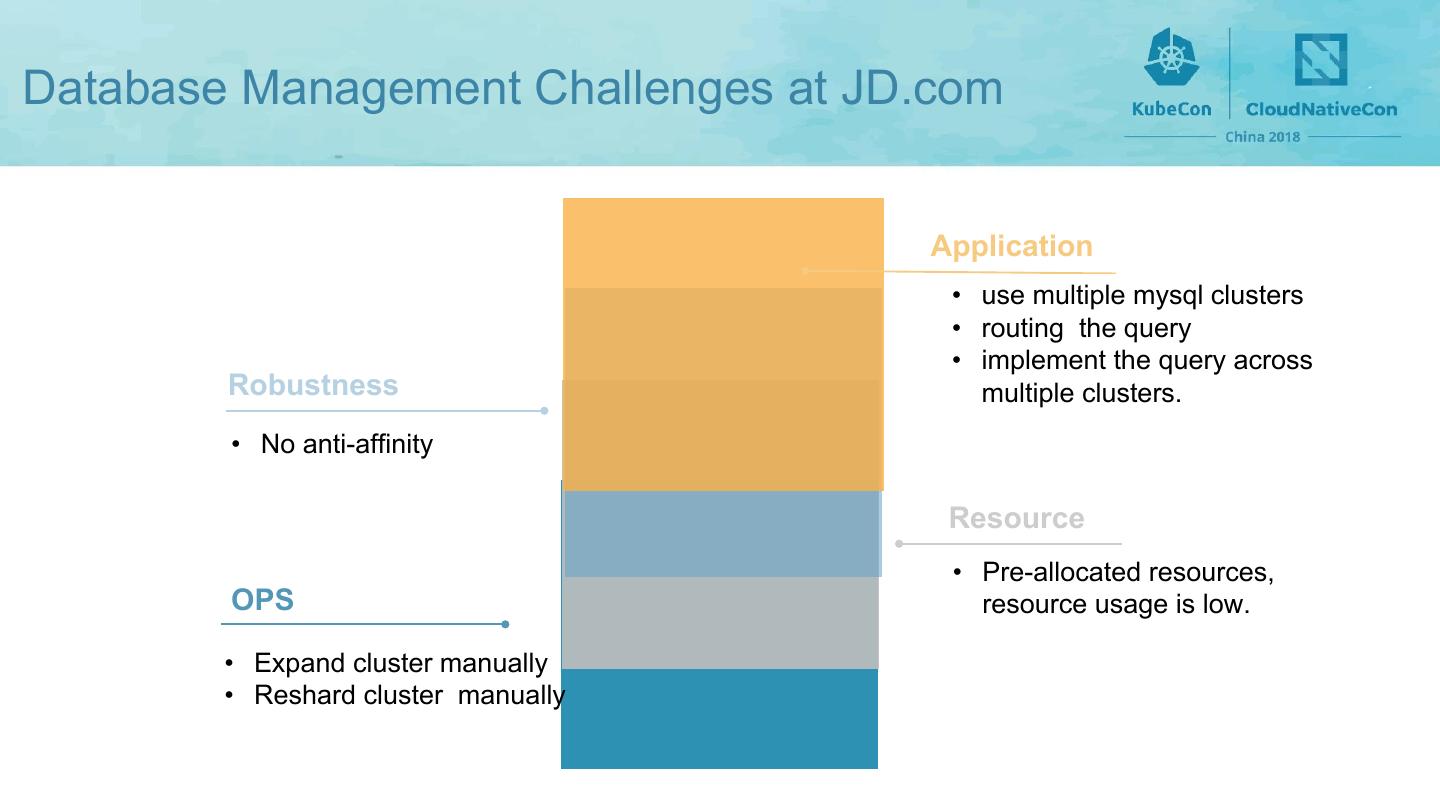

13. Database Management Challenges at JD.com

- Application
  - Uses multiple MySQL clusters
  - Has to route queries itself
  - Has to implement queries across multiple clusters
- Robustness
  - No anti-affinity
- Resource
  - Resources are pre-allocated, so resource usage is low
- OPS
  - Clusters are expanded manually
  - Clusters are resharded manually

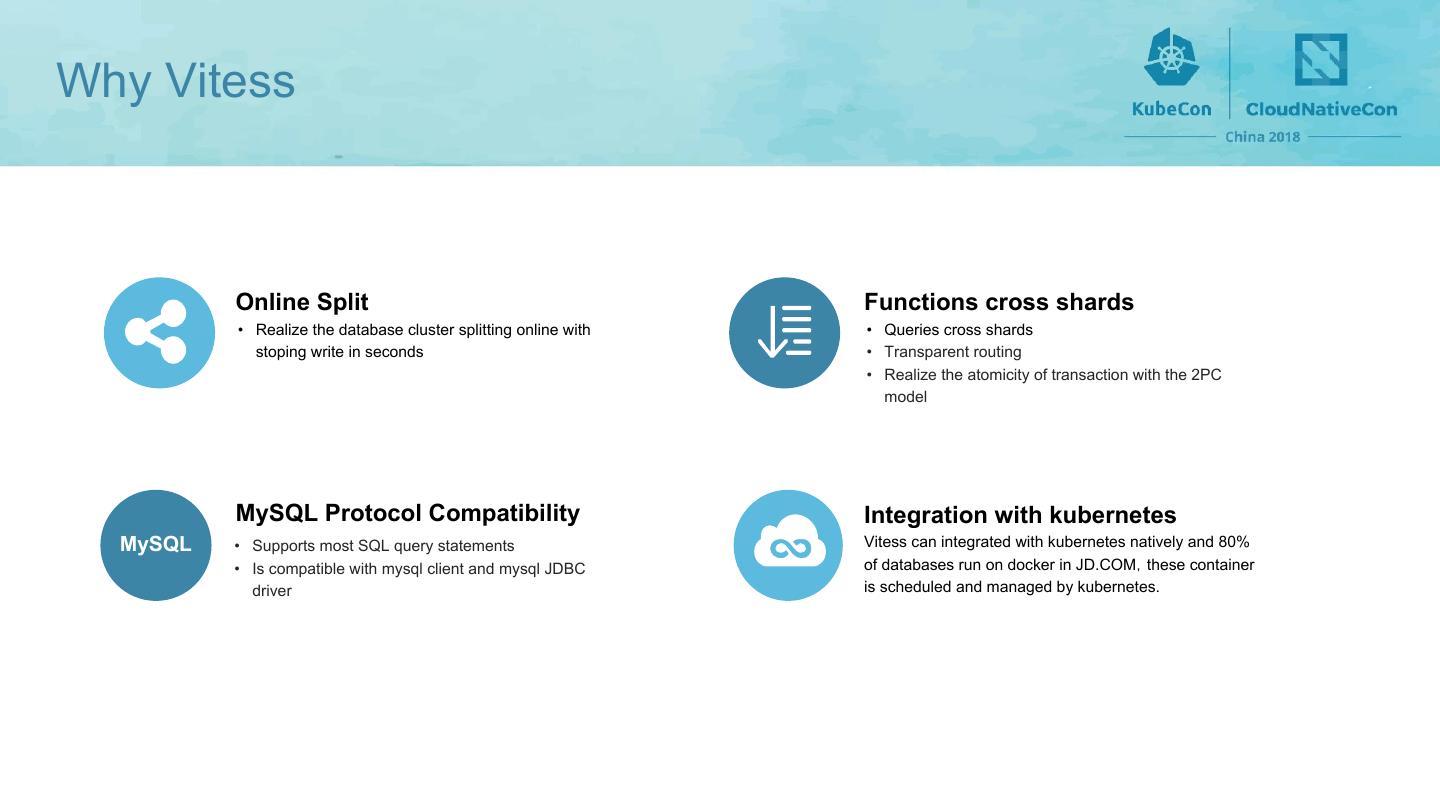

14. Why Vitess

- Online split
  - Database clusters can be split online, with writes stopped for only seconds
- Functions across shards
  - Cross-shard queries
  - Transparent routing
  - Transaction atomicity through the 2PC model
- MySQL protocol compatibility
  - Supports most SQL query statements
  - Is compatible with the MySQL client and the MySQL JDBC driver
- Integration with Kubernetes
  - Vitess integrates with Kubernetes natively, and 80% of databases at JD.COM run on Docker; these containers are scheduled and managed by Kubernetes

15. Roadmap

- Use Vitess first: say goodbye to JProxy, use Vitess
- Support important core systems: Settlement system, B2B price, Product, After-sales and Order details filing system
- Domestic deployment across multiple IDCs: 5 IDCs in China, supporting 1,500 business systems in China
- Support overseas business: Thailand, Indonesia and other countries

Timeline markers: 2017-01, 2017-06, 2017-12, 2018-9

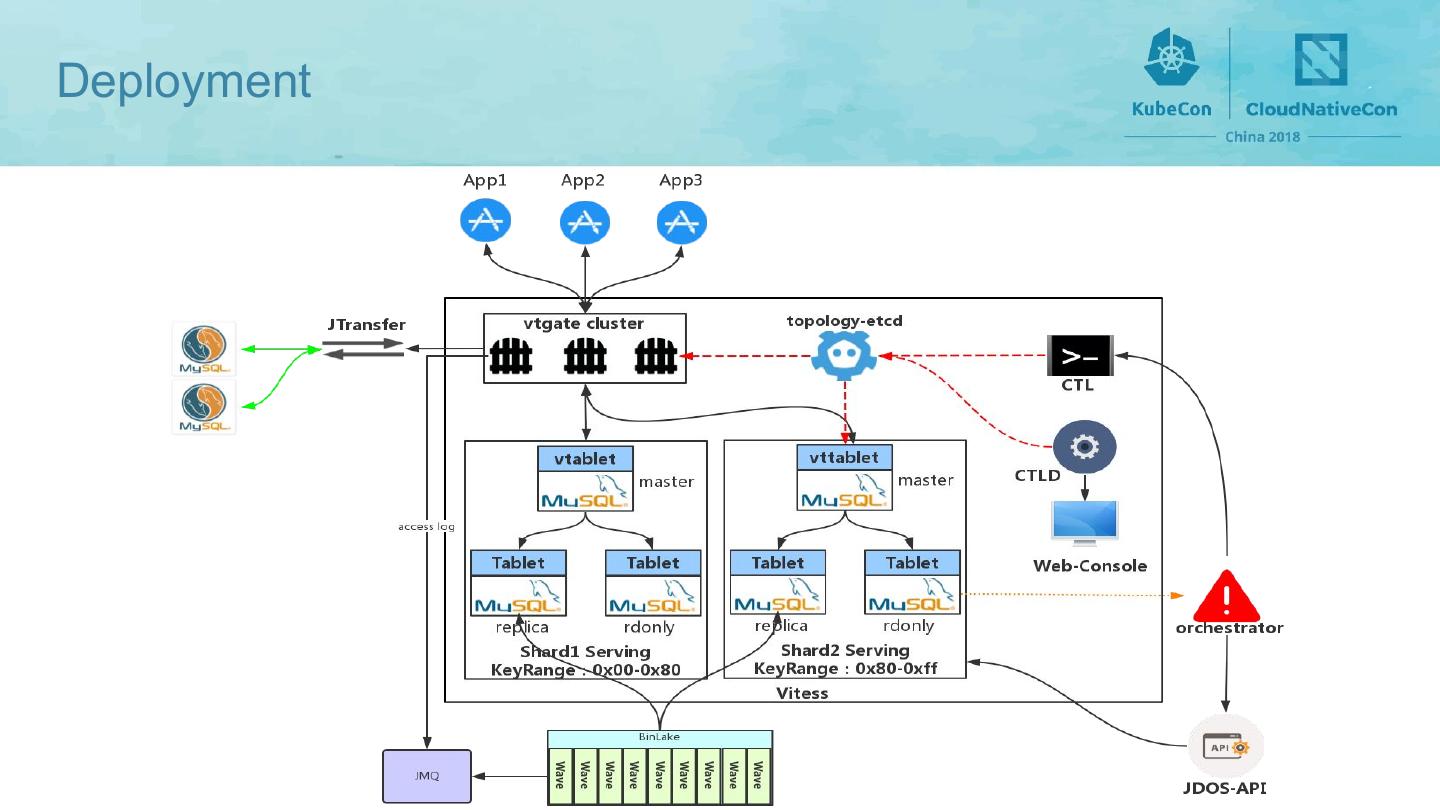

16. Deployment

The world’s largest and most complex Vitess deployment

- Deployment
  - KeySpace: 1911
  - DataCenter: 8
  - Shard: 4438
  - Tablet: 11416
  - Tables: 552104
  - Most Shards/KeySpace: 72
- Data size
  - 146 TB
  - 252 billion rows
- Supported business
  - MySQL projects: 1731
  - Businesses: Settlement system, order details system, B2B Price, Cis_pop, Logistics billing system, Coupon and so on (OLTP)
- Increase
  - 10 KeySpaces/week
  - 10 TB/week
  - 20 billion rows/week
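A few derived averages help put these figures in proportion. The short calculation below uses only the numbers on the slide; treating 1 TB as 1000 GB and assuming the weekly growth rate stays constant are simplifications made here.

```python
# Figures taken from the deployment slide.
keyspaces = 1911
shards = 4438
tablets = 11416
tables = 552104
data_tb = 146
rows = 252_000_000_000
weekly_growth_tb = 10

shards_per_keyspace = shards / keyspaces          # average, versus a maximum of 72
tablets_per_shard = tablets / shards
gb_per_shard = data_tb * 1000 / shards            # assumes 1 TB = 1000 GB
rows_per_table = rows / tables
weeks_to_add_current_size = data_tb / weekly_growth_tb  # assumes constant growth

if __name__ == "__main__":
    print(f"shards per keyspace:  {shards_per_keyspace:.2f}")
    print(f"tablets per shard:    {tablets_per_shard:.2f}")
    print(f"data per shard (GB):  {gb_per_shard:.1f}")
    print(f"rows per table:       {rows_per_table:,.0f}")
    print(f"weeks to add 146 TB:  {weeks_to_add_current_size:.1f}")
```

The averages suggest that most keyspaces have only a handful of shards, with the 72-shard keyspace as an outlier.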

17. Deployment

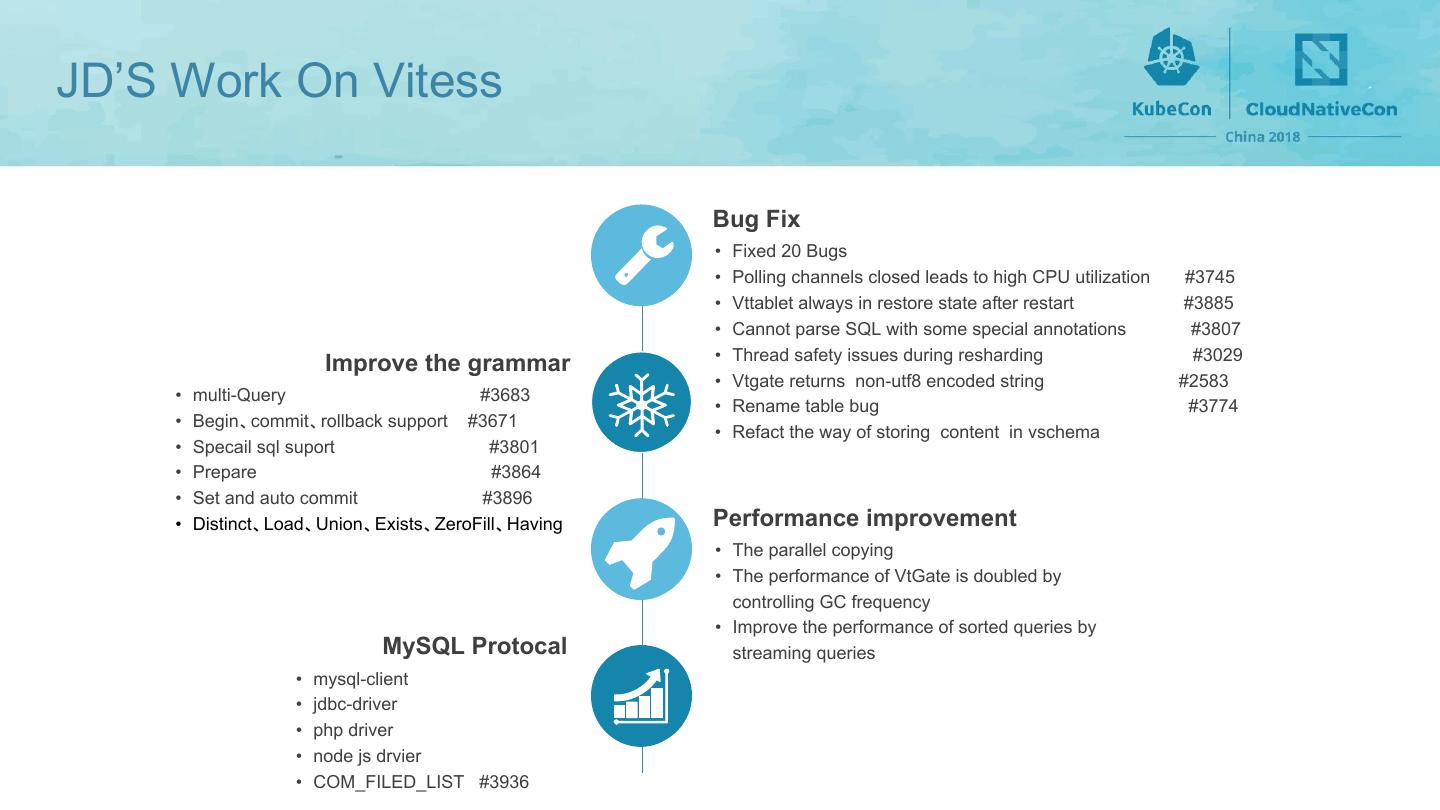

18. JD’s Work on Vitess

- Bug fixes
  - Fixed 20 bugs
  - Polling channels closed leads to high CPU utilization #3745
  - Vttablet always in restore state after restart #3885
  - Cannot parse SQL with some special annotations #3807
  - Thread safety issues during resharding #3029
  - Vtgate returns non-UTF-8 encoded string #2583
  - Rename table bug #3774
  - Refactor the way content is stored in vschema
- Grammar improvements
  - Multi-query #3683
  - Begin, commit, rollback support #3671
  - Special SQL support #3801
  - Prepare #3864
  - Set and autocommit #3896
  - Distinct, Load, Union, Exists, ZeroFill, Having
- Performance improvements
  - Parallel copying
  - VtGate performance doubled by controlling GC frequency
  - Improved performance of sorted queries through streaming queries
- MySQL protocol
  - mysql-client
  - jdbc-driver
  - php driver
  - node js driver
  - COM_FIELD_LIST #3936

19. JD’s Work on Vitess

- Ecosystem
  - JTransfer
  - BinLake
  - Data access audit
  - Management system
- Improved resource utilization
  - All in one container
  - CPU overcommitment
  - 1 master, 1 replica, 1 rdonly
- Elastic scaling
  - Local instant capacity expansion
  - Split with one action
  - Anti-affinity scheduling
- Multiple engines
  - RocksDB
  - TokuDB

20. Challenges

- Splitting: slow and manual
- Scale up: cannot scale up immediately
- Metadata: the design of metadata storage makes it impossible to deploy a very large Vitess cluster
- orchestrator: the design of orchestrator makes it impossible to manage too many instances

21. Solutions: Splitting

- Challenge
  - Many instances hold more than 1 TB of data, which makes splitting them very slow
  - There are too many business systems, so splitting each shard manually is not practical
- Solution
  - Strictly control the amount of data in each shard, keeping it below 512 GB
  - Parallel copy and replication to speed up the split process
  - One-click split, which can be triggered automatically or manually
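To make the 512 GB cap concrete, here is a small sketch that estimates how many shards an oversized instance needs and what the resulting range-based shard names would be. It assumes data is spread evenly across shards and that each split doubles the shard count; both function names are hypothetical.

```python
from typing import List


def shards_needed(size_gb: float, cap_gb: float = 512) -> int:
    # Double the shard count until each shard holds strictly less than the cap.
    n = 1
    while size_gb / n >= cap_gb:
        n *= 2
    return n


def shard_names(n: int) -> List[str]:
    # Equal hex ranges over the first byte of the keyspace ID, e.g. 2 -> ["-80", "80-"].
    if n < 1 or n > 256 or n & (n - 1):
        raise ValueError("n must be a power of two between 1 and 256")
    step = 256 // n
    bounds = [""] + [format(i * step, "02x") for i in range(1, n)] + [""]
    return [f"{bounds[i]}-{bounds[i + 1]}" for i in range(n)]


if __name__ == "__main__":
    n = shards_needed(1500)
    print(n, shard_names(n))
```

Under these assumptions a 1.5 TB instance ends up as four shards of roughly 375 GB each, since two shards of 750 GB would still exceed the cap.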

22. Solutions: Scale Up

- Challenge
  - Traffic peaks twice every year: 618 and 11.11
  - JD often runs promotions
  - We need to be able to increase database service capacity rapidly
- Solution
  - Increase CPU locally without service downtime
  - Monitor the load of physical machines and pods
  - One-click migration

23. Solutions: Metadata

- Challenge
  - With so many keyspaces, the vschema storage design makes the vschema value larger than 1.5 MB, which seriously affects the stability of etcd and frequently causes etcd instances to run out of memory (OOM)
- Solution
  - Store a URL in vschema, and fetch the contents of vschema from the URL
  - Split the single vschema value into metadata for many individual keyspaces
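The second solution, splitting one large value into per-keyspace values, can be sketched as follows. The key layout (`keyspaces/<name>/vschema`) and the synthetic vschema used in the example are assumptions for illustration, and the 1.5 MB threshold is simply the figure quoted on the slide.

```python
import json
from typing import Dict

SIZE_THRESHOLD = 1_500_000  # roughly the 1.5 MB figure from the slide


def encoded_size(value: Dict) -> int:
    # Size in bytes of the compact JSON encoding of a value.
    return len(json.dumps(value, separators=(",", ":")).encode())


def split_by_keyspace(vschema: Dict) -> Dict[str, Dict]:
    # One lockserver key per keyspace instead of a single monolithic value.
    return {
        f"keyspaces/{name}/vschema": ks
        for name, ks in vschema["keyspaces"].items()
    }


def synthetic_vschema(keyspaces: int, tables: int) -> Dict:
    # Made-up vschema of the usual shape: sharded keyspaces with hash vindexes.
    def one_keyspace() -> Dict:
        return {
            "sharded": True,
            "vindexes": {"hash": {"type": "hash"}},
            "tables": {
                f"t{i}": {"column_vindexes": [{"column": "id", "name": "hash"}]}
                for i in range(tables)
            },
        }
    return {"keyspaces": {f"ks{k}": one_keyspace() for k in range(keyspaces)}}


if __name__ == "__main__":
    whole = synthetic_vschema(keyspaces=2000, tables=20)
    parts = split_by_keyspace(whole)
    print(encoded_size(whole) > SIZE_THRESHOLD)
    print(max(encoded_size(p) for p in parts.values()))
```

After the split, a change to one keyspace rewrites only that keyspace's small value rather than the whole multi-megabyte document.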

24. Solutions: orchestrator

- Challenge
  - When the cluster monitored by orchestrator has more than 5,000 instances, orchestrator keeps changing leader in a loop and cannot provide service
- Solution
  - One orchestrator per cell
  - Keep the number of instances in one cell below 5,000

25. Ongoing Work and Next Steps

- Resharding isolation: each worker is responsible for splitting one shard, so that workers split independently without affecting each other
- Refactor VSchema: the VSchema content is currently stored in one value; we will split it into individual per-keyspace content
- Auto-balance: automatic capacity scaling, splitting and migration of database load, driven by monitoring data
