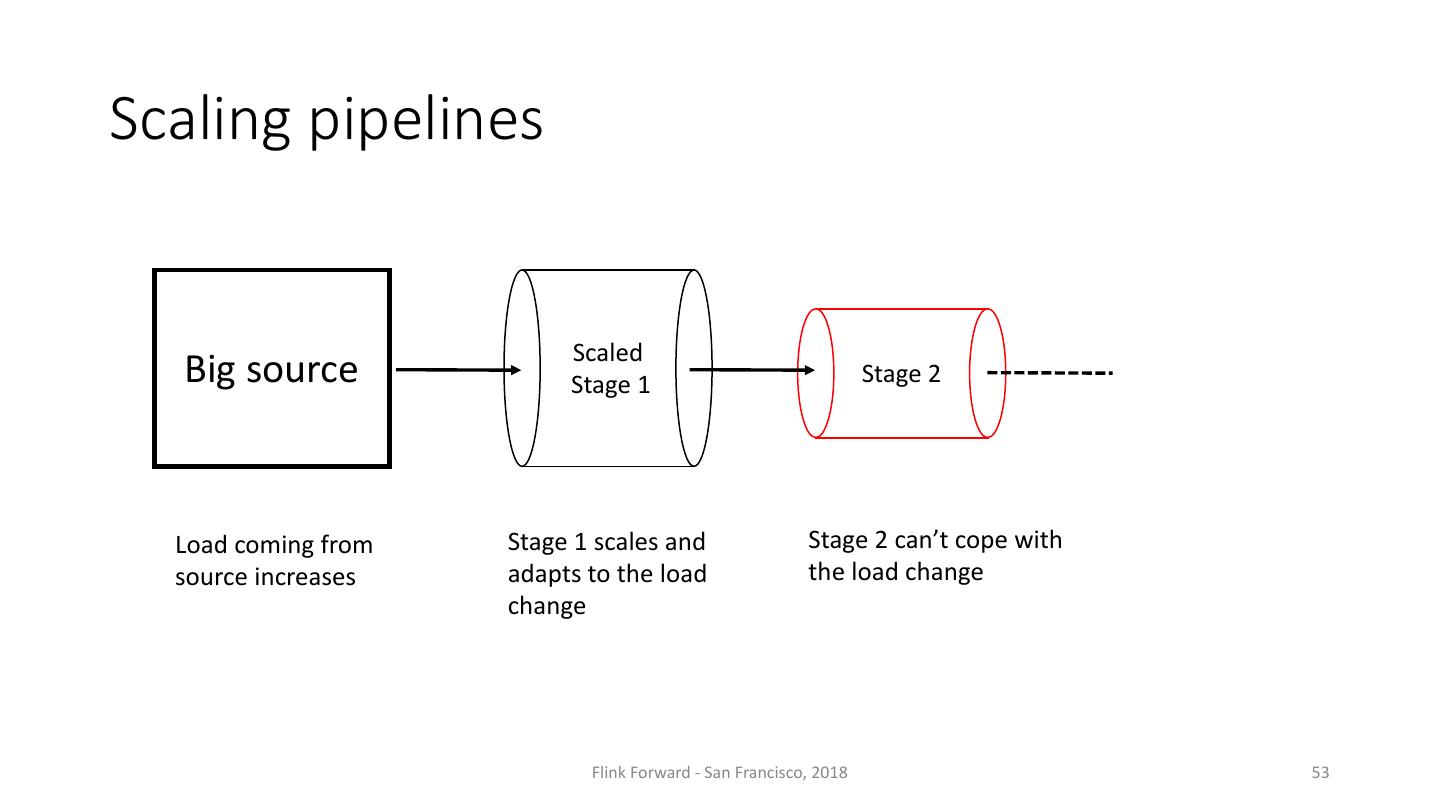

Scaling stream data pipelines

1. Scaling stream data pipelines

- Flavio Junqueira, Pravega - Dell EMC
- Till Rohrmann, Data Artisans

2. Motivation

Flink Forward - San Francisco, 2018

5. Streams ahoy!

- Stream of user events
  - Status updates (social networks)
  - Online transactions (online shopping)
- Stream of server events (server monitoring)
  - CPU, memory, disk utilization
- Stream of sensor events (sensors, IoT)
  - Temperature samples
  - Samples from radar and image sensors in cars

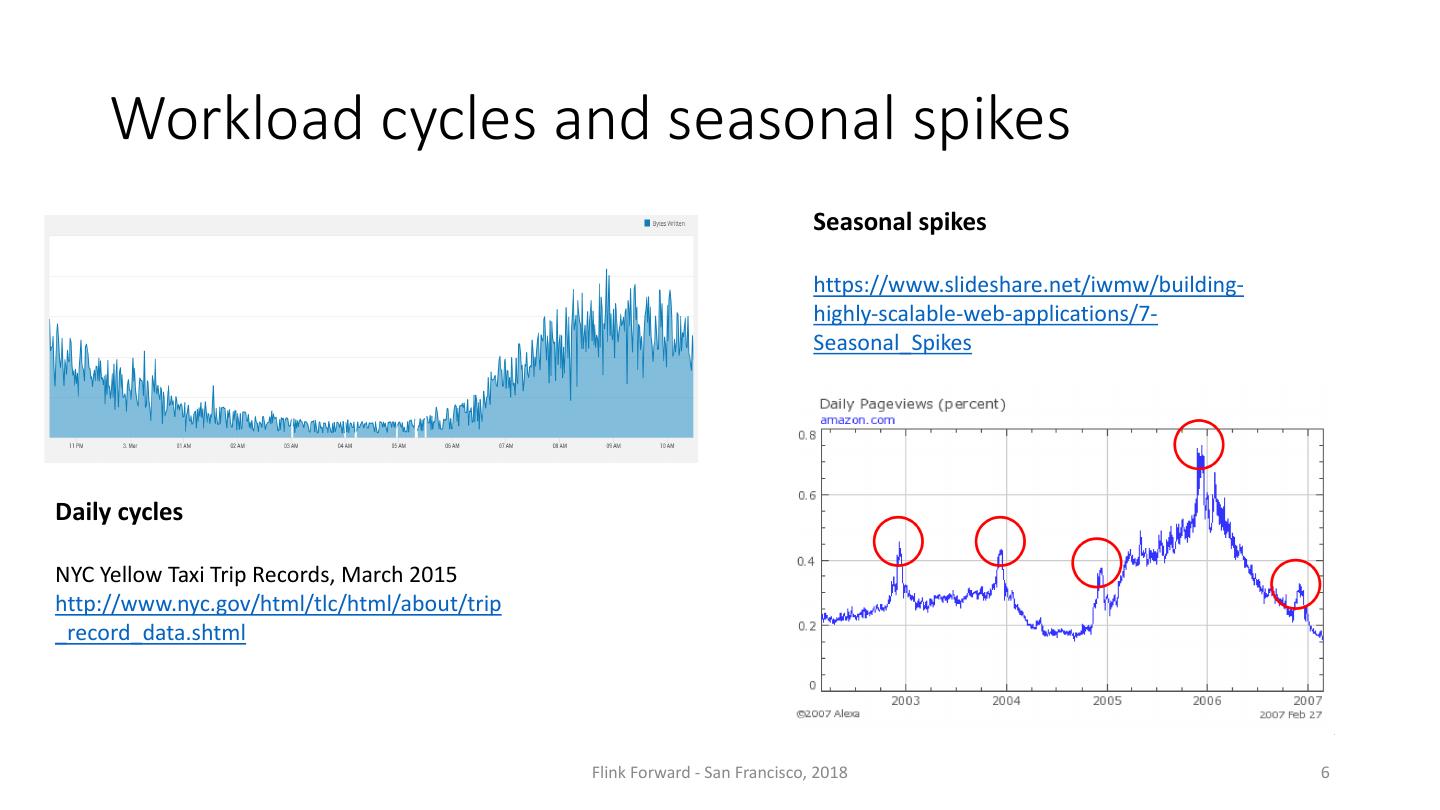

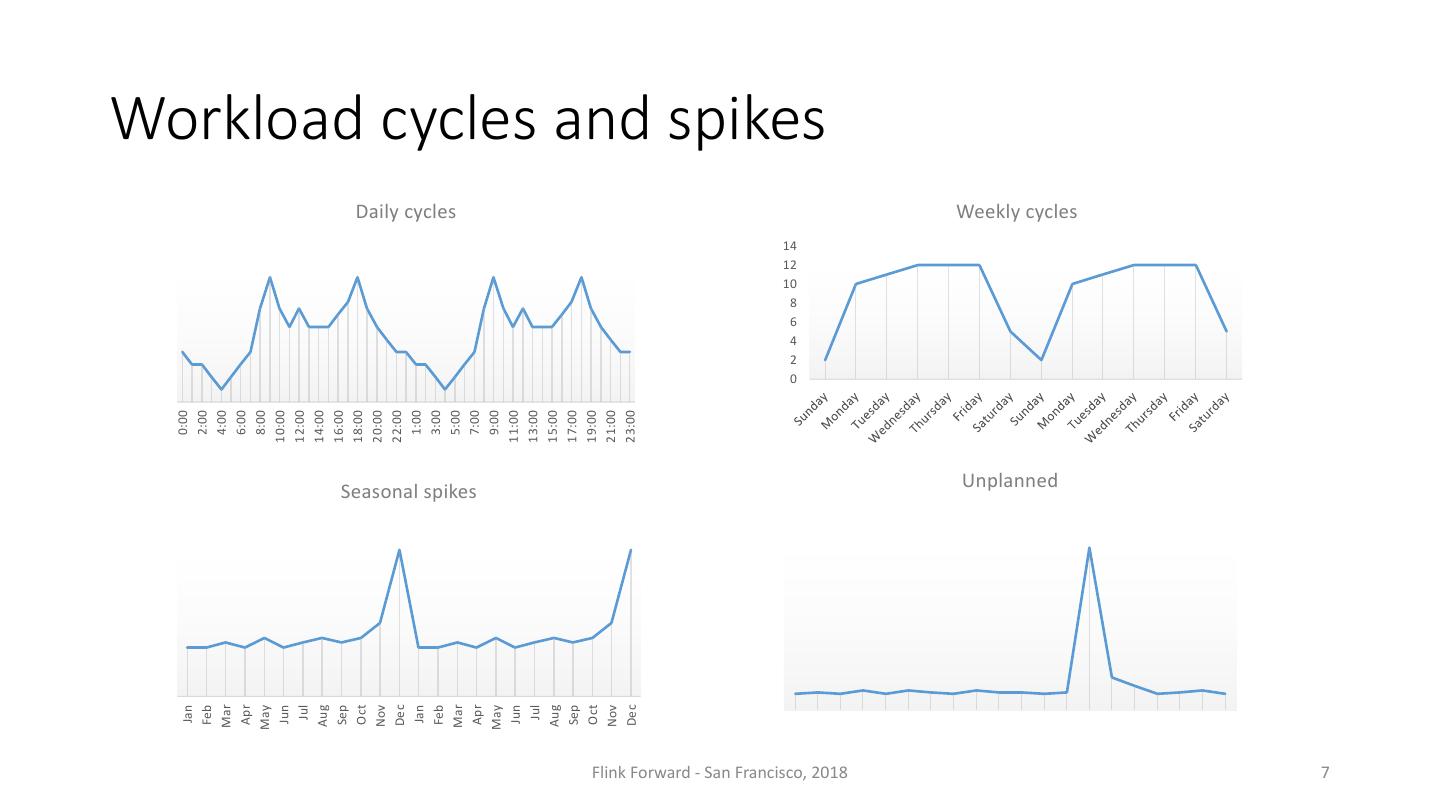

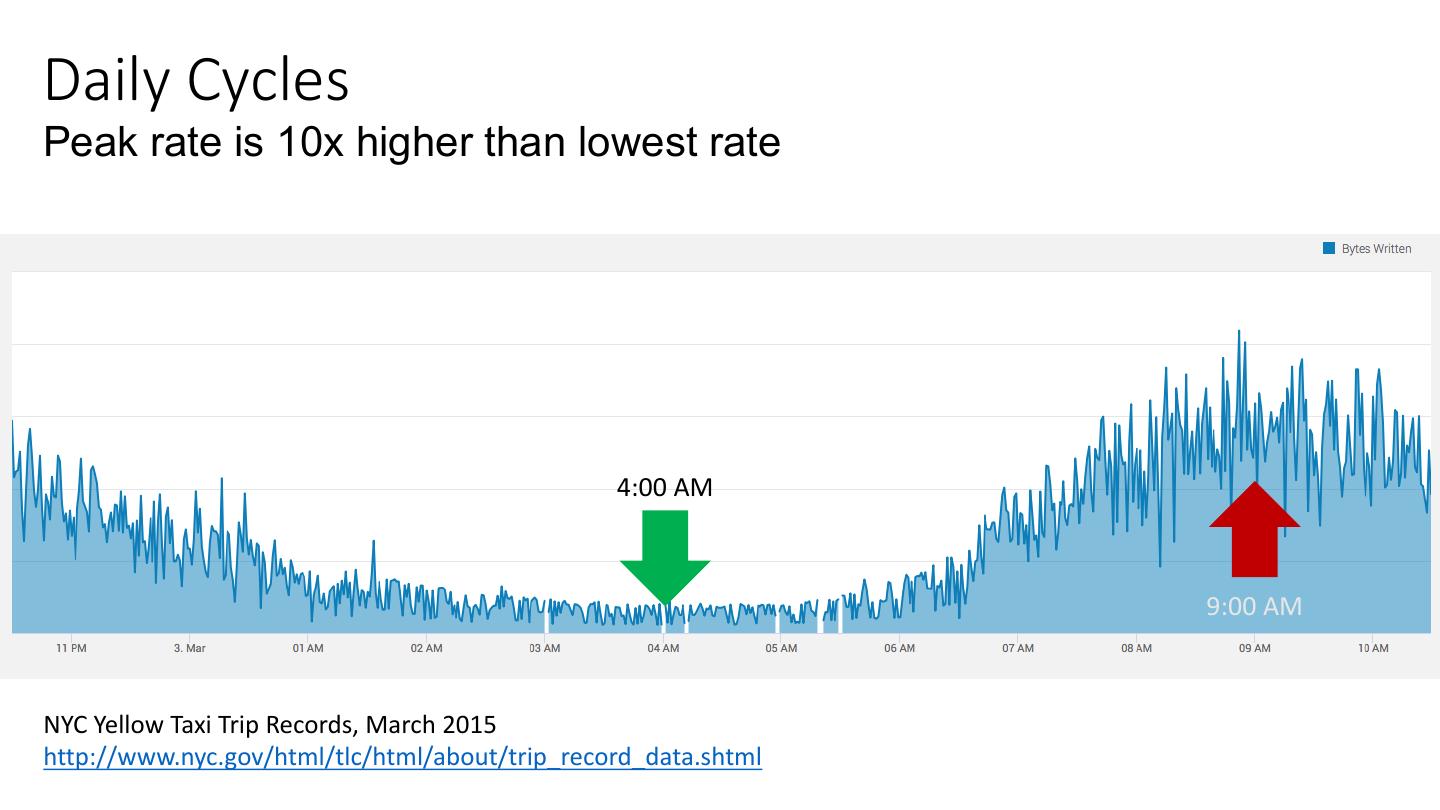

6. Workload cycles and seasonal spikes

- Seasonal spikes (source: https://www.slideshare.net/iwmw/building-highly-scalable-web-applications/7-Seasonal_Spikes)
- Daily cycles: NYC Yellow Taxi Trip Records, March 2015 (source: http://www.nyc.gov/html/tlc/html/about/trip_record_data.shtml)

7. Workload cycles and spikes

[Figure: workload charts. Panels: Daily cycles (x-axis: hour of day, 0:00 to 23:00), Seasonal spikes (x-axis: month, Jan to Dec), Weekly cycles, Unplanned.]

8. Overprovisioning… what if we don't want to overprovision?

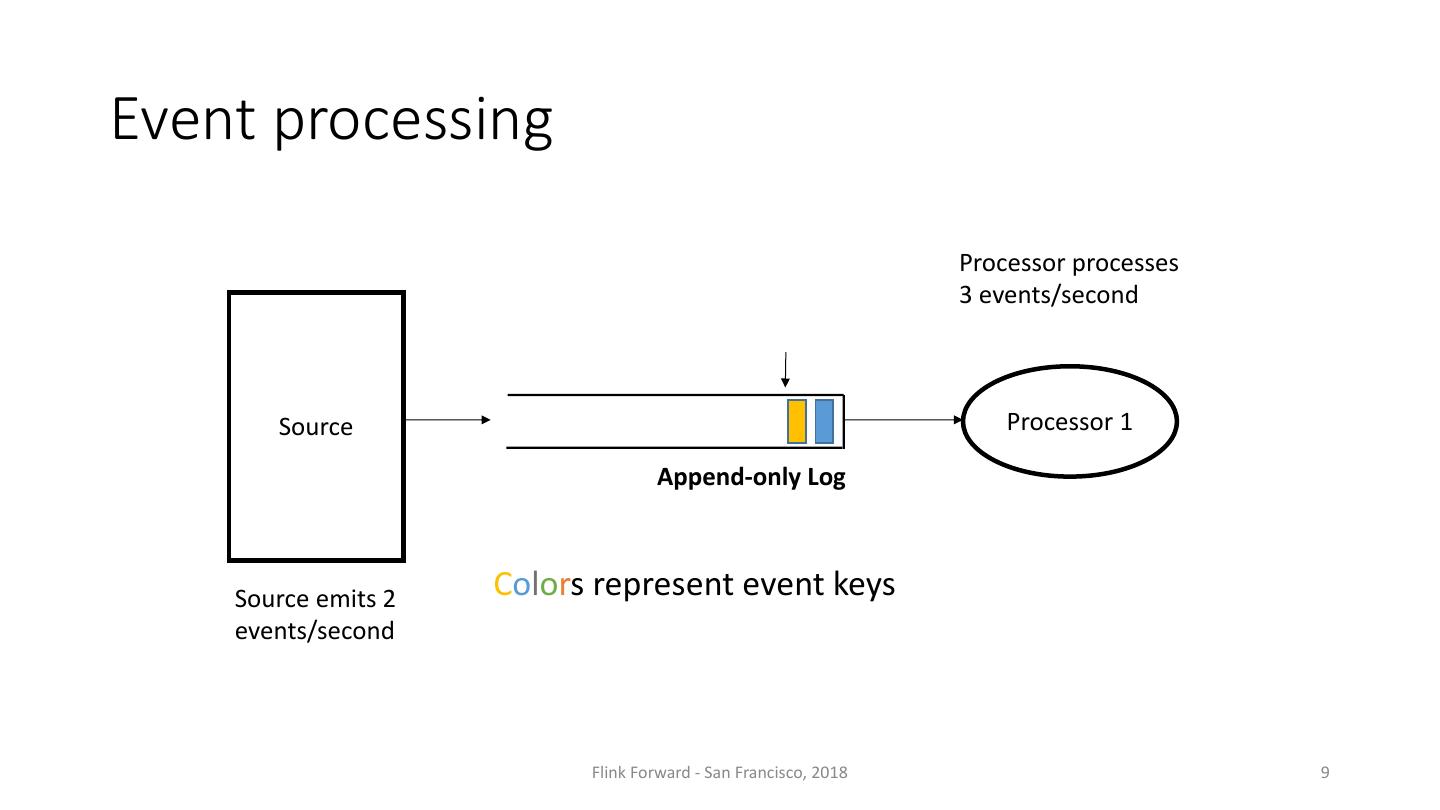

9. Event processing

- Source emits 2 events/second
- Processor processes 3 events/second
- Diagram labels: Source, Append-only Log, Processor 1. Colors represent event keys.

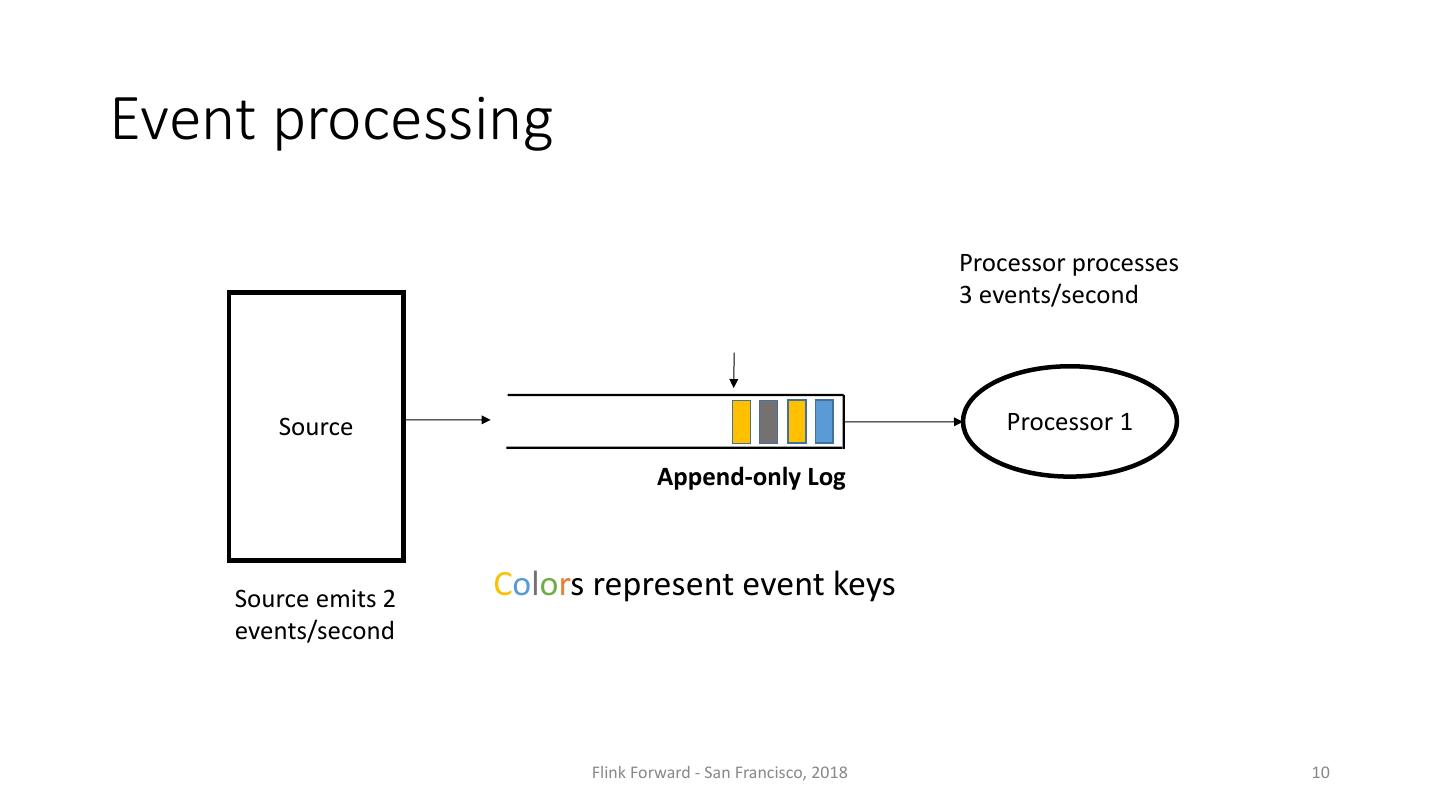

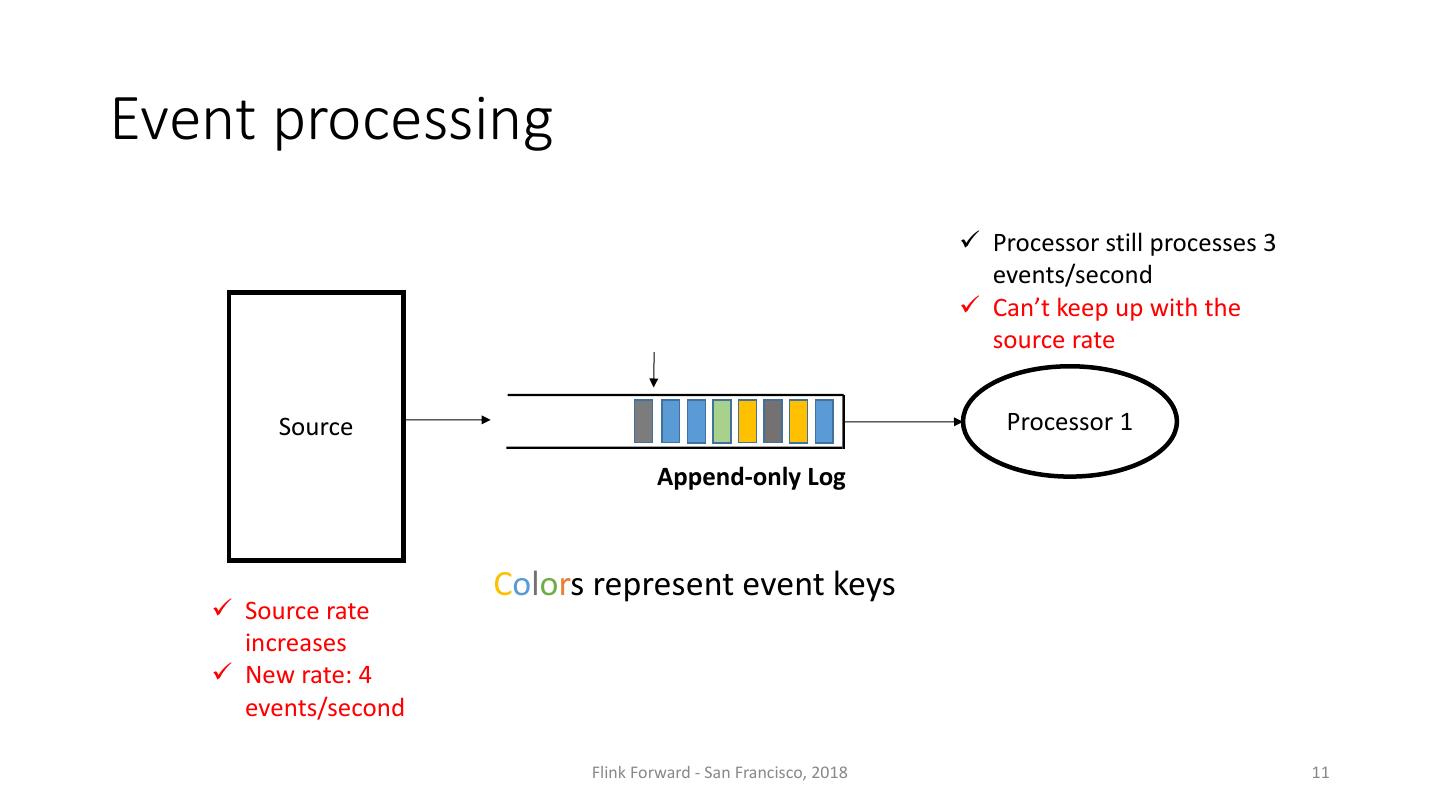

11. Event processing

- Source rate increases; new rate: 4 events/second
- Processor still processes 3 events/second
- Can't keep up with the source rate
- Diagram labels: Source, Append-only Log, Processor 1. Colors represent event keys.

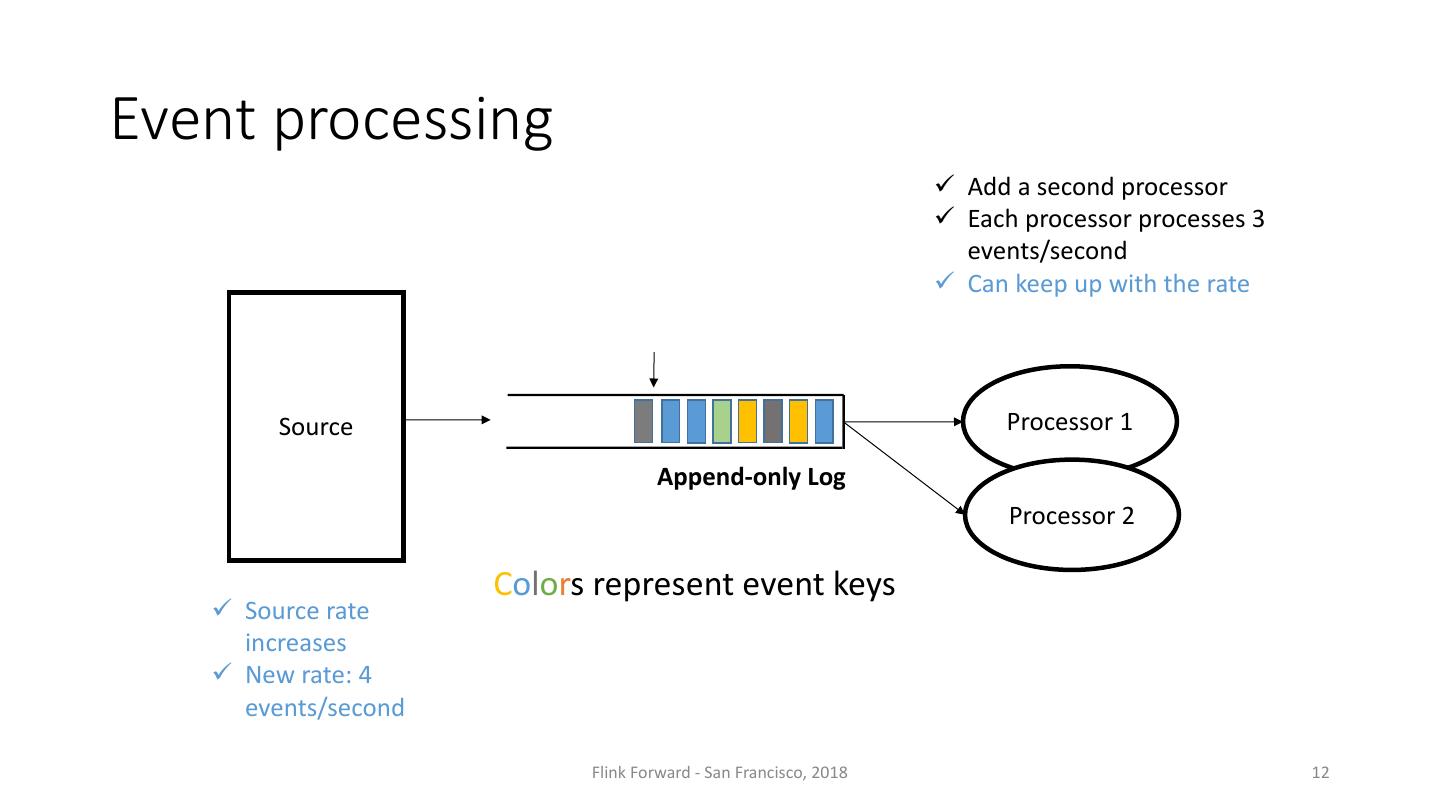

12. Event processing

- Source rate increases; new rate: 4 events/second
- Add a second processor
- Each processor processes 3 events/second
- Can keep up with the rate
- Diagram labels: Source, Append-only Log, Processor 1, Processor 2. Colors represent event keys.
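The arithmetic behind the single-processor and two-processor cases can be checked with a short sketch. It assumes constant rates, and the function names `backlog_after` and `processors_needed` are hypothetical helpers introduced only for illustration.

```python
import math

def backlog_after(seconds, source_rate, processor_rate, num_processors=1):
    """Events left unprocessed after `seconds`, assuming constant rates."""
    capacity = processor_rate * num_processors
    # A backlog only builds up when the source outpaces total capacity.
    return max(0, (source_rate - capacity) * seconds)

def processors_needed(source_rate, processor_rate):
    """Smallest number of processors whose combined rate covers the source."""
    return math.ceil(source_rate / processor_rate)

# One processor at 3 events/second against a source at 4 events/second.
print(backlog_after(60, source_rate=4, processor_rate=3))                    # 60
# Two processors at 3 events/second each keep up with the same source.
print(backlog_after(60, source_rate=4, processor_rate=3, num_processors=2))  # 0
print(processors_needed(source_rate=4, processor_rate=3))                    # 2
```

With one processor the backlog grows by one event every second, which is why adding capacity is unavoidable once the source rate rises to 4 events/second.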

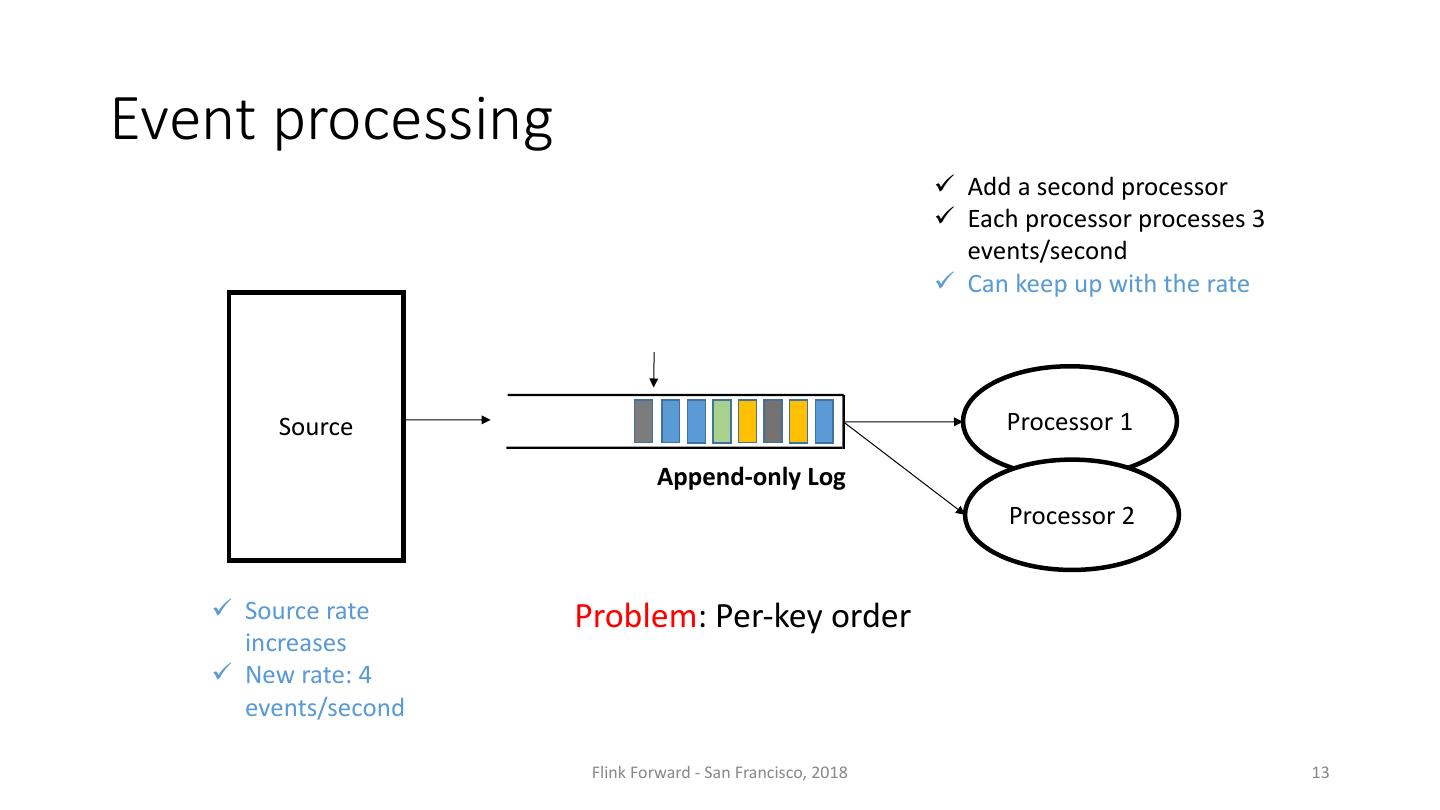

13. Event processing

- Source rate increases; new rate: 4 events/second
- Add a second processor
- Each processor processes 3 events/second
- Can keep up with the rate
- Problem: per-key order
- Diagram labels: Source, Append-only Log, Processor 1, Processor 2

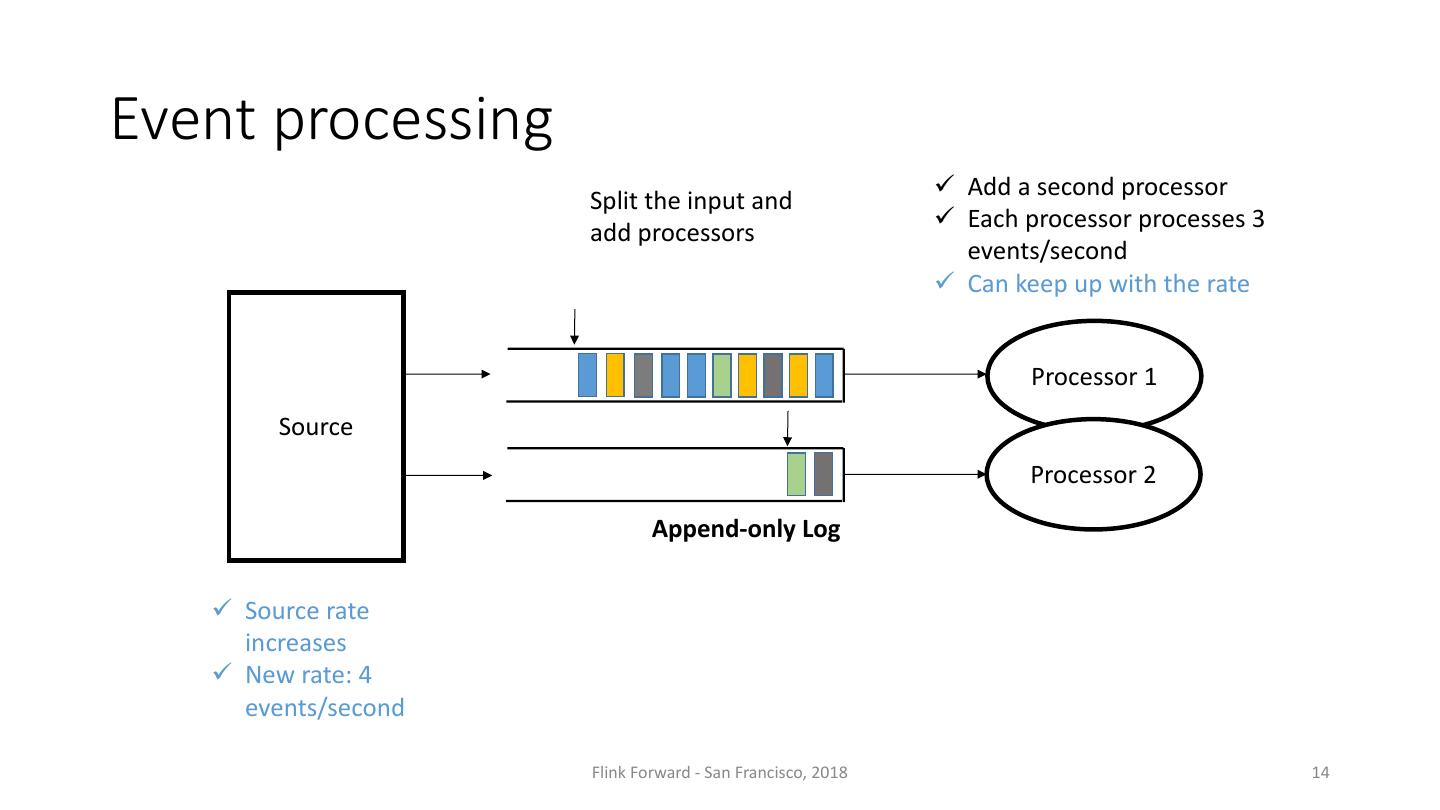

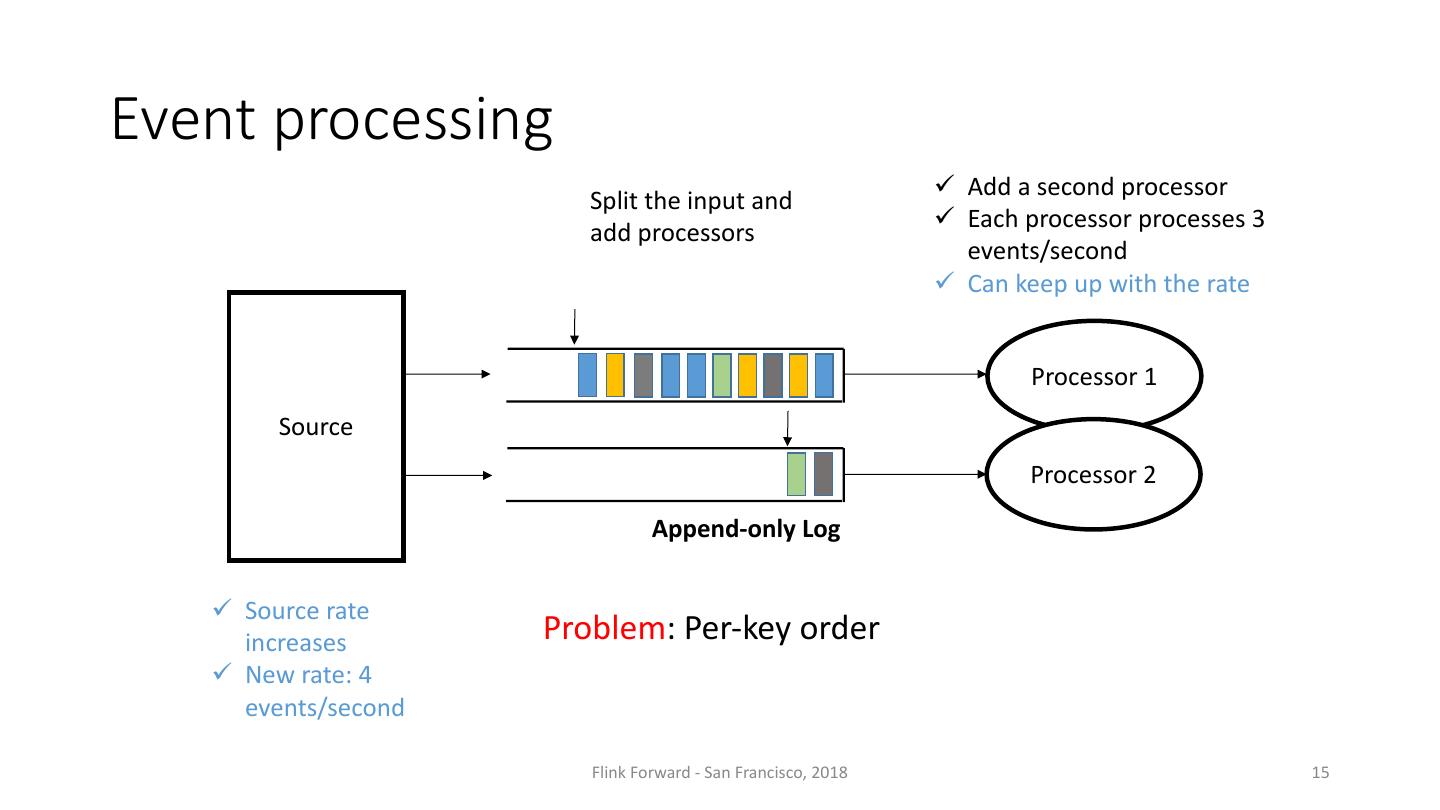

15. Event processing

- Source rate increases; new rate: 4 events/second
- Split the input and add processors
- Add a second processor
- Each processor processes 3 events/second
- Can keep up with the rate
- Problem: per-key order
- Diagram labels: Source, Append-only Log, Processor 1, Processor 2

16. Event processing

- Source rate increases; new rate: 4 events/second
- Split the input and add processors
- Add a second processor
- Each processor processes 3 events/second
- Can keep up with the rate
- Processor 2 only starts once earlier events have been processed
- Diagram labels: Source, Processor 1, Processor 2
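The per-key ordering problem, and why the new processor has to wait for earlier events, can be illustrated with a small simulation. This is a sketch under stated assumptions: events are `(key, seq)` pairs with a per-key counter, the split uses a simple stable hash of the key, and `split_by_key` and `per_key_order_ok` are hypothetical names, not part of any library.

```python
from collections import defaultdict

def split_by_key(events, num_partitions):
    """Assign each (key, seq) event to a partition chosen by its key."""
    partitions = defaultdict(list)
    for key, seq in events:
        # Hypothetical placement rule: a stable hash of the key, so that
        # all events with the same key land in the same partition.
        partitions[sum(key.encode()) % num_partitions].append((key, seq))
    return partitions

def per_key_order_ok(processed):
    """True if every key's sequence numbers were processed in increasing order."""
    last = {}
    for key, seq in processed:
        if seq <= last.get(key, -1):
            return False
        last[key] = seq
    return True

# Events written before and after the split point.
before = [("red", 0), ("blue", 0), ("red", 1)]
after = [("red", 2), ("blue", 1), ("green", 0), ("blue", 2)]
parts = split_by_key(after, 2)

# Safe schedule: the pre-split events are fully processed first, and only
# then do the processors start on the post-split partitions.
safe = before + parts[0] + parts[1]

# Unsafe schedule: the new processor starts on its partition immediately,
# while pre-split events are still waiting to be processed.
unsafe = parts[1] + before + parts[0]

print(per_key_order_ok(safe))    # True
print(per_key_order_ok(unsafe))  # False
```

Splitting by key keeps each key inside one partition, but order across the split point is only preserved if processing of the new partitions is held back until the earlier events are done.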

17. What about the order of events? What happens if the rate increases again? What if it drops?

18. Scaling in Pravega

19. Pravega

- Storing data streams
- Young project, under active development
- Open source
- http://pravega.io
- http://github.com/pravega/pravega

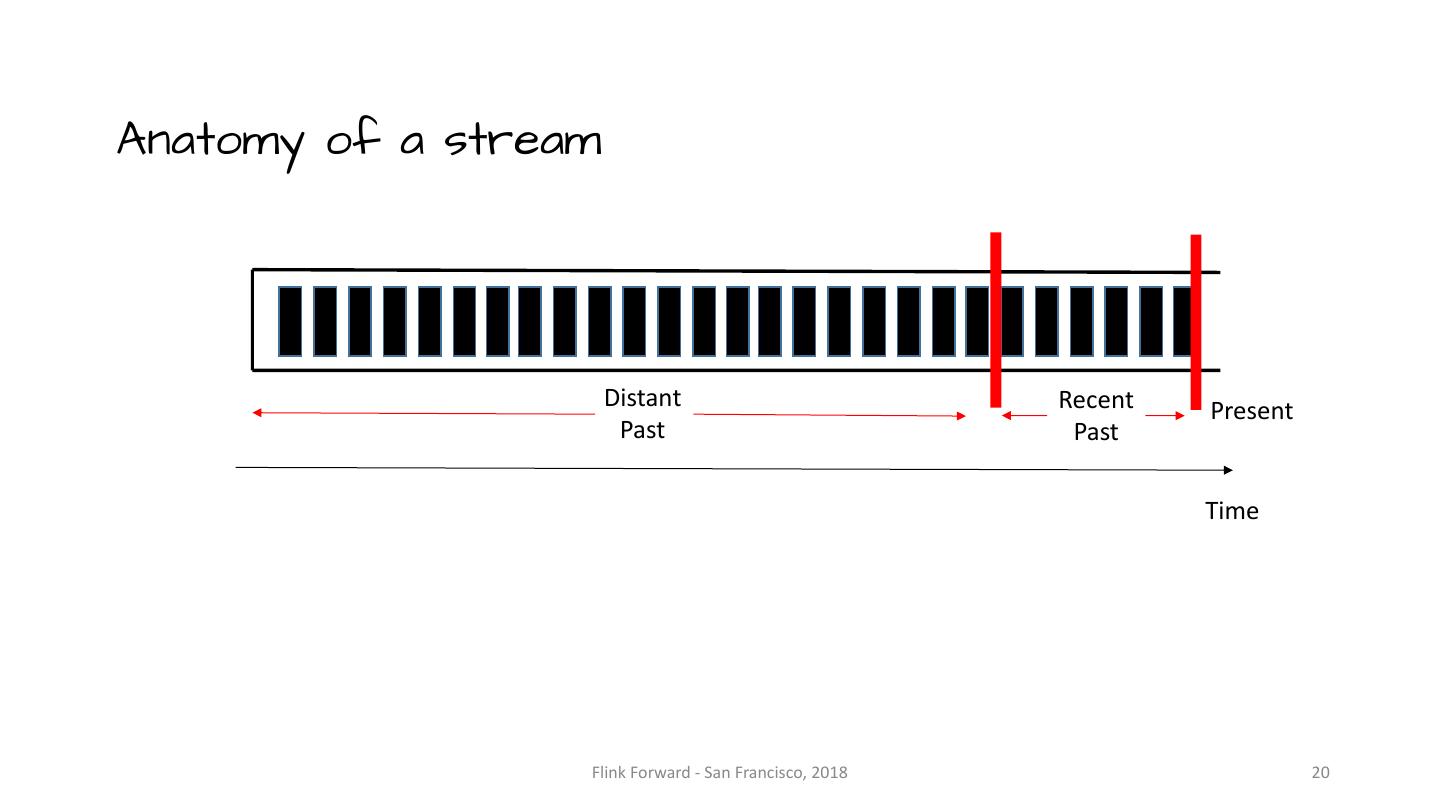

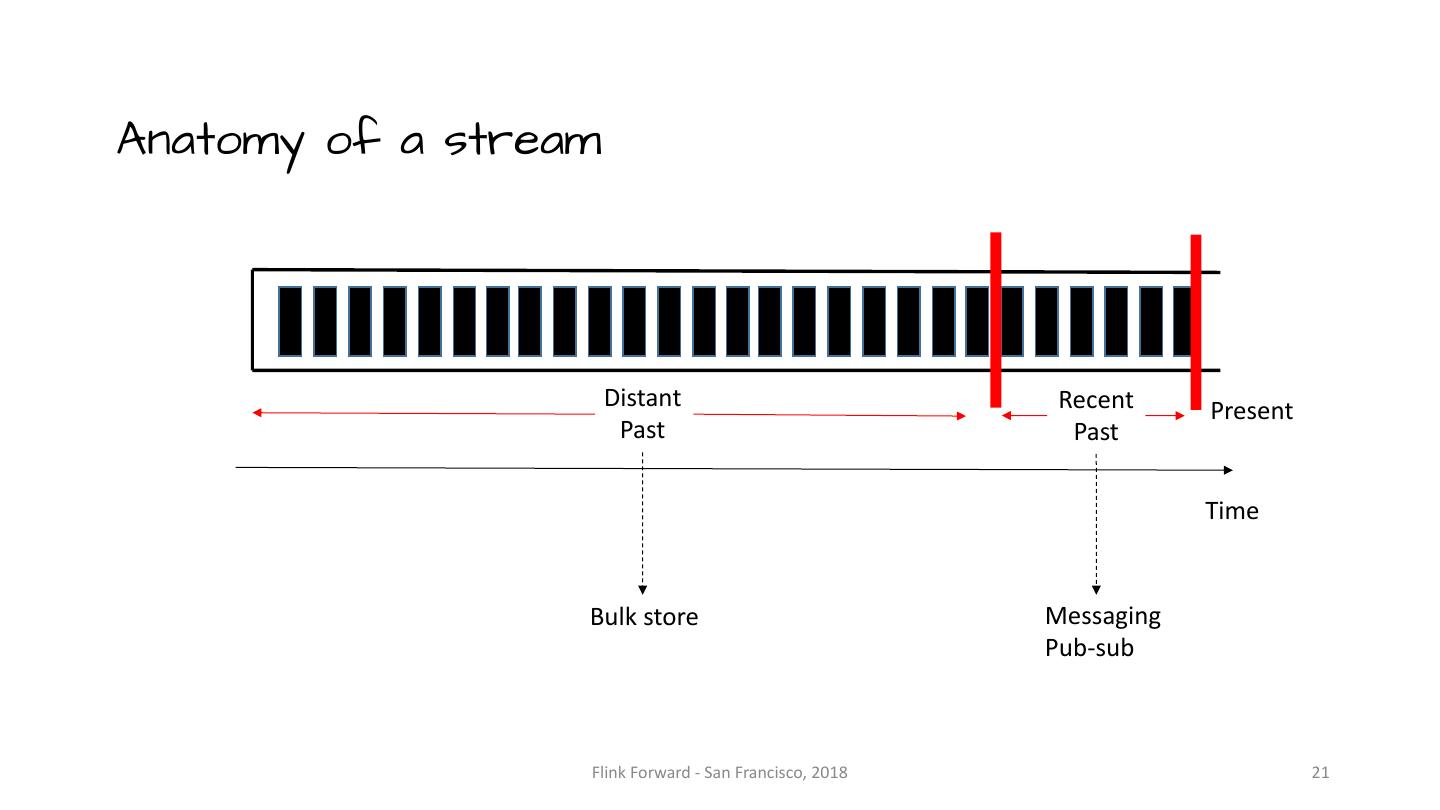

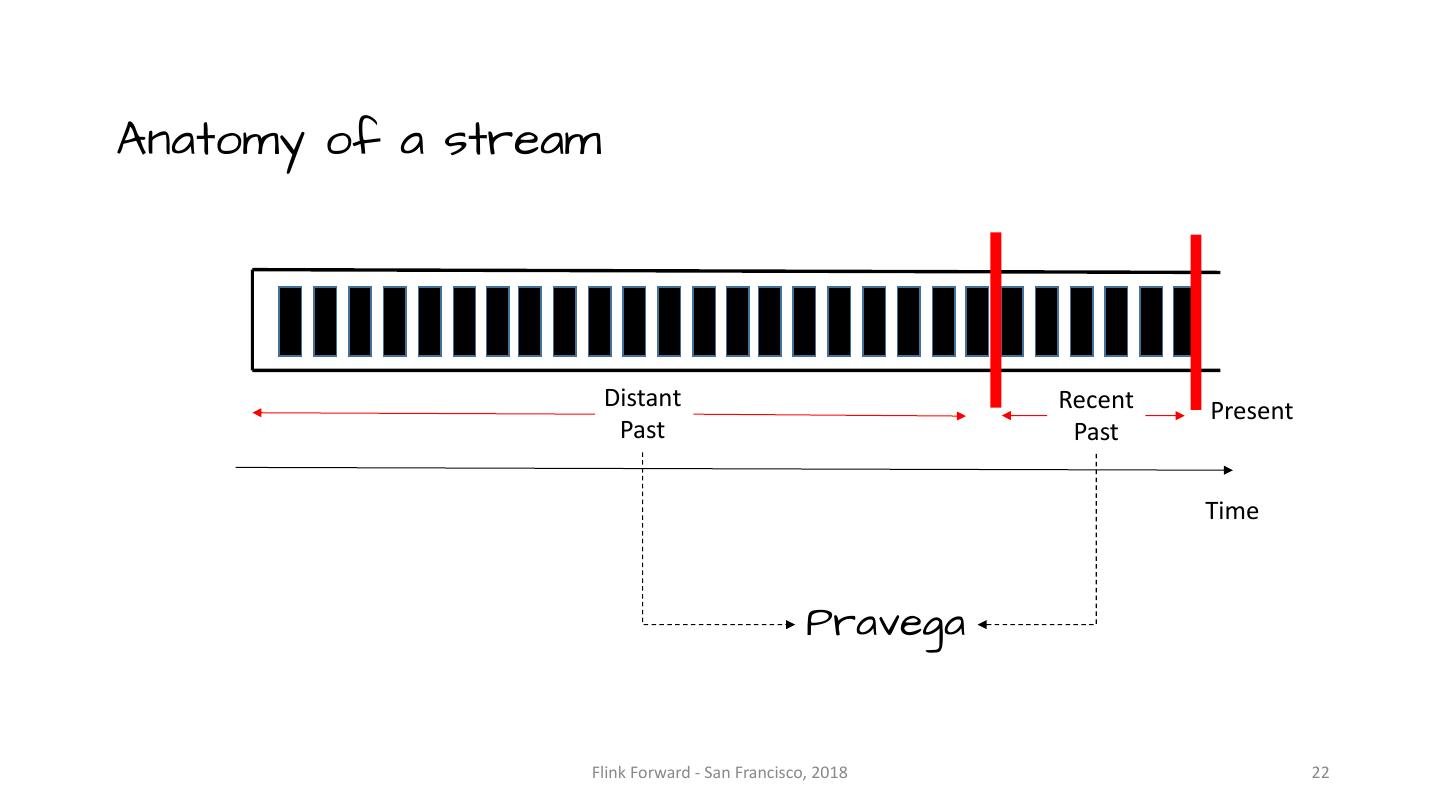

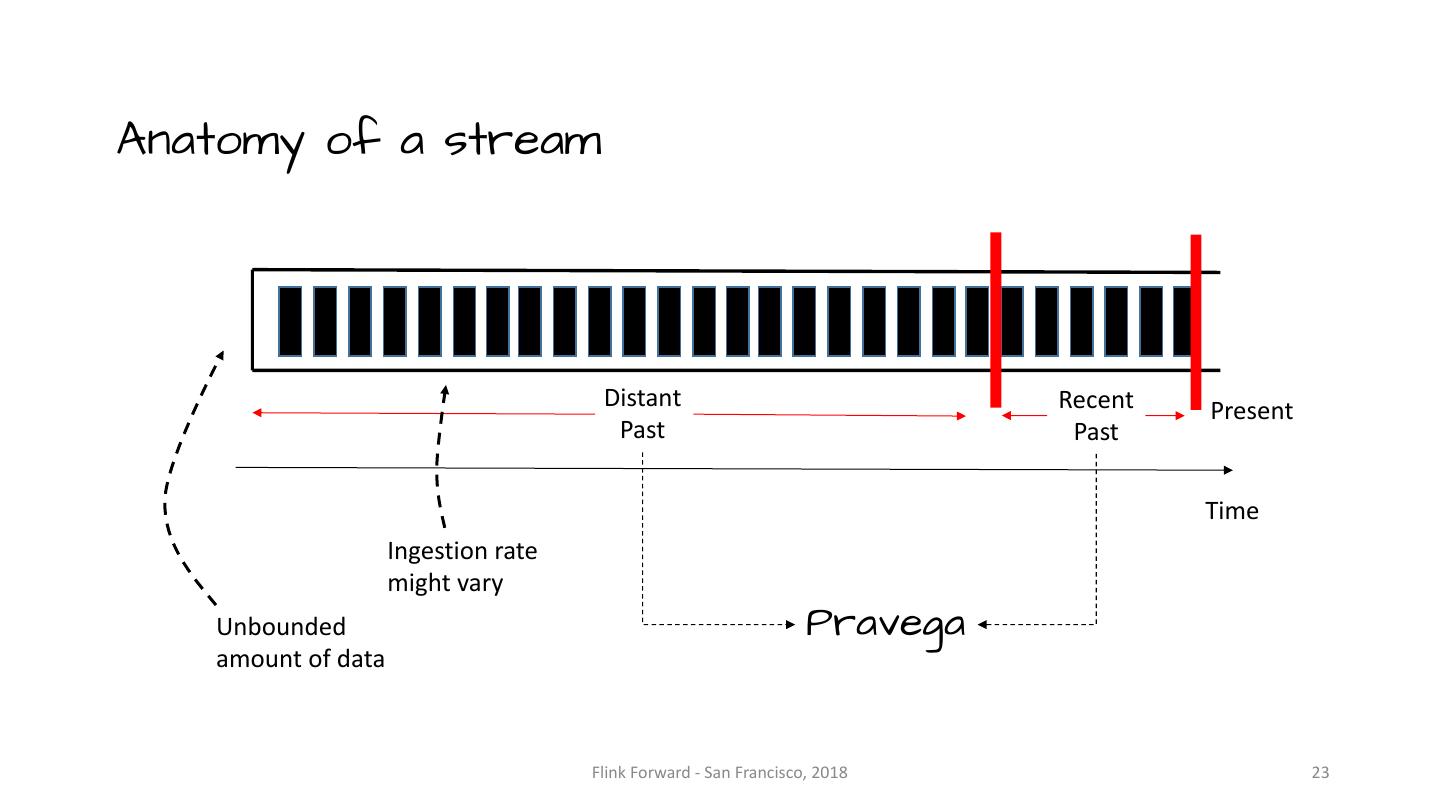

21. Anatomy of a stream

- Time axis: Distant Past, Recent Past, Present
- Diagram labels: Bulk store; Messaging, Pub-sub

23. Anatomy of a stream

- Time axis: Distant Past, Recent Past, Present
- Pravega
- Ingestion rate might vary
- Unbounded amount of data

24. Pravega aims to be a stream store able to:

- Store stream data permanently
- Preserve order
- Accommodate unbounded streams
- Adapt to varying workloads automatically
- Provide low latency from append to read

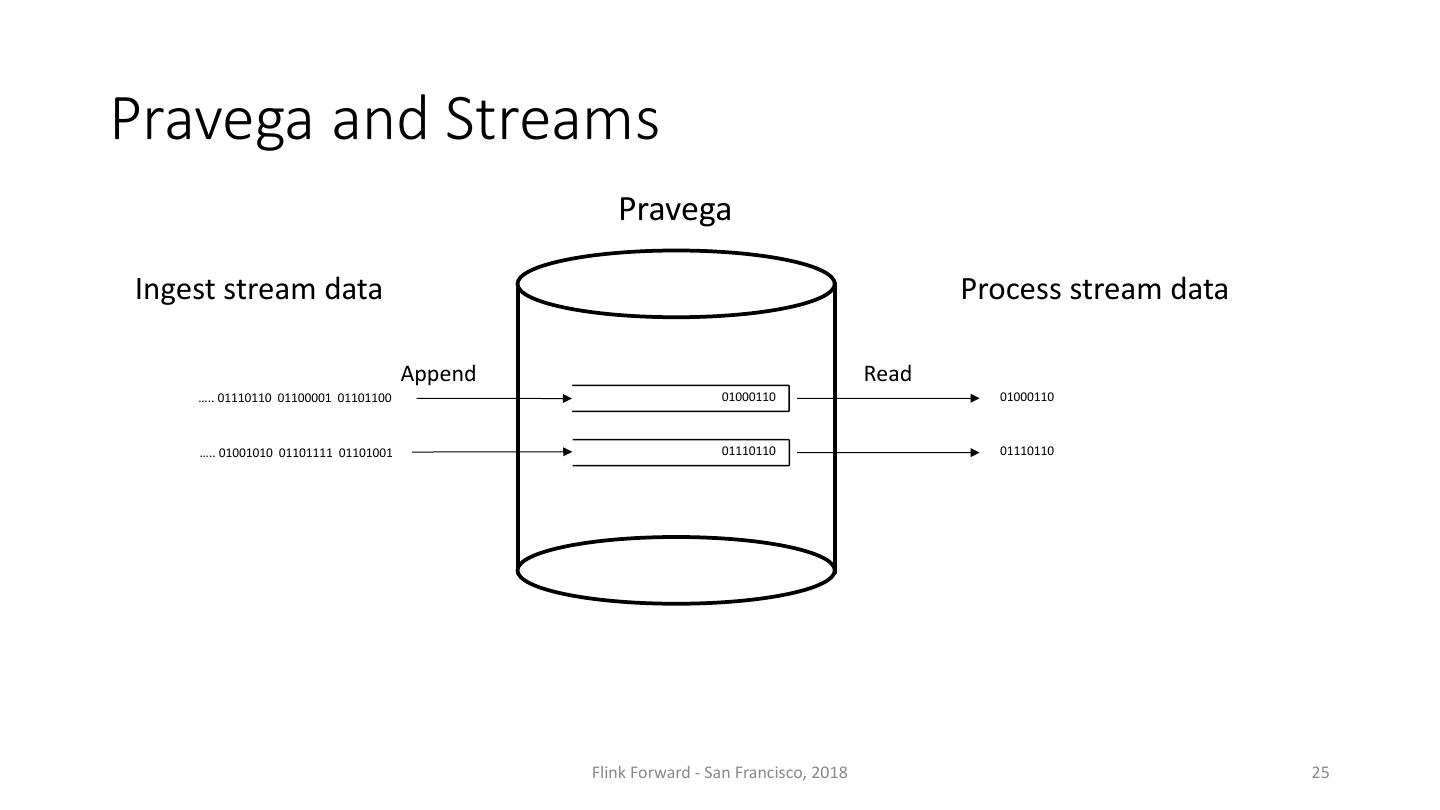

25. Pravega and Streams

- Ingest stream data: Append
- Process stream data: Read
- [Diagram: data is appended to Pravega on one side and read on the other; stream contents are shown as bytes.]

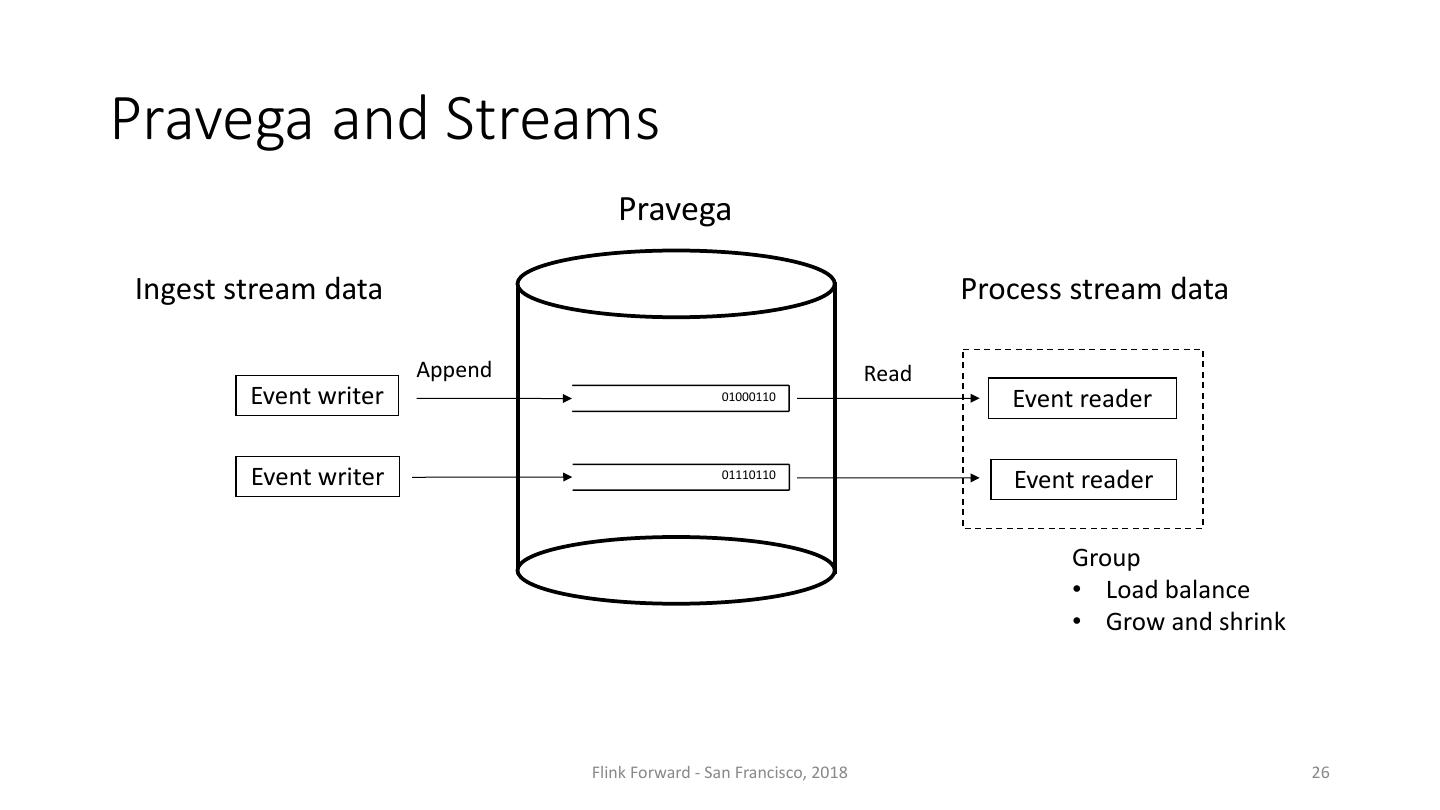

26. Pravega and Streams

- Ingest stream data: event writers append to Pravega
- Process stream data: event readers read from Pravega
- Event readers form a group
  - Load balance
  - Grow and shrink

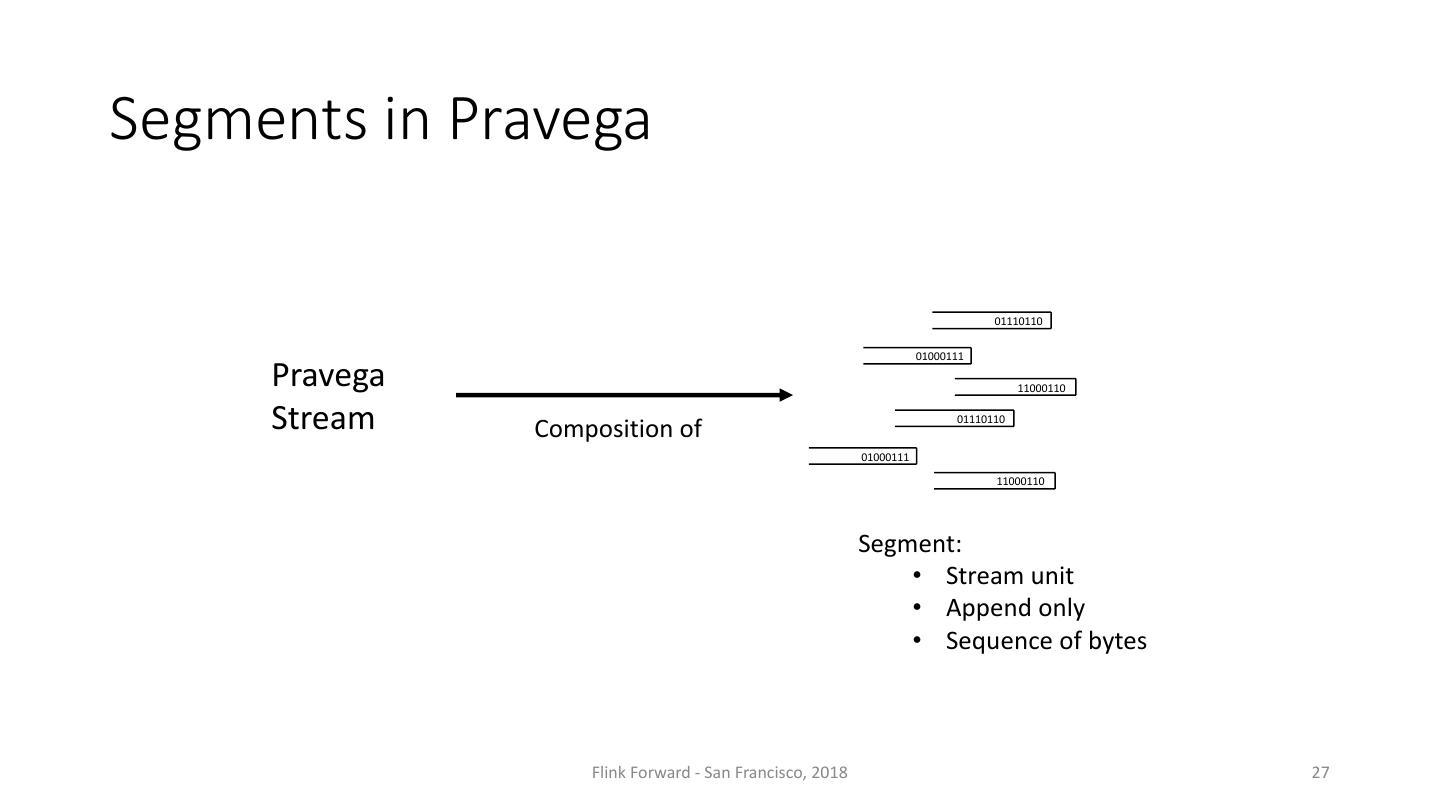

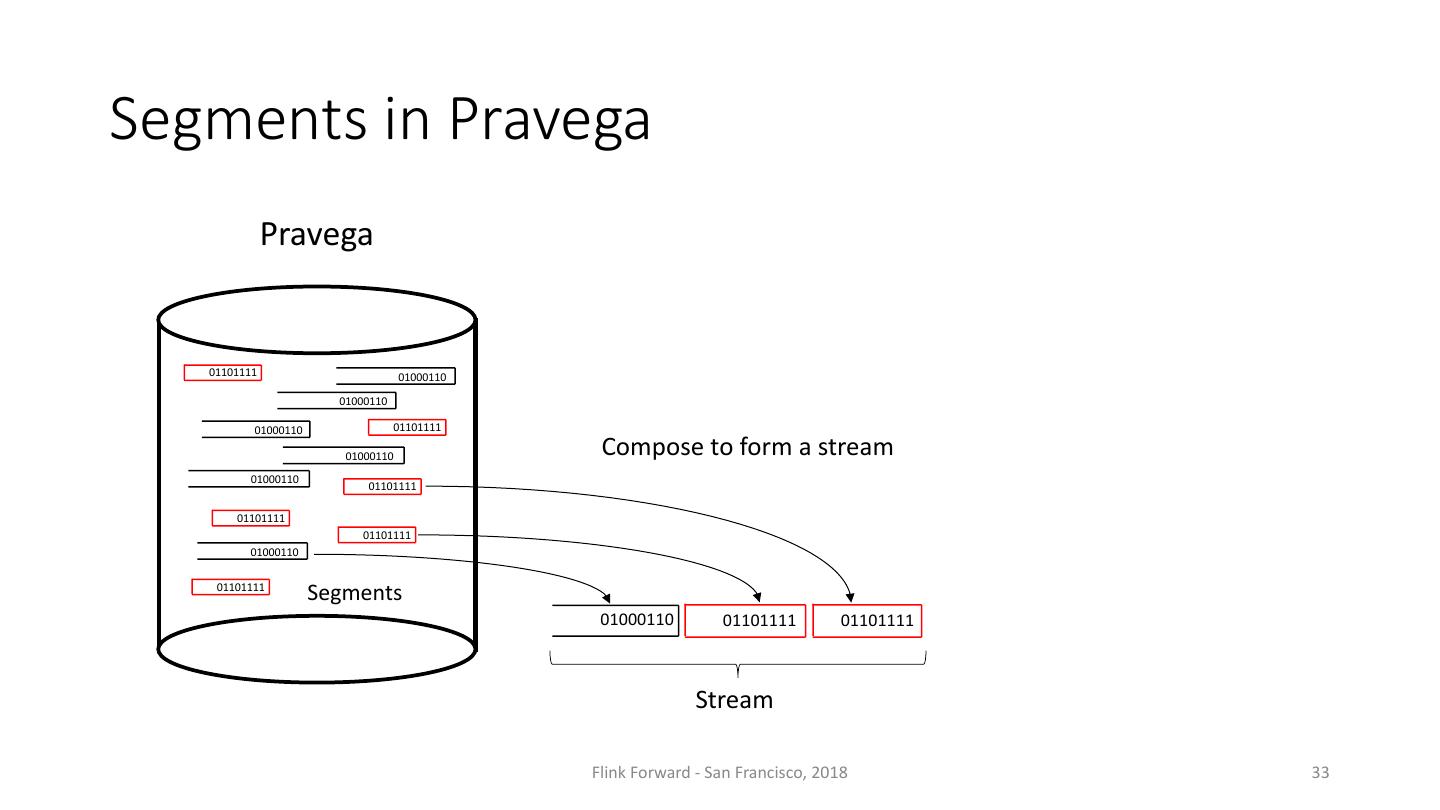

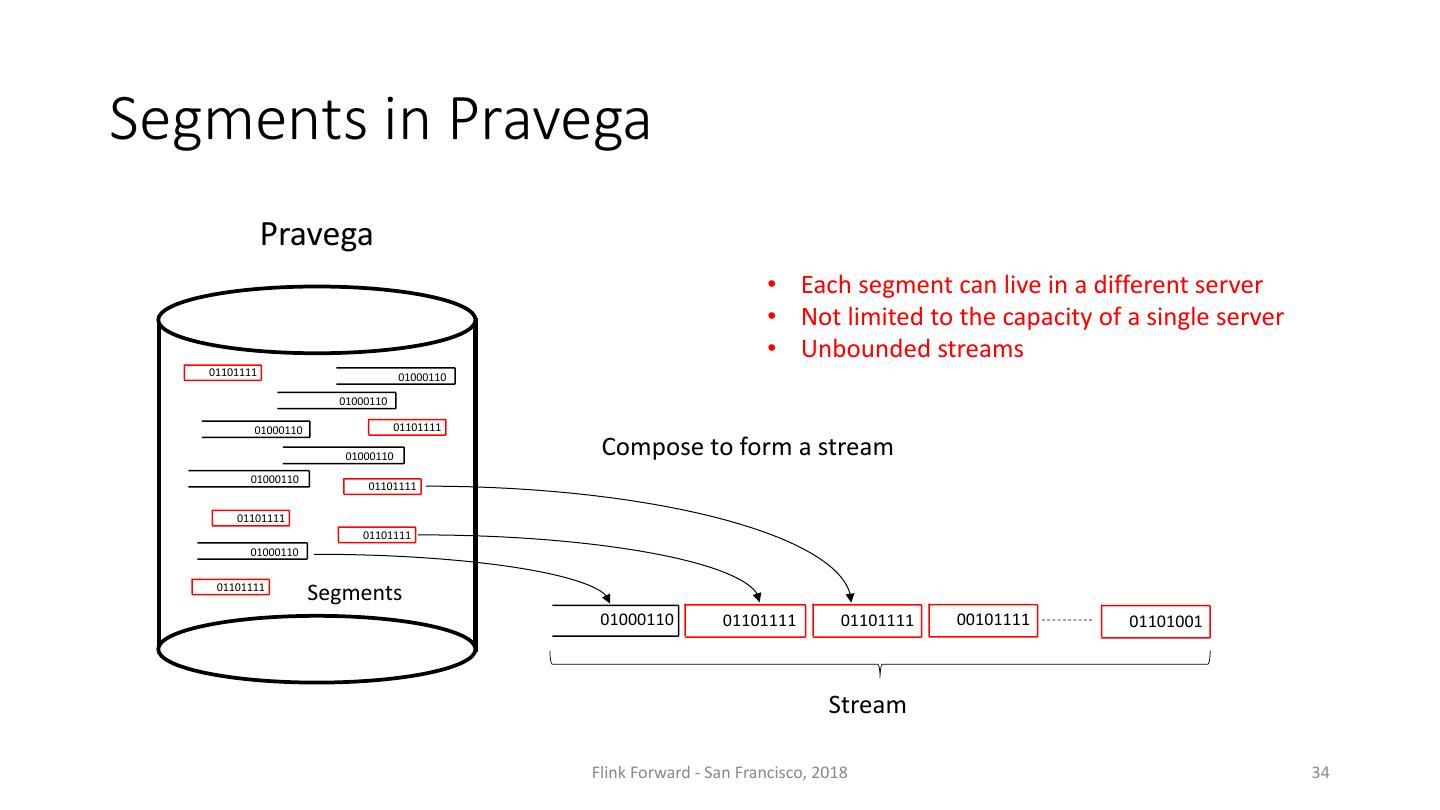

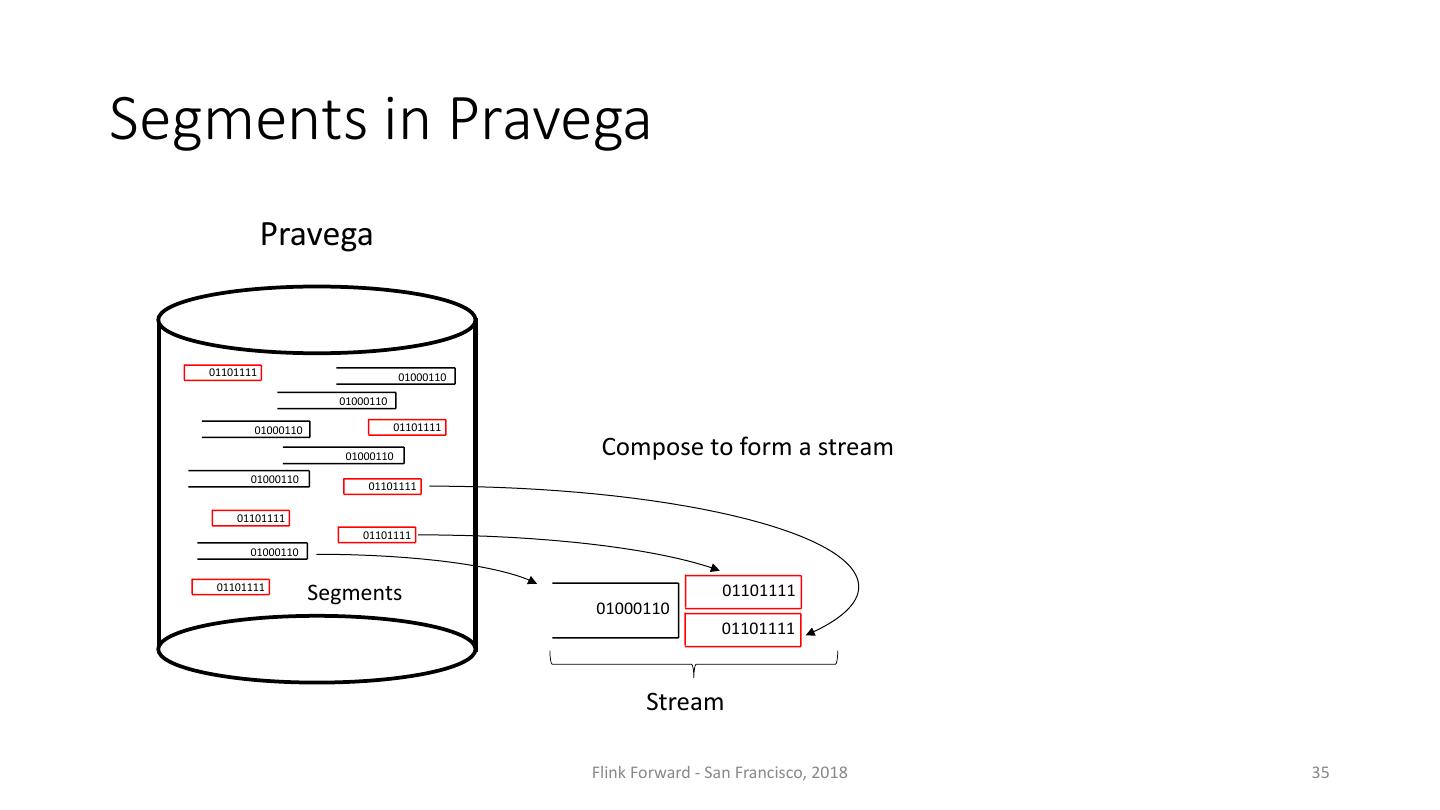

27. Segments in Pravega

- Stream: composition of segments
- Segment:
  - Stream unit
  - Append only
  - Sequence of bytes
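To make the segment properties concrete, here is a minimal in-memory sketch of an append-only sequence of bytes, with a stream modeled as a list of such segments. The `Segment` class and its methods are hypothetical and only illustrate the idea; they are not the Pravega API.

```python
class Segment:
    """Illustrative append-only sequence of bytes (not the Pravega API)."""

    def __init__(self):
        self._data = bytearray()

    def append(self, payload: bytes) -> int:
        """Append bytes at the tail and return the offset where they start."""
        offset = len(self._data)
        self._data += payload
        return offset

    def read(self, offset: int, length: int) -> bytes:
        """Read `length` bytes starting at `offset`; existing bytes never change."""
        return bytes(self._data[offset:offset + length])

    def __len__(self):
        return len(self._data)

# A stream modeled as a composition of segments.
stream = [Segment(), Segment(), Segment()]
first = stream[0].append(b"\x76\x47")
second = stream[0].append(b"\xc6")
print(first, second, len(stream[0]))  # 0 2 3
```

There is deliberately no method for overwriting or deleting bytes in the middle: the only write operation is an append at the tail.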

28. Parallelism

29. Segments in Pravega

- Segments are sequences of bytes
- Use routing keys to determine segment
- [Diagram: an appended event 〈key, 01101001〉 is routed by its routing key to one of several segments; reads are served from the segments.]
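One way to picture routing keys is to hash each key to a position in a fixed interval and let every segment own a range of that interval. The sketch below assumes exactly that scheme; `key_position`, `segment_for`, and the `boundaries` parameter are hypothetical names chosen for illustration, and the hash function is an arbitrary choice rather than the one Pravega uses.

```python
import bisect
import hashlib

def key_position(routing_key: str) -> float:
    """Map a routing key to a stable position in [0, 1)."""
    digest = hashlib.sha256(routing_key.encode("utf-8")).digest()
    # 48 bits fit exactly in a float, so the result is always below 1.0.
    return int.from_bytes(digest[:6], "big") / 2**48

def segment_for(routing_key: str, boundaries) -> int:
    """Index of the segment whose range contains the key's position.

    `boundaries` holds the ascending upper bounds of the segment ranges
    and must end at 1.0, e.g. [0.5, 1.0] for two equal segments.
    """
    return bisect.bisect_right(boundaries, key_position(routing_key))

# Two segments, each modeled as an append-only bytearray.
boundaries = [0.5, 1.0]
segments = [bytearray(), bytearray()]

for key, payload in [("sensor-1", b"a"), ("sensor-2", b"b"), ("sensor-1", b"c")]:
    segments[segment_for(key, boundaries)] += payload

# Events sharing a routing key always land in the same segment, in append order.
print(segment_for("sensor-1", boundaries) == segment_for("sensor-1", boundaries))
```

Because the mapping depends only on the key and the current ranges, events with the same routing key stay in one segment and keep their relative order, while different keys can spread across segments and be read in parallel.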