How to build a modern stream processor

1. How to build a modern stream processor: The science behind Apache Flink

Stefan Richter (@StefanRRichter), April 10, 2018

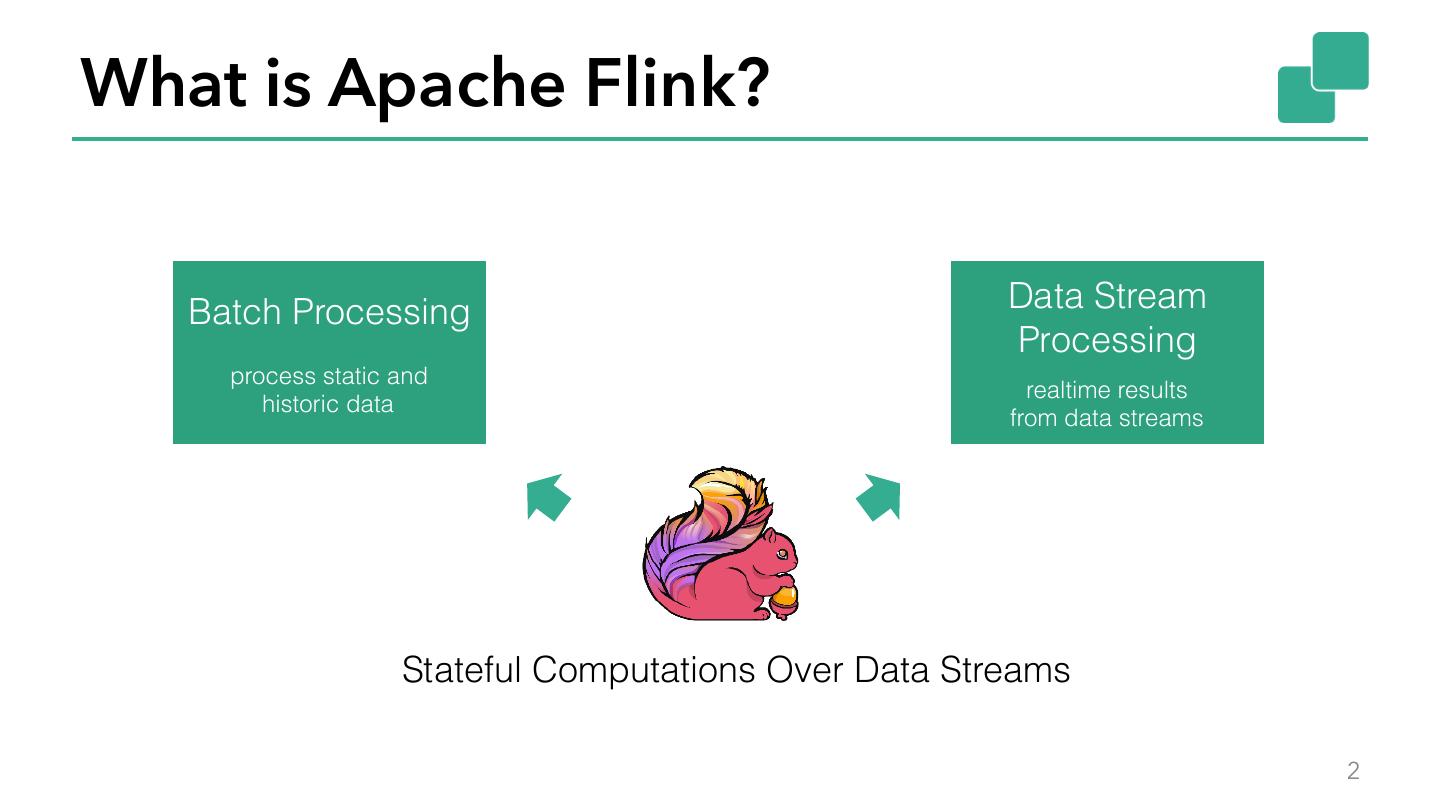

2. What is Apache Flink?

- Batch Processing: process static and historic data
- Data Stream Processing: realtime results from data streams

Stateful Computations Over Data Streams

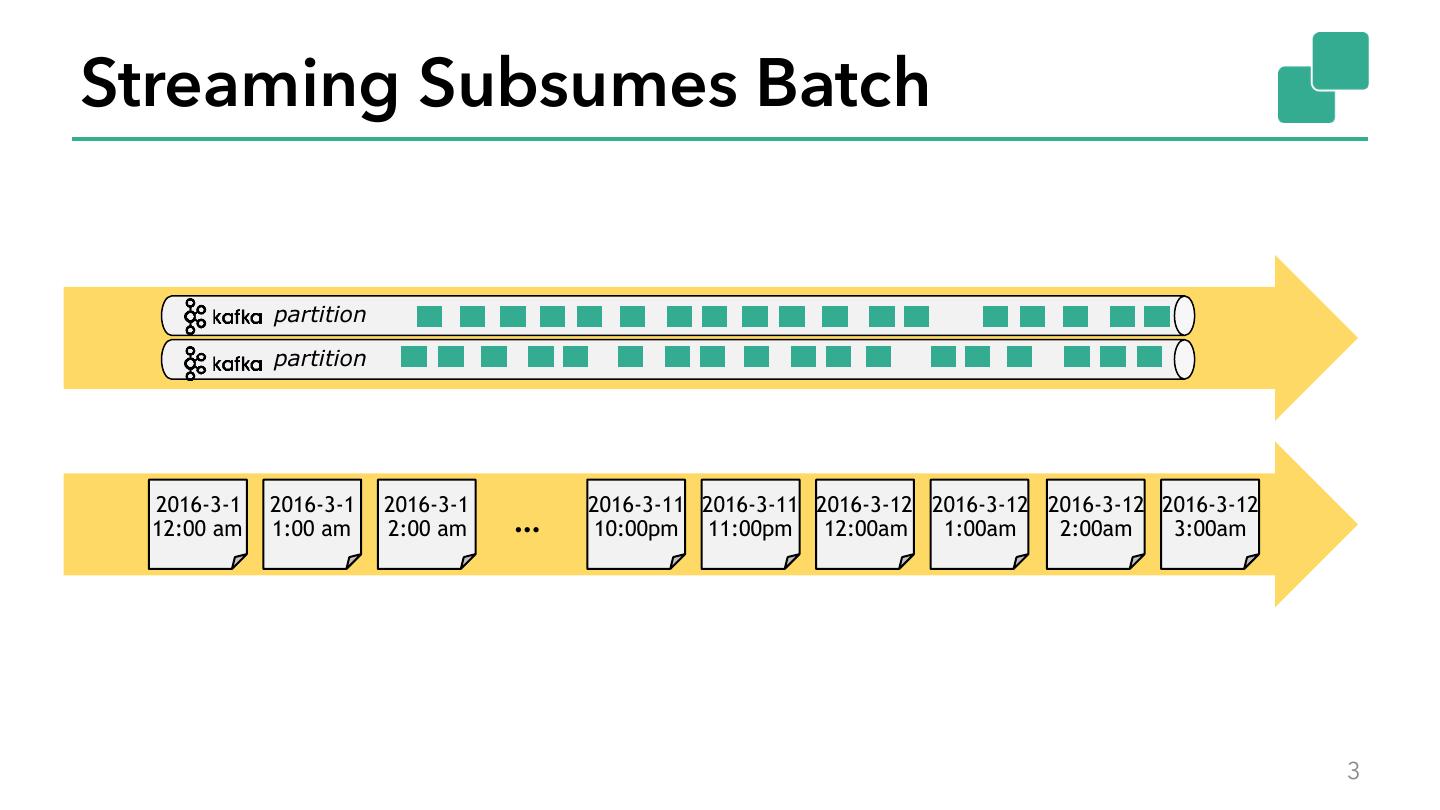

3. Streaming Subsumes Batch

Figure: a timeline split into hourly partitions, from 2016-3-1 12:00 am … through 2016-3-12 3:00 am.

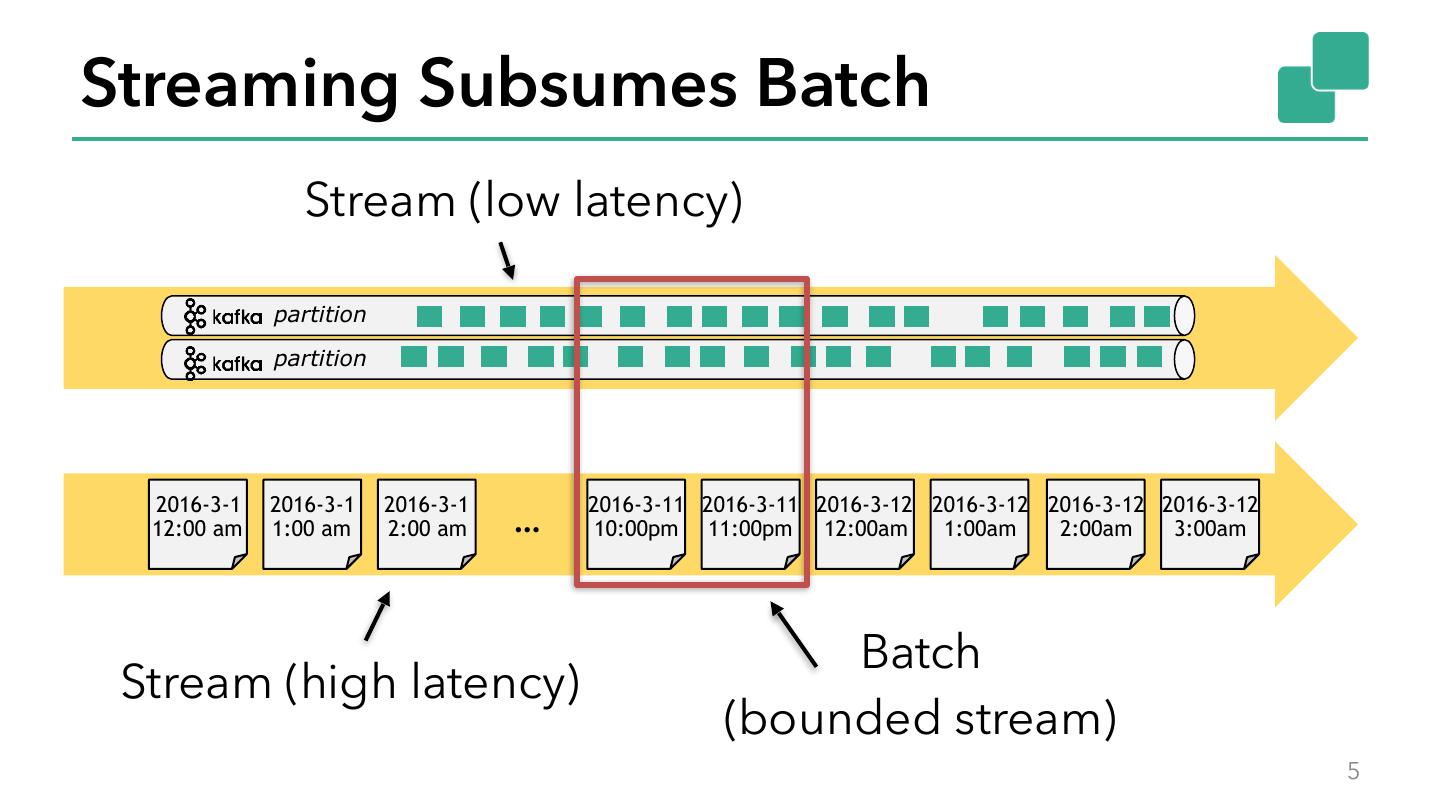

4. Streaming Subsumes Batch

Figure: the timeline of hourly partitions (2016-3-1 12:00 am … 2016-3-12 3:00 am), annotated with "Stream (low latency)" and "Stream (high latency)".

5. Streaming Subsumes Batch

Figure: the timeline of hourly partitions (2016-3-1 12:00 am … 2016-3-12 3:00 am), annotated with "Stream (low latency)", "Stream (high latency)", and "Batch (bounded stream)".
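The idea that batch is a bounded stream can be illustrated with a minimal sketch. It is not Flink code: `running_counts` and `batch_counts` are hypothetical names, and the assumption is simply that a batch job is the same streaming operator run over an input that ends.

```python
from typing import Dict, Iterable, Iterator, Tuple


def running_counts(events: Iterable[str]) -> Iterator[Tuple[str, int]]:
    """Streaming view: emit an updated count after every incoming event."""
    counts: Dict[str, int] = {}
    for key in events:
        counts[key] = counts.get(key, 0) + 1
        yield key, counts[key]


def batch_counts(events: Iterable[str]) -> Dict[str, int]:
    """Batch view: run the same operator over a bounded stream and
    keep only the last result emitted for each key."""
    final: Dict[str, int] = {}
    for key, count in running_counts(events):
        final[key] = count
    return final
```

On an unbounded input `running_counts` keeps producing low-latency updates, while `batch_counts` only returns once the bounded input is exhausted.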

6. Flink Component Stack

Figure: the Flink component stack. Recoverable labels: Standalone, YARN, Mesos, Kubernetes.

7. Flink Component Stack

Figure: the Flink component stack with a "This talk" marker. Recoverable labels: Standalone, YARN, Mesos, Kubernetes.

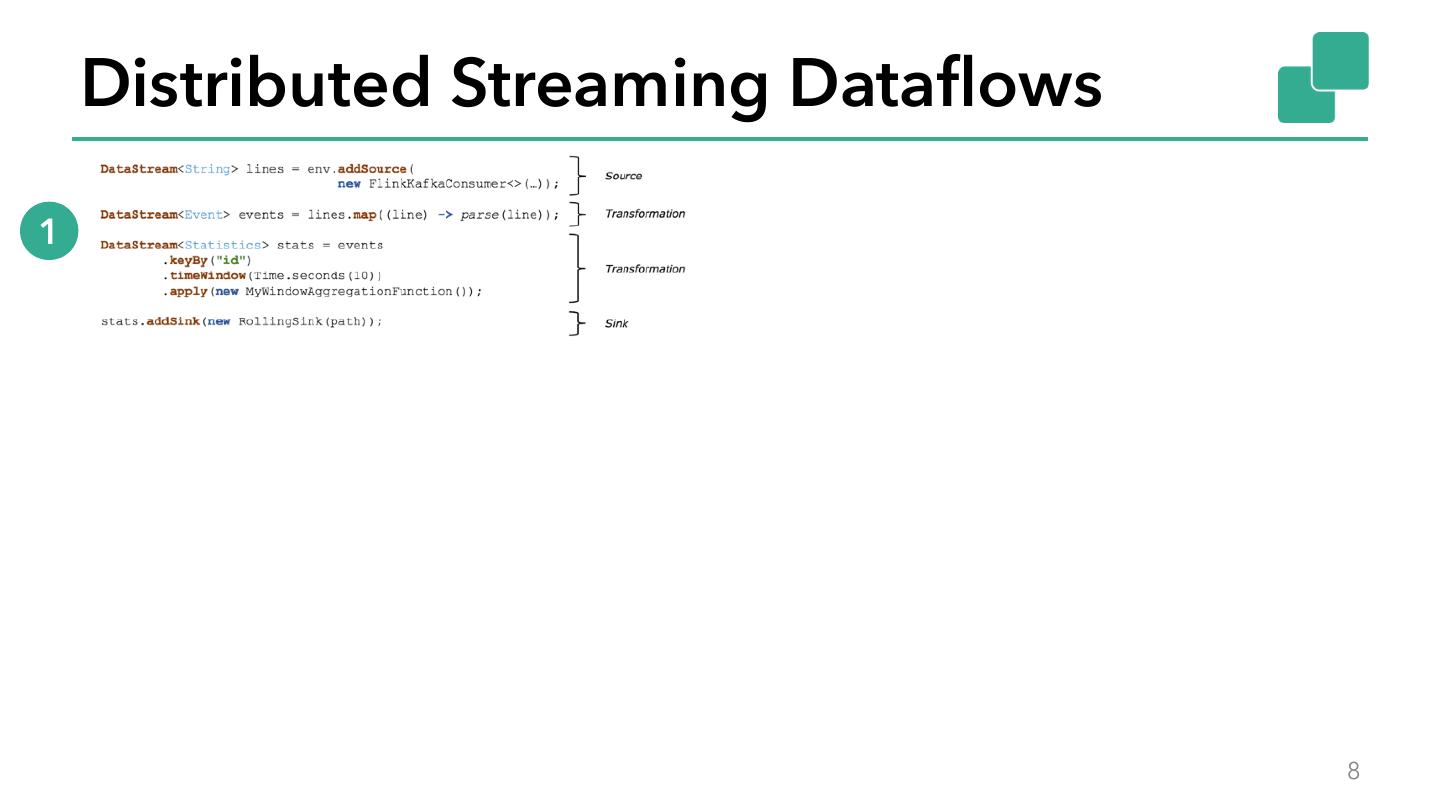

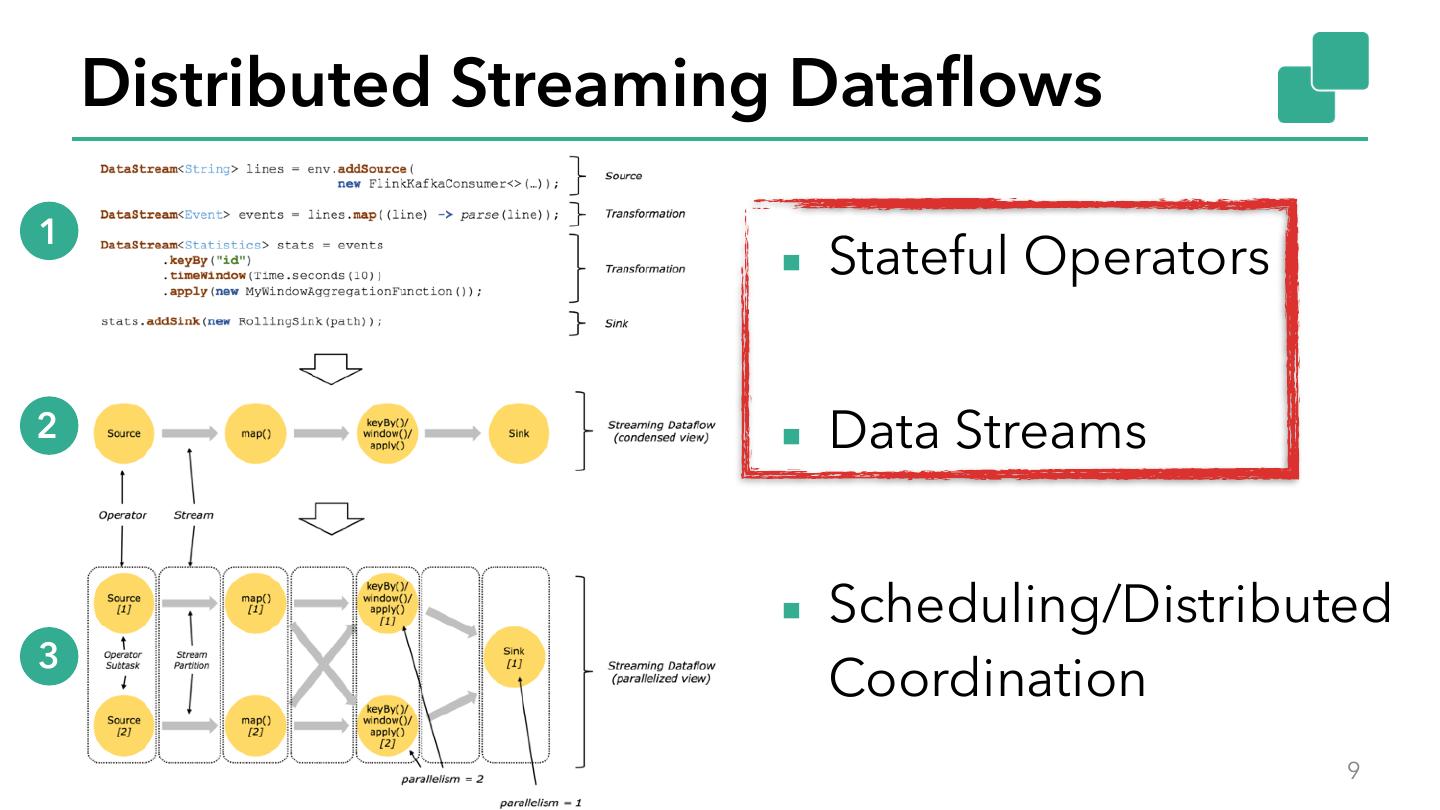

8. Distributed Streaming Dataflows

1. Stateful Operators
2. Data Streams
3. Scheduling/Distributed Coordination

9. Distributed Streaming Dataflows

1. Stateful Operators
2. Data Streams
3. Scheduling/Distributed Coordination

10. Stateful Operators

11. Internal vs External State

Figure: with internal state, the Application holds its State and writes a Periodic Snapshot to Stable Storage; with external state, the Application talks to a separate State store.

Internal State

- State in the stream processor
- Faster than external state
- Working area local to computation
- Checkpoints to stable store (DFS)
- Always exactly-once consistent
- Stream processor has to handle scaling

External State

- State in a separate data store
- "State capacity" is independent of the stream processor
- Usually much slower than internal state
- Fault tolerance and scalability "for free"
- Hard to get "exactly-once" guarantees

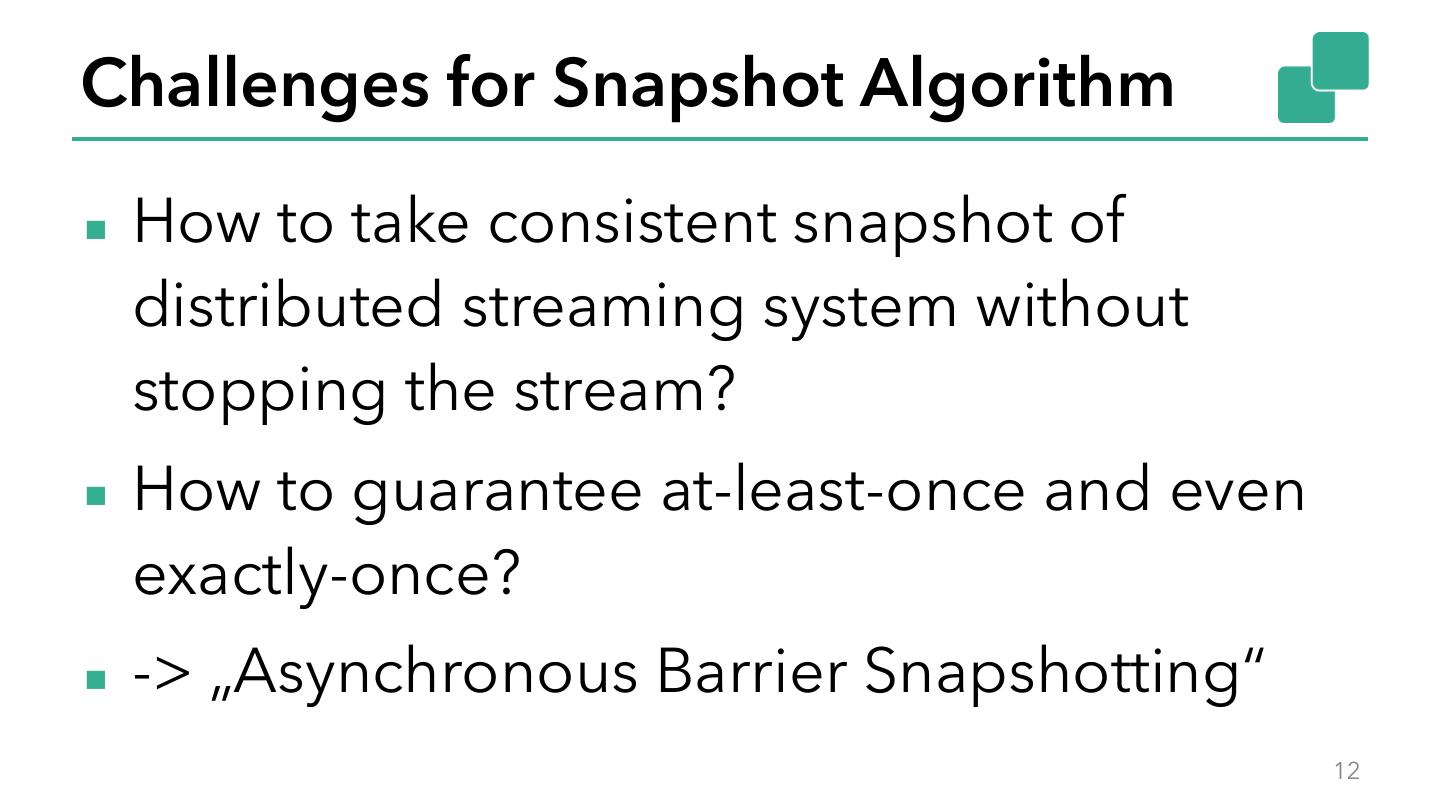

12. Challenges for Snapshot Algorithm

- How to take a consistent snapshot of a distributed streaming system without stopping the stream?
- How to guarantee at-least-once and even exactly-once?
- -> "Asynchronous Barrier Snapshotting"

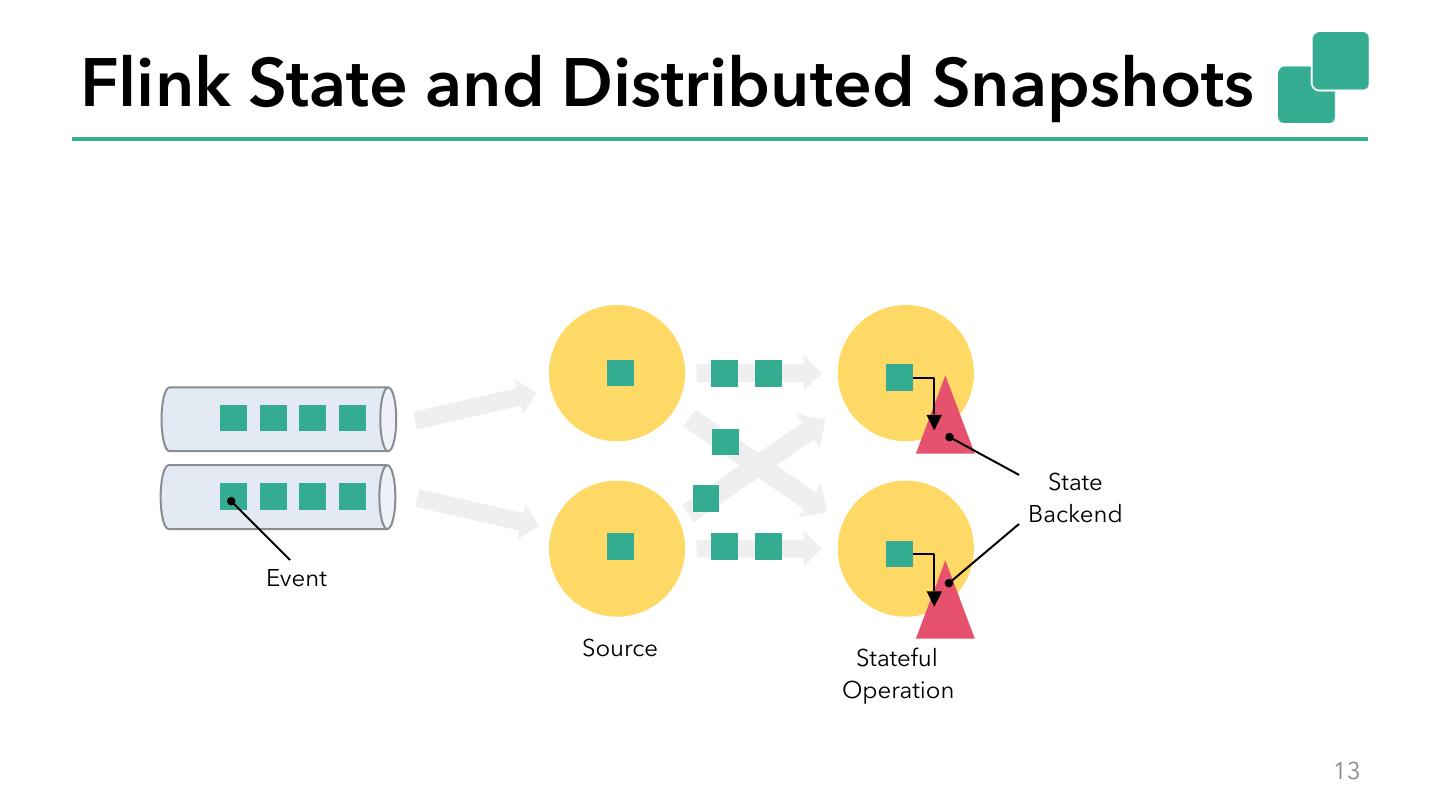

13. Flink State and Distributed Snapshots

Figure labels: Source, Event, Stateful Operation, State Backend.

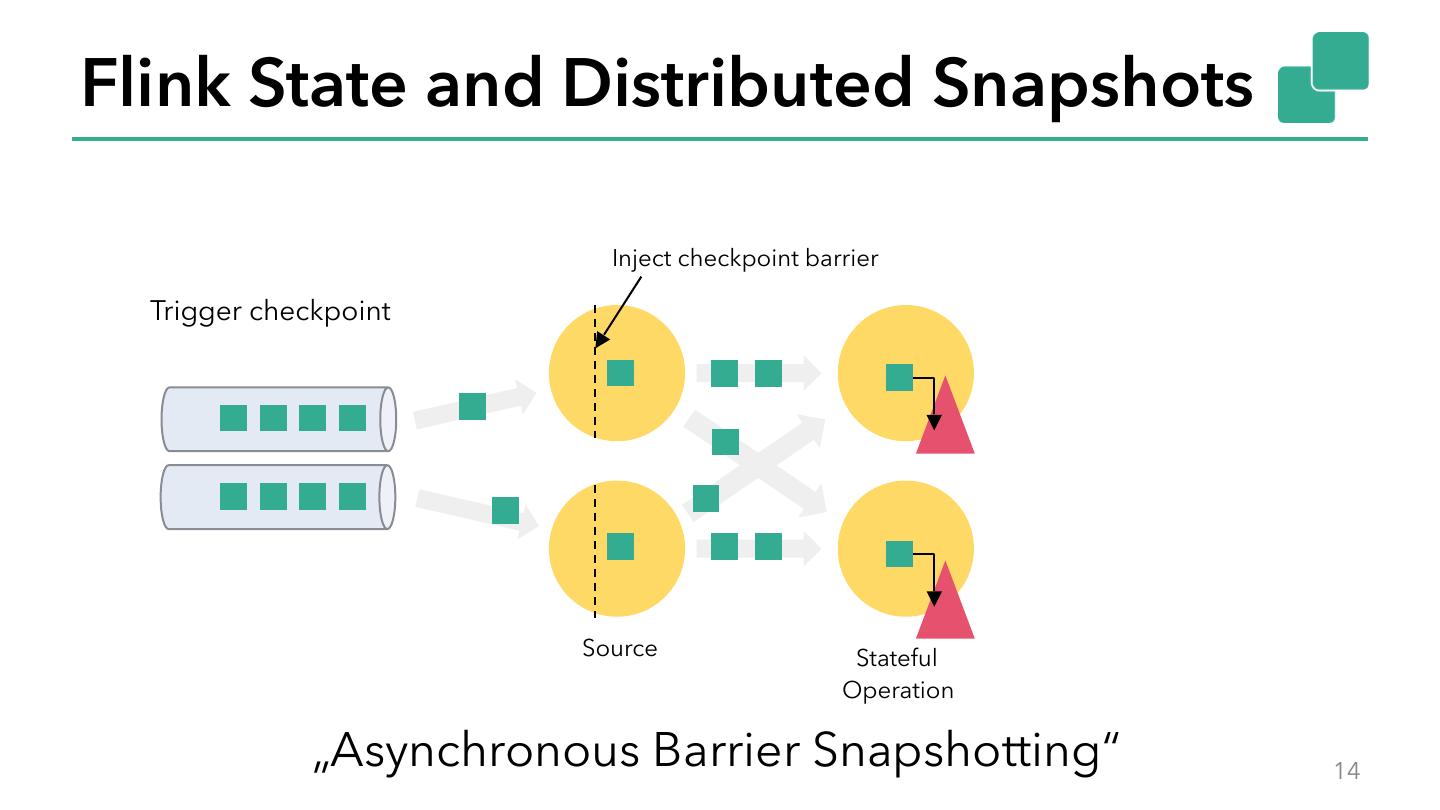

14. Flink State and Distributed Snapshots

"Asynchronous Barrier Snapshotting": trigger a checkpoint and inject a checkpoint barrier into the stream that runs from the Source to the Stateful Operation.

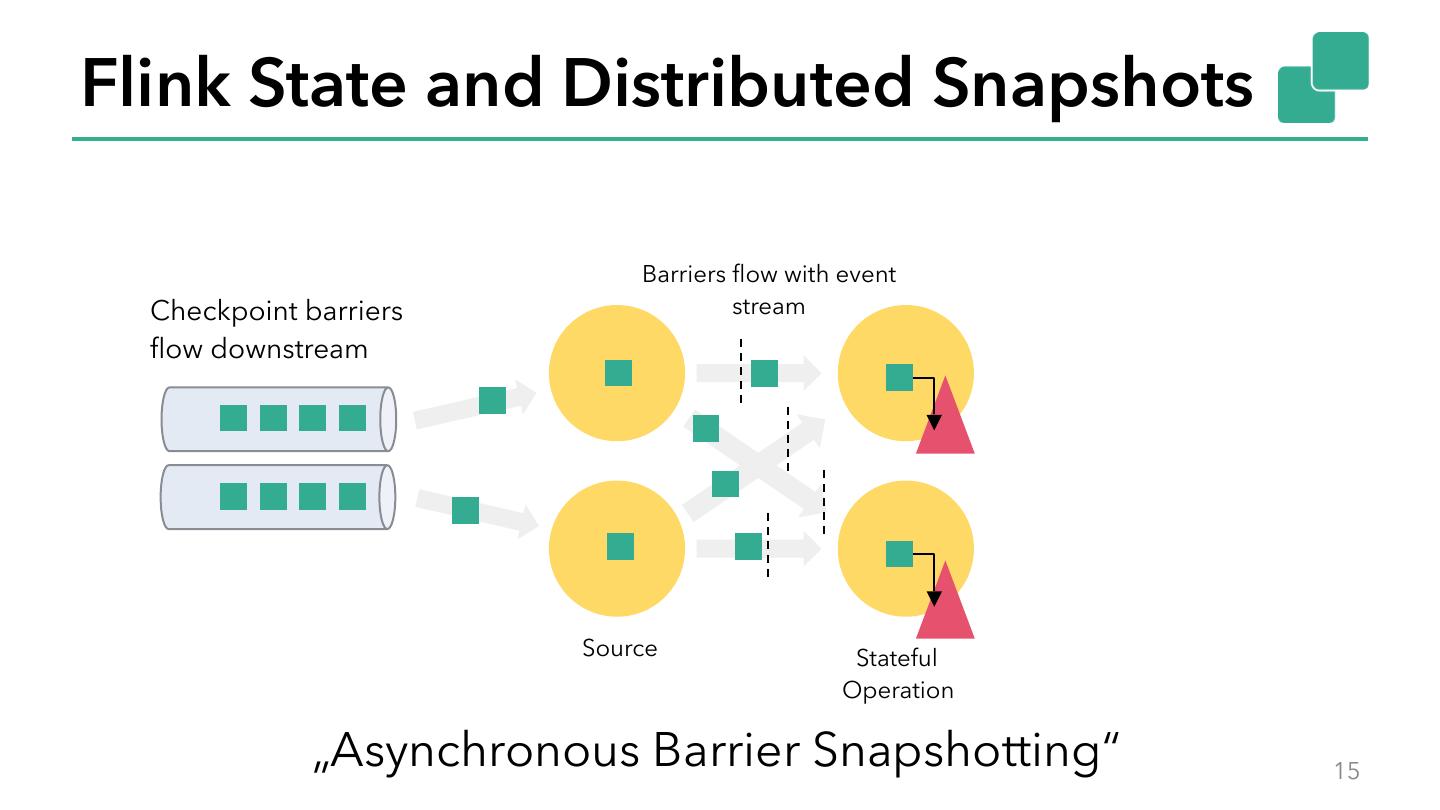

15. Flink State and Distributed Snapshots

"Asynchronous Barrier Snapshotting": checkpoint barriers flow downstream. Barriers flow with the event stream from the Source to the Stateful Operation.

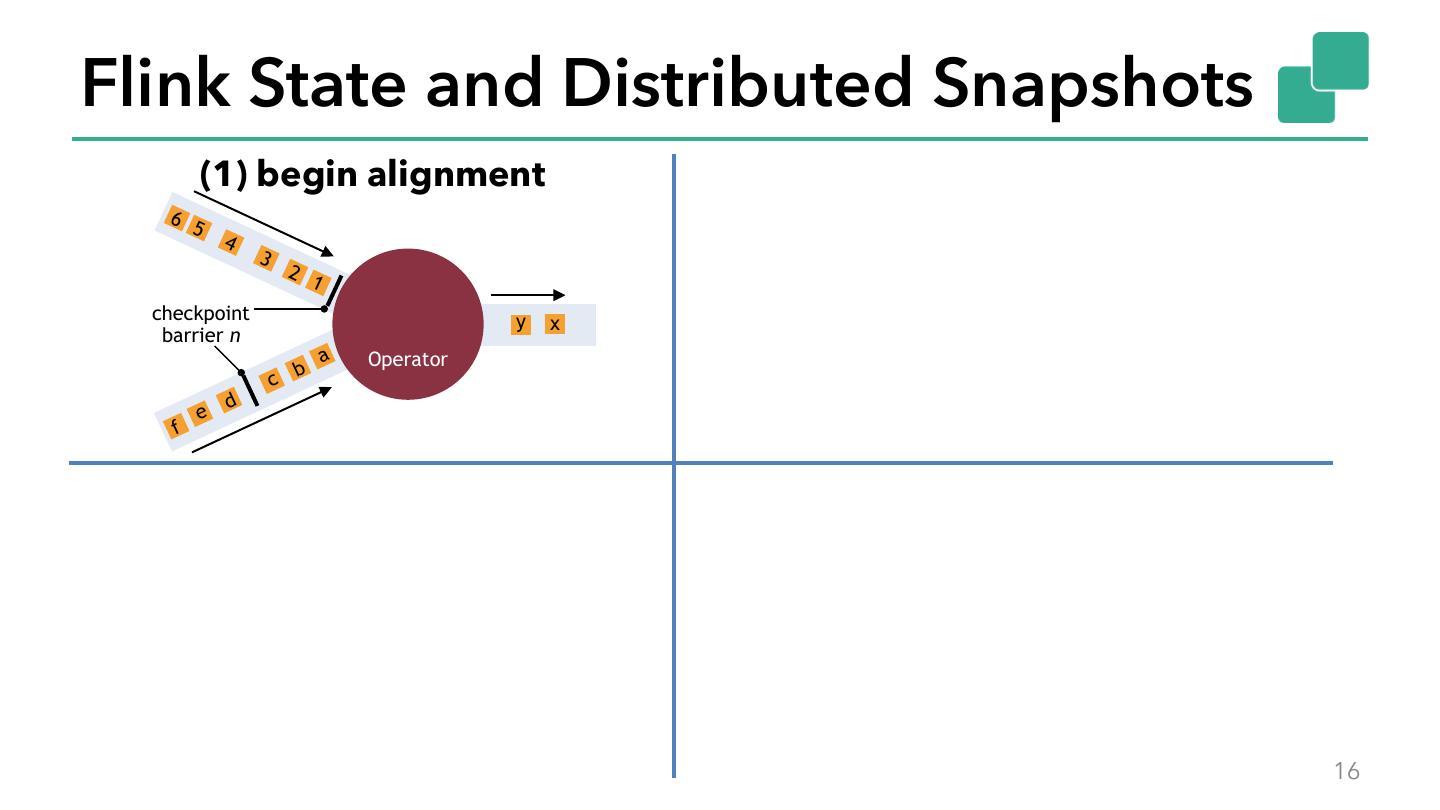

16. Flink State and Distributed Snapshots

Figure: barrier alignment at an operator with several input channels, in four panels:

1. Begin alignment: checkpoint barrier n arrives on an input channel.
2. Alignment: hold that channel (-> exactly once).
3. Take state snapshot and emit barrier n.
4. Continue.
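The alignment steps on this slide can be sketched as a small single-threaded simulation. This is an illustration only, not Flink's implementation: `Barrier`, `SummingOperator`, and the running-sum state are hypothetical, and the sketch assumes a single checkpoint in flight at a time.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Barrier:
    checkpoint_id: int


class SummingOperator:
    """Hypothetical multi-input operator whose state is a running sum."""

    def __init__(self, channels):
        self.channels = [deque(c) for c in channels]
        self.blocked = set()   # indices of channels held during alignment
        self.state = 0
        self.snapshots = {}    # checkpoint_id -> state at the barrier
        self.output = []

    def step(self) -> bool:
        """Read at most one element per unblocked channel.
        Returns False once no channel can make progress."""
        progressed = False
        for idx, channel in enumerate(self.channels):
            if idx in self.blocked or not channel:
                continue
            element = channel.popleft()
            progressed = True
            if isinstance(element, Barrier):
                self.blocked.add(idx)  # hold this channel
                if len(self.blocked) == len(self.channels):
                    # Barrier seen on every input: snapshot, forward, resume.
                    self.snapshots[element.checkpoint_id] = self.state
                    self.output.append(element)
                    self.blocked.clear()
            else:
                self.state += element
                self.output.append(self.state)
        return progressed

    def run(self):
        while self.step():
            pass


op = SummingOperator([[1, 2, Barrier(1), 10], [Barrier(1), 20]])
op.run()
```

Record `20` arrives behind the barrier on the second channel, so it is held until the first channel's barrier arrives and is excluded from snapshot 1, which contains only `1 + 2`.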

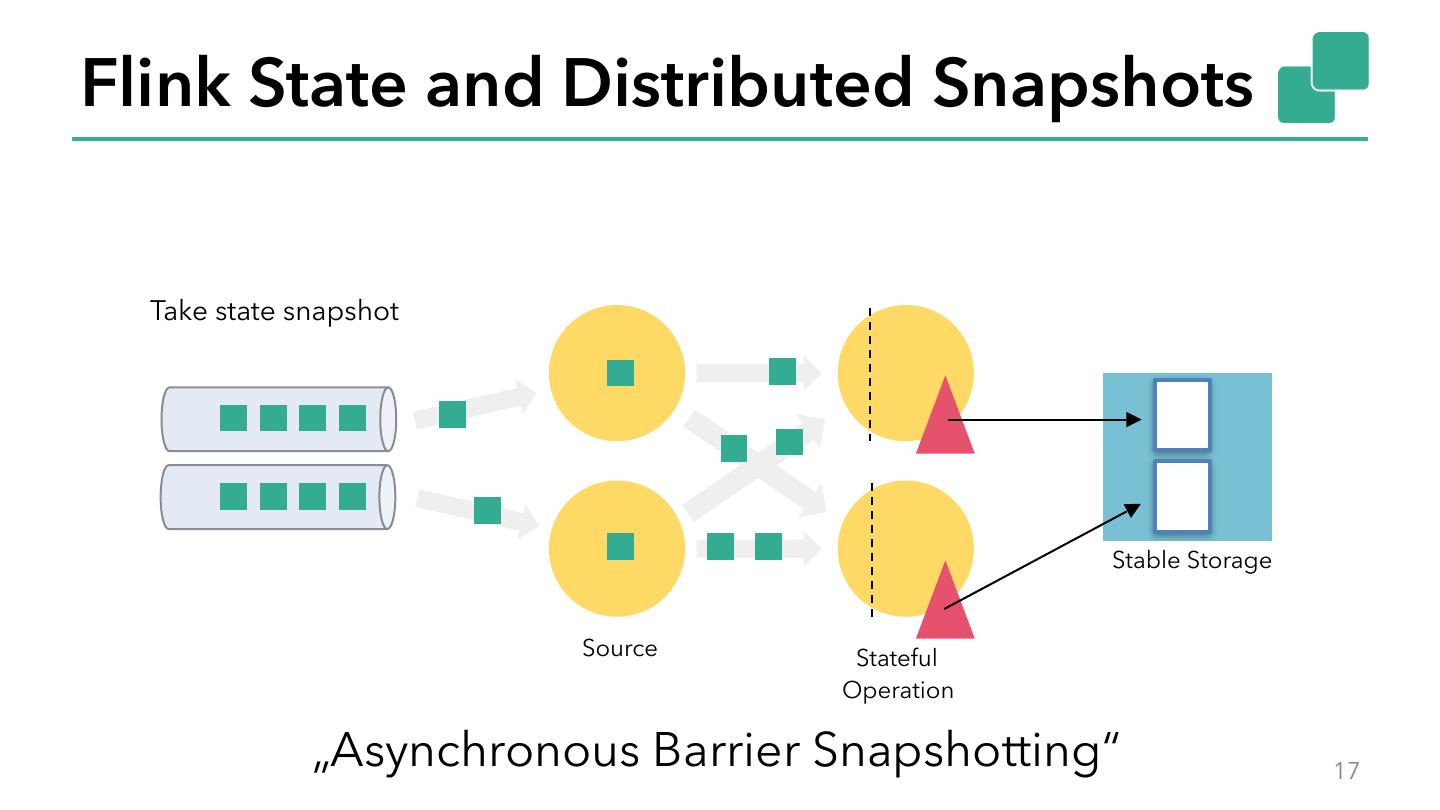

17. Flink State and Distributed Snapshots

"Asynchronous Barrier Snapshotting": the Stateful Operation takes a state snapshot, which goes to Stable Storage.

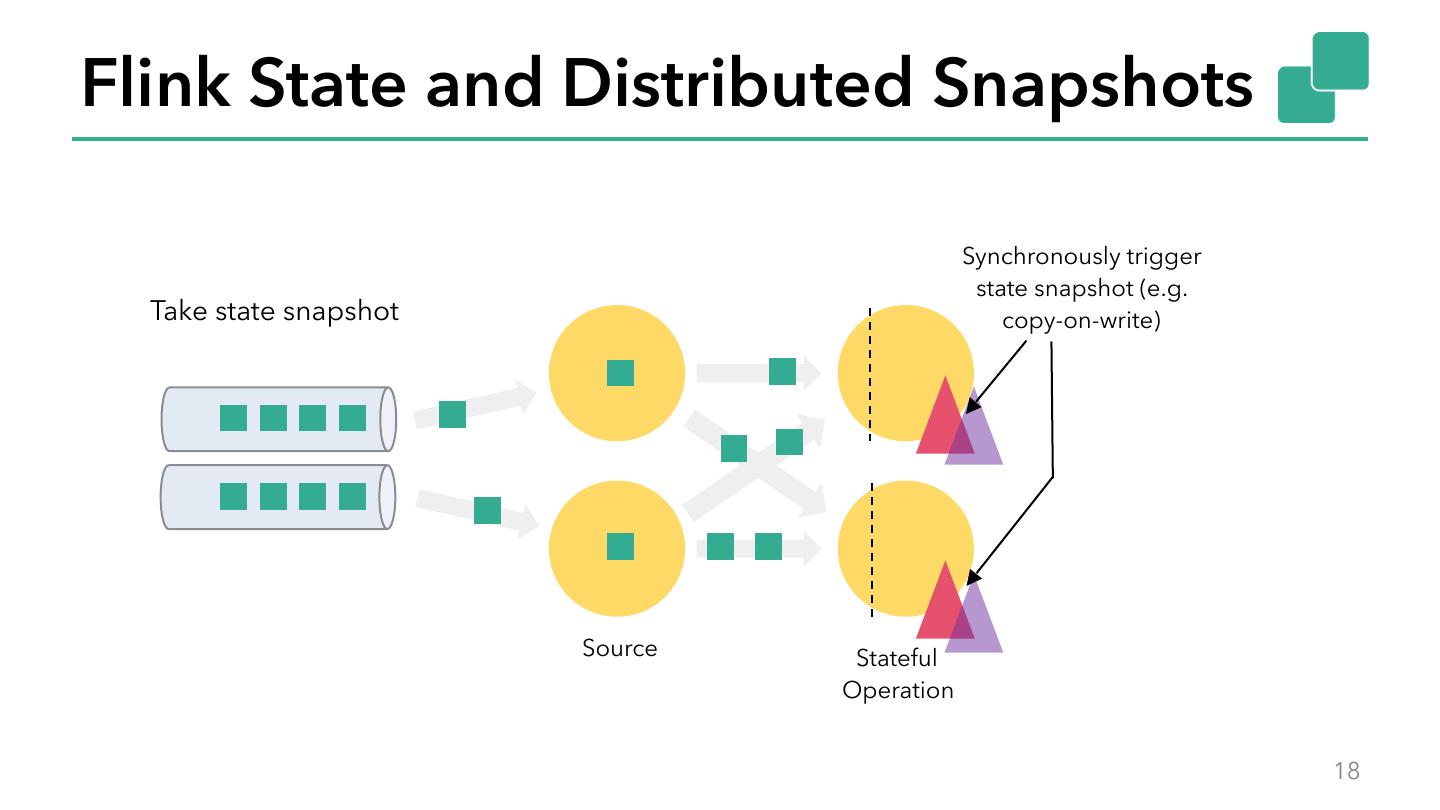

18. Flink State and Distributed Snapshots

Take state snapshot: synchronously trigger the state snapshot (e.g. copy-on-write).

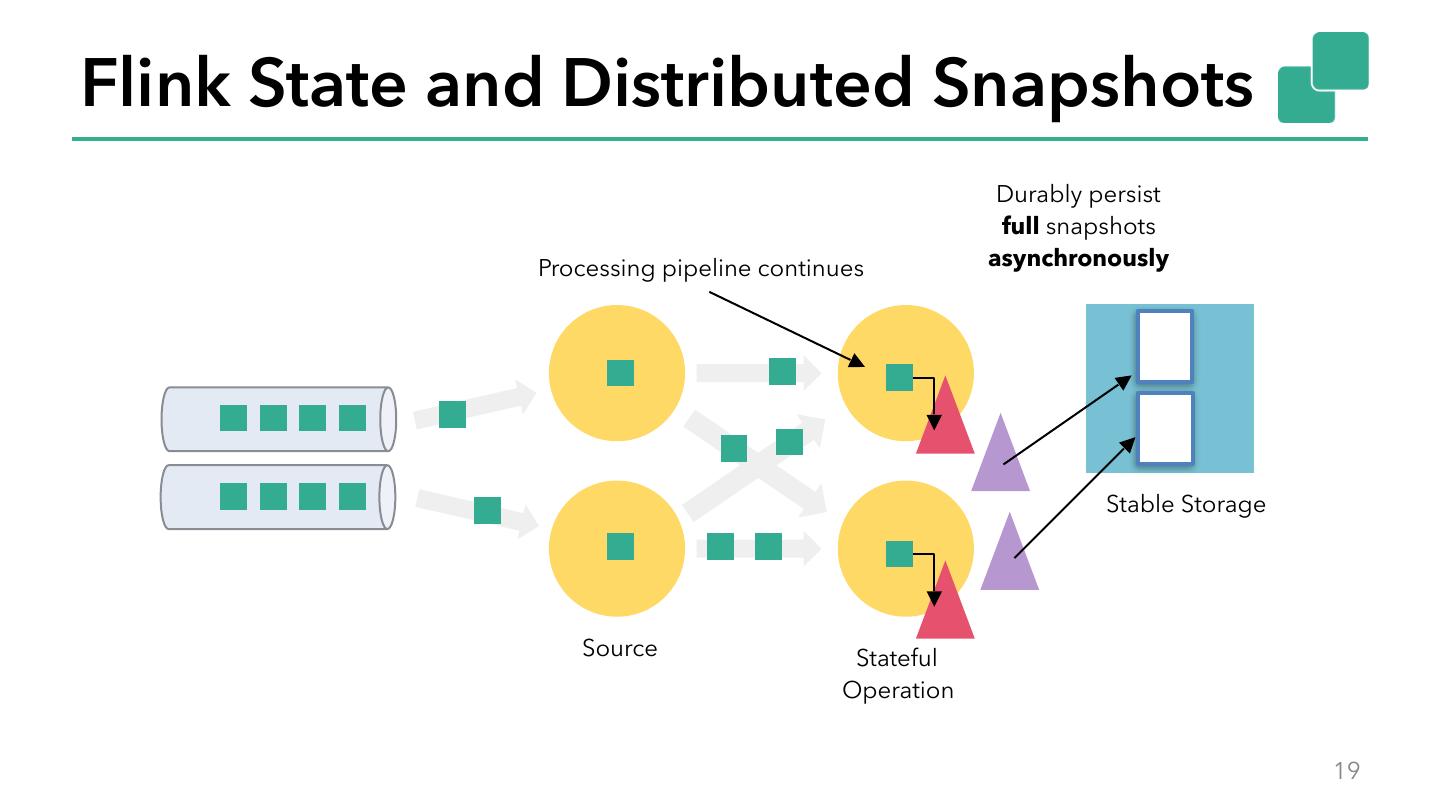

19. Flink State and Distributed Snapshots

Durably persist full snapshots to Stable Storage asynchronously. The processing pipeline continues.

20. Challenges for Data Structures

- Asynchronous snapshots:
  - Minimize pipeline stall time while taking the snapshot.
  - Keep interference (memory, CPU, …) as low as possible while writing the snapshot.
  - Support multiple parallel checkpoints.
- -> MVCC (Multi-Version Concurrency Control)
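One way to meet these requirements is a state table whose snapshots are triggered in constant time and preserve old values lazily, only when a key is later overwritten. The sketch below is a simplified, single-threaded illustration of that copy-on-write idea. It is not Flink's actual hash map, and `CopyOnWriteState`, `Snapshot`, and `_MISSING` are hypothetical names.

```python
_MISSING = object()  # marks "key did not exist when the snapshot was taken"


class Snapshot:
    def __init__(self, state):
        self._state = state
        self._old = {}  # key -> value at snapshot time, filled on first overwrite

    def _preserve(self, key, table):
        if key not in self._old:
            self._old[key] = table.get(key, _MISSING)

    def items(self):
        """Materialize the table as it looked when the snapshot was triggered."""
        view = dict(self._state._table)
        for key, old in self._old.items():
            if old is _MISSING:
                view.pop(key, None)
            else:
                view[key] = old
        return view


class CopyOnWriteState:
    def __init__(self):
        self._table = {}
        self._active = []  # snapshots that are still being written out

    def get(self, key):
        return self._table.get(key)

    def put(self, key, value):
        # Save the old value for every snapshot that has not seen this key change.
        for snap in self._active:
            snap._preserve(key, self._table)
        self._table[key] = value

    def snapshot(self) -> Snapshot:
        """Synchronous part: O(1), no data is copied here."""
        snap = Snapshot(self)
        self._active.append(snap)
        return snap

    def release(self, snap: Snapshot) -> None:
        """Call once the snapshot has been persisted."""
        self._active.remove(snap)


state = CopyOnWriteState()
state.put("a", 1)
snap1 = state.snapshot()
state.put("a", 2)
state.put("b", 3)
snap2 = state.snapshot()
state.put("a", 4)
```

Both snapshots stay readable while processing continues, which corresponds to the "multiple parallel checkpoints" requirement. A real implementation would also need to be safe against a concurrent writer thread, which this sketch ignores.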

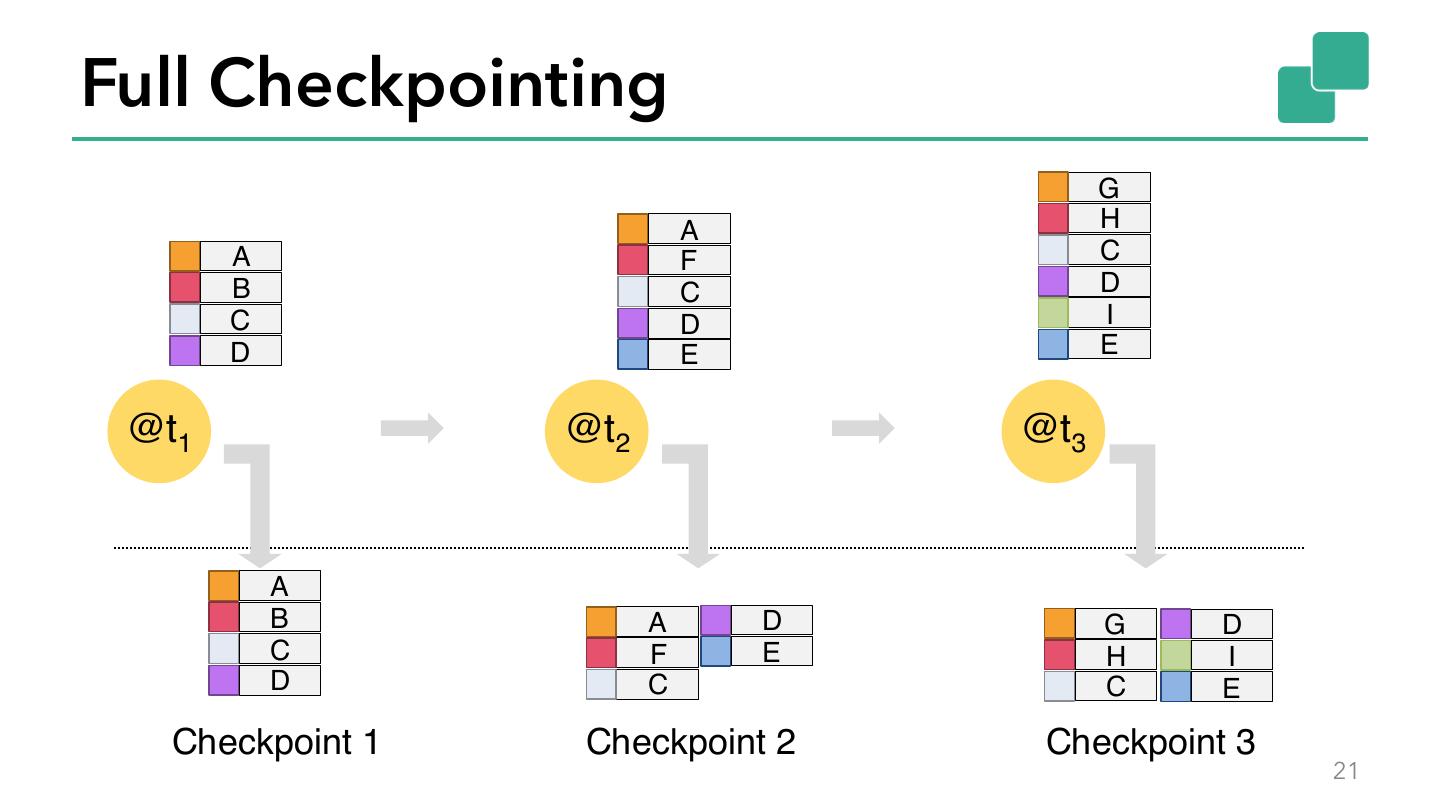

21. Full Checkpointing

Figure: the state entries (labeled A through I) at times @t1, @t2, and @t3. Checkpoint 1, Checkpoint 2, and Checkpoint 3 each contain a full copy of the state at the corresponding time.

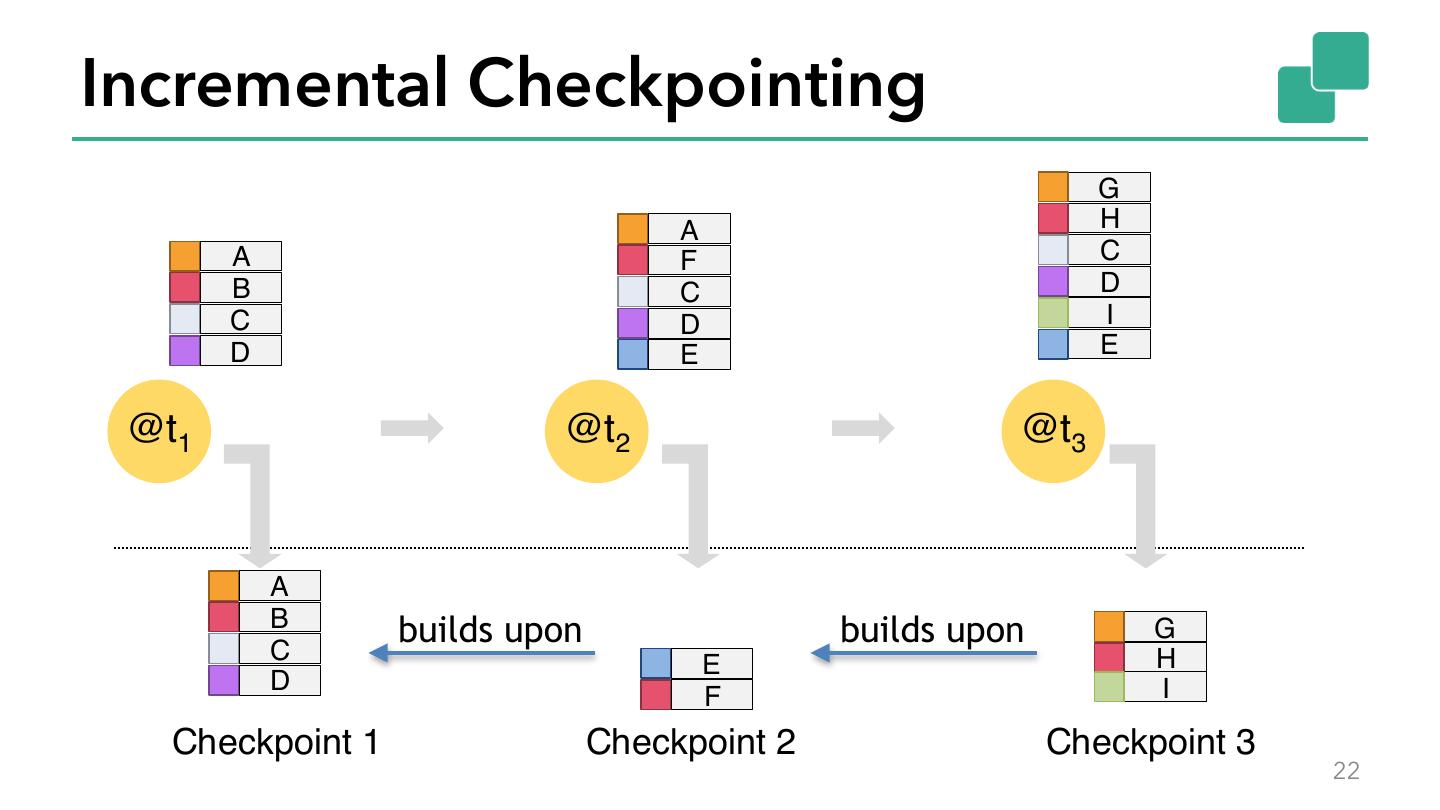

22. Incremental Checkpointing

Figure: the state entries (labeled A through I) at times @t1, @t2, and @t3. Checkpoint 2 builds upon Checkpoint 1, and Checkpoint 3 builds upon Checkpoint 2, so each checkpoint stores only the entries that changed.

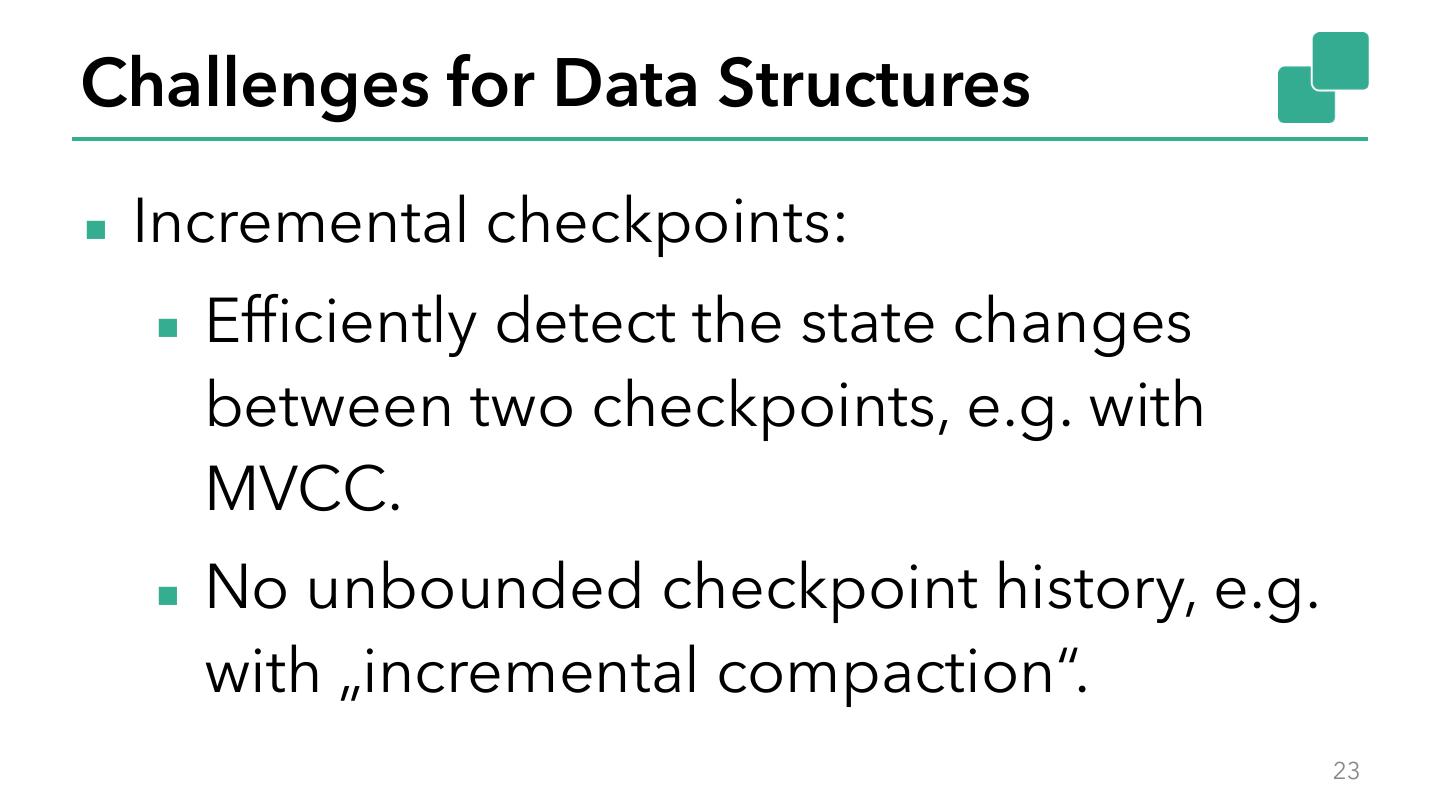

23. Challenges for Data Structures

- Incremental checkpoints:
  - Efficiently detect the state changes between two checkpoints, e.g. with MVCC.
  - No unbounded checkpoint history, e.g. with "incremental compaction".
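Both requirements can be illustrated with a small sketch: change detection is done here with a set of dirty keys (a simple stand-in for the MVCC-based detection the slide mentions), and the history is bounded by merging the chain of deltas. `IncrementalCheckpointer`, `restore`, and `TOMBSTONE` are hypothetical names, not Flink APIs.

```python
TOMBSTONE = object()  # marks a key deleted since the previous checkpoint


def restore(chain):
    """Rebuild the full state by replaying deltas, oldest first."""
    state = {}
    for delta in chain:
        for key, value in delta.items():
            if value is TOMBSTONE:
                state.pop(key, None)
            else:
                state[key] = value
    return state


class IncrementalCheckpointer:
    def __init__(self):
        self.state = {}
        self.chain = []      # one delta per checkpoint, oldest first
        self._dirty = set()  # keys changed since the last checkpoint

    def put(self, key, value):
        self.state[key] = value
        self._dirty.add(key)

    def delete(self, key):
        self.state.pop(key, None)
        self._dirty.add(key)

    def checkpoint(self):
        """Store only what changed since the previous checkpoint."""
        delta = {k: self.state.get(k, TOMBSTONE) for k in self._dirty}
        self.chain.append(delta)
        self._dirty.clear()
        return delta

    def compact(self):
        """Merge all deltas into one so the history does not grow without bound."""
        self.chain = [restore(self.chain)]


cp = IncrementalCheckpointer()
cp.put("A", 1)
cp.put("B", 2)
first = cp.checkpoint()
cp.put("B", 3)
cp.delete("A")
cp.put("C", 4)
second = cp.checkpoint()
```

The second delta holds three entries (the updated `B`, the new `C`, and a tombstone for `A`) rather than a full copy of the state. Restoring requires the whole chain, which is why compaction matters.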

24. Two State Backends

| Based on JVM Heap Objects | Based on RocksDB |
| --- | --- |
| State lives in memory, on Java heap. | State lives in off-heap memory and on disk. |
| MVCC HashMap | LSM Tree |
| Goes through ser/de during snapshot/restore. | Goes through ser/de for each state access. |
| Async snapshots supported. | Async and incremental snapshots. |
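The ser/de difference between the two backends has a visible consequence for application code, sketched below with `pickle` standing in for Flink's serializers. `HeapBackend` and `SerializedBackend` are hypothetical classes. The second one only mimics the access pattern of a RocksDB-style store and does not use RocksDB.

```python
import pickle


class HeapBackend:
    """Keeps live objects; serializes only when a snapshot is taken."""

    def __init__(self):
        self._objects = {}

    def put(self, key, value):
        self._objects[key] = value

    def get(self, key):
        return self._objects[key]  # same object on every call

    def snapshot(self) -> bytes:
        return pickle.dumps(self._objects)


class SerializedBackend:
    """Keeps bytes; every access pays for serialization or deserialization."""

    def __init__(self):
        self._bytes = {}

    def put(self, key, value):
        self._bytes[key] = pickle.dumps(value)

    def get(self, key):
        return pickle.loads(self._bytes[key])  # fresh copy on every call

    def snapshot(self) -> bytes:
        return pickle.dumps(self._bytes)


heap = HeapBackend()
heap.put("k", [1])
heap.get("k").append(2)        # mutates the stored object in place

serialized = SerializedBackend()
serialized.put("k", [1])
serialized.get("k").append(2)  # mutates a temporary copy only
```

With the serialized backend, a change has to be written back with `put` to take effect, which is the price of keeping state outside the heap.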

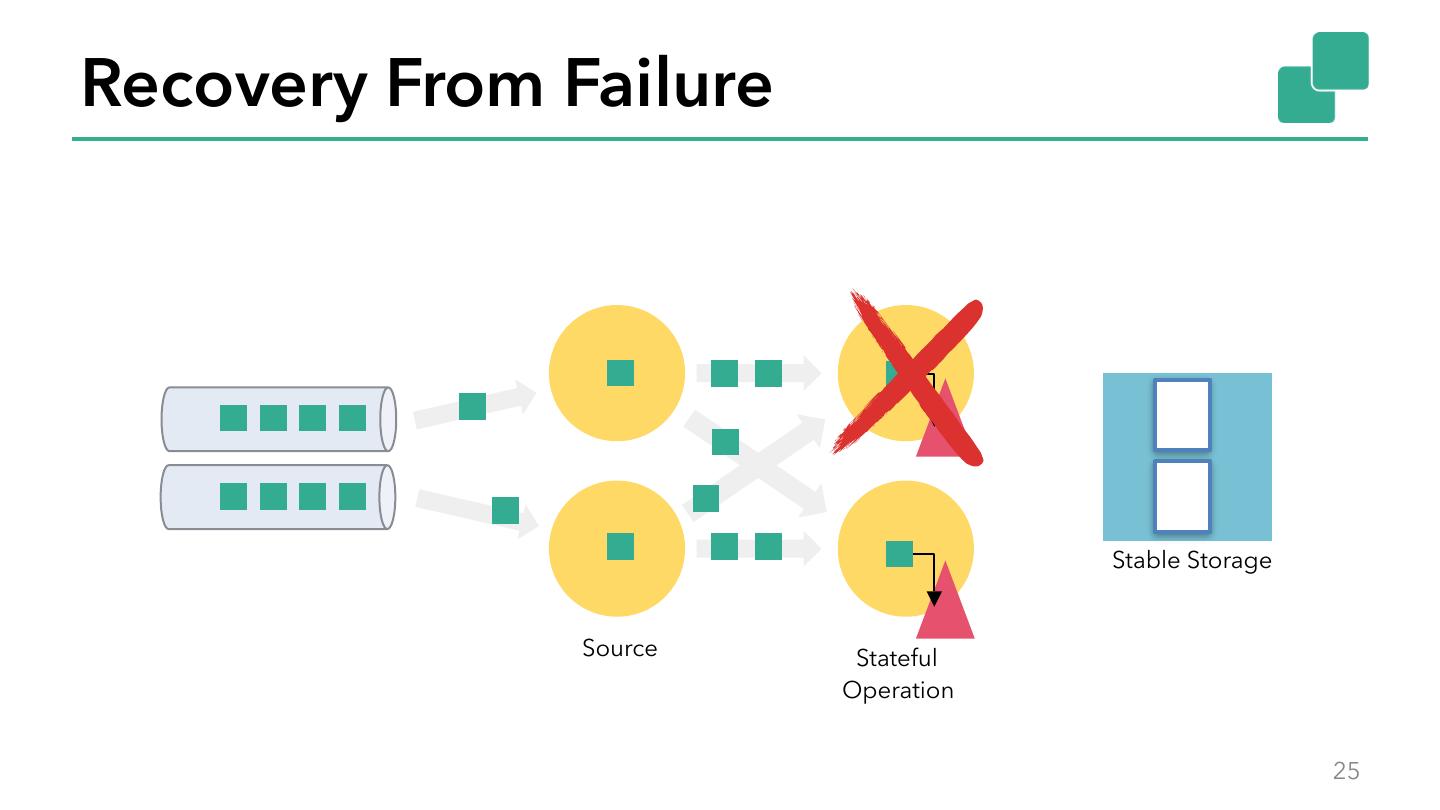

25. Recovery From Failure

Figure labels: Source, Stateful Operation, Stable Storage.

26. Recovery From Failure

Figure: the Source and the Stateful Operation each restore their state from Stable Storage, and the Source resumes at the checkpoint offset.
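Restoring state and resuming from the checkpointed offset can be sketched with a replayable list as the source. The names (`run`, `run_with_recovery`, `Failure`) and the checkpoint layout `(offset, total)` are assumptions made for this illustration.

```python
class Failure(Exception):
    """Simulated crash; carries the last completed checkpoint."""


def run(records, checkpoint, fail_at=None, checkpoint_every=2):
    """Sum the records, starting from a checkpoint of (offset, total)."""
    offset, total = checkpoint  # restore state and resume at the checkpoint offset
    while offset < len(records):
        if offset == fail_at:
            raise Failure(checkpoint)
        total += records[offset]
        offset += 1
        if offset % checkpoint_every == 0:
            checkpoint = (offset, total)  # offset and state are saved together
    return total


def run_with_recovery(records, fail_at):
    try:
        return run(records, (0, 0), fail_at=fail_at)
    except Failure as failure:
        last_checkpoint = failure.args[0]
        # Work done after the last checkpoint is discarded and replayed.
        return run(records, last_checkpoint)
```

Because the offset and the state come from the same checkpoint, each record is reflected in the final state exactly once, even though some records are processed twice.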

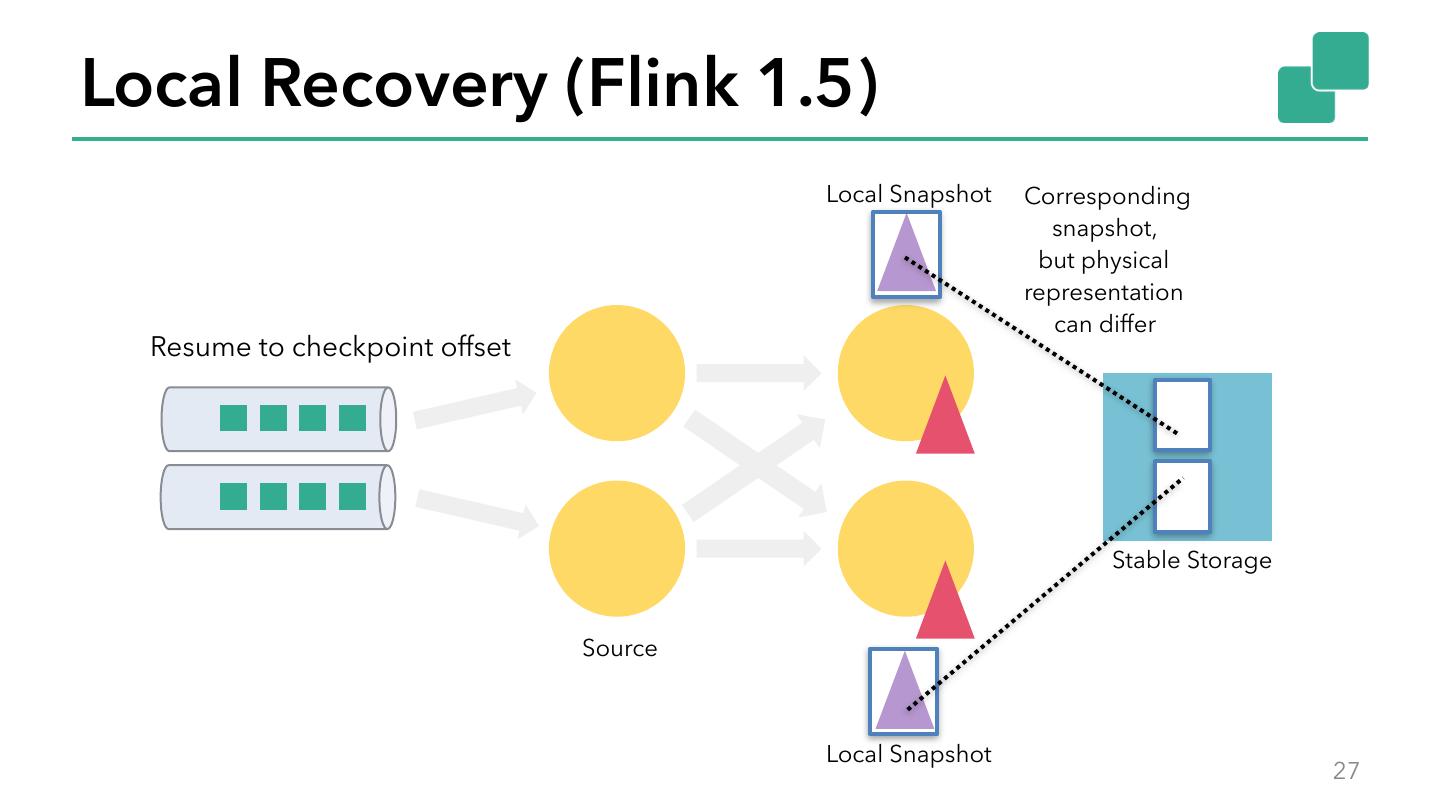

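27. Local Recovery (Flink 1.5)

Figure: in addition to the snapshot in Stable Storage, a Local Snapshot is kept next to the Source and next to the stateful operation. Each local snapshot is the corresponding snapshot, but its physical representation can differ. The Source resumes at the checkpoint offset.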

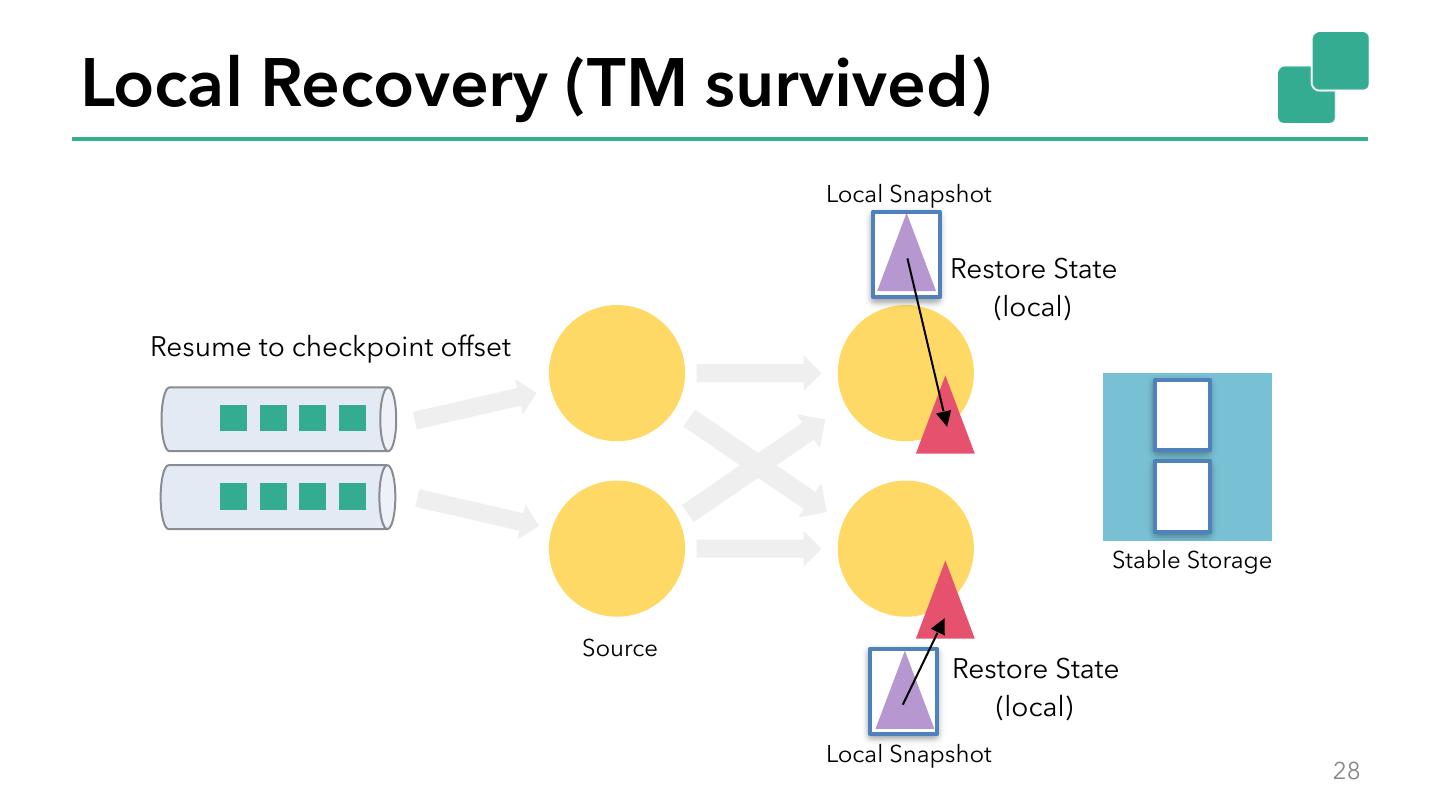

28. Local Recovery (TM survived)

Figure: the TaskManager (TM) survived, so both tasks restore state from their Local Snapshot ("Restore State (local)") rather than from Stable Storage. The Source resumes at the checkpoint offset.

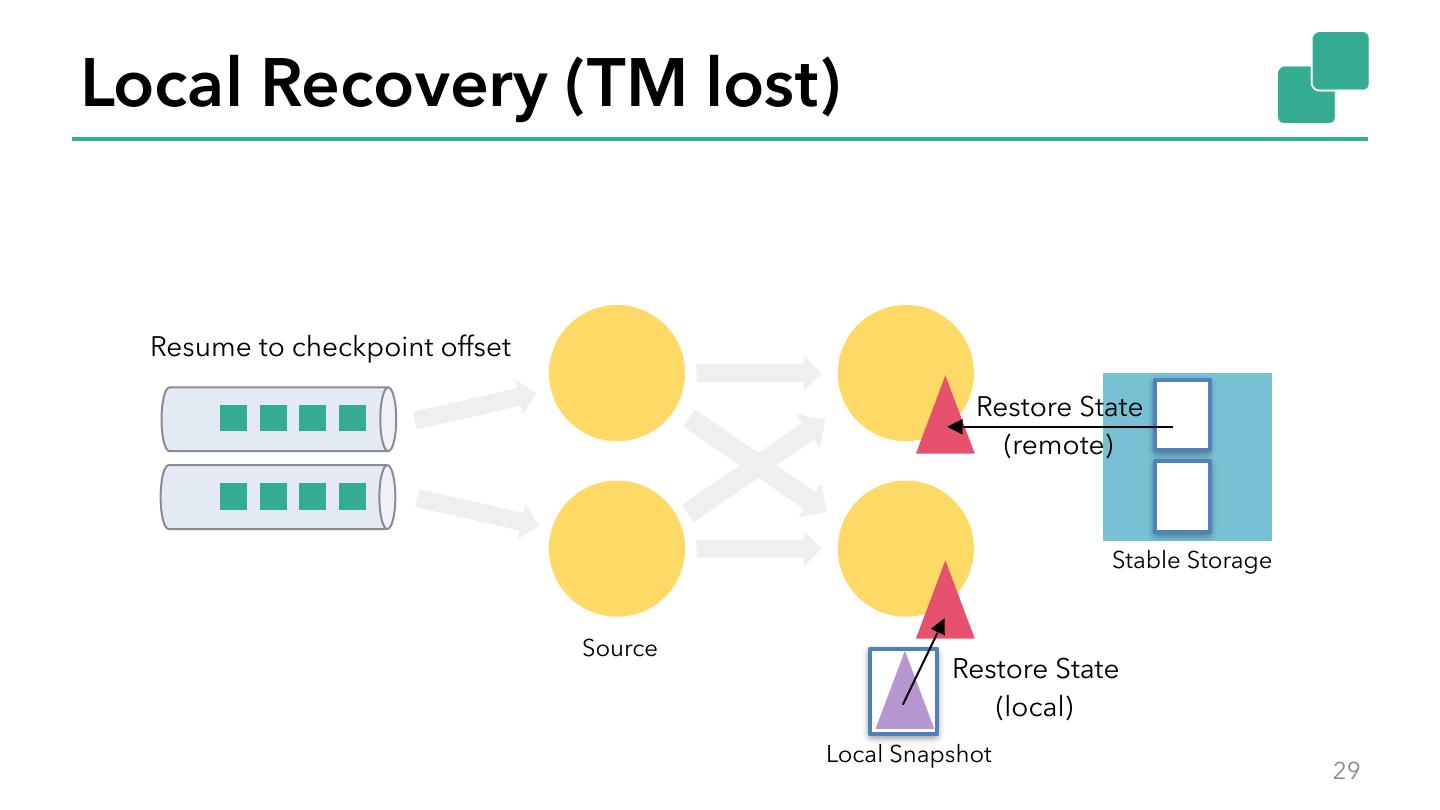

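29. Local Recovery (TM lost)

Figure: a TaskManager (TM) was lost, so one task restores state from Stable Storage ("Restore State (remote)"), while the other still restores from its Local Snapshot ("Restore State (local)"). The Source resumes at the checkpoint offset.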
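The choice between local and remote restore in the last two slides amounts to a per-task fallback, sketched below. The function name and the snapshot layout (a dict with `checkpoint_id` and `state`) are hypothetical and only serve the illustration.

```python
from typing import Optional, Tuple


def choose_restore_source(
    local_snapshot: Optional[dict],
    remote_snapshot: dict,
    checkpoint_id: int,
) -> Tuple[str, dict]:
    """Prefer the local copy when it survived and matches the checkpoint
    being restored; otherwise fall back to stable storage."""
    if (
        local_snapshot is not None
        and local_snapshot.get("checkpoint_id") == checkpoint_id
    ):
        return "local", local_snapshot["state"]
    return "remote", remote_snapshot["state"]


remote = {"checkpoint_id": 7, "state": {"count": 42}}
local = {"checkpoint_id": 7, "state": {"count": 42}}

survived = choose_restore_source(local, remote, checkpoint_id=7)
lost = choose_restore_source(None, remote, checkpoint_id=7)
```

The remote snapshot remains the source of truth, and the local copy is only an optimization that avoids reading state back from stable storage.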