DeepMind: Deep Learning and Frontier Advances

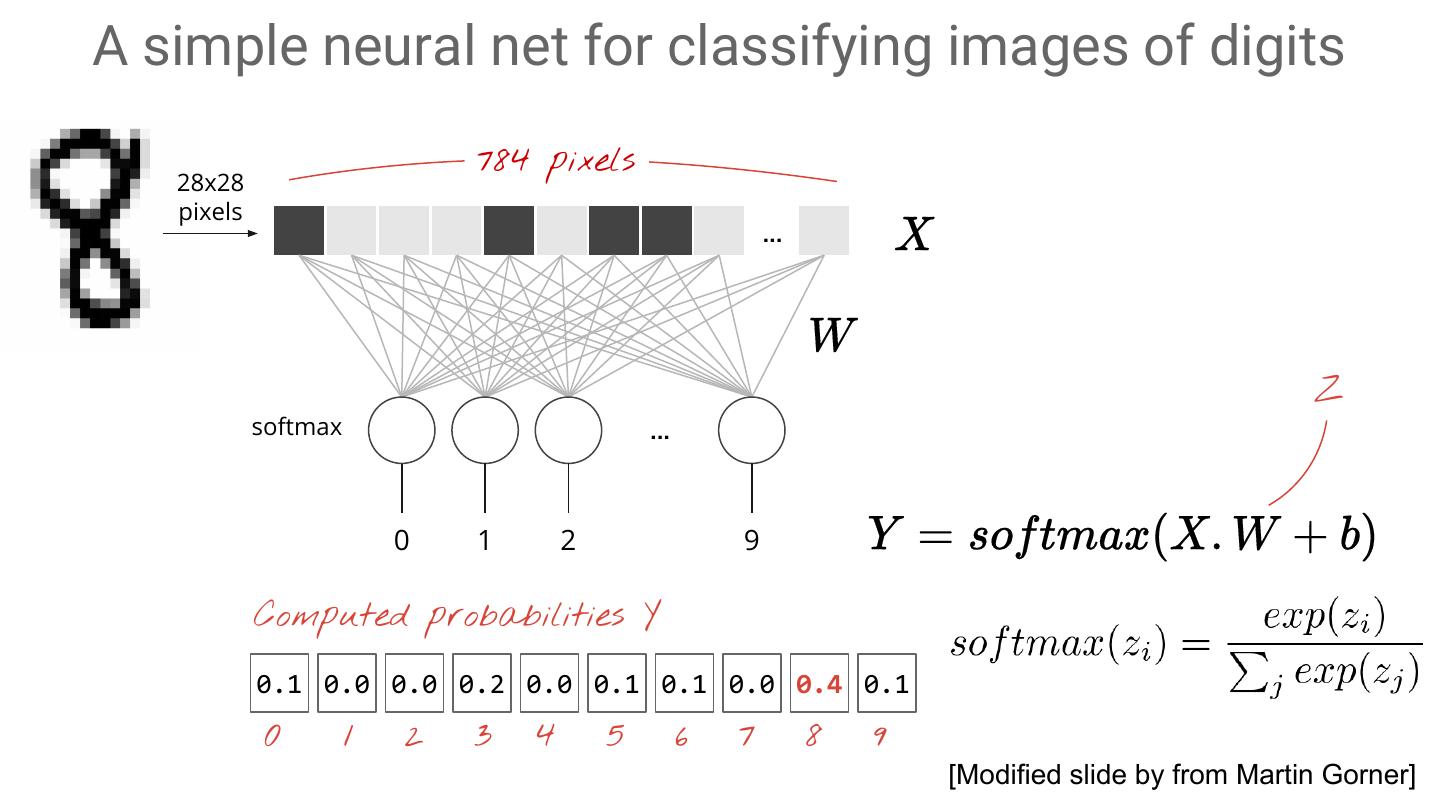

1 . Deep Learning: The AI revolution and its frontiers. Nando de Freitas, Matt Hoffman, Ziyu Wang, Brendan Shillingford, Caglar Gulcehre, Misha Denil, Scott Reed, Serkan Cabi, Tobias Pfaff, Tom Paine, Yannis Assael, Yusuf Aytar, Yutian Chen, Sergio Gomez, David Budden, Natasha Jaques

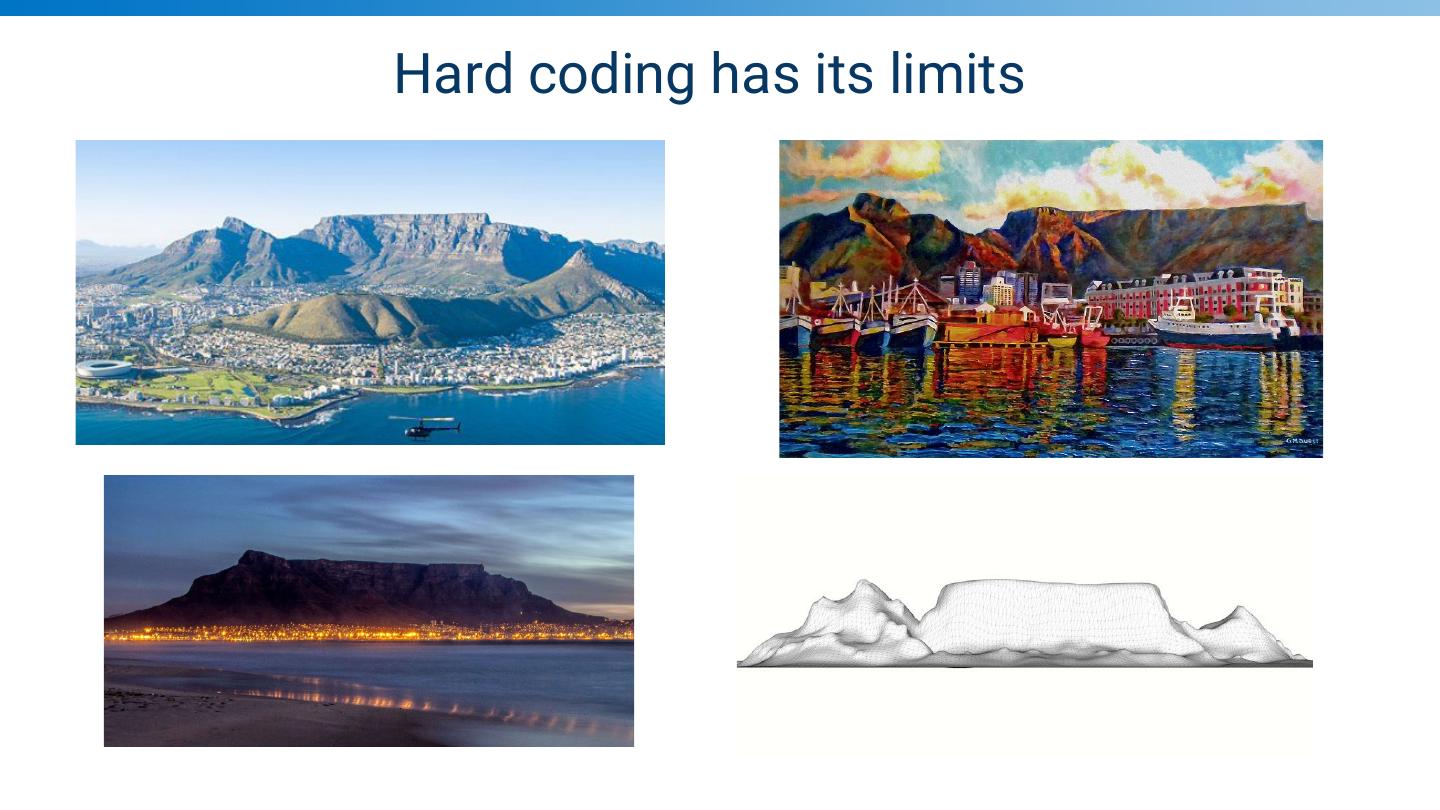

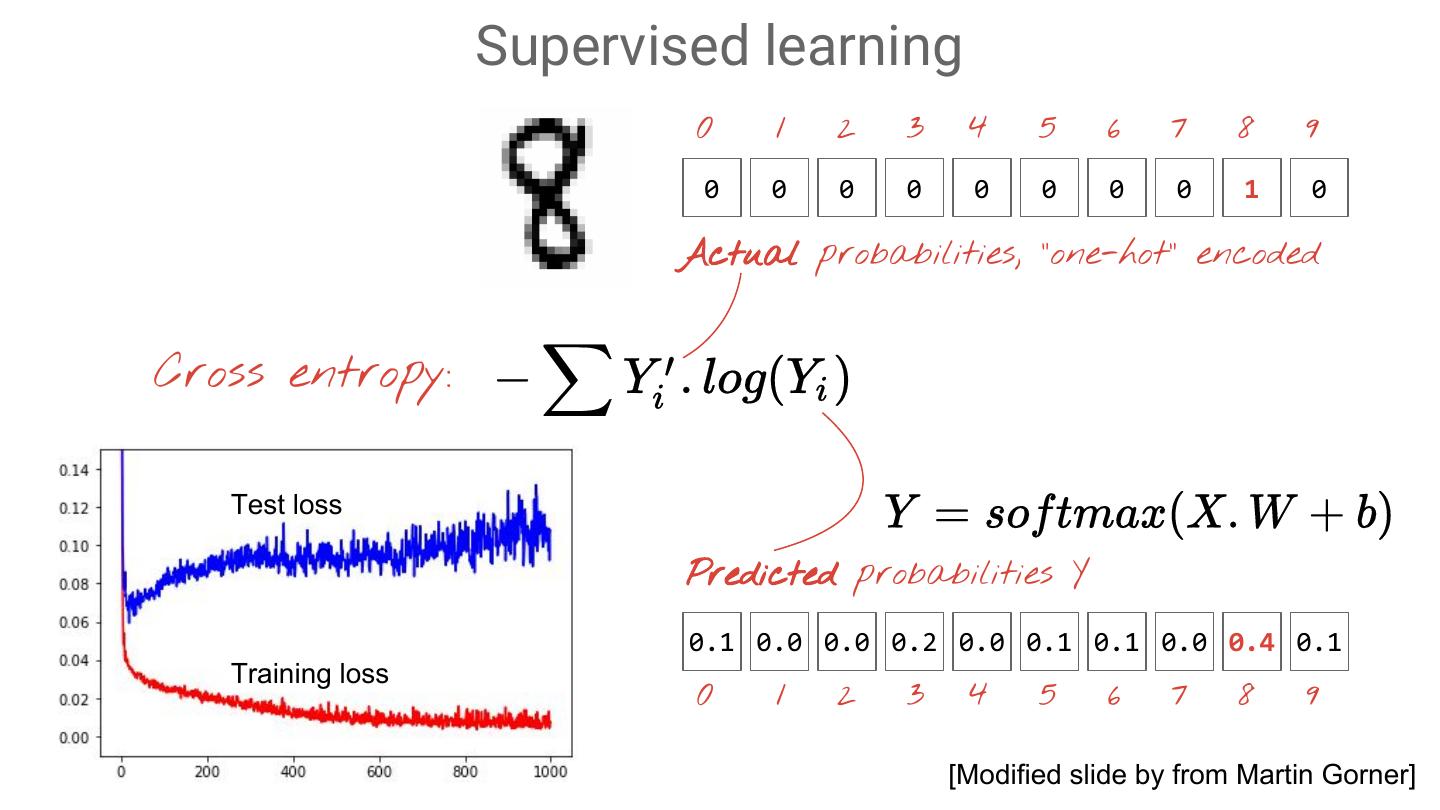

2 .Hard coding has its limits

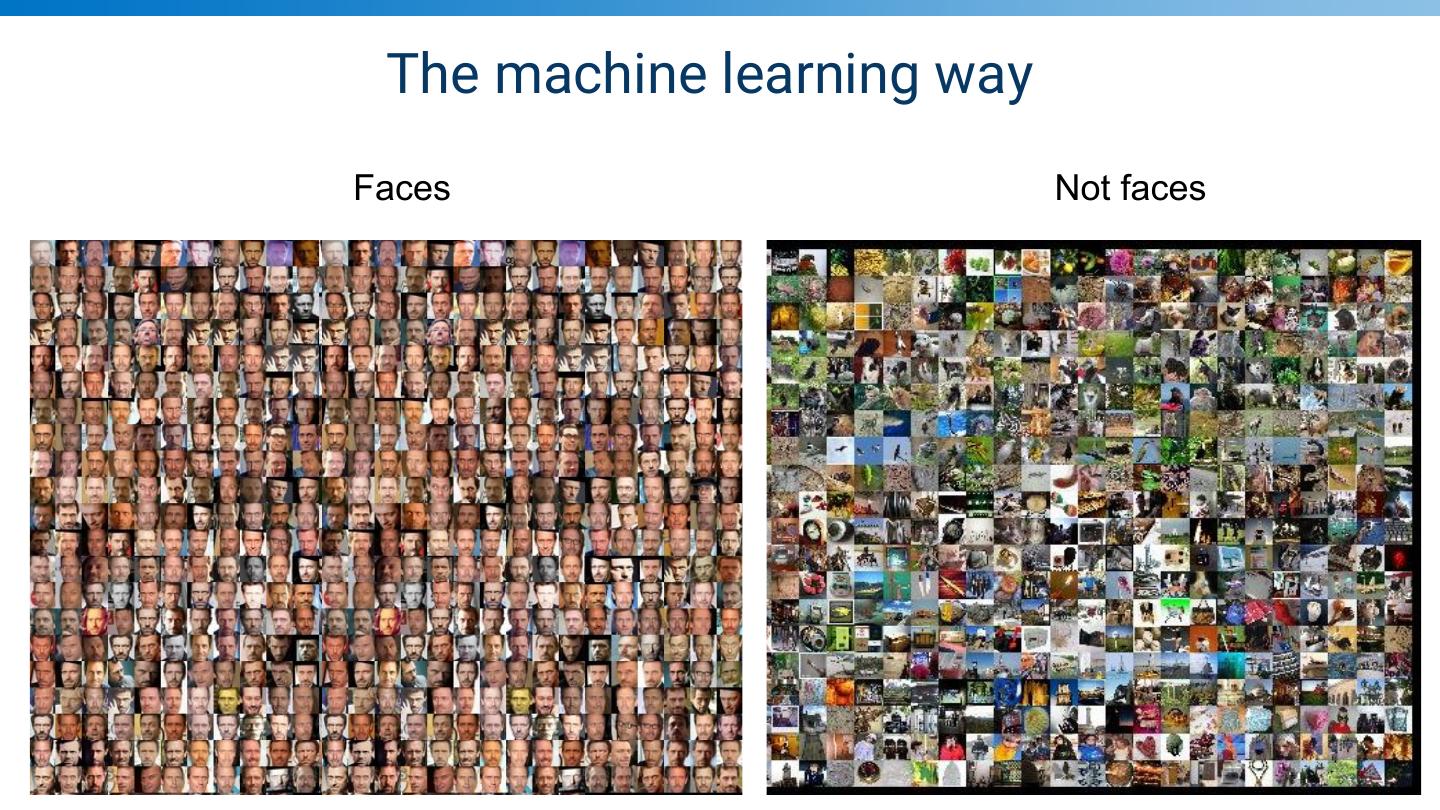

3 . The machine learning way Faces Not faces

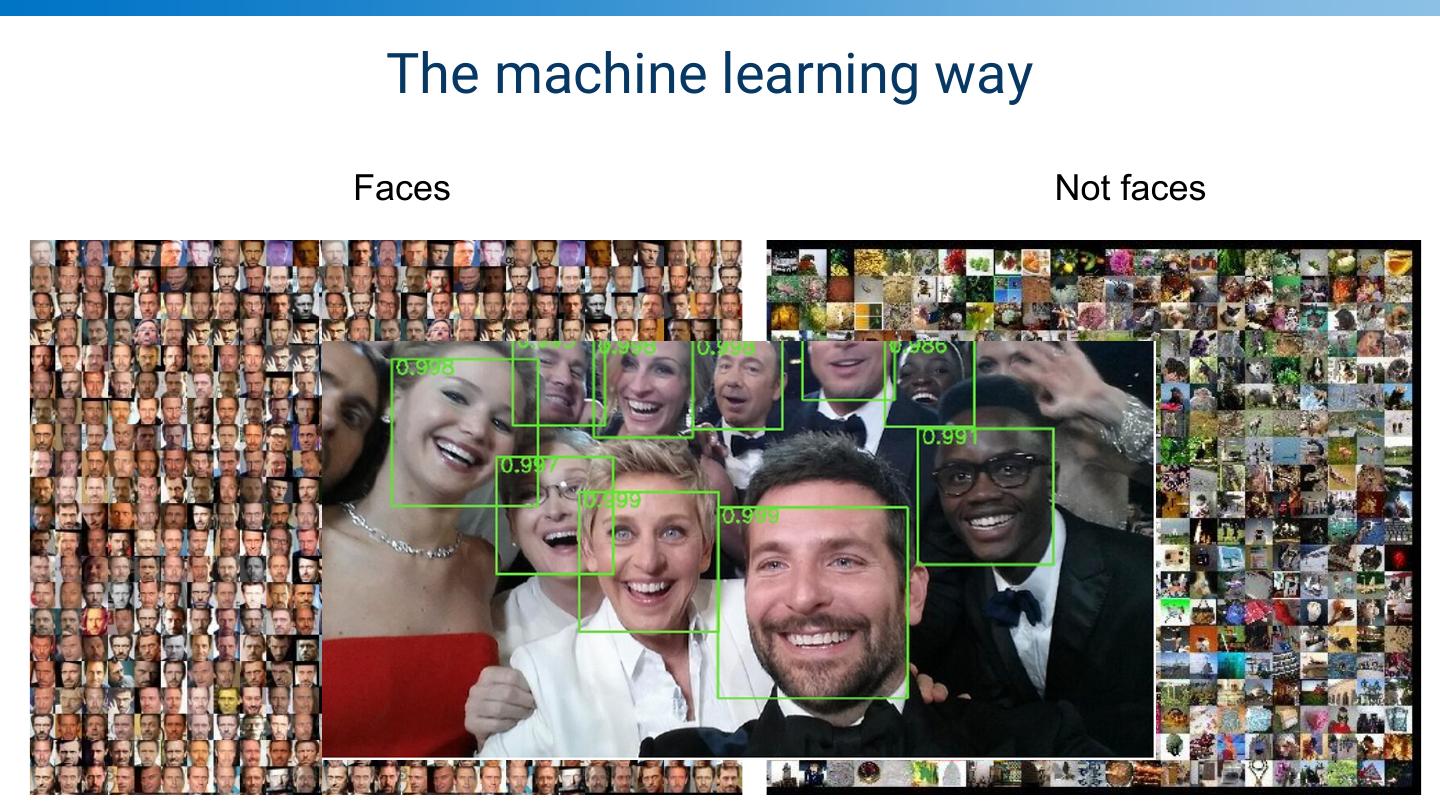

4 . The machine learning way Faces Not faces

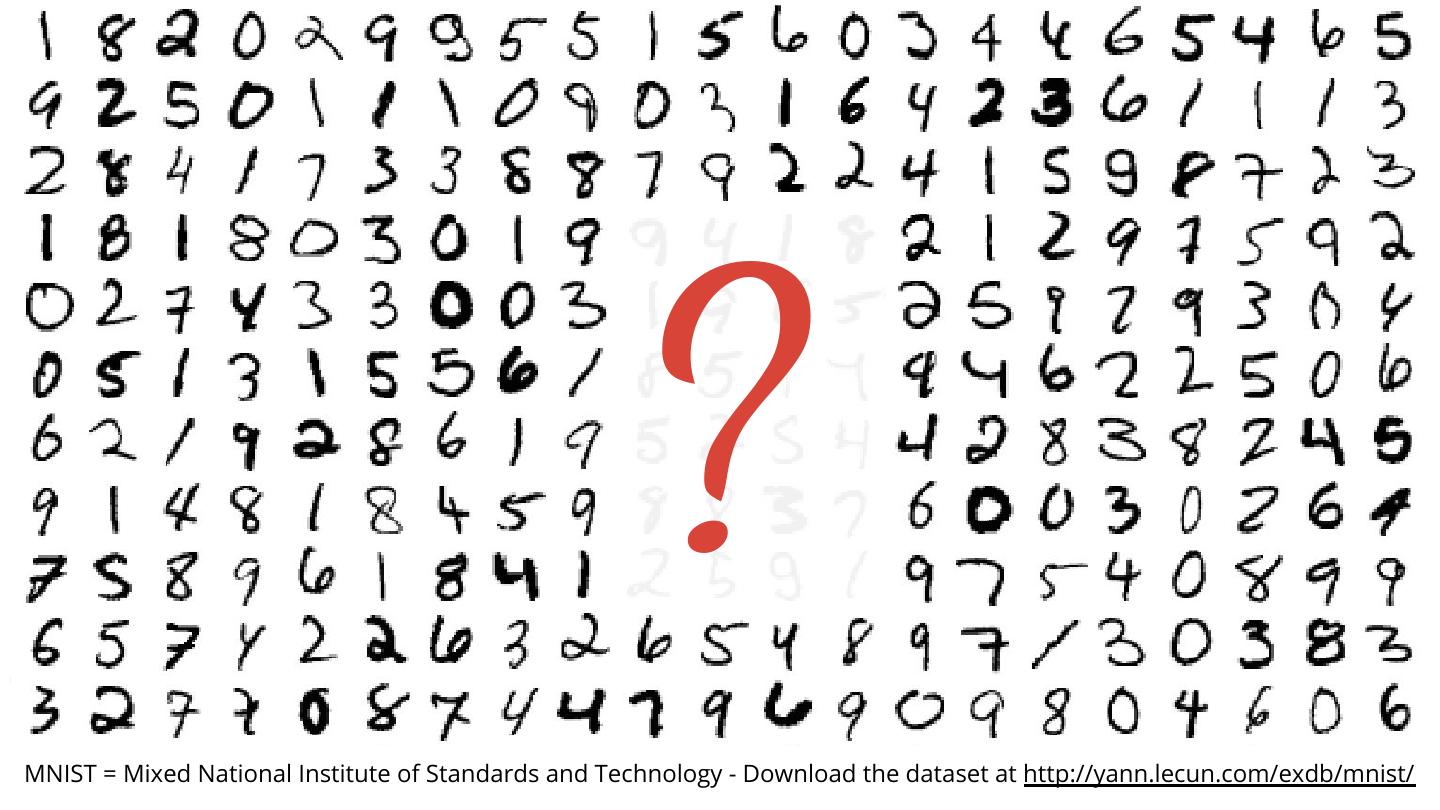

5 . MNIST = Modified National Institute of Standards and Technology. Download the dataset at http://yann.lecun.com/exdb/mnist/

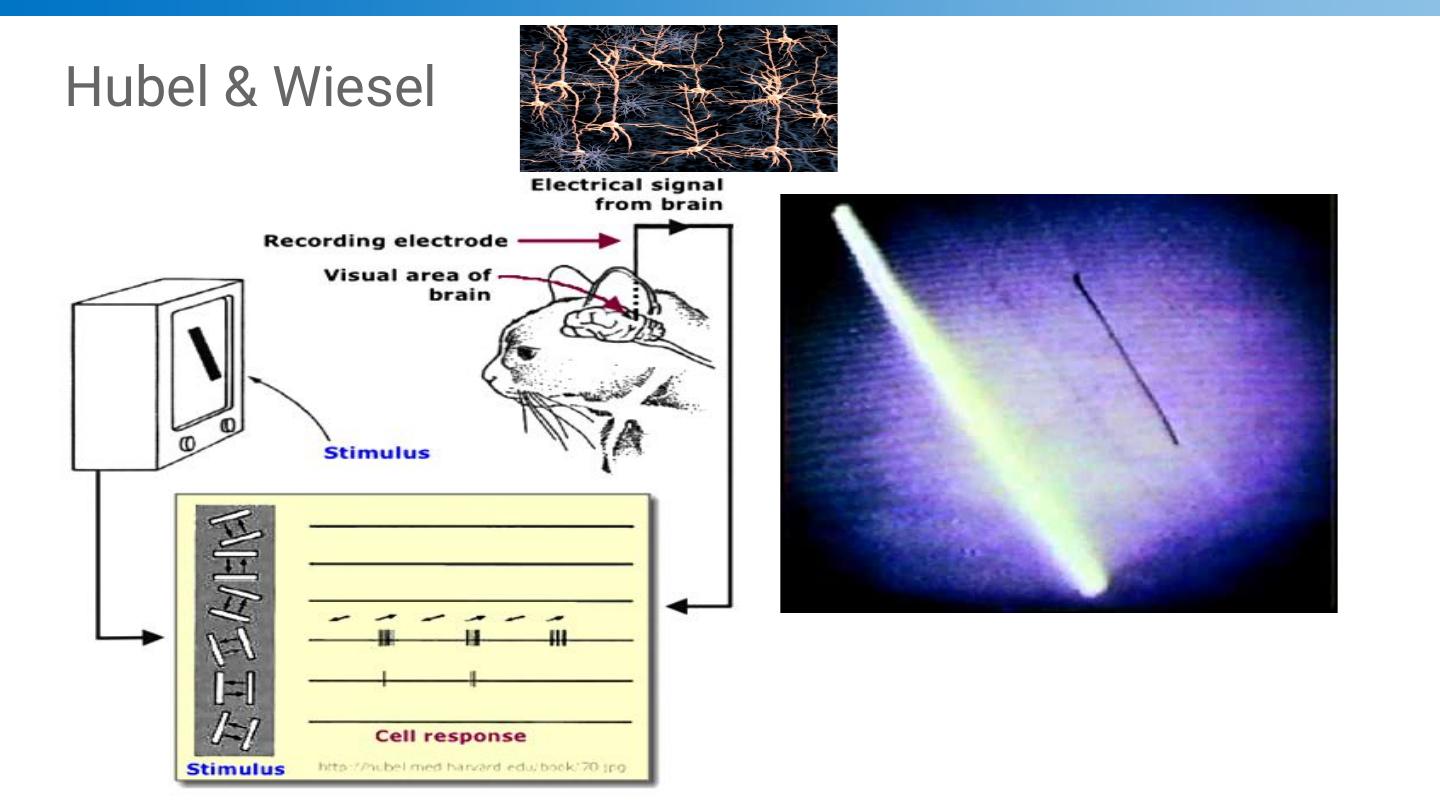

6 .Hubel & Wiesel

7 . A simple neural net for classifying images of digits. Input: 28x28 pixels = 784 pixels. The net computes Z, and a softmax turns Z into computed probabilities Y for the digits 0 to 9, for example Y = 0.1 0.0 0.0 0.2 0.0 0.1 0.1 0.0 0.4 0.1. [Slide modified from Martin Gorner, @martin_gorner]

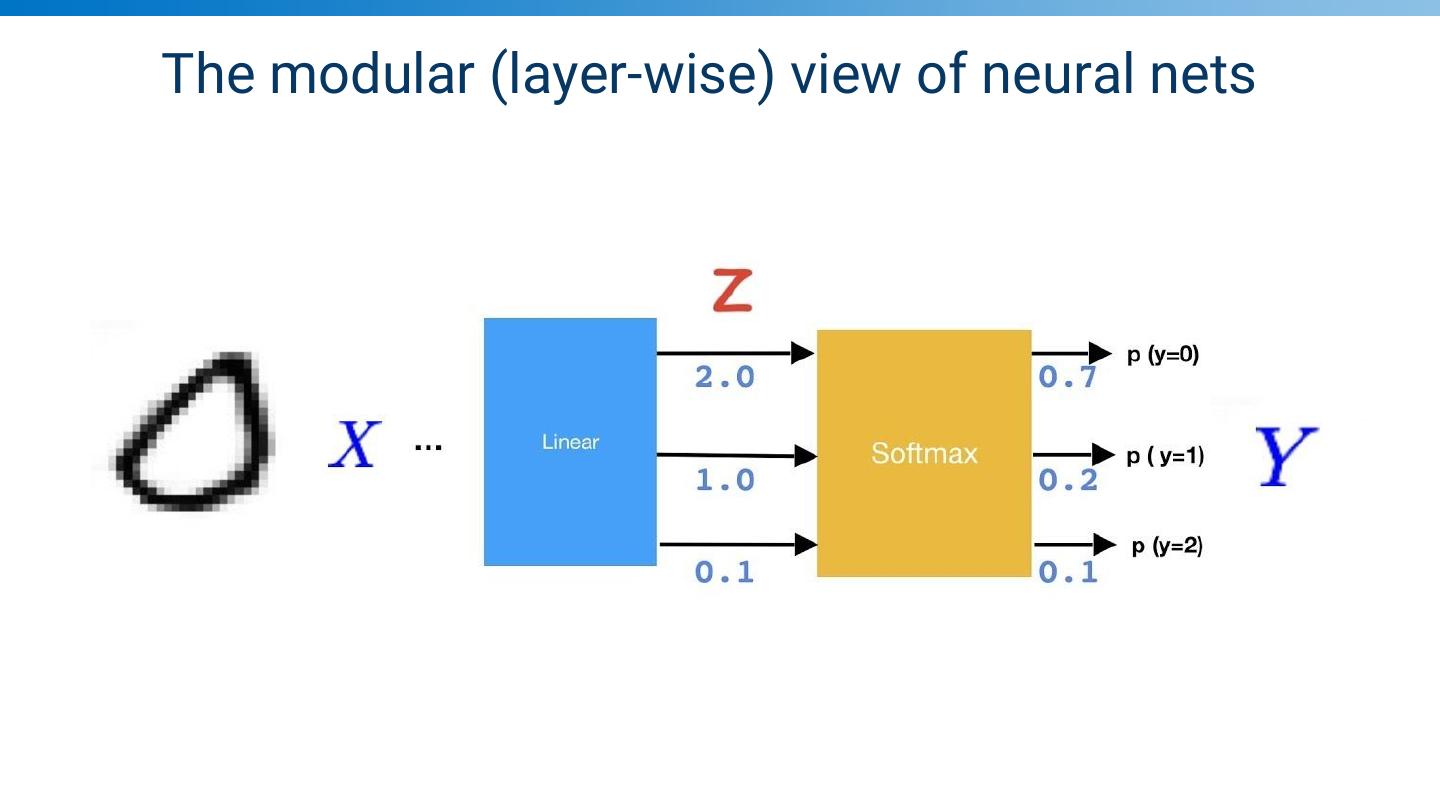
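The pipeline on this slide (784 pixels in, one linear layer, softmax out) can be sketched in a few lines of plain Python. This is a minimal illustration, not the presenters' code: the weights are random and untrained, the input is a random stand-in for a flattened MNIST image, and the names `predict`, `weights`, and `biases` are assumptions made for the example.

```python
import math
import random


def softmax(logits):
    """Turn a list of real-valued scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(pixels, weights, biases):
    """One linear layer followed by softmax: Y = softmax(W x + b)."""
    logits = [
        sum(w * x for w, x in zip(row, pixels)) + b
        for row, b in zip(weights, biases)
    ]
    return softmax(logits)


# Hypothetical untrained parameters: 10 classes x 784 pixels.
rng = random.Random(0)
weights = [[rng.gauss(0.0, 0.01) for _ in range(784)] for _ in range(10)]
biases = [0.0] * 10

# Stand-in for a flattened 28x28 image with pixel values in [0, 1).
image = [rng.random() for _ in range(784)]

probs = predict(image, weights, biases)
predicted_digit = max(range(10), key=lambda d: probs[d])
print(predicted_digit, [round(p, 3) for p in probs])
```

With untrained weights the ten probabilities come out close to uniform; training is what pushes the mass toward the correct digit.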

8 .The modular (layer-wise) view of neural nets

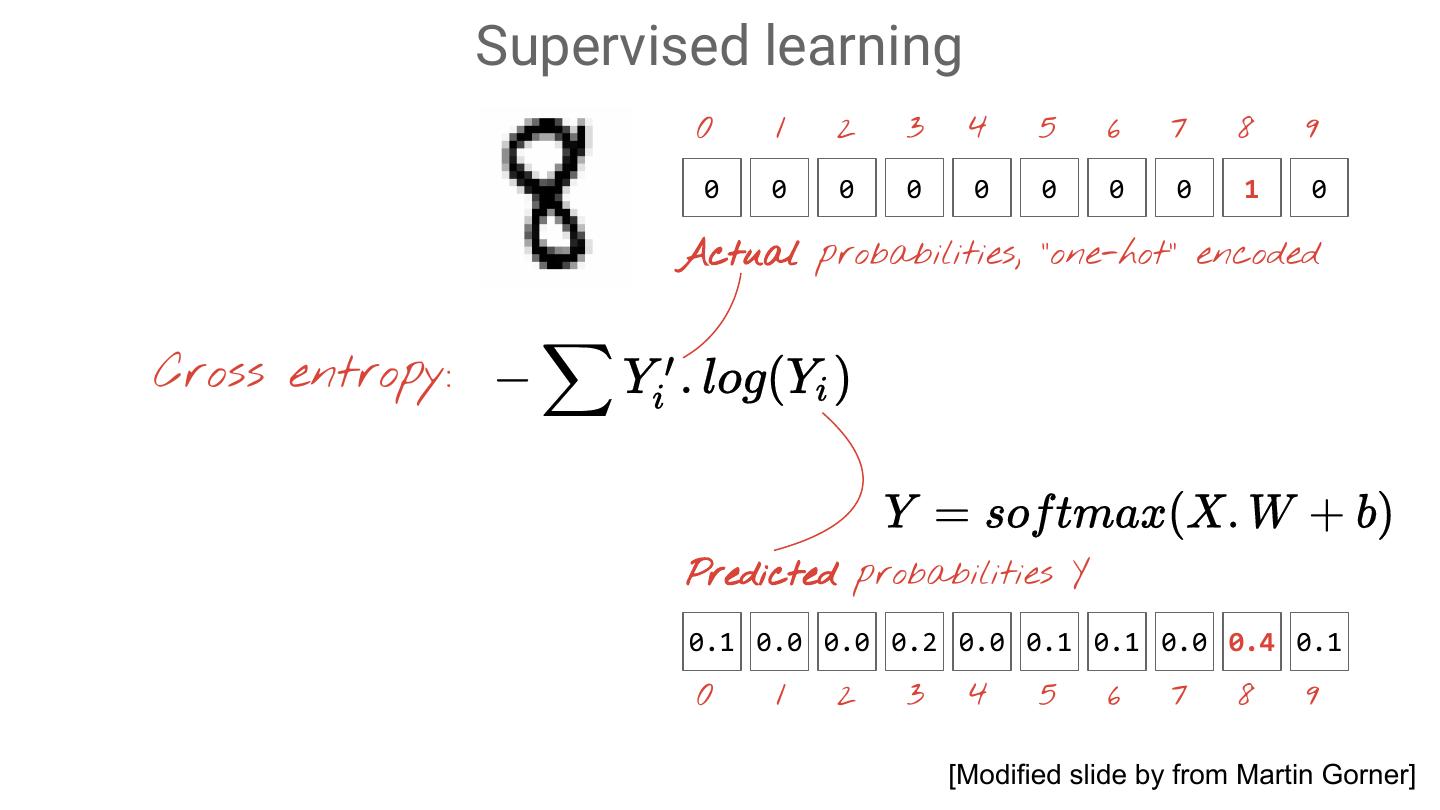

9 . Supervised learning. Actual probabilities, “one-hot” encoded, for the digits 0 to 9: 0 0 0 0 0 0 0 0 1 0. Predicted probabilities Y: 0.1 0.0 0.0 0.2 0.0 0.1 0.1 0.0 0.4 0.1. The loss is the cross entropy between the actual and predicted probabilities. [Slide modified from Martin Gorner, @martin_gorner]

10 . Supervised learning: the same one-hot targets, predicted probabilities, and cross-entropy loss as on slide 9, now with a plot of training loss and test loss. [Slide modified from Martin Gorner, @martin_gorner]

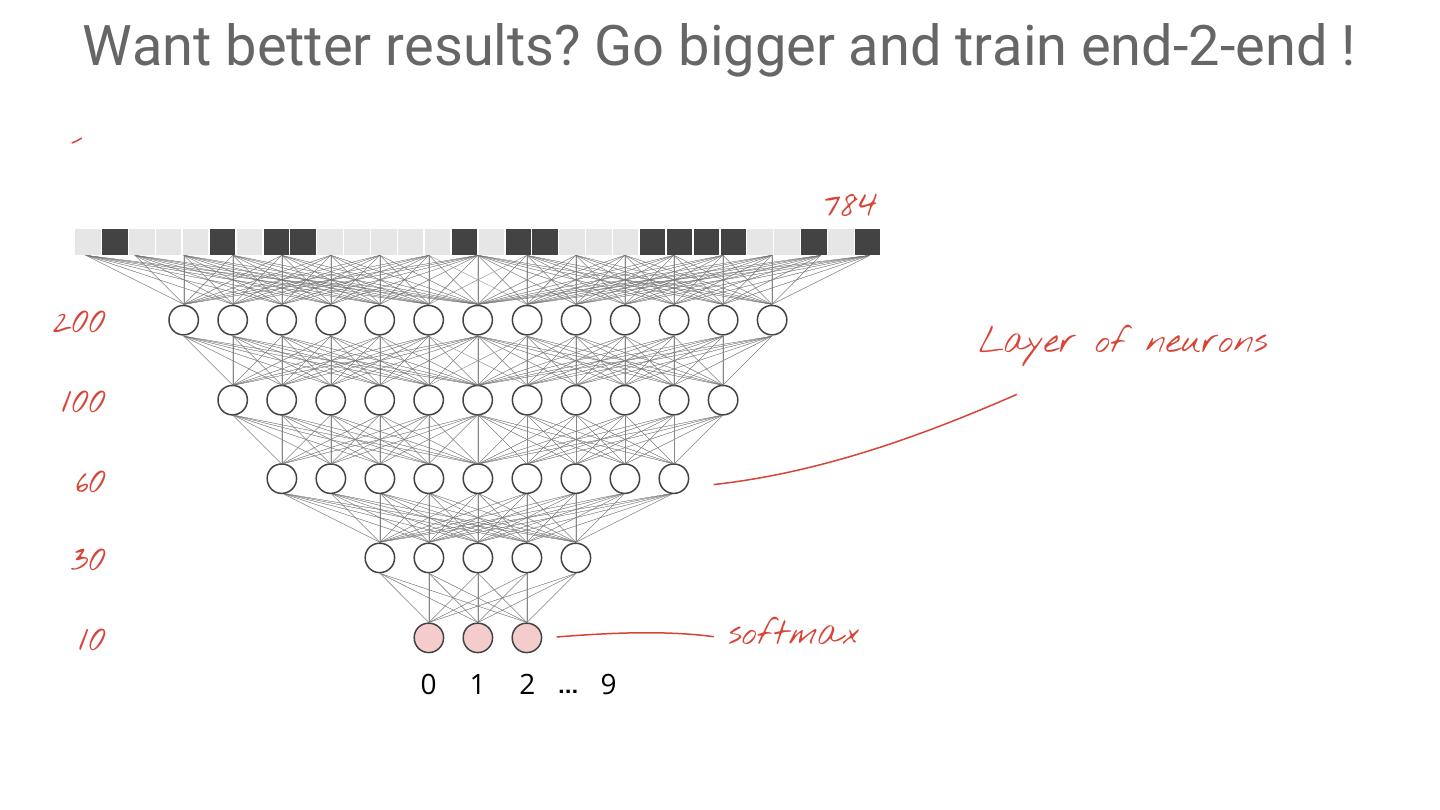
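For the numbers on these two slides, the cross entropy can be worked out directly. The sketch below assumes the standard definition, the negative sum of actual probability times the log of predicted probability; the small `eps` guard against log(0) is an assumption of this example, not something shown on the slide.

```python
import math


def cross_entropy(actual, predicted, eps=1e-12):
    """Cross entropy between a target distribution and a predicted one.

    eps avoids log(0) for classes predicted with probability exactly 0.
    """
    return -sum(a * math.log(p + eps) for a, p in zip(actual, predicted))


# Values from the slide: the true digit is 8, predicted with probability 0.4.
actual = [0, 0, 0, 0, 0, 0, 0, 0, 1, 0]
predicted = [0.1, 0.0, 0.0, 0.2, 0.0, 0.1, 0.1, 0.0, 0.4, 0.1]

loss = cross_entropy(actual, predicted)
print(round(loss, 3))  # 0.916, i.e. -ln(0.4)
```

Because the target is one-hot, only the probability assigned to the true class contributes to the loss, so the loss falls to 0 as that probability approaches 1.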

11 . Want better results? Go bigger and train end-to-end! Layers of neurons: 784 → 200 → 100 → 60 → 30 → 10, with a softmax over the digits 0 to 9. [@martin_gorner]

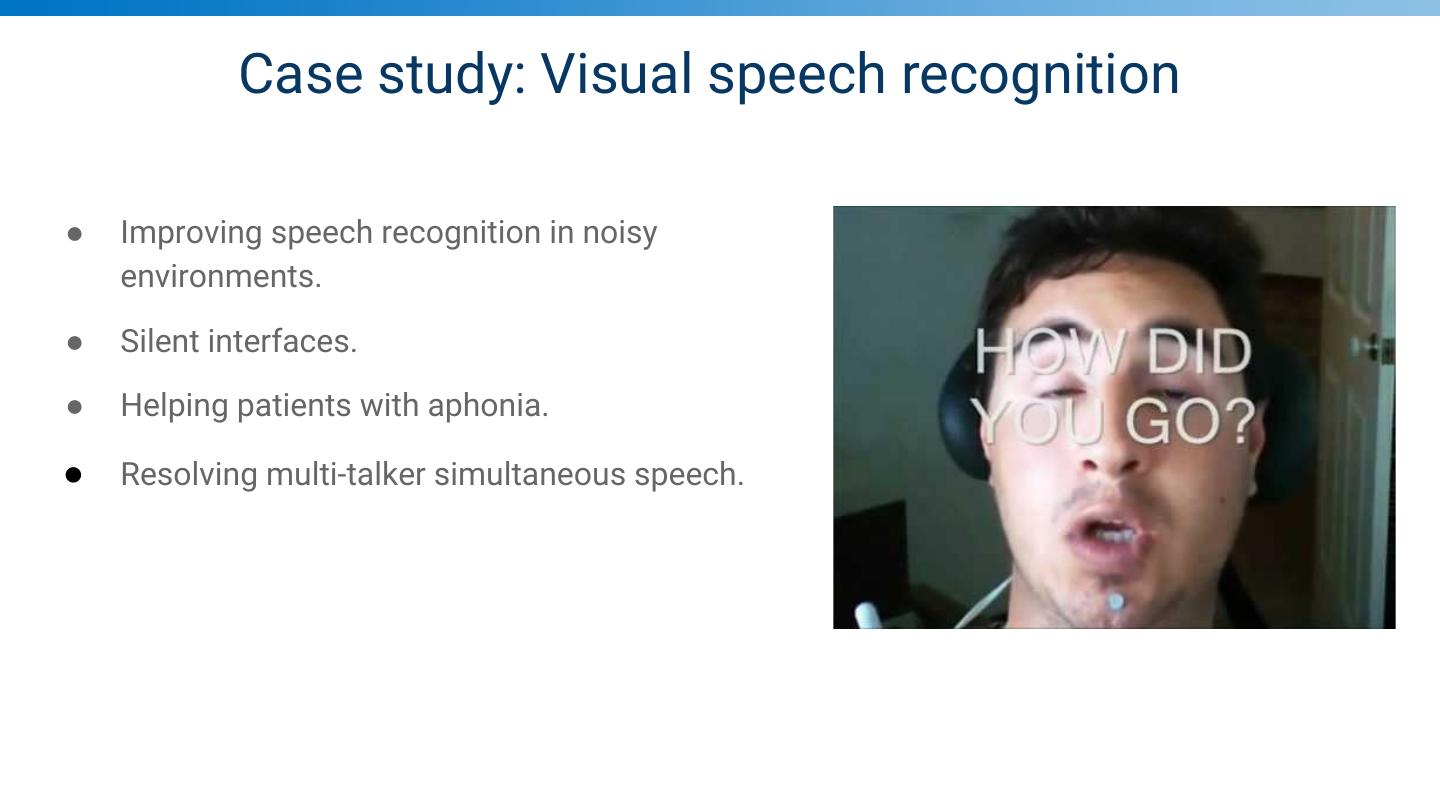
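A forward pass through the layer sizes on this slide might look like the sketch below. The layer sizes come from the slide; the ReLU hidden activation, the random initialisation, and the function names are assumptions for illustration, and no training is performed.

```python
import math
import random

LAYER_SIZES = [784, 200, 100, 60, 30, 10]  # sizes taken from the slide


def init_layer(n_in, n_out, rng):
    """Random weights (n_out x n_in) and zero biases; the scaling is an assumption."""
    scale = math.sqrt(2.0 / n_in)
    weights = [[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def linear(x, weights, biases):
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]


def relu(values):
    return [max(0.0, v) for v in values]


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def forward(x, layers):
    """Hidden layers use ReLU (assumed); the last layer feeds a softmax."""
    for weights, biases in layers[:-1]:
        x = relu(linear(x, weights, biases))
    weights, biases = layers[-1]
    return softmax(linear(x, weights, biases))


rng = random.Random(0)
layers = [init_layer(a, b, rng) for a, b in zip(LAYER_SIZES, LAYER_SIZES[1:])]
probs = forward([rng.random() for _ in range(784)], layers)
print([round(p, 3) for p in probs])
```

"End-to-end" here means that all five weight matrices would be trained jointly against the same cross-entropy loss, rather than layer by layer.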

12 . Case study: Visual speech recognition

- Improving speech recognition in noisy environments.
- Silent interfaces.
- Helping patients with aphonia.
- Resolving multi-talker simultaneous speech.

13 .Step 1: Create a massive labelled dataset

14 .Step 2: Train a large deep network

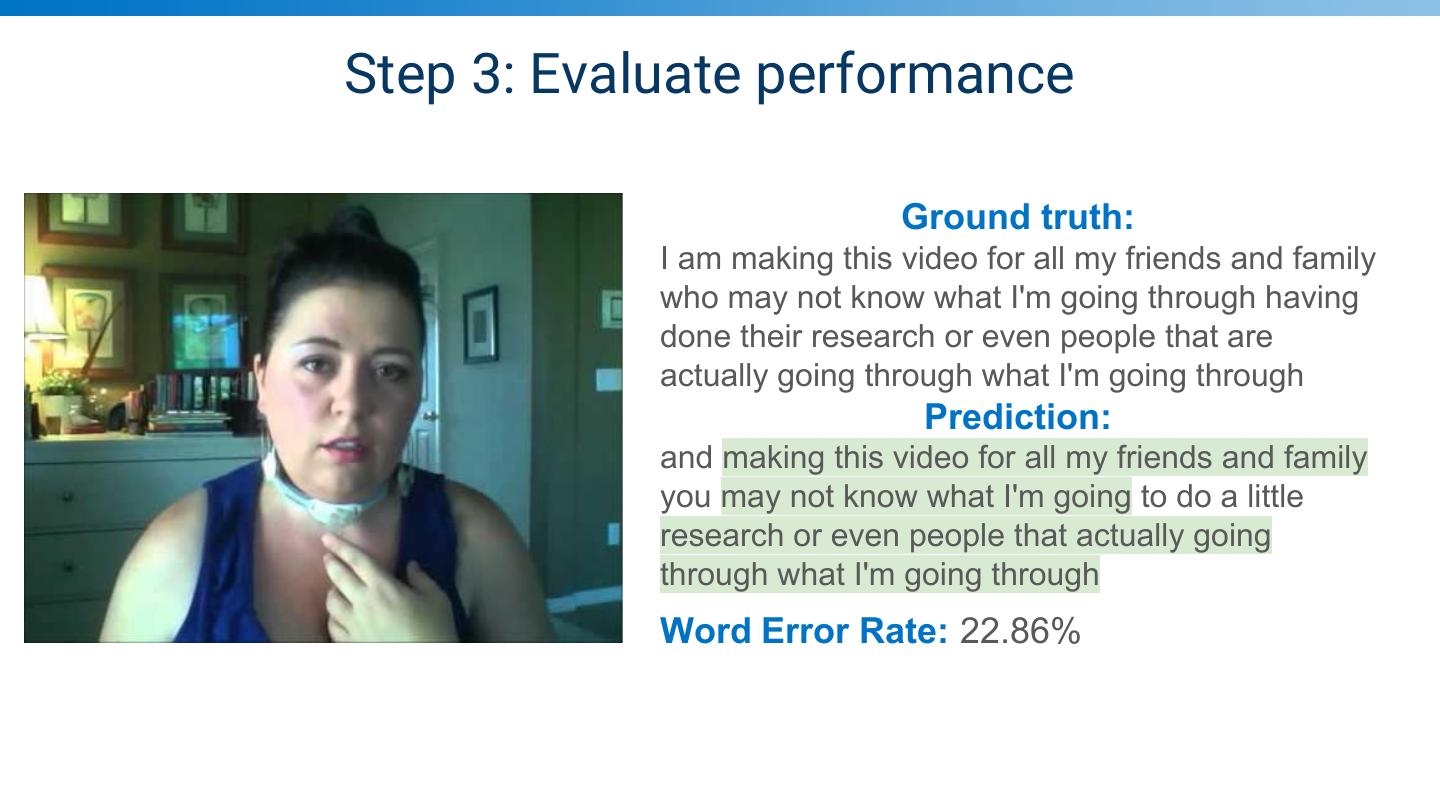

15 . Step 3: Evaluate performance

Ground truth: I am making this video for all my friends and family who may not know what I'm going through having done their research or even people that are actually going through what I'm going through

Prediction: and making this video for all my friends and family you may not know what I'm going to do a little research or even people that actually going through what I'm going through

Word Error Rate: 22.86%

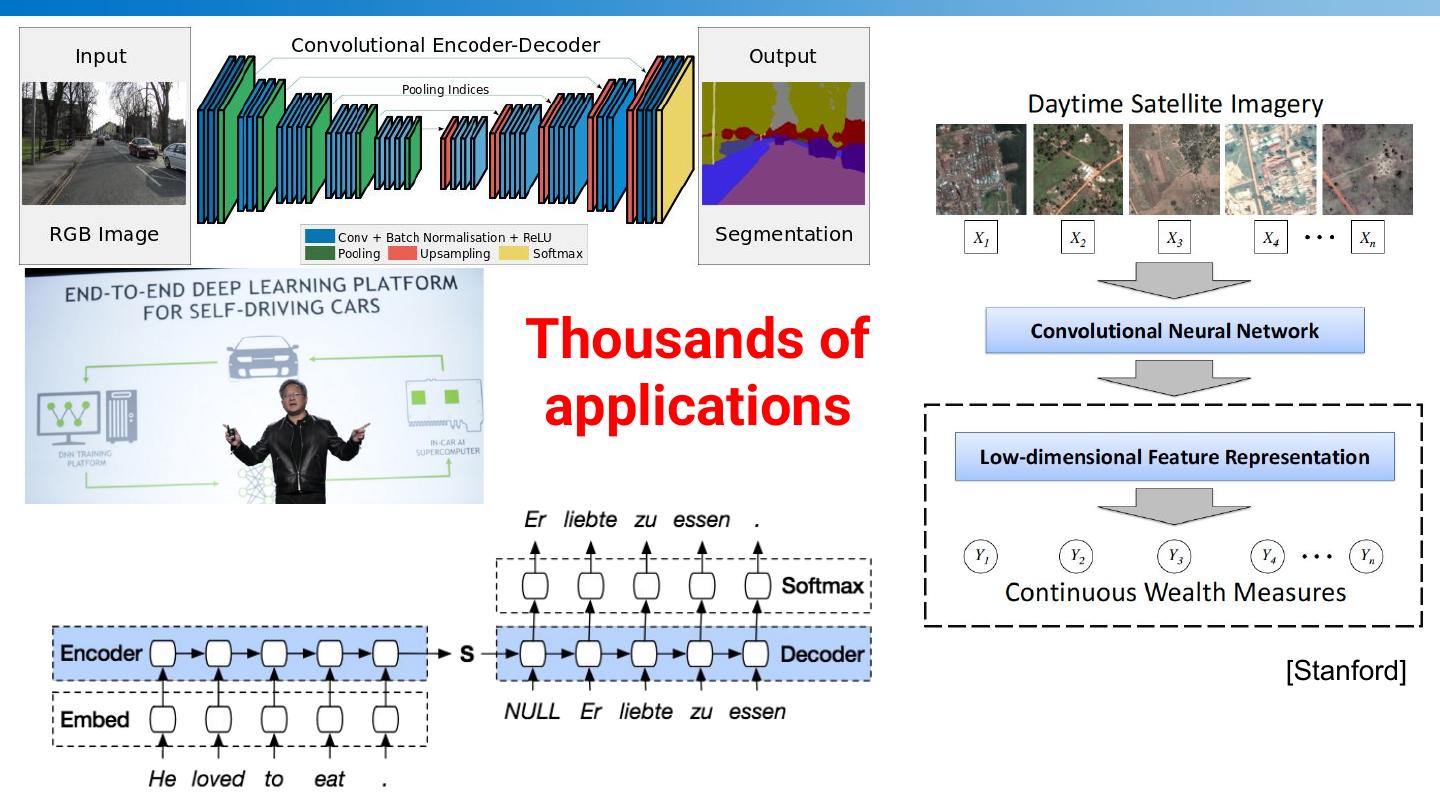
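The word error rate on this slide can be reproduced with a word-level edit distance. The sketch below assumes the usual definition (substitutions plus deletions plus insertions, divided by the number of reference words), whitespace tokenisation, and case-sensitive matching; the slide does not say how the authors computed it.

```python
def word_error_rate(reference, hypothesis):
    """Word-level Levenshtein distance divided by the reference length.

    Assumes a non-empty reference and whitespace tokenisation.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    # prev[j] holds the edit distance between the reference words seen
    # so far (before the current one) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # match or substitution
        prev = curr
    return prev[-1] / len(ref)


ground_truth = (
    "I am making this video for all my friends and family who may not know "
    "what I'm going through having done their research or even people that "
    "are actually going through what I'm going through"
)
prediction = (
    "and making this video for all my friends and family you may not know "
    "what I'm going to do a little research or even people that actually "
    "going through what I'm going through"
)

wer = word_error_rate(ground_truth, prediction)
print(f"{100 * wer:.2f}%")  # 22.86%
```

Under these assumptions the alignment needs 8 edits against 35 reference words, which matches the 22.86% reported on the slide.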

16 .Thousands of applications [Stanford]

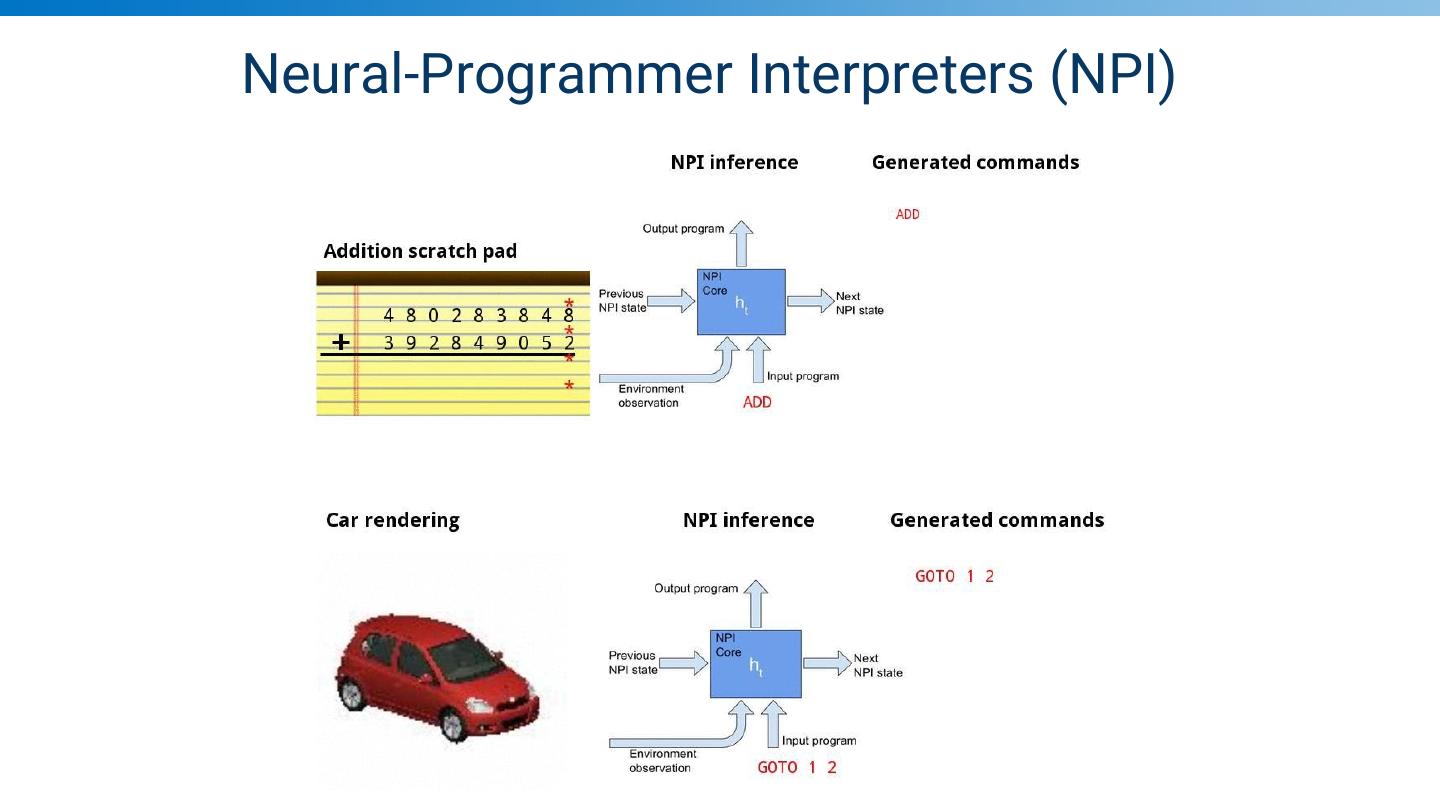

17 . The key AI revolution ingredients

1. Science
2. Data
3. Computing
4. Software

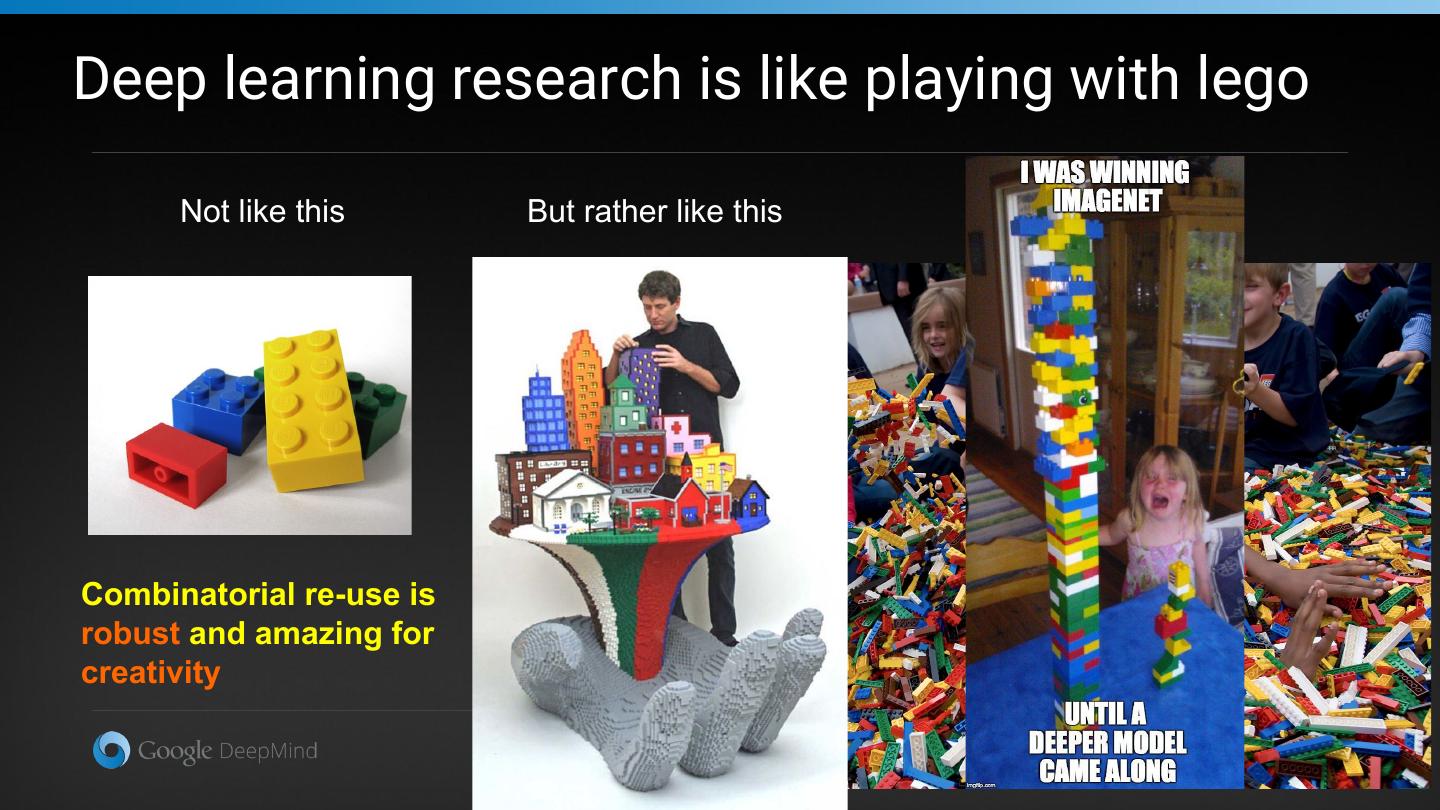

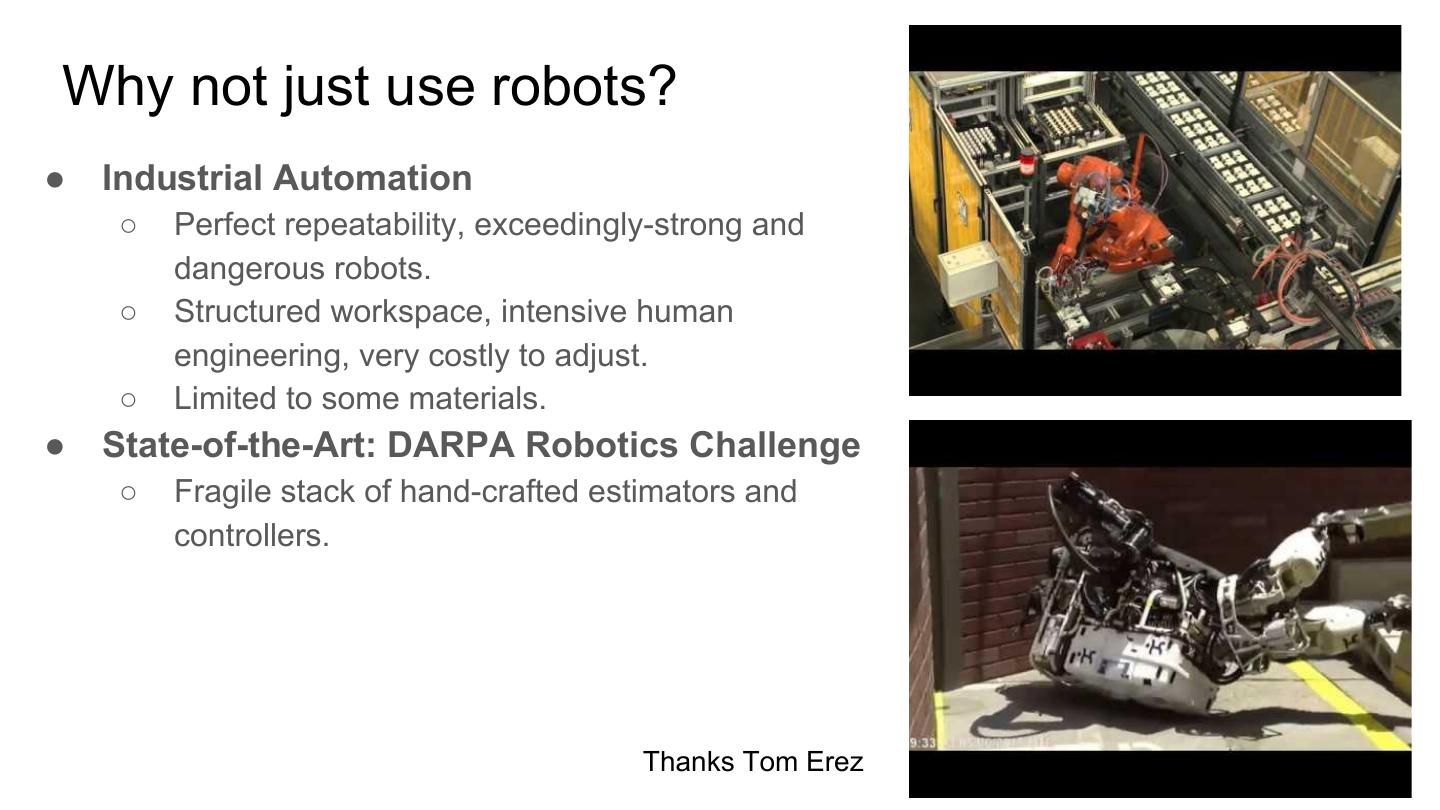

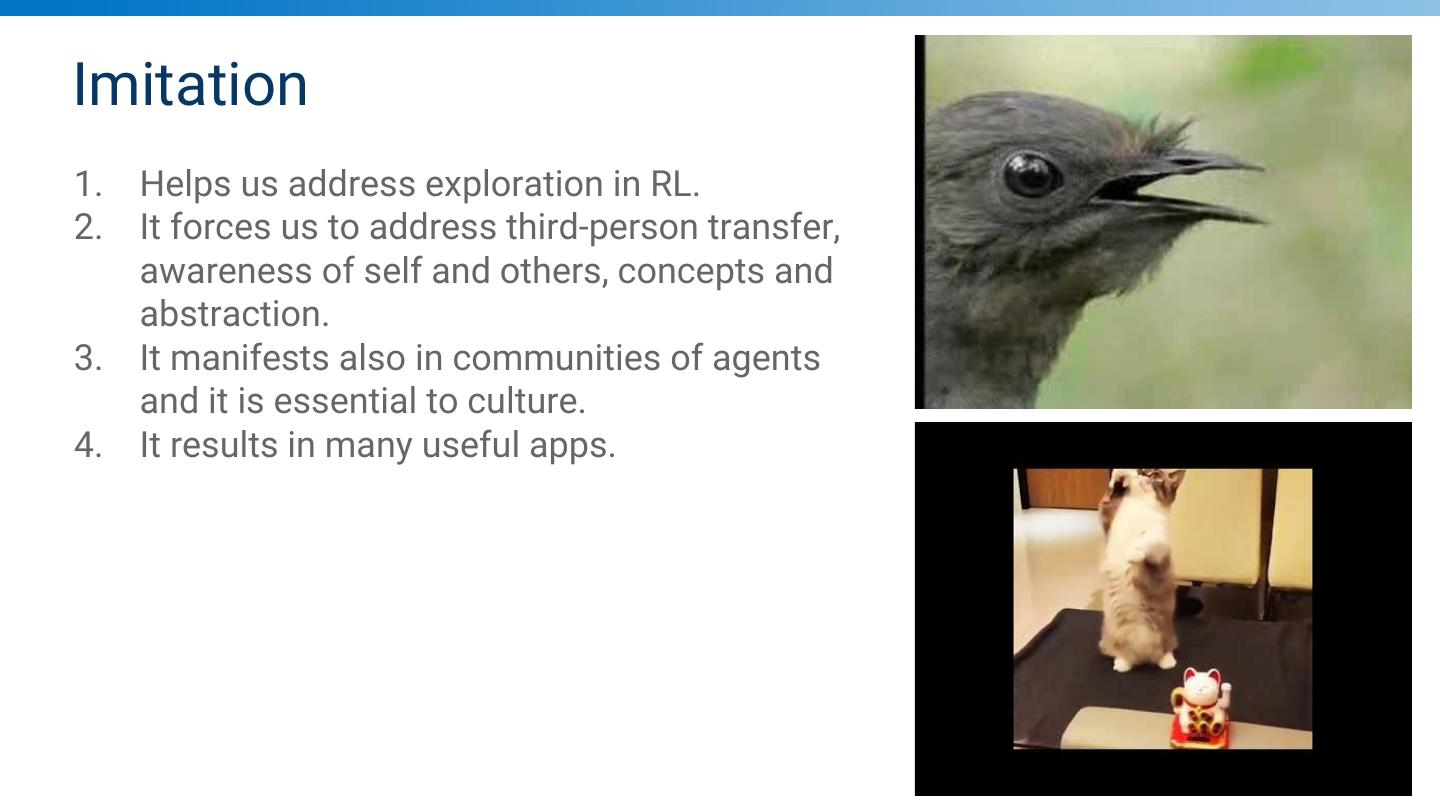

18 . Deep learning research is like playing with Lego. Not like this, but rather like this. Combinatorial re-use is robust and amazing for creativity. General Artificial Intelligence.

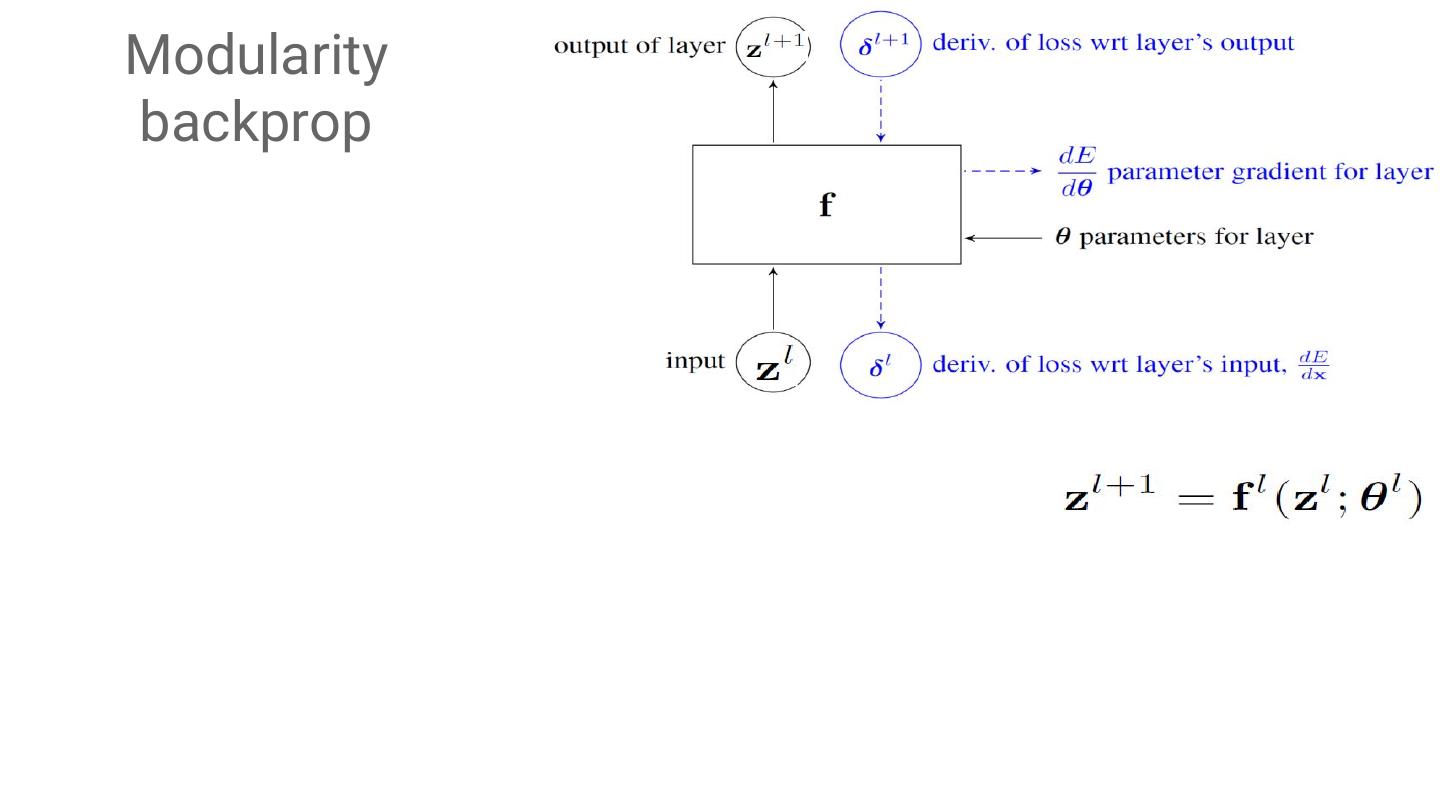

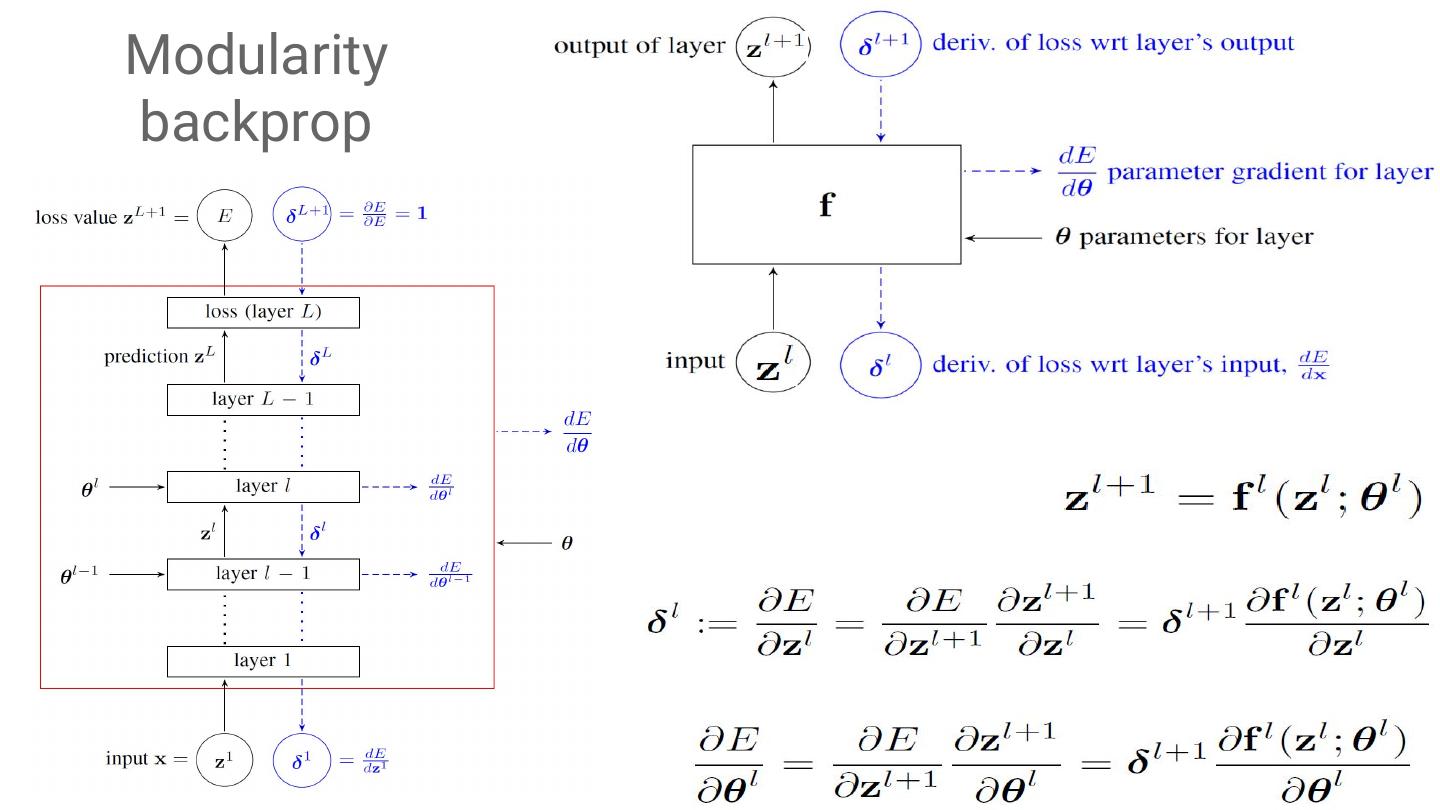

19 .Modularity backprop

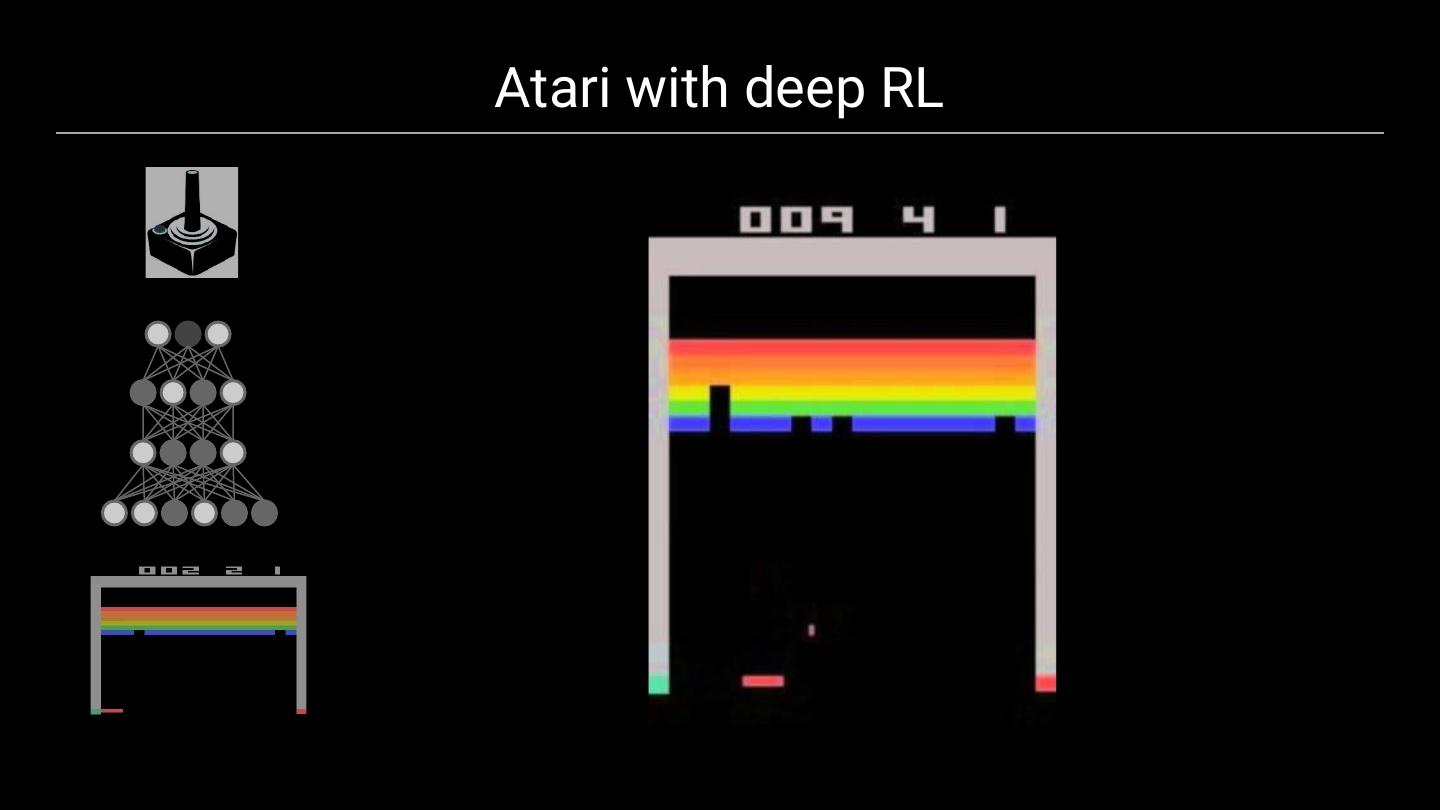

20 .Modularity backprop

21 .Modularity backprop

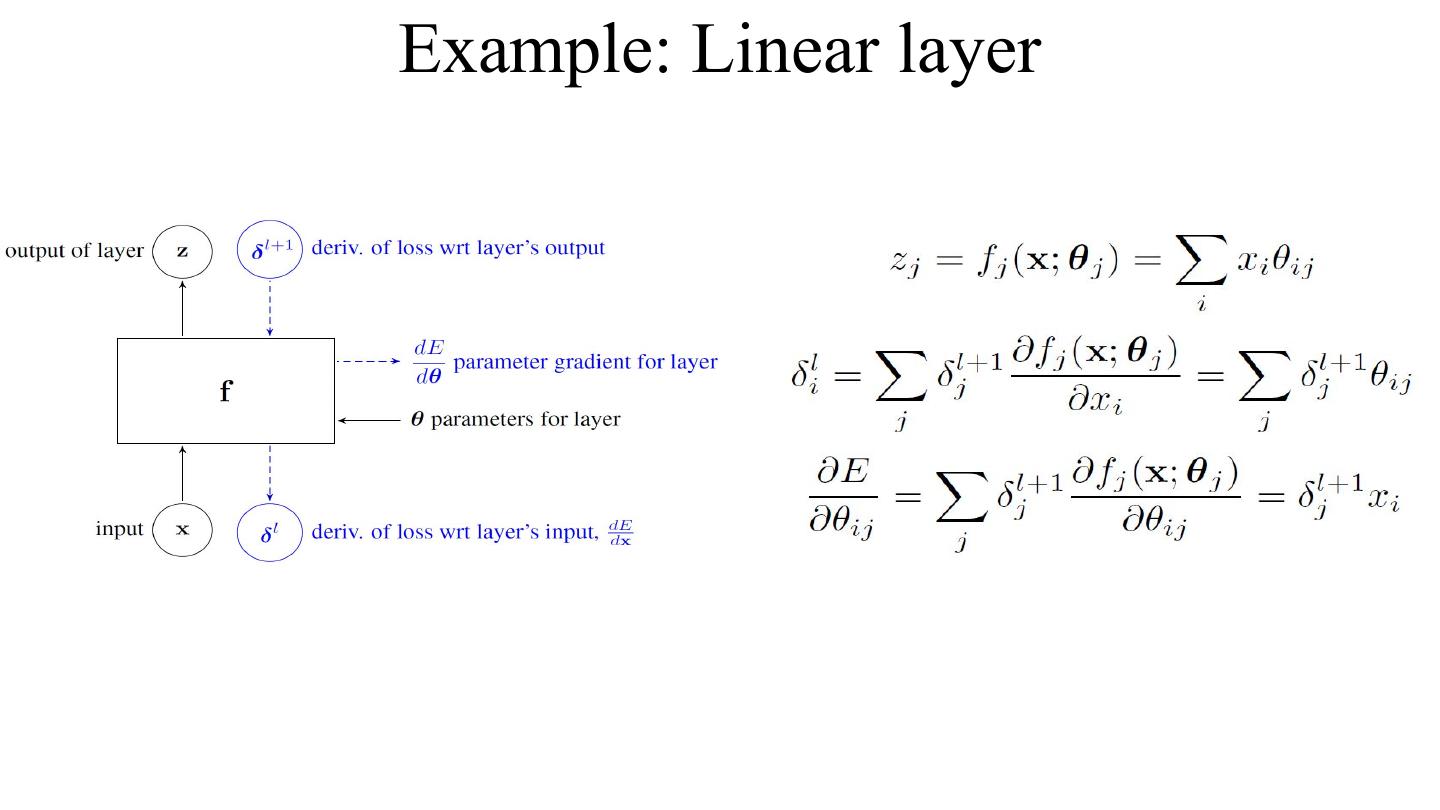

22 .Example: Linear layer

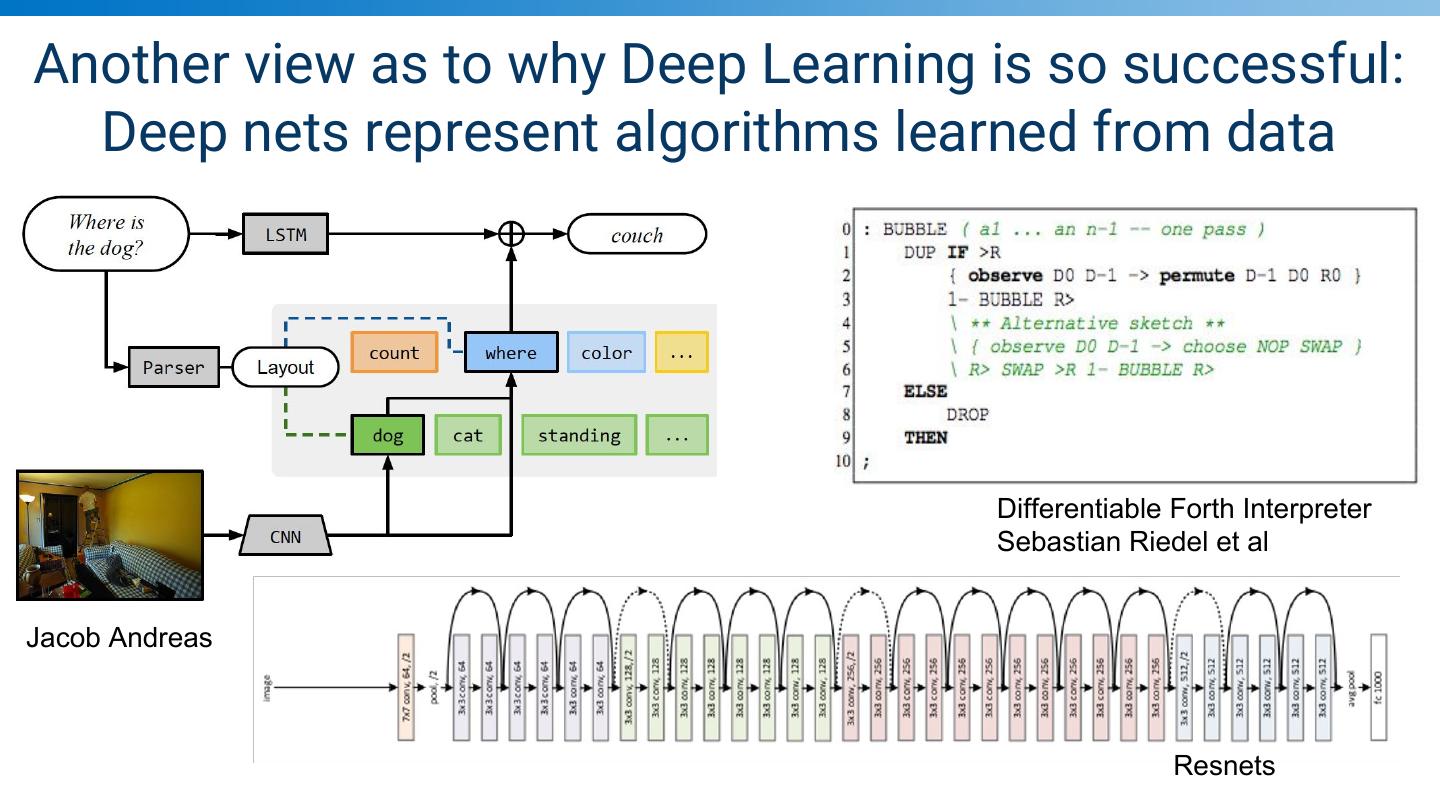
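To make the modular view concrete, here is a hedged sketch of a linear layer as a self-contained module with a forward pass and a backward pass. It assumes the layer computes y = W x + b and that backprop hands the module the gradient of the loss with respect to y; the class name `Linear` and the list-of-lists representation are choices made for this example, not taken from the slide.

```python
class Linear:
    """A linear layer y = W x + b as a module with forward and backward passes."""

    def __init__(self, weights, biases):
        self.weights = weights  # n_out rows, each with n_in entries
        self.biases = biases    # n_out entries
        self.x = None           # input cached by forward() for use in backward()

    def forward(self, x):
        self.x = x
        return [
            sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(self.weights, self.biases)
        ]

    def backward(self, grad_y):
        """Given dL/dy, return (dL/dx, dL/dW, dL/db)."""
        grad_w = [[g * xi for xi in self.x] for g in grad_y]  # outer product
        grad_b = list(grad_y)
        grad_x = [
            sum(self.weights[o][i] * grad_y[o] for o in range(len(grad_y)))
            for i in range(len(self.x))
        ]  # W transposed times grad_y
        return grad_x, grad_w, grad_b


layer = Linear(weights=[[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]],
               biases=[0.5, -0.5, 0.0])
y = layer.forward([1.0, -1.0])
grad_x, grad_w, grad_b = layer.backward([1.0, 1.0, 1.0])
print(y)       # [-0.5, -1.5, -1.0]
print(grad_x)  # [9.0, 12.0]
```

The module only needs its own input and the gradient arriving from the layer above it, which is what lets layers be stacked and recombined freely.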

23 . Another view as to why deep learning is so successful: deep nets represent algorithms learned from data. Examples on the slide: Differentiable Forth Interpreter (Sebastian Riedel et al.), Jacob Andreas, ResNets.

24 . Neural Programmer-Interpreters (NPI)

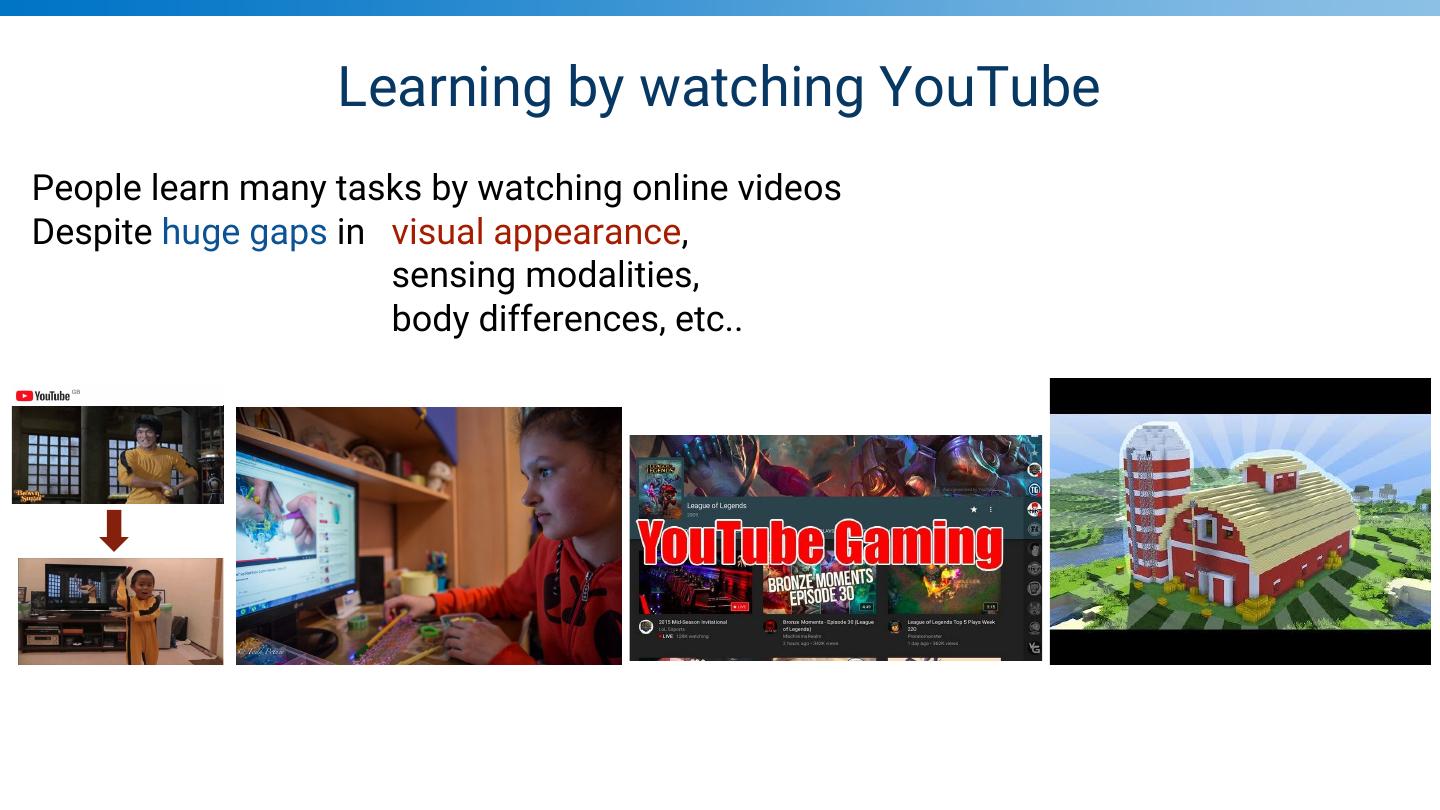

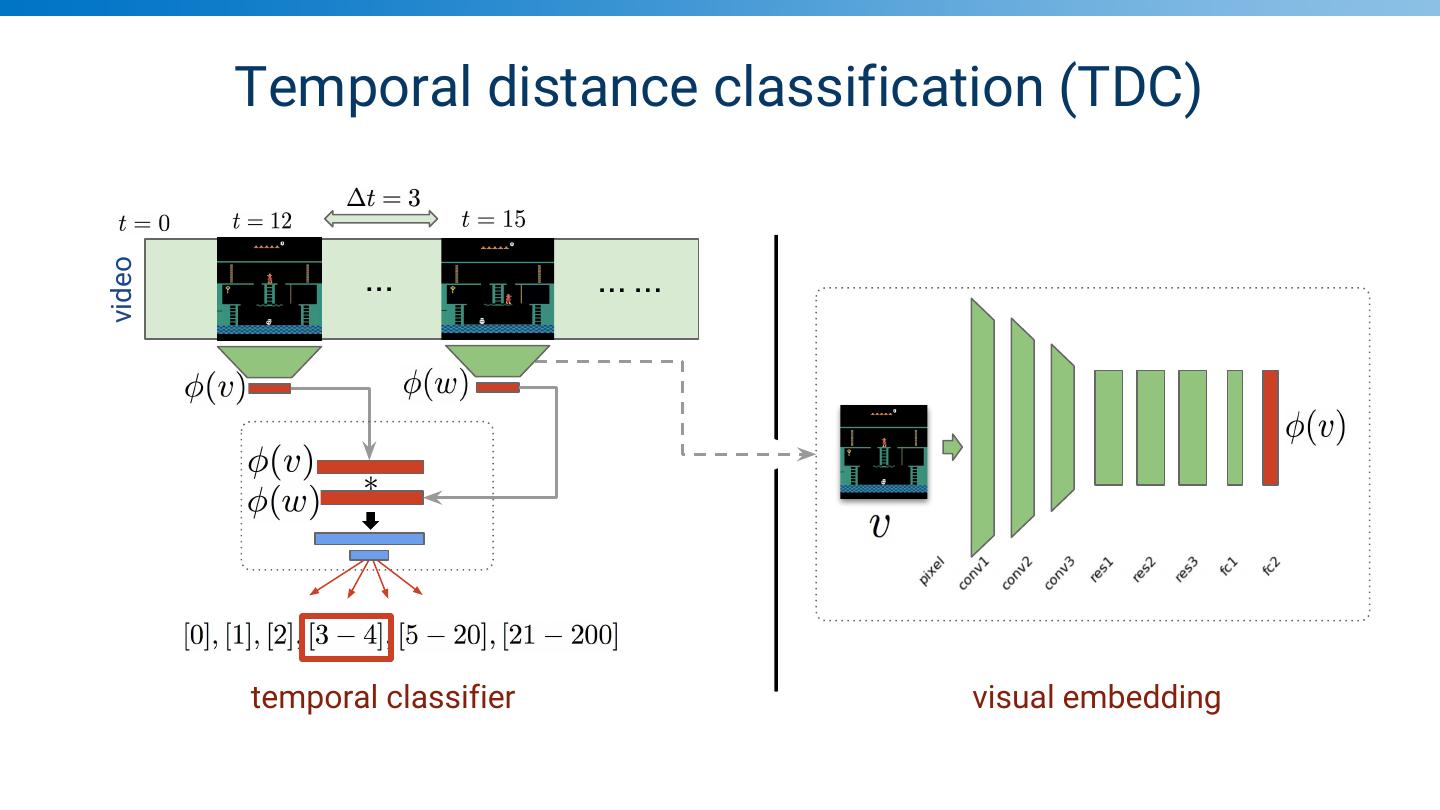

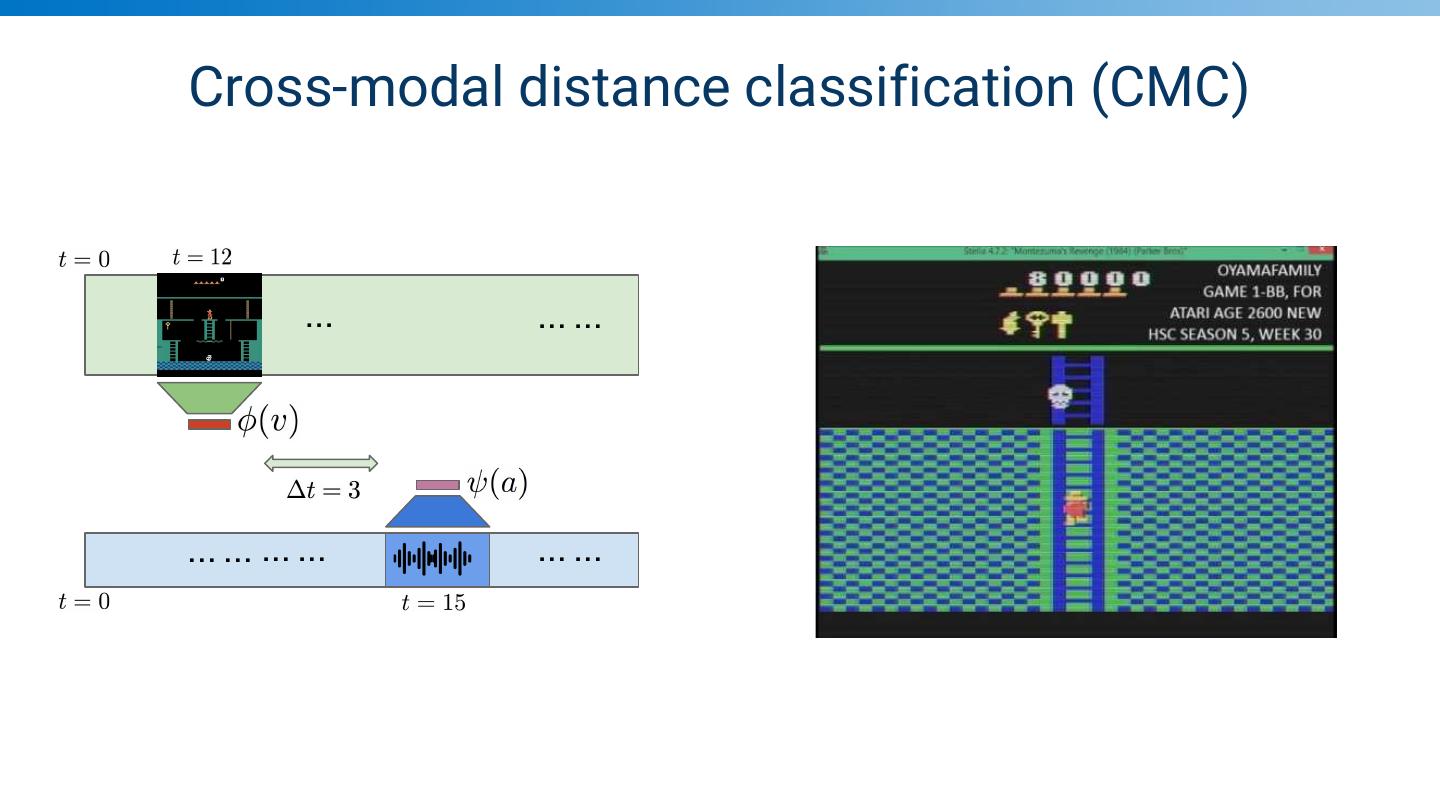

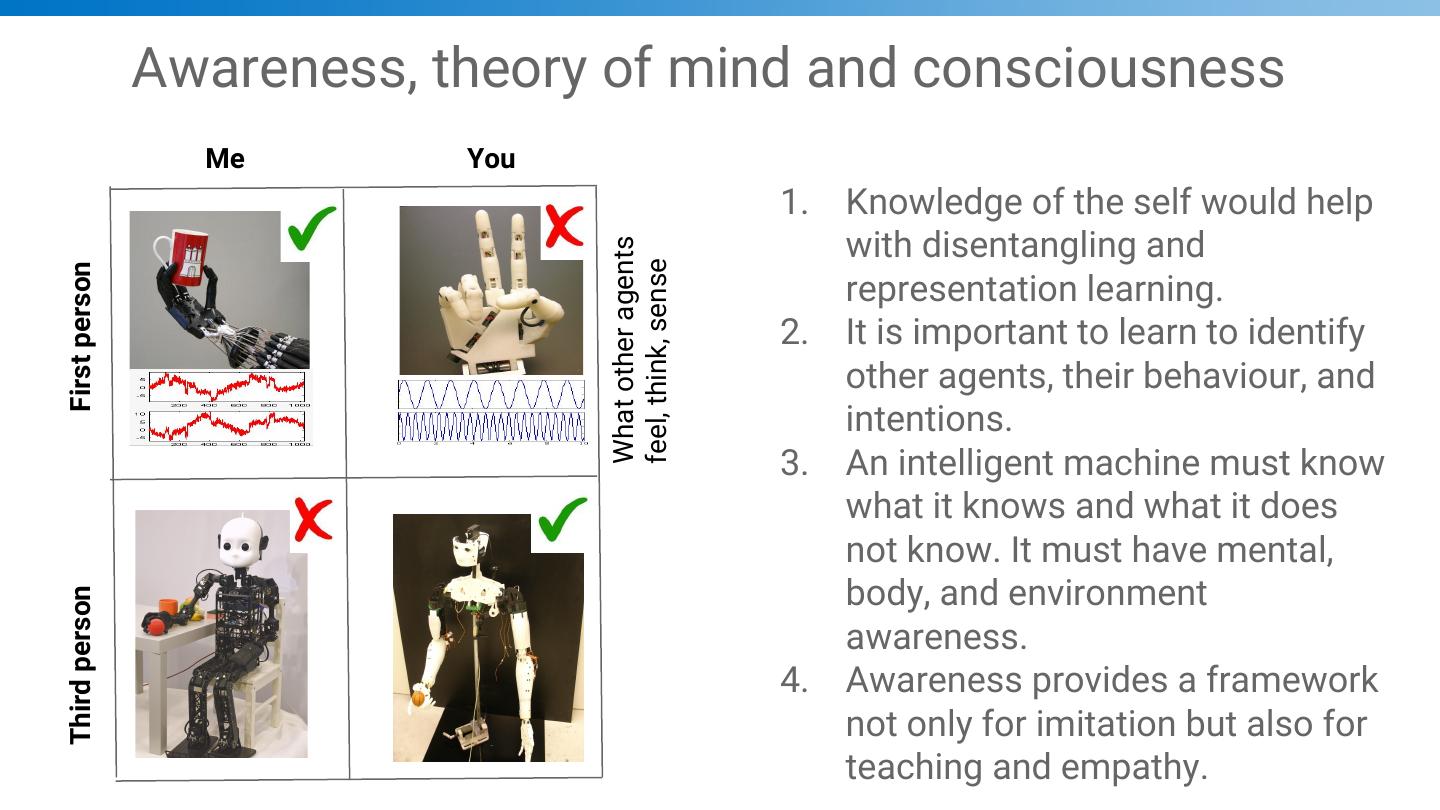

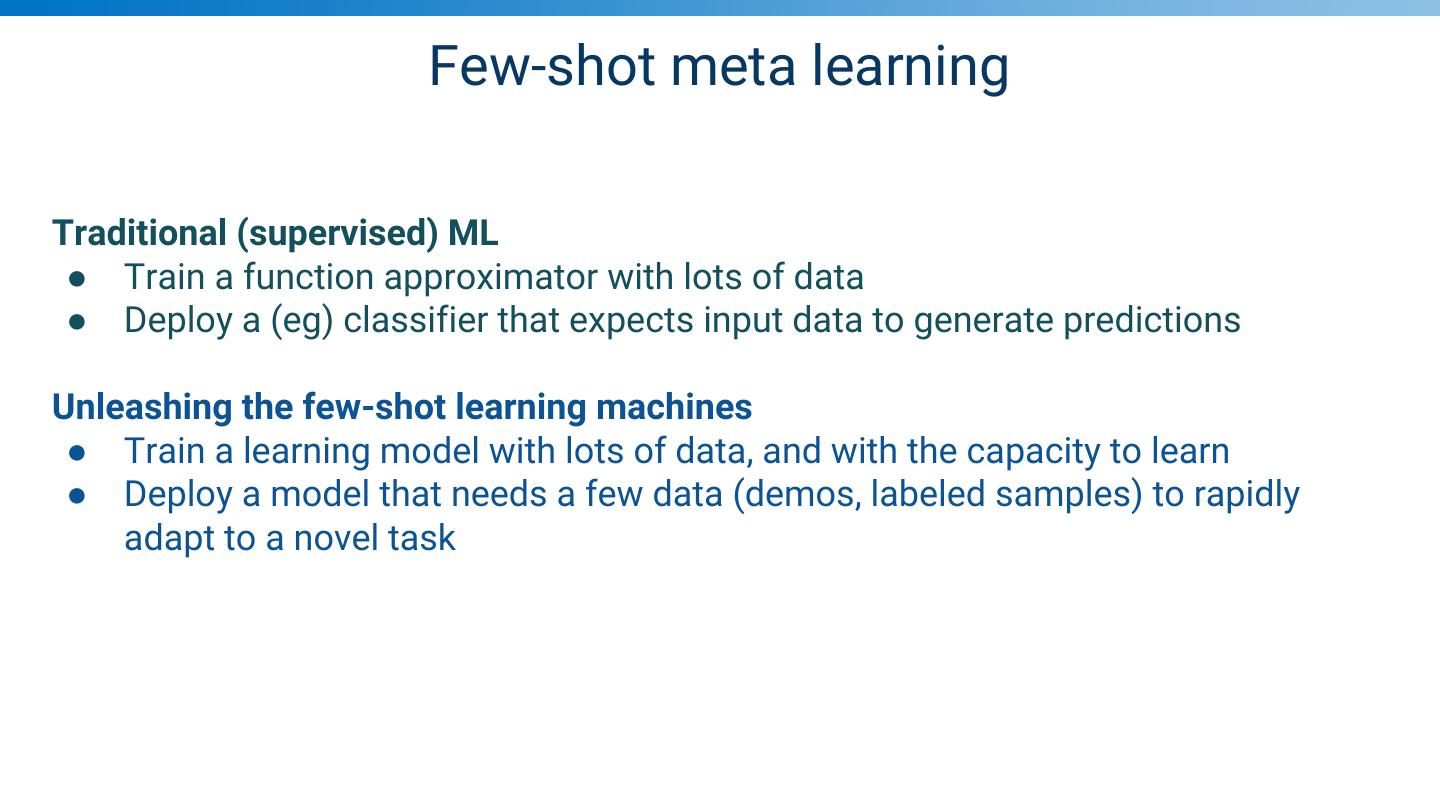

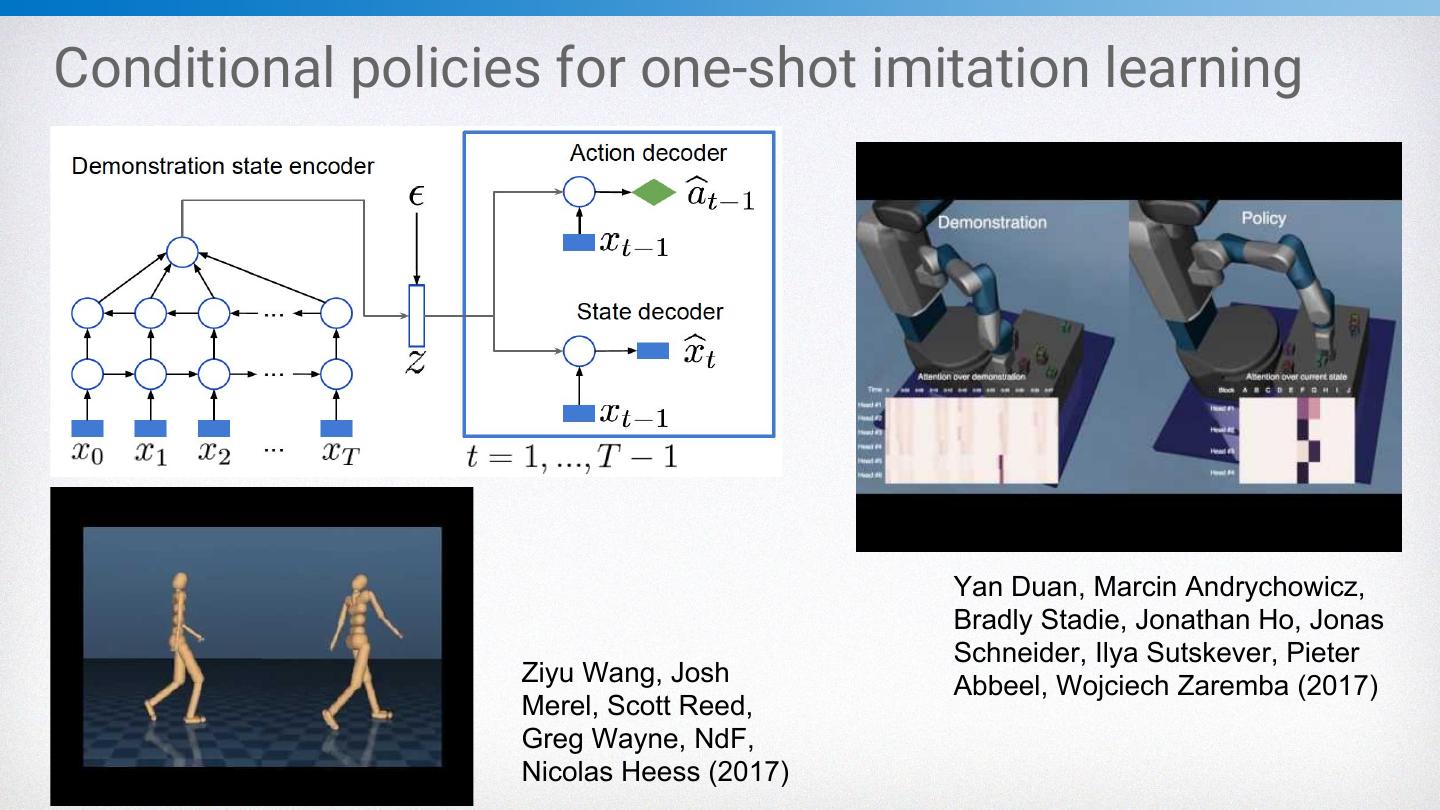

25 . Frontiers that excite me

1. Reinforcement learning
2. Meta learning
3. Imitation
4. Robotics
5. Concepts and abstraction
6. Awareness and consciousness
7. Causal reasoning

26 . Reinforcement learning framework. [Diagram: an Agent with a GOAL sends ACTIONS to the Environment and receives OBSERVATIONS back.]
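The agent-environment loop in this diagram can be sketched as follows. Everything here is a hypothetical toy: the corridor environment, the random agent, and the reward of 1.0 for reaching the goal are assumptions made to show the shape of the interaction, not anything presented in the talk.

```python
import random


class CorridorEnv:
    """Hypothetical toy environment: reach the right-hand end of a corridor."""

    def __init__(self, length=5):
        self.length = length
        self.position = 0

    def reset(self):
        self.position = 0
        return self.position  # the observation is the current position

    def step(self, action):
        """Apply an action (-1 or +1); return (observation, reward, done)."""
        self.position = min(self.length, max(0, self.position + action))
        done = self.position == self.length
        reward = 1.0 if done else 0.0  # the goal is encoded as a reward signal
        return self.position, reward, done


class RandomAgent:
    """Ignores the observation and picks a direction at random."""

    def __init__(self, seed=0):
        self.rng = random.Random(seed)

    def act(self, observation):
        return self.rng.choice([-1, 1])


def run_episode(env, agent, max_steps=1000):
    observation = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = agent.act(observation)               # agent -> environment
        observation, reward, done = env.step(action)  # environment -> agent
        total_reward += reward
        if done:
            break
    return total_reward


total = run_episode(CorridorEnv(), RandomAgent())
print(total)
```

A learning agent would replace `RandomAgent.act` with a policy that is updated from the observed rewards; the loop itself stays the same.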

27 .Atari with deep RL

28 .

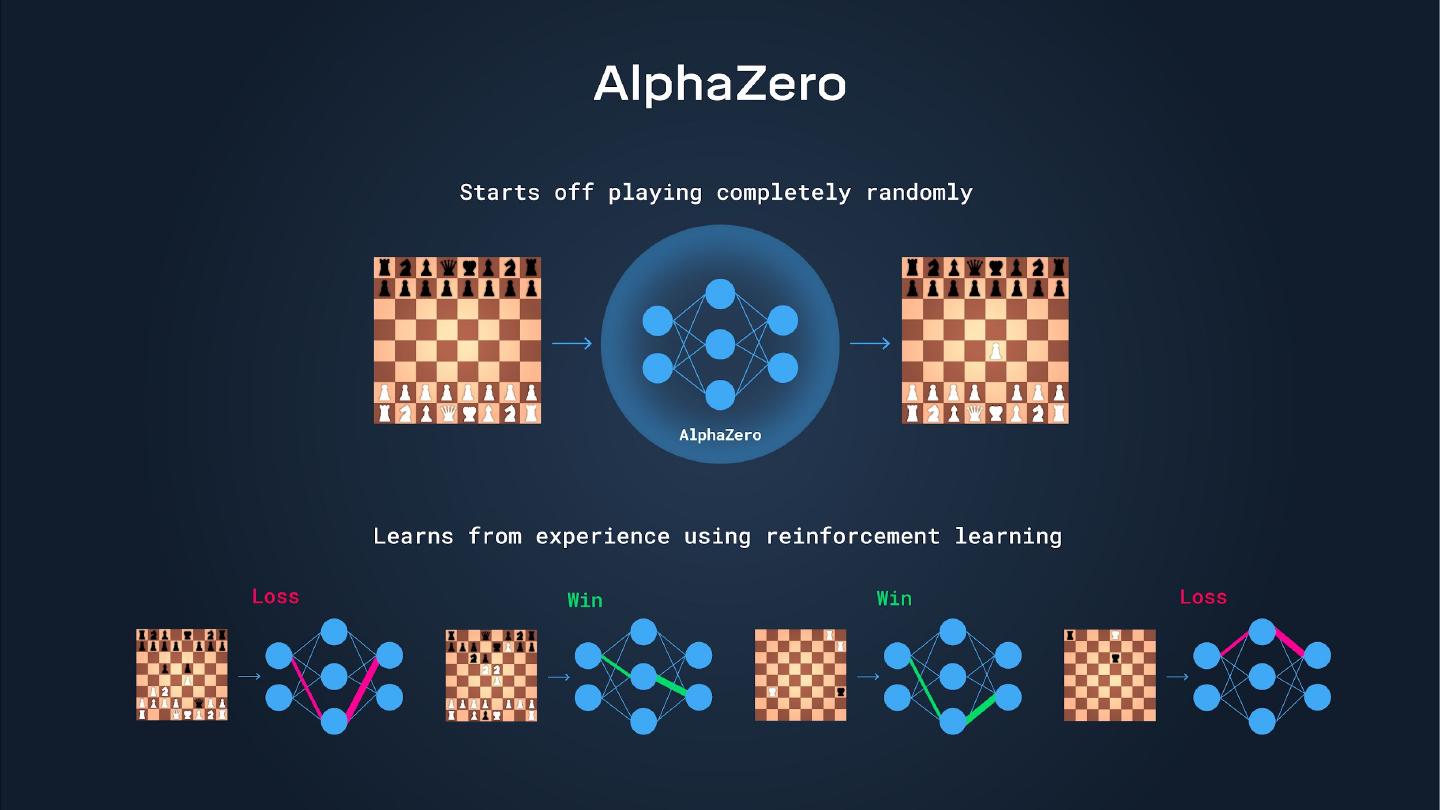

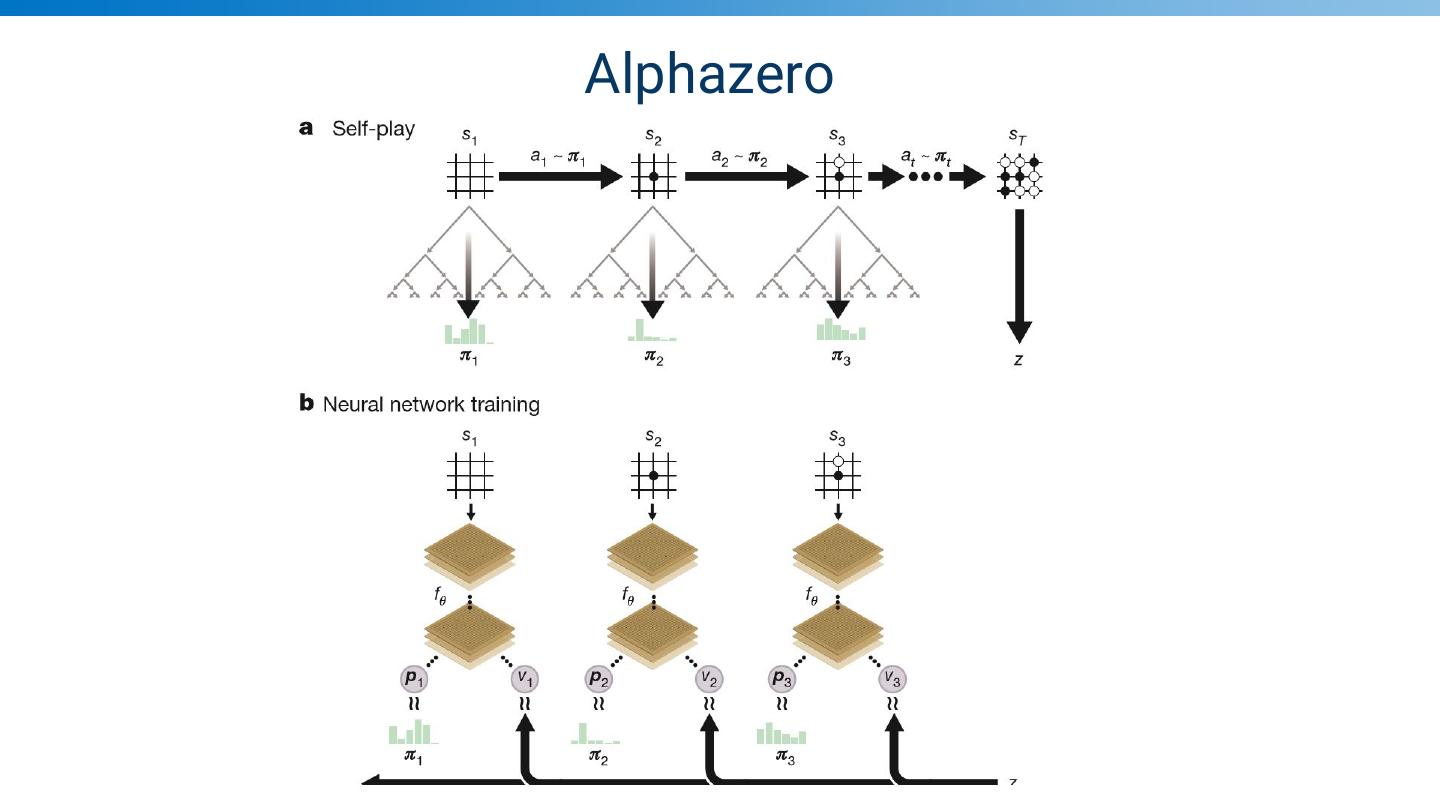

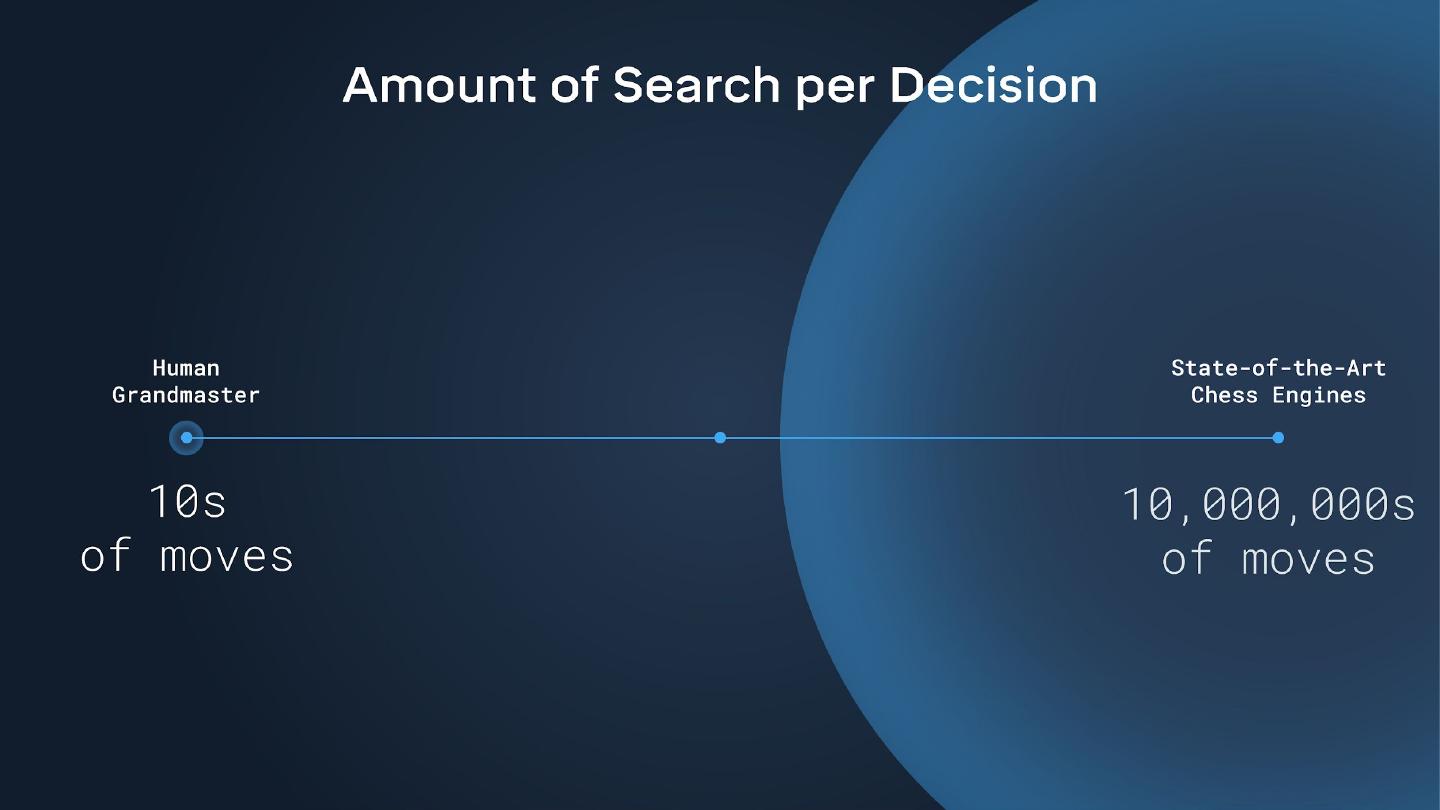

29 . AlphaZero