- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

Introduction to Neural Networks

展开查看详情

1 .

2 .

3 .

4 .

5 .

6 .

7 .

8 .

9 .

10 .

11 .

12 .

13 .

14 .

15 .What is the simplest thing that isn’t linear? We have tried fitting linear functions. What about iterating linear functions?

16 .ReLU Very fast computation. Most common activation function. The resulting functions N(x) are piecewise linear. Not actually differentiable everywhere, but this is okay. More of a problem is when the derivative is zero, “dead ReLU” problem But very unlikely for a ReLU to be “dead” for all inputs.

17 .Mean squared error Often used if the network is predicting a scalar.

18 .Neural responses to inputs Guang, Armstrong, Foehring, 2015

19 .Neural responses to inputs Guang, Armstrong, Foehring, 2015

20 .So then what does a neuron do? Poirazi Brennon Mel, 2003

21 .So then what does a neuron do? Poirazi Brennon Mel, 2003

22 .A bit like a biological neuron Neurons have one output They linearly integrate on their dendrites They have a ReLU-like nonlinearity And they are hierarchically organized

23 .Do perceptrons actually work? Not really, but sometimes surprisingly well. It’s a good first cut. Very rarely are data actually linearly separable.

24 .Linear integration within dendritic trees

25 .What if a deep network were actually linear? What if the activation function is linear? The overall network would be a linear transformation. But any learning algorithms might give different results. Also, the resulting weight matrices would be factored into different layers. Used sometimes as a simple case to prove results, and in some neuro models. Various authors, esp. Andrew Saxe, have considered these networks, but not really used in practice.

26 .logistic sigmoid Similar to tanh, but asymptotes to 0 and 1, instead of -1 and 1. Like tanh, used rarely nowadays.

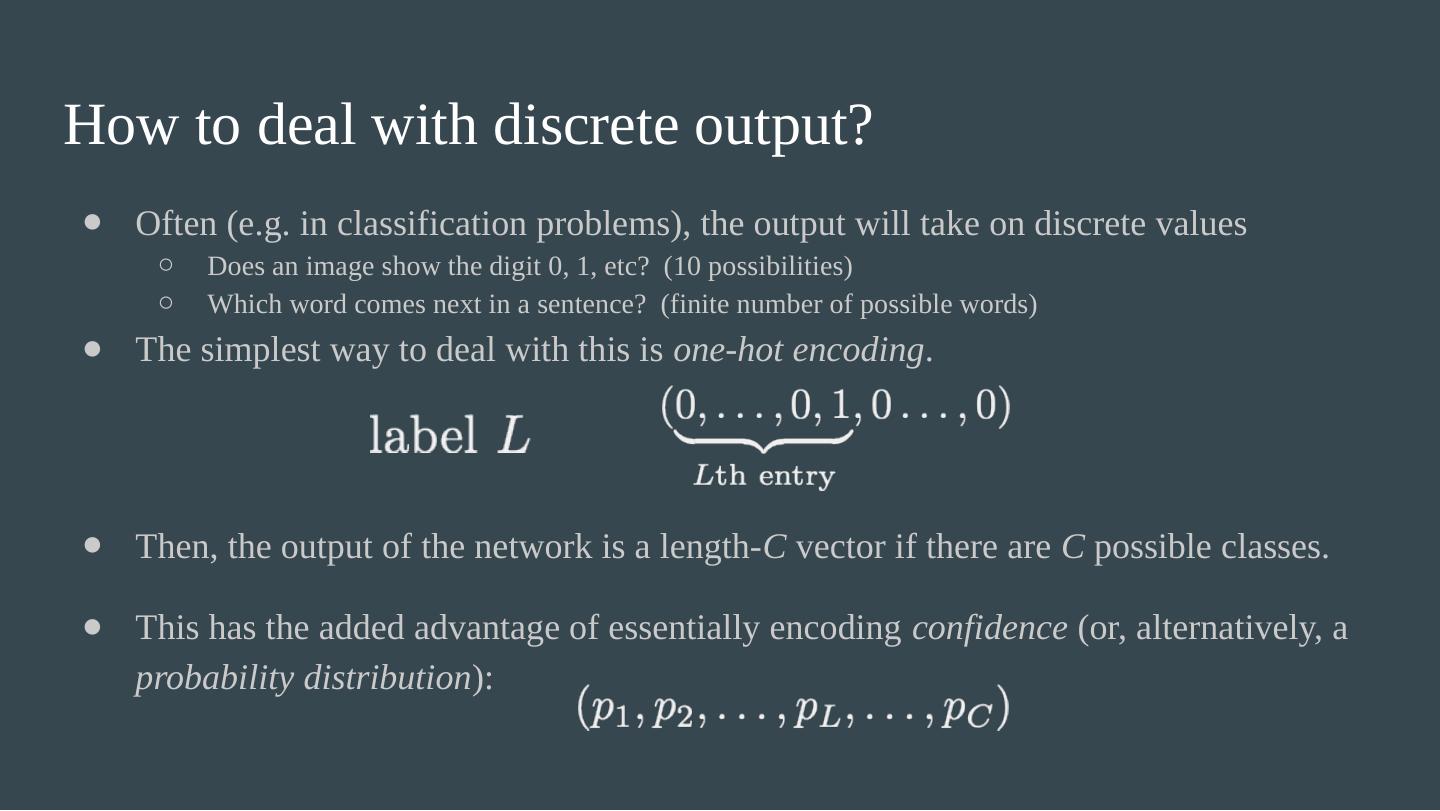

27 .How to deal with discrete output? A disadvantage of one-hot encoding is if there are a lot of possibilities. It also doesn’t capture information about structure in the outputs. In natural language processing (NLP), often Word2Vec representations are used instead (will be covered more later).

28 .A first cut at learning - linear functions The easiest way to fit data: Mean squared error (MSE) - a natural loss function: