History of Deep Learning

14. Key questions in this course

- How do we decide which problems to tackle with deep learning?
- Given a problem setting, how do we determine which model is best?
- What's the best way to implement that model?
- How can we best visualize, explain, and justify our findings?
- How can neuroscience inspire deep learning?

15. Looking forward

- No class on Monday (MLK Day)
- On Wednesday: Introduction to PyTorch
- HW 0: due on 1/30

16. Solution: the GPU

17. More mapping

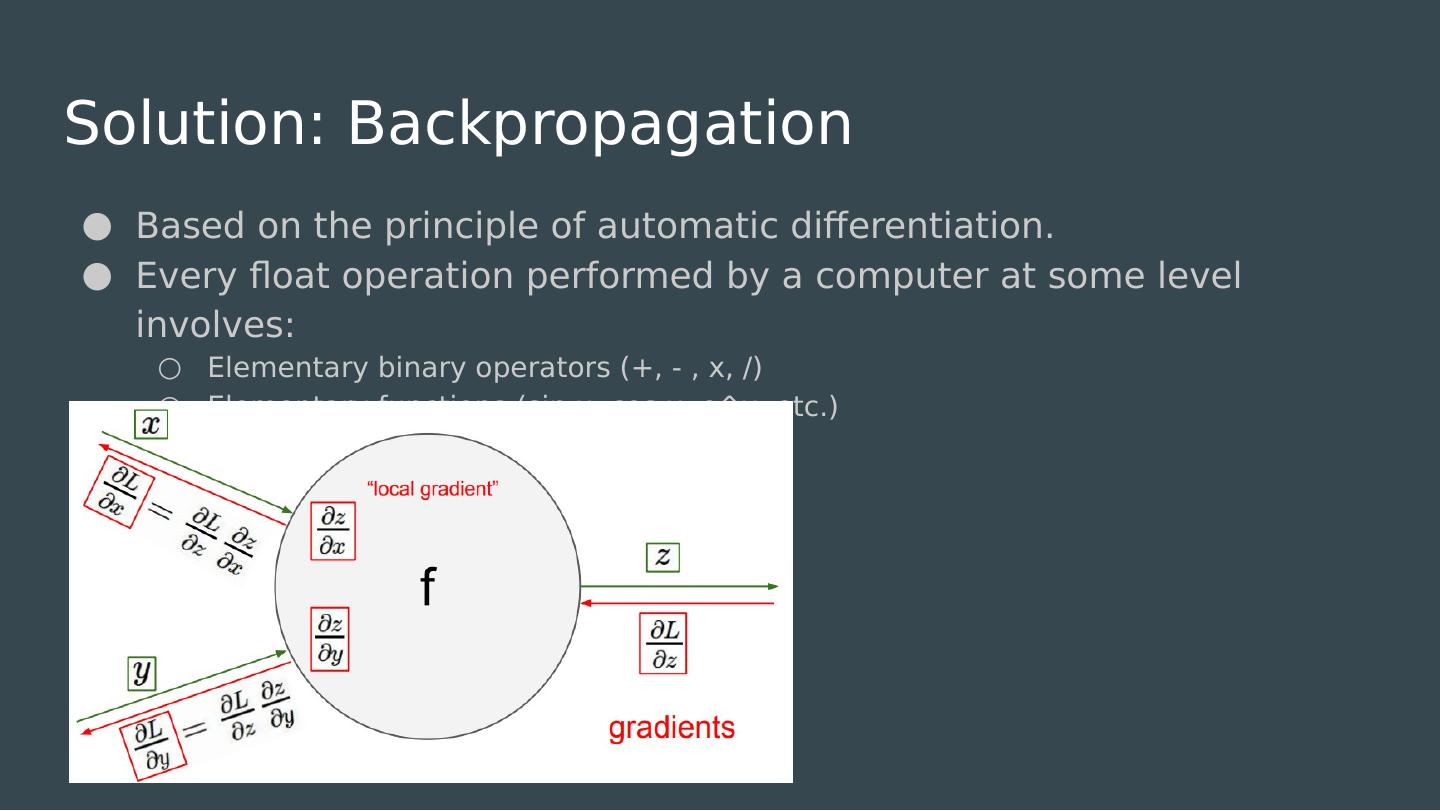

18. As of 1996, there were 2 huge problems with neural nets.

- They couldn't solve any problem that wasn't linearly separable.
  - Solved by backpropagation and depth.
- Backpropagation takes forever to converge!
  - Not enough compute power to run the model
  - Not enough labeled data to train the neural net

19. Neurons are polarized

20. Rosenblatt's perceptron

21. As of 1970, there was a huge problem with neural nets.

- They couldn't solve any problem that wasn't linearly separable.
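
The linear-separability limit can be seen with a small experiment. The following is a minimal sketch, not taken from the slides: it trains a single threshold unit with the classic perceptron update rule on AND (linearly separable) and on XOR (not linearly separable). The function names, learning rate, and epoch count are illustrative assumptions.

```python
# Minimal single-unit perceptron (illustrative sketch; names and
# hyperparameters are assumptions, not from the course materials).

def predict(weights, bias, x):
    """Threshold unit: output 1 if the weighted sum is positive, else 0."""
    activation = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1 if activation > 0 else 0


def train_perceptron(samples, epochs=25, lr=1.0):
    """Apply the perceptron update rule for a fixed number of epochs."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(weights, bias, x)
            # Weights move only when the prediction is wrong (error != 0).
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


def accuracy(samples, weights, bias):
    """Fraction of samples the trained unit classifies correctly."""
    correct = sum(predict(weights, bias, x) == t for x, t in samples)
    return correct / len(samples)


AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

and_acc = accuracy(AND_DATA, *train_perceptron(AND_DATA))
xor_acc = accuracy(XOR_DATA, *train_perceptron(XOR_DATA))

print("AND accuracy:", and_acc)
print("XOR accuracy:", xor_acc)
```

On AND the unit reaches perfect accuracy, while on XOR no choice of weights and bias can classify all four points, so the accuracy stays below 1 no matter how long training runs.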

22. Course materials

- Website (cis700dl.com)
- Piazza
- Canvas

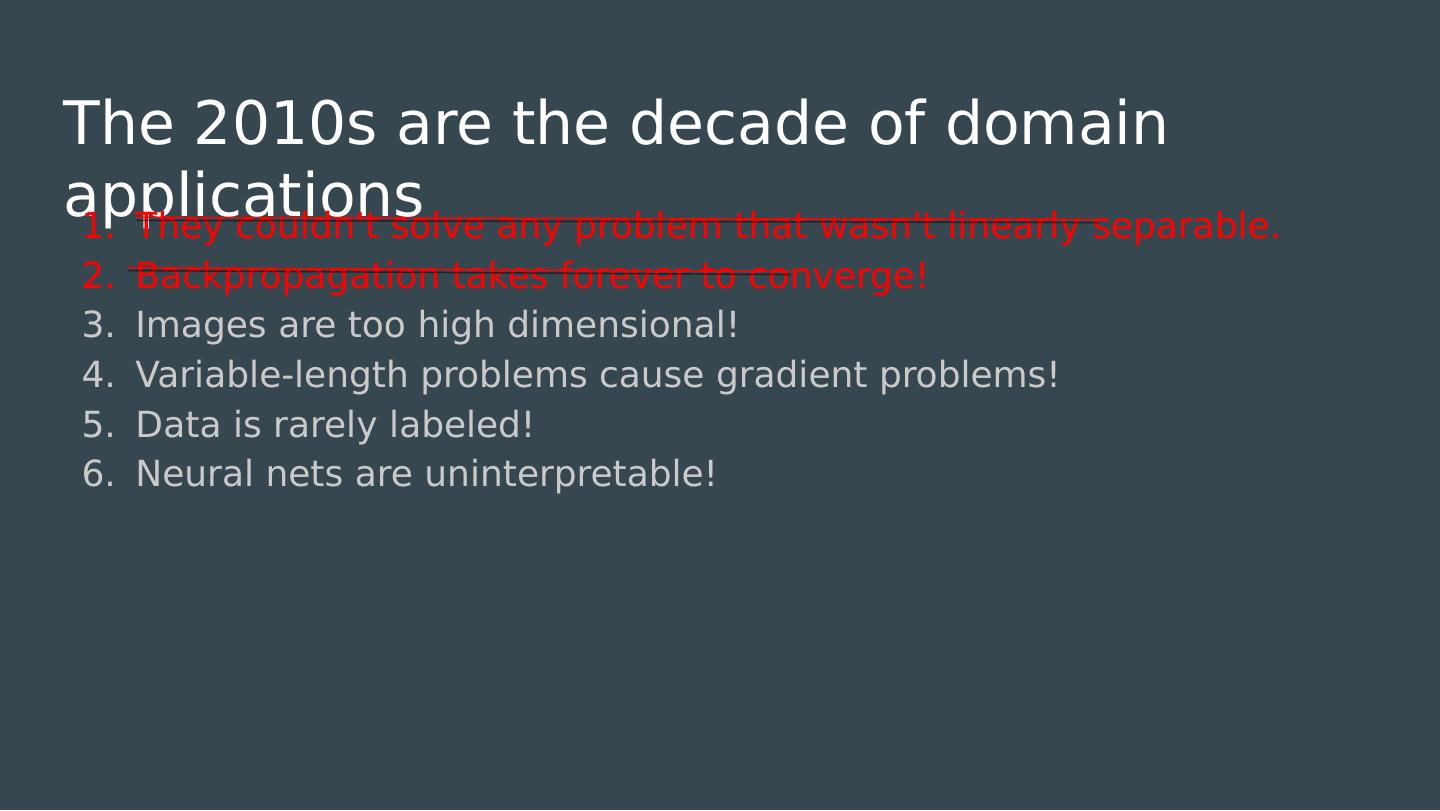

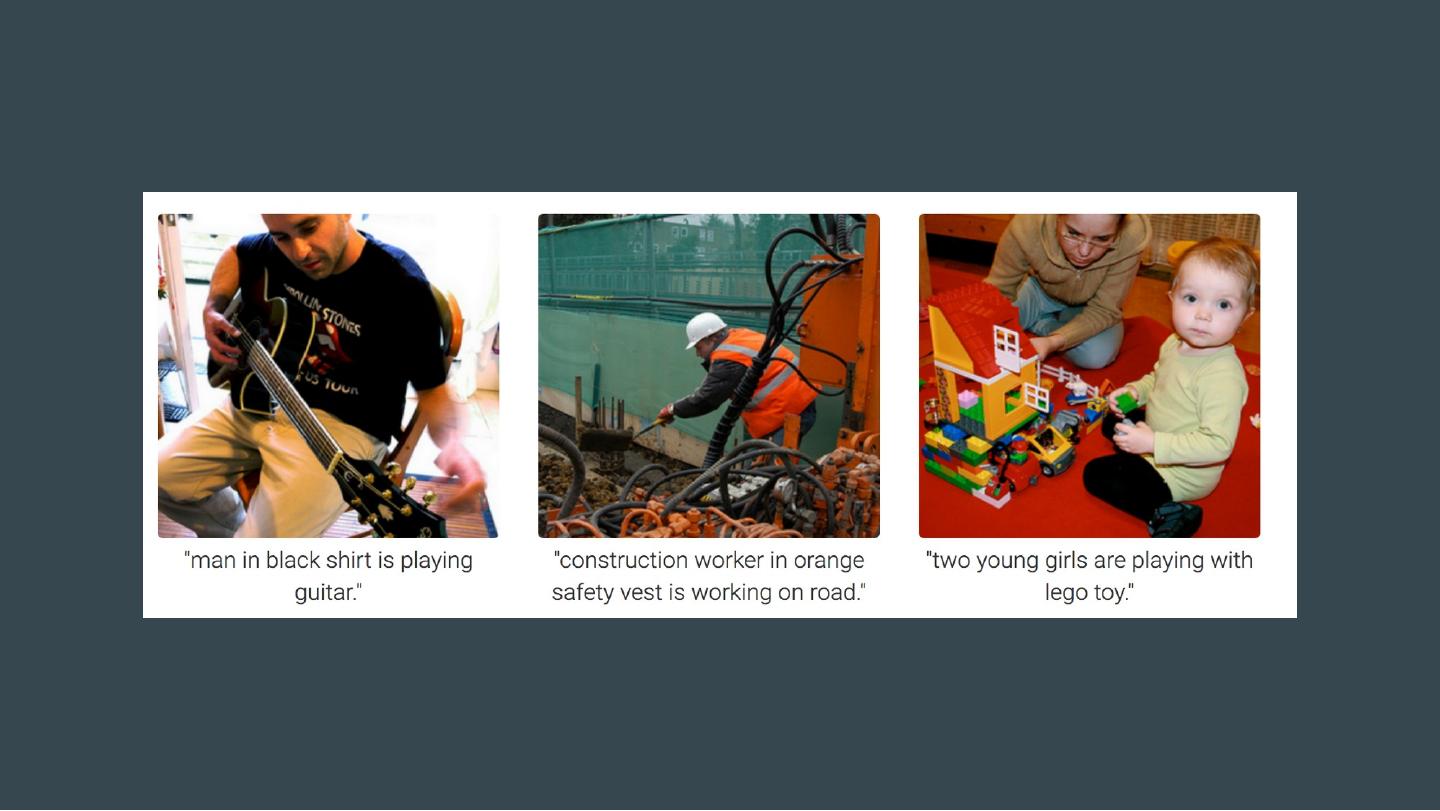

23. The 2010s are the decade of domain applications

- Neural nets couldn't solve any problem that wasn't linearly separable.
- Backpropagation takes forever to converge!
- Images are too high-dimensional!
  - Convolutions reduce the number of learned weights via a prior.
  - Encoders learn better representations of data.
- Variable-length problems cause gradient problems!
  - Solved by the forget gate.
- Data is rarely labeled!
  - Addressed by DQN, SOMs.
- Neural nets are uninterpretable!
  - Addressed by attention.
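
To make the point about convolutions reducing the number of learned weights concrete, here is a rough parameter count. It is a sketch under assumed sizes (a 32x32 RGB input mapped to 16 feature maps of the same spatial size, with a 3x3 kernel); none of these numbers come from the slides, and the helper names are hypothetical.

```python
# Parameter-count comparison (illustrative; layer sizes are assumptions).

def dense_params(n_in, n_out):
    """Fully connected layer: one weight per input-output pair, plus biases."""
    return n_in * n_out + n_out


def conv_params(kernel_size, channels_in, channels_out):
    """2D convolution: one small kernel per (in, out) channel pair, plus biases.

    The same kernel is reused at every spatial position, so the count
    does not depend on the image height or width.
    """
    return kernel_size * kernel_size * channels_in * channels_out + channels_out


height, width, channels_in, channels_out = 32, 32, 3, 16

n_in = height * width * channels_in    # flattened input size
n_out = height * width * channels_out  # flattened output size

dense_count = dense_params(n_in, n_out)
conv_count = conv_params(3, channels_in, channels_out)

print("dense layer parameters:", dense_count)
print("3x3 conv layer parameters:", conv_count)
```

The prior here is weight sharing plus locality: the convolution assumes that the same small pattern detector is useful everywhere in the image, which is why its parameter count is independent of image size.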

24. Big Data

- 2004: Google develops MapReduce
- 2011: Apache releases Hadoop
- 2012: Apache and Berkeley develop Spark