Convolutional Neural Nets

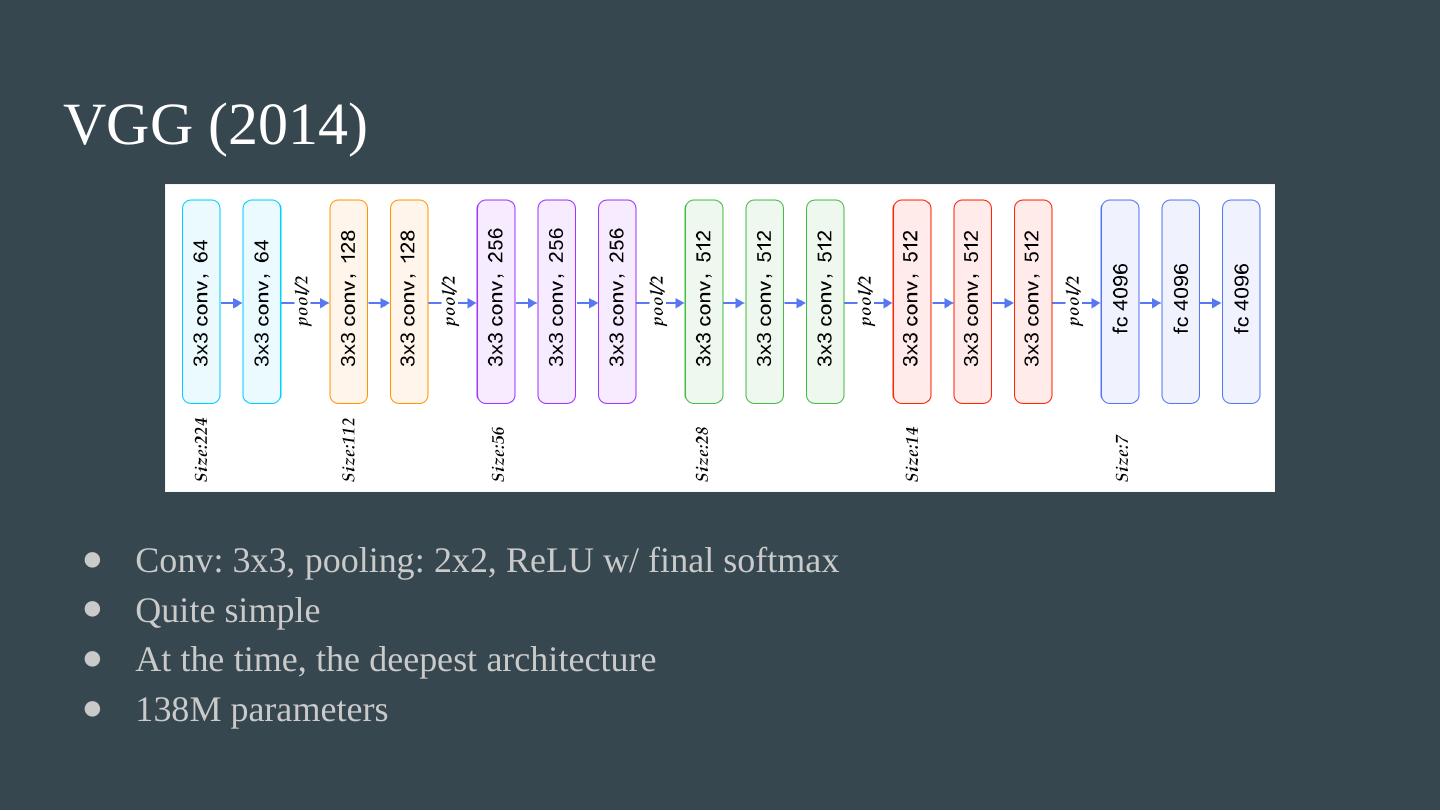

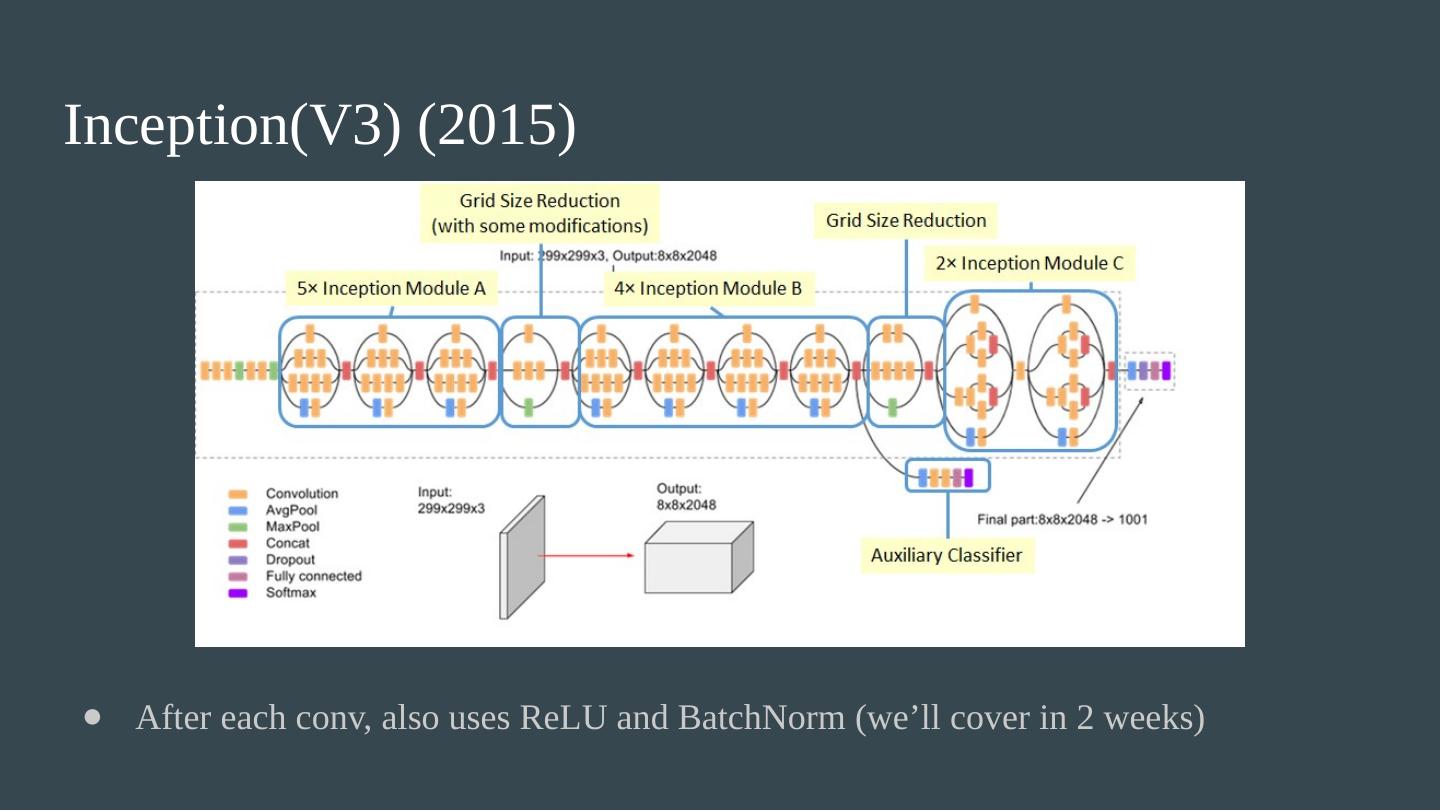

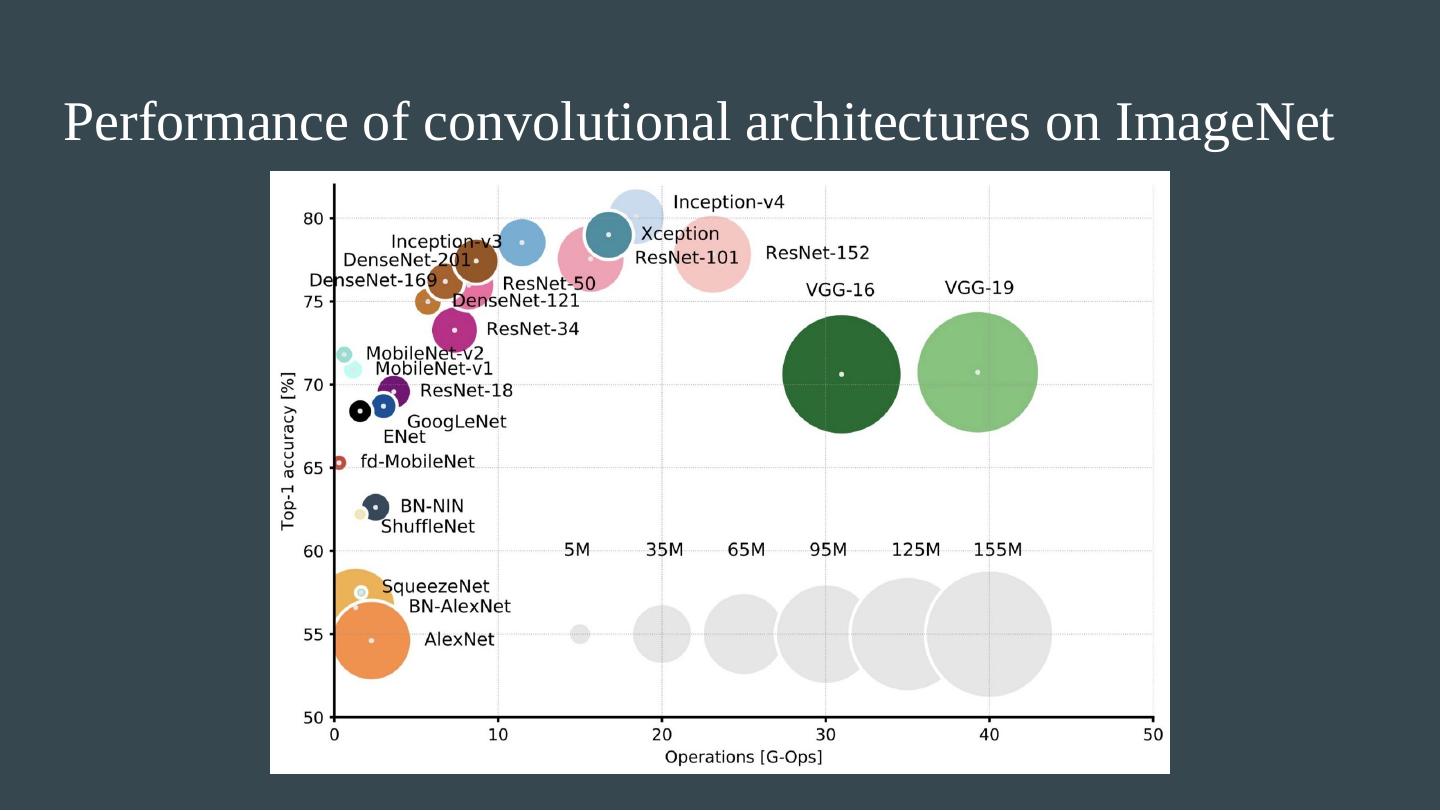

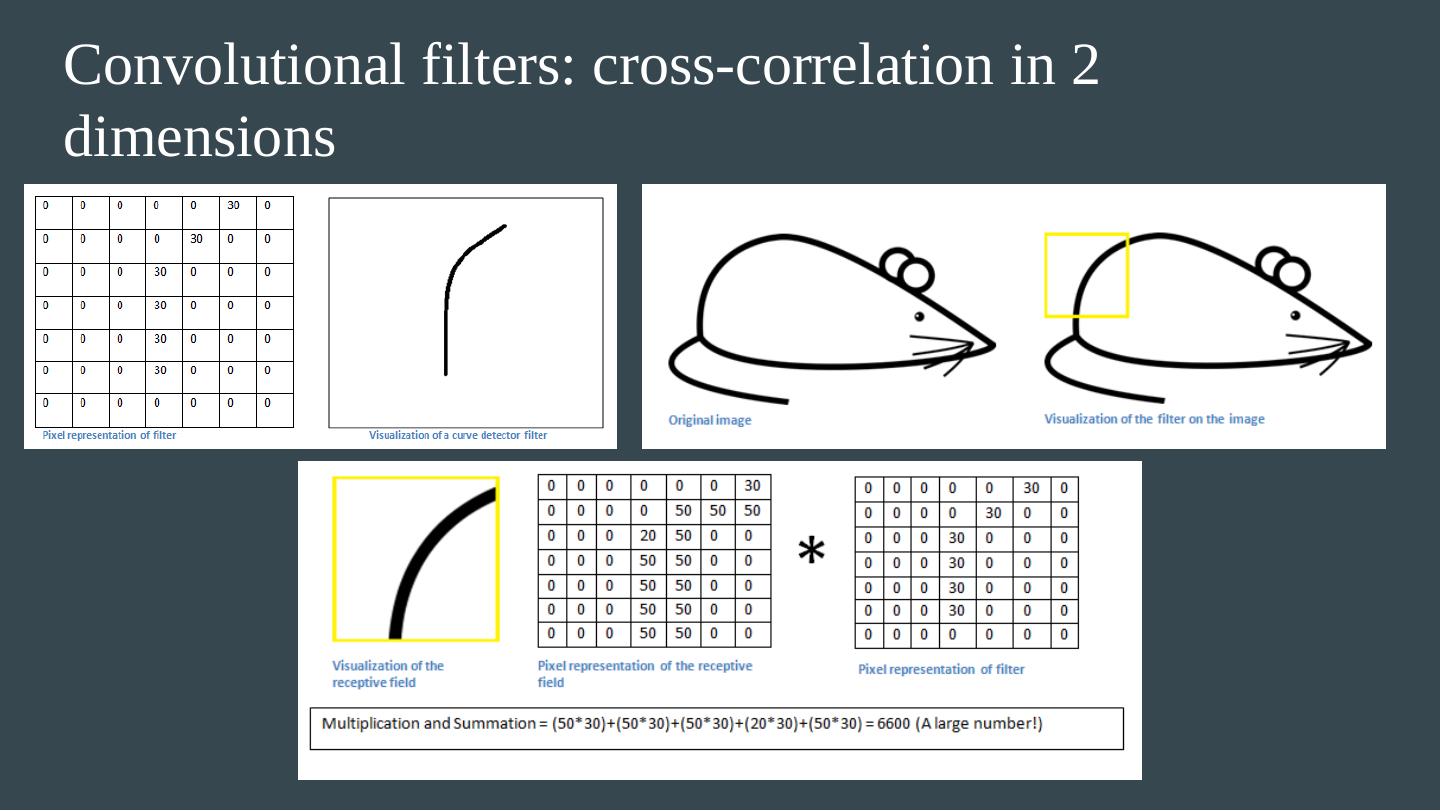

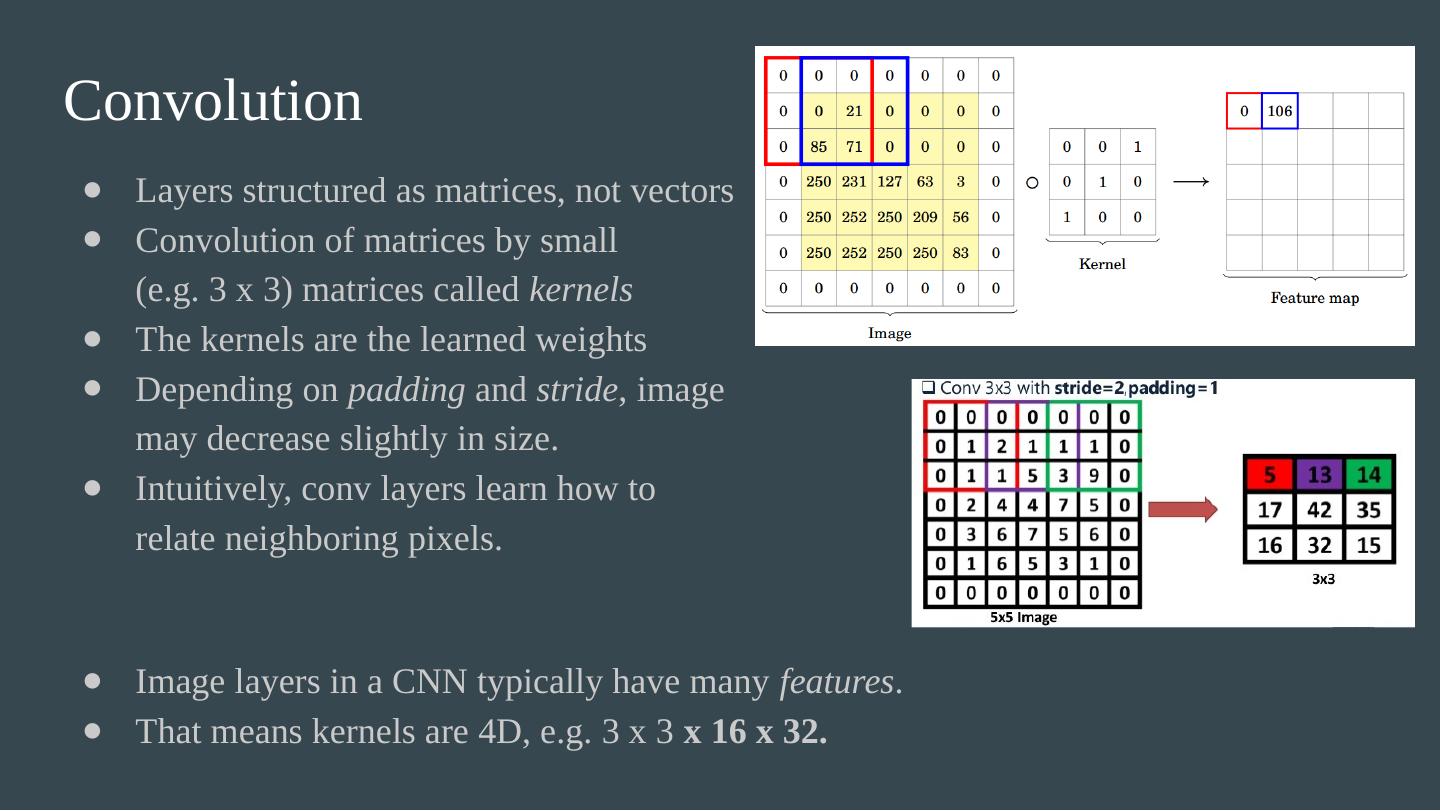

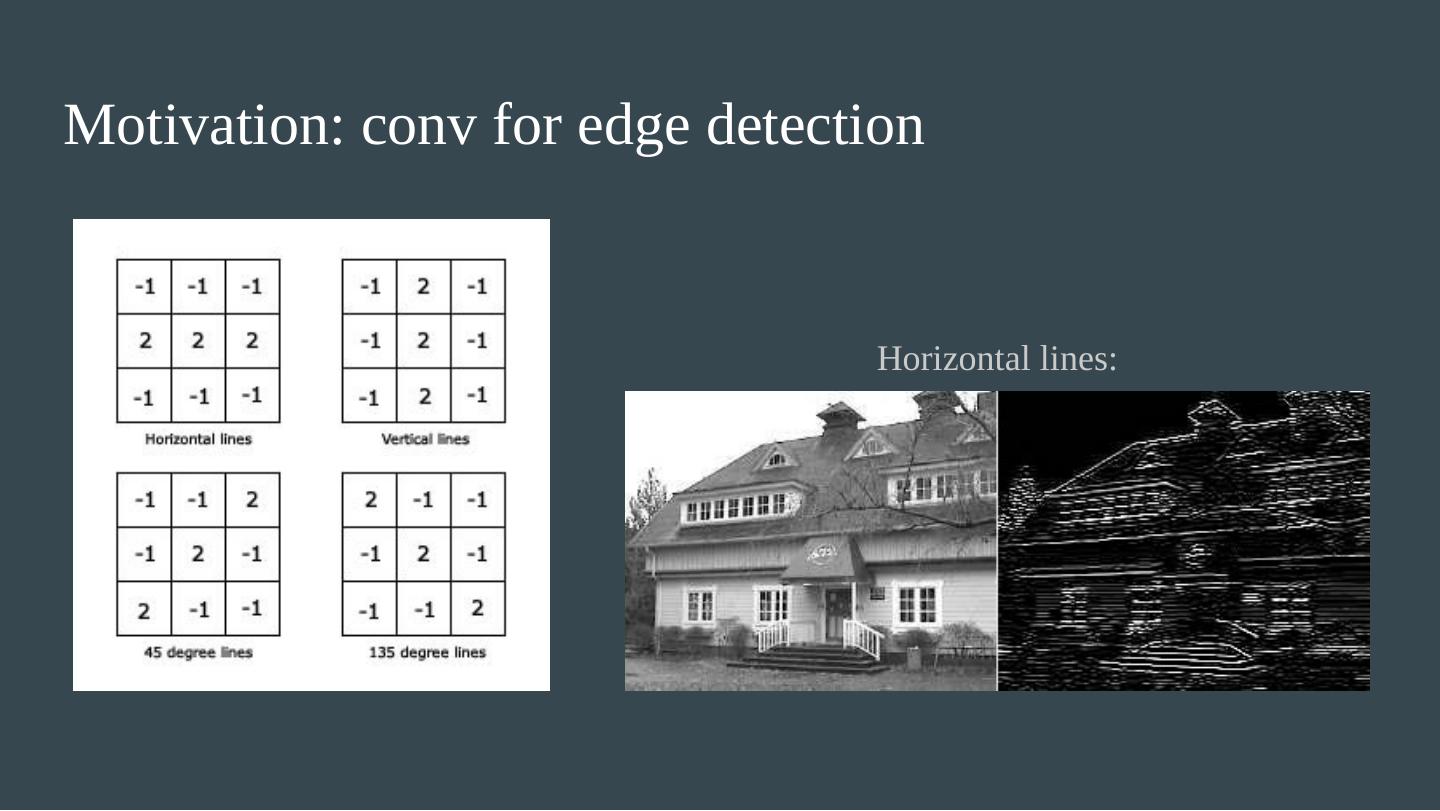

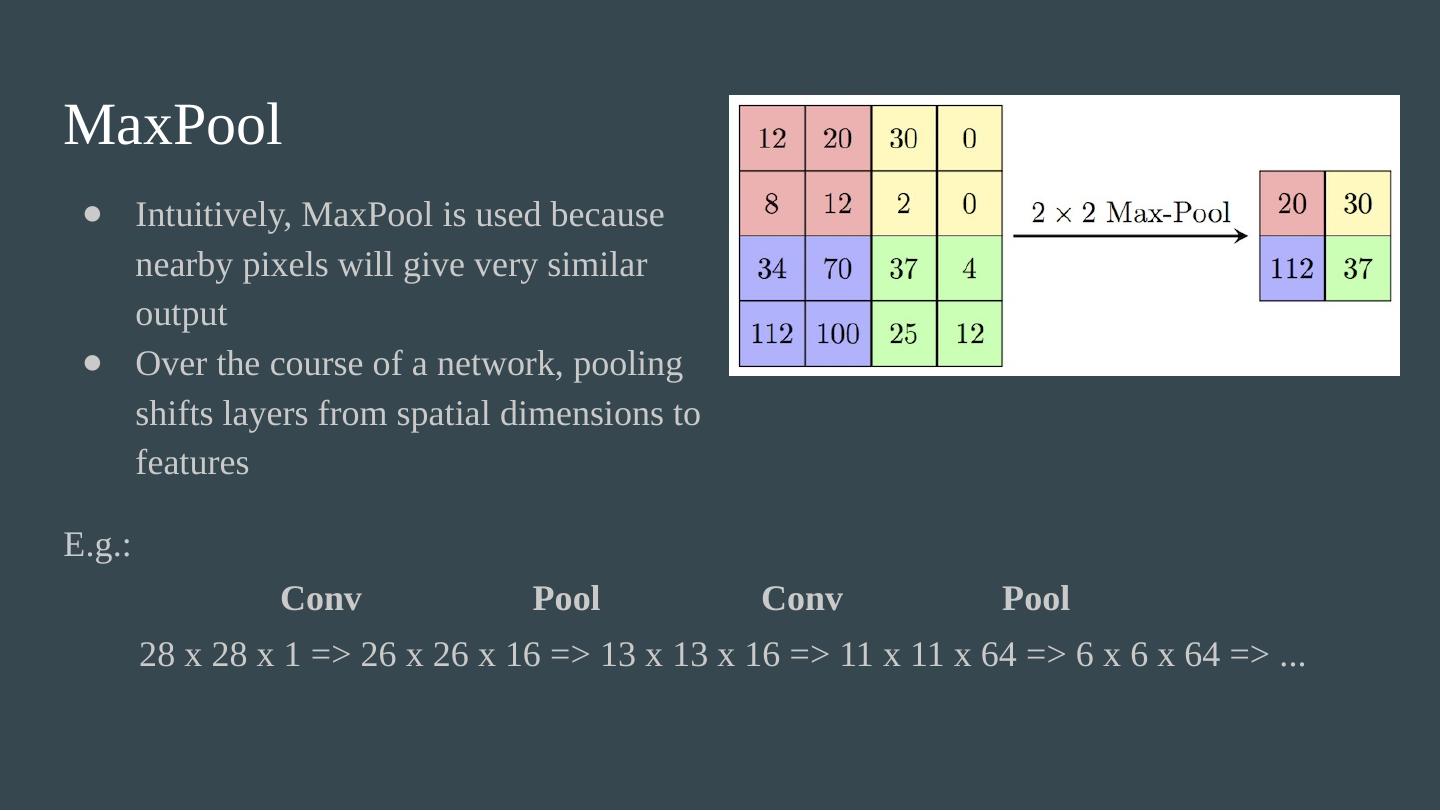

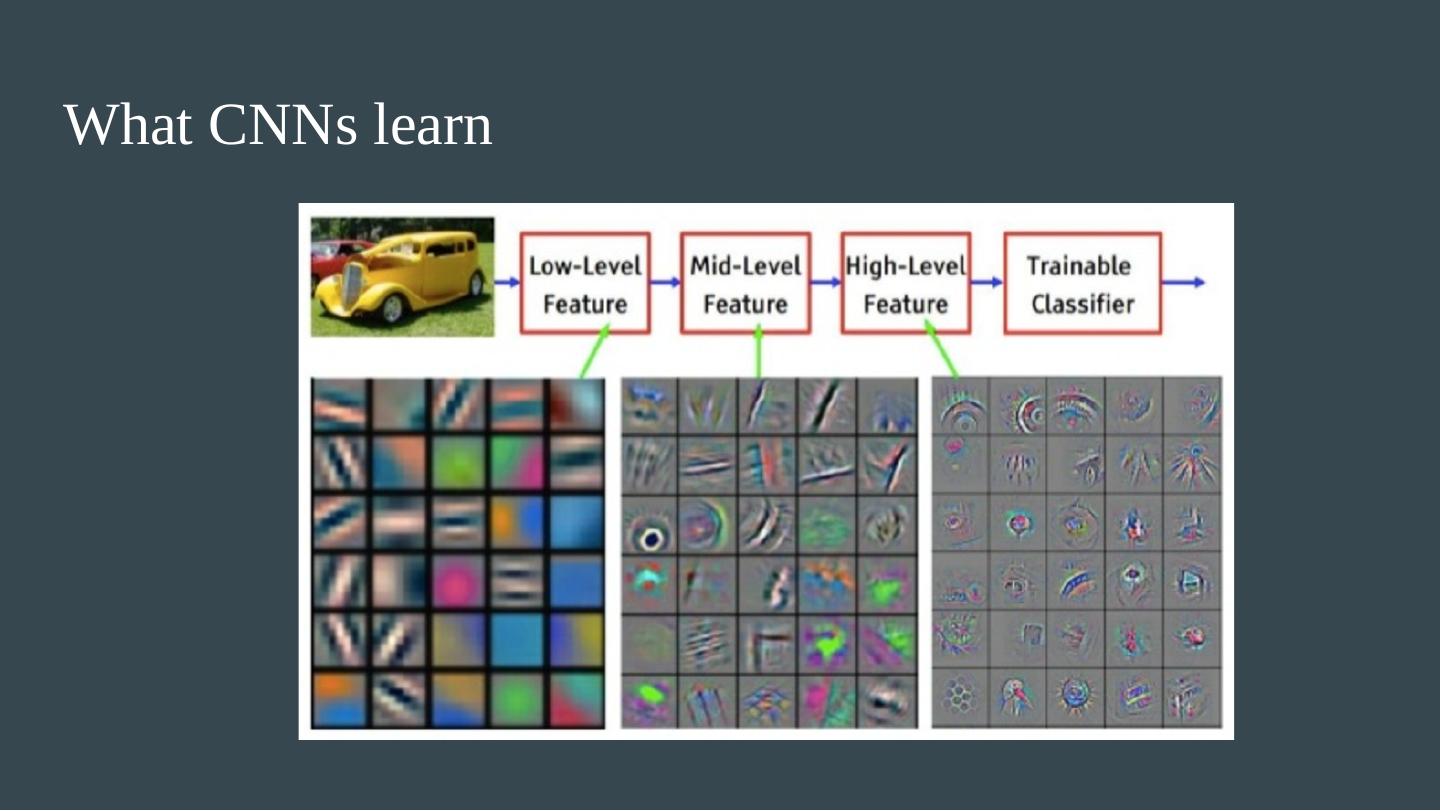

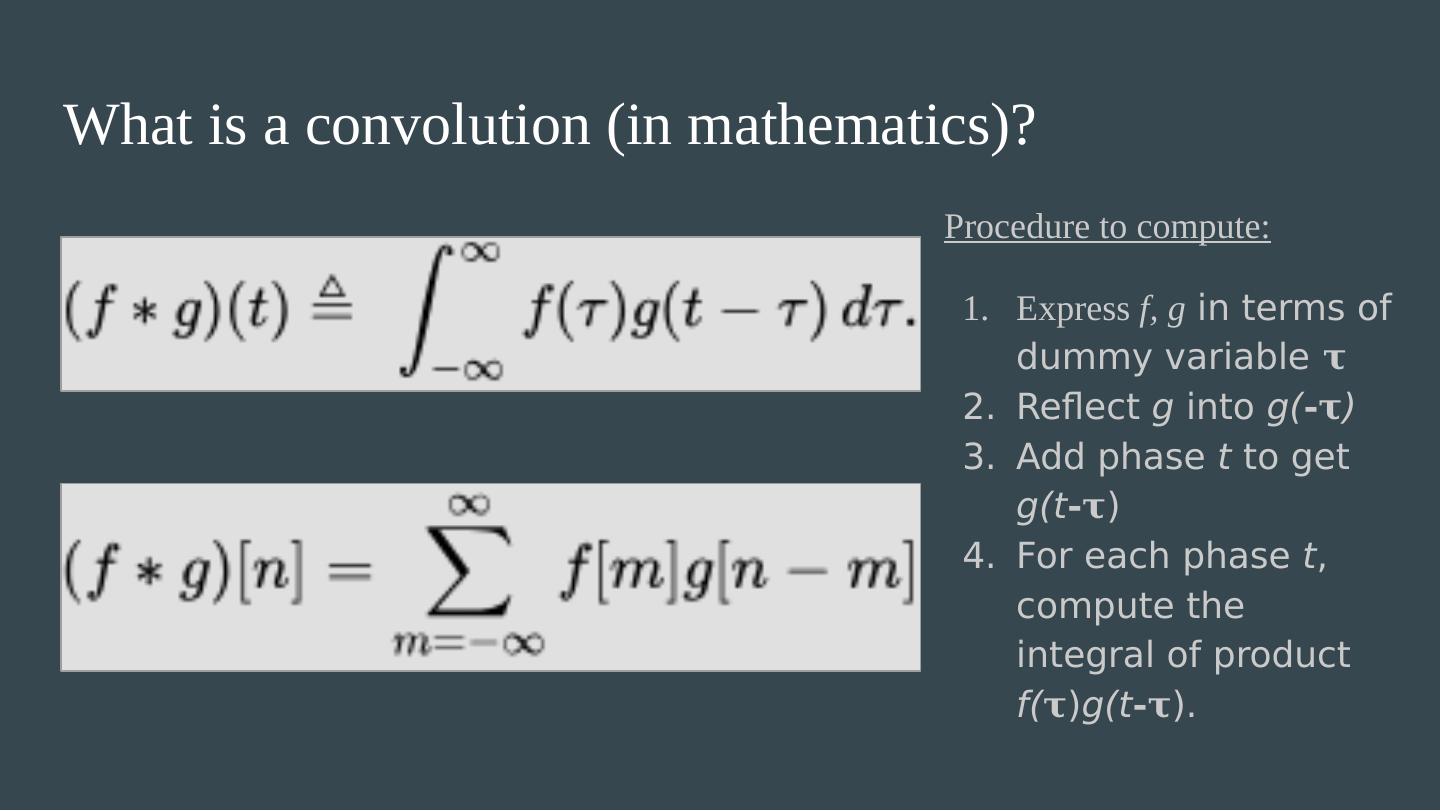

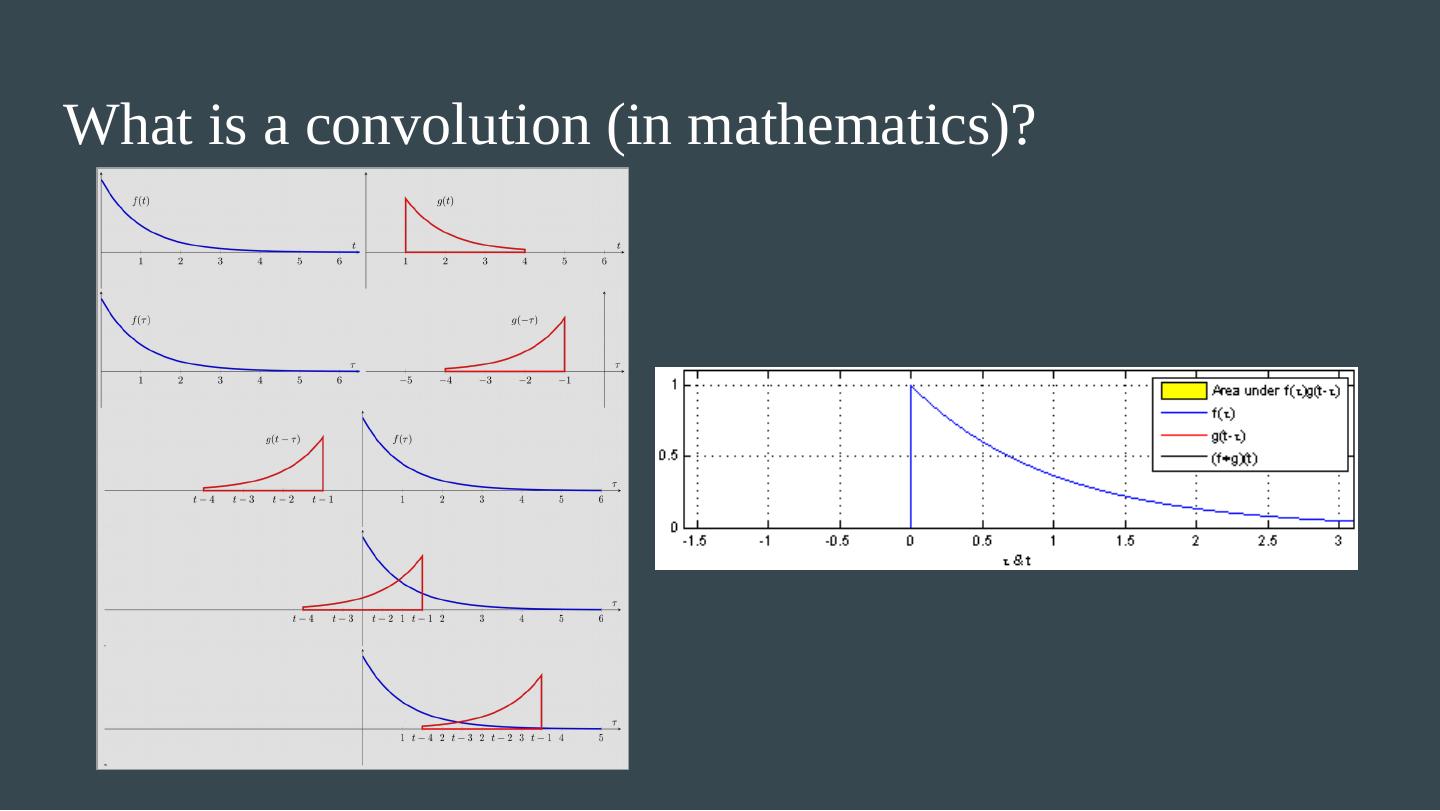

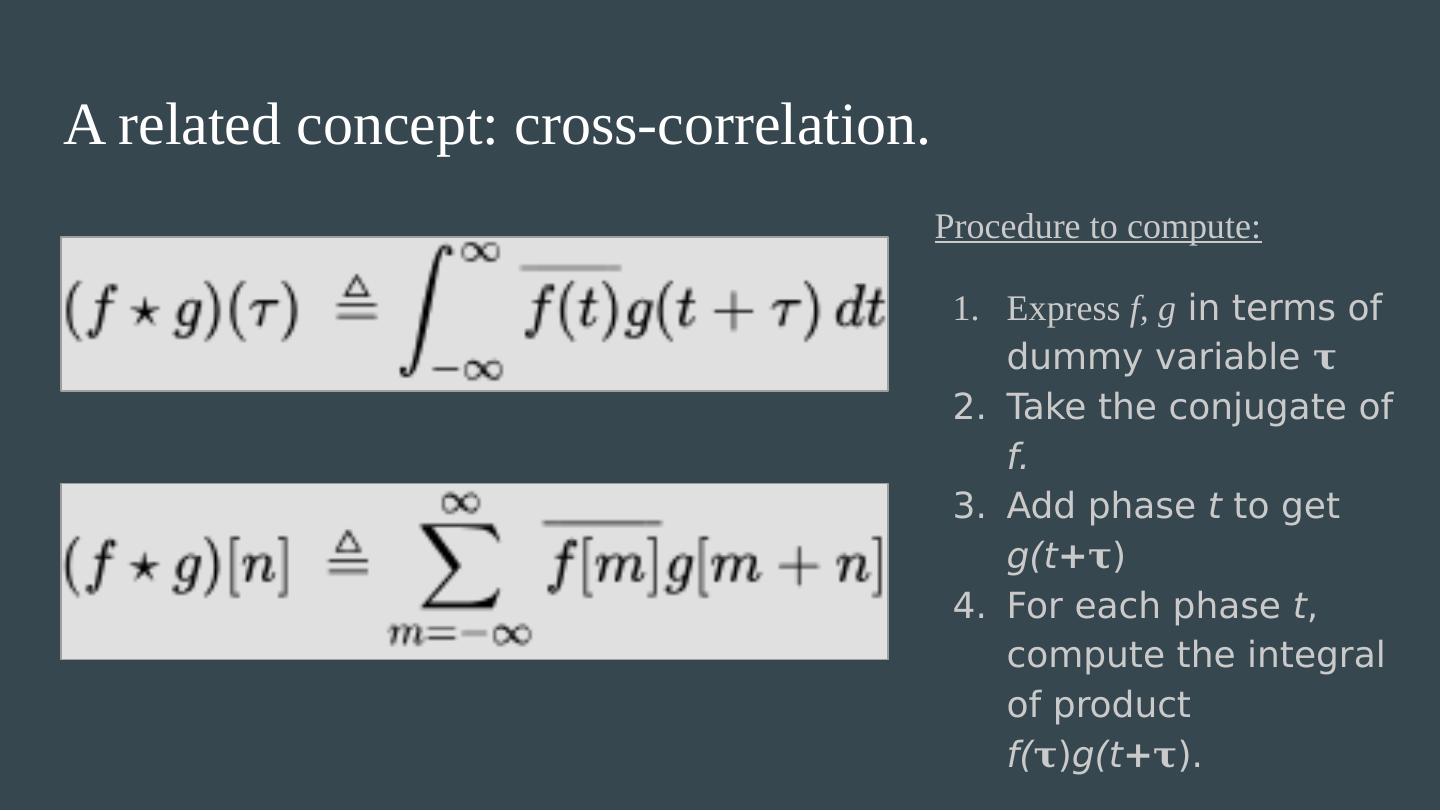

What is a convolution?

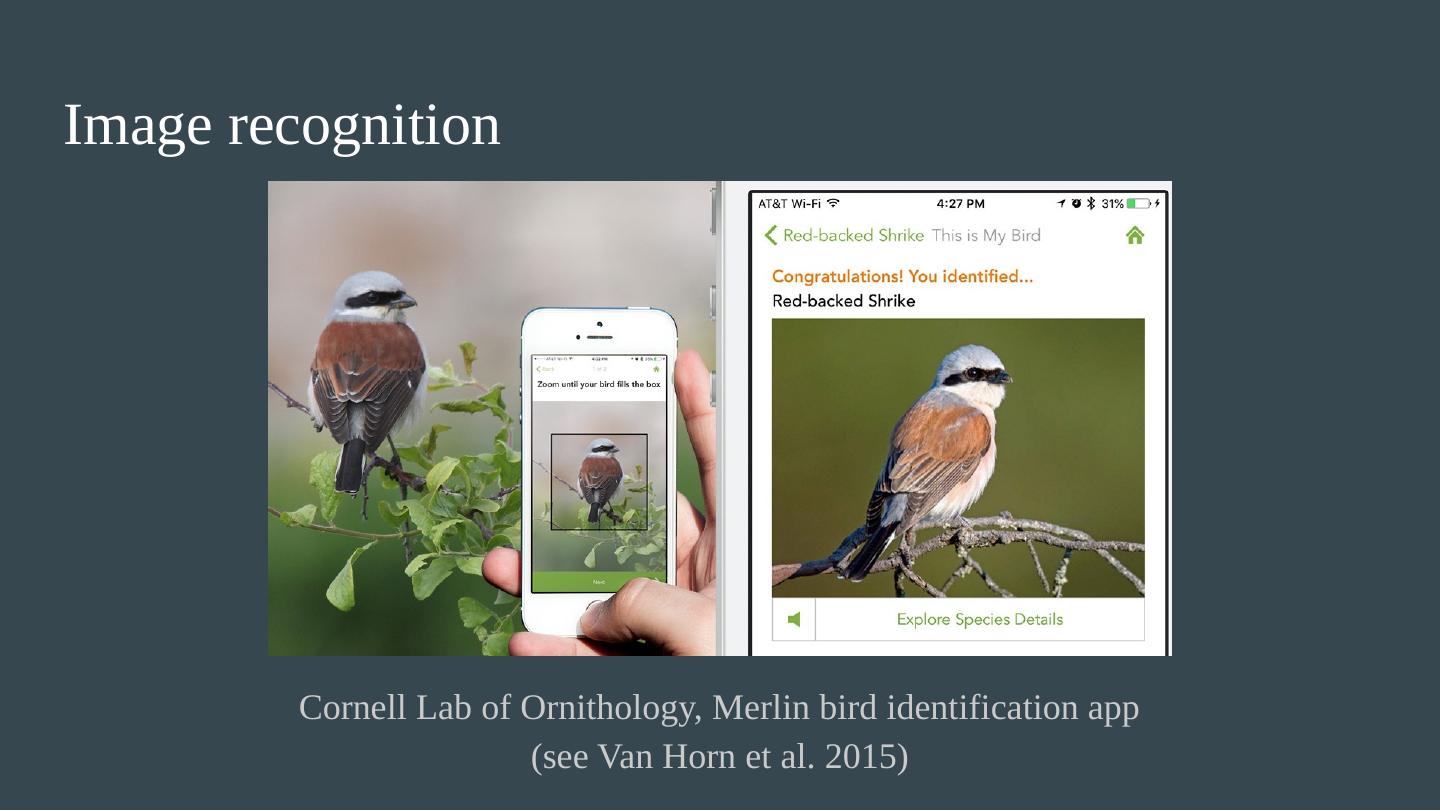
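
As a minimal sketch of the definition (an illustration added here, not taken from the slides), the discrete 1D convolution $y[n] = \sum_k x[k]\,h[n-k]$ can be written out directly. The helper name `convolve_1d` is hypothetical; its output should agree with NumPy's `np.convolve` in its default `"full"` mode.

```python
import numpy as np

def convolve_1d(x, h):
    # Full discrete convolution: y[n] = sum_k x[k] * h[n - k].
    # Output length is len(x) + len(h) - 1 (every partial overlap included).
    n_out = len(x) + len(h) - 1
    y = np.zeros(n_out)
    for n in range(n_out):
        for k in range(len(x)):
            # Only terms where the (reversed) kernel index is in range contribute.
            if 0 <= n - k < len(h):
                y[n] += x[k] * h[n - k]
    return y
```

For example, `convolve_1d([1, 2, 3], [0, 1, 0.5])` gives `[0, 1, 2.5, 4, 1.5]`, the same as `np.convolve([1, 2, 3], [0, 1, 0.5])`. Note the index `n - k`: the kernel is traversed in reverse, which is what distinguishes convolution from cross-correlation.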

Image recognition: the Cornell Lab of Ornithology's Merlin bird identification app (see Van Horn et al. 2015)

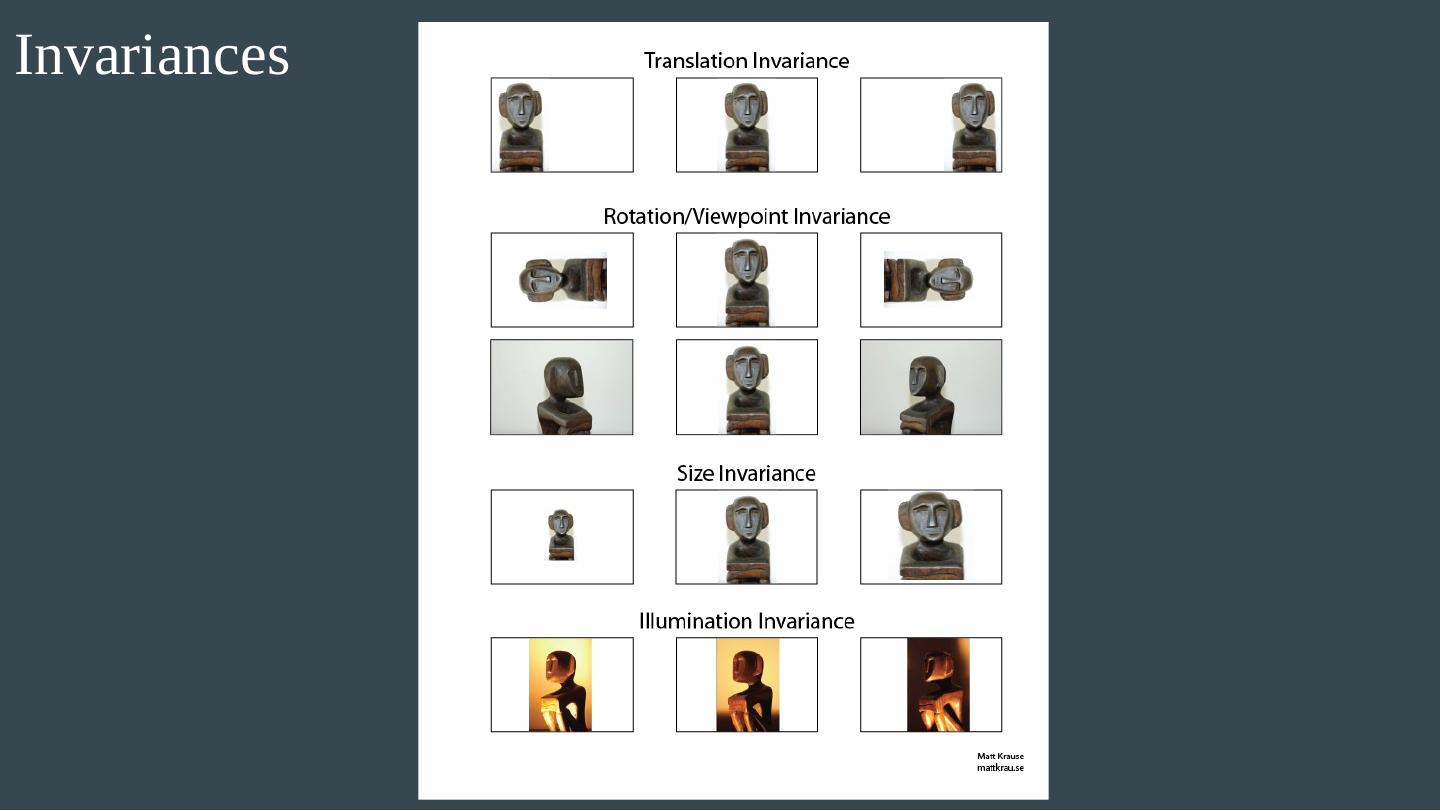

Some classic CNNs

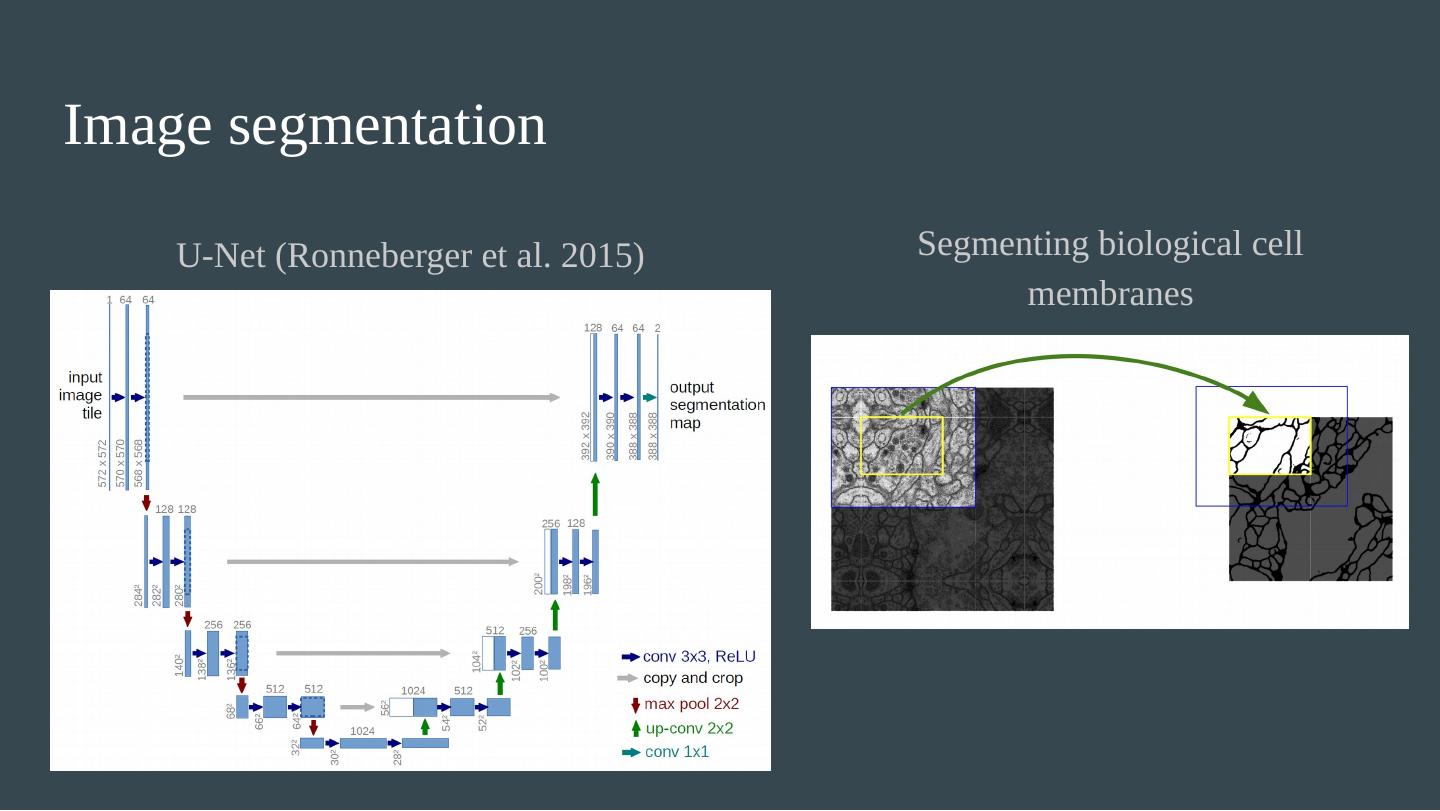

Convolutional filters: cross-correlation in 2 dimensions
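
A minimal NumPy sketch of what a single-channel convolutional filter computes, assuming no padding and stride 1 (the "valid" case). The kernel slides over the image without being flipped, which is why this is cross-correlation rather than convolution in the strict sense. `cross_correlate_2d` is a hypothetical helper name.

```python
import numpy as np

def cross_correlate_2d(image, kernel):
    # "Valid" 2D cross-correlation (stride 1, no padding):
    # out[i, j] = sum_{u, v} image[i + u, j + v] * kernel[u, v]
    # The kernel is NOT flipped; flipping it first gives a true convolution.
    H, W = image.shape
    kh, kw = kernel.shape
    out = np.zeros((H - kh + 1, W - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            # Elementwise product of the kernel with the patch under it, summed.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out
```

With `image = np.arange(16.0).reshape(4, 4)` and `kernel = np.array([[1.0, 0.0], [0.0, -1.0]])`, each output entry is `image[i, j] - image[i + 1, j + 1] = -5`, so the result is a 3 x 3 array of `-5`. The output shrinks by `kernel_size - 1` in each dimension, which is the "shrinking images" effect that comes up again with U-Net.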

Visualizing neurons in a network (https://distill.pub/2017/feature-visualization/): visualization by optimizing an image to maximize the activation of particular neurons or layers. How does this work?
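
A toy sketch of the idea, under strong simplifying assumptions: the "network" is a single linear unit with pre-activation $w \cdot x$, and we run gradient ascent on the input $x$ (the weights stay fixed), renormalizing $x$ to unit length so the activation cannot grow just by scaling the input. We ascend the pre-activation rather than the ReLU output because the ReLU's gradient is zero whenever the unit is off. Real feature visualization uses deep nets, automatic differentiation, and extra regularizers; `maximize_activation` and its parameters are hypothetical.

```python
import numpy as np

def maximize_activation(w, steps=200, lr=0.1, seed=0):
    # Start from a random unit-norm "image".
    rng = np.random.default_rng(seed)
    x = rng.standard_normal(w.shape)
    x /= np.linalg.norm(x)
    for _ in range(steps):
        grad = w                  # d(w . x)/dx for this toy linear unit
        x = x + lr * grad         # gradient ASCENT on the input, not the weights
        x /= np.linalg.norm(x)    # project back onto the unit sphere
    return x
```

For this toy unit the optimum under the norm constraint is $w / \lVert w \rVert$, so the optimized input recovers the unit's weight template, i.e. the pattern it "looks for". In a deep network the same loop applies, but the gradient must be backpropagated through all layers down to the input pixels.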

Notes from previous lecture: the sawtooth function. The sawtooth function with $2^n$ pieces can be expressed succinctly with ~3n neurons and depth ~2n (Telgarsky 2015). The naive shallow implementation takes exponentially more neurons, since a one-hidden-layer ReLU net on a 1D input with k neurons has at most k + 1 linear pieces.
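
A minimal sketch of why depth helps, assuming the standard tent-map construction (this sketch's own choice, not necessarily the exact network from the lecture): the tent map $t(x) = 2\,\mathrm{ReLU}(x) - 4\,\mathrm{ReLU}(x - 1/2)$ uses two ReLUs, and composing it n times gives a sawtooth with $2^n$ linear pieces on $[0, 1]$. The neuron count therefore grows linearly in n while the number of pieces doubles with each layer. Function names are hypothetical.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def tent(x):
    # Tent map on [0, 1] from two ReLU units:
    # rises from 0 to 1 on [0, 0.5], falls back to 0 on [0.5, 1].
    return 2.0 * relu(x) - 4.0 * relu(x - 0.5)

def sawtooth(x, n):
    # n-fold composition = a depth-n ReLU net with 2 units per layer.
    for _ in range(n):
        x = tent(x)
    return x

def count_linear_pieces(n, samples_per_piece=8):
    # Breakpoints of the n-fold composition lie at multiples of 1 / 2**n,
    # so this dyadic grid puts every breakpoint exactly on a grid point.
    x = np.linspace(0.0, 1.0, 2**n * samples_per_piece + 1)
    slope_signs = np.sign(np.diff(sawtooth(x, n)))
    # Pieces alternate between rising and falling,
    # so pieces = 1 + number of sign changes of the slope.
    return 1 + int(np.count_nonzero(slope_signs[1:] != slope_signs[:-1]))
```

For instance, `count_linear_pieces(6)` should return 64 pieces from only 12 ReLUs, whereas by the bound above a single hidden layer would need at least 63 neurons to produce that many pieces.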

Not entirely true (Betsch et al. 2004)

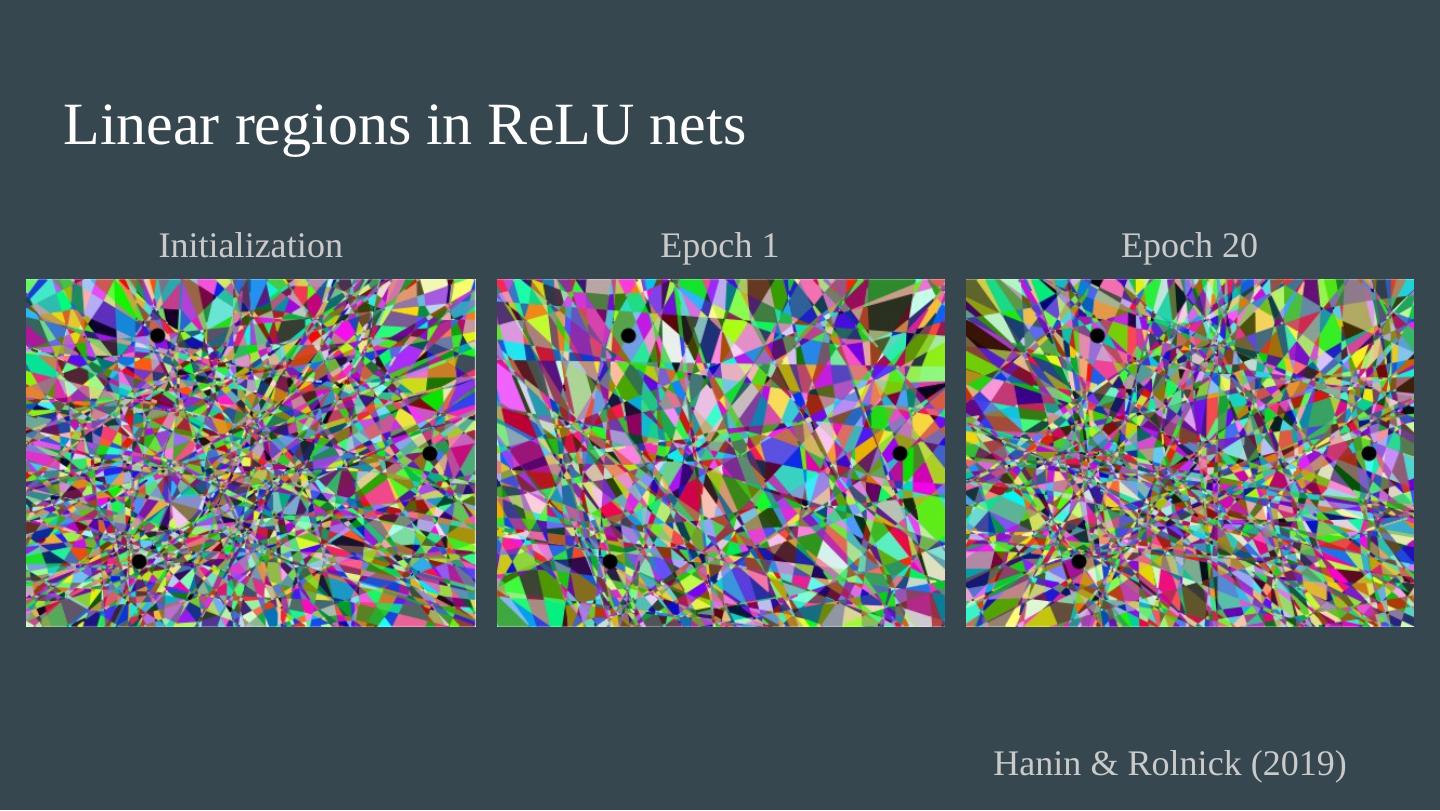

Linear regions in ReLU nets (Hanin & Rolnick 2019) [figure panels: Initialization, Epoch 1, Epoch 20]
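
One way to probe linear regions empirically, sketched here under our own assumptions: a ReLU net is linear on each set of inputs sharing the same on/off activation pattern, so counting pattern changes along a finely sampled line segment estimates how many such regions the segment crosses (very thin regions can be missed). The layer format, a list of `(W, b)` pairs, and the function names are hypothetical.

```python
import numpy as np

def activation_pattern(layers, x):
    # On/off state of every ReLU unit for input x, concatenated across layers.
    pattern = []
    h = x
    for W, b in layers:
        pre = W @ h + b
        on = pre > 0
        pattern.append(on)
        h = np.where(on, pre, 0.0)   # ReLU
    return np.concatenate(pattern)

def regions_along_segment(layers, p, q, num_samples=10_001):
    # Sample points on the segment from p to q and count how often the
    # activation pattern changes; regions crossed = 1 + number of changes.
    ts = np.linspace(0.0, 1.0, num_samples)
    patterns = [activation_pattern(layers, (1 - t) * p + t * q) for t in ts]
    changes = sum(not np.array_equal(a, b) for a, b in zip(patterns, patterns[1:]))
    return 1 + changes
```

As a check, a single hidden layer on a 1D input with breakpoints at 0, 0.5 and 1 splits the segment from -2 to 2 into 4 regions. For a randomly initialized deeper net, e.g. `layers = [(rng.standard_normal((16, 2)), rng.standard_normal(16)), (rng.standard_normal((16, 16)), rng.standard_normal(16))]`, the same function can be applied to segments in the plane, and rerun on the trained weights to compare against initialization.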

Visual processing in the brain

Insights of U-Net
- “Up-convolution”
  - Fixes the problem of shrinking images with CNNs
  - One example of how to make “fully convolutional” nets: pixels to pixels
  - “Up-convolution” is just upsampling, then convolution
    - Allows for refinement of the upsample by learned weights
    - Goes along with decreasing the number of feature channels
    - Not the same as “de-convolution”
- Connections across the “U” in the architecture
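
A minimal NumPy sketch of "upsampling, then convolution". The specific choices here are illustrative assumptions: nearest-neighbour 2x upsampling and a 3x3 zero-padded ("same") convolution, so the spatial size exactly doubles while the learned kernel both smooths the blocky upsampled map and reduces the channel count. The helper names are hypothetical.

```python
import numpy as np

def upsample_nearest(x, factor=2):
    # x: (channels, H, W) -> (channels, factor*H, factor*W) by repeating pixels.
    return np.repeat(np.repeat(x, factor, axis=1), factor, axis=2)

def conv2d_same(x, w, b):
    # Multi-channel cross-correlation with zero padding, keeping H and W.
    # x: (C_in, H, W), w: (C_out, C_in, k, k) with odd k, b: (C_out,)
    c_out, c_in, k, _ = w.shape
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    _, H, W = x.shape
    out = np.empty((c_out, H, W))
    for i in range(H):
        for j in range(W):
            patch = xp[:, i:i + k, j:j + k]                  # (C_in, k, k)
            out[:, i, j] = np.tensordot(w, patch, axes=([1, 2, 3], [0, 1, 2])) + b
    return out

def up_convolution(x, w, b):
    # Upsample first, then let learned weights refine the result
    # (and, with C_out < C_in, decrease the number of feature channels).
    return conv2d_same(upsample_nearest(x), w, b)
```

For example, a `(4, 8, 8)` feature map with a `(2, 4, 3, 3)` kernel comes out as `(2, 16, 16)`: twice the spatial size, half the channels. Because the upsampling step has no parameters, all the learning happens in the ordinary convolution that follows it.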

Graph convolution
- For a full intro: https://tkipf.github.io/graph-convolutional-networks/
- Basic idea:
  - A convolution for matrices is a “local” combination of entries in the matrix
  - We can do the same thing for a graph
  - Here, “local” means the nodes that a given node is adjacent to
- Just the tip of an iceberg…
- Used for learning about molecules, citation networks, social networks, etc.
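
One common concrete form of this idea is the GCN layer of Kipf & Welling described at the linked page, $H' = \mathrm{ReLU}\big(\hat D^{-1/2}(A + I)\hat D^{-1/2} H W\big)$, where $A$ is the adjacency matrix, $H$ holds one feature row per node, $W$ is a learned weight matrix, and $\hat D$ is the degree matrix of $A + I$. Below is a minimal NumPy sketch assuming a small dense adjacency matrix; the function names are hypothetical.

```python
import numpy as np

def normalized_adjacency(A):
    # Add self-loops so each node keeps its own features, then normalize
    # symmetrically by degree: D^{-1/2} (A + I) D^{-1/2}.
    A_hat = A + np.eye(A.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(A_hat.sum(axis=1))
    return A_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

def gcn_layer(A, H, W):
    # Each node's new features mix its own and its neighbours' features
    # (the "local" combination), then apply a shared linear map W and a ReLU.
    return np.maximum(normalized_adjacency(A) @ H @ W, 0.0)
```

On the path graph 0 - 1 - 2, node 0's output depends only on nodes 0 and 1: changing node 2's features leaves it unchanged, just as a pixel's output in an image convolution depends only on its neighbourhood. Stacking k such layers widens each node's receptive field to its k-hop neighbourhood.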