
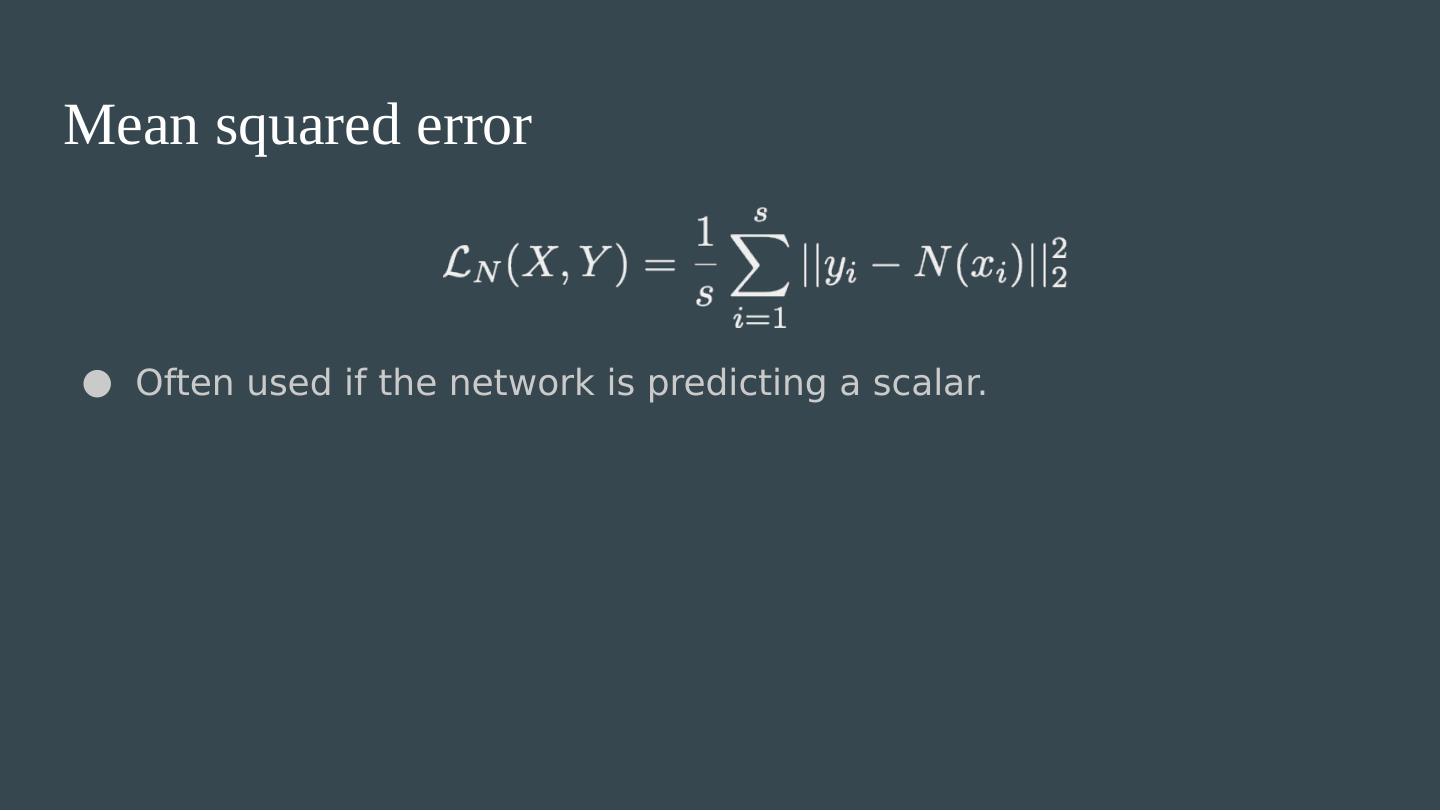

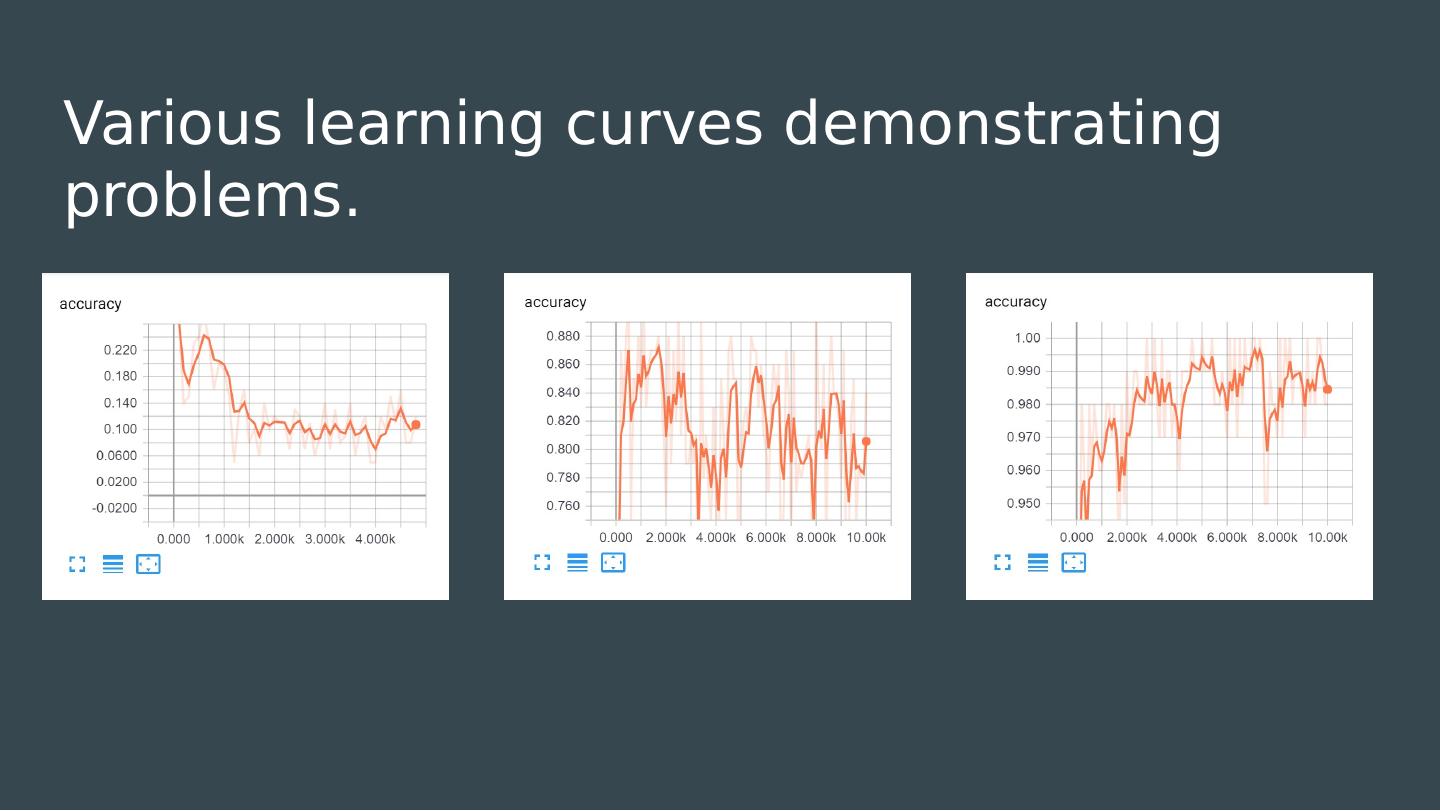

Challenges in Training Neural Nets



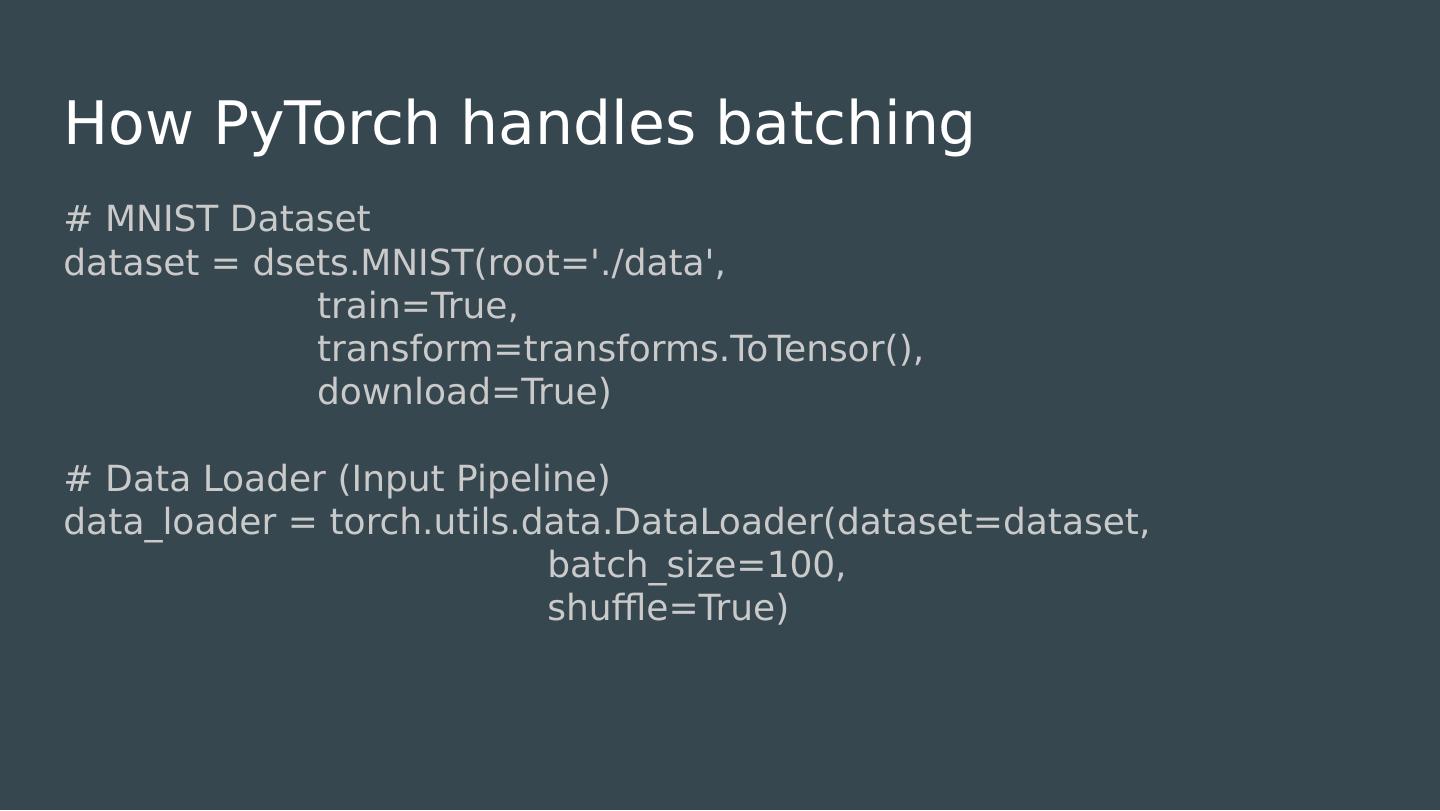

21 . A brief interlude on algorithms

A batch algorithm does a computation all in one go, given an entire dataset:
- Closed-form solution to linear regression
- Selection sort
- Quicksort
- Pretty much anything involving gradient descent

An online algorithm computes incrementally as new data becomes available:
- Heap algorithm for the kth largest element in a stream
- Perceptron algorithm
- Insertion sort
- Greedy algorithms
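To make the batch/online distinction concrete with one of the slide's own examples, the Python sketch below finds the kth largest element two ways. The names `kth_largest_batch` and `KthLargestStream` are hypothetical. The batch version needs the whole list before it can answer. The online version keeps a min-heap of size k (standard-library `heapq`) and can report the current answer after every new element, using O(k) memory instead of storing the whole stream.

```python
import heapq
from typing import List, Optional


def kth_largest_batch(data: List[float], k: int) -> float:
    """Batch: needs the whole dataset up front, then sorts it in one go."""
    return sorted(data, reverse=True)[k - 1]


class KthLargestStream:
    """Online: keeps a min-heap holding the k largest values seen so far."""

    def __init__(self, k: int) -> None:
        self.k = k
        self.heap: List[float] = []

    def add(self, x: float) -> Optional[float]:
        if len(self.heap) < self.k:
            heapq.heappush(self.heap, x)
        elif x > self.heap[0]:
            # Evict the smallest of the current top k and insert x.
            heapq.heapreplace(self.heap, x)
        # Once k values have arrived, the heap root is the kth largest.
        return self.heap[0] if len(self.heap) == self.k else None


data = [5, 1, 9, 3, 7, 2]
stream = KthLargestStream(k=2)
answers = [stream.add(x) for x in data]  # one answer after every arrival
print(answers)                     # [None, 1, 5, 5, 7, 7]
print(kth_largest_batch(data, 2))  # 7
```

After the last element, the online answer matches the batch answer, but the online version never needed the full dataset in memory and had an up-to-date answer at every step.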

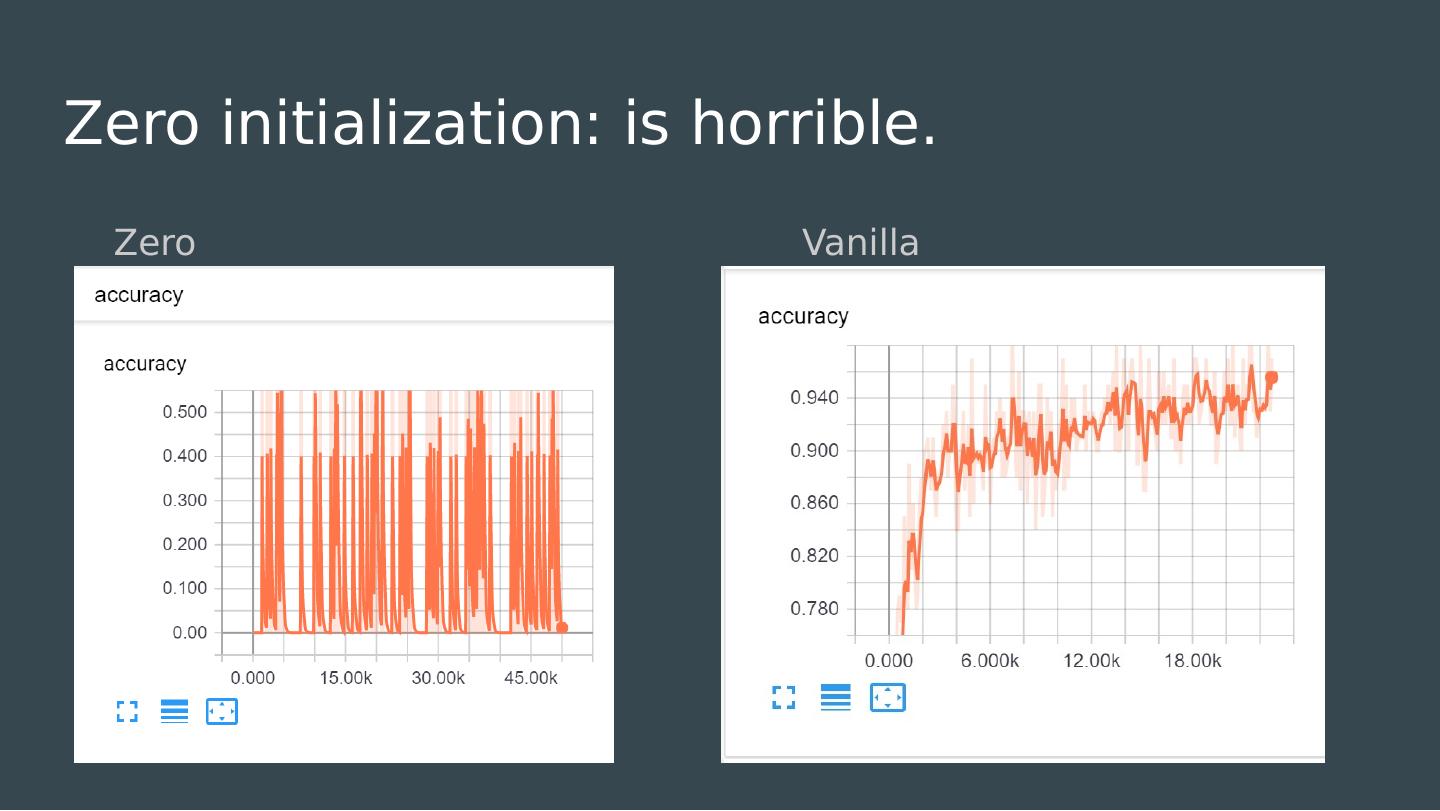

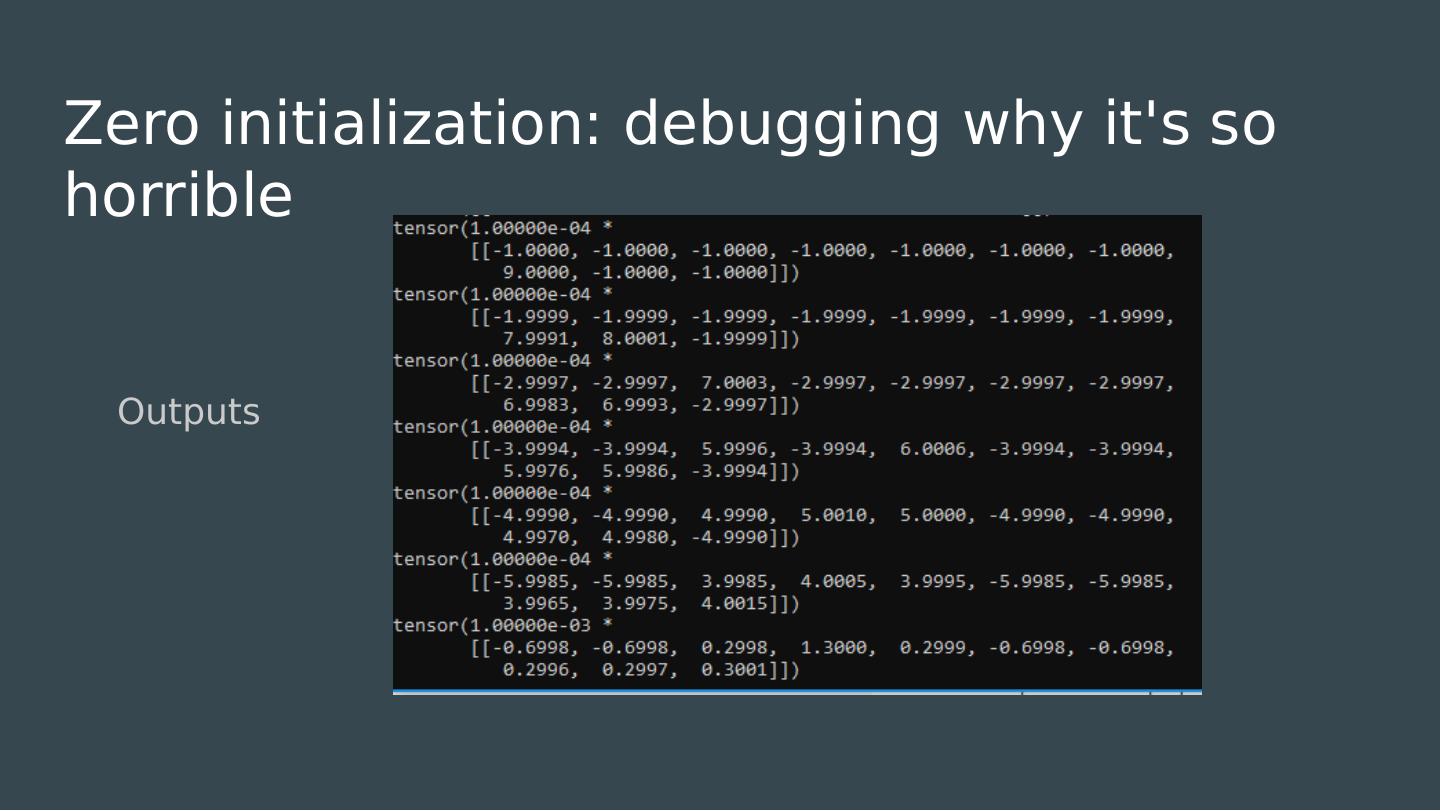

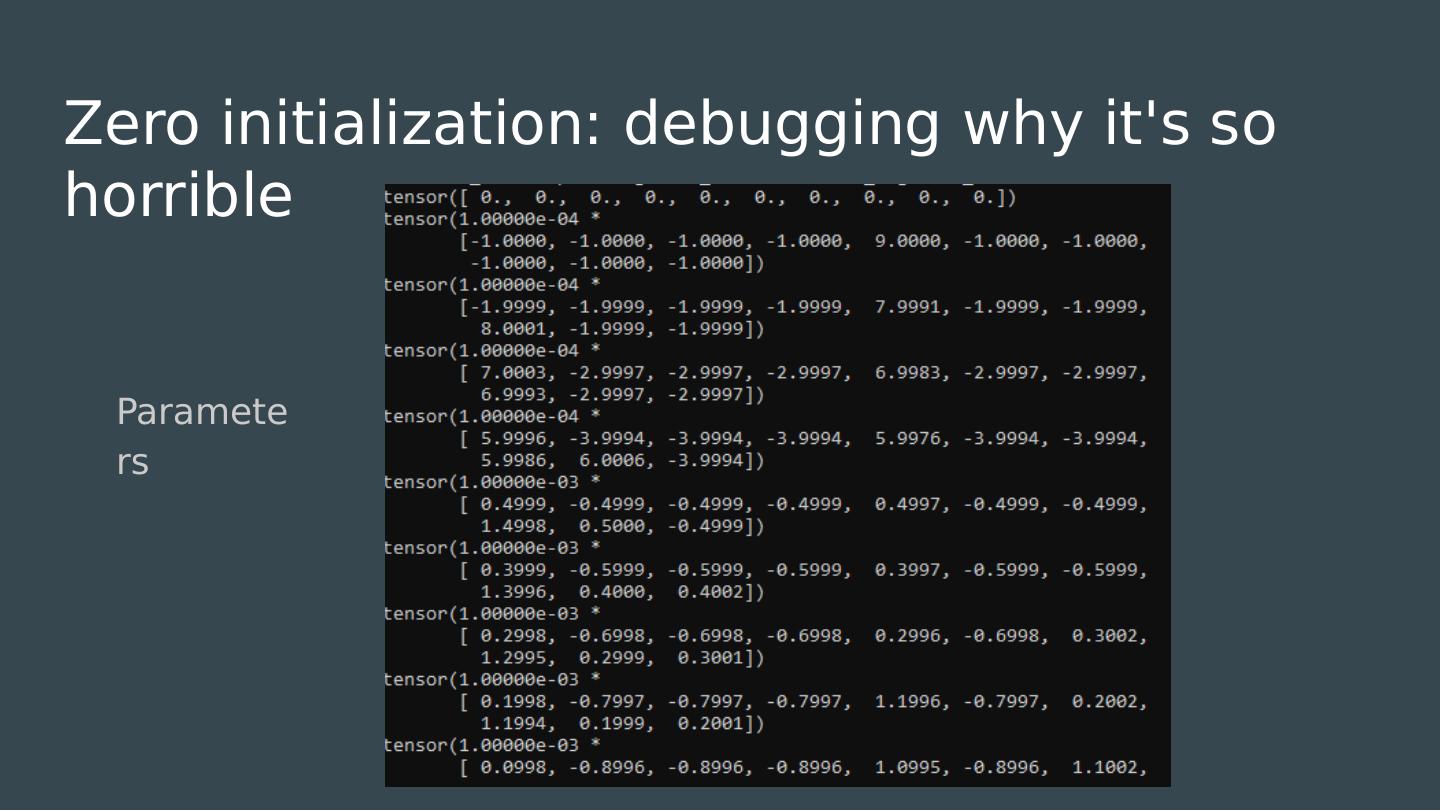

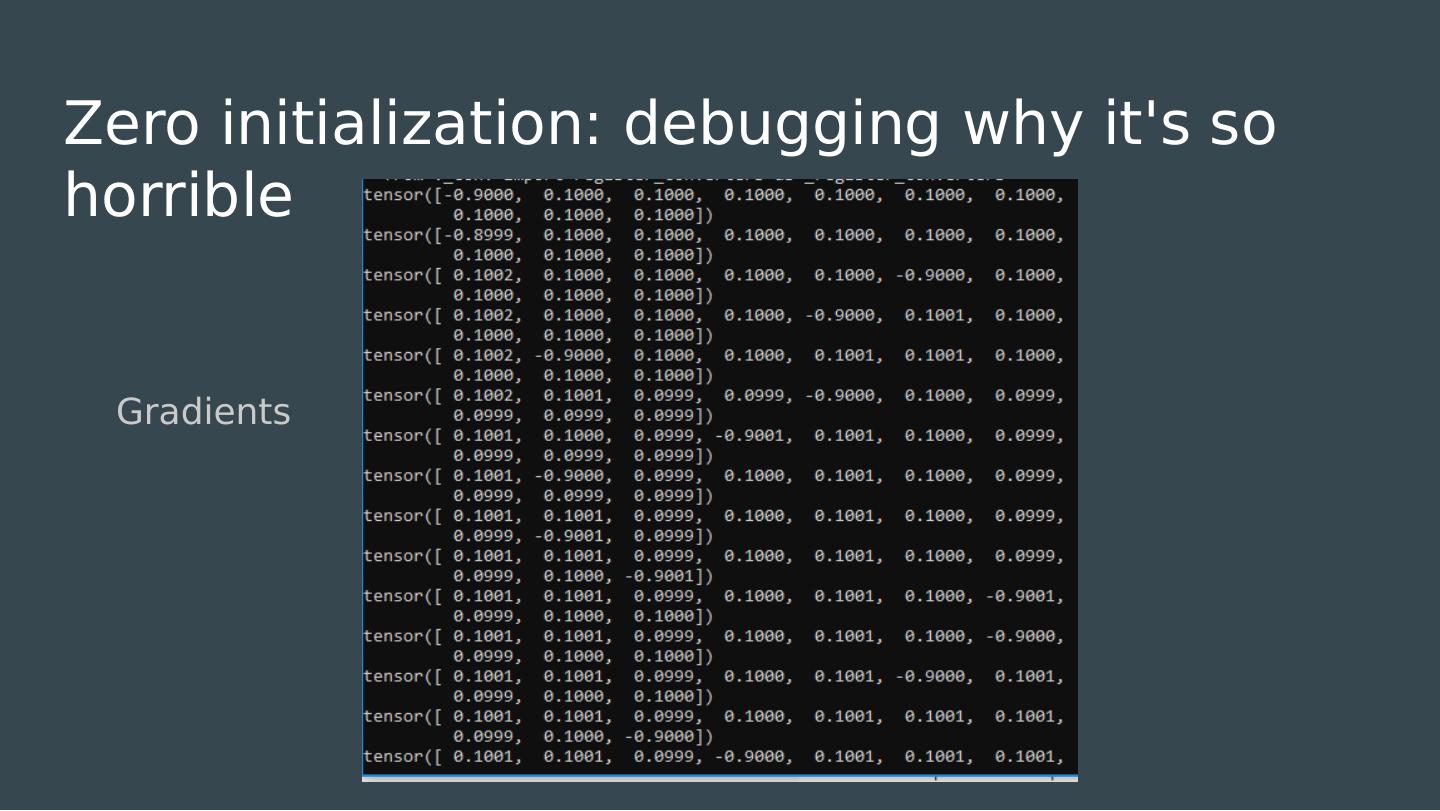

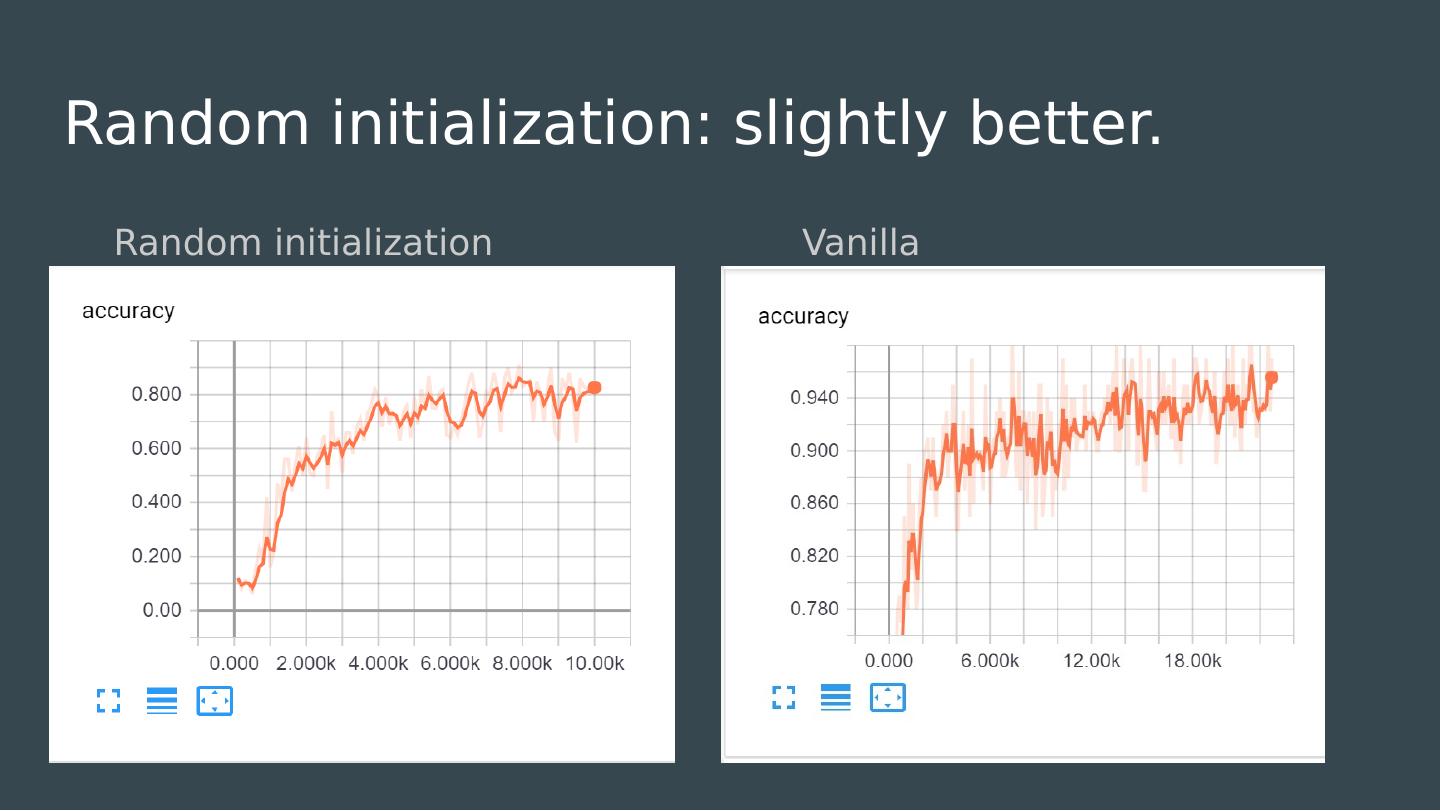

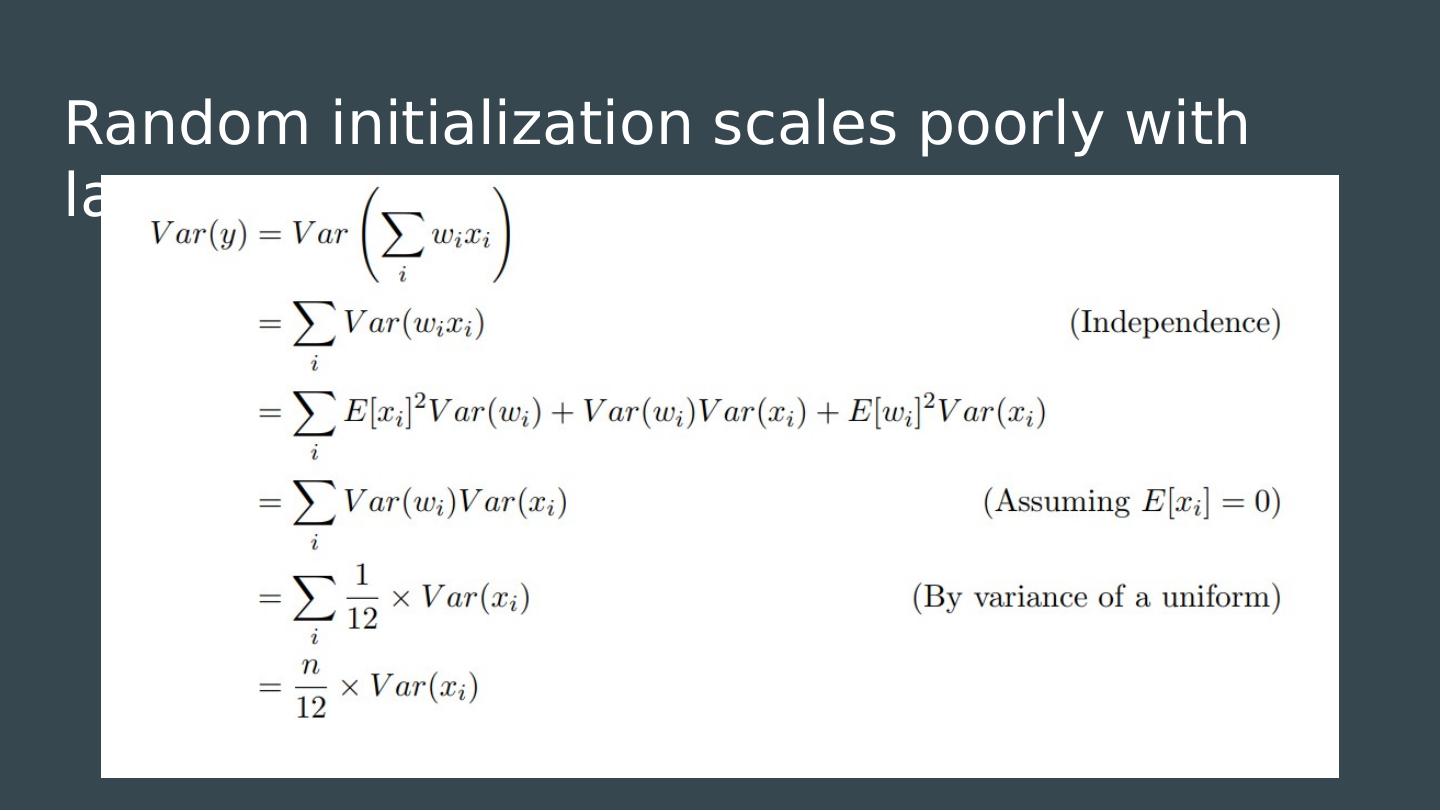

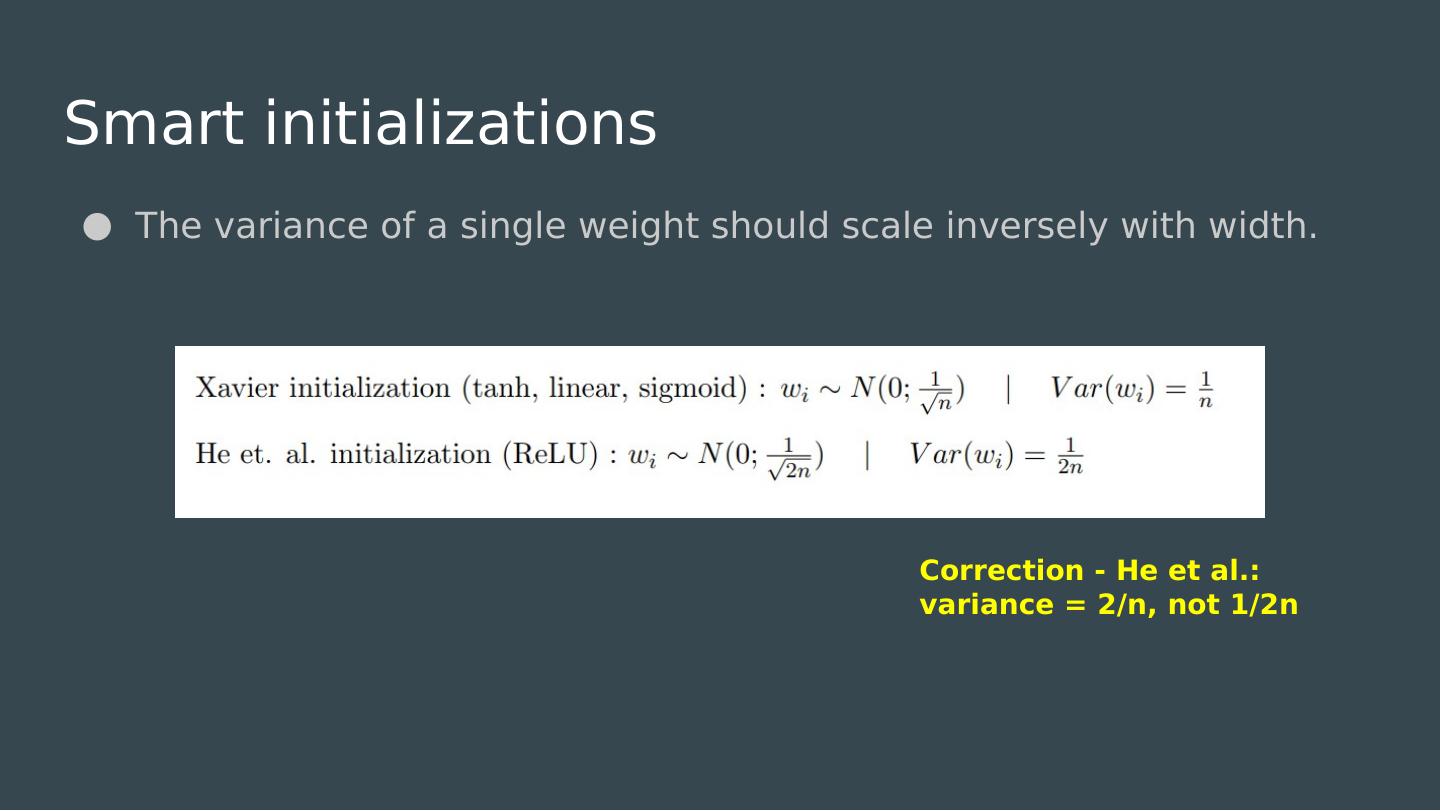

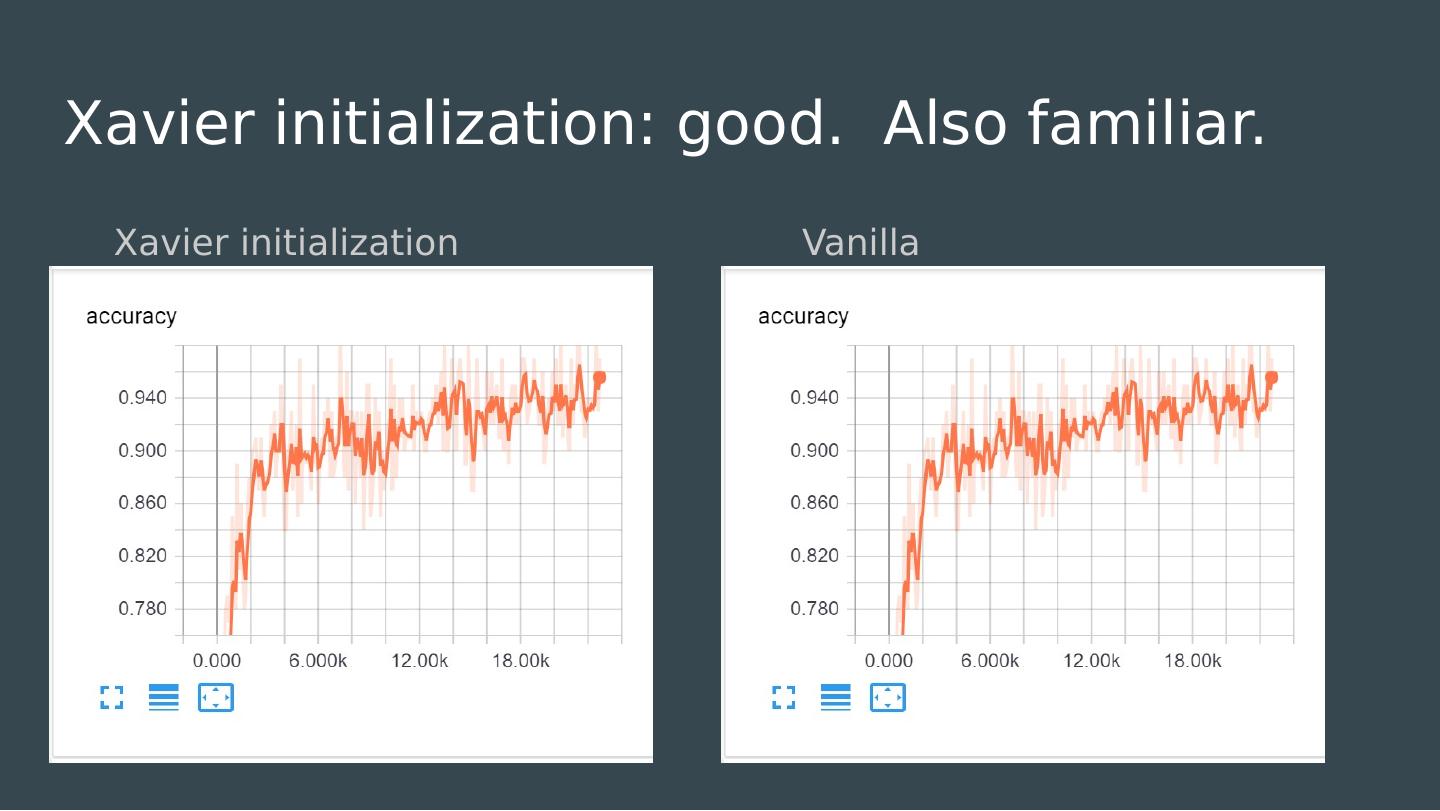

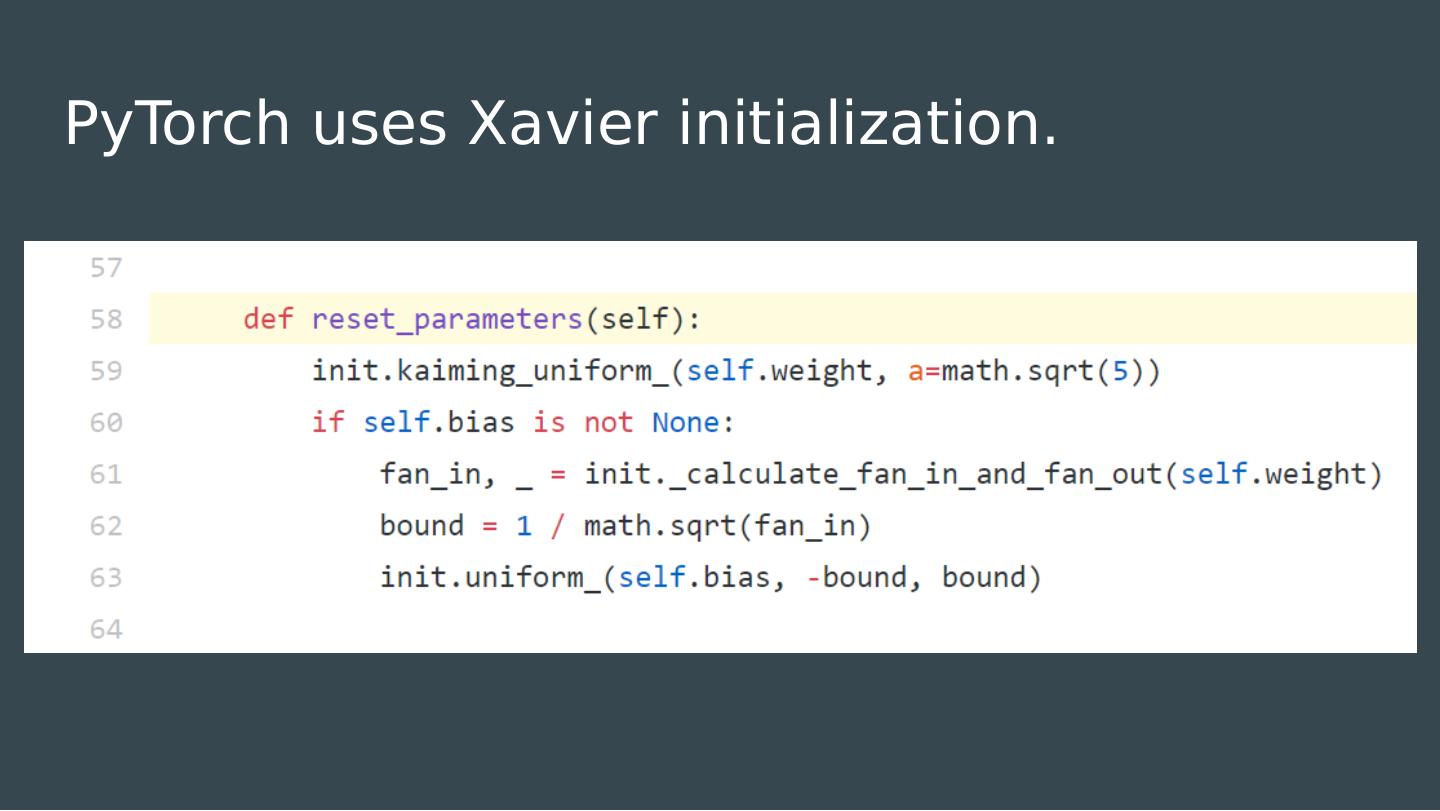

22 . How do we initialize our weights?
- Most models work best when normalized around zero.
- All of the activation functions we have chosen inflect around zero.
- What is the simplest possible initialization strategy?

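The slide leaves the question open. One natural candidate, assumed here for illustration rather than taken from the slides, is to set every weight to zero, or to one shared constant. The NumPy sketch below uses a hypothetical two-layer tanh network with no biases (the helper `grads`, the width `H`, and the 0.01 scale are all illustrative choices) to show why that fails. Zero weights produce no gradient at all, and a shared constant gives every hidden unit the same update, so the units can never become different. Small random values centred on zero break this symmetry.

```python
import numpy as np


def grads(W1, w2, x, t):
    """Gradients of 0.5 * (y - t)**2 for y = w2 . tanh(W1 @ x) (hypothetical net, no biases)."""
    h = np.tanh(W1 @ x)          # hidden activations, shape (H,)
    y = w2 @ h                   # scalar output
    err = y - t                  # dL/dy
    dw2 = err * h                # dL/dw2
    dz = err * w2 * (1 - h**2)   # dL/d(pre-activation), using tanh' = 1 - tanh^2
    dW1 = np.outer(dz, x)        # dL/dW1, one row per hidden unit
    return dW1, dw2


rng = np.random.default_rng(0)
x, t = rng.normal(size=4), 1.0
H = 3

# Candidate 1: every weight set to zero.
dW1_zero, dw2_zero = grads(np.zeros((H, 4)), np.zeros(H), x, t)
print(np.allclose(dW1_zero, 0), np.allclose(dw2_zero, 0))  # True True: nothing learns

# Candidate 2: every weight set to the same nonzero constant.
dW1_const, _ = grads(np.full((H, 4), 0.5), np.full(H, 0.5), x, t)
print(np.allclose(dW1_const, dW1_const[0]))  # True: all hidden units get identical updates

# Candidate 3: small random weights centred on zero (scale 0.01 is arbitrary).
dW1_rand, _ = grads(rng.normal(0.0, 0.01, (H, 4)), rng.normal(0.0, 0.01, H), x, t)
print(np.allclose(dW1_rand[0], dW1_rand[1]))  # rows differ, so the symmetry is broken
```

The random scale matters too: values that are too large push tanh into its flat regions, which connects to the gradient-magnitude problems discussed later in the deck.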

24 . Looking forward
- HW0 is due tonight.
- HW1 will be released later this week.
- Next week, we discuss architecture tradeoffs and begin computer vision.

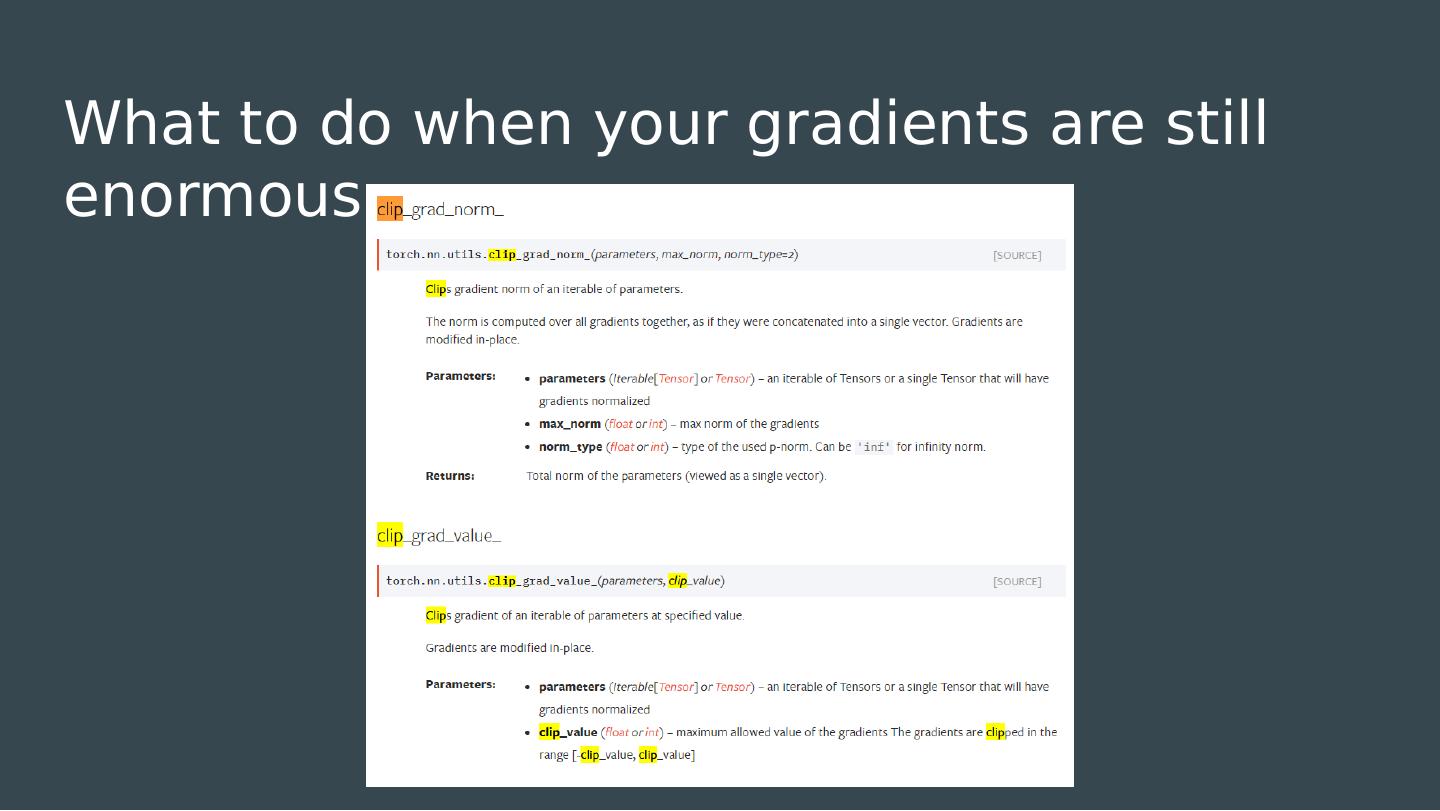

25 . What to do when your gradients are too small
- Find a better activation?
- Find a better architecture?
- Find a better initialization?
- Find a better career?

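One reason "find a better activation" comes first: backpropagation multiplies in one activation derivative per layer, and the sigmoid's derivative is at most 0.25 (reached at z = 0). The sketch below is a deliberate simplification assumed for illustration: it follows a single path through a deep network, treats every weight as 1, and tracks only the activation derivatives. The helper names are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_grad(z):
    s = sigmoid(z)
    return s * (1.0 - s)  # peaks at 0.25 when z = 0


def relu_grad(z):
    return 1.0 if z > 0 else 0.0


def chain_gradient(local_grad, preacts):
    """Product of per-layer activation derivatives along one path (all weights assumed to be 1)."""
    g = 1.0
    for z in preacts:
        g *= local_grad(z)
    return g


depth = 10
# Sigmoid's best case: every pre-activation is exactly 0.
print(chain_gradient(sigmoid_grad, [0.0] * depth))  # 0.25**10, about 9.54e-07
# ReLU with positive pre-activations passes the gradient through unchanged.
print(chain_gradient(relu_grad, [1.0] * depth))     # 1.0
```

Even in the sigmoid's best case, the gradient shrinks by roughly a factor of a million over ten layers. ReLU avoids this shrinkage for positive inputs, though its derivative is 0 for negative inputs, so it trades one failure mode for another rather than eliminating the problem.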

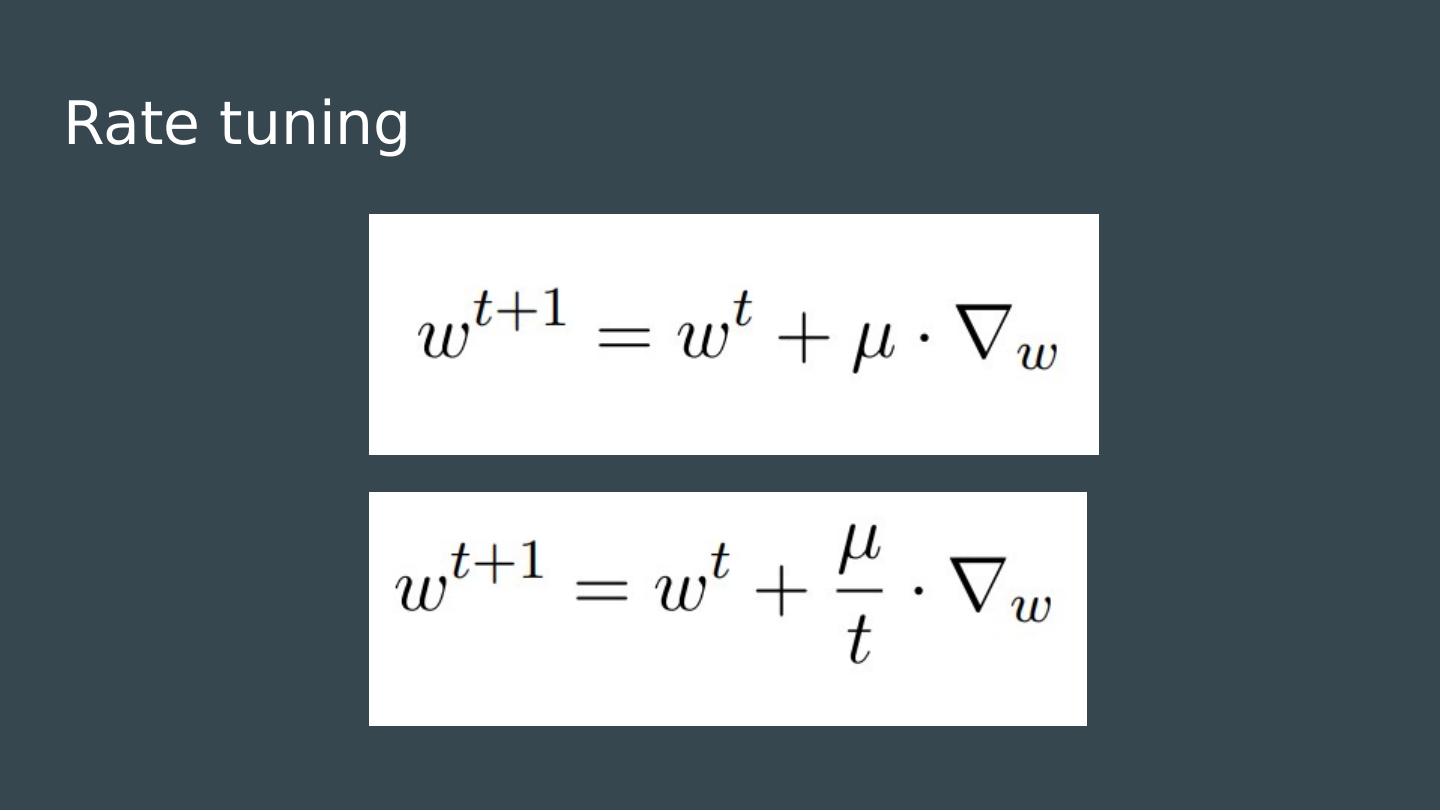

27 . Training Challenges: Gradient Magnitude

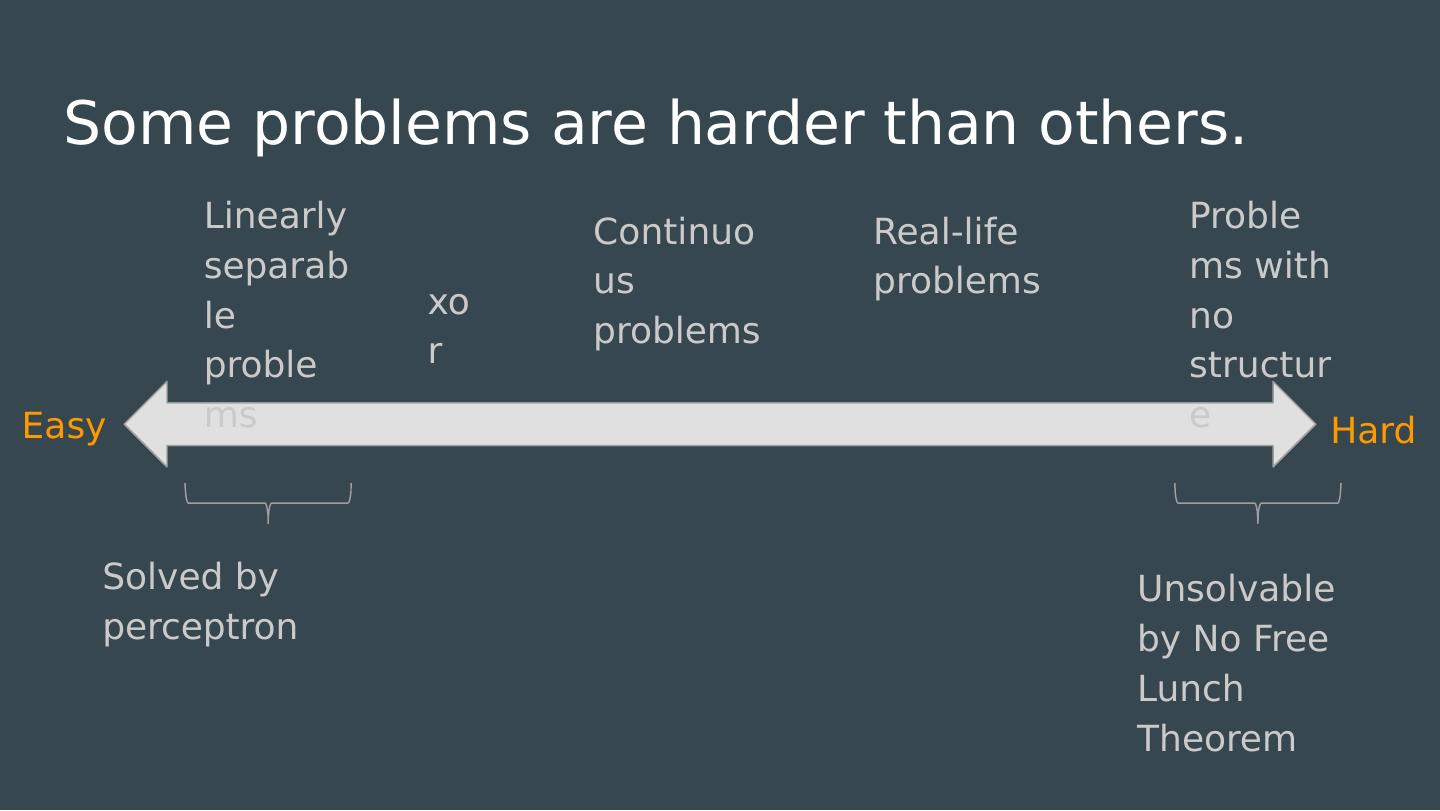

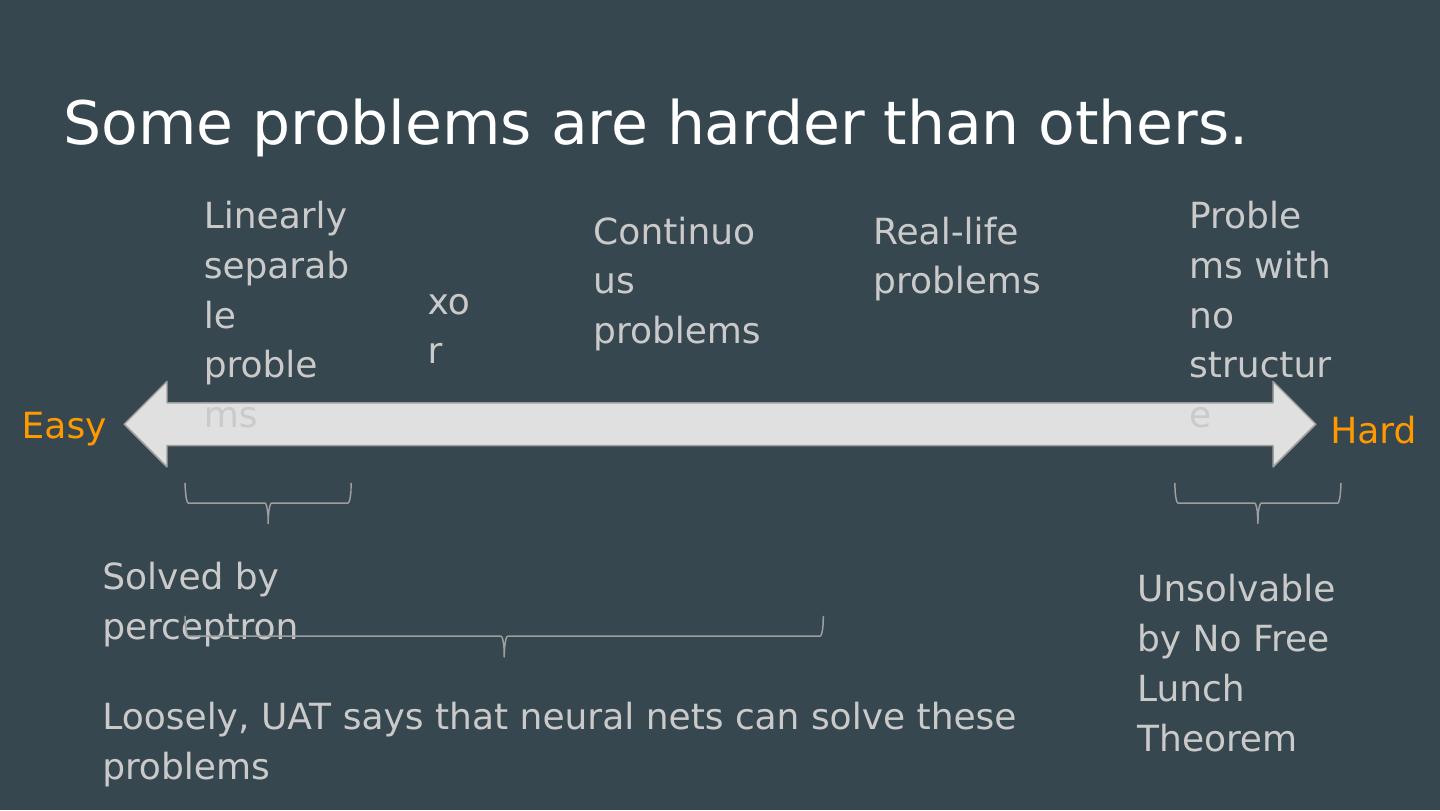

28 . No Free Lunch Theorem
