1-overview


1 . Overview

Introduction to Parallel Computing
CIS 410/510
Department of Computer and Information Science
Lecture 1 – Overview

2 . Outline

- Course Overview
  - What is CIS 410/510?
  - What is expected of you?
  - What will you learn in CIS 410/510?
- Parallel Computing
  - What is it?
  - What motivates it?
  - Trends that shape the field
  - Large-scale problems and high-performance
  - Parallel architecture types
  - Scalable parallel computing and performance

Introduction to Parallel Computing, University of Oregon, IPCC Lecture 1 – Overview

3 . How did the idea for CIS 410/510 originate?

- There has never been an undergraduate course in parallel computing in the CIS Department at UO
- Only one course is taught at the graduate level (CIS 631)
- The goal is to bring parallel computing education into the CIS undergraduate curriculum, starting at the senior level
  - CIS 410/510 (Spring 2014, “experimental” course)
  - CIS 431/531 (Spring 2015, “new” course)
- CIS 607 – Parallel Computing Course Development
  - Winter 2014 seminar to plan the undergraduate course
  - Develop 410/510 materials, exercises, labs, …
- Intel gave a generous donation ($100K) to the effort
- NSF and IEEE are spearheading a curriculum initiative for undergraduate education in parallel processing: http://www.cs.gsu.edu/~tcpp/curriculum/

4 . Who’s involved?

- Instructor
  - Allen D. Malony
    - scalable parallel computing
    - parallel performance analysis
    - taught CIS 631 for the last 10 years
- Faculty colleagues and course co-designers
  - Boyana Norris
    - Automated software analysis and transformation
    - Performance analysis and optimization
  - Hank Childs
    - Large-scale, parallel scientific visualization
    - Visualization of large data sets
- Intel scientists
  - Michael McCool, James Reinders, Bob MacKay
- Graduate students doing research in parallel computing

5 . Intel Partners

- James Reinders
  - Director, Software Products
  - Multi-core Evangelist
- Michael McCool
  - Software architect
  - Former Chief Scientist, RapidMind
  - Adjunct Assoc. Professor, University of Waterloo
- Arch Robison
  - Architect of Threading Building Blocks
  - Former lead developer of KAI C++
- David MacKay
  - Manager of software product consulting team

6 . CIS 410/510 Graduate Assistants

- Daniel Ellsworth
  - 3rd year Ph.D. student
  - Research advisor (Prof. Malony)
  - Large-scale online system introspection
- David Poliakoff
  - 2nd year Ph.D. student
  - Research advisor (Prof. Malony)
  - Compiler-based performance analysis
- Brandon Hildreth
  - 1st year Ph.D. student
  - Research advisor (Prof. Malony)
  - Automated performance experimentation

7 . Required Course Book

- “Structured Parallel Programming: Patterns for Efficient Computation,” Michael McCool, Arch Robison, James Reinders, 1st edition, Morgan Kaufmann, ISBN: 978-0-12-415993-8, 2012, http://parallelbook.com/
- Presents parallel programming from the point of view of patterns relevant to parallel computation
  - Map, Collectives, Data reorganization, Stencil and recurrence, Fork-Join, Pipeline
- Focuses on the use of shared memory parallel programming languages and environments
  - Intel Threading Building Blocks (TBB)
  - Intel Cilk Plus

8 . Reference Textbooks

- Introduction to Parallel Computing, A. Grama, A. Gupta, G. Karypis, V. Kumar, Addison Wesley, 2nd Ed., 2003
  - Lecture slides from the authors are online
  - Excellent reference list at the end
  - Used for CIS 631 before
  - Getting old for the latest hardware
- Designing and Building Parallel Programs, Ian Foster, Addison Wesley, 1995
  - The entire book is online
  - Historical book, but very informative
- Patterns for Parallel Programming, T. Mattson, B. Sanders, B. Massingill, Addison Wesley, 2005
  - Targets parallel programming
  - Pattern language approach to parallel program design and development
  - Excellent references

9 . What do you mean by experimental course?

- Given that this is the first offering of parallel computing in the undergraduate curriculum, we want to evaluate how well it works
- We would like to receive feedback from students throughout the course
  - Lecture content and understanding
  - Parallel programming learning experience
  - Book and other materials
- Your experiences will help us update the course for its debut offering in (hopefully) Spring 2015

10 . Course Plan

- Organize the course so that the lectures cover the main areas of parallel computing
  - Architecture (1 week)
  - Performance models and analysis (1 week)
  - Programming patterns (paradigms) (3 weeks)
  - Algorithms (2 weeks)
  - Tools (1 week)
  - Applications (1 week)
  - Special topics (1 week)
- Augment the lectures with a programming lab
  - Students will take the lab with the course
    - Graded assignments and the term project will be posted
  - Targeted specifically to shared memory parallelism

11 . Lectures

- The book and online materials are your main sources for broader and deeper background in parallel computing
- Lectures should be more interactive
  - Supplement other sources of information
  - Cover higher-priority topics
  - Intended to give you some of my perspective
  - Online access to lecture slides will be provided
- Lectures will complement the programming component, but are intended to cover other aspects of parallel computing
- We will try to arrange one or two guest lectures during the quarter

12 . Parallel Programming Lab

- Set up in the IPCC classroom
  - Daniel Ellsworth and David Poliakoff are leading the lab
- Shared memory parallel programming (everyone)
  - Cilk Plus (http://www.cilkplus.org/)
    - Extension to the C and C++ languages to support data and task parallelism
  - Threading Building Blocks (TBB) (https://www.threadingbuildingblocks.org/)
    - C++ template library for task parallelism
  - OpenMP (http://openmp.org/wp/)
    - C/C++ and Fortran directive-based parallelism
- Distributed memory message passing (graduate)
  - MPI (http://en.wikipedia.org/wiki/Message_Passing_Interface)
    - Library for message communication on scalable parallel systems

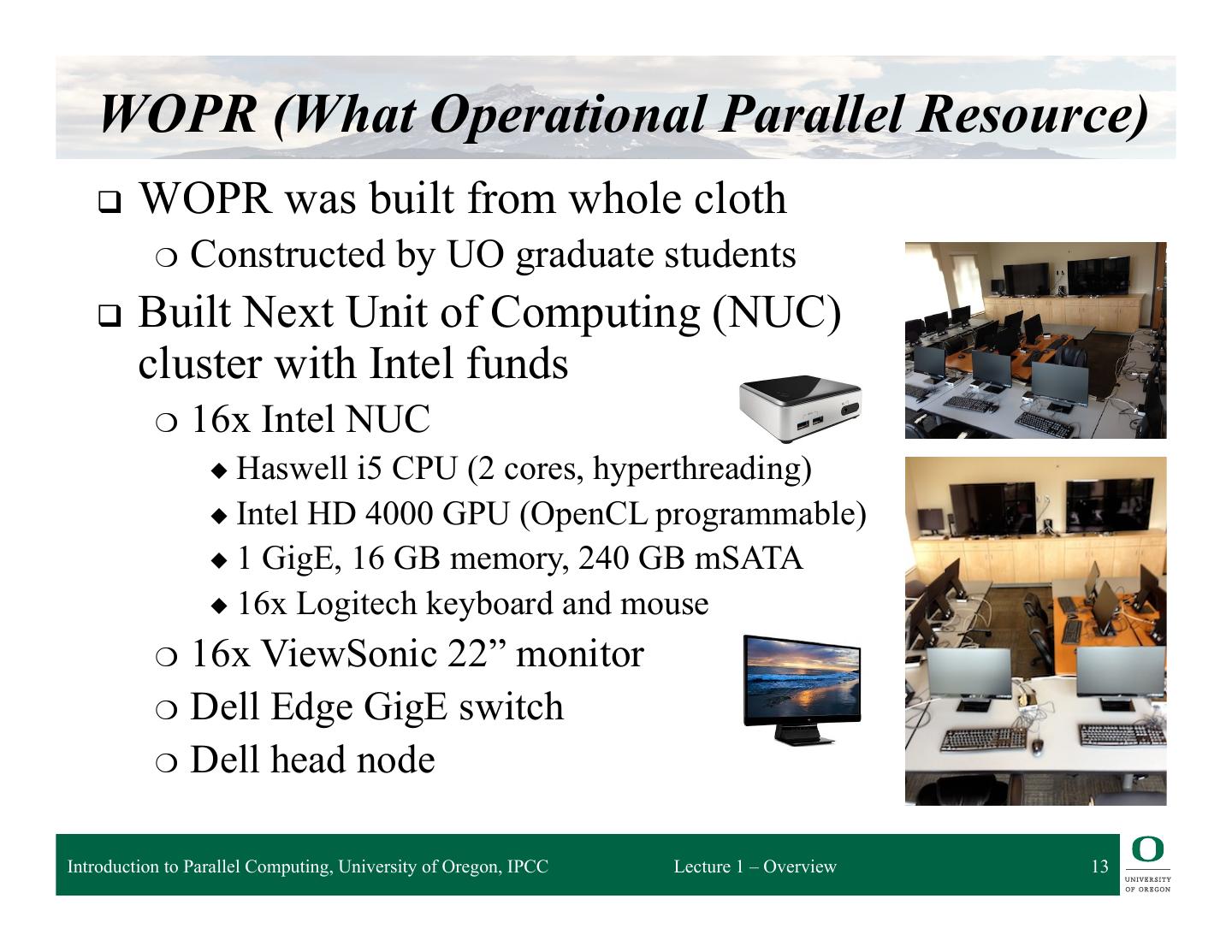

13 . WOPR (What Operational Parallel Resource)

- WOPR was built from whole cloth
  - Constructed by UO graduate students
- Built Next Unit of Computing (NUC) cluster with Intel funds
  - 16x Intel NUC
    - Haswell i5 CPU (2 cores, hyperthreading)
    - Intel HD 4000 GPU (OpenCL programmable)
    - 1 GigE, 16 GB memory, 240 GB mSATA
    - 16x Logitech keyboard and mouse
  - 16x ViewSonic 22” monitor
  - Dell Edge GigE switch
  - Dell head node

14 . Other Parallel Resources – Mist Cluster

- Distributed-memory cluster
- 16 nodes, 8 cores each
  - 2x quad-core Pentium Xeon (2.33 GHz)
  - 16 Gbyte memory
  - 160 Gbyte disk
- Dual Gigabit Ethernet adapters
- Master node (same specs)
- Gigabit Ethernet switch
- mist.nic.uoregon.edu

15 . Other Parallel Resources – ACISS Cluster

- Applied Computational Instrument for Scientific Synthesis
  - NSF MRI R2 award (2010)
- Basic nodes (1,536 total cores)
  - 128 ProLiant SL390 G7
  - Two Intel X5650 2.66 GHz 6-core CPUs per node
  - 72 GB DDR3 RAM per basic node
- Fat nodes (512 total cores)
  - 16 ProLiant DL580 G7
  - Four Intel X7560 2.266 GHz 8-core CPUs per node
  - 384 GB DDR3 per fat node
- GPU nodes (624 total cores, 156 GPUs)
  - 52 ProLiant SL390 G7 nodes, 3 NVIDIA M2070 GPUs per node (156 total GPUs)
  - Two Intel X5650 2.66 GHz 6-core CPUs per node (624 total cores)
  - 72 GB DDR3 per GPU node
- ACISS has 2,672 total cores
- ACISS is located in the UO Computing Center

16 . Course Assignments

- Homework
  - Exercises primarily to prepare for the midterm
- Parallel programming lab
  - Exercises for parallel programming patterns
  - Programs using Cilk Plus, Threading Building Blocks, and OpenMP
  - Graduate students will also do assignments with MPI
- Team term project
  - Programming, presentation, paper
  - Graduate students distributed across teams
- Research summary paper (graduate students)
- Midterm exam in the 7th week of the quarter
- No final exam
  - Team project presentations during the final exam period

17 . Parallel Programming Term Project

- Major programming project for the course
  - Non-trivial parallel application
  - Include performance analysis
  - Use the NUC cluster and possibly the Mist and ACISS clusters
- Project teams
  - 5-person teams, 6 teams (depending on enrollment)
  - We will try our best to balance skills
  - One graduate student per team
- Project dates
  - Proposal due at the end of the 4th week
  - Project talk during the last class
  - Project due at the end of the term
- You need to get system accounts!

18 . Term Paper (for graduate students)

- Investigate a parallel computing topic of interest
  - More in-depth review
  - Individual choice
  - Summary of major points
- Requires a minimum of ten references
  - The book and other references have large bibliographies
  - Google Scholar, keywords: parallel computing
  - NEC CiteSeer Scientific Literature Digital Library
- Paper abstract and references due by the 3rd week
- Final term paper due at the end of the term
- Individual work

19 . Grading

- Undergraduate
  - 5% homework
  - 10% pattern programming labs
  - 20% programming assignments
  - 30% midterm exam
  - 35% project
- Graduate
  - 15% programming assignments
  - 30% midterm exam
  - 35% project
  - 20% research paper

20 . Overview

- A broad and long-established field of computer science concerned with:
  - Architecture, HW/SW systems, languages, programming paradigms, algorithms, and theoretical models
  - Computing in parallel
- Performance is the raison d’être for parallelism
  - High-performance computing
  - Drives the computational science revolution
- Topics of study
  - Parallel architectures
  - Parallel performance
  - Parallel programming models and tools
  - Parallel algorithms
  - Parallel applications

21 . What will you get out of CIS 410/510?

- In-depth understanding of parallel computer design
- Knowledge of how to program parallel computer systems
- Understanding of pattern-based parallel programming
- Exposure to different forms of parallel algorithms
- Practical experience using a parallel cluster
- Background on parallel performance modeling
- Techniques for empirical performance analysis
- Fun and new friends

22 . Parallel Processing – What is it?

- A parallel computer is a computer system that uses multiple processing elements simultaneously in a cooperative manner to solve a computational problem
- Parallel processing includes techniques and technologies that make it possible to compute in parallel
  - Hardware, networks, operating systems, parallel libraries, languages, compilers, algorithms, tools, …
- Parallel computing is an evolution of serial computing
  - Parallelism is natural
  - Computing problems differ in level / type of parallelism
- Parallelism is all about performance! Really?

23 . Concurrency

- Consider multiple tasks to be executed in a computer
- Tasks are concurrent with respect to each other if
  - They can execute at the same time (concurrent execution)
  - This implies that there are no dependencies between the tasks
- Dependencies
  - If a task requires results produced by other tasks in order to execute correctly, the task’s execution is dependent
  - If two tasks are dependent, they are not concurrent
  - Some form of synchronization must be used to enforce (satisfy) dependencies
- Concurrency is fundamental to computer science
  - Operating systems, databases, networking, …

24 . Concurrency and Parallelism

- Concurrent is not the same as parallel! Why?
- Parallel execution
  - Concurrent tasks actually execute at the same time
  - Multiple (processing) resources have to be available
- Parallelism = concurrency + “parallel” hardware
  - Both are required
  - Find concurrent execution opportunities
  - Develop the application to execute in parallel
  - Run the application on parallel hardware
- Is a parallel application a concurrent application?
- Is a parallel application run with one processor parallel? Why or why not?

25 . Parallelism

- There are granularities of parallelism (parallel execution) in programs
  - Processes, threads, routines, statements, instructions, …
  - Think about which software elements execute concurrently
- These must be supported by hardware resources
  - Processors, cores, … (execution of instructions)
  - Memory, DMA, networks, … (other associated operations)
  - All aspects of computer architecture offer opportunities for parallel hardware execution
- Concurrency is a necessary condition for parallelism
  - Where can you find concurrency?
  - How is concurrency expressed to exploit parallel systems?

26 . Why use parallel processing?

- Two primary reasons (both performance related)
  - Faster time to solution (response time)
  - Solve bigger computing problems (in the same time)
- Other factors motivate parallel processing
  - Effective use of machine resources
  - Cost efficiencies
  - Overcoming memory constraints
- Serial machines have inherent limitations
  - Processor speed, memory bottlenecks, …
- Parallelism has become the future of computing
- Performance is still the driving concern
- Parallelism = concurrency + parallel HW + performance
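The first reason, faster time to solution, is commonly quantified as speedup and efficiency. These standard definitions are added here for illustration: $T_1$ is the run time on one processor, $T_p$ the run time on $p$ processors, and the numbers in the worked line are hypothetical, not measurements from the course machines.

```latex
S_p = \frac{T_1}{T_p}, \qquad E_p = \frac{S_p}{p}

% Hypothetical example: T_1 = 120\,\mathrm{s},\ T_8 = 20\,\mathrm{s},\ p = 8
S_8 = \frac{120\,\mathrm{s}}{20\,\mathrm{s}} = 6, \qquad E_8 = \frac{6}{8} = 0.75
```

An efficiency below 1 means the $p$ processors did not all contribute fully to reducing the run time.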

27 . Perspectives on Parallel Processing

- Parallel computer architecture
  - What hardware is needed for parallel execution?
  - Computer system design
- (Parallel) Operating system
  - How to manage system aspects in a parallel computer
- Parallel programming
  - Libraries (low-level, high-level)
  - Languages
  - Software development environments
- Parallel algorithms
- Parallel performance evaluation
- Parallel tools
  - Performance, analytics, visualization, …

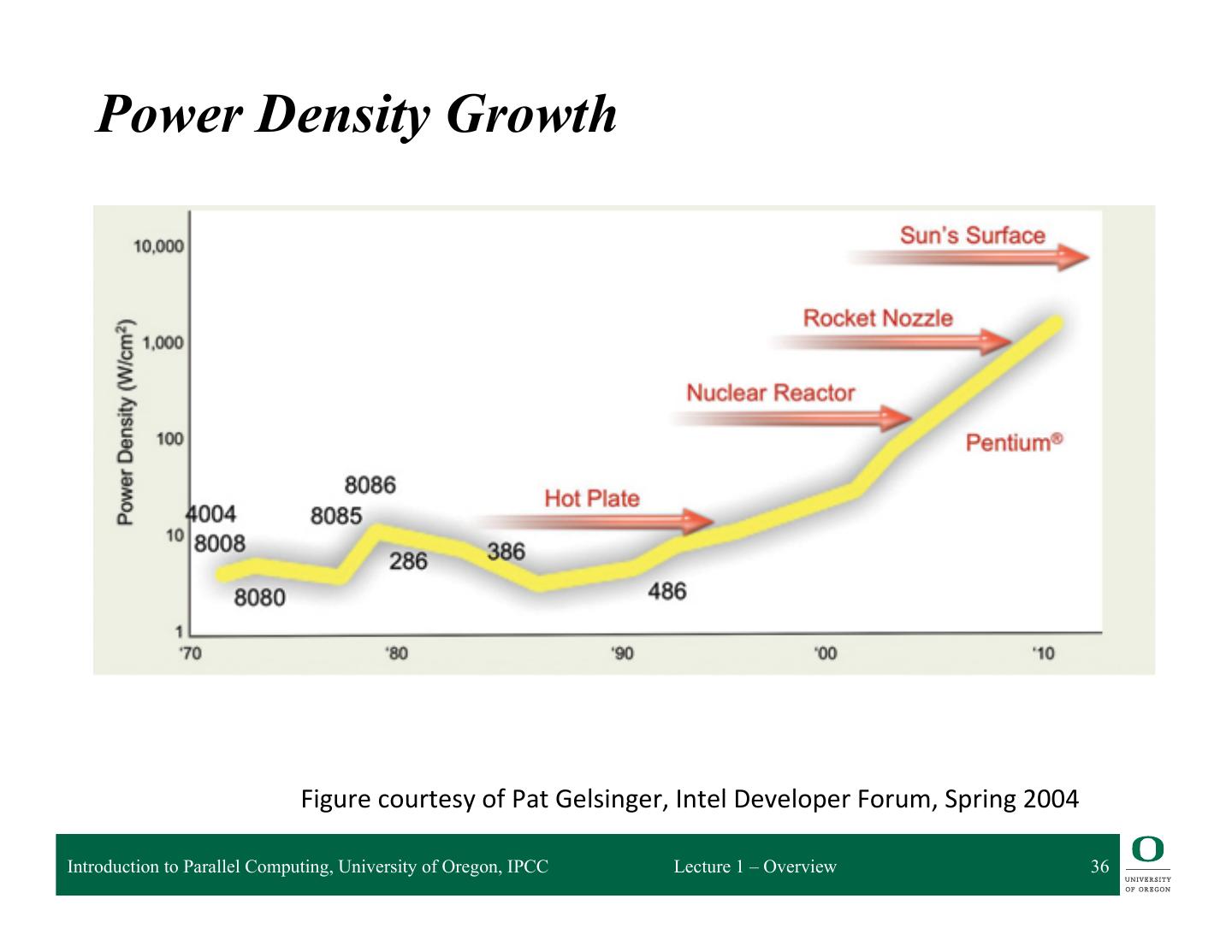

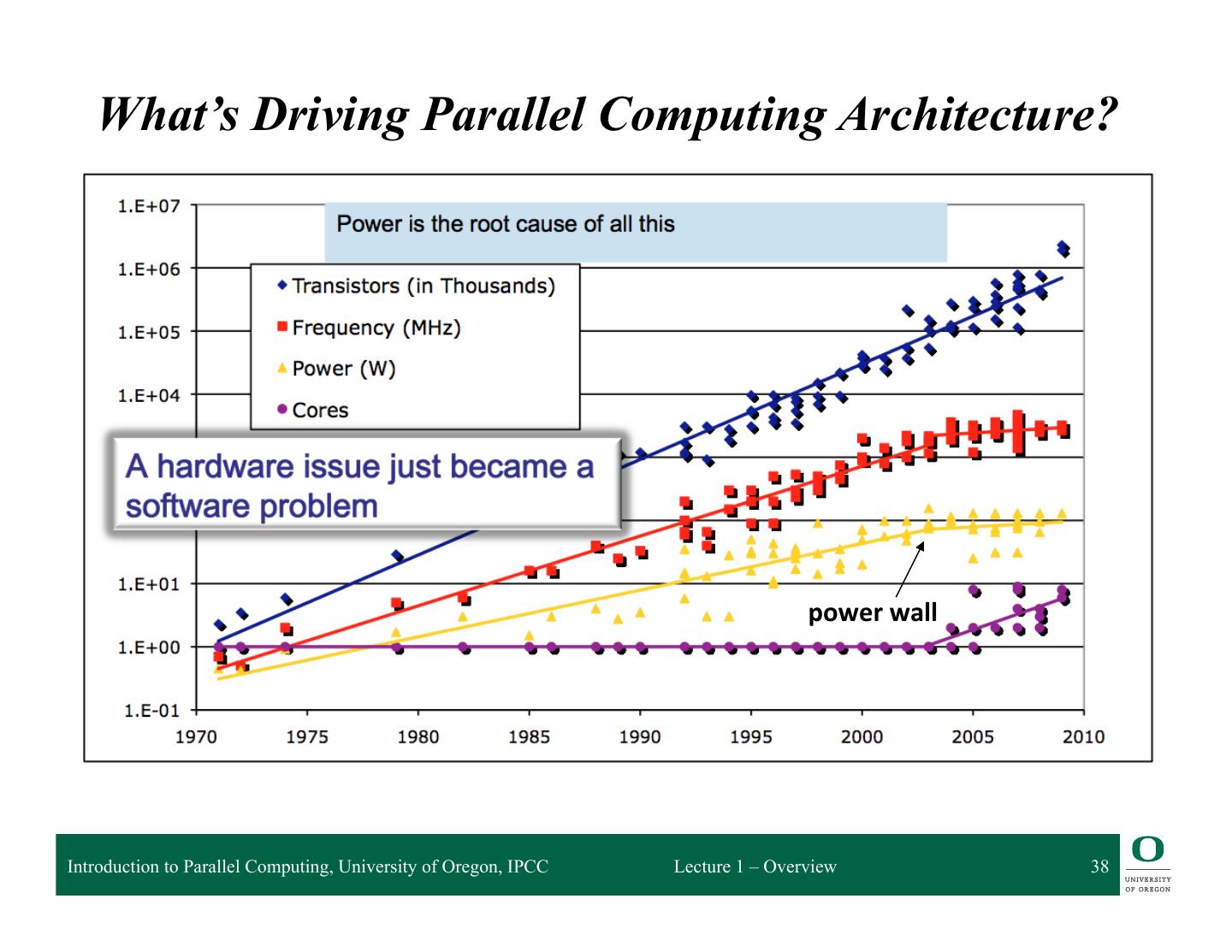

28 . Why study parallel computing today?

- Computing architecture
  - Innovations often drive novel programming models
- Technological convergence
  - The “killer micro” is ubiquitous
  - Laptops and supercomputers are fundamentally similar!
  - Trends cause diverse approaches to converge
- Technological trends make parallel computing inevitable
  - Multi-core processors are here to stay!
  - Practically every computing system is operating in parallel
- Understand fundamental principles and design tradeoffs
  - Programming, systems support, communication, memory, …
  - Performance
- Parallelism is the future of computing

29 . Inevitability of Parallel Computing

- Application demands
  - Insatiable need for computing cycles
- Technology trends
  - Processor and memory
- Architecture trends
- Economics
- Current trends:
  - Today’s microprocessors have multiprocessor support
  - Servers and workstations are available as multiprocessors
  - Tomorrow’s microprocessors are multiprocessors
  - Multi-core is here to stay and #cores/processor is growing
  - Accelerators (GPUs, gaming systems)