- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

07 Transactional Recovery: Extensions and Applications

展开查看详情

1 . Today’s Papers • Segment-Based Recovery: Write-ahead Logging Revisited EECS 262a Sears and Brewer. Appears in Proceedings of the VLDB Endowment, Advanced Topics in Computer Systems Vol 2, No. 1, August 2009, pp 490-501 • Lightweight Recoverable Virtual Memory Lecture 7 M. Satyanarayanan, Henry H. Mashburn, Puneet Kumar, David C. Steere, and James J. Kistler. Appears in Proceedings of the 14th ACM Symposium on Operating Systems Principles (SOSP 1993) SOR & LRVM September 13th, 2018 • Thoughts? John Kubiatowicz Electrical Engineering and Computer Sciences University of California, Berkeley http://www.eecs.berkeley.edu/~kubitron/cs262 9/13/2018 Cs262a-F18 Lecture-07 2 Segment-Oriented Recovery Original Goals (Stasis) • Build an open-source transaction system with full ARIES- style steal/no-force transactions • Try to make the DBMS more “layered” in the OS style • Try to support a wider array of transactional systems: version control, file systems, bioinformatics or science databases, graph problems, search engines, persistent • ARIES works great but is 20+ years old and has some objects, ... problems: • Competitive performance – Viewed as very complex • These goals were mostly met, although the open-source – No available implementations with source code version needs more users and they have only tried some – Part of a monolithic DBMS: can you use reuse transactions for other systems? of the usual applications – LSN in the page breaks up large objects (Fig 1), prevents efficient I/O • Original version was basically straight ARIES • DBMS seems hard to scale for cloud computing (except – Development led to many insights and the new version based on segments by partitioning) 9/13/2018 Cs262a-F18 Lecture-07 3 9/13/2018 Cs262a-F18 Lecture-07 4

2 . Segment-Oriented Recovery (SOR) The Big Four Positives of SOR • A segment is just a range of bytes 1) Enable DMA and/or zero-copy I/O – May span multiple pages – May have many per page – Analogous to segments and pages in computer architecture (but arch uses 2) Fix persistent objects segments for protection boundaries, SOR uses them for recovery boundaries; in both, pages are fixed size and the unit of movement to disk) 3) Enable high/low priority transactions (log reordering • Key idea – change two of the core ARIES design points: on the fly) – Recover segments not pages – No LSNs on pages » ARIES: LSN per page enable exactly once redo semantics 4) Decouple the components; enable cloud computing » SOR: estimate the LSN conservatively (older) => at least once versions semantics [but must now limit the actions we can take in redo] 9/13/2018 Cs262a-F18 Lecture-07 5 9/13/2018 Cs262a-F18 Lecture-07 6 1) Enable DMA and/or Zero-Copy I/O 2) Fix Persistent Objects • No LSNs per page mean that large objects are • Problem: consider page with multiple objects, each of contiguous on disk which has an in memory representation (e.g., a C++ or Java object) – Suppose we update object A and generate a log entry with LSN=87 • No “segmentation and reassembly” to move objects – Next we update object B (on the same page), generate its log entry with to/from the disk LSN=94, and write it back to the disk page, updating the page LSN – This pattern breaks recovery: the new page LSN (94) implies that the page reflects redo log entry 87, but it does not. • No need to involve the CPU in object I/O (very • ARIES “solution”: expensive); use DMA instead – Disk pages (in memory) must be updated on every log write; this is “write through caching” – all updates are written through to the buffer manager page • Any downsides? • SOR solution: – There is no page LSN and thus no error – Buffer manager is written on cache eviction – “write back caching”. This implies much less copying/ marshaling for hot objects. – This is like “no force” between the app cache and the buffer manager (!) 9/13/2018 Cs262a-F18 Lecture-07 7 9/13/2018 Cs262a-F18 Lecture-07 8

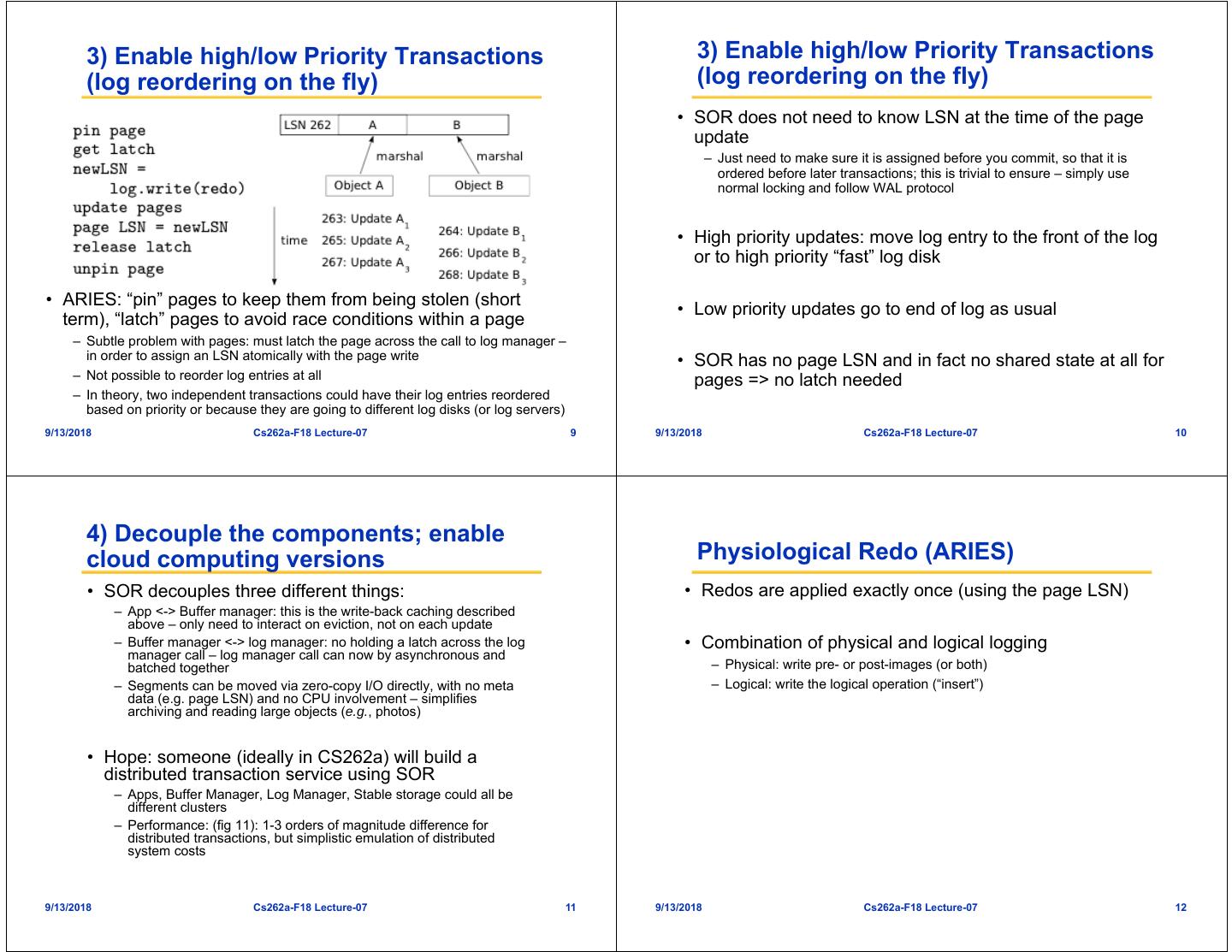

3 . 3) Enable high/low Priority Transactions 3) Enable high/low Priority Transactions (log reordering on the fly) (log reordering on the fly) • SOR does not need to know LSN at the time of the page update – Just need to make sure it is assigned before you commit, so that it is ordered before later transactions; this is trivial to ensure – simply use normal locking and follow WAL protocol • High priority updates: move log entry to the front of the log or to high priority “fast” log disk • ARIES: “pin” pages to keep them from being stolen (short • Low priority updates go to end of log as usual term), “latch” pages to avoid race conditions within a page – Subtle problem with pages: must latch the page across the call to log manager – in order to assign an LSN atomically with the page write • SOR has no page LSN and in fact no shared state at all for – Not possible to reorder log entries at all pages => no latch needed – In theory, two independent transactions could have their log entries reordered based on priority or because they are going to different log disks (or log servers) 9/13/2018 Cs262a-F18 Lecture-07 9 9/13/2018 Cs262a-F18 Lecture-07 10 4) Decouple the components; enable cloud computing versions Physiological Redo (ARIES) • SOR decouples three different things: • Redos are applied exactly once (using the page LSN) – App <-> Buffer manager: this is the write-back caching described above – only need to interact on eviction, not on each update – Buffer manager <-> log manager: no holding a latch across the log • Combination of physical and logical logging manager call – log manager call can now by asynchronous and batched together – Physical: write pre- or post-images (or both) – Segments can be moved via zero-copy I/O directly, with no meta – Logical: write the logical operation (“insert”) data (e.g. page LSN) and no CPU involvement – simplifies archiving and reading large objects (e.g., photos) • Hope: someone (ideally in CS262a) will build a distributed transaction service using SOR – Apps, Buffer Manager, Log Manager, Stable storage could all be different clusters – Performance: (fig 11): 1-3 orders of magnitude difference for distributed transactions, but simplistic emulation of distributed system costs 9/13/2018 Cs262a-F18 Lecture-07 11 9/13/2018 Cs262a-F18 Lecture-07 12

4 . SOR Recovery SOR Redo • Redos are physical • Redos may applied more than once; we go back • Normal undos are like redos and set a new LSN farther in time than strictly necessary (does not revert to the old LSN) – Wouldn’t work given multiple objects per page! • Redos must be physical “blind writes” – write content • To enable more concurrency, do not undo structural that do not depend on the previous contents changes of a B-Tree (or other index) – Instead of a physical undo, issue a new logical undo that is the inverse operation • Undos can still be logical for concurrency – Enables concurrency because we only hold short locks for the structural change rather than long locks (until the end of the transaction) • Slotted page layout changes require redo logging • Slotted pages: – Add an array of offsets to each page (slots), then store records with a slot number and use the array to look up the current offset for that record – Allows changing the page layout without any log entries 9/13/2018 Cs262a-F18 Lecture-07 13 9/13/2018 Cs262a-F18 Lecture-07 14 Core SOR Redo Phase Hybrid Recovery • Periodically write estimated LSNs to log (after you • Can mix SOR and traditional ARIES write back a page) • Some pages have LSNs, some don’t • Start from disk version of the segment (or from snapshot or whole segment write) • Can’t easily look at a page and tell! – All the bytes are used for segments • Replay all redos since estimated LSN (worst case the beginning of the truncated log) • Log page type changes and zero out the page – Even though some might have been applied already – Recovery may actually corrupt a page temporarily until it gets the type correct, at which point it rolls forward correctly from the all zero page • For all bytes of the segment: • Example of when to use: – Either it was correct on disk and not changed, – B-Trees: internal nodes on pages, leaves are segments – Or it was written during recovery in order by time (and thus – Tables of strings: short strings good for pages especially if they change correctly reflects the last log entry to touch that byte) size; long strings are good for segments due to contiguous layout 9/13/2018 Cs262a-F18 Lecture-07 15 9/13/2018 Cs262a-F18 Lecture-07 16

5 . Summary Extra: Why are there LSNs on pages? • 3 key features: • So that we can atomically write the timestamp (LSN) – Open-source implementation that decouples components while with the page improving performance and efficiency • Problem: page writes aren’t actually atomic anymore » https://code.google.com/p/stasis/ – Preserve ARIES semantics (compatibility is a bonus benefit) • Solution: make them atomic so that ARIES still works – Enable QoS for transactions – Write some bits into all sectors of a page (8K page = 16 512B sectors); compare those bits with a value store somewhere else. No match => recover the page (but may match and be wrong). Assumes sectors are written atomically, which is reasonable. • Some flaws: – Write a checksum for each page (including the LSN) and check it – “Research” implementation: SOR is only one component of a when reading back the page DBMS system, not a complete practical system implementation – Write-back experiment: simple datatype, no error bars, y-axis • Both solutions impact I/O performance some scale, … 9/13/2018 Cs262a-F18 Lecture-07 17 9/13/2018 Cs262a-F18 Lecture-07 18 SOR Approach Is this a good paper? • Checksum each segment (or group of segments if • What were the authors’ goals? they are small) • What about the evaluation / metrics? • On checksum failure, can replay last redo, which can • Did they convince you that this was a good system fix a torn page (and then confirm using the check- /approach? sum); if that doesn’t work go back to old version and roll forward • Were there any red-flags? • Blind writes can fix corrupted pages since they do not • What mistakes did they make? depend on its contents • Does the system/approach meet the “Test of Time” challenge? • How would you review this paper today? 9/13/2018 Cs262a-F18 Lecture-07 19 9/13/2018 Cs262a-F18 Lecture-07 20

6 . Lightweight Recoverable Virtual Memory (LRVM) • Eppinger Thesis (in Related Work [9]): – RVM ... poses and answers the question "What is the simplest realization of essential transactional properties for the average application?" By doing so, it makes BREAK transactions accessible to applications that have hitherto balked at the baggage that comes with sophisticated transactional facilities. • Answer: – Library implementing No-Steal, No-Force virtual memory persistence, with manual copy-on-write and redo-only logs 9/13/2018 Cs262a-F18 Lecture-07 22 LRVM Lessons from Camelot • Goal: • Camelot had an “ugly” object and process model – its – Allow Unix applications to manipulate persistent data structures componentization led to multiple address spaces and lots of (such as the meta data for a file system) in a manner that has expensive IPC (~600x local call on RISC) clear-cut failure semantics – Too tied to Mach and complicated log management for multiple applications • Heavyweight facilities impose additional onerous • Existing solutions programming constraints – kernel threads vs. user-level threads – Such as Camelot, were too heavyweight, cumbersome to use, and imposed programming constraints • Size and complexity of Camelot and its dependence on – Wanted a ‘lite’ version of these facilities that didn't also provide special Mach features resulted in maintenance headaches (unneeded) support for distributed and nested transactions, and lack of portability shared logs, etc. – The former of these two shouldn't be an issue in a “production” system • However, note that the golden age of CMU Systems learned • Solution a lot from the sharing of artifacts: Mach, AFS, Coda... – A library package that provides only recoverable virtual memory – A lot of positive spirit in this paper 9/13/2018 Cs262a-F18 Lecture-07 23 9/13/2018 Cs262a-F18 Lecture-07 24

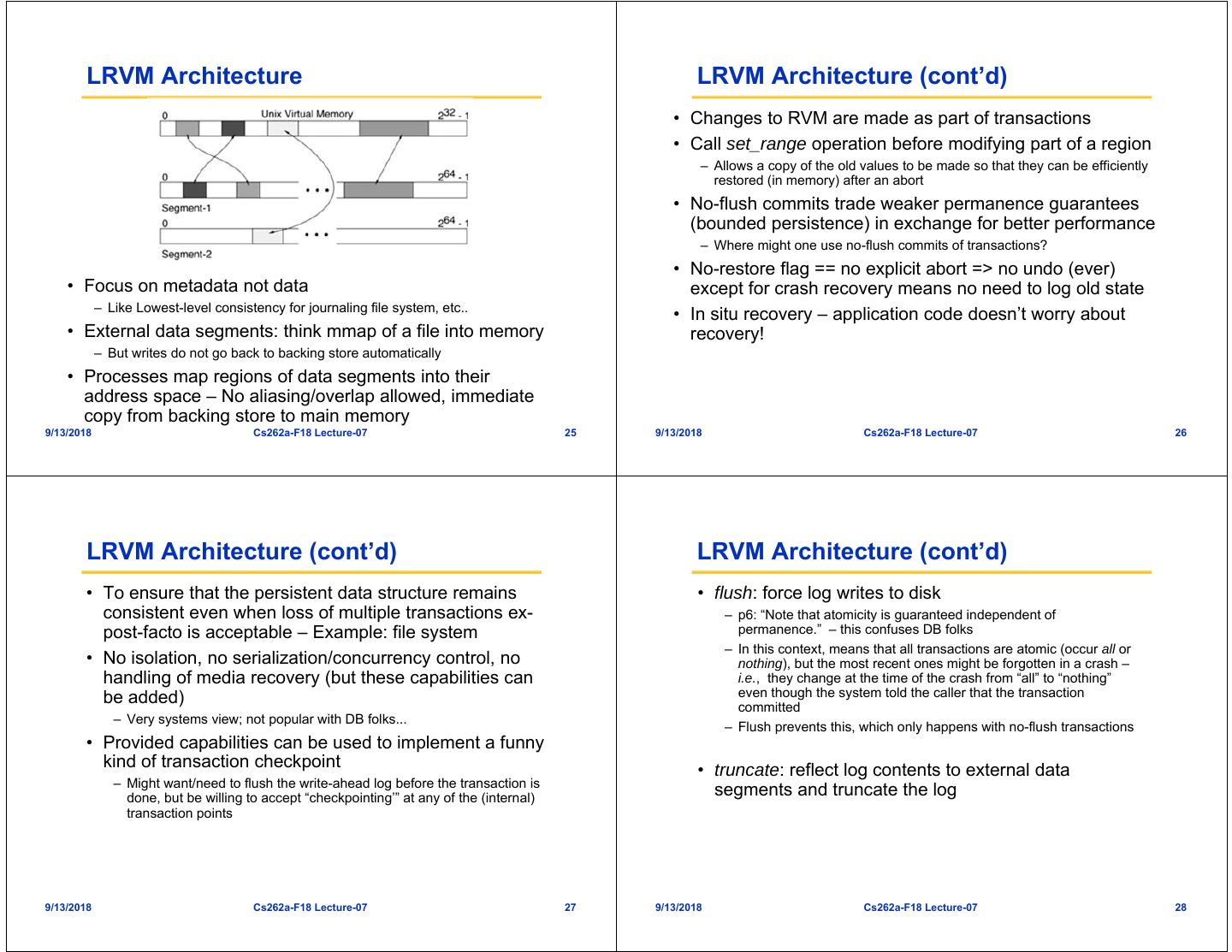

7 . LRVM Architecture LRVM Architecture (cont’d) • Changes to RVM are made as part of transactions • Call set_range operation before modifying part of a region – Allows a copy of the old values to be made so that they can be efficiently restored (in memory) after an abort • No-flush commits trade weaker permanence guarantees (bounded persistence) in exchange for better performance – Where might one use no-flush commits of transactions? • No-restore flag == no explicit abort => no undo (ever) • Focus on metadata not data except for crash recovery means no need to log old state – Like Lowest-level consistency for journaling file system, etc.. • In situ recovery – application code doesn’t worry about • External data segments: think mmap of a file into memory recovery! – But writes do not go back to backing store automatically • Processes map regions of data segments into their address space – No aliasing/overlap allowed, immediate copy from backing store to main memory 9/13/2018 Cs262a-F18 Lecture-07 25 9/13/2018 Cs262a-F18 Lecture-07 26 LRVM Architecture (cont’d) LRVM Architecture (cont’d) • To ensure that the persistent data structure remains • flush: force log writes to disk consistent even when loss of multiple transactions ex- – p6: “Note that atomicity is guaranteed independent of post-facto is acceptable – Example: file system permanence.” – this confuses DB folks – In this context, means that all transactions are atomic (occur all or • No isolation, no serialization/concurrency control, no nothing), but the most recent ones might be forgotten in a crash – handling of media recovery (but these capabilities can i.e., they change at the time of the crash from “all” to “nothing” be added) even though the system told the caller that the transaction committed – Very systems view; not popular with DB folks... – Flush prevents this, which only happens with no-flush transactions • Provided capabilities can be used to implement a funny kind of transaction checkpoint • truncate: reflect log contents to external data – Might want/need to flush the write-ahead log before the transaction is done, but be willing to accept “checkpointing’” at any of the (internal) segments and truncate the log transaction points 9/13/2018 Cs262a-F18 Lecture-07 27 9/13/2018 Cs262a-F18 Lecture-07 28

8 . LRVM Implementation LRVM Optimizations • Log only contains new values because uncommitted • Intra-transaction: Coalescing set_range changes never get written to external data segments address ranges is important since – No undo/redo operations programmers have to program defensively – Distinguishes between external data segment (master copy) and VM backing store (durable temp copy) => can STEAL by propagating to VM without need for UNDO (since you haven’t written over the master copy yet) • Crash recovery and log truncation: see paper section 5.1.2 • Inter-transaction: Coalesce no-flush log • Missing “set range” call is very bad – nasty non-deterministic records at (flush) commit time bugs that show up only during some crashes... – E.g., for a multi-file copy to same directory only care about last update (subsumes earlier ones) – Might use static analysis to verify set range usage... • Write-ahead logging: log intent then do idempotent updates to master copy – keep retrying update until it succeeds 9/13/2018 Cs262a-F18 Lecture-07 29 9/13/2018 Cs262a-F18 Lecture-07 30 LRVM Performance 3 Key Features about the Paper • Beats Camelot across the board • Goal: – A facility that allows programs to manipulate persistent data structures in a manner that has clear-cut failure semantics • Lack of integration with VM does not appear to be a significant problem as long as: • Original experience with a heavyweight, fully general – Ratio of RVM / Physical memory is small (<70%, but <40% best for transaction support facility led to a project to build a good locality and <25% for poor locality applications) lightweight facility that provides only recoverable – VM pageouts are rare virtual memory (since that is all that was needed for – Slower startup is acceptable (read entire RVM instead of on-demand) the above-mentioned goal) • Log traffic optimizations provide significant savings • Lightweight facility provided the desired functionality in about 10K lines of code vs 60K Camelot, with (20-30%), though not multiple factors savings significantly better performance and portability 9/13/2018 Cs262a-F18 Lecture-07 31 9/13/2018 Cs262a-F18 Lecture-07 32

9 . A Flaw Distributed Transactions (background) • The paper describes how a general facility can be reduced • Two models for committing a transaction: and simplified in order to support a narrower applications – One-phase: used by servers that maintain only volatile state. Servers domain only send an end request to such servers (i.e., they don't participate in the voting phase of commit) • Although it argues that the more general functionality could – Two-phase: used by servers that maintain recoverable state. Servers be regained by building additional layers on top of the send both vote and end requests to such servers reduced facility, this hasn't been demonstrated • Also allows for “persistent errors” – errors in a set range • 4 different kinds of votes may be returned: region aren’t fixable by reboot... they last until fixed by hand – Vote-abort • A lesson: – Vote-commit-read-only: participant has not modified recoverable data and drops out of phase two of commit protocol – When building a general OS facility, pick one (or a very few) thing(s) and do it well rather than providing a general facility that offers many – Vote-commit-volatile: participant has not modified recoverable data, things done poorly but wants to know outcome of the transaction – Vote-commit-recoverable: participant has modified recoverable data 9/13/2018 Cs262a-F18 Lecture-07 33 9/13/2018 Cs262a-F18 Lecture-07 34 Is this a good paper? • What were the authors’ goals? • What about the evaluation/metrics? • Did they convince you that this was a good system/approach? • Were there any red-flags? • What mistakes did they make? • Does the system/approach meet the “Test of Time” challenge? • How would you review this paper today? 9/13/2018 Cs262a-F18 Lecture-07 35