- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

06 Static Scheduling,Scoreboard

展开查看详情

1 . CS252 Review: Precise Interrupts/Exceptions Graduate Computer Architecture • An interrupt or exception is considered precise if there Lecture 6 is a single instruction (or interrupt point) for which: – All instructions before that have committed their state – No following instructions (including the interrupting instruction) Static Scheduling, have modified any state. Scoreboard • This means, that you can restart execution at the February 6th, 2012 interrupt point and “get the right answer” – Implicit in our previous example of a device interrupt: » Interrupt point is at first lw instruction John Kubiatowicz External Interrupt Electrical Engineering and Computer Sciences add r1,r2,r3 Int handler subi r4,r1,#4 University of California, Berkeley slli r4,r4,#2 lw r2,0(r4) lw r3,4(r4) http://www.eecs.berkeley.edu/~kubitron/cs252 add r2,r2,r3 sw 8(r4),r2 2/06/2012 cs252-S12, Lecture06 2 Can we make CPI closer to 1? FP Loop: Where are the Hazards? • Let’s assume full pipelining: Loop: LD F0,0(R1) ;F0=vector element – If we have a 4-cycle latency, then we need 3 instructions ADDD F4,F0,F2 ;add scalar from F2 between a producing instruction and its use: SD 0(R1),F4 ;store result SUBI R1,R1,8 ;decrement pointer 8B (DW) multf $F0,$F2,$F4 BNEZ R1,Loop ;branch R1!=zero delay-1 delay-2 NOP ;delayed branch slot delay-3 addf $F6,$F10,$F0 Earliest forwarding for Instruction Instruction Execution Latency in Use Latency in 4-cycle instructions producing result using result clock cycles clock cycles FP ALU op Another FP ALU op 4 3 Earliest forwarding for FP ALU op Store double 4 2 1-cycle instructions Load double FP ALU op 2 1 Load double Store double 2 0 Fetch Decode Ex1 Ex2 Ex3 Ex4 WB Integer op Integer op 1 0 addf delay3 delay2 delay1 multf • Where are the stalls? 2/06/2012 cs252-S12, Lecture06 3 2/06/2012 cs252-S12, Lecture06 4

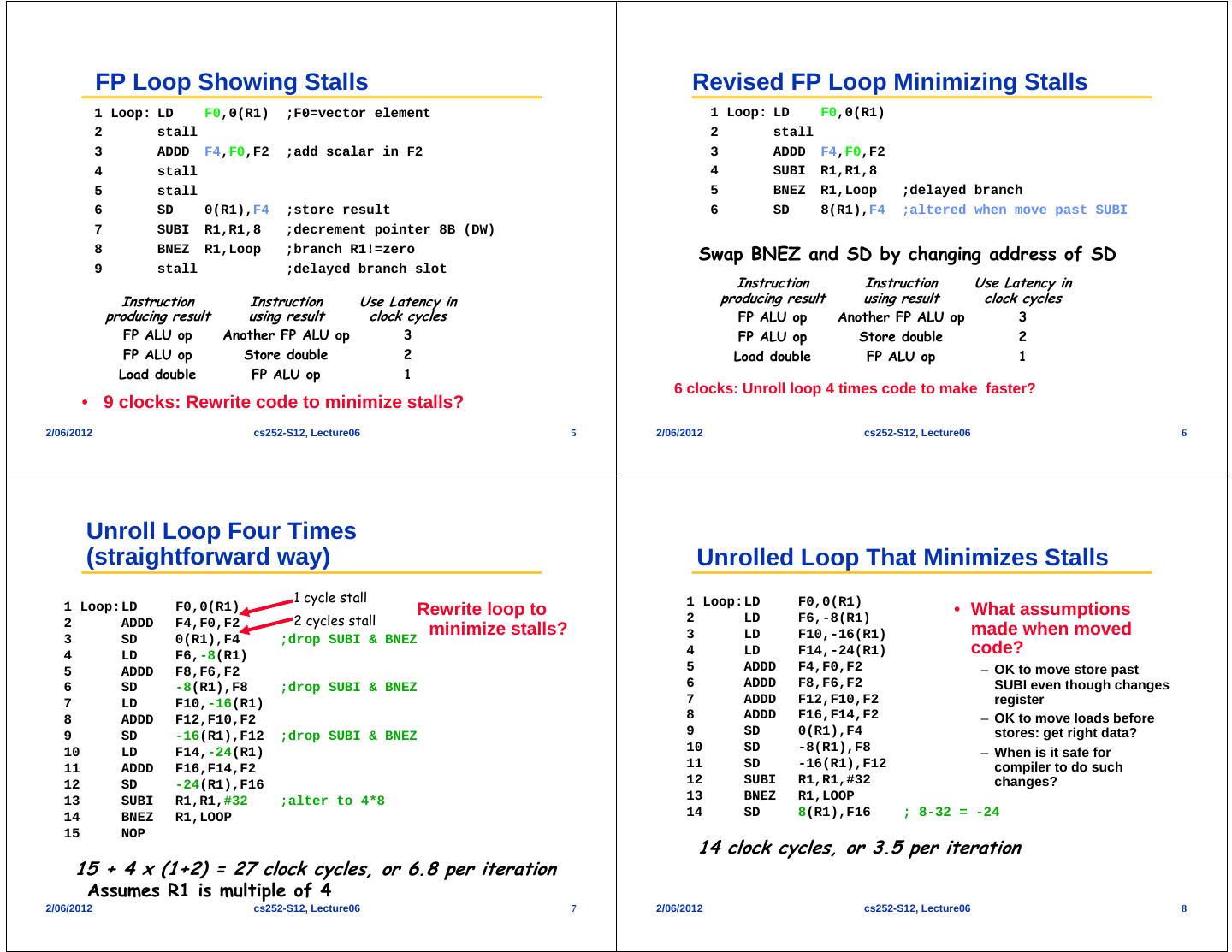

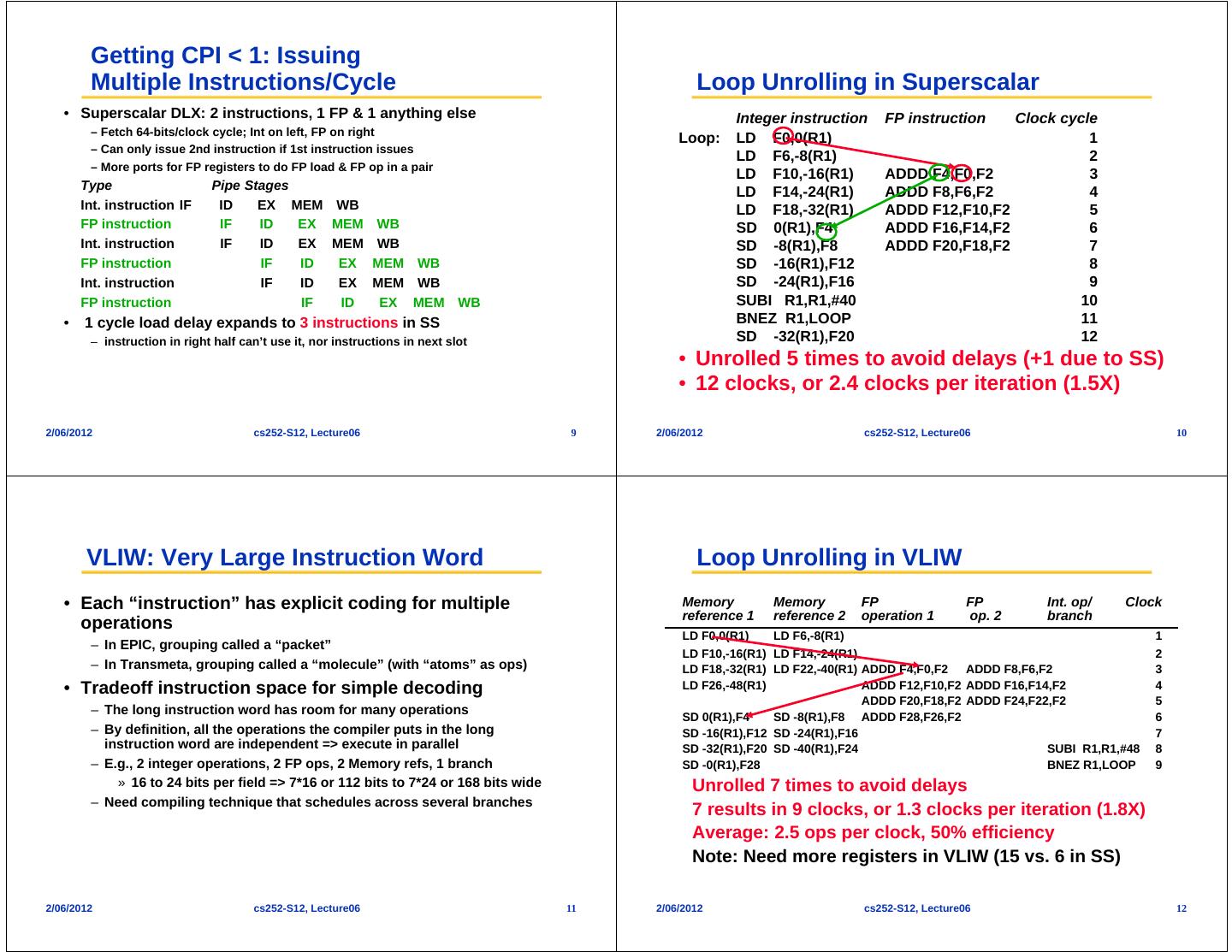

2 . FP Loop Showing Stalls Revised FP Loop Minimizing Stalls 1 Loop: LD F0,0(R1) ;F0=vector element 1 Loop: LD F0,0(R1) 2 stall 2 stall 3 ADDD F4,F0,F2 ;add scalar in F2 3 ADDD F4,F0,F2 4 stall 4 SUBI R1,R1,8 5 stall 5 BNEZ R1,Loop ;delayed branch 6 SD 0(R1),F4 ;store result 6 SD 8(R1),F4 ;altered when move past SUBI 7 SUBI R1,R1,8 ;decrement pointer 8B (DW) 8 BNEZ R1,Loop ;branch R1!=zero Swap BNEZ and SD by changing address of SD 9 stall ;delayed branch slot Instruction Instruction Use Latency in Instruction Instruction Use Latency in producing result using result clock cycles producing result using result clock cycles FP ALU op Another FP ALU op 3 FP ALU op Another FP ALU op 3 FP ALU op Store double 2 FP ALU op Store double 2 Load double FP ALU op 1 Load double FP ALU op 1 6 clocks: Unroll loop 4 times code to make faster? • 9 clocks: Rewrite code to minimize stalls? 2/06/2012 cs252-S12, Lecture06 5 2/06/2012 cs252-S12, Lecture06 6 Unroll Loop Four Times (straightforward way) Unrolled Loop That Minimizes Stalls 1 cycle stall 1 Loop:LD F0,0(R1) 1 Loop:LD F0,0(R1) Rewrite loop to • What assumptions 2 ADDD F4,F0,F2 2 cycles stall 2 LD F6,-8(R1) 3 SD 0(R1),F4 ;drop SUBI & BNEZ minimize stalls? 3 LD F10,-16(R1) made when moved 4 LD F6,-8(R1) 4 LD F14,-24(R1) code? 5 ADDD F8,F6,F2 5 ADDD F4,F0,F2 – OK to move store past 6 SD -8(R1),F8 ;drop SUBI & BNEZ 6 ADDD F8,F6,F2 SUBI even though changes 7 LD F10,-16(R1) 7 ADDD F12,F10,F2 register 8 ADDD F12,F10,F2 8 ADDD F16,F14,F2 – OK to move loads before 9 SD -16(R1),F12 ;drop SUBI & BNEZ 9 SD 0(R1),F4 stores: get right data? 10 LD F14,-24(R1) 10 SD -8(R1),F8 – When is it safe for 11 ADDD F16,F14,F2 11 SD -16(R1),F12 compiler to do such 12 SD -24(R1),F16 12 SUBI R1,R1,#32 changes? 13 SUBI R1,R1,#32 ;alter to 4*8 13 BNEZ R1,LOOP 14 BNEZ R1,LOOP 14 SD 8(R1),F16 ; 8-32 = -24 15 NOP 14 clock cycles, or 3.5 per iteration 15 + 4 x (1+2) = 27 clock cycles, or 6.8 per iteration Assumes R1 is multiple of 4 2/06/2012 cs252-S12, Lecture06 7 2/06/2012 cs252-S12, Lecture06 8

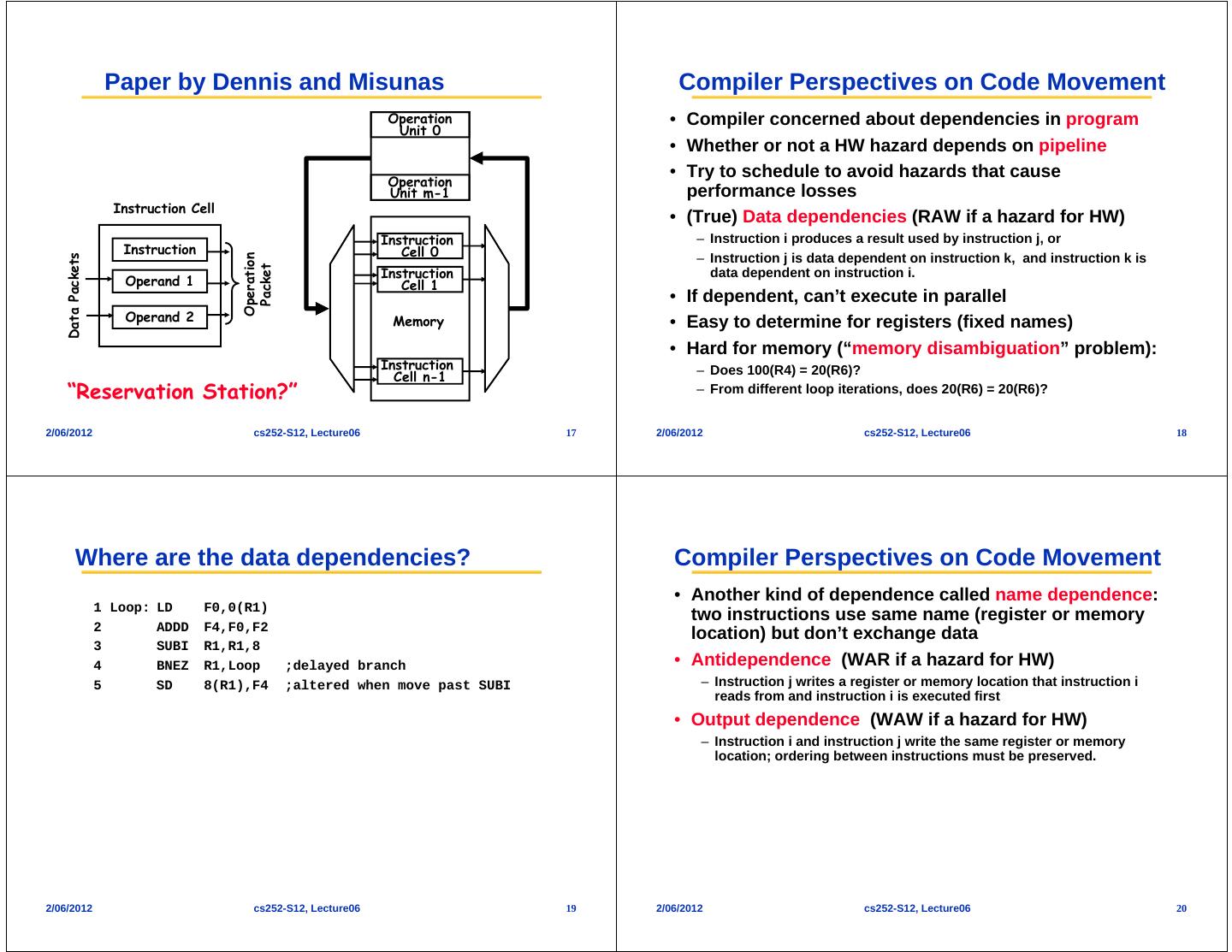

3 . Getting CPI < 1: Issuing Multiple Instructions/Cycle Loop Unrolling in Superscalar • Superscalar DLX: 2 instructions, 1 FP & 1 anything else Integer instruction FP instruction Clock cycle – Fetch 64-bits/clock cycle; Int on left, FP on right Loop: LD F0,0(R1) 1 – Can only issue 2nd instruction if 1st instruction issues LD F6,-8(R1) 2 – More ports for FP registers to do FP load & FP op in a pair LD F10,-16(R1) ADDD F4,F0,F2 3 Type Pipe Stages LD F14,-24(R1) ADDD F8,F6,F2 4 Int. instruction IF ID EX MEM WB LD F18,-32(R1) ADDD F12,F10,F2 5 FP instruction IF ID EX MEM WB SD 0(R1),F4 ADDD F16,F14,F2 6 Int. instruction IF ID EX MEM WB SD -8(R1),F8 ADDD F20,F18,F2 7 FP instruction IF ID EX MEM WB SD -16(R1),F12 8 Int. instruction IF ID EX MEM WB SD -24(R1),F16 9 FP instruction IF ID EX MEM WB SUBI R1,R1,#40 10 • 1 cycle load delay expands to 3 instructions in SS BNEZ R1,LOOP 11 – instruction in right half can’t use it, nor instructions in next slot SD -32(R1),F20 12 • Unrolled 5 times to avoid delays (+1 due to SS) • 12 clocks, or 2.4 clocks per iteration (1.5X) 2/06/2012 cs252-S12, Lecture06 9 2/06/2012 cs252-S12, Lecture06 10 VLIW: Very Large Instruction Word Loop Unrolling in VLIW • Each “instruction” has explicit coding for multiple Memory Memory FP FP Int. op/ Clock reference 1 reference 2 operation 1 op. 2 branch operations LD F0,0(R1) LD F6,-8(R1) 1 – In EPIC, grouping called a “packet” LD F10,-16(R1) LD F14,-24(R1) 2 – In Transmeta, grouping called a “molecule” (with “atoms” as ops) LD F18,-32(R1) LD F22,-40(R1) ADDD F4,F0,F2 ADDD F8,F6,F2 3 • Tradeoff instruction space for simple decoding LD F26,-48(R1) ADDD F12,F10,F2 ADDD F16,F14,F2 4 ADDD F20,F18,F2 ADDD F24,F22,F2 5 – The long instruction word has room for many operations SD 0(R1),F4 SD -8(R1),F8 ADDD F28,F26,F2 6 – By definition, all the operations the compiler puts in the long SD -16(R1),F12 SD -24(R1),F16 7 instruction word are independent => execute in parallel SD -32(R1),F20 SD -40(R1),F24 SUBI R1,R1,#48 8 – E.g., 2 integer operations, 2 FP ops, 2 Memory refs, 1 branch SD -0(R1),F28 BNEZ R1,LOOP 9 » 16 to 24 bits per field => 7*16 or 112 bits to 7*24 or 168 bits wide Unrolled 7 times to avoid delays – Need compiling technique that schedules across several branches 7 results in 9 clocks, or 1.3 clocks per iteration (1.8X) Average: 2.5 ops per clock, 50% efficiency Note: Need more registers in VLIW (15 vs. 6 in SS) 2/06/2012 cs252-S12, Lecture06 11 2/06/2012 cs252-S12, Lecture06 12

4 . Another possibility: Software Pipelining Software Pipelining Example Before: Unrolled 3 times After: Software Pipelined • Observation: if iterations from loops are independent, 1 LD F0,0(R1) 1 SD 0(R1),F4 ; Stores M[i] then can get more ILP by taking instructions from 2 ADDD F4,F0,F2 2 ADDD F4,F0,F2 ; Adds to M[i-1] different iterations 3 SD 0(R1),F4 3 LD F0,-16(R1); Loads M[i-2] 4 LD F6,-8(R1) 4 SUBI R1,R1,#8 • Software pipelining: reorganizes loops so that each 5 ADDD F8,F6,F2 5 BNEZ R1,LOOP iteration is made from instructions chosen from different 6 SD -8(R1),F8 iterations of the original loop ( Tomasulo in SW) 7 LD F10,-16(R1) SW Pipeline overlapped ops 8 ADDD F12,F10,F2 Iteration 9 SD -16(R1),F12 0 Iteration 1 Iteration 10 SUBI R1,R1,#24 2 Iteration 11 BNEZ R1,LOOP Time 3 Iteration Loop Unrolled • Symbolic Loop Unrolling 4 Software- – Maximize result-use distance pipelined iteration – Less code space than unrolling – Fill & drain pipe only once per loop Time vs. once per each unrolled iteration in loop unrolling 5 cycles per iteration 2/06/2012 cs252-S12, Lecture06 13 2/06/2012 cs252-S12, Lecture06 14 Software Pipelining with Loop Unrolling in VLIW Data-Flow Architectures Memory Memory FP FP Int. op/ Clock • Basic Idea: Hardware respresents direct encoding of compiler reference 1 reference 2 operation 1 op. 2 branch dataflow graphs: LD F0,-48(R1) ST 0(R1),F4 ADDD F4,F0,F2 1 Input: a,b LD F6,-56(R1) ST -8(R1),F8 ADDD F8,F6,F2 SUBI R1,R1,#24 2 LD F10,-40(R1) ST 8(R1),F12 ADDD F12,F10,F2 BNEZ R1,LOOP 3 y:= (a+b)/x A B x:= (a*(a+b))+b output: y,x • Software pipelined across 9 iterations of original loop – In each iteration of above loop, we: • Data flows along arcs in “Tokens”. + » Store to m,m-8,m-16 (iterations I-3,I-2,I-1) » Compute for m-24,m-32,m-40 (iterations I,I+1,I+2) • When two tokens arrive at compute box, box “fires” and * » Load from m-48,m-56,m-64 (iterations I+3,I+4,I+5) produces new token. / • 9 results in 9 cycles, or 1 clock per iteration • Split operations produce copies • Average: 3.3 ops per clock, 66% efficiency of tokens + Note: Need less registers for software pipelining X(0) (only using 7 registers here, was using 15) Y X 2/06/2012 cs252-S12, Lecture06 15 2/06/2012 cs252-S12, Lecture06 16

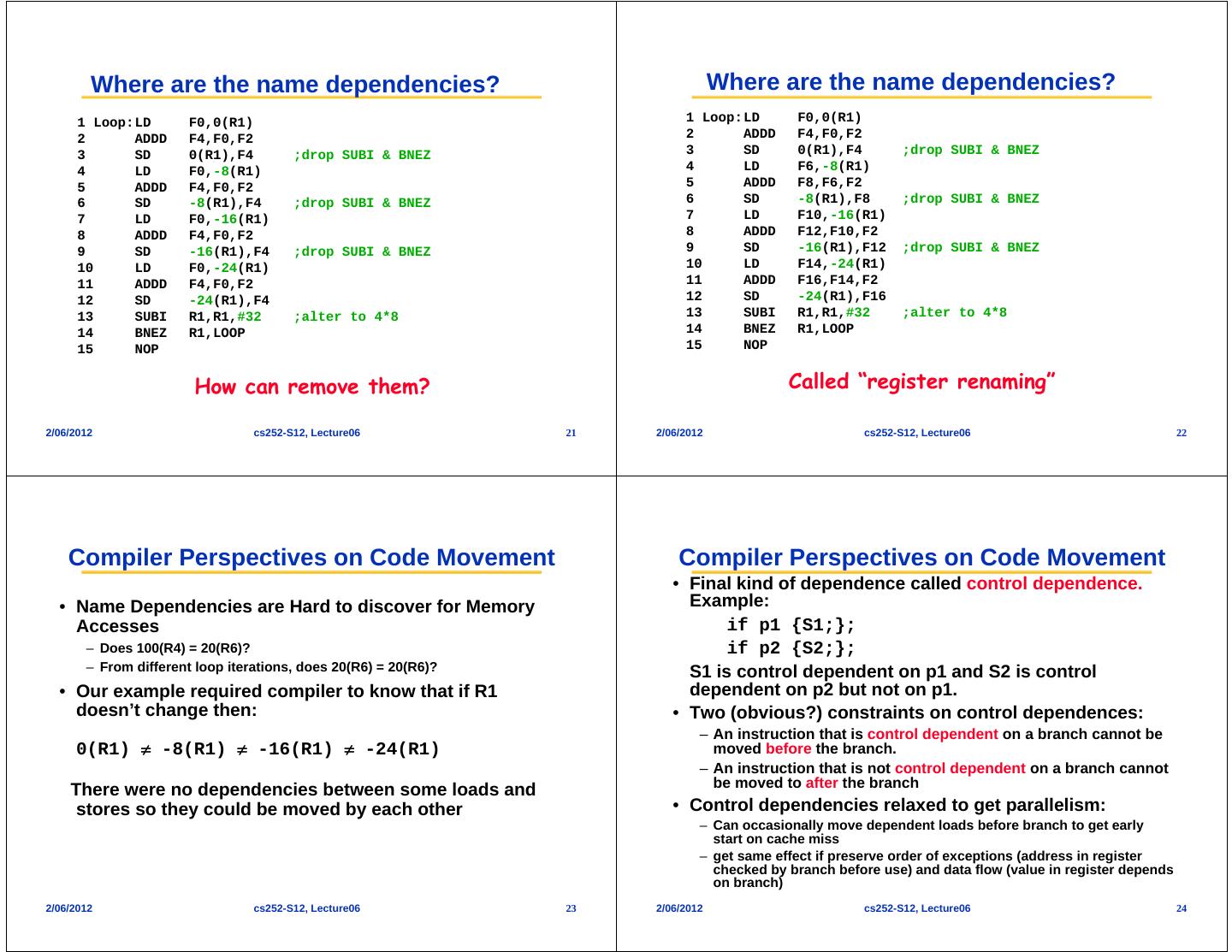

5 . Paper by Dennis and Misunas Compiler Perspectives on Code Movement Operation • Compiler concerned about dependencies in program Unit 0 • Whether or not a HW hazard depends on pipeline Operation • Try to schedule to avoid hazards that cause Unit m-1 performance losses Instruction Cell • (True) Data dependencies (RAW if a hazard for HW) Instruction – Instruction i produces a result used by instruction j, or Instruction Cell 0 Data Packets Operation – Instruction j is data dependent on instruction k, and instruction k is Packet Instruction data dependent on instruction i. Operand 1 Cell 1 • If dependent, can’t execute in parallel Operand 2 Memory • Easy to determine for registers (fixed names) • Hard for memory (“memory disambiguation” problem): Instruction – Does 100(R4) = 20(R6)? Cell n-1 “Reservation Station?” – From different loop iterations, does 20(R6) = 20(R6)? 2/06/2012 cs252-S12, Lecture06 17 2/06/2012 cs252-S12, Lecture06 18 Where are the data dependencies? Compiler Perspectives on Code Movement • Another kind of dependence called name dependence: 1 Loop: LD F0,0(R1) two instructions use same name (register or memory 2 ADDD F4,F0,F2 location) but don’t exchange data 3 SUBI R1,R1,8 4 BNEZ R1,Loop ;delayed branch • Antidependence (WAR if a hazard for HW) 5 SD 8(R1),F4 ;altered when move past SUBI – Instruction j writes a register or memory location that instruction i reads from and instruction i is executed first • Output dependence (WAW if a hazard for HW) – Instruction i and instruction j write the same register or memory location; ordering between instructions must be preserved. 2/06/2012 cs252-S12, Lecture06 19 2/06/2012 cs252-S12, Lecture06 20

6 . Where are the name dependencies? Where are the name dependencies? 1 Loop:LD F0,0(R1) 1 Loop:LD F0,0(R1) 2 ADDD F4,F0,F2 2 ADDD F4,F0,F2 3 SD 0(R1),F4 ;drop SUBI & BNEZ 3 SD 0(R1),F4 ;drop SUBI & BNEZ 4 LD F0,-8(R1) 4 LD F6,-8(R1) 5 ADDD F4,F0,F2 5 ADDD F8,F6,F2 6 SD -8(R1),F4 ;drop SUBI & BNEZ 6 SD -8(R1),F8 ;drop SUBI & BNEZ 7 LD F0,-16(R1) 7 LD F10,-16(R1) 8 ADDD F4,F0,F2 8 ADDD F12,F10,F2 9 SD -16(R1),F4 ;drop SUBI & BNEZ 9 SD -16(R1),F12 ;drop SUBI & BNEZ 10 LD F0,-24(R1) 10 LD F14,-24(R1) 11 ADDD F4,F0,F2 11 ADDD F16,F14,F2 12 SD -24(R1),F4 12 SD -24(R1),F16 13 SUBI R1,R1,#32 ;alter to 4*8 13 SUBI R1,R1,#32 ;alter to 4*8 14 BNEZ R1,LOOP 14 BNEZ R1,LOOP 15 NOP 15 NOP How can remove them? Called “register renaming” 2/06/2012 cs252-S12, Lecture06 21 2/06/2012 cs252-S12, Lecture06 22 Compiler Perspectives on Code Movement Compiler Perspectives on Code Movement • Final kind of dependence called control dependence. • Name Dependencies are Hard to discover for Memory Example: Accesses if p1 {S1;}; – Does 100(R4) = 20(R6)? if p2 {S2;}; – From different loop iterations, does 20(R6) = 20(R6)? S1 is control dependent on p1 and S2 is control • Our example required compiler to know that if R1 dependent on p2 but not on p1. doesn’t change then: • Two (obvious?) constraints on control dependences: – An instruction that is control dependent on a branch cannot be 0(R1) -8(R1) -16(R1) -24(R1) moved before the branch. – An instruction that is not control dependent on a branch cannot be moved to after the branch There were no dependencies between some loads and stores so they could be moved by each other • Control dependencies relaxed to get parallelism: – Can occasionally move dependent loads before branch to get early start on cache miss – get same effect if preserve order of exceptions (address in register checked by branch before use) and data flow (value in register depends on branch) 2/06/2012 cs252-S12, Lecture06 23 2/06/2012 cs252-S12, Lecture06 24

7 . Trace Scheduling in VLIW When Safe to Unroll Loop? • Parallelism across IF branches vs. LOOP branches • Example: Where are data dependencies? • Two steps: (A,B,C distinct & nonoverlapping) – Trace Selection for (i=0; i<100; i=i+1) { A[i+1] = A[i] + C[i]; /* S1 */ » Find likely sequence of basic blocks (trace) of (statically predicted or profile predicted) B[i+1] = B[i] + A[i+1]; /* S2 */ long sequence of straight-line code } – Trace Compaction 1. S2 uses the value, A[i+1], computed by S1 in the same iteration. » Squeeze trace into few VLIW instructions 2. S1 uses a value computed by S1 in an earlier iteration, since » Need bookkeeping code in case prediction is wrong iteration i computes A[i+1] which is read in iteration i+1. The same is • This is a form of compiler-generated speculation true of S2 for B[i] and B[i+1]. – Compiler must generate “fixup” code to handle cases in which trace This is a “loop-carried dependence”: between iterations is not the taken branch – Needs extra registers: undoes bad guess by discarding • For our prior example, each iteration was distinct • Subtle compiler bugs mean wrong answer – In this case, iterations can’t be executed in parallel, Right???? vs. poorer performance; no hardware interlocks 2/06/2012 cs252-S12, Lecture06 25 2/06/2012 cs252-S12, Lecture06 26 Does a loop-carried dependence mean there is no parallelism??? Can we use HW to get CPI closer to 1? • Consider: • Why in HW at run time? for (i=0; i< 8; i=i+1) { – Works when can’t know real dependence at compile time A = A + C[i]; /* S1 */ } – Compiler simpler – Code for one machine runs well on another Could compute: “Cycle 1”: temp0 = C[0] + C[1]; • Key idea: Allow instructions behind stall to proceed temp1 = C[2] + C[3]; temp2 = C[4] + C[5]; DIVD F0,F2,F4 temp3 = C[6] + C[7]; ADDD F10,F0,F8 “Cycle 2”: temp4 = temp0 + temp1; SUBD F12,F8,F14 temp5 = temp2 + temp3; “Cycle 3”: A = temp4 + temp5; • Out-of-order execution => out-of-order completion. • Relies on associative nature of “+”. 2/06/2012 cs252-S12, Lecture06 27 2/06/2012 cs252-S12, Lecture06 28

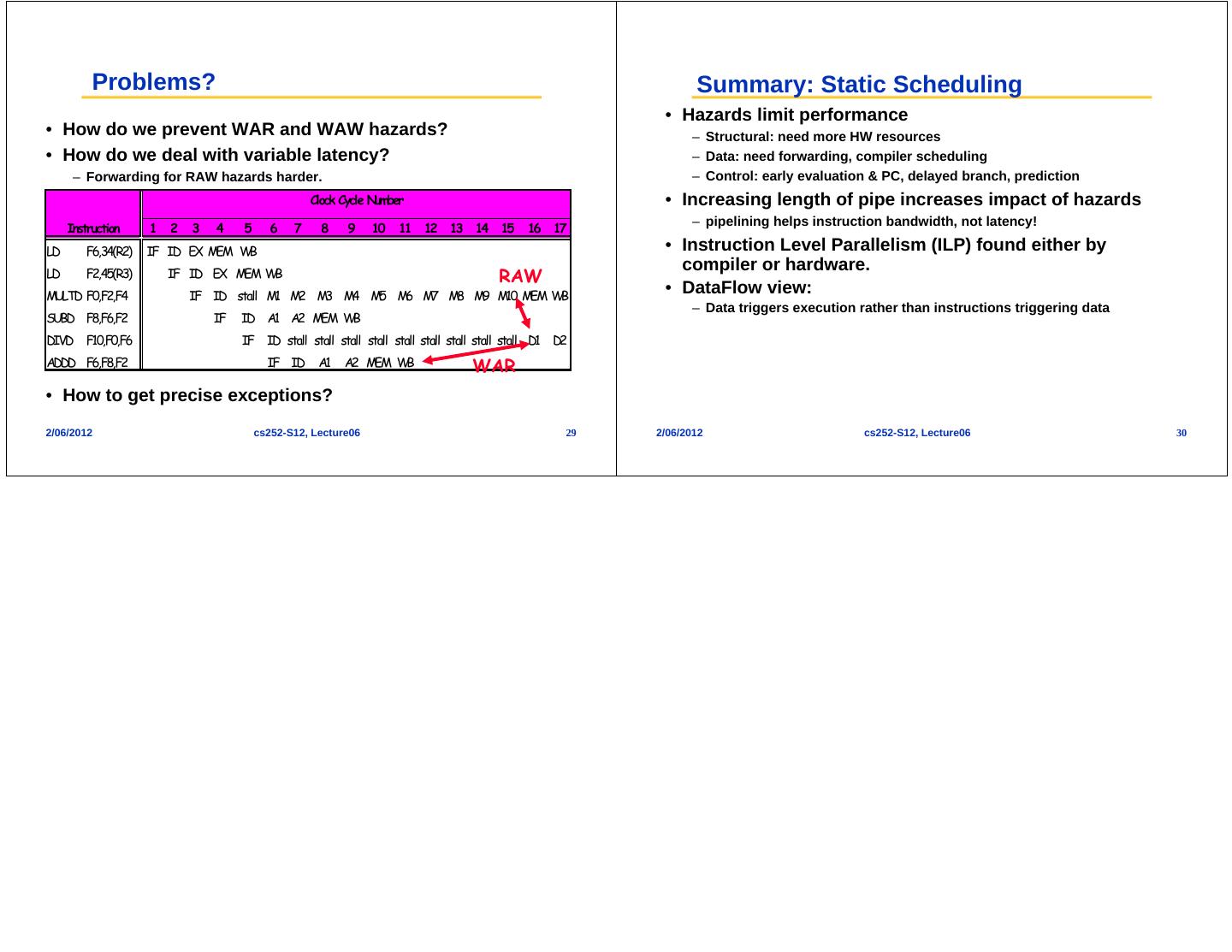

8 . Problems? Summary: Static Scheduling • Hazards limit performance • How do we prevent WAR and WAW hazards? – Structural: need more HW resources • How do we deal with variable latency? – Data: need forwarding, compiler scheduling – Forwarding for RAW hazards harder. – Control: early evaluation & PC, delayed branch, prediction Clock Cycle Number • Increasing length of pipe increases impact of hazards – pipelining helps instruction bandwidth, not latency! Instruction 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 LD F6,34(R2) IF ID EX MEM WB • Instruction Level Parallelism (ILP) found either by compiler or hardware. LD F2,45(R3) IF ID EX MEM WB RAW MULTD F0,F2,F4 IF ID stall M1 M2 M3 M4 M5 M6 M7 M8 M9 M10 MEM WB • DataFlow view: – Data triggers execution rather than instructions triggering data SUBD F8,F6,F2 IF ID A1 A2 MEM WB DIVD F10,F0,F6 IF ID stall stall stall stall stall stall stall stall stall D1 D2 ADDD F6,F8,F2 IF ID A1 A2 MEM WB WAR • How to get precise exceptions? 2/06/2012 cs252-S12, Lecture06 29 2/06/2012 cs252-S12, Lecture06 30