- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

18-Distributed Consensus #1

展开查看详情

1 . Today’s Papers EECS 262a • Paxos Made Live - An Engineering Perspective, Tushar Chandra, Advanced Topics in Computer Systems Robert Griesemer, and Joshua Redstone. Appears in Proceedings of the Symposium on Principles of Distributed Computing (PODC), 2007 Lecture 18 • In Search of an Understandable Consensus Algorithm, Diego Ongaro and John Ousterhout, USENIX ATC’14 Paxos/RAFT October 23rd, 2018 • Thoughts? John Kubiatowicz (Lots of borrowed slides see attributions inline) Electrical Engineering and Computer Sciences University of California, Berkeley http://www.eecs.berkeley.edu/~kubitron/cs262 10/23/2018 cs262a-F18 Lecture-18 2 Google Chubby Distributed Consensus • A coarse-grained lock and small file storage service All client traffic – Other (Google) distributed systems can use this to synchronize access to shared resources One Chubby “Cell” • Intended for use by “loosely-coupled distributed systems” Master replica – GFS: Elect a master replica replica – Bigtable: master election, client discovery, table service locking – Well-known location for bootstrapping larger systems – Partitioning workloads replica replica • Goals: – High availability – Reliability • Chubby cell is usually 5 replicas – 3 must be alive for cell to be viable • Anti-goals: • How do replicas in Chubby agree on their own master, official – High performance, Throughput, Storage capacity lock values? – Distributed commit algorithm 10/23/2018 cs262a-F18 Lecture-18 3 10/23/2018 cs262a-F18 Lecture-18 4

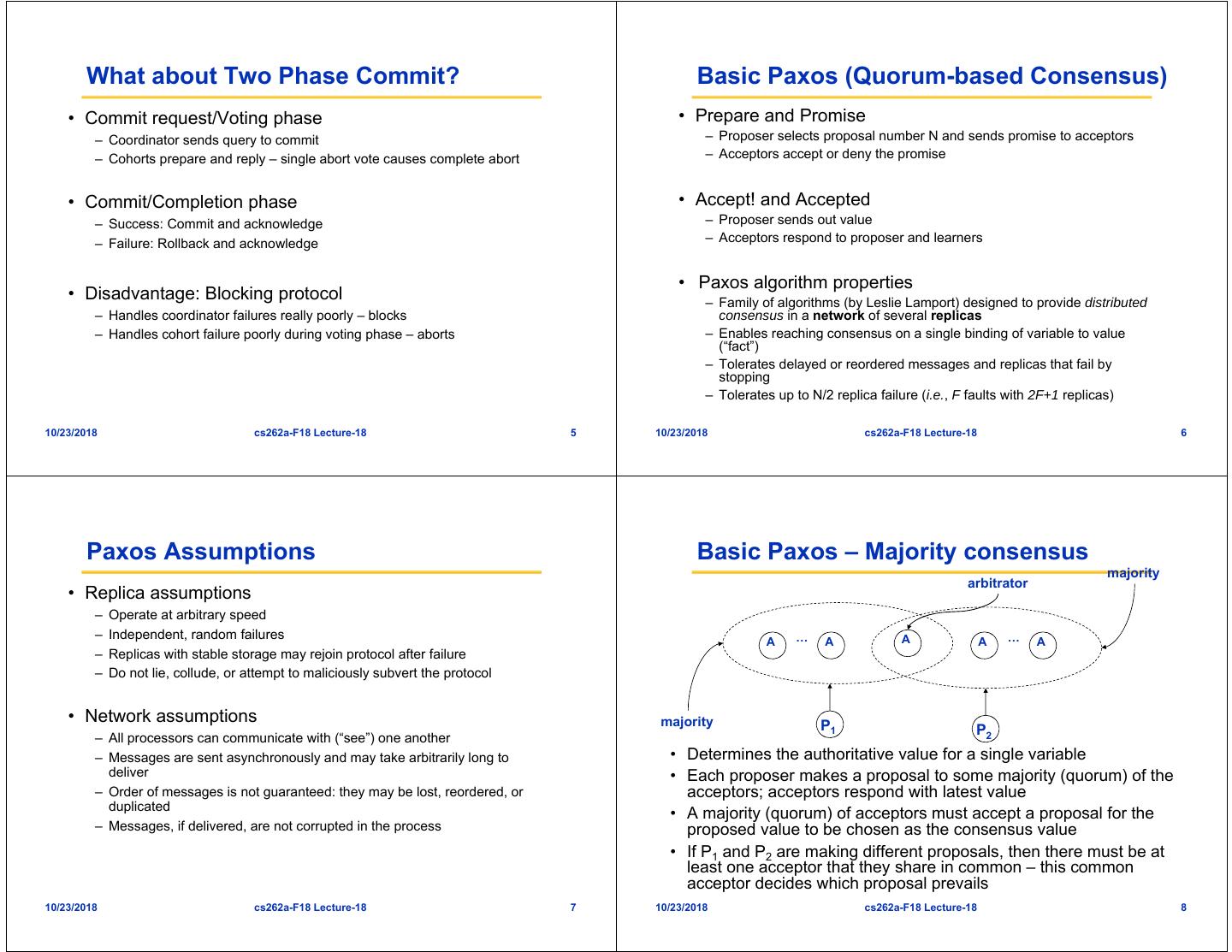

2 . What about Two Phase Commit? Basic Paxos (Quorum-based Consensus) • Commit request/Voting phase • Prepare and Promise – Coordinator sends query to commit – Proposer selects proposal number N and sends promise to acceptors – Cohorts prepare and reply – single abort vote causes complete abort – Acceptors accept or deny the promise • Commit/Completion phase • Accept! and Accepted – Success: Commit and acknowledge – Proposer sends out value – Failure: Rollback and acknowledge – Acceptors respond to proposer and learners • Paxos algorithm properties • Disadvantage: Blocking protocol – Family of algorithms (by Leslie Lamport) designed to provide distributed – Handles coordinator failures really poorly – blocks consensus in a network of several replicas – Handles cohort failure poorly during voting phase – aborts – Enables reaching consensus on a single binding of variable to value (“fact”) – Tolerates delayed or reordered messages and replicas that fail by stopping – Tolerates up to N/2 replica failure (i.e., F faults with 2F+1 replicas) 10/23/2018 cs262a-F18 Lecture-18 5 10/23/2018 cs262a-F18 Lecture-18 6 Paxos Assumptions Basic Paxos – Majority consensus majority arbitrator • Replica assumptions – Operate at arbitrary speed – Independent, random failures … A … A A A A – Replicas with stable storage may rejoin protocol after failure – Do not lie, collude, or attempt to maliciously subvert the protocol • Network assumptions majority P1 P2 – All processors can communicate with (“see”) one another – Messages are sent asynchronously and may take arbitrarily long to • Determines the authoritative value for a single variable deliver • Each proposer makes a proposal to some majority (quorum) of the – Order of messages is not guaranteed: they may be lost, reordered, or acceptors; acceptors respond with latest value duplicated • A majority (quorum) of acceptors must accept a proposal for the – Messages, if delivered, are not corrupted in the process proposed value to be chosen as the consensus value • If P1 and P2 are making different proposals, then there must be at least one acceptor that they share in common – this common acceptor decides which proposal prevails 10/23/2018 cs262a-F18 Lecture-18 7 10/23/2018 cs262a-F18 Lecture-18 8

3 . Distributed consensus problem • Group of processes must agree on a single value • Value must be proposed • After value is agreed upon, it can be learned/acted on Paxos Based on many slides from Indranil Gupta’s presentation: (https://courses.engr.illinois.edu/cs525/sp2013/L9_paxos.sp13.ppt), and Gene Pang’s presentation (www.cs.berkeley.edu/~istoica/classes/cs294/11/notes/07-gene-paxos.pptx) 10/23/2018 cs262a-F18 Lecture-18 9 10/23/2018 cs262a-F18 Lecture-18 10 Two types of failures Paxos • Non-Byzantine • Byzantine • Failed nodes stop • Failed nodes will keep communicating with other sending messages • L. Lamport, The Part-Time Parliament, September 1989 nodes – Incorrect and potentially – "Clean" failure misleading – Failed node becomes a • Aegean island of Paxos – Fail-stop behavior traitor • A part-time parliament Today’s Assumptions: – Goal: determine the sequence of decrees passed (consensus!) – Parliamentarians come and go asynchronous, non-byzantine model – Somehow the law gets passed anyway and consistently 10/23/2018 cs262a-F18 Lecture-18 11 10/23/2018 cs262a-F18 Lecture-18 12

4 . Political Analogy Does Paxos Solve Consensus? • Paxos has rounds: each round has a unique ballot ID • Provides safety and liveness • Safety: • Rounds are asynchronous – Only a value which has been proposed can be chosen – Time synchronization not required – Only a single value can be chosen – If you are in round j and hear a message from round j+1, abort everything and go – A process never learns/acts upon a value unless it was actually chosen to round j+1 • Eventual liveness: If things go “well” at some point in the • Each round future (e.g., no longer losses or failures), consensus is – Phase 1: A leader is elected (election) eventually reached. However, this is not guaranteed. – Phase 2: Leader proposes a value (bill), processes acks – Phase 3: Leaders multicast final value (law) 10/23/2018 cs262a-F18 Lecture-18 13 10/23/2018 cs262a-F18 Lecture-18 14 So Simple, So Obvious Simple Pseudocode • “In fact, it is among the simplest and most obvious of distributed algorithms.” - Leslie Lamport 10/23/2018 cs262a-F18 Lecture-18 15 10/23/2018 cs262a-F18 Lecture-18 16

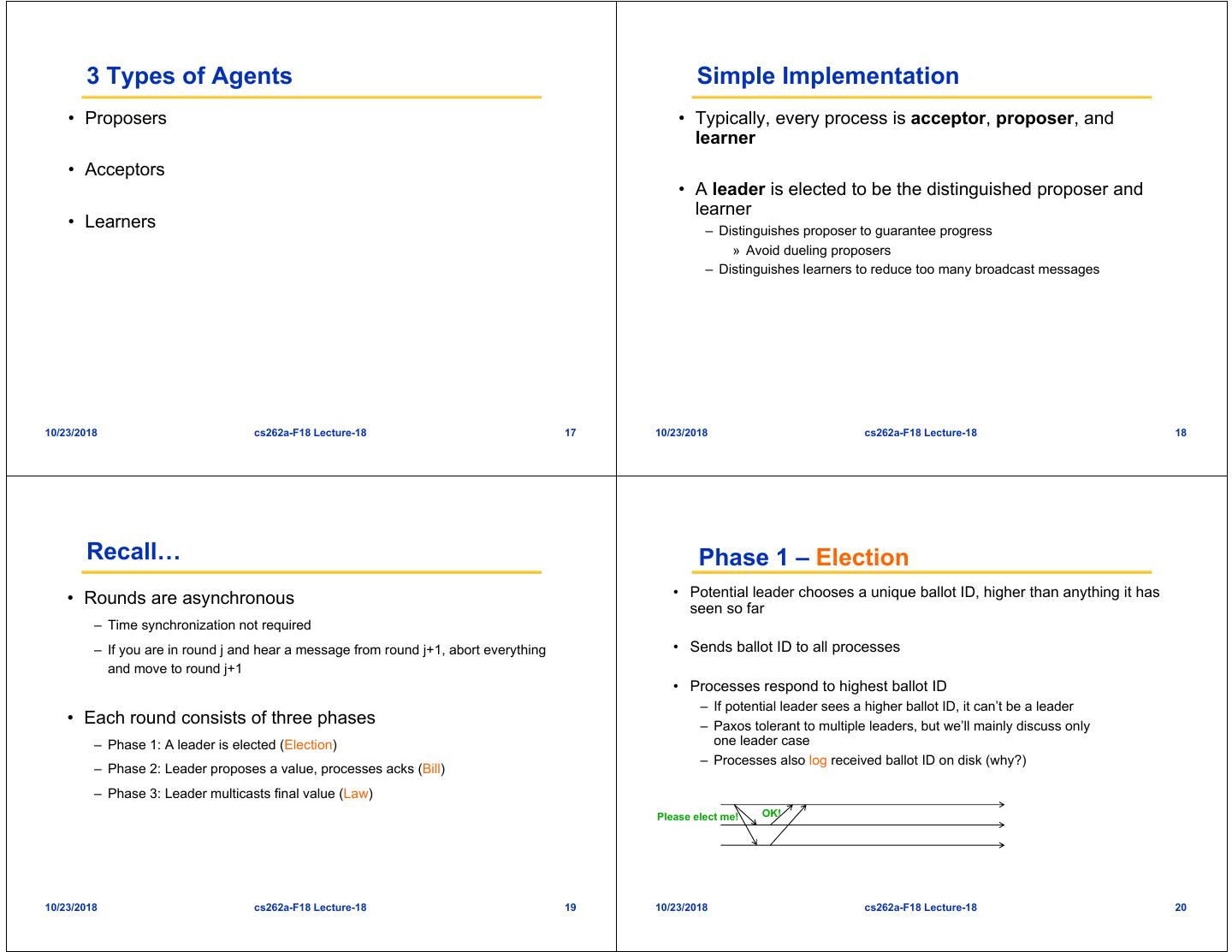

5 . 3 Types of Agents Simple Implementation • Proposers • Typically, every process is acceptor, proposer, and learner • Acceptors • A leader is elected to be the distinguished proposer and learner • Learners – Distinguishes proposer to guarantee progress » Avoid dueling proposers – Distinguishes learners to reduce too many broadcast messages 10/23/2018 cs262a-F18 Lecture-18 17 10/23/2018 cs262a-F18 Lecture-18 18 Recall… Phase 1 – Election • Rounds are asynchronous • Potential leader chooses a unique ballot ID, higher than anything it has seen so far – Time synchronization not required – If you are in round j and hear a message from round j+1, abort everything • Sends ballot ID to all processes and move to round j+1 • Processes respond to highest ballot ID – If potential leader sees a higher ballot ID, it can’t be a leader • Each round consists of three phases – Paxos tolerant to multiple leaders, but we’ll mainly discuss only – Phase 1: A leader is elected (Election) one leader case – Processes also log received ballot ID on disk (why?) – Phase 2: Leader proposes a value, processes acks (Bill) – Phase 3: Leader multicasts final value (Law) Please elect me! OK! 10/23/2018 cs262a-F18 Lecture-18 19 10/23/2018 cs262a-F18 Lecture-18 20

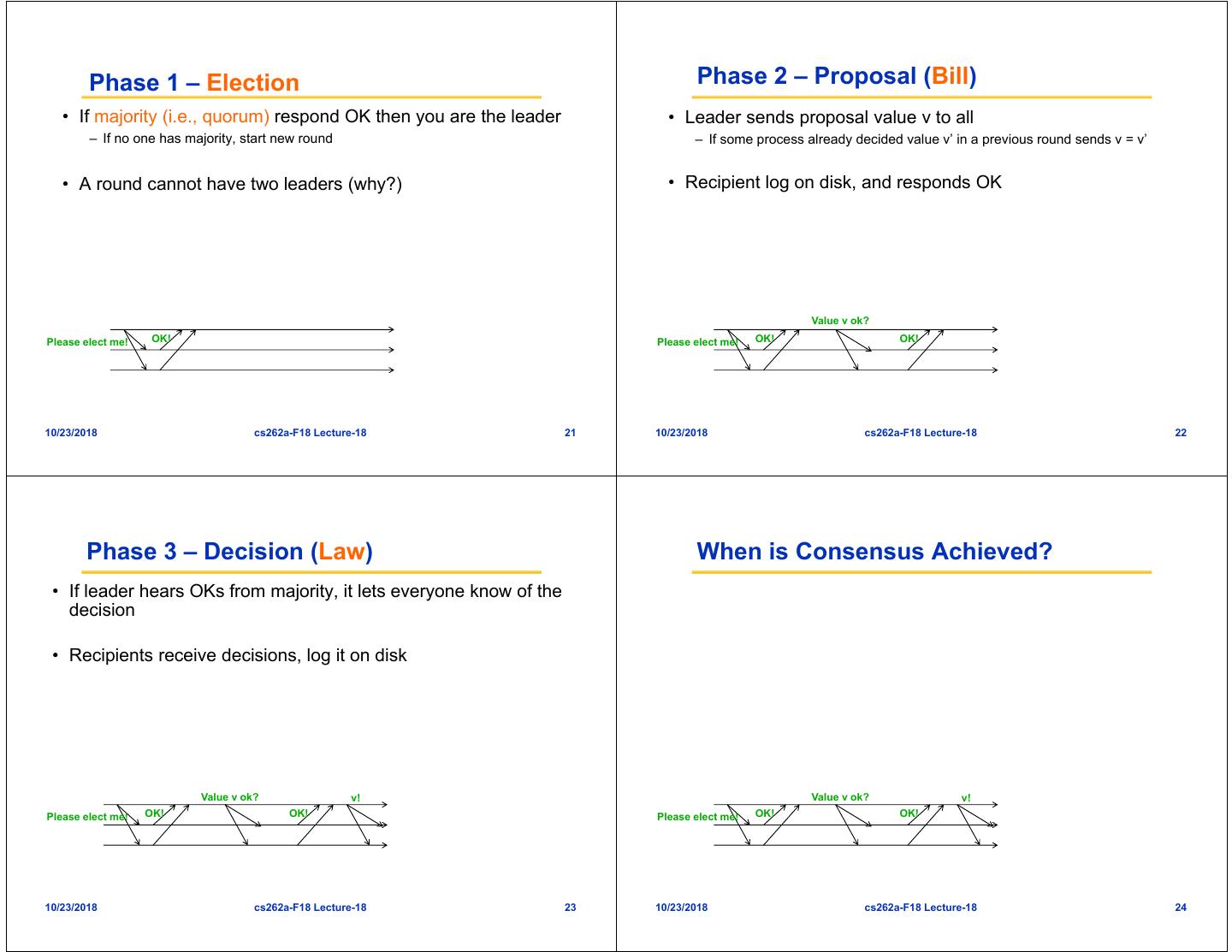

6 . Phase 1 – Election Phase 2 – Proposal (Bill) • If majority (i.e., quorum) respond OK then you are the leader • Leader sends proposal value v to all – If no one has majority, start new round – If some process already decided value v’ in a previous round sends v = v’ • A round cannot have two leaders (why?) • Recipient log on disk, and responds OK Value v ok? Please elect me! OK! Please elect me! OK! OK! 10/23/2018 cs262a-F18 Lecture-18 21 10/23/2018 cs262a-F18 Lecture-18 22 Phase 3 – Decision (Law) When is Consensus Achieved? • If leader hears OKs from majority, it lets everyone know of the decision • Recipients receive decisions, log it on disk Value v ok? v! Value v ok? v! Please elect me! OK! OK! Please elect me! OK! OK! 10/23/2018 cs262a-F18 Lecture-18 23 10/23/2018 cs262a-F18 Lecture-18 24

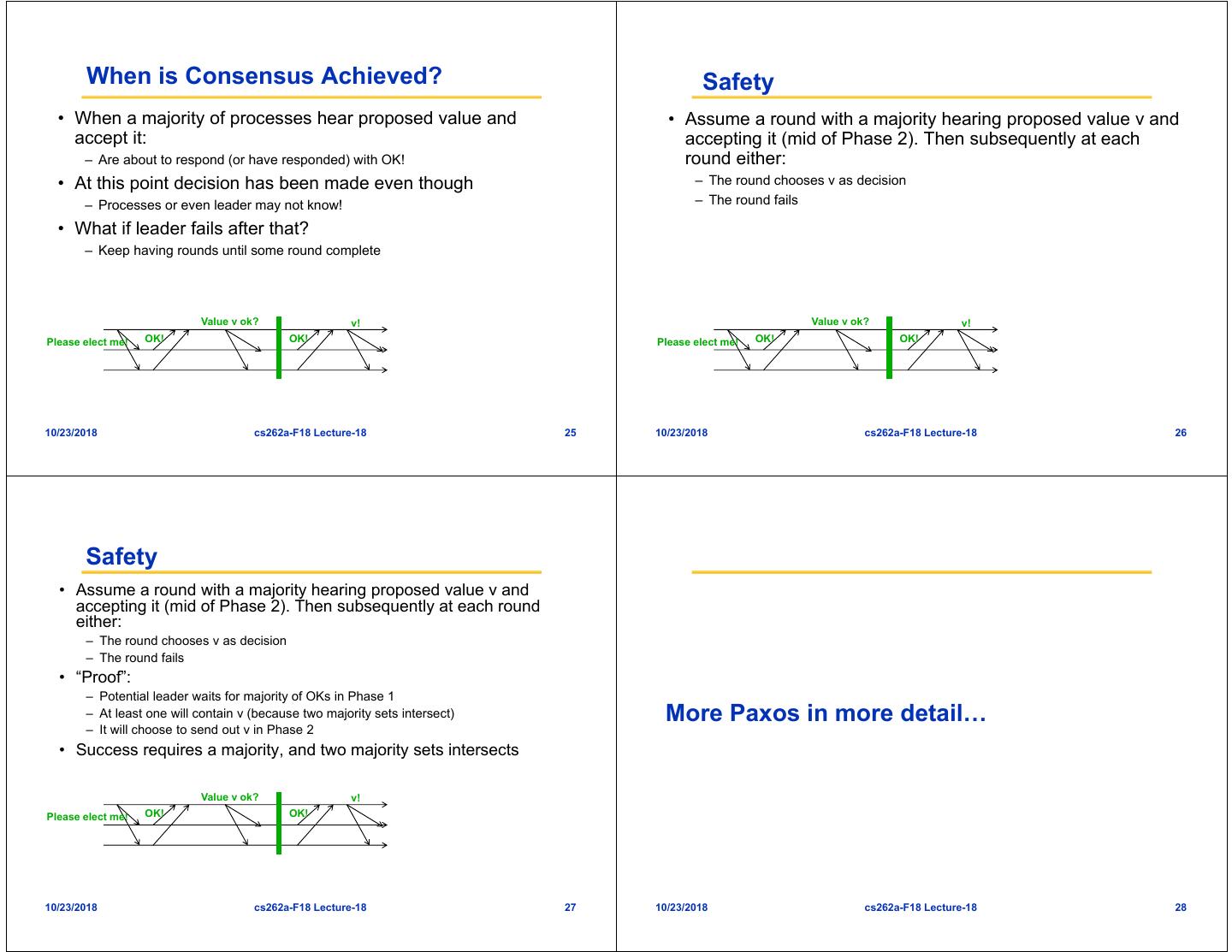

7 . When is Consensus Achieved? Safety • When a majority of processes hear proposed value and • Assume a round with a majority hearing proposed value v and accept it: accepting it (mid of Phase 2). Then subsequently at each – Are about to respond (or have responded) with OK! round either: • At this point decision has been made even though – The round chooses v as decision – Processes or even leader may not know! – The round fails • What if leader fails after that? – Keep having rounds until some round complete Value v ok? v! Value v ok? v! Please elect me! OK! OK! Please elect me! OK! OK! 10/23/2018 cs262a-F18 Lecture-18 25 10/23/2018 cs262a-F18 Lecture-18 26 Safety • Assume a round with a majority hearing proposed value v and accepting it (mid of Phase 2). Then subsequently at each round either: – The round chooses v as decision – The round fails • “Proof”: – Potential leader waits for majority of OKs in Phase 1 – At least one will contain v (because two majority sets intersect) More Paxos in more detail… – It will choose to send out v in Phase 2 • Success requires a majority, and two majority sets intersects Value v ok? v! Please elect me! OK! OK! 10/23/2018 cs262a-F18 Lecture-18 27 10/23/2018 cs262a-F18 Lecture-18 28

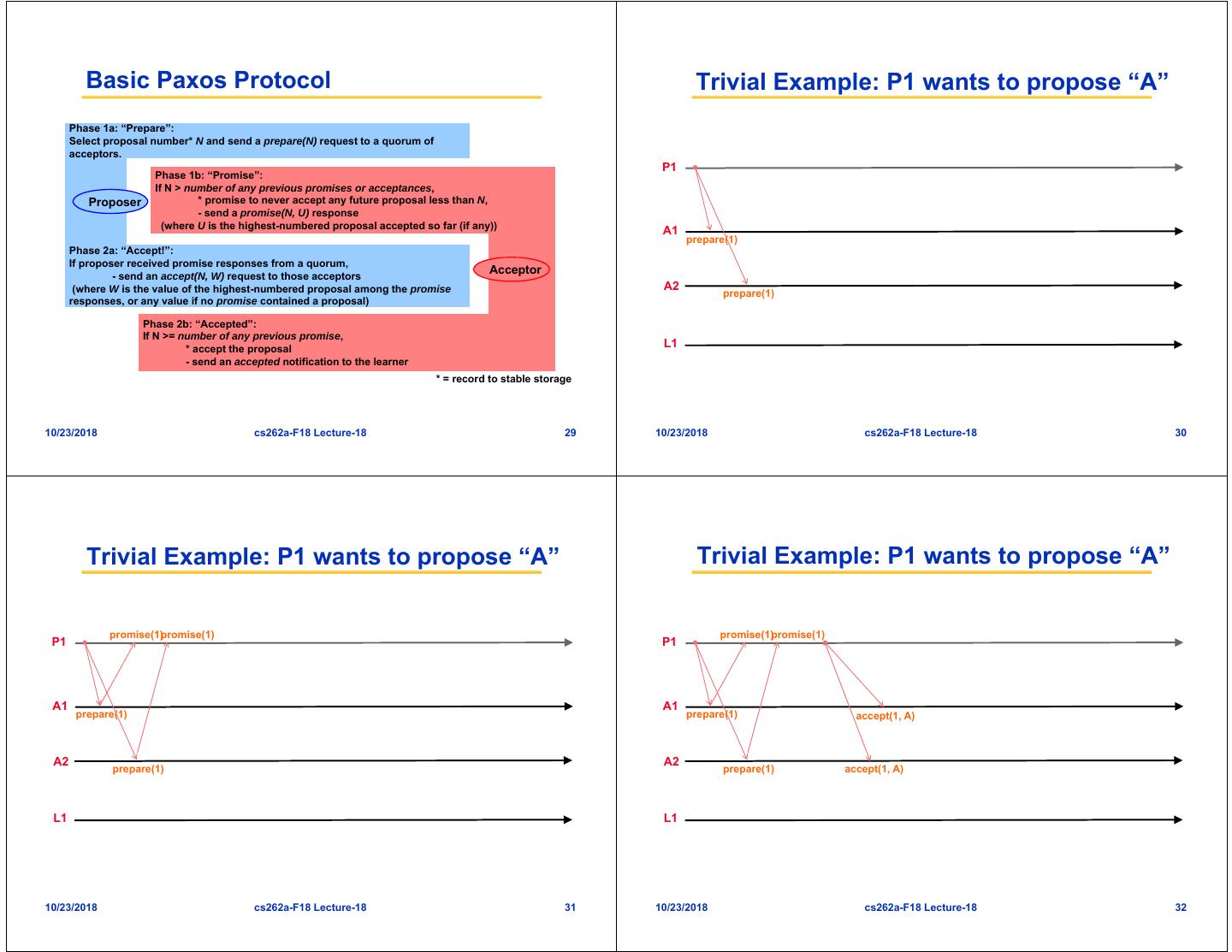

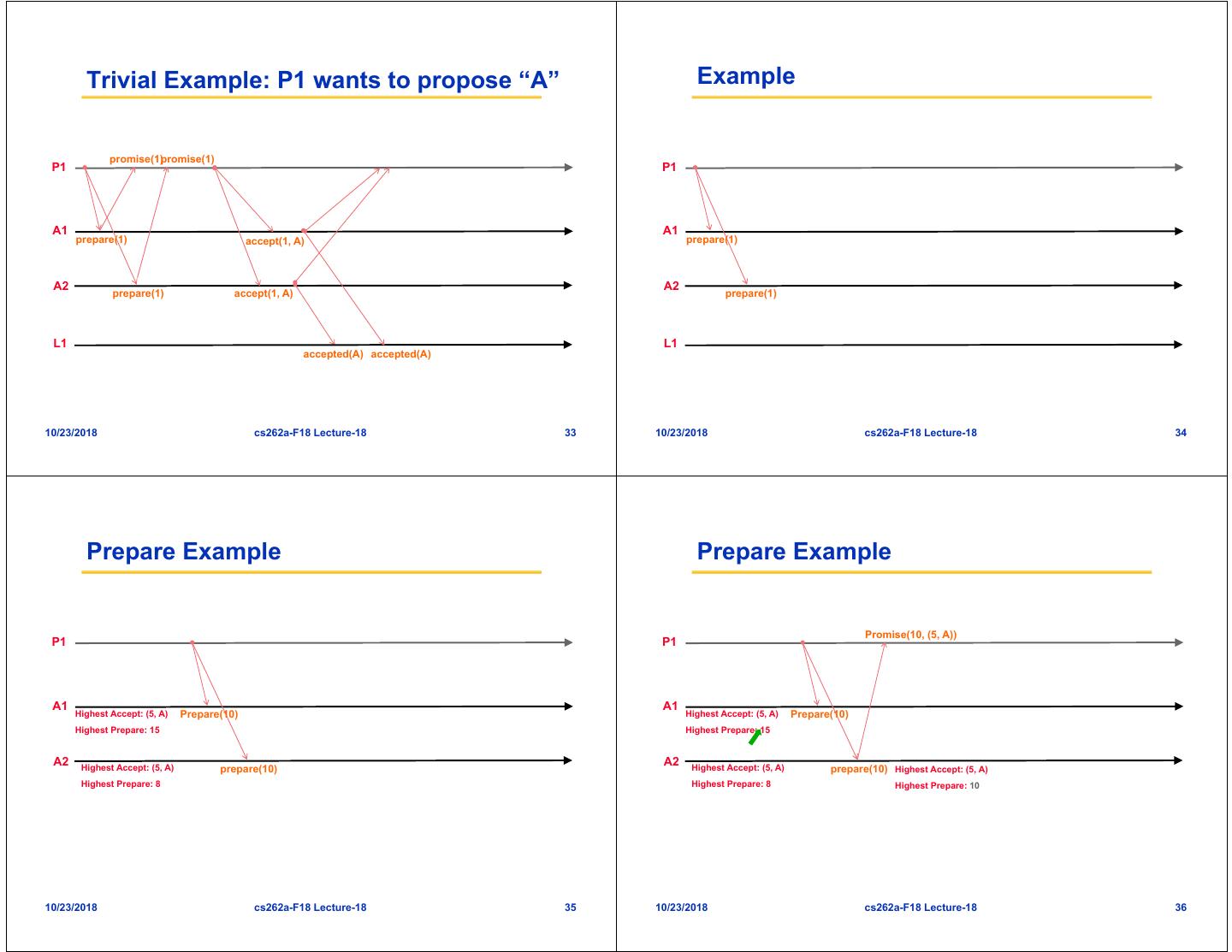

8 . Basic Paxos Protocol Trivial Example: P1 wants to propose “A” Phase 1a: “Prepare”: Select proposal number* N and send a prepare(N) request to a quorum of acceptors. P1 Phase 1b: “Promise”: If N > number of any previous promises or acceptances, Proposer * promise to never accept any future proposal less than N, - send a promise(N, U) response (where U is the highest-numbered proposal accepted so far (if any)) A1 prepare(1) Phase 2a: “Accept!”: If proposer received promise responses from a quorum, - send an accept(N, W) request to those acceptors Acceptor (where W is the value of the highest-numbered proposal among the promise A2 prepare(1) responses, or any value if no promise contained a proposal) Phase 2b: “Accepted”: If N >= number of any previous promise, * accept the proposal L1 - send an accepted notification to the learner * = record to stable storage 10/23/2018 cs262a-F18 Lecture-18 29 10/23/2018 cs262a-F18 Lecture-18 30 Trivial Example: P1 wants to propose “A” Trivial Example: P1 wants to propose “A” promise(1)promise(1) promise(1)promise(1) P1 P1 A1 A1 prepare(1) prepare(1) accept(1, A) A2 A2 prepare(1) prepare(1) accept(1, A) L1 L1 10/23/2018 cs262a-F18 Lecture-18 31 10/23/2018 cs262a-F18 Lecture-18 32

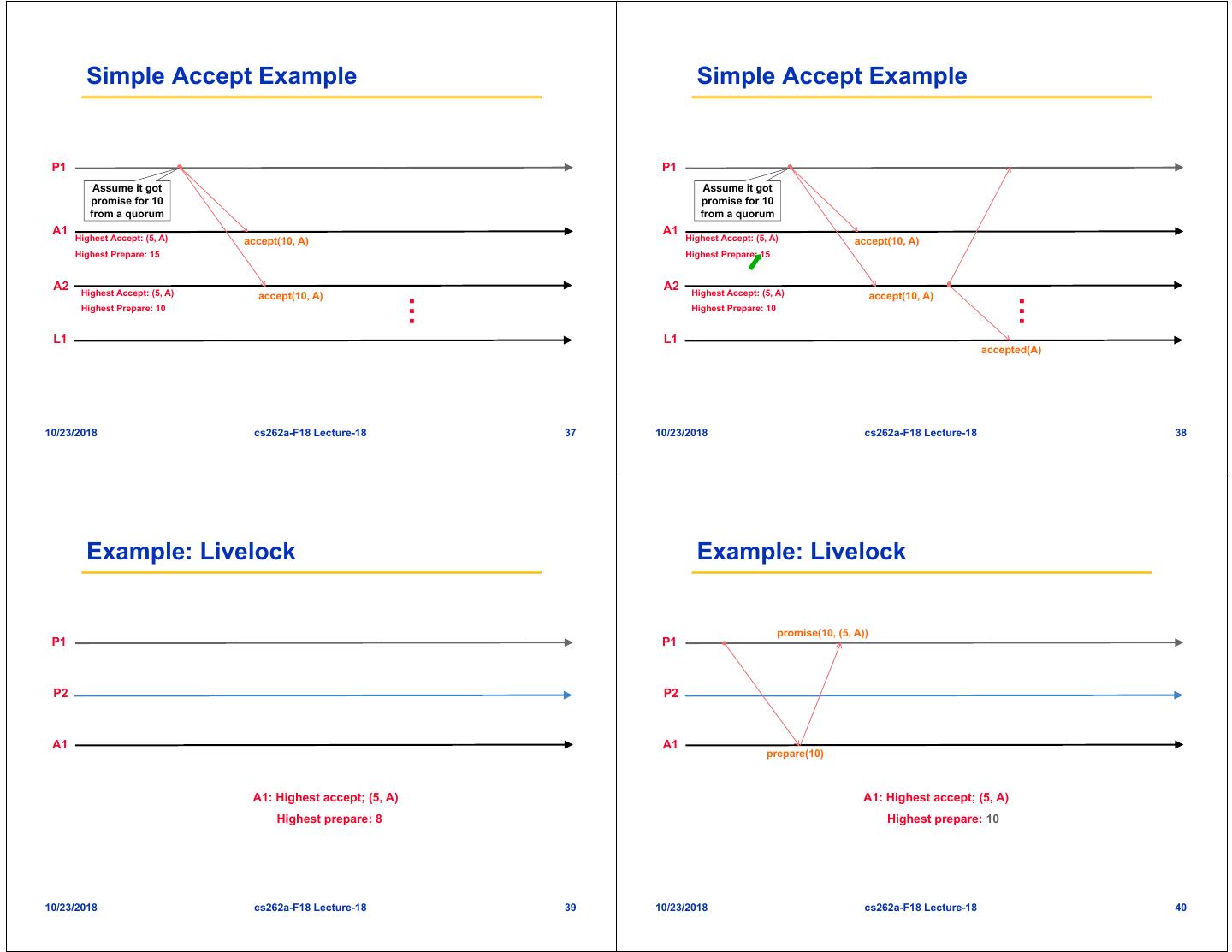

9 . Trivial Example: P1 wants to propose “A” Example promise(1)promise(1) P1 P1 A1 A1 prepare(1) accept(1, A) prepare(1) A2 A2 prepare(1) accept(1, A) prepare(1) L1 L1 accepted(A) accepted(A) 10/23/2018 cs262a-F18 Lecture-18 33 10/23/2018 cs262a-F18 Lecture-18 34 Prepare Example Prepare Example Promise(10, (5, A)) P1 P1 A1 A1 Highest Accept: (5, A) Prepare(10) Highest Accept: (5, A) Prepare(10) Highest Prepare: 15 Highest Prepare: 15 A2 Highest Accept: (5, A) A2 Highest Accept: (5, A) prepare(10) prepare(10) Highest Accept: (5, A) Highest Prepare: 8 Highest Prepare: 8 Highest Prepare: 10 10/23/2018 cs262a-F18 Lecture-18 35 10/23/2018 cs262a-F18 Lecture-18 36

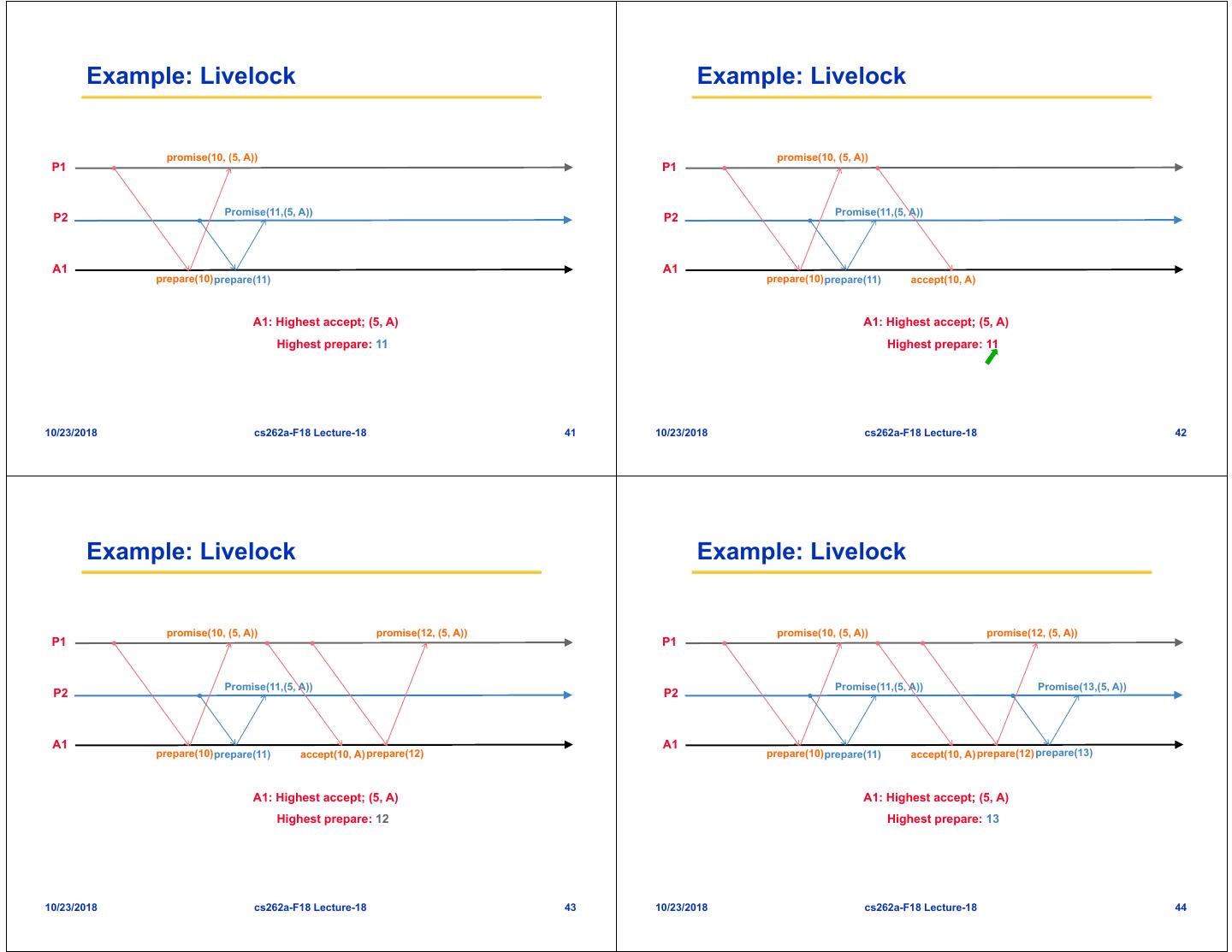

10 . Simple Accept Example Simple Accept Example P1 P1 Assume it got Assume it got promise for 10 promise for 10 from a quorum from a quorum A1 A1 Highest Accept: (5, A) accept(10, A) Highest Accept: (5, A) accept(10, A) Highest Prepare: 15 Highest Prepare: 15 A2 Highest Accept: (5, A) A2 Highest Accept: (5, A) accept(10, A) accept(10, A) … … Highest Prepare: 10 Highest Prepare: 10 L1 L1 accepted(A) 10/23/2018 cs262a-F18 Lecture-18 37 10/23/2018 cs262a-F18 Lecture-18 38 Example: Livelock Example: Livelock promise(10, (5, A)) P1 P1 P2 P2 A1 A1 prepare(10) A1: Highest accept; (5, A) A1: Highest accept; (5, A) Highest prepare: 8 Highest prepare: 10 10/23/2018 cs262a-F18 Lecture-18 39 10/23/2018 cs262a-F18 Lecture-18 40

11 . Example: Livelock Example: Livelock promise(10, (5, A)) promise(10, (5, A)) P1 P1 Promise(11,(5, A)) Promise(11,(5, A)) P2 P2 A1 A1 prepare(10) prepare(11) prepare(10) prepare(11) accept(10, A) A1: Highest accept; (5, A) A1: Highest accept; (5, A) Highest prepare: 11 Highest prepare: 11 10/23/2018 cs262a-F18 Lecture-18 41 10/23/2018 cs262a-F18 Lecture-18 42 Example: Livelock Example: Livelock promise(10, (5, A)) promise(12, (5, A)) promise(10, (5, A)) promise(12, (5, A)) P1 P1 Promise(11,(5, A)) Promise(11,(5, A)) Promise(13,(5, A)) P2 P2 A1 A1 prepare(10) prepare(11) accept(10, A) prepare(12) prepare(10) prepare(11) accept(10, A) prepare(12) prepare(13) A1: Highest accept; (5, A) A1: Highest accept; (5, A) Highest prepare: 12 Highest prepare: 13 10/23/2018 cs262a-F18 Lecture-18 43 10/23/2018 cs262a-F18 Lecture-18 44

12 . Example: P1 want to propose value A Example: P1 want to propose value A prom(5) prom(5) P1 P1 P2 P2 A1 A1 prep(5) prep(5) A2 A2 prep(5) prep(5) A3 A3 prep(5) prep(5) L L 10/23/2018 cs262a-F18 Lecture-18 45 10/23/2018 cs262a-F18 Lecture-18 46 Example: P1 want to propose value A Example: P1 want to propose value A prom(5) prom(5) prom(5) prom(5) accepted(A) P1 P1 P2 P2 A1 A1 prep(5) accept(5, A) prep(5) accept(5, A) A2 A2 prep(5) accept(5, A) prep(5) accept(5, A) A3 A3 prep(5) accept(5, A) prep(5) accept(5, A) L L accepted(A) 10/23/2018 cs262a-F18 Lecture-18 47 10/23/2018 cs262a-F18 Lecture-18 48

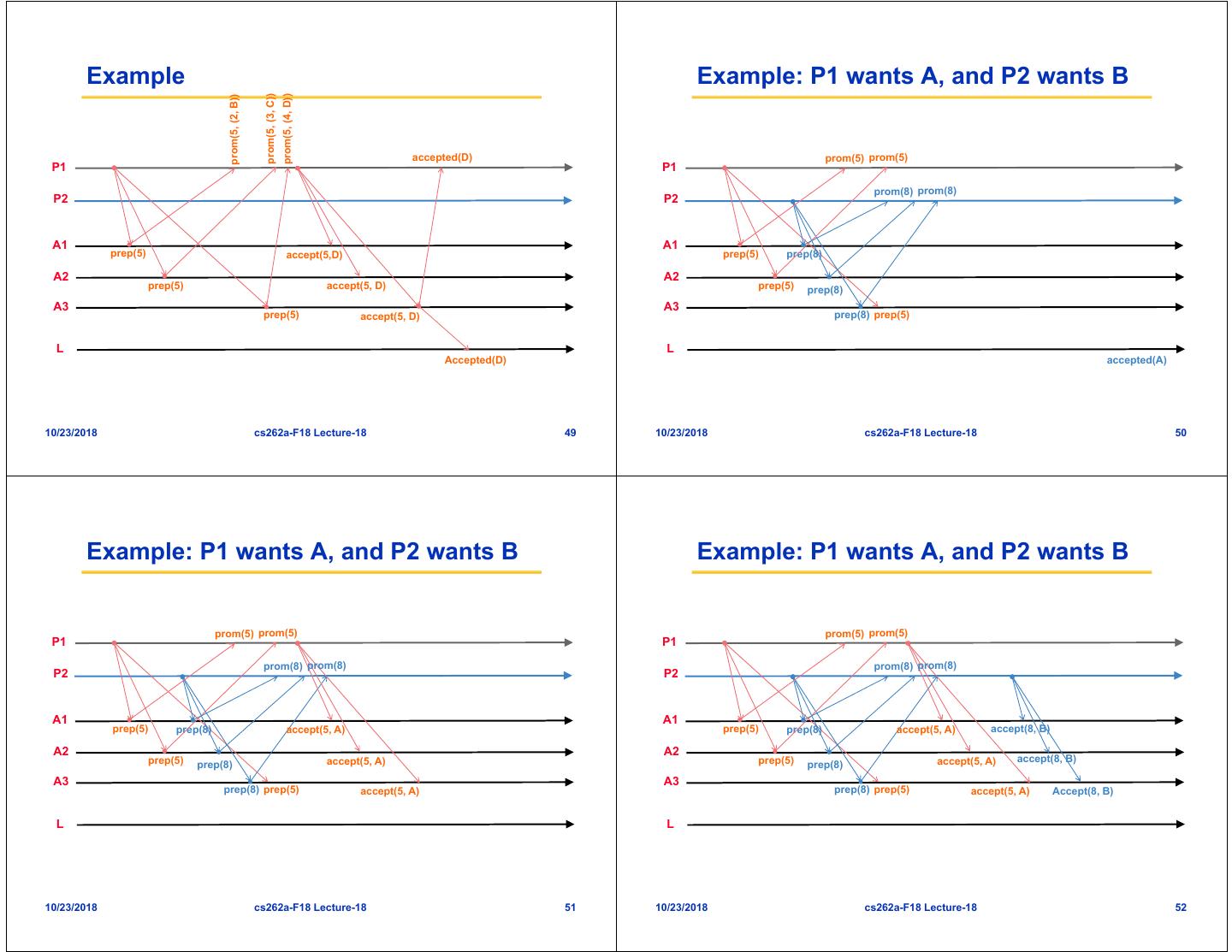

13 . Example Example: P1 wants A, and P2 wants B prom(5, (3, C)) prom(5, (4, D)) prom(5, (2, B)) accepted(D) prom(5) prom(5) P1 P1 prom(8) prom(8) P2 P2 A1 A1 prep(5) accept(5,D) prep(5) prep(8) A2 A2 prep(5) accept(5, D) prep(5) prep(8) A3 A3 prep(5) accept(5, D) prep(8) prep(5) L L Accepted(D) accepted(A) 10/23/2018 cs262a-F18 Lecture-18 49 10/23/2018 cs262a-F18 Lecture-18 50 Example: P1 wants A, and P2 wants B Example: P1 wants A, and P2 wants B prom(5) prom(5) prom(5) prom(5) P1 P1 prom(8) prom(8) prom(8) prom(8) P2 P2 A1 A1 prep(5) prep(8) accept(5, A) prep(5) prep(8) accept(5, A) accept(8, B) A2 A2 prep(5) accept(5, A) prep(5) accept(5, A) accept(8, B) prep(8) prep(8) A3 A3 prep(8) prep(5) accept(5, A) prep(8) prep(5) accept(5, A) Accept(8, B) L L 10/23/2018 cs262a-F18 Lecture-18 51 10/23/2018 cs262a-F18 Lecture-18 52

14 . Example: P1 wants A, and P2 wants B Others • In practice send NACKs if not accepting a promise prom(5) prom(5) P1 • Promise IDs should increase slowly prom(8) prom(8) Accepted(8, B) – Otherwise too much too converge P2 – Solution: different ID spaces for proposers A1 prep(5) prep(8) accept(5, A) accept(8, B) A2 prep(5) accept(5, A) accept(8, B) prep(8) A3 prep(8) prep(5) accept(5, A) accept(8, B) L Accepted(8, B) 10/23/2018 cs262a-F18 Lecture-18 53 10/23/2018 cs262a-F18 Lecture-18 54 Paxos in Chubby Architecture • MultiPaxos: – Steps 1 (prepare) and 2 (promise) done once – Steps 3 (accept!) and 4 (accepted) repeated multiple times by same leader • Replicas in a cell initially use Paxos to establish the leader – Majority of replicas must agree • Optimization: Master Lease – Replicas promise not to try to elect new master for at least a few seconds – Master lease is periodically renewed • Master failure – If replicas lose contact with master, they wait for grace period (4-6 secs) – On timeout, hold new election 10/23/2018 cs262a-F18 Lecture-18 55 10/23/2018 cs262a-F18 Lecture-18 56

15 . From Theory to Practice: Fault-tolerant LOG implement with Paxos Building a Correct System • Disk Corruption • Simple one-page pseudocode for Paxos algorithm == thousands of – Need to recognize and handle subtle corruption in stable state lines of C++ code – Created simple state machine specification language and compiler • Use of Master Leases – Resulting code is “Correct by construction” – Grant leadership for fixed period of time • Aggressive testing strategy – Allows clients to read latest value from Master – Tests for safety (consistent) and liveness (consistent and making progress) – Prevents inefficient oscillation in algorithm – Added entry points for test harnesses • Use of Epochs – Reproducible simulation environment » Injection of random errors in network and hardware – Recognize when leader changes » Use of pseudo-random seed provided reproducibility – Chubby semantics requires abort in these circumstances • Data structure and database corruption • Group membership – Aggressive, liberal usage of assert statements (makes Chubby fail-stop) – Use of Paxos protocol to select servers that are members of Paxos group – Added lots of checksum checks • Snapshot integration with Paxos • Upgrades and rollbacks are hard – Fix buggy scripts! • MultiOp – Recognize differences between developers and operators – Allows implementation of atomic operations on log • Forced to “add concurrency” as project progressed – If (guard[database]) then {t_op} else {f_op} 10/23/2018 cs262a-F18 Lecture-18 57 10/23/2018 cs262a-F18 Lecture-18 58 Reliability Summary • Started out using replicated Berkeley DB (“3DB”) • Simple protocols win again (?!) – Ill-defined, unproven, buggy replication protocol • Reuse of functionality • Replaced with custom write-thru logging DB – Chubby uses GFS – GFS uses Chubby • Entire database periodically sent to GFS – In a different data center • Many challenges going from theoretical algorithm to practical implementation – No tools for implementing fault-tolerant protocols • Chubby replicas span multiple racks – Test, test, and test again (critical component!) – Everything can be corrupted so checksum everything – People are fallible (so are scripts!) 10/23/2018 cs262a-F18 Lecture-18 59 10/23/2018 cs262a-F18 Lecture-18 60

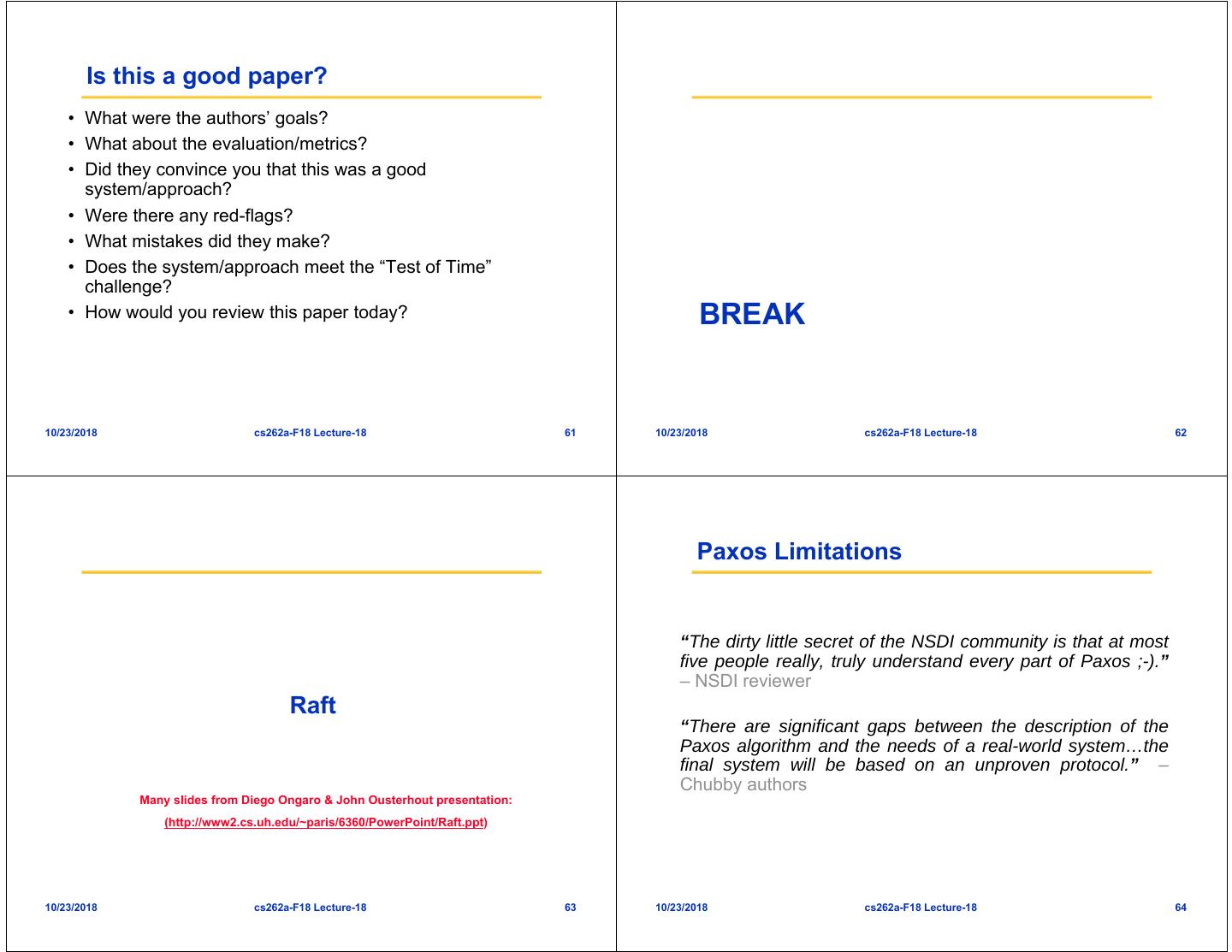

16 . Is this a good paper? • What were the authors’ goals? • What about the evaluation/metrics? • Did they convince you that this was a good system/approach? • Were there any red-flags? • What mistakes did they make? • Does the system/approach meet the “Test of Time” challenge? • How would you review this paper today? BREAK 10/23/2018 cs262a-F18 Lecture-18 61 10/23/2018 cs262a-F18 Lecture-18 62 Paxos Limitations “The dirty little secret of the NSDI community is that at most five people really, truly understand every part of Paxos ;-).” – NSDI reviewer Raft “There are significant gaps between the description of the Paxos algorithm and the needs of a real-world system…the final system will be based on an unproven protocol.” – Chubby authors Many slides from Diego Ongaro & John Ousterhout presentation: (http://www2.cs.uh.edu/~paris/6360/PowerPoint/Raft.ppt) 10/23/2018 cs262a-F18 Lecture-18 63 10/23/2018 cs262a-F18 Lecture-18 64

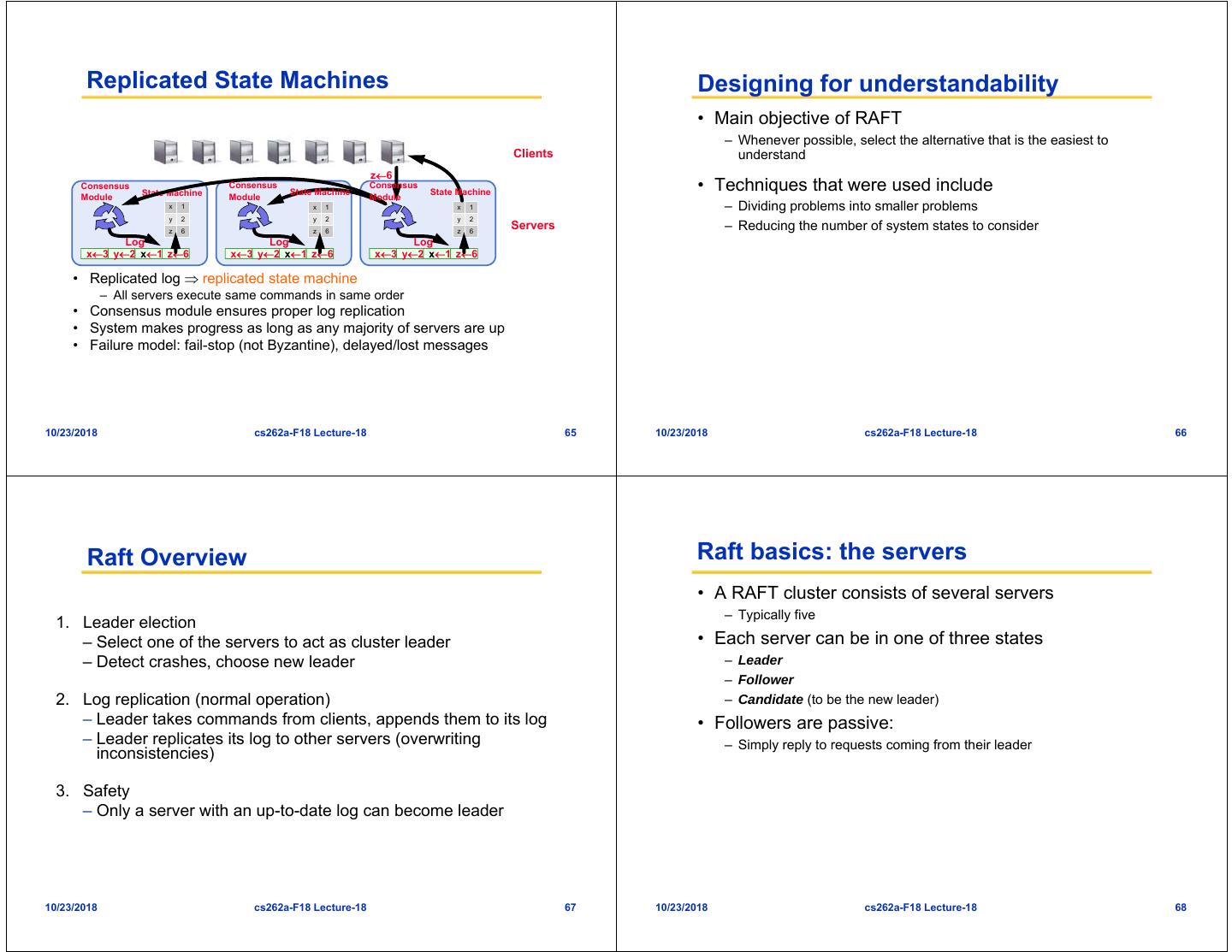

17 . Replicated State Machines Designing for understandability • Main objective of RAFT – Whenever possible, select the alternative that is the easiest to Clients understand z6 Consensus State Machine Consensus State Machine Consensus State Machine • Techniques that were used include Module Module Module x 1 x 1 x 1 – Dividing problems into smaller problems y 2 y 2 y 2 z 6 z 6 z 6 Servers – Reducing the number of system states to consider Log Log Log x3 y2 x1 z6 x3 y2 x1 z6 x3 y2 x1 z6 • Replicated log replicated state machine – All servers execute same commands in same order • Consensus module ensures proper log replication • System makes progress as long as any majority of servers are up • Failure model: fail-stop (not Byzantine), delayed/lost messages 10/23/2018 cs262a-F18 Lecture-18 65 10/23/2018 cs262a-F18 Lecture-18 66 Raft Overview Raft basics: the servers • A RAFT cluster consists of several servers – Typically five 1. Leader election – Select one of the servers to act as cluster leader • Each server can be in one of three states – Detect crashes, choose new leader – Leader – Follower 2. Log replication (normal operation) – Candidate (to be the new leader) – Leader takes commands from clients, appends them to its log • Followers are passive: – Leader replicates its log to other servers (overwriting – Simply reply to requests coming from their leader inconsistencies) 3. Safety – Only a server with an up-to-date log can become leader 10/23/2018 cs262a-F18 Lecture-18 67 10/23/2018 cs262a-F18 Lecture-18 68

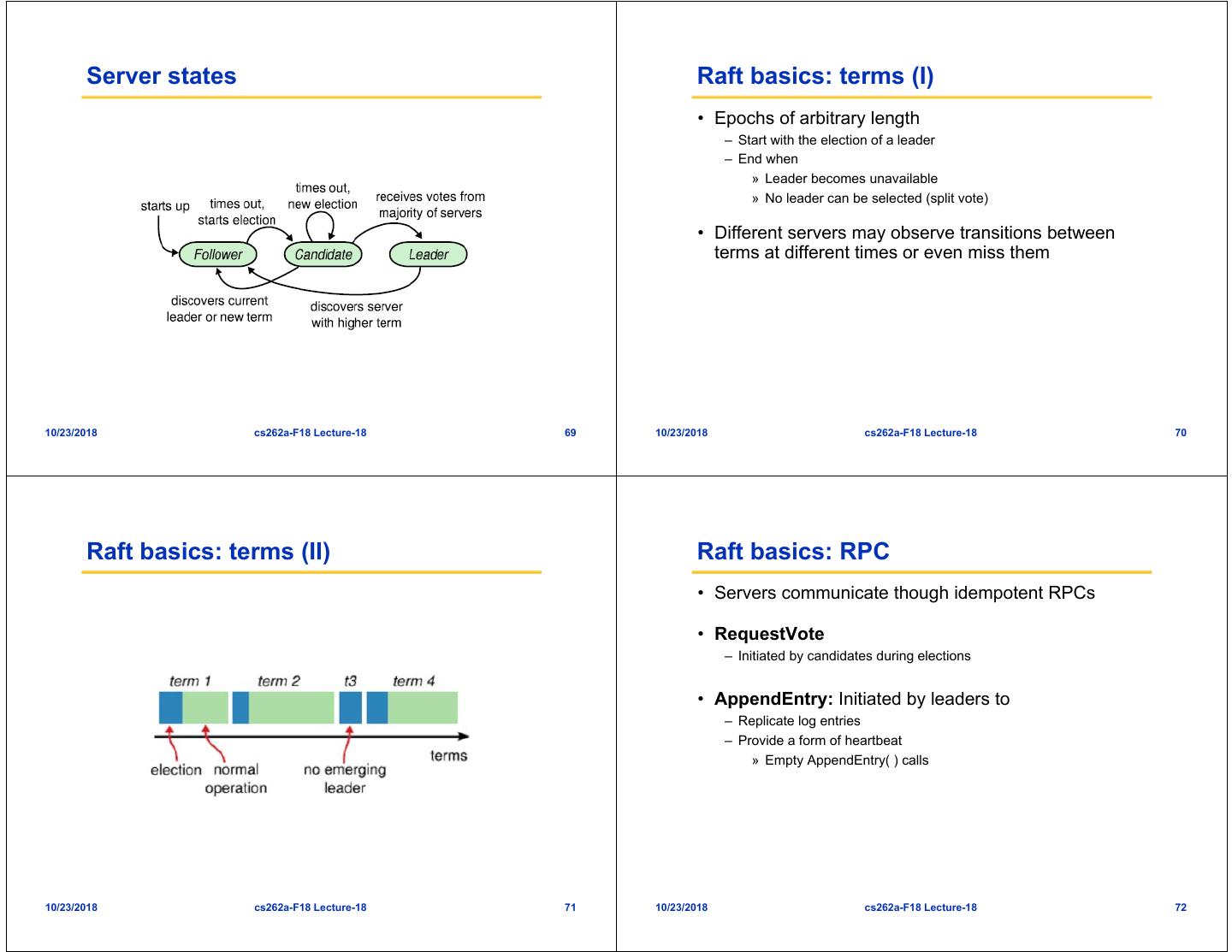

18 . Server states Raft basics: terms (I) • Epochs of arbitrary length – Start with the election of a leader – End when » Leader becomes unavailable » No leader can be selected (split vote) • Different servers may observe transitions between terms at different times or even miss them 10/23/2018 cs262a-F18 Lecture-18 69 10/23/2018 cs262a-F18 Lecture-18 70 Raft basics: terms (II) Raft basics: RPC • Servers communicate though idempotent RPCs • RequestVote – Initiated by candidates during elections • AppendEntry: Initiated by leaders to – Replicate log entries – Provide a form of heartbeat » Empty AppendEntry( ) calls 10/23/2018 cs262a-F18 Lecture-18 71 10/23/2018 cs262a-F18 Lecture-18 72

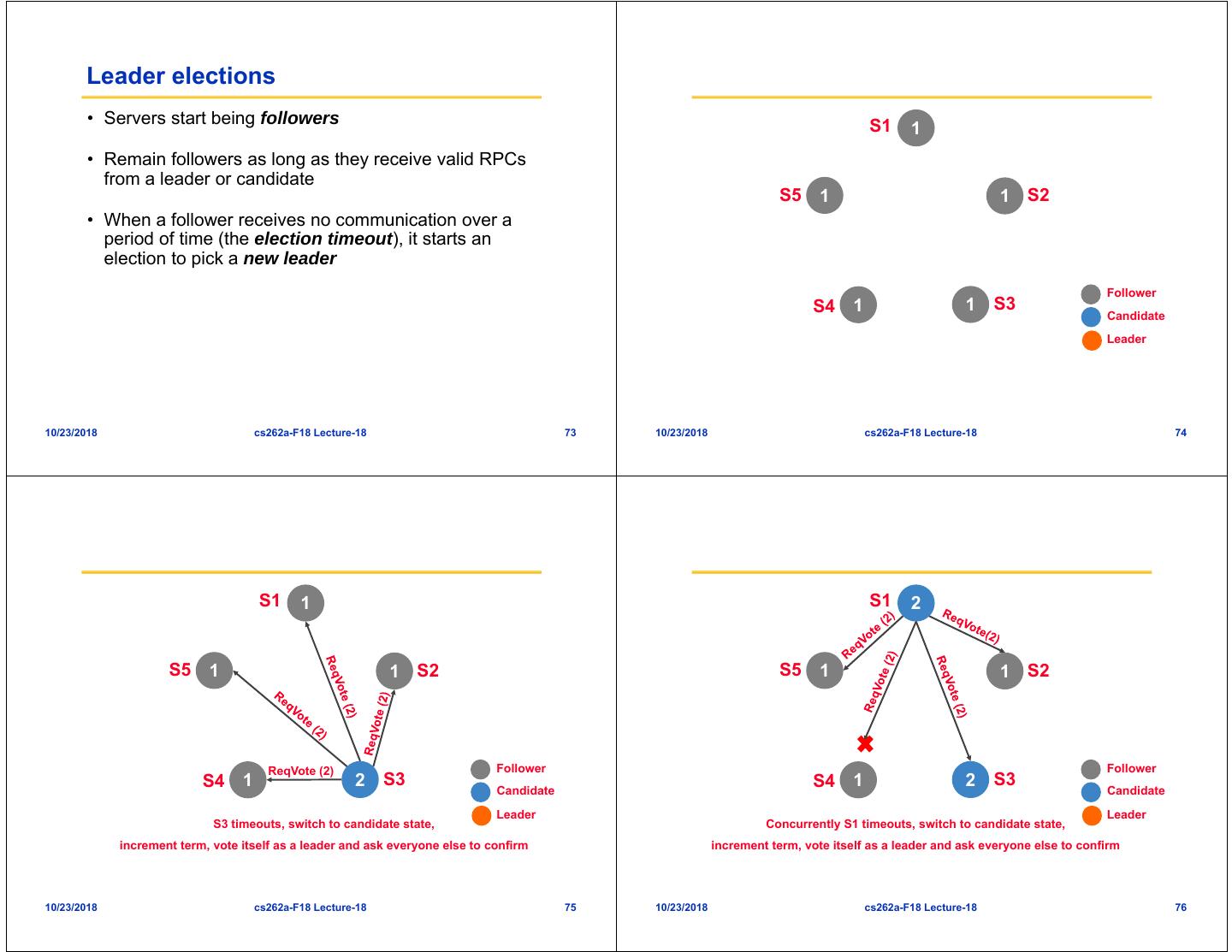

19 . Leader elections • Servers start being followers S1 1 • Remain followers as long as they receive valid RPCs from a leader or candidate S5 1 1 S2 • When a follower receives no communication over a period of time (the election timeout), it starts an election to pick a new leader Follower S4 1 1 S3 Candidate Leader 10/23/2018 cs262a-F18 Lecture-18 73 10/23/2018 cs262a-F18 Lecture-18 74 S1 1 S1 2 S5 1 1 S2 S5 1 1 S2 ReqVote (2) Follower Follower S4 1 2 S3 S4 1 2 S3 Candidate Candidate Leader Leader S3 timeouts, switch to candidate state, Concurrently S1 timeouts, switch to candidate state, increment term, vote itself as a leader and ask everyone else to confirm increment term, vote itself as a leader and ask everyone else to confirm 10/23/2018 cs262a-F18 Lecture-18 75 10/23/2018 cs262a-F18 Lecture-18 76

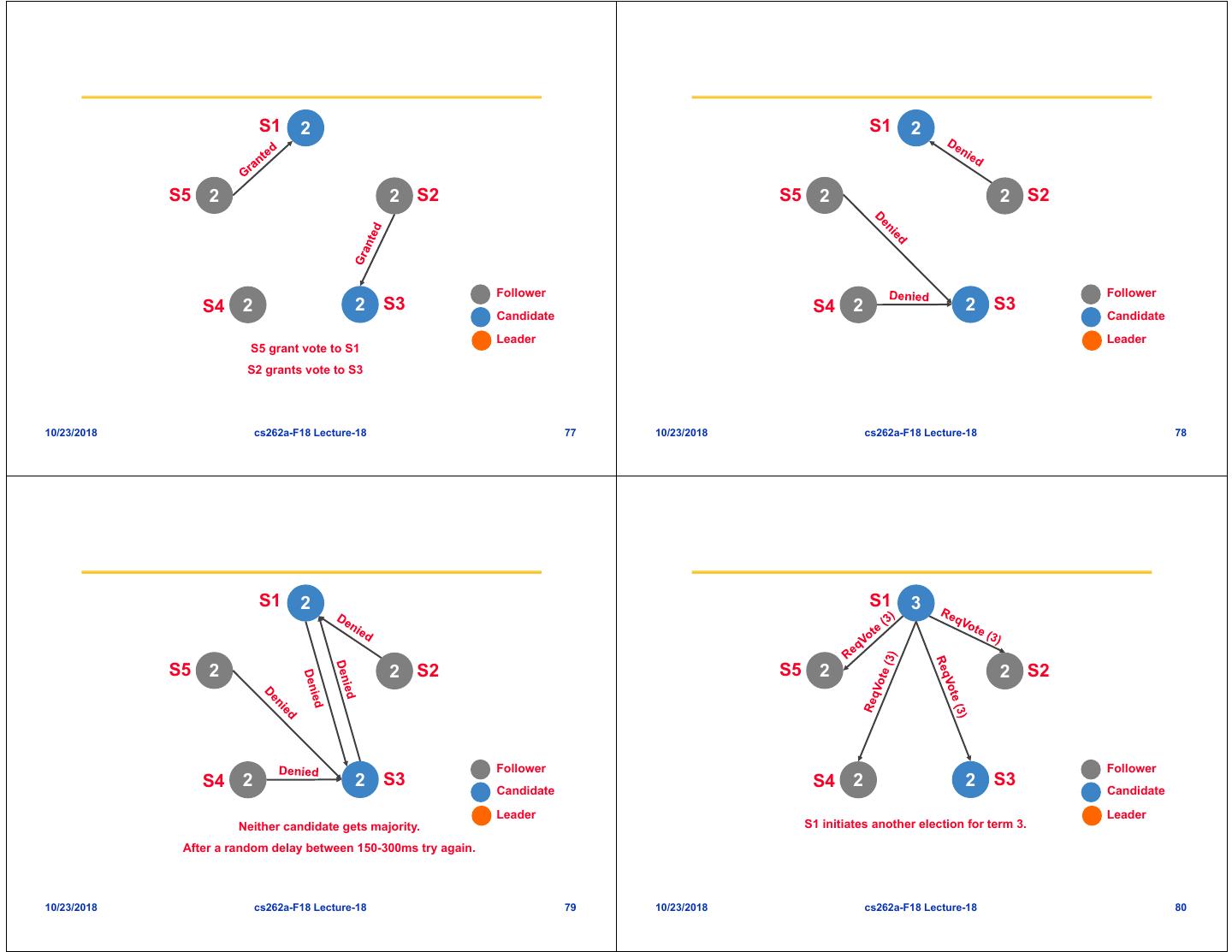

20 . S1 2 S1 2 S5 2 2 S2 S5 2 2 S2 Follower Follower S4 2 2 S3 S4 2 2 S3 Candidate Candidate Leader Leader S5 grant vote to S1 S2 grants vote to S3 10/23/2018 cs262a-F18 Lecture-18 77 10/23/2018 cs262a-F18 Lecture-18 78 S1 2 S1 3 S5 2 2 S2 S5 2 2 S2 Follower Follower S4 2 2 S3 S4 2 2 S3 Candidate Candidate Leader Leader Neither candidate gets majority. S1 initiates another election for term 3. After a random delay between 150-300ms try again. 10/23/2018 cs262a-F18 Lecture-18 79 10/23/2018 cs262a-F18 Lecture-18 80

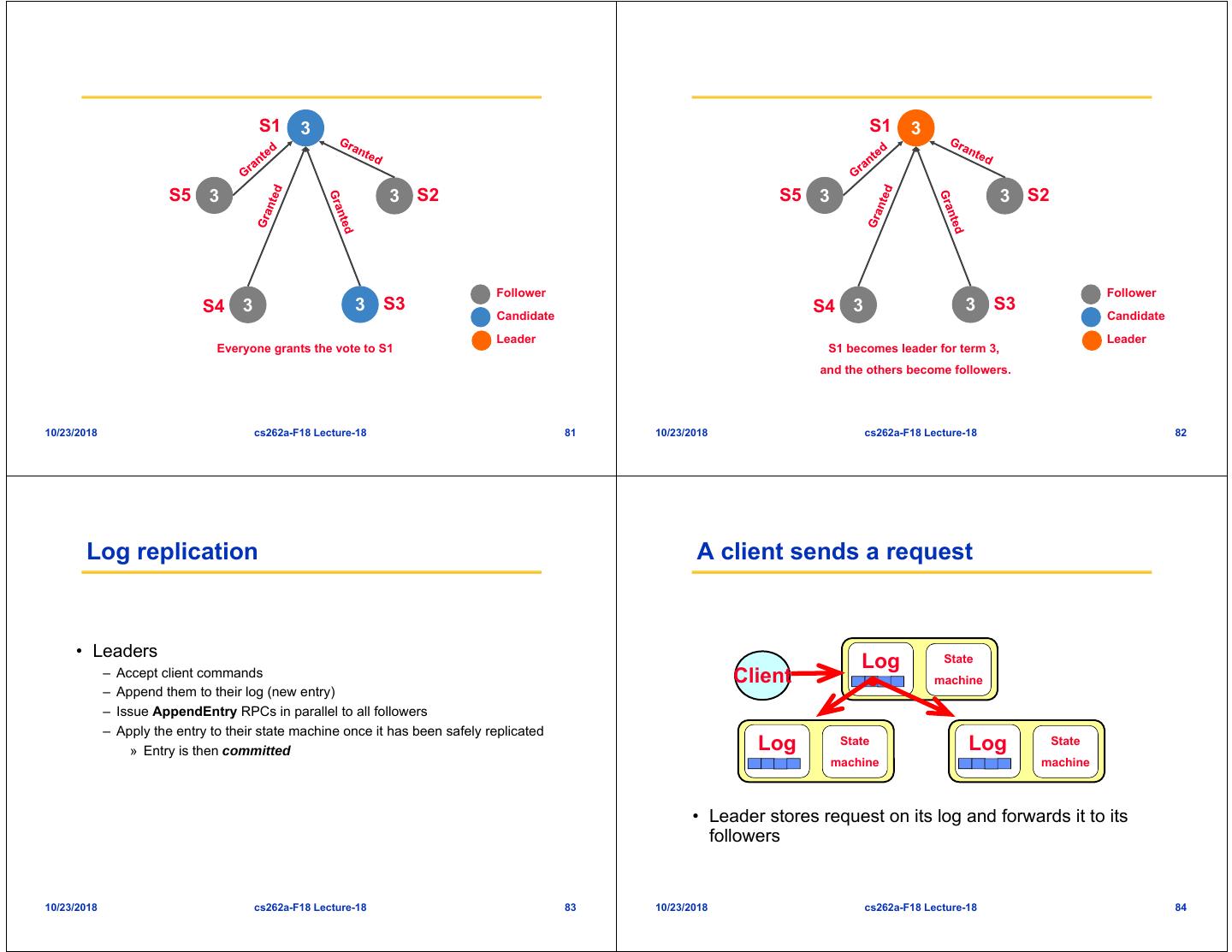

21 . S1 3 S1 3 S5 3 3 S2 S5 3 3 S2 Follower Follower S4 3 3 S3 S4 3 3 S3 Candidate Candidate Leader Leader Everyone grants the vote to S1 S1 becomes leader for term 3, and the others become followers. 10/23/2018 cs262a-F18 Lecture-18 81 10/23/2018 cs262a-F18 Lecture-18 82 Log replication A client sends a request • Leaders Log State – Accept client commands Client machine – Append them to their log (new entry) – Issue AppendEntry RPCs in parallel to all followers – Apply the entry to their state machine once it has been safely replicated » Entry is then committed Log State Log State machine machine • Leader stores request on its log and forwards it to its followers 10/23/2018 cs262a-F18 Lecture-18 83 10/23/2018 cs262a-F18 Lecture-18 84

22 . The followers receive the request The leader tallies followers' ACKs Log State Log State Client machine Client machine Log State Log State Log State Log State machine machine machine machine • Followers store the request on their logs and • Once it ascertains the request has been processed by a acknowledge its receipt majority of the servers, it updates its state machine 10/23/2018 cs262a-F18 Lecture-18 85 10/23/2018 cs262a-F18 Lecture-18 86 The leader tallies followers' ACKs Log organization Log State Client machine Colors identify Log State Log State terms machine machine • Leader's heartbeats convey the news to its followers: they update their state machines 10/23/2018 cs262a-F18 Lecture-18 87 10/23/2018 cs262a-F18 Lecture-18 88

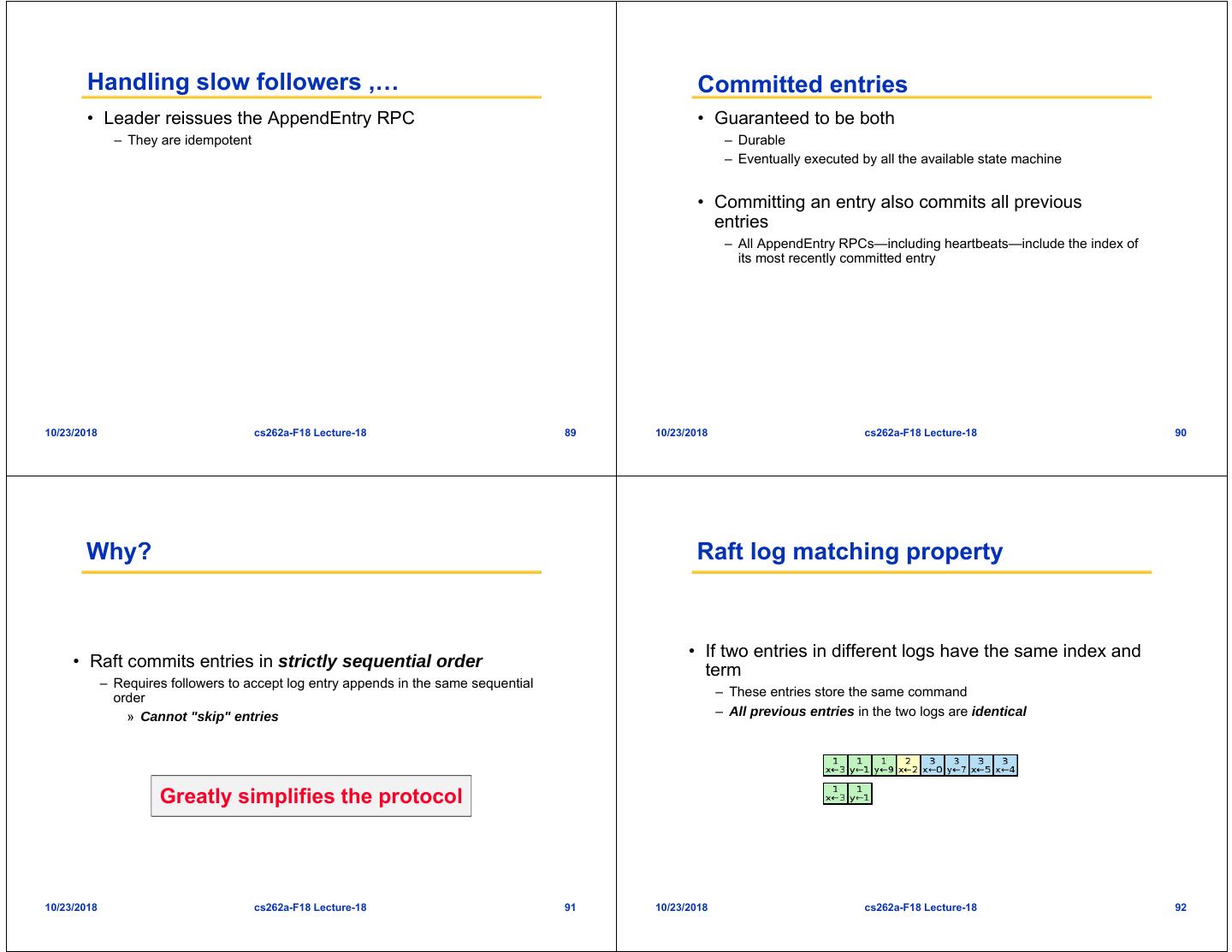

23 . Handling slow followers ,… Committed entries • Leader reissues the AppendEntry RPC • Guaranteed to be both – They are idempotent – Durable – Eventually executed by all the available state machine • Committing an entry also commits all previous entries – All AppendEntry RPCs—including heartbeats—include the index of its most recently committed entry 10/23/2018 cs262a-F18 Lecture-18 89 10/23/2018 cs262a-F18 Lecture-18 90 Why? Raft log matching property • If two entries in different logs have the same index and • Raft commits entries in strictly sequential order term – Requires followers to accept log entry appends in the same sequential order – These entries store the same command » Cannot "skip" entries – All previous entries in the two logs are identical Greatly simplifies the protocol 10/23/2018 cs262a-F18 Lecture-18 91 10/23/2018 cs262a-F18 Lecture-18 92

24 . Safety Election restriction (I) • Two main questions • The log of any new leader must contain all previously committed entries 1. What if the log of a new leader did not contain all – Candidates include in their RequestVote RPCs information about previously committed entries? the state of their log – Before voting for a candidate, servers check that the log of the – Must impose conditions on new leaders candidate is at least as up to date as their own log. » Majority rule does the rest 2. How to commit entries from a previous term? – Must tune the commit mechanism 10/23/2018 cs262a-F18 Lecture-18 93 10/23/2018 cs262a-F18 Lecture-18 94 Election restriction (II) Committing entries from previous term • A leader cannot conclude that an entry from a previous term is committed even if stored on a majority of servers. Servers holding Servers having • Leader should never commits log entries from previous terms by counting replicas the last committed elected the • Should only do it for entries from the current term log entry new leader • Once it has been able to do that for one entry, all prior entries are committed indirectly Two majorities of the same cluster must intersect 10/23/2018 cs262a-F18 Lecture-18 95 10/23/2018 cs262a-F18 Lecture-18 96

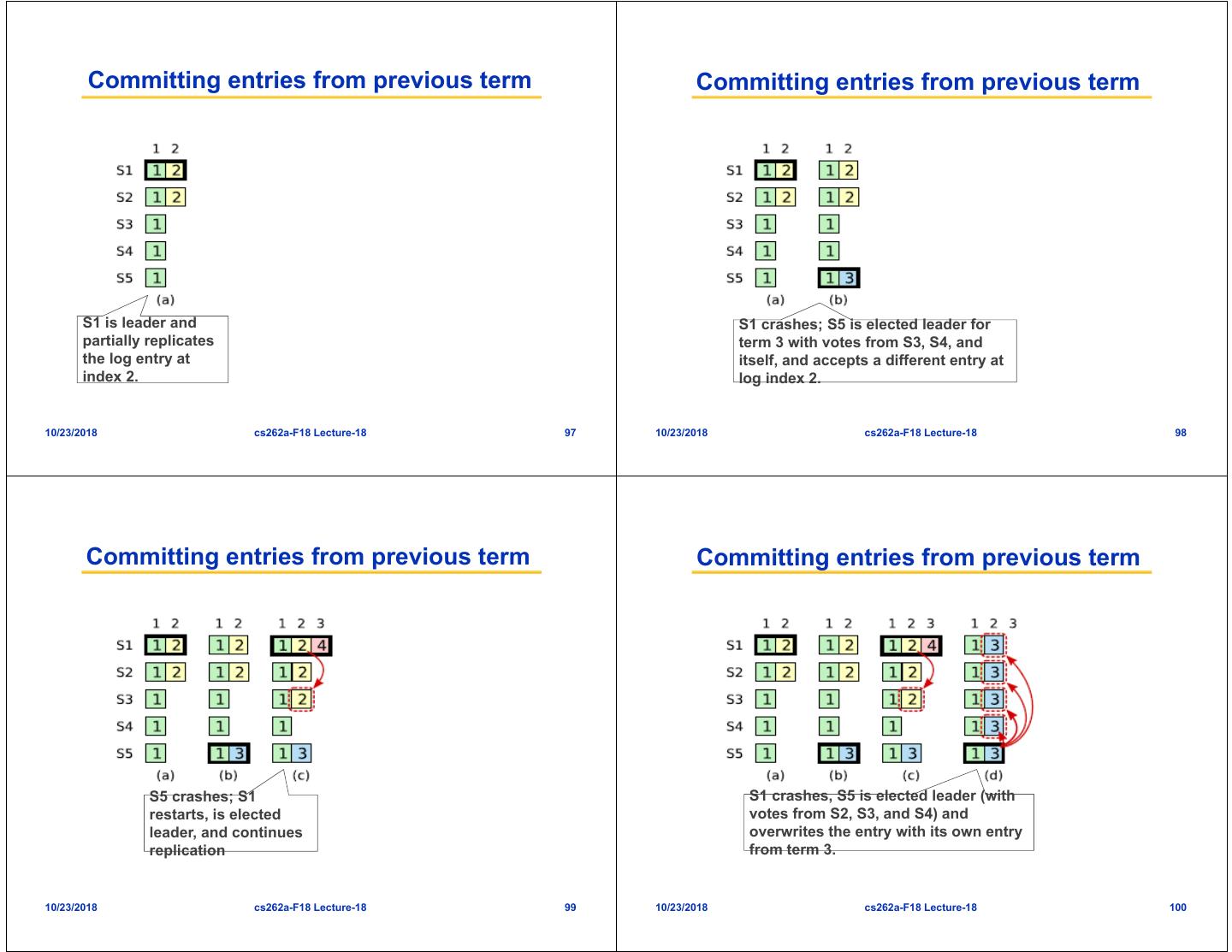

25 . Committing entries from previous term Committing entries from previous term S1 is leader and S1 crashes; S5 is elected leader for partially replicates term 3 with votes from S3, S4, and the log entry at itself, and accepts a different entry at index 2. log index 2. 10/23/2018 cs262a-F18 Lecture-18 97 10/23/2018 cs262a-F18 Lecture-18 98 Committing entries from previous term Committing entries from previous term S5 crashes; S1 S1 crashes, S5 is elected leader (with restarts, is elected votes from S2, S3, and S4) and leader, and continues overwrites the entry with its own entry replication from term 3. 10/23/2018 cs262a-F18 Lecture-18 99 10/23/2018 cs262a-F18 Lecture-18 100

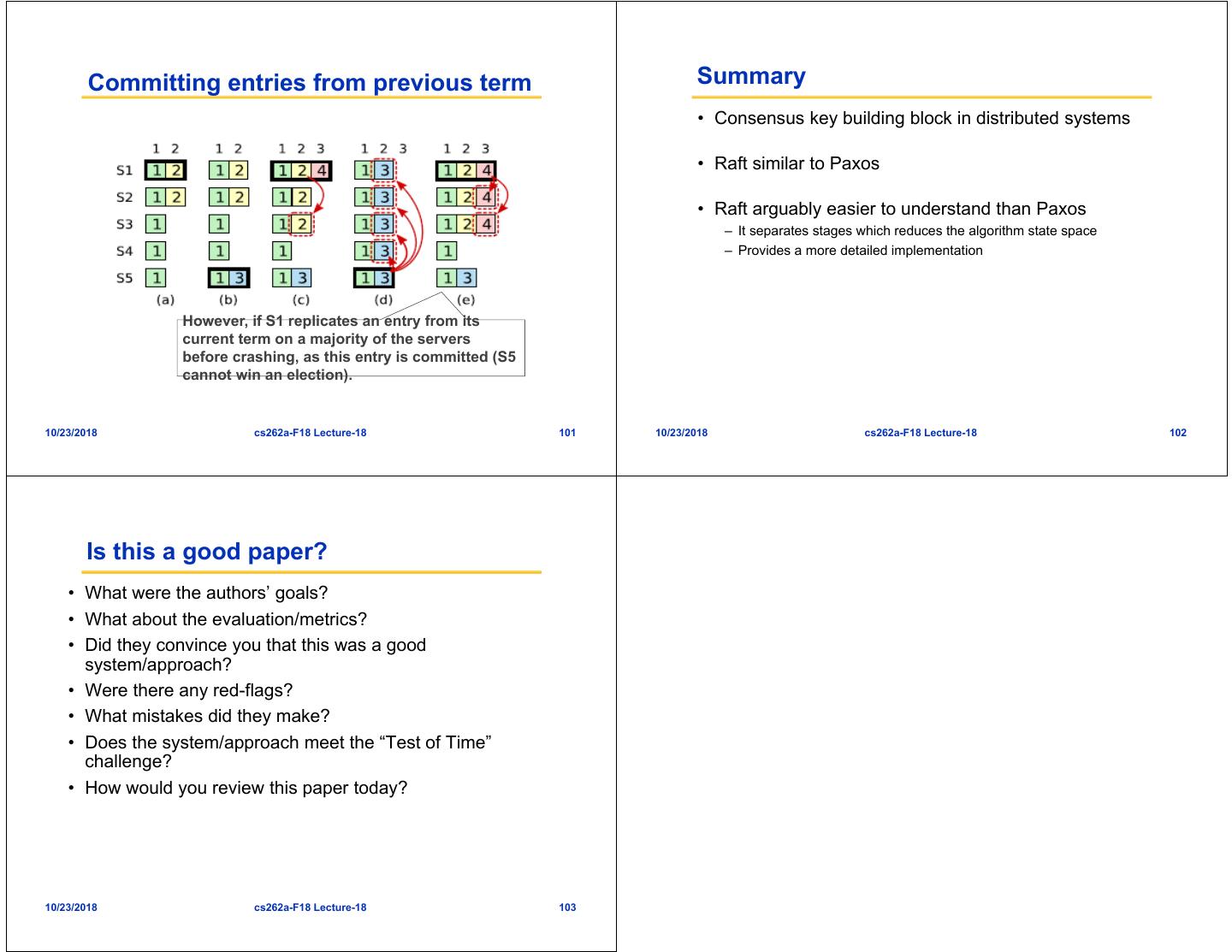

26 . Committing entries from previous term Summary • Consensus key building block in distributed systems • Raft similar to Paxos • Raft arguably easier to understand than Paxos – It separates stages which reduces the algorithm state space – Provides a more detailed implementation However, if S1 replicates an entry from its current term on a majority of the servers before crashing, as this entry is committed (S5 cannot win an election). 10/23/2018 cs262a-F18 Lecture-18 101 10/23/2018 cs262a-F18 Lecture-18 102 Is this a good paper? • What were the authors’ goals? • What about the evaluation/metrics? • Did they convince you that this was a good system/approach? • Were there any red-flags? • What mistakes did they make? • Does the system/approach meet the “Test of Time” challenge? • How would you review this paper today? 10/23/2018 cs262a-F18 Lecture-18 103