- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

01Advanced Topics in Computer Systems

展开查看详情

1 . Who am I? • Professor John Kubiatowicz (Prof “Kubi”) EECS 262a Alewife – Background in Hardware Design Advanced Topics in Computer Systems » Alewife project at MIT » Designed CMMU, Modified SPAR C processor Lecture 1 » Helped to write operating system – Background in Operating Systems » Worked for Project Athena (MIT) Tessellation Introduction/UNIX » OS Developer (device drivers, network file systems) August 23, 2018 » Worked on Clustered High-Availability systems. – Peer-to-Peer » OceanStore project – Store your data for 1000 years John Kubiatowicz » Tapestry and Bamboo – Find you data around globe Electrical Engineering and Computer Sciences – SwarmLAB/Berkeley Lab for Intelligent Edge University of California, Berkeley » Global Data Plane (GDP)/DataCapsules OceanStore » Fog Robotics http://www.eecs.berkeley.edu/~kubitron/cs262a – Quantum Computing » Exploring architectures for quantum computers » CAD tool set yields some interesting results 8/23/2018 cs262a-F18 Lecture-01 2 Computing Devices Then… Computing Systems Today • The world is a large parallel system Massive Cluster – Microprocessors in everything Clusters – Vast infrastructure behind them Gigabit Ethernet Internet Scalable, Reliable, Connectivity Secure Services Databases Refrigerators Information Collection Remote Storage Online Games Sensor Commerce Nets … Cars EDSAC, University of Cambridge, UK, 1948 IoT Routers Robots 8/23/2018 cs262a-F18 Lecture-01 3 8/23/2018 cs262a-F18 Lecture-01 4

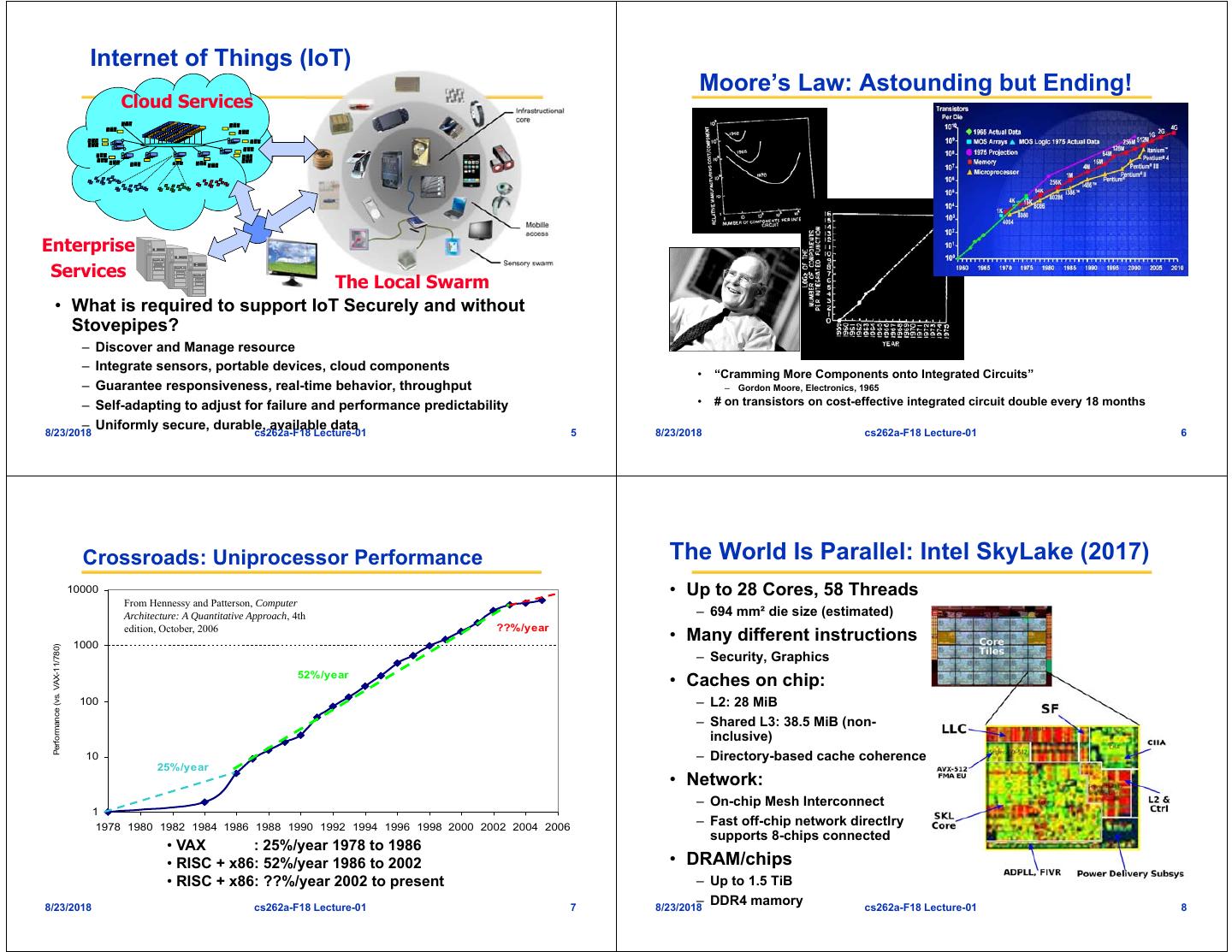

2 . Internet of Things (IoT) Moore’s Law: Astounding but Ending! Cloud Services Enterprise Services The Local Swarm • What is required to support IoT Securely and without Stovepipes? – Discover and Manage resource – Integrate sensors, portable devices, cloud components • “Cramming More Components onto Integrated Circuits” – Guarantee responsiveness, real-time behavior, throughput – Gordon Moore, Electronics, 1965 – Self-adapting to adjust for failure and performance predictability • # on transistors on cost-effective integrated circuit double every 18 months – Uniformly secure, durable, available data 8/23/2018 cs262a-F18 Lecture-01 5 8/23/2018 cs262a-F18 Lecture-01 6 Crossroads: Uniprocessor Performance The World Is Parallel: Intel SkyLake (2017) 10000 • Up to 28 Cores, 58 Threads From Hennessy and Patterson, Computer Architecture: A Quantitative Approach, 4th – 694 mm² die size (estimated) edition, October, 2006 ??%/year 1000 • Many different instructions Performance (vs. VAX-11/780) – Security, Graphics 52%/year • Caches on chip: 100 – L2: 28 MiB – Shared L3: 38.5 MiB (non- inclusive) 10 – Directory-based cache coherence 25%/year • Network: – On-chip Mesh Interconnect 1 1978 1980 1982 1984 1986 1988 1990 1992 1994 1996 1998 2000 2002 2004 2006 – Fast off-chip network directlry supports 8-chips connected • VAX : 25%/year 1978 to 1986 • RISC + x86: 52%/year 1986 to 2002 • DRAM/chips • RISC + x86: ??%/year 2002 to present – Up to 1.5 TiB 8/23/2018 cs262a-F18 Lecture-01 7 – DDR4 mamory 8/23/2018 cs262a-F18 Lecture-01 8

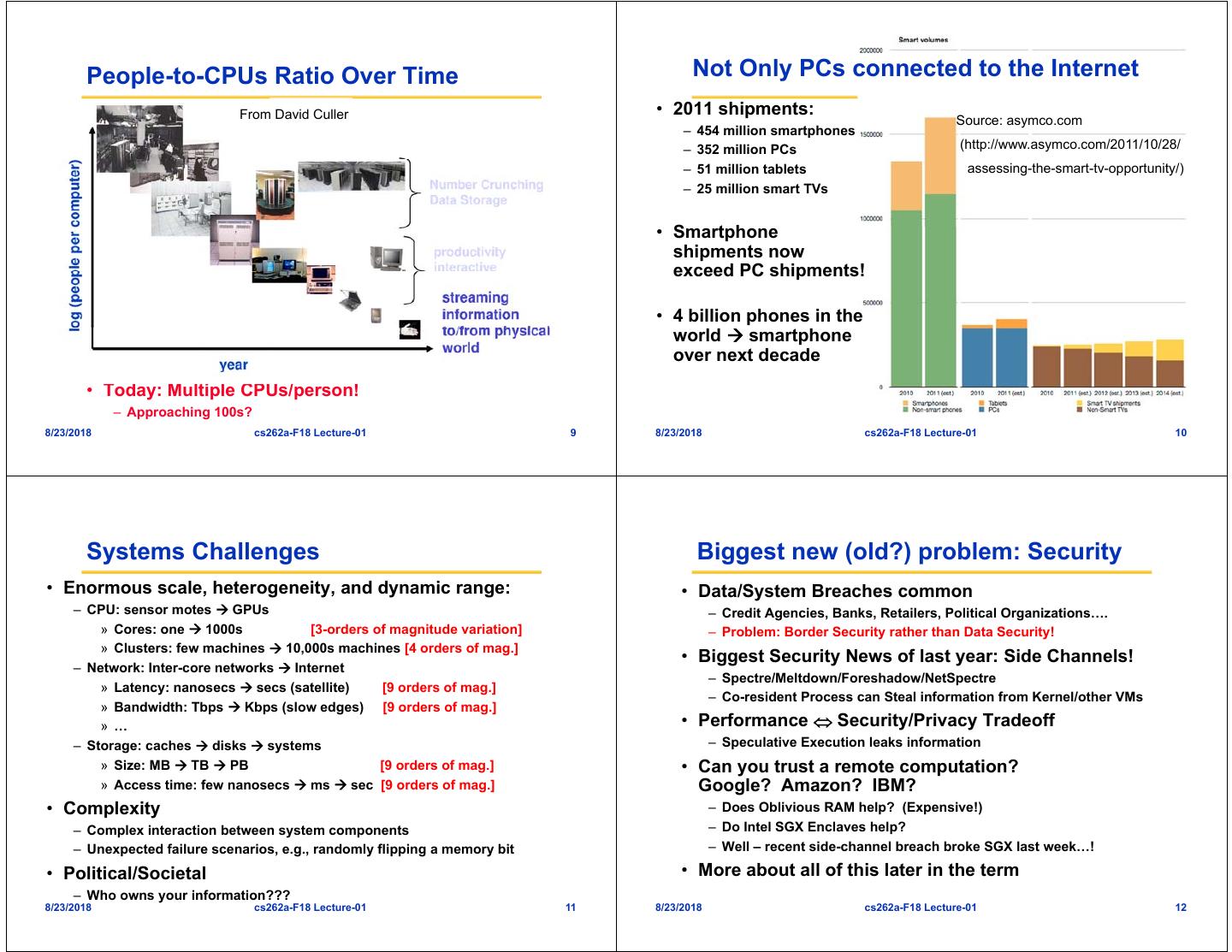

3 . People-to-CPUs Ratio Over Time Not Only PCs connected to the Internet From David Culler • 2011 shipments: Source: asymco.com – 454 million smartphones – 352 million PCs (http://www.asymco.com/2011/10/28/ – 51 million tablets assessing-the-smart-tv-opportunity/) – 25 million smart TVs • Smartphone shipments now exceed PC shipments! • 4 billion phones in the world smartphone over next decade • Today: Multiple CPUs/person! – Approaching 100s? 8/23/2018 cs262a-F18 Lecture-01 9 8/23/2018 cs262a-F18 Lecture-01 10 Systems Challenges Biggest new (old?) problem: Security • Enormous scale, heterogeneity, and dynamic range: • Data/System Breaches common – CPU: sensor motes GPUs – Credit Agencies, Banks, Retailers, Political Organizations…. » Cores: one 1000s [3-orders of magnitude variation] – Problem: Border Security rather than Data Security! » Clusters: few machines 10,000s machines [4 orders of mag.] • Biggest Security News of last year: Side Channels! – Network: Inter-core networks Internet – Spectre/Meltdown/Foreshadow/NetSpectre » Latency: nanosecs secs (satellite) [9 orders of mag.] – Co-resident Process can Steal information from Kernel/other VMs » Bandwidth: Tbps Kbps (slow edges) [9 orders of mag.] » … • Performance Security/Privacy Tradeoff – Storage: caches disks systems – Speculative Execution leaks information » Size: MB TB PB [9 orders of mag.] • Can you trust a remote computation? » Access time: few nanosecs ms sec [9 orders of mag.] Google? Amazon? IBM? • Complexity – Does Oblivious RAM help? (Expensive!) – Complex interaction between system components – Do Intel SGX Enclaves help? – Unexpected failure scenarios, e.g., randomly flipping a memory bit – Well – recent side-channel breach broke SGX last week…! • Political/Societal • More about all of this later in the term – Who owns your information??? 8/23/2018 cs262a-F18 Lecture-01 11 8/23/2018 cs262a-F18 Lecture-01 12

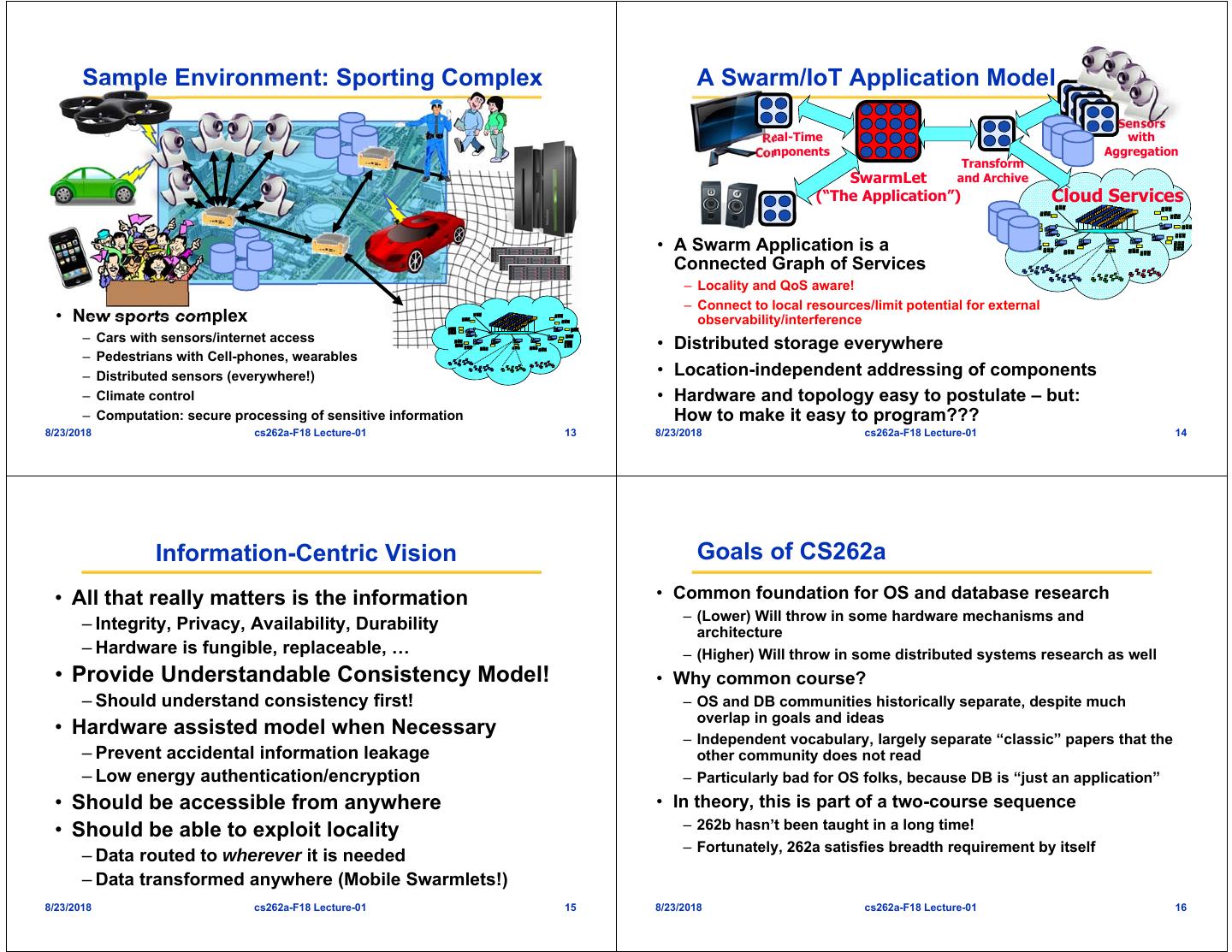

4 . Sample Environment: Sporting Complex A Swarm/IoT Application Model Sensors Real-Time with Components Aggregation Transform SwarmLet and Archive (“The Application”) Cloud Services • A Swarm Application is a Connected Graph of Services – Locality and QoS aware! – Connect to local resources/limit potential for external • New sports complex observability/interference – Cars with sensors/internet access • Distributed storage everywhere – Pedestrians with Cell-phones, wearables – Distributed sensors (everywhere!) • Location-independent addressing of components – Climate control • Hardware and topology easy to postulate – but: – Computation: secure processing of sensitive information How to make it easy to program??? 8/23/2018 cs262a-F18 Lecture-01 13 8/23/2018 cs262a-F18 Lecture-01 14 Information-Centric Vision Goals of CS262a • All that really matters is the information • Common foundation for OS and database research – (Lower) Will throw in some hardware mechanisms and – Integrity, Privacy, Availability, Durability architecture – Hardware is fungible, replaceable, … – (Higher) Will throw in some distributed systems research as well • Provide Understandable Consistency Model! • Why common course? – Should understand consistency first! – OS and DB communities historically separate, despite much overlap in goals and ideas • Hardware assisted model when Necessary – Independent vocabulary, largely separate “classic” papers that the – Prevent accidental information leakage other community does not read – Low energy authentication/encryption – Particularly bad for OS folks, because DB is “just an application” • Should be accessible from anywhere • In theory, this is part of a two-course sequence • Should be able to exploit locality – 262b hasn’t been taught in a long time! – Fortunately, 262a satisfies breadth requirement by itself – Data routed to wherever it is needed – Data transformed anywhere (Mobile Swarmlets!) 8/23/2018 cs262a-F18 Lecture-01 15 8/23/2018 cs262a-F18 Lecture-01 16

5 . What is Research? Basic Format of CS262a classes • Research = Analysis and Synthesis together • Paper-Driven Lecture format with Discussion – Analysis: understanding others’ work – both the good AND the bad – Cover 1-2 papers plus announcements – Synthesis: finding new ways to advance the state of the art – Lots of discussion!!! Guest Lecturers! • Systems research isn’t cut-and-dried – In fact, prefer far more discussion, much less lecturing – Few “provably correct” answers • Will not cover too much basic material – In fact, some outright confusion/bad results – We assume that you have had an introductory OS course • Analysis in CS262a: Literature survey – If not, you will have to work hard to understand the material – Read, analyze, criticize papers – Many online videos of CS162. For instance: – All research projects fail in some way » http://www.infocobuild.com/education/audio-video-courses /computer-science/cs162-fall2010-berkeley.html – Successful projects: got some interesting results anyway • Class preparation: • Synthesis in CS262a: Do a small piece of real research – Reading papers is hard, especially at first – Suggested projects handed out in 3-4 weeks – Every class has 1 or 2 papers that will be the focus of the class – Teams of 2 or 3 – Read BEFORE CLASS – Poster session and “conference paper” at end of the semester – If you need background, read background papers BEFORE CLASS – Usually best papers make it into a real conferences (with extra work) 8/23/2018 cs262a-F18 Lecture-01 17 8/23/2018 cs262a-F18 Lecture-01 18 Topics: All about sharing Basic Format of CS262a classes (con’t) (reliably, securely!) • Homework: brief summary for every paper • Course topics (not necessarily in this order) – ½ page or less/paper – OS structure: File Systems, Virtual Memory …. – 2 most important things in paper – Transactions, recovery, & Fault-tolerance – 1 major flaw – Concurrency, scheduling, synchronization • Summaries must be submitted through online – Communication, Distributed Systems submission form before class in order to get credit – Multiprocessors – Protection and Security – You can skip 3 summaries without penalty – “Revealed Truth” – overall principles! – After that, they eat into your grade • Mix of Historical papers and Recent research • This is a grad class material may be controversial – Will be updating the reading list from previous terms – (HOPEFULLY?) – Will also be reading some classic papers • Why is History important? – All systems exist in a context. Historical papers part of culture! – Many ideas and techniques keep recurring (or never leave) – Need to avoid the “short horizon” effect » Claim you invented something that originally appeared in 1975 8/23/2018 cs262a-F18 Lecture-01 19 8/23/2018 cs262a-F18 Lecture-01 20

6 . Research Project More Course Info • Research-oriented course • Website: – Project provides opportunity to do “research in the small” to help – http://www.cs.berkeley.edu/~kubitron/cs262a make transition from good student to research colleague – Contains lecture notes, readings, announcements – Assumption is that you will advance the state of the art in some way • Grading: – Projects done in groups of 2 or 3 students – 50% Project Paper – 15% Project Demo • Topic? – 25% Midterm (Take home design problems) – Should be topical to CS262 – 10% Project Summaries – Exciting possibilities related to SWARM-LAB, AMP-LAB • Signup: Go to the “Enrollment” link and sign up for the class – We will provide project suggestions in a couple of weeks – Not quite ready – will be by end of Weekend…. • Details: – Need this in order to submit summaries – meet multiple times with faculty to see progress • Outside Access to Papers: – give poster session at end of semester – User: “cs262a” Password: “parallelism” – written report like conference paper • Summary details – Usually, best papers make it into a real conference (with extra work) – Can miss 3 summaries without penalty, no reason needed (or wanted) • Can you share a project with other systems courses? – Summaries: < ½ page per paper – Under most circumstances, the answer is “yes” » At least 1 criticism, 2 important insights from paper » Online submission form – Need to ok with me, however 8/23/2018 cs262a-F18 Lecture-01 21 8/23/2018 cs262a-F18 Lecture-01 22 Dawn of time Coping with Size of Class ENIAC: (1945—1955) • Cannot get interaction with this many people – In past, have had an entrance exam – Instead, we will probably cap the class at 50 people • Graduate Students working on Prelim breadth requirement have precedence • Undergraduates: only if there is room – We will take people only if they did well in CS162 » Assuming A- or Better – Possible Exceptions for students doing research or other » Talk to me • We will probably be able to take everyone that is • “The machine designed by Drs. Eckert and Mauchly eligible… was a monstrosity. When it was finished, the ENIAC filled an entire room, weighed thirty tons, and consumed two hundred kilowatts of power.” • http://ei.cs.vt.edu/~history/ENIAC.Richey.HTML 8/23/2018 cs262a-F18 Lecture-01 23 8/23/2018 cs262a-F18 Lecture-01 24

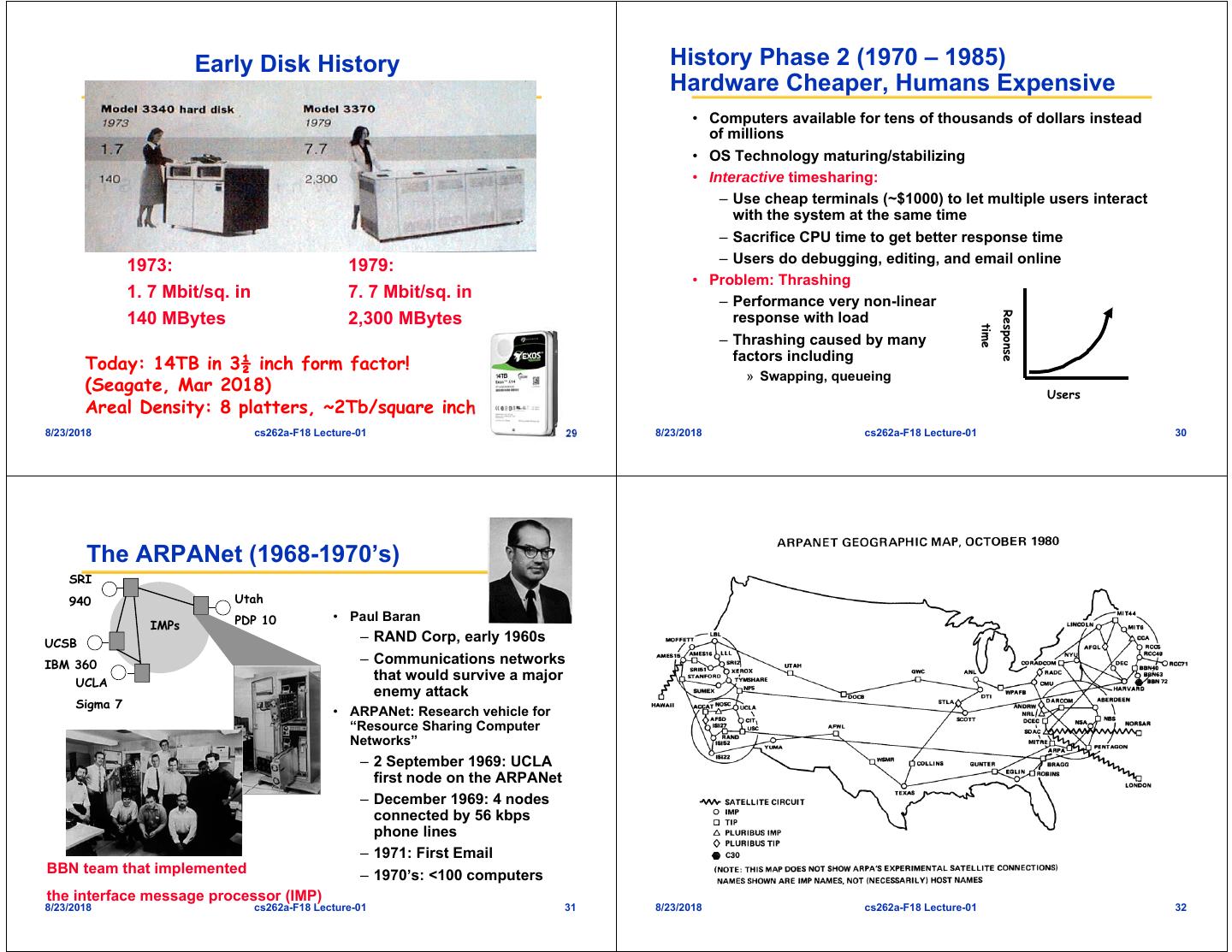

7 . History Phase 1 (1948—1970) Hardware Expensive, Humans Cheap Core Memories (1950s & 60s) • When computers cost millions of $’s, optimize for more efficient use of the hardware! – Lack of interaction between user and computer The first magnetic core memory, • User at console: one user at a time from the IBM 405 Alphabetical Accounting Machine. • Batch monitor: load program, run, print • Optimize to better use hardware – When user thinking at console, computer idleBAD! – Feed computer batches and make users wait • Core Memory stored data as magnetization in iron rings – Autograder for this course is similar – Iron “cores” woven into a 2-dimensional mesh of wires • No protection: what if batch program has bug? – Origin of the term “Dump Core” – Rumor that IBM consulted Life Saver company • See: http://www.columbia.edu/acis/history/core.html 8/23/2018 cs262a-F18 Lecture-01 25 8/23/2018 cs262a-F18 Lecture-01 26 History Phase 1½ (late 60s/early 70s) A Multics System (Circa 1976) • Data channels, Interrupts: overlap I/O and compute – DMA – Direct Memory Access for I/O devices – I/O can be completed asynchronously • Multiprogramming: several programs run simultaneously – Small jobs not delayed by large jobs – More overlap between I/O and CPU – Need memory protection between programs and/or OS • Complexity gets out of hand: – Multics: announced in 1963, ran in 1969 » 1777 people “contributed to Multics” (30-40 core dev) » Turing award lecture from Fernando Corbató (key researcher): “On building systems that will fail” – OS 360: released with 1000 known bugs (APARs) • The 6180 at MIT IPC, skin doors open, circa 1976: » “Anomalous Program Activity Report” – “We usually ran the machine with doors open so the operators could see the AQ register display, which gave you an idea of the machine • OS finally becomes an important science: load, and for convenient access to the EXECUTE button, which the – How to deal with complexity??? operator would push to enter BOS if the machine crashed.” – UNIX based on Multics, but vastly simplified • http://www.multicians.org/multics-stories.html 8/23/2018 cs262a-F18 Lecture-01 27 8/23/2018 cs262a-F18 Lecture-01 28

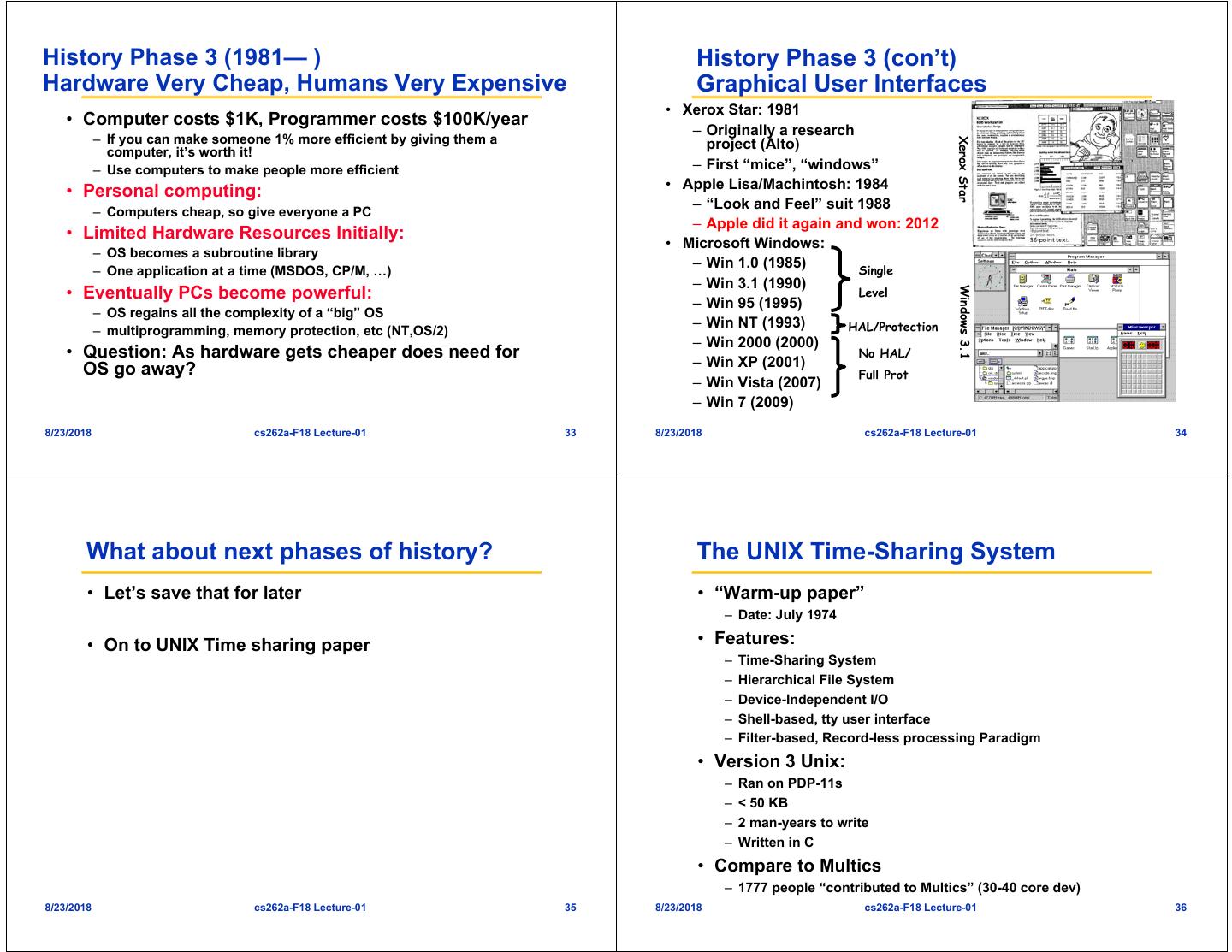

8 . Early Disk History History Phase 2 (1970 – 1985) Hardware Cheaper, Humans Expensive • Computers available for tens of thousands of dollars instead of millions • OS Technology maturing/stabilizing • Interactive timesharing: – Use cheap terminals (~$1000) to let multiple users interact with the system at the same time – Sacrifice CPU time to get better response time – Users do debugging, editing, and email online 1973: 1979: • Problem: Thrashing 1. 7 Mbit/sq. in 7. 7 Mbit/sq. in – Performance very non-linear 140 MBytes 2,300 MBytes response with load Response time – Thrashing caused by many factors including Today: 14TB in 3½ inch form factor! » Swapping, queueing (Seagate, Mar 2018) Users Areal Density: 8 platters, ~2Tb/square inch 8/23/2018 cs262a-F18 Lecture-01 29 8/23/2018 cs262a-F18 Lecture-01 30 The ARPANet (1968-1970’s) SRI 940 Utah PDP 10 • Paul Baran IMPs UCSB – RAND Corp, early 1960s IBM 360 – Communications networks UCLA that would survive a major enemy attack Sigma 7 • ARPANet: Research vehicle for “Resource Sharing Computer Networks” – 2 September 1969: UCLA first node on the ARPANet – December 1969: 4 nodes connected by 56 kbps phone lines – 1971: First Email BBN team that implemented – 1970’s: <100 computers the interface message processor (IMP) 8/23/2018 cs262a-F18 Lecture-01 31 8/23/2018 cs262a-F18 Lecture-01 32

9 .History Phase 3 (1981— ) History Phase 3 (con’t) Hardware Very Cheap, Humans Very Expensive Graphical User Interfaces • Xerox Star: 1981 • Computer costs $1K, Programmer costs $100K/year – Originally a research – If you can make someone 1% more efficient by giving them a project (Alto) Xerox Star computer, it’s worth it! – Use computers to make people more efficient – First “mice”, “windows” • Apple Lisa/Machintosh: 1984 • Personal computing: – Computers cheap, so give everyone a PC – “Look and Feel” suit 1988 – Apple did it again and won: 2012 • Limited Hardware Resources Initially: • Microsoft Windows: – OS becomes a subroutine library – One application at a time (MSDOS, CP/M, …) – Win 1.0 (1985) Single – Win 3.1 (1990) • Eventually PCs become powerful: Level Windows 3.1 – Win 95 (1995) – OS regains all the complexity of a “big” OS – multiprogramming, memory protection, etc (NT,OS/2) – Win NT (1993) HAL/Protection – Win 2000 (2000) • Question: As hardware gets cheaper does need for No HAL/ – Win XP (2001) OS go away? Full Prot – Win Vista (2007) – Win 7 (2009) 8/23/2018 cs262a-F18 Lecture-01 33 8/23/2018 cs262a-F18 Lecture-01 34 What about next phases of history? The UNIX Time-Sharing System • Let’s save that for later • “Warm-up paper” – Date: July 1974 • On to UNIX Time sharing paper • Features: – Time-Sharing System – Hierarchical File System – Device-Independent I/O – Shell-based, tty user interface – Filter-based, Record-less processing Paradigm • Version 3 Unix: – Ran on PDP-11s – < 50 KB – 2 man-years to write – Written in C • Compare to Multics – 1777 people “contributed to Multics” (30-40 core dev) 8/23/2018 cs262a-F18 Lecture-01 35 8/23/2018 cs262a-F18 Lecture-01 36

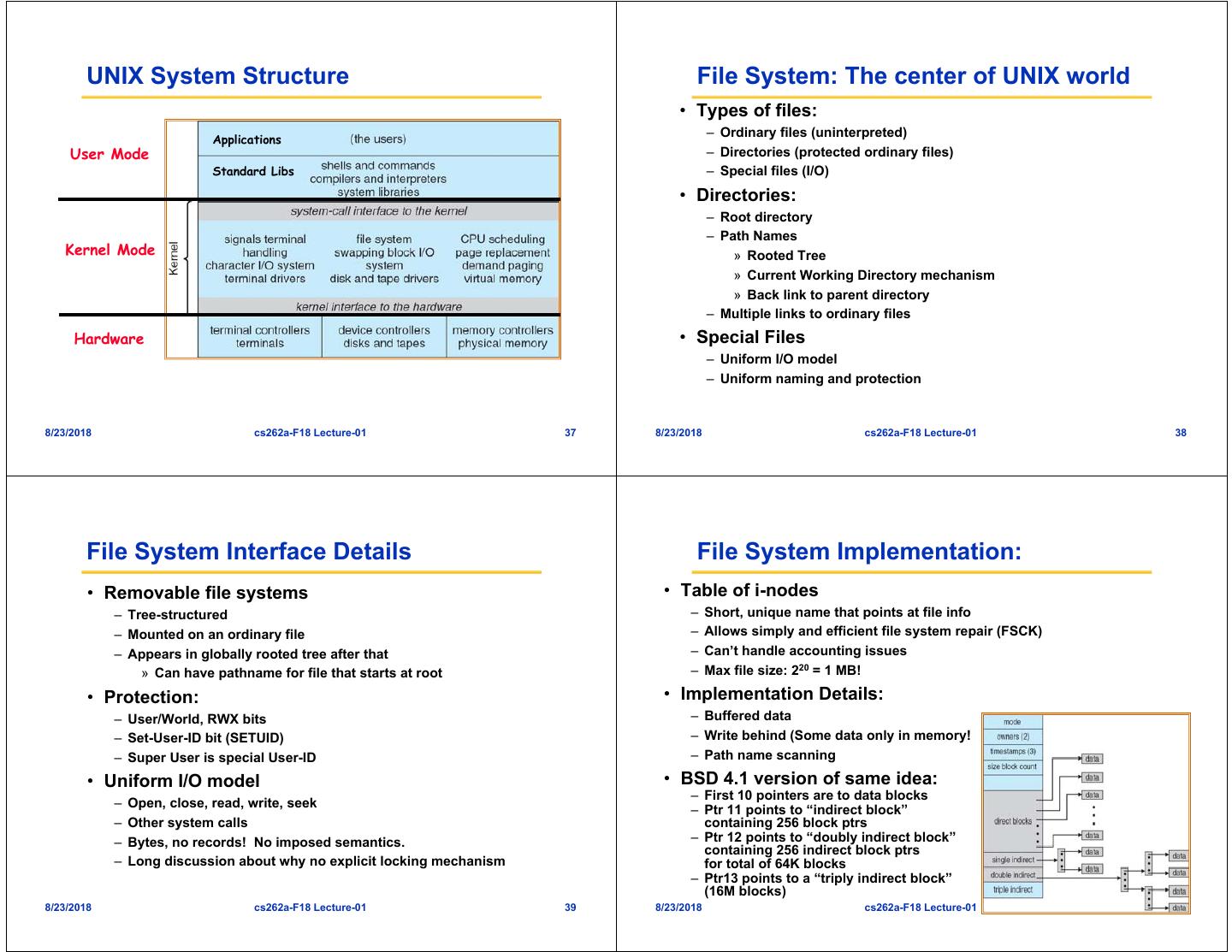

10 . UNIX System Structure File System: The center of UNIX world • Types of files: – Ordinary files (uninterpreted) Applications User Mode – Directories (protected ordinary files) Standard Libs – Special files (I/O) • Directories: – Root directory – Path Names Kernel Mode » Rooted Tree » Current Working Directory mechanism » Back link to parent directory – Multiple links to ordinary files Hardware • Special Files – Uniform I/O model – Uniform naming and protection 8/23/2018 cs262a-F18 Lecture-01 37 8/23/2018 cs262a-F18 Lecture-01 38 File System Interface Details File System Implementation: • Removable file systems • Table of i-nodes – Tree-structured – Short, unique name that points at file info – Mounted on an ordinary file – Allows simply and efficient file system repair (FSCK) – Appears in globally rooted tree after that – Can’t handle accounting issues » Can have pathname for file that starts at root – Max file size: 220 = 1 MB! • Protection: • Implementation Details: – User/World, RWX bits – Buffered data – Set-User-ID bit (SETUID) – Write behind (Some data only in memory! – Super User is special User-ID – Path name scanning • Uniform I/O model • BSD 4.1 version of same idea: – First 10 pointers are to data blocks – Open, close, read, write, seek – Ptr 11 points to “indirect block” – Other system calls containing 256 block ptrs – Bytes, no records! No imposed semantics. – Ptr 12 points to “doubly indirect block” containing 256 indirect block ptrs – Long discussion about why no explicit locking mechanism for total of 64K blocks – Ptr13 points to a “triply indirect block” (16M blocks) 8/23/2018 cs262a-F18 Lecture-01 39 8/23/2018 cs262a-F18 Lecture-01 40

11 . Other Ideas Is this a good paper? • Processes and Images – Process consists of Text, Data, and Stack Segments • What were the authors’ goals? – Process swapping • What about the performance metrics? – Essential mechanism: • Did they convince you that this was a good » Fork() to create new process system? » Wait() to wait for child to finish and get result » Exit(Status) to exit and give status to parent • Were there any red-flags? » Pipes for interprocess communication • What mistakes did they make? » Exec(file, arg1, arg2, … argn) to execute new image • Does the system meet the “Test of Time” • Shell challenge? – Command processor: cmd arg1 ... argn • How would you review this paper today? – Standard I/O and I/O redirection – Multi-tasking from a single shell – Shell is just a program! • Traps – Hardware Interrupts, Software Signals, Trap to system routine 8/23/2018 cs262a-F18 Lecture-01 41 8/23/2018 cs262a-F18 Lecture-01 42