Regularizing Neural Machine Translation by Target-bidirectional Agreement


Zhirui Zhang‡∗, Shuangzhi Wu, Shujie Liu§, Mu Li§, Ming Zhou§, Tong Xu‡†

‡ University of Science and Technology of China, Hefei, China; Harbin Institute of Technology, Harbin, China; § Microsoft Research Asia

‡ zrustc11@gmail.com; ‡ tongxu@ustc.edu.cn; § {v-shuawu,shujliu,muli,mingzhou}@microsoft.com

∗ Contribution during internship at Microsoft. † Corresponding Author.

arXiv:1808.04064v2 [cs.CL] 12 Nov 2018

Copyright © 2019, Association for the Advancement of Artificial Intelligence (www.aaai.org). All rights reserved.

Abstract

Although Neural Machine Translation (NMT) has achieved remarkable progress in the past several years, most NMT systems still suffer from a fundamental shortcoming shared with other sequence generation tasks: errors made early in the generation process are fed back as inputs to the model and can be quickly amplified, harming subsequent generation. To address this issue, we propose a novel model regularization method for NMT training, which aims to improve the agreement between translations generated by left-to-right (L2R) and right-to-left (R2L) NMT decoders. This goal is achieved by introducing two Kullback-Leibler divergence regularization terms into the NMT training objective to reduce the mismatch between the output probabilities of the L2R and R2L models. In addition, we employ a joint training strategy that allows the L2R and R2L models to improve each other in an interactive update process. Experimental results show that our proposed method significantly outperforms state-of-the-art baselines on Chinese-English and English-German translation tasks.

| | Sentence |
|---|---|
| Input | zhīchízhě mēn biǎoshì, zhè liǎngtiáo suìdào jiāng shǐ huánjìng shòuyì bìng bāngzhù jiālìfúníyàzhōu quèbǎo gòngshuǐ gèngjiā ānquán. |
| Ref. | Supporters say the two tunnels will benefit the environment and help California ensure the water supply is safer. |
| L2R | Supporters say these two tunnels will benefit the environment *and to help a secure water supply in California.* |
| R2L | *Supporter say the tunnel* will benefit the environment and help California ensure the water supply is more secure. |

Table 1: Example of unsatisfactory translations generated by a left-to-right (L2R) decoder and a right-to-left (R2L) decoder. Incorrectly translated text is shown in italics.

Introduction

Neural Machine Translation (NMT) (Cho et al. 2014; Sutskever, Vinyals, and Le 2014; Bahdanau, Cho, and Bengio 2014) has developed rapidly in the past several years, from catching up with Statistical Machine Translation (SMT) (Koehn, Och, and Marcu 2003; Chiang 2007) to outperforming it by significant margins on many languages (Sennrich, Haddow, and Birch 2016b; Wu et al. 2016; Tu et al. 2016; Eriguchi, Hashimoto, and Tsuruoka 2016; Wang et al. 2017a; Vaswani et al. 2017). In a conventional NMT model, an encoder first transforms the source sequence into a sequence of intermediate hidden vector representations, from which a decoder generates the target sequence word by word.

Due to this autoregressive structure, current NMT systems usually suffer from the so-called exposure bias problem (Bengio et al. 2015): during inference, the true previous target tokens are unavailable and are replaced by tokens generated by the model itself, so mistakes made early can mislead the subsequent translation, yielding unsatisfactory translations with good prefixes but bad suffixes (see Table 1). The issue becomes more severe as the sequence length increases.

To address this problem, one line of research attempts to reduce the inconsistency between training and inference so as to improve robustness to incorrect previous predictions, for example by designing sequence-level objectives or adopting reinforcement learning (Ranzato et al. 2015; Shen et al. 2016; Wiseman and Rush 2016). Another line leverages a complementary NMT model that generates target words from right to left (R2L) to identify unsatisfactory translations in an n-best list generated by the L2R model (Liu et al. 2016; Wang et al. 2017b).

In that work, the R2L NMT model is only used to re-rank the translation candidates generated by the L2R model, while the candidates in the n-best list still suffer from exposure bias, which limits the room for improvement. Another problem is that the complementary R2L model tends to generate translations with good suffixes but bad prefixes, owing to the same exposure bias problem, as shown in Table 1. Just as the R2L model can be used to augment the L2R model, the L2R model can also be leveraged to improve the R2L model.

Instead of re-ranking an n-best list, we incorporate the agreement between the L2R and R2L models into both of their training objectives, hoping that the agreement information helps learn better models integrating
their advantages to generate translations with good prefixes and good suffixes. To this end, we introduce two Kullback-Leibler (KL) divergences between the probability distributions defined by the L2R and R2L models into the NMT training objective as regularization terms. Thus, we can not only maximize the likelihood of the training data but also minimize the divergence between the L2R and R2L models at the same time, where the latter serves as a measure of the exposure bias of the model currently being trained. With this method, the L2R model can be enhanced using the R2L model as a helper system, and the R2L model can likewise be improved with the help of the L2R model. We integrate the optimization of the R2L and L2R models into a joint training framework, in which they act as helper systems for each other, and both models achieve further improvements through an interactive update process.

Our experiments on Chinese-English and English-German translation tasks demonstrate that our proposed method significantly outperforms state-of-the-art baselines.

Neural Machine Translation

Neural Machine Translation (NMT) is an end-to-end framework that directly models the conditional probability $P(y \mid x)$ of a target translation $y = (y_1, y_2, \ldots, y_T)$ given a source sentence $x = (x_1, x_2, \ldots, x_{T'})$. In practice, NMT systems are usually implemented with an attention-based encoder-decoder architecture. The encoder reads the source sentence $x$ and transforms it into a sequence of intermediate hidden vectors $h = (h_1, h_2, \ldots, h_{T'})$ using a neural network. Given the hidden states $h$, the decoder generates the target translation $y$ with another neural network that jointly learns language and alignment models.

Early NMT architectures employed recurrent neural networks (RNNs) or their variants, namely Gated Recurrent Units (Cho et al. 2014) and Long Short-Term Memory networks (Hochreiter and Schmidhuber 1997). Recently, two additional architectures have been proposed that improve both parallelization and state-of-the-art results: the fully convolutional model (Gehring et al. 2017) and the self-attentional Transformer (Vaswani et al. 2017).

For model training, given a parallel corpus $D = \{(x^{(n)}, y^{(n)})\}_{n=1}^{N}$, the standard training objective in NMT is to maximize the likelihood of the training data:

$$\mathcal{L}(\theta) = \sum_{n=1}^{N} \log P(y^{(n)} \mid x^{(n)}; \theta) \tag{1}$$

where $P(y \mid x; \theta)$ is the neural translation model and $\theta$ is the model parameter.

One big problem of this training scheme is that the history of every target word is correct and has been observed in the training data, whereas during inference all target words are predicted by the model and may contain mistakes, which are fed back as inputs and quickly accumulate as the sequence is generated. This is called the exposure bias problem (Bengio et al. 2015).

Our Approach

To deal with the exposure bias problem, we try to maximize the agreement between translations from the L2R and R2L NMT models, and divide the NMT training objective into two parts: the standard maximum likelihood of the training data, and regularization terms that measure the divergence between the L2R and R2L models under the current model parameters. In this section, we start with basic model notation, followed by a discussion of the model regularization terms and efficient gradient approximation methods. In the last part, we show that the L2R and R2L NMT models can be jointly improved to achieve even better results.

Notations

Given a source sentence $x = (x_1, x_2, \ldots, x_{T'})$ and its target translation $y = (y_1, y_2, \ldots, y_T)$, let $P(y \mid x; \overrightarrow{\theta})$ and $P(y \mid x; \overleftarrow{\theta})$ be the L2R and R2L translation models, where $\overrightarrow{\theta}$ and $\overleftarrow{\theta}$ are the corresponding model parameters. Specifically, the L2R translation model can be decomposed as $P(y \mid x; \overrightarrow{\theta}) = \prod_{t=1}^{T} P(y_t \mid y_{<t}, x; \overrightarrow{\theta})$, which means that at each step $t$ the L2R model uses the previous targets $y_1, \ldots, y_{t-1}$ as history to predict the current target $y_t$. The R2L translation model can similarly be decomposed as $P(y \mid x; \overleftarrow{\theta}) = \prod_{t=T}^{1} P(y_t \mid y_{>t}, x; \overleftarrow{\theta})$ and uses the later targets $y_{t+1}, \ldots, y_T$ as history to predict the current target $y_t$ at each step $t$.

NMT Model Regularization

Since the L2R and R2L models are different chain-rule decompositions of the same translation probability, the output probabilities of the two models should be identical:

$$\log P(y \mid x; \overrightarrow{\theta}) = \sum_{t=1}^{T} \log P(y_t \mid y_{<t}, x; \overrightarrow{\theta}) = \sum_{t=T}^{1} \log P(y_t \mid y_{>t}, x; \overleftarrow{\theta}) = \log P(y \mid x; \overleftarrow{\theta}) \tag{2}$$

However, if the two models are optimized separately by maximum likelihood estimation (MLE), there is no guarantee that this equation holds. To satisfy this constraint, we introduce two Kullback-Leibler (KL) divergence regularization terms into the MLE training objective (Equation 1). For the L2R model, the new training objective is:

$$\begin{aligned} \mathcal{L}(\overrightarrow{\theta}) = {} & \sum_{n=1}^{N} \log P(y^{(n)} \mid x^{(n)}; \overrightarrow{\theta}) \\ & - \lambda \sum_{n=1}^{N} \mathrm{KL}\big(P(y \mid x^{(n)}; \overleftarrow{\theta}) \,\|\, P(y \mid x^{(n)}; \overrightarrow{\theta})\big) \\ & - \lambda \sum_{n=1}^{N} \mathrm{KL}\big(P(y \mid x^{(n)}; \overrightarrow{\theta}) \,\|\, P(y \mid x^{(n)}; \overleftarrow{\theta})\big) \end{aligned} \tag{3}$$

where $\lambda$ is a hyper-parameter weighting the regularization terms. These regularization terms are 0 when Equation 2 holds; otherwise,
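they guide the training process to reduce the disagreement between the L2R and R2L models.

Unfortunately, it is impossible to compute the exact gradients of this objective function, since the KL divergence requires summing over all translation candidates in an exponentially large search space. To alleviate this problem, we follow Shen et al. (2016) and approximate the full search space with a sampled sub-space, and then design an efficient KL divergence approximation algorithm. Specifically, we derive the gradient from the definition of KL divergence, and then design proper sampling methods for the two different KL divergence regularization terms.

For $\mathrm{KL}(P(y \mid x^{(n)}; \overleftarrow{\theta}) \,\|\, P(y \mid x^{(n)}; \overrightarrow{\theta}))$, according to the definition of KL divergence, we have

$$\mathrm{KL}\big(P(y \mid x^{(n)}; \overleftarrow{\theta}) \,\|\, P(y \mid x^{(n)}; \overrightarrow{\theta})\big) = \sum_{y \in Y(x^{(n)})} P(y \mid x^{(n)}; \overleftarrow{\theta}) \log \frac{P(y \mid x^{(n)}; \overleftarrow{\theta})}{P(y \mid x^{(n)}; \overrightarrow{\theta})} \tag{4}$$

where $Y(x^{(n)})$ is the set of all possible candidate translations for the source sentence $x^{(n)}$. Since $P(y \mid x^{(n)}; \overleftarrow{\theta})$ does not depend on the parameters $\overrightarrow{\theta}$, the partial derivative of this KL divergence with respect to $\overrightarrow{\theta}$ can be written as

$$\begin{aligned} \frac{\partial \mathrm{KL}\big(P(y \mid x^{(n)}; \overleftarrow{\theta}) \,\|\, P(y \mid x^{(n)}; \overrightarrow{\theta})\big)}{\partial \overrightarrow{\theta}} &= -\sum_{y \in Y(x^{(n)})} P(y \mid x^{(n)}; \overleftarrow{\theta}) \, \frac{\partial \log P(y \mid x^{(n)}; \overrightarrow{\theta})}{\partial \overrightarrow{\theta}} \\ &= -\mathbb{E}_{y \sim P(y \mid x^{(n)}; \overleftarrow{\theta})} \frac{\partial \log P(y \mid x^{(n)}; \overrightarrow{\theta})}{\partial \overrightarrow{\theta}} \end{aligned} \tag{5}$$

in which $\partial \log P(y \mid x^{(n)}; \overrightarrow{\theta}) / \partial \overrightarrow{\theta}$ are the gradients given by a standard sequence-to-sequence NMT network. The expectation $\mathbb{E}_{y \sim P(y \mid x^{(n)}; \overleftarrow{\theta})}$ can be approximated with samples from the R2L model $P(y \mid x^{(n)}; \overleftarrow{\theta})$. Therefore, minimizing this regularization term is equivalent to maximizing the log-likelihood on pseudo sentence pairs sampled from the R2L model.

For $\mathrm{KL}(P(y \mid x^{(n)}; \overrightarrow{\theta}) \,\|\, P(y \mid x^{(n)}; \overleftarrow{\theta}))$, we similarly have

$$\mathrm{KL}\big(P(y \mid x^{(n)}; \overrightarrow{\theta}) \,\|\, P(y \mid x^{(n)}; \overleftarrow{\theta})\big) = \sum_{y \in Y(x^{(n)})} P(y \mid x^{(n)}; \overrightarrow{\theta}) \log \frac{P(y \mid x^{(n)}; \overrightarrow{\theta})}{P(y \mid x^{(n)}; \overleftarrow{\theta})} \tag{6}$$

The partial derivative of this KL divergence with respect to $\overrightarrow{\theta}$ is calculated as follows:

$$\frac{\partial \mathrm{KL}\big(P(y \mid x^{(n)}; \overrightarrow{\theta}) \,\|\, P(y \mid x^{(n)}; \overleftarrow{\theta})\big)}{\partial \overrightarrow{\theta}} = -\mathbb{E}_{y \sim P(y \mid x^{(n)}; \overrightarrow{\theta})} \left[ \log \frac{P(y \mid x^{(n)}; \overleftarrow{\theta})}{P(y \mid x^{(n)}; \overrightarrow{\theta})} \, \frac{\partial \log P(y \mid x^{(n)}; \overrightarrow{\theta})}{\partial \overrightarrow{\theta}} \right] \tag{7}$$

Here the additional term $\sum_{y} \partial P(y \mid x^{(n)}; \overrightarrow{\theta}) / \partial \overrightarrow{\theta}$ produced by the product rule vanishes, because the probabilities over $Y(x^{(n)})$ always sum to one.

Similarly, we use sampling to estimate the expectation $\mathbb{E}_{y \sim P(y \mid x^{(n)}; \overrightarrow{\theta})}$. Equation 7 differs from Equation 5 in two ways: 1) the pseudo sentence pairs are sampled not from the R2L model $P(y \mid x^{(n)}; \overleftarrow{\theta})$ but from the L2R model itself, $P(y \mid x^{(n)}; \overrightarrow{\theta})$; 2) $\log \frac{P(y \mid x^{(n)}; \overleftarrow{\theta})}{P(y \mid x^{(n)}; \overrightarrow{\theta})}$ is used as a weight to penalize incorrect pseudo pairs.

To sum up, the partial derivative of the objective function $\mathcal{L}(\overrightarrow{\theta})$ with respect to $\overrightarrow{\theta}$ can be approximated as follows:

$$\begin{aligned} \frac{\partial \mathcal{L}(\overrightarrow{\theta})}{\partial \overrightarrow{\theta}} \approx {} & \sum_{n=1}^{N} \frac{\partial \log P(y^{(n)} \mid x^{(n)}; \overrightarrow{\theta})}{\partial \overrightarrow{\theta}} + \lambda \sum_{n=1}^{N} \sum_{y \sim P(y \mid x^{(n)}; \overleftarrow{\theta})} \frac{\partial \log P(y \mid x^{(n)}; \overrightarrow{\theta})}{\partial \overrightarrow{\theta}} \\ & + \lambda \sum_{n=1}^{N} \sum_{y \sim P(y \mid x^{(n)}; \overrightarrow{\theta})} \log \frac{P(y \mid x^{(n)}; \overleftarrow{\theta})}{P(y \mid x^{(n)}; \overrightarrow{\theta})} \, \frac{\partial \log P(y \mid x^{(n)}; \overrightarrow{\theta})}{\partial \overrightarrow{\theta}} \end{aligned} \tag{8}$$

The overall training procedure is shown in Algorithm 1.

Algorithm 1: Training Algorithm for L2R Model

Input: bilingual data $D = \{(x^{(n)}, y^{(n)})\}_{n=1}^{N}$; R2L model $P(y \mid x; \overleftarrow{\theta})$

Output: L2R model $P(y \mid x; \overrightarrow{\theta})$

1. procedure TRAINING PROCESS
2. while not converged do
3. Sample sentence pairs $(x^{(n)}, y^{(n)})$ from the bilingual data $D$;
4. Generate $m$ translation candidates $y_1^1, \ldots, y_m^1$ for $x^{(n)}$ with the translation model $P(y \mid x; \overleftarrow{\theta})$ and build pseudo sentence pairs $\{(x^{(n)}, y_i^1)\}_{i=1}^{m}$;
5. Generate $m$ translation candidates $y_1^2, \ldots, y_m^2$ for $x^{(n)}$ with the translation model $P(y \mid x; \overrightarrow{\theta})$ and build pseudo sentence pairs $\{(x^{(n)}, y_i^2)\}_{i=1}^{m}$ weighted with $\log \frac{P(y_i^2 \mid x^{(n)}; \overleftarrow{\theta})}{P(y_i^2 \mid x^{(n)}; \overrightarrow{\theta})}$;
6. Update $P(y \mid x; \overrightarrow{\theta})$ with Equation 8, given the original data $(x^{(n)}, y^{(n)})$ and the two synthetic data sets $\{(x^{(n)}, y_i^1)\}_{i=1}^{m}$ and $\{(x^{(n)}, y_i^2)\}_{i=1}^{m}$;
7. end while
8. end procedure

Joint Training for Paired NMT Models

In practice, because the R2L model is imperfect, the agreement between the L2R and R2L models may sometimes mislead L2R model training. On the other hand, owing to the symmetry of the L2R and R2L models, the L2R model can also serve as a discriminator that penalizes bad translation candidates generated by the R2L model. Similarly, the objective function of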

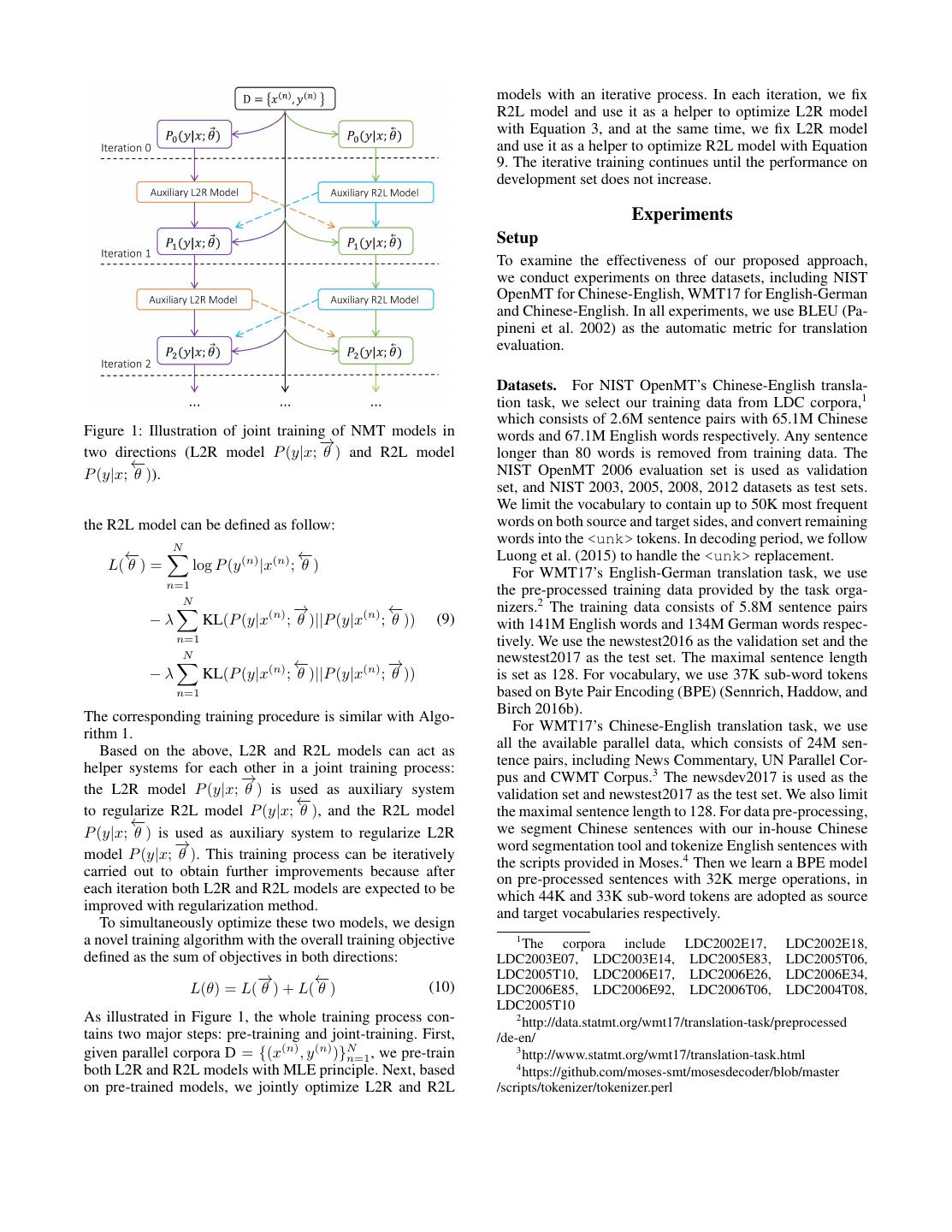

the R2L model can be defined as follows:

$$\begin{aligned} \mathcal{L}(\overleftarrow{\theta}) = {} & \sum_{n=1}^{N} \log P(y^{(n)} \mid x^{(n)}; \overleftarrow{\theta}) \\ & - \lambda \sum_{n=1}^{N} \mathrm{KL}\big(P(y \mid x^{(n)}; \overrightarrow{\theta}) \,\|\, P(y \mid x^{(n)}; \overleftarrow{\theta})\big) \\ & - \lambda \sum_{n=1}^{N} \mathrm{KL}\big(P(y \mid x^{(n)}; \overleftarrow{\theta}) \,\|\, P(y \mid x^{(n)}; \overrightarrow{\theta})\big) \end{aligned} \tag{9}$$

The corresponding training procedure is similar to Algorithm 1.

Based on the above, the L2R and R2L models can act as helper systems for each other in a joint training process: the L2R model $P(y \mid x; \overrightarrow{\theta})$ is used as an auxiliary system to regularize the R2L model $P(y \mid x; \overleftarrow{\theta})$, and the R2L model $P(y \mid x; \overleftarrow{\theta})$ is used as an auxiliary system to regularize the L2R model $P(y \mid x; \overrightarrow{\theta})$. This training process can be carried out iteratively to obtain further improvements, because after each iteration both models are expected to be improved by the regularization.

To optimize the two models simultaneously, we design a training algorithm whose overall objective is the sum of the objectives in both directions:

$$\mathcal{L}(\theta) = \mathcal{L}(\overrightarrow{\theta}) + \mathcal{L}(\overleftarrow{\theta}) \tag{10}$$

As illustrated in Figure 1, the whole training process contains two major steps: pre-training and joint training. First, given the parallel corpus $D = \{(x^{(n)}, y^{(n)})\}_{n=1}^{N}$, we pre-train both the L2R and R2L models with the MLE principle. Next, starting from the pre-trained models, we jointly optimize the L2R and R2L models with an iterative process. In each iteration, we fix the R2L model and use it as a helper to optimize the L2R model with Equation 3, and at the same time, we fix the L2R model and use it as a helper to optimize the R2L model with Equation 9. The iterative training continues until the performance on the development set no longer increases.

Figure 1: Illustration of joint training of NMT models in two directions (L2R model $P(y \mid x; \overrightarrow{\theta})$ and R2L model $P(y \mid x; \overleftarrow{\theta})$).

Experiments

Setup

To examine the effectiveness of our proposed approach, we conduct experiments on three datasets: NIST OpenMT for Chinese-English, and WMT17 for English-German and Chinese-English. In all experiments, we use BLEU (Papineni et al. 2002) as the automatic metric for translation evaluation.

Datasets. For the NIST OpenMT Chinese-English translation task, we select our training data from LDC corpora,¹ which consist of 2.6M sentence pairs with 65.1M Chinese words and 67.1M English words. Any sentence longer than 80 words is removed from the training data. The NIST OpenMT 2006 evaluation set is used as the validation set, and the NIST 2003, 2005, 2008 and 2012 datasets as test sets. We limit the vocabulary to the 50K most frequent words on both the source and target sides, and convert the remaining words into `<unk>` tokens. During decoding, we follow Luong et al. (2015) to handle `<unk>` replacement.

For the WMT17 English-German translation task, we use the pre-processed training data provided by the task organizers.² The training data consists of 5.8M sentence pairs with 141M English words and 134M German words. We use newstest2016 as the validation set and newstest2017 as the test set. The maximal sentence length is set to 128. For the vocabulary, we use 37K sub-word tokens based on Byte Pair Encoding (BPE) (Sennrich, Haddow, and Birch 2016b).

For the WMT17 Chinese-English translation task, we use all the available parallel data, which consists of 24M sentence pairs from News Commentary, the UN Parallel Corpus and the CWMT Corpus.³ newsdev2017 is used as the validation set and newstest2017 as the test set. We also limit the maximal sentence length to 128. For data pre-processing, we segment Chinese sentences with our in-house Chinese word segmentation tool and tokenize English sentences with the scripts provided in Moses.⁴ We then learn a BPE model on the pre-processed sentences with 32K merge operations, resulting in 44K source and 33K target sub-word vocabularies.

¹ The corpora include LDC2002E17, LDC2002E18, LDC2003E07, LDC2003E14, LDC2005E83, LDC2005T06, LDC2005T10, LDC2006E17, LDC2006E26, LDC2006E34, LDC2006E85, LDC2006E92, LDC2006T06, LDC2004T08, LDC2005T10

² http://data.statmt.org/wmt17/translation-task/preprocessed/de-en/

³ http://www.statmt.org/wmt17/translation-task.html

⁴ https://github.com/moses-smt/mosesdecoder/blob/master/scripts/tokenizer/tokenizer.perl

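Equations 4 and 6 sum over every candidate in $Y(x^{(n)})$, which is intractable for real models, so the method replaces the sums with samples. The sketch below assumes a hypothetical three-candidate set small enough to enumerate, with made-up probabilities for the two models, and compares each exact KL term with a Monte Carlo estimate: $\mathrm{KL}(P_{\text{L2R}} \| P_{\text{R2L}})$ is estimated by averaging $\log(P_{\text{L2R}}(y)/P_{\text{R2L}}(y))$ over samples drawn from the L2R model, and the reverse direction by sampling from the R2L model.

```python
import math
import random

# Hypothetical output distributions of the two models over a tiny, enumerable candidate set
# Y(x) for one source sentence x. Real models spread probability over exponentially many outputs.
P_L2R = {"y1": 0.5, "y2": 0.3, "y3": 0.2}
P_R2L = {"y1": 0.4, "y2": 0.4, "y3": 0.2}


def kl_exact(p, q):
    """KL(p || q) = sum_y p(y) log(p(y) / q(y)), the form of Equations 4 and 6."""
    return sum(p[y] * math.log(p[y] / q[y]) for y in p)


def kl_monte_carlo(p, q, num_samples, rng):
    """Unbiased estimate of KL(p || q): the mean of log(p(y) / q(y)) over samples y ~ p."""
    candidates = list(p)
    samples = rng.choices(candidates, weights=[p[y] for y in candidates], k=num_samples)
    return sum(math.log(p[y] / q[y]) for y in samples) / num_samples


rng = random.Random(0)
for p, q, name in [(P_R2L, P_L2R, "KL(R2L || L2R)"), (P_L2R, P_R2L, "KL(L2R || R2L)")]:
    exact = kl_exact(p, q)
    estimate = kl_monte_carlo(p, q, num_samples=100_000, rng=rng)
    print(f"{name}: exact={exact:.4f}, sampled={estimate:.4f}")
```

With many samples the two numbers agree closely. The experiments instead use a single beam-search candidate per sentence ($m = 1$), which the authors report to be sufficient, since larger $m$ did not improve validation BLEU.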
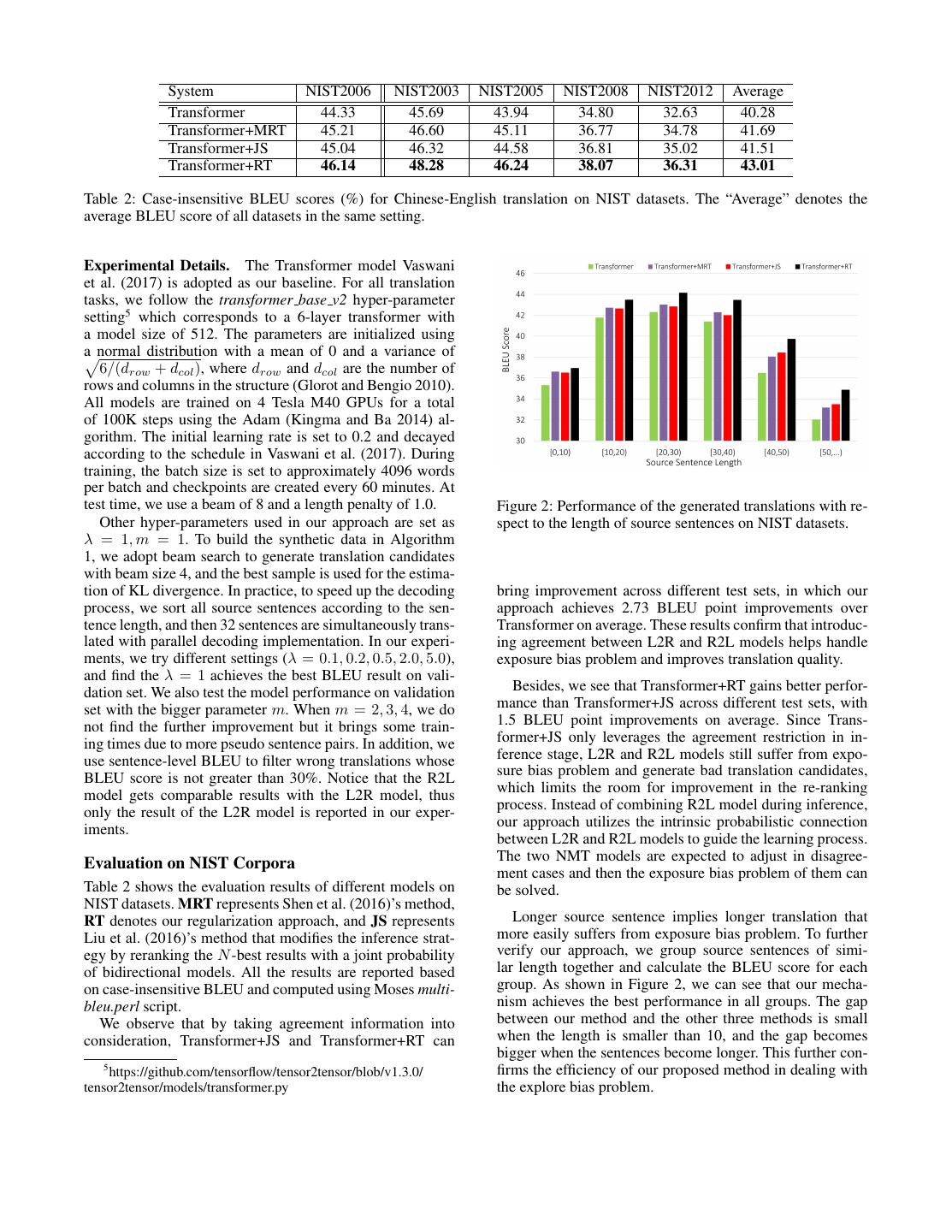
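The gradient approximation in Equation 8 can be checked on a toy problem. In this sketch the L2R model is replaced by a softmax over hypothetical logits `theta` for four candidate translations, and the R2L model by a fixed distribution `q`; for such a model $\partial \log p(y) / \partial \theta = \mathbf{e}_y - p$. The function `eq8_gradient` adds up the three terms of Equation 8 (the reference pair, R2L pseudo pairs with weight 1, and L2R pseudo pairs with weight $\log(q(y)/p(y))$), using exact expectations over the tiny candidate set instead of samples, so that the result can be compared against a finite-difference gradient of the per-sentence objective in Equation 3. All names and numbers are assumptions made for the illustration.

```python
import math


def softmax(logits):
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl(p, q):
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))


def objective(theta, ref, q, lam):
    """Equation 3 for one sentence: log p(ref) - lam * KL(q || p) - lam * KL(p || q)."""
    p = softmax(theta)
    return math.log(p[ref]) - lam * kl(q, p) - lam * kl(p, q)


def grad_log_p(p, y):
    """d log p(y) / d theta for a softmax model: one_hot(y) - p."""
    return [(1.0 if k == y else 0.0) - p[k] for k in range(len(p))]


def eq8_gradient(theta, ref, q, lam):
    """The three terms of Equation 8, with exact expectations instead of samples."""
    p = softmax(theta)
    grad = grad_log_p(p, ref)  # term 1: the reference sentence pair
    for y in range(len(p)):
        w_r2l = lam * q[y]                          # term 2: y ~ R2L, pseudo-pair weight 1
        w_l2r = lam * p[y] * math.log(q[y] / p[y])  # term 3: y ~ L2R, weight log(q/p)
        grad = [a + (w_r2l + w_l2r) * b for a, b in zip(grad, grad_log_p(p, y))]
    return grad


def finite_difference(theta, ref, q, lam, eps=1e-6):
    """Central-difference gradient of the objective, used only as a reference."""
    grad = []
    for k in range(len(theta)):
        plus = list(theta)
        plus[k] += eps
        minus = list(theta)
        minus[k] -= eps
        grad.append((objective(plus, ref, q, lam) - objective(minus, ref, q, lam)) / (2 * eps))
    return grad


theta = [0.2, -0.5, 1.0, 0.3]  # hypothetical L2R logits over four candidates
q = [0.1, 0.2, 0.3, 0.4]       # hypothetical fixed R2L distribution
analytic = eq8_gradient(theta, ref=2, q=q, lam=1.0)
numeric = finite_difference(theta, ref=2, q=q, lam=1.0)
print("max abs difference:", max(abs(a - b) for a, b in zip(analytic, numeric)))
```

Replacing the two expectations with averages over sampled candidates turns `eq8_gradient` into the stochastic estimate that Algorithm 1 passes to the optimizer.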

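The joint training schedule (MLE pre-training, then iterations in which each direction is optimized with the other frozen as its helper, stopping when development performance no longer increases) can be sketched as a small driver loop. `train_with_helper` and `dev_score` are hypothetical callables standing in for a full run of Algorithm 1 (or its R2L counterpart with Equation 9) and for a BLEU evaluation. In this sketch both updates within an iteration use the previous iteration's models as helpers, which is one reading of "at the same time" in the description of the joint training.

```python
from typing import Callable, List, Tuple, TypeVar

Model = TypeVar("Model")


def joint_training(
    l2r: Model,
    r2l: Model,
    train_with_helper: Callable[[Model, Model], Model],
    dev_score: Callable[[Model], float],
    max_iterations: int = 10,
) -> Tuple[Model, Model, List[float]]:
    """Alternately regularize each direction with the other, frozen, as its helper.

    `l2r` and `r2l` are assumed to be MLE pre-trained already. Returns the last models that
    improved the development score and the score history (index 0 = pre-trained baseline).
    """
    history = [dev_score(l2r)]
    for _ in range(max_iterations):
        # Both helpers are previous-iteration snapshots, so the two updates are independent.
        new_l2r = train_with_helper(l2r, r2l)  # Equation 3: R2L model fixed as helper
        new_r2l = train_with_helper(r2l, l2r)  # Equation 9: L2R model fixed as helper
        score = dev_score(new_l2r)
        if score <= history[-1]:
            break  # development performance no longer increases
        l2r, r2l = new_l2r, new_r2l
        history.append(score)
    return l2r, r2l, history


# Usage: replay the Chinese-English development BLEU of Table 4. A "model" here is only its
# iteration index, so "training" increments it and "evaluation" looks the score up.
ZH_EN_DEV_BLEU = [20.87, 21.92, 22.50, 22.47]
best_l2r, best_r2l, scores = joint_training(
    0,
    0,
    train_with_helper=lambda model, helper: model + 1,
    dev_score=lambda model: ZH_EN_DEV_BLEU[model],
)
print(best_l2r, scores)
```

Replaying those scores, the loop stops after iteration 2 because iteration 3 does not improve the development score (22.47 versus 22.50).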
| System | NIST2006 | NIST2003 | NIST2005 | NIST2008 | NIST2012 | Average |
|---|---|---|---|---|---|---|
| Transformer | 44.33 | 45.69 | 43.94 | 34.80 | 32.63 | 40.28 |
| Transformer+MRT | 45.21 | 46.60 | 45.11 | 36.77 | 34.78 | 41.69 |
| Transformer+JS | 45.04 | 46.32 | 44.58 | 36.81 | 35.02 | 41.51 |
| Transformer+RT | 46.14 | 48.28 | 46.24 | 38.07 | 36.31 | 43.01 |

Table 2: Case-insensitive BLEU scores (%) for Chinese-English translation on NIST datasets. "Average" denotes the average BLEU score over all datasets in the same setting.

Experimental Details. The Transformer model (Vaswani et al. 2017) is adopted as our baseline. For all translation tasks, we follow the `transformer_base_v2` hyper-parameter setting,⁵ which corresponds to a 6-layer Transformer with a model size of 512. The parameters are initialized using a normal distribution with a mean of 0 and a variance of $6/(d_{row} + d_{col})$, where $d_{row}$ and $d_{col}$ are the numbers of rows and columns in the structure (Glorot and Bengio 2010). All models are trained on 4 Tesla M40 GPUs for a total of 100K steps using the Adam algorithm (Kingma and Ba 2014). The initial learning rate is set to 0.2 and decayed according to the schedule in Vaswani et al. (2017). During training, each batch contains approximately 4096 words, and checkpoints are created every 60 minutes. At test time, we use a beam size of 8 and a length penalty of 1.0.

Other hyper-parameters of our approach are set to $\lambda = 1$ and $m = 1$. To build the synthetic data in Algorithm 1, we use beam search with beam size 4 to generate translation candidates, and the best candidate is used to estimate the KL divergence. In practice, to speed up decoding, we sort all source sentences by length and translate 32 sentences simultaneously with a parallel decoding implementation. We also tried other settings ($\lambda = 0.1, 0.2, 0.5, 2.0, 5.0$) and found that $\lambda = 1$ achieves the best BLEU score on the validation set. We further tested larger values of $m$ on the validation set: with $m = 2, 3, 4$ we found no further improvement, while training took longer because of the additional pseudo sentence pairs. In addition, we use sentence-level BLEU to filter out wrong translations whose BLEU score is not greater than 30%. Note that the R2L model obtains results comparable to those of the L2R model, so only the results of the L2R model are reported in our experiments.

Evaluation on NIST Corpora

Table 2 shows the evaluation results of the different models on the NIST datasets. MRT denotes the method of Shen et al. (2016), RT denotes our regularization approach, and JS denotes the method of Liu et al. (2016), which modifies the inference strategy by re-ranking the N-best results with the joint probability of the bidirectional models. All results are reported in case-insensitive BLEU computed with the Moses multi-bleu.perl script.

We observe that by taking agreement information into consideration, Transformer+JS and Transformer+RT both bring improvements across the test sets, with our approach improving on the Transformer baseline by 2.73 BLEU points on average. These results confirm that introducing agreement between the L2R and R2L models helps handle the exposure bias problem and improves translation quality.

Moreover, Transformer+RT outperforms Transformer+JS on every test set, by 1.5 BLEU points on average. Since Transformer+JS only enforces agreement at inference time, its L2R and R2L models still suffer from exposure bias and generate bad translation candidates, which limits the room for improvement in the re-ranking process. Instead of combining the R2L model during inference, our approach uses the intrinsic probabilistic connection between the L2R and R2L models to guide the learning process. The two NMT models are expected to adjust to each other where they disagree, thereby alleviating their exposure bias.

A longer source sentence implies a longer translation, which is more susceptible to exposure bias. To further verify our approach, we group source sentences of similar length together and calculate the BLEU score for each group. As shown in Figure 2, our method achieves the best performance in all groups. The gap between our method and the other three methods is small when the length is below 10 and grows as the sentences become longer. This further confirms the effectiveness of our proposed method in dealing with the exposure bias problem.

Figure 2: Performance of the generated translations with respect to the length of source sentences on NIST datasets.

⁵ https://github.com/tensorflow/tensor2tensor/blob/v1.3.0/tensor2tensor/models/transformer.py

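The length analysis behind Figure 2 groups test sentences by source length and scores each group separately. Below is a minimal sketch, assuming buckets of width 10 (the exact bucket boundaries used for Figure 2 are not stated here) and a caller-supplied corpus-level metric such as BLEU. The function names are hypothetical.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Sequence, Tuple

Bucket = Tuple[int, int]


def bucket_by_source_length(
    sources: Sequence[str],
    hypotheses: Sequence[str],
    references: Sequence[str],
    width: int = 10,
) -> Dict[Bucket, List[Tuple[str, str]]]:
    """Group (hypothesis, reference) pairs into [lo, lo + width) source-length buckets."""
    buckets: Dict[Bucket, List[Tuple[str, str]]] = defaultdict(list)
    for src, hyp, ref in zip(sources, hypotheses, references):
        lo = (len(src.split()) // width) * width
        buckets[(lo, lo + width)].append((hyp, ref))
    return dict(sorted(buckets.items()))


def score_buckets(
    buckets: Dict[Bucket, List[Tuple[str, str]]],
    metric: Callable[[List[str], List[str]], float],
) -> Dict[Bucket, float]:
    """Apply a corpus-level metric (hypotheses, references) -> score to each bucket."""
    return {
        span: metric([hyp for hyp, _ in pairs], [ref for _, ref in pairs])
        for span, pairs in buckets.items()
    }


def exact_match(hyps: List[str], refs: List[str]) -> float:
    """Placeholder metric for the usage example; a real analysis would use corpus BLEU."""
    return sum(h == r for h, r in zip(hyps, refs)) / len(hyps)


groups = bucket_by_source_length(
    ["a b", " ".join(["w"] * 12), "x"], ["p", "q", "r"], ["p", "z", "r"]
)
print(score_buckets(groups, exact_match))
```

The exact-match metric only keeps the sketch dependency-free; note that BLEU must be computed per bucket over the whole group rather than averaged over sentence-level scores.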
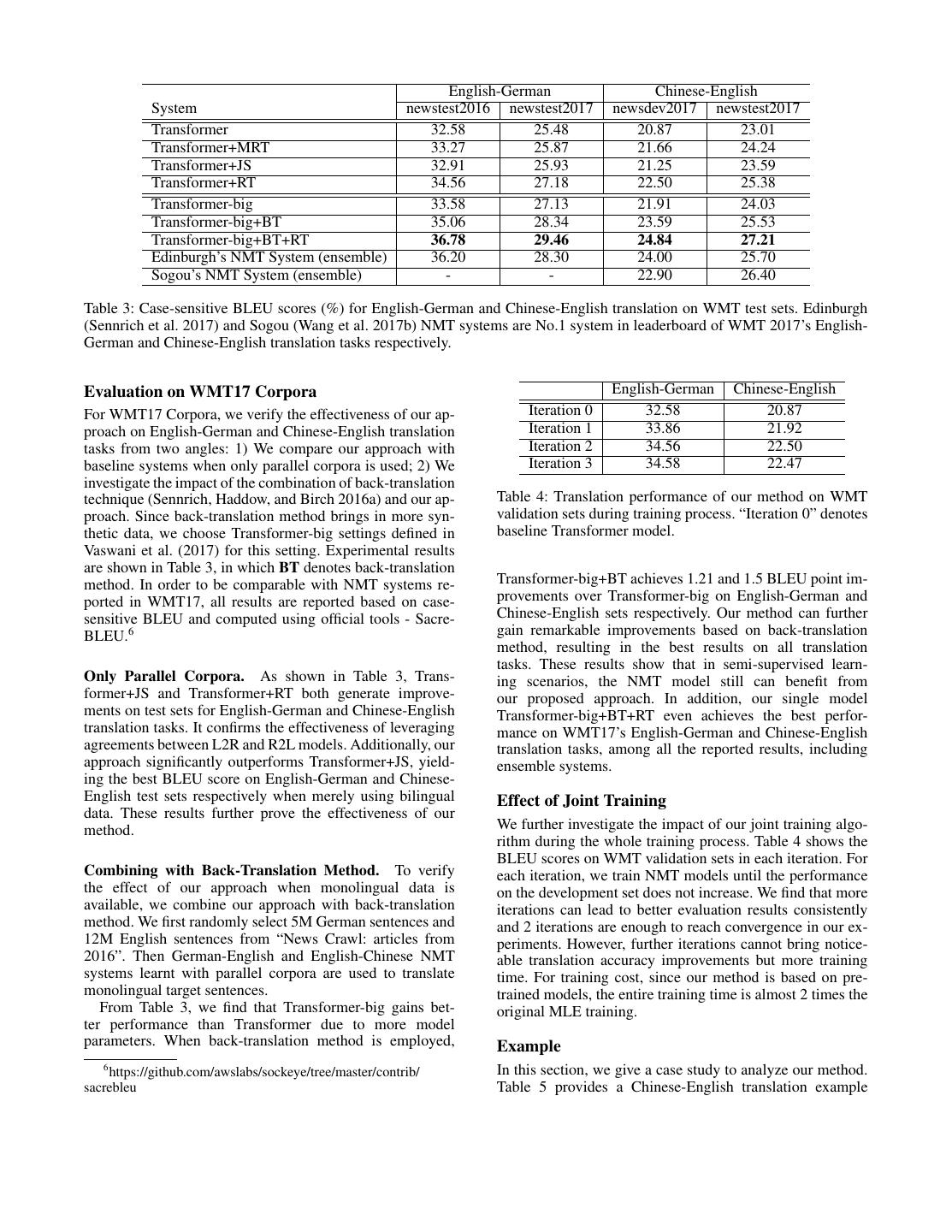

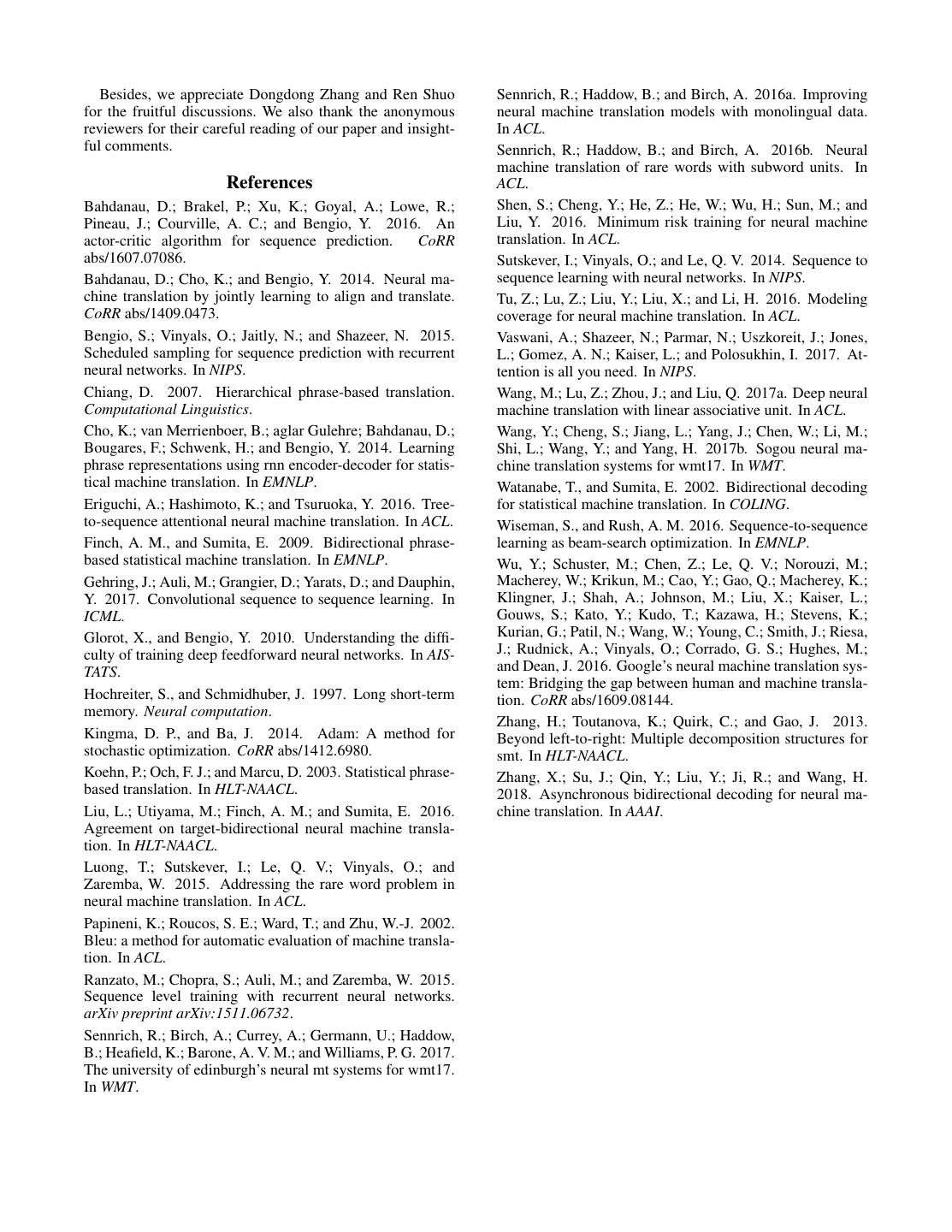
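The decoding speed-up described in the experimental details (sorting source sentences by length and translating 32 at a time) can be sketched as follows, including the step that restores the original sentence order. `translate_batch` is a hypothetical stand-in for a batched beam-search decoder.

```python
from typing import Callable, List, Sequence


def translate_sorted(
    sources: Sequence[str],
    translate_batch: Callable[[List[str]], List[str]],
    batch_size: int = 32,
) -> List[str]:
    """Translate sentences in length-sorted batches and return outputs in input order."""
    # Sorting by token count keeps similar lengths together, which reduces padding per batch.
    order = sorted(range(len(sources)), key=lambda i: len(sources[i].split()))
    outputs: List[str] = [""] * len(sources)
    for start in range(0, len(order), batch_size):
        batch_ids = order[start:start + batch_size]
        translations = translate_batch([sources[i] for i in batch_ids])
        for i, translation in zip(batch_ids, translations):
            outputs[i] = translation  # write each result back to its original position
    return outputs


# Usage with a dummy "decoder" that upper-cases its input and records batch sizes.
batch_sizes: List[int] = []


def fake_translate(batch: List[str]) -> List[str]:
    batch_sizes.append(len(batch))
    return [sentence.upper() for sentence in batch]


inputs = [" ".join(["w"] * (i % 7 + 1)) for i in range(70)]
results = translate_sorted(inputs, fake_translate)
print(batch_sizes, results[:3])
```

Because `sorted` is stable, sentences of equal length keep their relative order, and the index list makes the final reordering a simple scatter.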

| System | En-De newstest2016 | En-De newstest2017 | Zh-En newsdev2017 | Zh-En newstest2017 |
|---|---|---|---|---|
| Transformer | 32.58 | 25.48 | 20.87 | 23.01 |
| Transformer+MRT | 33.27 | 25.87 | 21.66 | 24.24 |
| Transformer+JS | 32.91 | 25.93 | 21.25 | 23.59 |
| Transformer+RT | 34.56 | 27.18 | 22.50 | 25.38 |
| Transformer-big | 33.58 | 27.13 | 21.91 | 24.03 |
| Transformer-big+BT | 35.06 | 28.34 | 23.59 | 25.53 |
| Transformer-big+BT+RT | 36.78 | 29.46 | 24.84 | 27.21 |
| Edinburgh's NMT System (ensemble) | 36.20 | 28.30 | 24.00 | 25.70 |
| Sogou's NMT System (ensemble) | - | - | 22.90 | 26.40 |

Table 3: Case-sensitive BLEU scores (%) for English-German and Chinese-English translation on WMT test sets. The Edinburgh (Sennrich et al. 2017) and Sogou (Wang et al. 2017b) NMT systems were ranked first on the WMT 2017 leaderboards for English-German and Chinese-English translation, respectively.

Evaluation on WMT17 Corpora

For the WMT17 corpora, we verify the effectiveness of our approach on the English-German and Chinese-English translation tasks from two angles: 1) we compare our approach with baseline systems when only parallel corpora are used; 2) we investigate the effect of combining the back-translation technique (Sennrich, Haddow, and Birch 2016a) with our approach. Since back-translation brings in more synthetic data, we use the Transformer-big setting defined in Vaswani et al. (2017) for this experiment. Experimental results are shown in Table 3, in which BT denotes the back-translation method. To be comparable with the NMT systems reported in WMT17, all results are reported in case-sensitive BLEU computed with the official SacreBLEU tool.⁶

Only Parallel Corpora. As shown in Table 3, Transformer+JS and Transformer+RT both improve over the baseline on the English-German and Chinese-English test sets, which confirms the effectiveness of leveraging agreement between the L2R and R2L models. Additionally, our approach significantly outperforms Transformer+JS, yielding the best BLEU scores on both the English-German and Chinese-English test sets when only bilingual data is used. These results further demonstrate the effectiveness of our method.

Combining with Back-Translation Method. To verify the effect of our approach when monolingual data is available, we combine it with the back-translation method. We first randomly select 5M German sentences and 12M English sentences from "News Crawl: articles from 2016". German-English and English-Chinese NMT systems trained on the parallel corpora are then used to translate these monolingual target-side sentences.

Table 3 shows that Transformer-big outperforms Transformer, owing to its larger number of parameters. When the back-translation method is employed, Transformer-big+BT achieves improvements of 1.21 and 1.5 BLEU points over Transformer-big on the English-German and Chinese-English test sets, respectively. Our method gains further remarkable improvements on top of back-translation, resulting in the best results on all translation tasks. These results show that in semi-supervised learning scenarios, the NMT model can still benefit from our proposed approach. In addition, our single model Transformer-big+BT+RT achieves the best performance among all reported results on the WMT17 English-German and Chinese-English translation tasks, including ensemble systems.

Effect of Joint Training

We further investigate the impact of our joint training algorithm over the whole training process. Table 4 shows the BLEU scores on the WMT validation sets after each iteration. In each iteration, we train the NMT models until the performance on the development set no longer increases. Results improve consistently up to iteration 2, which is enough to reach convergence in our experiments: a third iteration brings no noticeable improvement (34.58 versus 34.56 on English-German, 22.47 versus 22.50 on Chinese-English) while costing more training time. Regarding training cost, since our method starts from pre-trained models, the entire training time is roughly twice that of the original MLE training.

| | English-German | Chinese-English |
|---|---|---|
| Iteration 0 | 32.58 | 20.87 |
| Iteration 1 | 33.86 | 21.92 |
| Iteration 2 | 34.56 | 22.50 |
| Iteration 3 | 34.58 | 22.47 |

Table 4: Translation performance of our method on WMT validation sets during the training process. "Iteration 0" denotes the baseline Transformer model.

⁶ https://github.com/awslabs/sockeye/tree/master/contrib/sacrebleu

Example

In this section, we present a case study to analyze our method. Table 5 provides a Chinese-English translation example
from newstest2017. We find that Transformer produces a translation with a good prefix but a bad suffix, while Transformer (R2L) generates a translation with a desirable suffix but an incorrect prefix. In contrast, Transformer+RT produces a high-quality translation in this case, much better than both Transformer and Transformer (R2L). The reason is that leveraging the agreement between the L2R and R2L models during training better penalizes the bad suffixes generated by Transformer and encourages the desirable suffixes produced by Transformer (R2L).

| System | Sentence |
|---|---|
| Source | shòuhàirén dē gēgē Louis Galicia duì měiguó guǎnbō gōngsī wèiyú jiùjīnshān dē diàntái KGO biǎoshì, zhīqián zài bōshìdùn zuò liúshuǐxiàn chúshī dē Frank yú liùgèyuè qián zài jiùjīnshān dē Sons & Daughters cānguān zhǎodào yīfèn liúshuǐxiàn chúshī dē līxiǎng gōngzuò. |
| Reference | The victim's brother, Louis Galicia, told ABC station KGO in San Francisco that Frank, previously a line cook in Boston, had landed his dream job as line chef at San Francisco's Sons & Daughters restaurant six months ago. |
| Transformer | Louis Galicia, the victim's brother, told ABC radio station KGO in San Francisco that Frank, who used to work as an assembly line cook in Boston, *had found an ideal job in the Sons & Daughters restaurant in San Francisco* six months ago. |
| Transformer (R2L) | The victim's brother, Louis Galia, told ABC's station KGO, *in San Francisco, Frank* had found an ideal job as a pipeline chef six months ago at Sons & Daughters restaurant in San Francisco. |
| Transformer+RT | The victim's brother, Louis Galicia, told ABC radio station KGO in San Francisco that Frank, who previously worked as an assembly line cook in Boston, found an ideal job as an assembly line cook six months ago at Sons & Daughters restaurant in San Francisco. |

Table 5: Translation examples of different systems. Incorrectly translated text (wavy underlines in the original) is shown in italics.

Related Work

Target-bidirectional transduction techniques have been explored in statistical machine translation, under the IBM framework (Watanabe and Sumita 2002) and in feature-driven linear models (Finch and Sumita 2009; Zhang et al. 2013). Recently, Liu et al. (2016) and Zhang et al. (2018) carried this idea over from SMT to NMT by modifying the inference strategy and the decoder architecture of NMT, respectively. Liu et al. (2016) propose to generate N-best translation candidates from L2R and R2L NMT models and use the joint probability of the two models to find the best candidate in the combined N-best list. Zhang et al. (2018) design a two-stage decoder architecture for NMT, which first generates translation candidates from right to left and then produces the final translation based on the source sentence and the previously generated R2L translation. Different from their methods, our approach directly exploits target-bidirectional agreement at training time by introducing regularization terms. Since it changes neither the neural network architecture nor the inference strategy, our method keeps the same inference speed as the original model.

To handle the exposure bias problem, many methods have been proposed, including designing new training objectives (Shen et al. 2016; Wiseman and Rush 2016) and adopting reinforcement learning approaches (Ranzato et al. 2015; Bahdanau et al. 2016). Shen et al. (2016) directly minimize the expected loss (maximize the expected BLEU) with Minimum Risk Training (MRT). Wiseman and Rush (2016) adopt a beam-search optimization algorithm to reduce the inconsistency between training and inference. Ranzato et al. (2015) propose a mixed training method that gradually transitions from MLE training to BLEU score optimization using reinforcement learning. Bahdanau et al. (2016) design an actor-critic algorithm for sequence prediction, in which the NMT system is the actor and a critic network predicts the value of output tokens. Instead of designing task-specific objective functions or complex training strategies, our approach only adds regularization terms to the standard training objective, which is simple to implement yet effective.

Conclusion

In this paper, we have presented a simple and efficient regularization approach to neural machine translation that relies on the agreement between L2R and R2L NMT models. In our method, two Kullback-Leibler divergences based on the probability distributions of the L2R and R2L models are added to the standard training objective as regularization terms. An efficient approximation algorithm enables fast training with the regularized objective, and a joint training strategy optimizes the L2R and R2L models together. Empirical evaluations on Chinese-English and English-German translation tasks demonstrate that our approach leads to significant improvements over strong baseline systems.

In future work, we plan to test our method on other sequence-to-sequence tasks, such as summarization and dialogue generation. Besides back-translation, it is also worth integrating our approach with other semi-supervised methods to better leverage unlabeled data.

Acknowledgments

This research was partially supported by grants from the National Key Research and Development Program of China (Grant No. 2018YFB1004300), the National Natural Science Foundation of China (Grant No. 61703386), the Anhui Provincial Natural Science Foundation (Grant No. 1708085QF140), and the Fundamental Research Funds for the Central Universities (Grant No. WK2150110006).

In addition, we thank Dongdong Zhang and Ren Shuo for the fruitful discussions. We also thank the anonymous reviewers for their careful reading of our paper and their insightful comments.

References

Bahdanau, D.; Brakel, P.; Xu, K.; Goyal, A.; Lowe, R.; Pineau, J.; Courville, A. C.; and Bengio, Y. 2016. An actor-critic algorithm for sequence prediction. CoRR abs/1607.07086.

Bahdanau, D.; Cho, K.; and Bengio, Y. 2014. Neural machine translation by jointly learning to align and translate. CoRR abs/1409.0473.

Bengio, S.; Vinyals, O.; Jaitly, N.; and Shazeer, N. 2015. Scheduled sampling for sequence prediction with recurrent neural networks. In NIPS.

Chiang, D. 2007. Hierarchical phrase-based translation. Computational Linguistics.

Cho, K.; van Merrienboer, B.; Gülçehre, Ç.; Bahdanau, D.; Bougares, F.; Schwenk, H.; and Bengio, Y. 2014. Learning phrase representations using RNN encoder-decoder for statistical machine translation. In EMNLP.

Eriguchi, A.; Hashimoto, K.; and Tsuruoka, Y. 2016. Tree-to-sequence attentional neural machine translation. In ACL.

Finch, A. M., and Sumita, E. 2009. Bidirectional phrase-based statistical machine translation. In EMNLP.

Gehring, J.; Auli, M.; Grangier, D.; Yarats, D.; and Dauphin, Y. 2017. Convolutional sequence to sequence learning. In ICML.

Glorot, X., and Bengio, Y. 2010. Understanding the difficulty of training deep feedforward neural networks. In AISTATS.

Hochreiter, S., and Schmidhuber, J. 1997. Long short-term memory. Neural Computation.

Kingma, D. P., and Ba, J. 2014. Adam: A method for stochastic optimization. CoRR abs/1412.6980.

Koehn, P.; Och, F. J.; and Marcu, D. 2003. Statistical phrase-based translation. In HLT-NAACL.

Liu, L.; Utiyama, M.; Finch, A. M.; and Sumita, E. 2016. Agreement on target-bidirectional neural machine translation. In HLT-NAACL.

Luong, T.; Sutskever, I.; Le, Q. V.; Vinyals, O.; and Zaremba, W. 2015. Addressing the rare word problem in neural machine translation. In ACL.

Papineni, K.; Roucos, S. E.; Ward, T.; and Zhu, W.-J. 2002. BLEU: a method for automatic evaluation of machine translation. In ACL.

Ranzato, M.; Chopra, S.; Auli, M.; and Zaremba, W. 2015. Sequence level training with recurrent neural networks. arXiv preprint arXiv:1511.06732.

Sennrich, R.; Birch, A.; Currey, A.; Germann, U.; Haddow, B.; Heafield, K.; Barone, A. V. M.; and Williams, P. G. 2017. The University of Edinburgh's neural MT systems for WMT17. In WMT.

Sennrich, R.; Haddow, B.; and Birch, A. 2016a. Improving neural machine translation models with monolingual data. In ACL.

Sennrich, R.; Haddow, B.; and Birch, A. 2016b. Neural machine translation of rare words with subword units. In ACL.

Shen, S.; Cheng, Y.; He, Z.; He, W.; Wu, H.; Sun, M.; and Liu, Y. 2016. Minimum risk training for neural machine translation. In ACL.

Sutskever, I.; Vinyals, O.; and Le, Q. V. 2014. Sequence to sequence learning with neural networks. In NIPS.

Tu, Z.; Lu, Z.; Liu, Y.; Liu, X.; and Li, H. 2016. Modeling coverage for neural machine translation. In ACL.

Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A. N.; Kaiser, L.; and Polosukhin, I. 2017. Attention is all you need. In NIPS.

Wang, M.; Lu, Z.; Zhou, J.; and Liu, Q. 2017a. Deep neural machine translation with linear associative unit. In ACL.

Wang, Y.; Cheng, S.; Jiang, L.; Yang, J.; Chen, W.; Li, M.; Shi, L.; Wang, Y.; and Yang, H. 2017b. Sogou neural machine translation systems for WMT17. In WMT.

Watanabe, T., and Sumita, E. 2002. Bidirectional decoding for statistical machine translation. In COLING.

Wiseman, S., and Rush, A. M. 2016. Sequence-to-sequence learning as beam-search optimization. In EMNLP.

Wu, Y.; Schuster, M.; Chen, Z.; Le, Q. V.; Norouzi, M.; Macherey, W.; Krikun, M.; Cao, Y.; Gao, Q.; Macherey, K.; Klingner, J.; Shah, A.; Johnson, M.; Liu, X.; Kaiser, L.; Gouws, S.; Kato, Y.; Kudo, T.; Kazawa, H.; Stevens, K.; Kurian, G.; Patil, N.; Wang, W.; Young, C.; Smith, J.; Riesa, J.; Rudnick, A.; Vinyals, O.; Corrado, G. S.; Hughes, M.; and Dean, J. 2016. Google's neural machine translation system: Bridging the gap between human and machine translation. CoRR abs/1609.08144.

Zhang, H.; Toutanova, K.; Quirk, C.; and Gao, J. 2013. Beyond left-to-right: Multiple decomposition structures for SMT. In HLT-NAACL.

Zhang, X.; Su, J.; Qin, Y.; Liu, Y.; Ji, R.; and Wang, H. 2018. Asynchronous bidirectional decoding for neural machine translation. In AAAI.