- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

MonoGRNet: A Geometric Reasoning Network for Monocular 3D Object Localization

展开查看详情

1 . MonoGRNet: A Geometric Reasoning Network for Monocular 3D Object Localization Zengyi Qin†∗ Jinglu Wang‡ Yan Lu‡ † Tsinghua University ‡ Microsoft Research {v-zeqin, jinglwa, yanlu}@microsoft.com arXiv:1811.10247v1 [cs.CV] 26 Nov 2018 Abstract estimation does not focus on object localization by design. It aims to minimize the mean error for all pixels to get an aver- Localizing objects in the real 3D space, which plays a cru- age optimal estimation over the whole image, while objects cial role in scene understanding, is particularly challenging given only a single RGB image due to the geometric infor- covering small regions are often neglected (Fu et al. 2018), mation loss during imagery projection. We propose Mono- which drastically downgrades the 3D detection accuracy. GRNet for the amodal 3D object localization from a monoc- We propose MonoGRNet, a unified network for amodal ular RGB image via geometric reasoning in both the ob- 3D object localization from a monocular image. Our key served 2D projection and the unobserved depth dimension. idea is to decouple the 3D localization problem into several MonoGRNet is a single, unified network composed of four progressive sub-tasks that are solvable using only monocu- task-specific subnetworks, responsible for 2D object detec- lar RGB data. The network starts from perceiving semantics tion, instance depth estimation (IDE), 3D localization and in 2D image planes and then performs geometric reasoning local corner regression. Unlike the pixel-level depth estima- in the 3D space. tion that needs per-pixel annotations, we propose a novel IDE A challenging problem we overcome is to accurately esti- method that directly predicts the depth of the targeting 3D bounding box’s center using sparse supervision. The 3D lo- mate the depth of an instance’s 3D center without computing calization is further achieved by estimating the position in pixel-level depth maps. We propose a novel instance-level the horizontal and vertical dimensions. Finally, MonoGRNet depth estimation (IDE) module, which explores large recep- is jointly learned by optimizing the locations and poses of tive fields of deep feature maps to capture coarse instance the 3D bounding boxes in the global context. We demon- depths and then combines early features of a higher resolu- strate that MonoGRNet achieves state-of-the-art performance tion to refine the IDE. on challenging datasets. To simultaneously retrieve the horizontal and vertical po- sition, we first predict the 2D projection of the 3D center. In combination with the IDE, we then extrude the projected Introduction center into real 3D space to obtain the eventual 3D object Typical object localization or detection from a RGB image location. All the components are integrated into the end-to- estimates 2D boxes that bound visible parts of the objects end network, MonoGRNet, featuring its three 3D reasoning belonging to the specific classes on the image plane. How- branches illustrated in Fig. 1, and is finally optimized by a ever, this kind of result cannot provide geometric percep- joint geometric loss that minimizes the 3D bounding box tion in the real 3D world for scene understanding, which is discrepancy in the global context. crucial for applications, such as robotics, mixed reality, and We argue that RGB information alone can provide almost autonomous driving. accurate 3D locations and poses of objects. Experiments on In this paper, we address the problem of localizing amodal the challenging KITTI dataset demonstrate that our network 3D bounding boxes (ABBox-3D) of objects at their full ex- outperforms the state-of-art monocular method in 3D object tents from a monocular RGB image. Unlike 2D analysis on localization with the least inference time. In summary, our the image plane, 3D localization with the extension to an contributions are three-fold: unobserved dimension, i.e., depth, not solely enlarges the searching space but also introduces inherent ambiguity of • A novel instance-level depth estimation approach that di- 2D-to-3D mapping, increasing the task’s difficulty signifi- rectly predicts central depths of ABBox-3D in the absence cantly. of dense depth data, regardless of object occlusion and Most state-of-the-art monocular methods (Xu and Chen truncation. 2018; Zhuo et al. 2018) estimate pixel-level depths and then • A progressive 3D localization scheme that explores rich regress 3D bounding boxes. Nevertheless, pixel-level depth feature representations in 2D images and extends geomet- ∗ ric reasoning into 3D context. The work was done when Zengyi Qin was an intern at MSR. Copyright c 2019, Association for the Advancement of Artificial • A unified network that coordinates localization of objects Intelligence (www.aaai.org). All rights reserved. in 2D, 2.5D and 3D spaces via a joint optimization, which

2 . backbone Legend Input Image (RGB) 2D BBox Coordinate Conv FC Output Add transform Local corner Early features RoiAlign offsets Delta 3D RoiAlign Location 3D Bounding Box in Global Context Delta Instance RoiAlign Depth Depth Refined 3D Encoder Location Refined Instance Depth Coarse Camera Coordinate frame Deep features Depth Coarse 3D Instance Depth Encoder Location Projected 3D Center Figure 1: MonoGRNet for 3D object localization from a monocular RGB image. MonoGRNet consists of four subnetworks for 2D detection(brown), instance depth estimation(green), 3D location estimation(blue) and local corner regression(yellow). Guided by the detected 2D bounding box, the network first estimates depth and 2D projection of the 3D box’s center to obtain the global 3D location, and then regresses corner coordinates in local context. The final 3D bounding box is optimized in an end-to-end manner in the global context based on the estimated 3D location and local corners. (Best viewed in color.) performs efficient inference (taking ∼0.06s/image). the point cloud of interest object. MV3D (Chen et al. 2017) generates 3D object proposals from bird’s eye view maps Related Work given LIDAR point clouds, and then fuses features in RGB images, LIDAR front views and bird’s eye views to predict Our work is related to 3D object detection and monocular 3D boxes. 3DOP(Chen et al. 2015) exploits stereo informa- depth estimation. We mainly focus on the works of studying tion and contextual models specific to autonomous driving. 3D detection and depth estimation, while 2D detection is the The most related approaches to ours are using a monoc- basis for coherence. ular RGB image. Information loss in the depth dimension significantly increases the task’s difficulty. Performances 2D Object Detection. 2D object detection deep net- of state-of-the-art such methods still have large margins works are extensively studied. Region proposal based meth- to RGB-D and multi-view methods. Mono3D (Chen et al. ods (Girshick 2015; Ren et al. 2017) generate impres- 2016) exploits segmentation and context priors to generate sive results but perform slowly due to complex multi-stage 3D proposals. Extra networks for semantic and instance seg- pipelines. Another group of methods (Redmon et al. 2016; mentation are required, which cost more time for both train- Redmon and Farhadi 2017; Liu et al. 2016; Fu et al. 2017) ing and inference. Xu et al.(Xu and Chen 2018) leverage a focusing on fast training and inferencing apply a single pretrained disparity estimation model (Mahjourian, Wicke, stage detection. Multi-net (Teichmann et al. 2016) intro- and Angelova 2018) to guide the geometry reasoning. Other duces an encoder-decoder architecture for real-time seman- methods (Chabot et al. 2017; Kehl et al. 2017) utilize 3D tic reasoning. Its detection decoder combines the fast regres- CAD models to generate synthetic data for training, which sion in Yolo (Redmon et al. 2016) with the size-adjusting provides 3D object templates, object poses and their corre- RoiAlign of Mask-RCNN (He et al. 2017), achieving a sat- sponding 2D projections for better supervision. All previous isfied speed-accuracy ratio. All these methods predict 2D methods exploit additional data and networks to facilitate the bounding boxes of objects while none 3D geometric features 3D perception, while our method only requires annotated 3D are considered. bounding boxes and no extra network needs to train. This makes our network much light weighted and efficient for training and inference. 3D Object Detection. Existing methods range from single-view RGB (Chen et al. 2016; Xu and Chen 2018; Chabot et al. 2017; Kehl et al. 2017), multi-view RGB (Chen Monocular Depth Estimation. Recently, although many et al. 2017; Chen et al. 2015; Wang et al. ), to RGB-D (Qi pixel-level depth estimation networks (Fu et al. 2018; Eigen et al. 2017; Song and Xiao 2016; Liu et al. 2015; Zhang et and Fergus 2015) have been proposed, they are not sufficient al. 2014). While geometric information of the depth dimen- for 3D object localization. When regressing the pixel-level sion is provided, the 3D detection task is much easier. Given depth, the loss function takes into account every pixel in the RGB-D data, FPointNet (Qi et al. 2017) extrudes 2D region depth map and treats them without significant difference. proposals to a 3D viewing frustum and then segments out In a common practice, the loss values from each pixel are

3 . 3D BBox 𝐵3𝑑 Local corners 𝒪 = {𝐎𝑘 ሽ 2D BBox 𝐵2𝑑 𝐎𝟕 𝐎𝟔 𝜎𝑠𝑐𝑜𝑝𝑒 𝑧 𝑧 𝑦 𝐂 𝑥 𝐎4 𝐜 𝑥 𝐛2 𝐎3 𝐠 𝐛 𝐎𝟖 𝐎5 (b) An image with 2D bounding box Camera 𝑥 𝑧 𝐛3 𝐛1 Bird’s eye view 𝐎1 𝐎2 𝜎𝑠𝑐𝑜𝑝𝑒 𝜎𝑠𝑐𝑜𝑝𝑒 Image plane 𝑦 𝑥 Camera 𝑧 (a) 2D Projection (b) Camera coordinate frame (c) Local coordinate frame (a) Scopes of instances on grid cells (c) Instance depth map Figure 2: Notation for 3D bounding box localization. Figure 3: Instance depth. (a) Each grid cell g is assigned to a nearest object within a distance σscope to the 2D bbox cen- ter bi . Objects closer to the camera are assigned to handle summed up as a whole to be optimized. Nevertheless, there occlusion. Here Zc1 < Zc2 . (b) An image with detected 2D is a likelihood that the pixels lying in an object are much bounding boxes. (c) Predicted instance depth for each cell. fewer than those lying in the background, and thus the low average error does not indicate the depth values are accurate in pixels contained in an object. In addition, dense depths are Monocular Geometric Reasoning Network often estimated from disparity maps that may produce large MonoGRNet is designed to estimate four components, B2d , errors at far regions, which may downgrade the 3D localiza- Zc , c, O, with four subnetworks respectively. Following a tion performance drastically. CNN backbone, they are integrated into a unified frame- Different from the abovementioned pixel-level depth es- work, as shown in Fig. 1. timation methods, we are the first to propose an instance- level depth estimation network which jointly takes semantic 2D Detection. The 2D detection module is the basic mod- and geometric features into account with sparse supervision ule that stabilizes the feature learning and also reveals re- data. gions of interest to the subsequent geometric reasoning mod- ules. Approach We leverage the design of the detection component in (Te- ichmann et al. 2016), which combines fast regression (Red- We propose an end-to-end network, MonoGRNet, that di- mon et al. 2016) and size-adaptive RoiAlign (He et al. 2017), rectly predicts ABBox-3D from a single RGB image. Mono- to achieve a convincing speed-accuracy ratio. An input im- GRNet is composed of a 2D detection module and three ge- age I of size W ×H is divided into an Sx ×Sy grid G, where ometric reasoning subnetworks for IDE, 3D localization and a cell is indicated by g. The output feature map of the back- ABBox-3D regression. In this section, we first formally de- bone is also reduced to Sx × Sy . Each pixel in the feature fine the 3D localization problem and then detail MonoGR- map corresponding to an image grid cell yields a prediction. Net for four subnetworks. The 2D prediction of each cell g contains the confidence that an object of interest is present and the 2D bounding box of Problem Definition g this object, namely, (P robj g , B2d ), indicated by a superscript Given a monocular RGB image, the objective is to lo- g. The 2D bounding box B2d = (δxb , δyb , w, h) is repre- calize objects of specific classes in the 3D space. A tar- sented by the offsets (δxb , δyb ) of its center b to the cell g get object is represented by a class label and an ABBox- and the 2D box size (w, h). 3D, which bounds the complete object regardless of occlu- The predicted 2D bounding boxes are taken as inputs of sion or truncation. An ABBox-3D is defined by a 3D cen- the RoiAlign (He et al. 2017) layers to extract early features ter C = (Xc , Yc , Zc ) in global context and eight corners with high resolutions to refine the predictions and reduce the O = {Ok }, k = 1, ..., 8, related to local context. The 3D performance gap between this fast detector with proposal- location C is calibrated in the camera coordinate frame and based detectors. the local corners O are in a local coordinate frame, shown in Fig. 2 (b) and (c) respectively. Instance-Level Depth Estimation. The IDE subnetwork We propose to separate the 3D localization task into estimates the depth of the ABBox-3D center Zc . Given the four progressive sub-tasks that are resolvable using only a divided grid G in the feature map from backbone, each grid monocular image. First, the 2D box B2d with a center b and cell g predicts the 3D central depth of the nearest instance size (w, h) bounding the projection of the ABBox-3D is de- within a distance threshold σscope , considering depth infor- tected. Then, the 3D center C is localized by predicting its mation, i.e., closer instances are assigned for cells, as illus- depth Zc and 2D projection c. Notations are illustrated in trated in Fig. 3 (a). An example of predicted instance depth Fig. 2. Finally, local corners O with respect to the 3D cen- for each cell is shown in Fig. 3 (c). ter are regressed based on local features. In summary, we The IDE module consists of a coarse regression of the formulate the ABBox-3D localization as estimating the fol- region depth regardless of the scales and specific 2D location lowing parameters of each interest object: of the object, and a refinement stage that depends on the 2D bounding box to extract encoded depth features at exactly B3d = (B2d , Zc , c, O) (1) the region occupied by the target, as illustrated in Fig. 4.

4 . 2D BBox Image with 𝑆𝑥 × 𝑆𝑦 Grid Feature map apply RoiAlign to the cell’s corresponding region in early Conv RoiAlign fc 4𝑆𝑥 × 4𝑆𝑦 Depth Encoder Depth Delta (𝛿𝑍𝑐 ) feature maps with high resolution and regress the local cor- Refined Depth ners of the 3D bounding box. Conv (𝑍𝑐 ) Grid cell In addition, regressing poses of 3D boxes in camera co- 𝑆𝑥 × 𝑆𝑦 Depth Encoder fc Coarse Depth (𝑍𝑐𝑐 ) ordinate frame is ambiguous (Xu et al. 2018; Gao et al. 2018; Guizilini and Ramos 2018). Even the poses of two 3D boxes are different, their projections could be similar Figure 4: Instance depth estimation subnet. This shows the when observed from certain viewpoints. We are inspired by inference of the red cell. Deep3DBox (Mousavian et al. 2017), which regresses boxes in a local system according to the observation angle. Grid cells in deep feature maps from the CNN back- We construct a local coordinate frame, where the origin is bone have larger receptive fields and lower resolution in at the object’s center, the z-axis points straight from the cam- comparison with that of shallow layers. Because they are era to the center in bird’s eye view, the x-axis is on the right less sensitive to the exact location of the targeted object, of z-axis, and the y-axis does not change, illustrated in Fig. 2 it is reasonable to regress a coarse depth offset Zcc from (c). The transformation from local coordinates to camera co- deep layers. Given the detected 2D bounding box, we are ordinates are involved with a rotation R and a translation C, able to perform RoiAlign to the region containing an in- and we obtain Ocam k = ROk + C, where Ocam k are the stance in early feature maps with a higher resolution and global corner coordinates. This is a single mapping between a smaller receptive field. The aligned features are passed the perceived and actual rotation, in order to avoid confusing through fully connected layers to regress a delta δZc in order the regression model. to refine the instance-level depth value. The final prediction is Zc = Zcc + δZc . Loss Functions Here we formally formulate four task losses for the above 3D Location Estimation. This subnetwork estimates the subnetworks and a joint loss for the unified network. All the location of 3D center C = (Xc , Yc , Zc ) of an object of in- predictions are modified with a superscript g for the corre- terest in each grid g. As illustrated in Fig. 2, the 2D center b sponding grid cell g. Groundtruth observations are modified and the 2D projection c of C are not located at the same po- sition due to perspective transformation. We first regress the by the (·) symbol. projection c and then backproject it to the 3D space based on the estimated depth Zc . 2D Detection Loss. The object confidence is trained using In a calibrated image, we elaborate the projection map- softmax (s·) cross entropy (CE) loss and the 2D bounding ping from a 3D point X = (X, Y, Z) to a 2D point x = boxes B2d = (xb , yb , w, h) are regressed using a masked L1 (u, v), ψ3D→2D : X → x, by distance loss. Note that w and h are normalized by W and H. The 2D detection loss are defined as: u = fx ∗ X/Z + px , v = fy ∗ Y /Z + py (2) g g where fx and fy are the focal length along X and Y axes, px Lconf = CEg ∈G (s · (P robj ), P robj ) and py are coordinates of the principle point. Given known Lbbox = 1obj g g g · d(B2d , B2d ) Z, the backprojection mapping ψ2D→3D : (x, Z) → X g takes the form: L2d = Lconf + ωLbbox (4) X = (u − px ) ∗ Z/fx , Y = (v − py ) ∗ Z/fy (3) where P robj and P robj refer to the confidence of predictions Since we have obtained the instance depth Zc from the and groundtruths respectively, d(·) refers to L1 distance and IDE module, the 3D location C can be analytically com- 1obj g masks off the grids that are not assigned any object. The puted using the 2D projected center c according to Equa- tion 3. Consequently, the 3D estimation problem is con- mask function 1obj g for each grid g is set to 1 if the distance verted to a 2D keypoint localization task that only relies on between g b is less then σscope , and 0 otherwise. The two a monocular image. components are balanced by ω. Similar to the IDE module, we utilize deep features to regress the offsets δc = (δxc , δyc ) of a projected center c Instance Depth Loss. This loss is a L1 loss for instance to the grid cell g and calculate a coarse 3D location Cs = depths: ψ2D→3D (δc + g, Zc ). In addition, the early features with high resolution are extracted to regress the delta δC between Lzc = 1obj g g g · d(Zcc , Zc ) g the predicted Cs and the groundtruth C to refine the final 3D location, C = Cs + δC . g Lzδ = 1obj g g g · d(Zcc + δZc , Zc ) g 3D Box Corner Regression Ldepth = αLzc + Lzδ (5) This subnetwork regresses eight corners, i.e., O = {Ok }, k = 1, ..., 8, in a local coordinate frame. Since each where α > 1 that encourages the network to first learn the grid cell predicts a 2D bounding box in the 2D detector, we coarse depths and then the deltas.

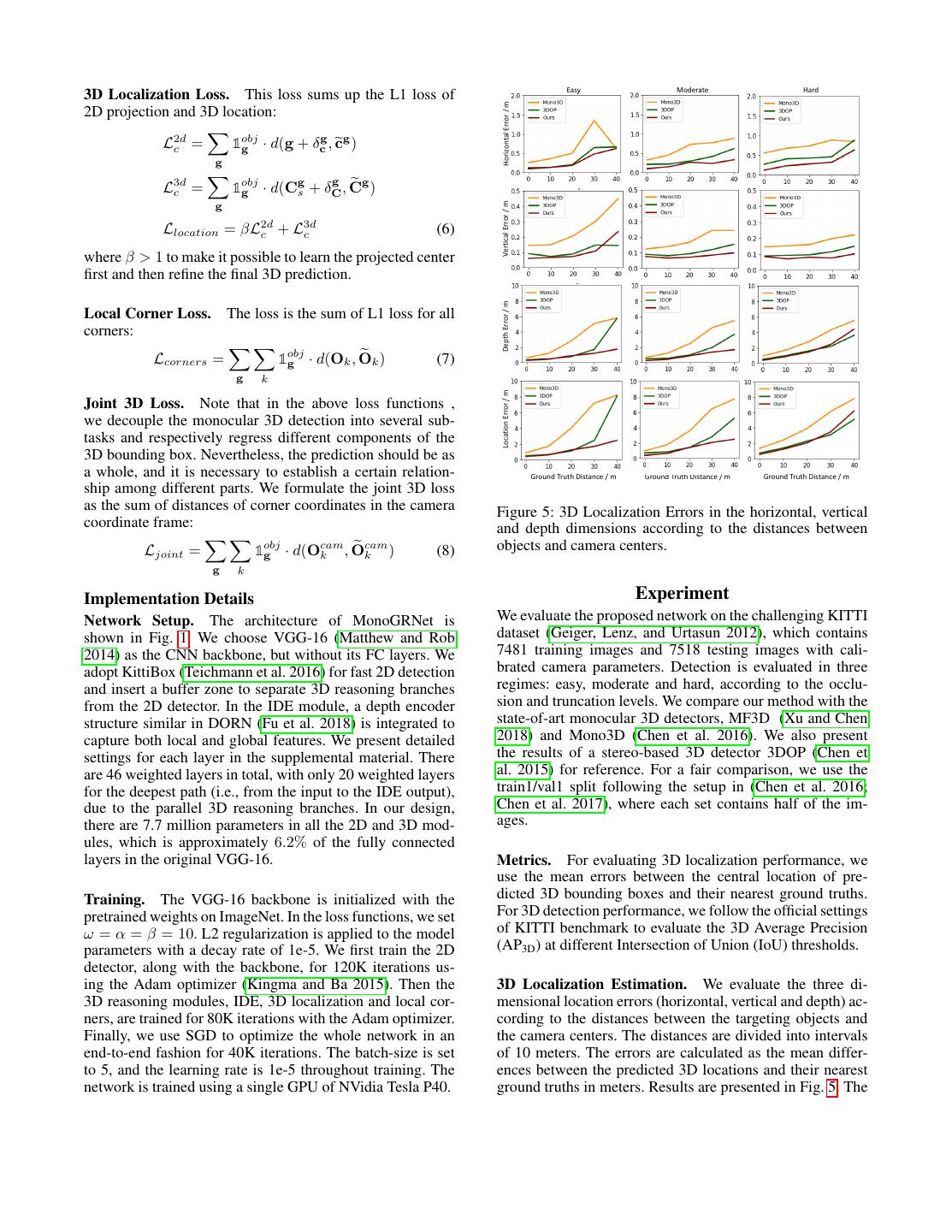

5 . Easy Moderate Hard 3D Localization Loss. This loss sums up the L1 loss of Horizontal Error / m 2D projection and 3D location: L2d c = 1obj g g g · d(g + δc , c ) g g L3d c = 1obj g g g · d(Cs + δC , C ) Vertical Error / m g Llocation = βL2d 3d c + Lc (6) where β > 1 to make it possible to learn the projected center first and then refine the final 3D prediction. Depth Error / m Local Corner Loss. The loss is the sum of L1 loss for all corners: Lcorners = 1obj g · d(Ok , Ok ) (7) g k Location Error / m Joint 3D Loss. Note that in the above loss functions , we decouple the monocular 3D detection into several sub- tasks and respectively regress different components of the 3D bounding box. Nevertheless, the prediction should be as a whole, and it is necessary to establish a certain relation- Ground Truth Distance / m Ground Truth Distance / m Ground Truth Distance / m ship among different parts. We formulate the joint 3D loss as the sum of distances of corner coordinates in the camera Figure 5: 3D Localization Errors in the horizontal, vertical coordinate frame: and depth dimensions according to the distances between Ljoint = 1obj cam , Ocam objects and camera centers. g · d(Ok k ) (8) g k Implementation Details Experiment Network Setup. The architecture of MonoGRNet is We evaluate the proposed network on the challenging KITTI shown in Fig. 1. We choose VGG-16 (Matthew and Rob dataset (Geiger, Lenz, and Urtasun 2012), which contains 2014) as the CNN backbone, but without its FC layers. We 7481 training images and 7518 testing images with cali- adopt KittiBox (Teichmann et al. 2016) for fast 2D detection brated camera parameters. Detection is evaluated in three and insert a buffer zone to separate 3D reasoning branches regimes: easy, moderate and hard, according to the occlu- from the 2D detector. In the IDE module, a depth encoder sion and truncation levels. We compare our method with the structure similar in DORN (Fu et al. 2018) is integrated to state-of-art monocular 3D detectors, MF3D (Xu and Chen capture both local and global features. We present detailed 2018) and Mono3D (Chen et al. 2016). We also present settings for each layer in the supplemental material. There the results of a stereo-based 3D detector 3DOP (Chen et are 46 weighted layers in total, with only 20 weighted layers al. 2015) for reference. For a fair comparison, we use the for the deepest path (i.e., from the input to the IDE output), train1/val1 split following the setup in (Chen et al. 2016; due to the parallel 3D reasoning branches. In our design, Chen et al. 2017), where each set contains half of the im- there are 7.7 million parameters in all the 2D and 3D mod- ages. ules, which is approximately 6.2% of the fully connected layers in the original VGG-16. Metrics. For evaluating 3D localization performance, we use the mean errors between the central location of pre- Training. The VGG-16 backbone is initialized with the dicted 3D bounding boxes and their nearest ground truths. pretrained weights on ImageNet. In the loss functions, we set For 3D detection performance, we follow the official settings ω = α = β = 10. L2 regularization is applied to the model of KITTI benchmark to evaluate the 3D Average Precision parameters with a decay rate of 1e-5. We first train the 2D (AP3D ) at different Intersection of Union (IoU) thresholds. detector, along with the backbone, for 120K iterations us- ing the Adam optimizer (Kingma and Ba 2015). Then the 3D Localization Estimation. We evaluate the three di- 3D reasoning modules, IDE, 3D localization and local cor- mensional location errors (horizontal, vertical and depth) ac- ners, are trained for 80K iterations with the Adam optimizer. cording to the distances between the targeting objects and Finally, we use SGD to optimize the whole network in an the camera centers. The distances are divided into intervals end-to-end fashion for 40K iterations. The batch-size is set of 10 meters. The errors are calculated as the mean differ- to 5, and the learning rate is 1e-5 throughout training. The ences between the predicted 3D locations and their nearest network is trained using a single GPU of NVidia Tesla P40. ground truths in meters. Results are presented in Fig. 5. The

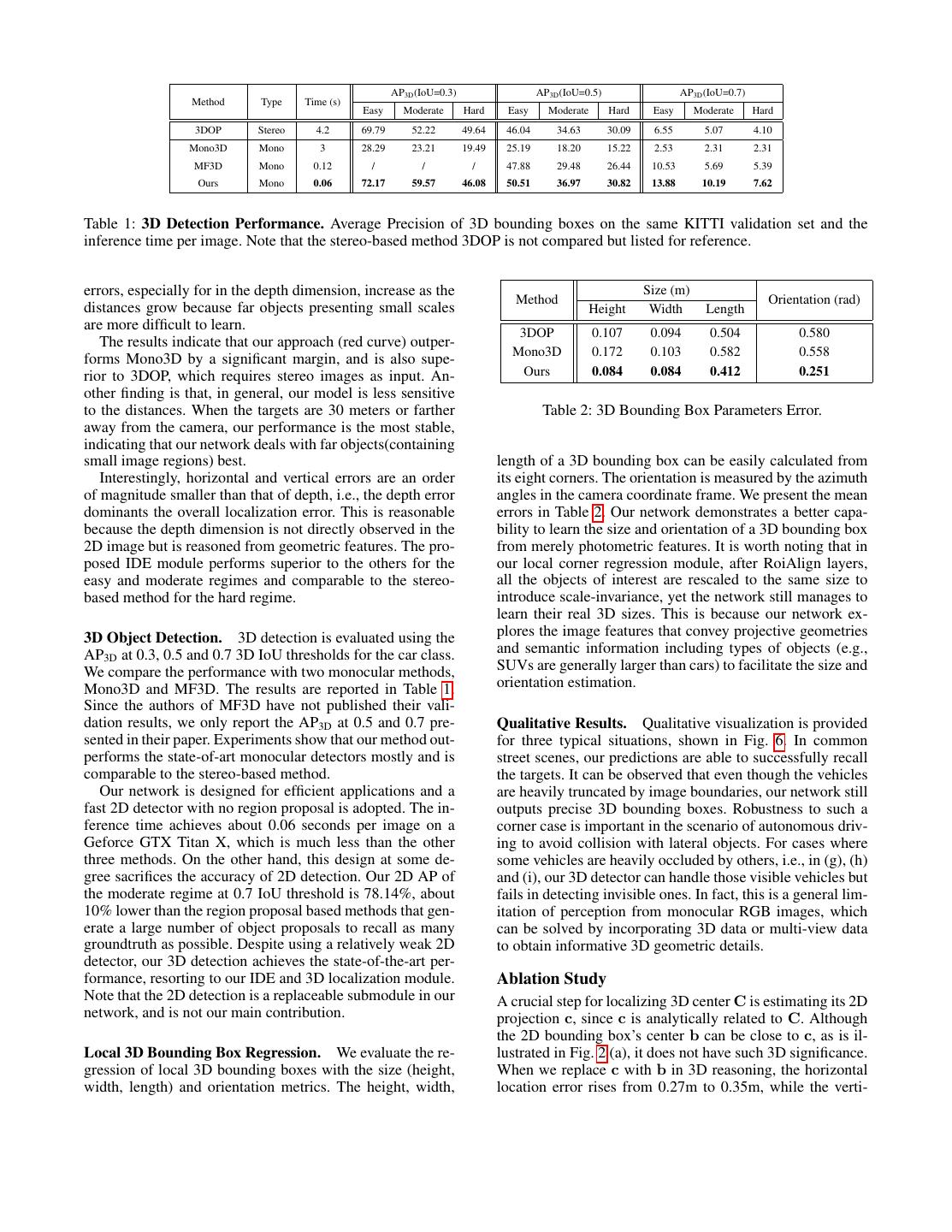

6 . AP3D (IoU=0.3) AP3D (IoU=0.5) AP3D (IoU=0.7) Method Type Time (s) Easy Moderate Hard Easy Moderate Hard Easy Moderate Hard 3DOP Stereo 4.2 69.79 52.22 49.64 46.04 34.63 30.09 6.55 5.07 4.10 Mono3D Mono 3 28.29 23.21 19.49 25.19 18.20 15.22 2.53 2.31 2.31 MF3D Mono 0.12 / / / 47.88 29.48 26.44 10.53 5.69 5.39 Ours Mono 0.06 72.17 59.57 46.08 50.51 36.97 30.82 13.88 10.19 7.62 Table 1: 3D Detection Performance. Average Precision of 3D bounding boxes on the same KITTI validation set and the inference time per image. Note that the stereo-based method 3DOP is not compared but listed for reference. errors, especially for in the depth dimension, increase as the Size (m) Method Orientation (rad) distances grow because far objects presenting small scales Height Width Length are more difficult to learn. 3DOP 0.107 0.094 0.504 0.580 The results indicate that our approach (red curve) outper- Mono3D 0.172 0.103 0.582 0.558 forms Mono3D by a significant margin, and is also supe- rior to 3DOP, which requires stereo images as input. An- Ours 0.084 0.084 0.412 0.251 other finding is that, in general, our model is less sensitive to the distances. When the targets are 30 meters or farther Table 2: 3D Bounding Box Parameters Error. away from the camera, our performance is the most stable, indicating that our network deals with far objects(containing small image regions) best. length of a 3D bounding box can be easily calculated from Interestingly, horizontal and vertical errors are an order its eight corners. The orientation is measured by the azimuth of magnitude smaller than that of depth, i.e., the depth error angles in the camera coordinate frame. We present the mean dominants the overall localization error. This is reasonable errors in Table 2. Our network demonstrates a better capa- because the depth dimension is not directly observed in the bility to learn the size and orientation of a 3D bounding box 2D image but is reasoned from geometric features. The pro- from merely photometric features. It is worth noting that in posed IDE module performs superior to the others for the our local corner regression module, after RoiAlign layers, easy and moderate regimes and comparable to the stereo- all the objects of interest are rescaled to the same size to based method for the hard regime. introduce scale-invariance, yet the network still manages to learn their real 3D sizes. This is because our network ex- 3D Object Detection. 3D detection is evaluated using the plores the image features that convey projective geometries AP3D at 0.3, 0.5 and 0.7 3D IoU thresholds for the car class. and semantic information including types of objects (e.g., We compare the performance with two monocular methods, SUVs are generally larger than cars) to facilitate the size and Mono3D and MF3D. The results are reported in Table 1. orientation estimation. Since the authors of MF3D have not published their vali- dation results, we only report the AP3D at 0.5 and 0.7 pre- Qualitative Results. Qualitative visualization is provided sented in their paper. Experiments show that our method out- for three typical situations, shown in Fig. 6. In common performs the state-of-art monocular detectors mostly and is street scenes, our predictions are able to successfully recall comparable to the stereo-based method. the targets. It can be observed that even though the vehicles Our network is designed for efficient applications and a are heavily truncated by image boundaries, our network still fast 2D detector with no region proposal is adopted. The in- outputs precise 3D bounding boxes. Robustness to such a ference time achieves about 0.06 seconds per image on a corner case is important in the scenario of autonomous driv- Geforce GTX Titan X, which is much less than the other ing to avoid collision with lateral objects. For cases where three methods. On the other hand, this design at some de- some vehicles are heavily occluded by others, i.e., in (g), (h) gree sacrifices the accuracy of 2D detection. Our 2D AP of and (i), our 3D detector can handle those visible vehicles but the moderate regime at 0.7 IoU threshold is 78.14%, about fails in detecting invisible ones. In fact, this is a general lim- 10% lower than the region proposal based methods that gen- itation of perception from monocular RGB images, which erate a large number of object proposals to recall as many can be solved by incorporating 3D data or multi-view data groundtruth as possible. Despite using a relatively weak 2D to obtain informative 3D geometric details. detector, our 3D detection achieves the state-of-the-art per- formance, resorting to our IDE and 3D localization module. Ablation Study Note that the 2D detection is a replaceable submodule in our A crucial step for localizing 3D center C is estimating its 2D network, and is not our main contribution. projection c, since c is analytically related to C. Although the 2D bounding box’s center b can be close to c, as is il- Local 3D Bounding Box Regression. We evaluate the re- lustrated in Fig. 2 (a), it does not have such 3D significance. gression of local 3D bounding boxes with the size (height, When we replace c with b in 3D reasoning, the horizontal width, length) and orientation metrics. The height, width, location error rises from 0.27m to 0.35m, while the verti-

7 . (a) (b) (c) (d) (e) (f) (g) (h) (i) Figure 6: Qualitative Results. Predicted 3D bounding boxes are drawn in orange, while ground truths are in blue. Lidar point clouds are plotted for reference but not used in our method. Camera centers are at the bottom-left corner. (a), (b) and (c) are common cases when predictions recall the ground truths, while (d), (e) and (f) demonstrate the capability of our model handling truncated objects outside the image. (g), (h) and (i) show the failed detections when some cars are heavily occluded. cal error increases from 0.09m to 0.69m. Moreover, when Conclusion an object is truncated by the image boundaries, its projec- We have presented the MonoGRNet for 3D object local- tion c can be outside the image, while b is always inside. ization from a monocular image, which achieves superior In this case, using b for 3D localization can result in a se- performance on 3D detection, localization and pose estima- vere discrepancy. Therefore, our subnetwork for locating the tion among the state-of-the-art monocular methods. A novel projected 3D center is indispensable. IDE module is proposed to predict precise instance-level depth, avoiding extra computation for pixel-level depth es- In order to examine the effect of coordinate transforma- timation and center localization, regardless of far distances tion before local corner regression, we directly regress the between objects and camera. Meanwhile, we distinguish the corners offset in camera coordinates without rotating the 2D bounding box center and the projection of 3D center for a axes. It shows that the average orientation error increases better geometric reasoning in the 3D localization. The object from 0.251 to 0.442 radians, while the height, width and pose is estimated by regressing corner coordinates in a local length errors of the 3D bounding box almost remain the coordinate frame that alleviates ambiguities of 3D rotations same. This phenomenon corresponds to our analysis that in perspective transformations. The final unified network in- switching to object coordinates can reduce the rotation am- tegrates all components and performs inference efficiently. biguity caused by projection, and thus enables more accurate 3D bounding box estimation.

8 . References 3d reconstructed surfaces. In IEEE International Conference [Chabot et al. 2017] Chabot, F.; Chaouch, M.; Rabarisoa, J.; on Computer Vision. Teuli`ere, C.; and Chateau, T. 2017. Deep manta: A coarse- [Liu et al. 2016] Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, to-fine many-task network for joint 2d and 3d vehicle analy- C.; Reed, S.; Fu, C.-Y.; and Berg, A. C. 2016. Ssd: Single sis from monocular image. In Computer Vision and Pattern shot multibox detector. In European conference on computer Recognit.(CVPR), 2040–2049. vision (ECCV), 21–37. Springer. [Chen et al. 2015] Chen, X.; Kundu, K.; Zhu, Y.; Berne- [Mahjourian, Wicke, and Angelova 2018] Mahjourian, R.; shawi, A. G.; Ma, H.; Fidler, S.; and Urtasun, R. 2015. 3d Wicke, M.; and Angelova, A. 2018. Unsupervised learning object proposals for accurate object class detection. In Ad- of depth and ego-motion from monocular video using vances in Neural Information Processing Systems, 424–432. 3d geometric constraints. In Proceedings of the IEEE [Chen et al. 2016] Chen, X.; Kundu, K.; Zhang, Z.; Ma, H.; Conference on Computer Vision and Pattern Recognition, Fidler, S.; and Urtasun, R. 2016. Monocular 3d object de- 5667–5675. tection for autonomous driving. In Conference on Computer [Matthew and Rob 2014] Matthew, D. Z., and Rob, F. 2014. Vision and Pattern Recognition (CVPR), 2147–2156. Visualizing and understanding convolutional networks. In [Chen et al. 2017] Chen, X.; Ma, H.; Wan, J.; Li, B.; and Xia, European Conference on Computer Vision, 818–833. T. 2017. Multi-view 3d object detection network for au- [Mousavian et al. 2017] Mousavian, A.; Anguelov, D.; tonomous driving. In IEEE CVPR, volume 1, 3. Flynn, J.; and Koˇseck´a, J. 2017. 3d bounding box estima- [Eigen and Fergus 2015] Eigen, D., and Fergus, R. 2015. tion using deep learning and geometry. In Computer Vision Predicting depth, surface normals and semantic labels with and Pattern Recognition (CVPR), 5632–5640. IEEE. a common multi-scale convolutional architecture. In Pro- [Qi et al. 2017] Qi, C. R.; Liu, W.; Wu, C.; Su, H.; and ceedings of the IEEE International Conference on Computer Guibas, L. J. 2017. Frustum pointnets for 3d object de- Vision, 2650–2658. tection from rgb-d data. arXiv preprint arXiv:1711.08488. [Fu et al. 2017] Fu, C.-Y.; Liu, W.; Ranga, A.; Tyagi, A.; and [Redmon and Farhadi 2017] Redmon, J., and Farhadi, A. Berg, A. C. 2017. Dssd: Deconvolutional single shot detec- 2017. Yolo9000: Better, faster, stronger. In Computer Vi- tor. arXiv preprint arXiv:1701.06659. sion and Pattern Recognition (CVPR), 6517–6525. IEEE. [Fu et al. 2018] Fu, H.; Gong, M.; Wang, C.; Batmanghelich, K.; and Tao, D. 2018. Deep ordinal regression network [Redmon et al. 2016] Redmon, J.; Divvala, S.; Girshick, R.; for monocular depth estimation. In Computer Vision and and Farhadi, A. 2016. You only look once: Unified, real- Pattern Recognition (CVPR). time object detection. In Proceedings of the IEEE confer- ence on computer vision and pattern recognition, 779–788. [Gao et al. 2018] Gao, Z.; Wang, Y.; He, X.; and Zhang, H. 2018. Group-pair convolutional neural networks for multi- [Ren et al. 2017] Ren, S.; He, K.; Girshick, R.; and Sun, J. view based 3d object retrieval. In The Thirty-Second AAAI 2017. Faster r-cnn: towards real-time object detection with Conference on Artificial Intelligence (AAAI-18). region proposal networks. IEEE Transactions on Pattern Analysis & Machine Intelligence. [Geiger, Lenz, and Urtasun 2012] Geiger, A.; Lenz, P.; and Urtasun, R. 2012. Are we ready for autonomous driving? [Song and Xiao 2016] Song, S., and Xiao, J. 2016. Deep the kitti vision benchmark suite. In Computer Vision and sliding shapes for amodal 3d object detection in rgb-d im- Pattern Recognition (CVPR), 3354–3361. IEEE. ages. In The IEEE Conference on Computer Vision and Pat- [Girshick 2015] Girshick, R. 2015. Fast r-cnn. In Proceed- tern Recognition (CVPR). ings of the IEEE international conference on computer vi- [Teichmann et al. 2016] Teichmann, M.; Weber, M.; Zoell- sion, 1440–1448. ner, M.; Cipolla, R.; and Urtasun, R. 2016. Multinet: Real- [Guizilini and Ramos 2018] Guizilini, V., and Ramos, F. time joint semantic reasoning for autonomous driving. arXiv 2018. Iterative continuous convolution for 3d template preprint arXiv:1612.07695. matching and global localization. In The Thirty-Second [Wang et al. ] Wang, J.; Fang, T.; Su, Q.; Zhu, S.; Liu, J.; Cai, AAAI Conference on Artificial Intelligence (AAAI-18). S.; Tai, C.-L.; and Quan, L. Image-based building regular- [He et al. 2017] He, K.; Gkioxari, G.; Dollr, P.; and Girshick, ization using structural linear features. IEEE Transactions R. 2017. Mask r-cnn. arXiv preprint arXiv:1703.06870. on Visualization Computer Graphics. [Kehl et al. 2017] Kehl, W.; Manhardt, F.; Tombari, F.; Ilic, [Xu and Chen 2018] Xu, B., and Chen, Z. 2018. Multi-level S.; and Navab, N. 2017. Ssd-6d: Making rgb-based 3d de- fusion based 3d object detection from monocular images. In tection and 6d pose estimation great again. In Proceedings Computer Vision and Pattern Recognition (CVPR), 2345– of the International Conference on Computer Vision (ICCV 2353. 2017), Venice, Italy, 22–29. [Xu et al. 2018] Xu, C.; Leng, B.; Zhang, C.; and Zhou, X. [Kingma and Ba 2015] Kingma, D. P., and Ba, J. 2015. 2018. Emphasizing 3d properties in recurrent multi-view ag- Adam: A method for stochastic optimization. In Interna- gregation for 3d shape retrieval. In The Thirty-Second AAAI tional Conference for Learning Representations. Conference on Artificial Intelligence (AAAI-18). [Liu et al. 2015] Liu, J.; Wang, J.; Fang, T.; Tai, C.-L.; and [Zhang et al. 2014] Zhang, H.; Wang, J.; Fang, T.; and Quan, Quan, L. 2015. Higher-order crf structural segmentation of L. 2014. Joint segmentation of images and scanned point

9 . cloud in large-scale street scenes with low-annotation cost. IEEE Transactions on Image Processing 23(11):4763–4772. [Zhuo et al. 2018] Zhuo, W.; Salzmann, M.; He, X.; and Liu, M. 2018. 3d box proposals from a single monocular image of an indoor scene. In The Thirty-Second AAAI Conference on Artificial Intelligence (AAAI-18).