- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

Image Super-Resolution Using DeepConvolutional Networ

展开查看详情

1 . 1 Image Super-Resolution Using Deep Convolutional Networks Chao Dong, Chen Change Loy, Member, IEEE, Kaiming He, Member, IEEE, and Xiaoou Tang, Fellow, IEEE Abstract—We propose a deep learning method for single image super-resolution (SR). Our method directly learns an end-to-end mapping between the low/high-resolution images. The mapping is represented as a deep convolutional neural network (CNN) that takes the low-resolution image as the input and outputs the high-resolution one. We further show that traditional sparse-coding-based SR methods can also be viewed as a deep convolutional network. But unlike traditional methods that handle each component separately, our method jointly optimizes all layers. Our deep CNN has a lightweight structure, yet demonstrates state-of-the-art restoration quality, arXiv:1501.00092v3 [cs.CV] 31 Jul 2015 and achieves fast speed for practical on-line usage. We explore different network structures and parameter settings to achieve trade- offs between performance and speed. Moreover, we extend our network to cope with three color channels simultaneously, and show better overall reconstruction quality. Index Terms—Super-resolution, deep convolutional neural networks, sparse coding ✦ 1 I NTRODUCTION constructed patches are aggregated (e.g., by weighted Single image super-resolution (SR) [20], which aims at averaging) to produce the final output. This pipeline is recovering a high-resolution image from a single low- shared by most external example-based methods, which resolution image, is a classical problem in computer pay particular attention to learning and optimizing the vision. This problem is inherently ill-posed since a mul- dictionaries [2], [49], [50] or building efficient mapping tiplicity of solutions exist for any given low-resolution functions [25], [41], [42], [47]. However, the rest of the pixel. In other words, it is an underdetermined in- steps in the pipeline have been rarely optimized or verse problem, of which solution is not unique. Such considered in an unified optimization framework. a problem is typically mitigated by constraining the In this paper, we show that the aforementioned solution space by strong prior information. To learn pipeline is equivalent to a deep convolutional neural net- the prior, recent state-of-the-art methods mostly adopt work [27] (more details in Section 3.2). Motivated by this the example-based [46] strategy. These methods either fact, we consider a convolutional neural network that exploit internal similarities of the same image [5], [13], directly learns an end-to-end mapping between low- and [16], [19], [47], or learn mapping functions from external high-resolution images. Our method differs fundamen- low- and high-resolution exemplar pairs [2], [4], [6], tally from existing external example-based approaches, [15], [23], [25], [37], [41], [42], [47], [48], [50], [51]. The in that ours does not explicitly learn the dictionaries [41], external example-based methods can be formulated for [49], [50] or manifolds [2], [4] for modeling the patch generic image super-resolution, or can be designed to space. These are implicitly achieved via hidden layers. suit domain specific tasks, i.e., face hallucination [30], Furthermore, the patch extraction and aggregation are [50], according to the training samples provided. also formulated as convolutional layers, so are involved The sparse-coding-based method [49], [50] is one of the in the optimization. In our method, the entire SR pipeline representative external example-based SR methods. This is fully obtained through learning, with little pre/post- method involves several steps in its solution pipeline. processing. First, overlapping patches are densely cropped from the We name the proposed model Super-Resolution Con- input image and pre-processed (e.g.,subtracting mean volutional Neural Network (SRCNN)1 . The proposed and normalization). These patches are then encoded SRCNN has several appealing properties. First, its struc- by a low-resolution dictionary. The sparse coefficients ture is intentionally designed with simplicity in mind, are passed into a high-resolution dictionary for recon- and yet provides superior accuracy2 compared with structing high-resolution patches. The overlapping re- state-of-the-art example-based methods. Figure 1 shows a comparison on an example. Second, with moderate • C. Dong, C. C. Loy and X. Tang are with the Department of Information Engineering, The Chinese University of Hong Kong, Hong Kong. 1. The implementation is available at http://mmlab.ie.cuhk.edu.hk/ E-mail: {dc012,ccloy,xtang}@ie.cuhk.edu.hk projects/SRCNN.html. • K. He is with the Visual Computing Group, Microsoft Research Asia, 2. Numerical evaluations by using different metrics such as the Peak Beijing 100080, China. Signal-to-Noise Ratio (PSNR), structure similarity index (SSIM) [43], Email: kahe@microsoft.com multi-scale SSIM [44], information fidelity criterion [38], when the ground truth images are available.

2 . 2 33 learning-based SR method and the traditional (dB) (dB) sparse-coding-based SR methods. This relationship 32.5 provides a guidance for the design of the network PSNR 32 Original / PSNR Bicubic / 24.04 dB testPSNR 31.5 structure. Original3) We demonstrate / PSNR Bicubic / 24.04 dB that deep learning is useful in Average test 31 Average the classical computer vision problem of super- 30.5 SRCNN resolution, and can achieve good quality and 30 SCSRCNN SC Bicubic Bicubic speed. 29.5 2 4 6 8 10 12 A preliminary version of this work was presented Number of backprops 8 Number of backprops x 10 SC / 25.58 dB SRCNN / 27.95 dB earlier [11]. SC / 25.58 dB The present work SRCNN / 27.95 dB adds to the initial version in significant ways. Firstly, we improve the SRCNN by introducing larger filter size in the non-linear mapping layer, and explore deeper structures by adding non- linear mapping layers. Secondly, we extend the SRCNN to process three color channels (either in YCbCr or RGB color space) simultaneously. Experimentally, we demon- strate that performance can be improved in comparison Original Original / PSNR / PSNR Bicubic Bicubic / 24.04 / 24.04 dB dB to the single-channel network. Thirdly, considerable new analyses and intuitive explanations are added to the initial results. We also extend the original experiments from Set5 [2] and Set14 [51] test images to BSD200 [32] (200 test images). In addition, we compare with a num- ber of recently published methods and confirm that our model still outperforms existing approaches using SC / SC 25.58 / 25.58 dB dB SRCNN SRCNN / 27.95 / 27.95 dB dB different evaluation metrics. Fig. 1. The proposed Super-Resolution Convolutional Neural Network (SRCNN) surpasses the bicubic baseline 2 R ELATED W ORK with just a few training iterations, and outperforms the 2.1 Image Super-Resolution sparse-coding-based method (SC) [50] with moderate According to the image priors, single-image super res- training. The performance may be further improved with olution algorithms can be categorized into four types – more training iterations. More details are provided in prediction models, edge based methods, image statistical Section 4.4.1 (the Set5 dataset with an upscaling factor methods and patch based (or example-based) methods. 3). The proposed method provides visually appealing These methods have been thoroughly investigated and reconstructed image. evaluated in Yang et al.’s work [46]. Among them, the example-based methods [16], [25], [41], [47] achieve the numbers of filters and layers, our method achieves state-of-the-art performance. fast speed for practical on-line usage even on a CPU. The internal example-based methods exploit the self- Our method is faster than a number of example-based similarity property and generate exemplar patches from methods, because it is fully feed-forward and does the input image. It is first proposed in Glasner’s not need to solve any optimization problem on usage. work [16], and several improved variants [13], [45] are Third, experiments show that the restoration quality of proposed to accelerate the implementation. The exter- the network can be further improved when (i) larger nal example-based methods [2], [4], [6], [15], [37], [41], and more diverse datasets are available, and/or (ii) [48], [49], [50], [51] learn a mapping between low/high- a larger and deeper model is used. On the contrary, resolution patches from external datasets. These studies larger datasets/models can present challenges for exist- vary on how to learn a compact dictionary or manifold ing example-based methods. Furthermore, the proposed space to relate low/high-resolution patches, and on how network can cope with three channels of color images representation schemes can be conducted in such spaces. simultaneously to achieve improved super-resolution In the pioneer work of Freeman et al. [14], the dic- performance. tionaries are directly presented as low/high-resolution Overall, the contributions of this study are mainly in patch pairs, and the nearest neighbour (NN) of the input three aspects: patch is found in the low-resolution space, with its corre- 1) We present a fully convolutional neural net- sponding high-resolution patch used for reconstruction. work for image super-resolution. The network di- Chang et al. [4] introduce a manifold embedding tech- rectly learns an end-to-end mapping between low- nique as an alternative to the NN strategy. In Yang et al.’s and high-resolution images, with little pre/post- work [49], [50], the above NN correspondence advances processing beyond the optimization. to a more sophisticated sparse coding formulation. Other 2) We establish a relationship between our deep- mapping functions such as kernel regression [25], simple

3 . 3 function [47], random forest [37] and anchored neigh- 3 C ONVOLUTIONAL N EURAL N ETWORKS FOR borhood regression [41], [42] are proposed to further S UPER -R ESOLUTION improve the mapping accuracy and speed. The sparse- 3.1 Formulation coding-based method and its several improvements [41], [42], [48] are among the state-of-the-art SR methods Consider a single low-resolution image, we first upscale nowadays. In these methods, the patches are the focus it to the desired size using bicubic interpolation, which of the optimization; the patch extraction and aggregation is the only pre-processing we perform3 . Let us denote steps are considered as pre/post-processing and handled the interpolated image as Y. Our goal is to recover separately. from Y an image F (Y) that is as similar as possible The majority of SR algorithms [2], [4], [15], [41], [48], to the ground truth high-resolution image X. For the [49], [50], [51] focus on gray-scale or single-channel ease of presentation, we still call Y a “low-resolution” image super-resolution. For color images, the aforemen- image, although it has the same size as X. We wish to tioned methods first transform the problem to a dif- learn a mapping F , which conceptually consists of three ferent color space (YCbCr or YUV), and SR is applied operations: only on the luminance channel. There are also works 1) Patch extraction and representation: this opera- attempting to super-resolve all channels simultaneously. tion extracts (overlapping) patches from the low- For example, Kim and Kwon [25] and Dai et al. [7] apply resolution image Y and represents each patch as a their model to each RGB channel and combined them to high-dimensional vector. These vectors comprise a produce the final results. However, none of them has set of feature maps, of which the number equals to analyzed the SR performance of different channels, and the dimensionality of the vectors. the necessity of recovering all three channels. 2) Non-linear mapping: this operation nonlinearly maps each high-dimensional vector onto another 2.2 Convolutional Neural Networks high-dimensional vector. Each mapped vector is Convolutional neural networks (CNN) date back conceptually the representation of a high-resolution decades [27] and deep CNNs have recently shown an patch. These vectors comprise another set of feature explosive popularity partially due to its success in image maps. classification [18], [26]. They have also been success- 3) Reconstruction: this operation aggregates the fully applied to other computer vision fields, such as above high-resolution patch-wise representations object detection [34], [40], [52], face recognition [39], and to generate the final high-resolution image. This pedestrian detection [35]. Several factors are of central image is expected to be similar to the ground truth importance in this progress: (i) the efficient training X. implementation on modern powerful GPUs [26], (ii) the We will show that all these operations form a convolu- proposal of the Rectified Linear Unit (ReLU) [33] which tional neural network. An overview of the network is makes convergence much faster while still presents good depicted in Figure 2. Next we detail our definition of quality [26], and (iii) the easy access to an abundance of each operation. data (like ImageNet [9]) for training larger models. Our method also benefits from these progresses. 3.1.1 Patch extraction and representation A popular strategy in image restoration (e.g., [1]) is to 2.3 Deep Learning for Image Restoration densely extract patches and then represent them by a set There have been a few studies of using deep learning of pre-trained bases such as PCA, DCT, Haar, etc. This techniques for image restoration. The multi-layer per- is equivalent to convolving the image by a set of filters, ceptron (MLP), whose all layers are fully-connected (in each of which is a basis. In our formulation, we involve contrast to convolutional), is applied for natural image the optimization of these bases into the optimization of denoising [3] and post-deblurring denoising [36]. More the network. Formally, our first layer is expressed as an closely related to our work, the convolutional neural net- operation F1 : work is applied for natural image denoising [22] and re- moving noisy patterns (dirt/rain) [12]. These restoration F1 (Y) = max (0, W1 ∗ Y + B1 ) , (1) problems are more or less denoising-driven. Cui et al. [5] where W1 and B1 represent the filters and biases re- propose to embed auto-encoder networks in their super- spectively, and ’∗’ denotes the convolution operation. resolution pipeline under the notion internal example- Here, W1 corresponds to n1 filters of support c × f1 × f1 , based approach [16]. The deep model is not specifically where c is the number of channels in the input image, designed to be an end-to-end solution, since each layer f1 is the spatial size of a filter. Intuitively, W1 applies of the cascade requires independent optimization of the n1 convolutions on the image, and each convolution has self-similarity search process and the auto-encoder. On the contrary, the proposed SRCNN optimizes an end-to- 3. Bicubic interpolation is also a convolutional operation, so it can end mapping. Further, the SRCNN is faster at speed. It be formulated as a convolutional layer. However, the output size of this layer is larger than the input size, so there is a fractional stride. To is not only a quantitatively superior method, but also a take advantage of the popular well-optimized implementations such practically useful one. as cuda-convnet [26], we exclude this “layer” from learning.

4 . 4 feature maps feature maps of low-resolution image of high-resolution image Low-resolution High-resolution image (input) image (output) Patch extraction Non-linear mapping Reconstruction and representation Fig. 2. Given a low-resolution image Y, the first convolutional layer of the SRCNN extracts a set of feature maps. The second layer maps these feature maps nonlinearly to high-resolution patch representations. The last layer combines the predictions within a spatial neighbourhood to produce the final high-resolution image F (Y). a kernel size c × f1 × f1 . The output is composed of Here W3 corresponds to c filters of a size n2 × f3 × f3 , n1 feature maps. B1 is an n1 -dimensional vector, whose and B3 is a c-dimensional vector. each element is associated with a filter. We apply the If the representations of the high-resolution patches Rectified Linear Unit (ReLU, max(0, x)) [33] on the filter are in the image domain (i.e.,we can simply reshape each responses4 . representation to form the patch), we expect that the filters act like an averaging filter; if the representations 3.1.2 Non-linear mapping of the high-resolution patches are in some other domains The first layer extracts an n1 -dimensional feature for (e.g.,coefficients in terms of some bases), we expect that each patch. In the second operation, we map each of W3 behaves like first projecting the coefficients onto the these n1 -dimensional vectors into an n2 -dimensional image domain and then averaging. In either way, W3 is one. This is equivalent to applying n2 filters which have a set of linear filters. a trivial spatial support 1 × 1. This interpretation is only valid for 1 × 1 filters. But it is easy to generalize to larger Interestingly, although the above three operations are filters like 3 × 3 or 5 × 5. In that case, the non-linear motivated by different intuitions, they all lead to the mapping is not on a patch of the input image; instead, same form as a convolutional layer. We put all three it is on a 3 × 3 or 5 × 5 “patch” of the feature map. The operations together and form a convolutional neural operation of the second layer is: network (Figure 2). In this model, all the filtering weights F2 (Y) = max (0, W2 ∗ F1 (Y) + B2 ) . (2) and biases are to be optimized. Despite the succinctness of the overall structure, our SRCNN model is carefully Here W2 contains n2 filters of size n1 × f2 × f2 , and B2 is developed by drawing extensive experience resulted n2 -dimensional. Each of the output n2 -dimensional vec- from significant progresses in super-resolution [49], [50]. tors is conceptually a representation of a high-resolution We detail the relationship in the next section. patch that will be used for reconstruction. It is possible to add more convolutional layers to increase the non-linearity. But this can increase the com- 3.2 Relationship to Sparse-Coding-Based Methods plexity of the model (n2 × f2 × f2 × n2 parameters for We show that the sparse-coding-based SR methods [49], one layer), and thus demands more training time. We [50] can be viewed as a convolutional neural network. will explore deeper structures by introducing additional Figure 3 shows an illustration. non-linear mapping layers in Section 4.3.3. In the sparse-coding-based methods, let us consider 3.1.3 Reconstruction that an f1 × f1 low-resolution patch is extracted from the input image. Then the sparse coding solver, like In the traditional methods, the predicted overlapping Feature-Sign [29], will first project the patch onto a (low- high-resolution patches are often averaged to produce resolution) dictionary. If the dictionary size is n1 , this the final full image. The averaging can be considered is equivalent to applying n1 linear filters (f1 × f1 ) on as a pre-defined filter on a set of feature maps (where the input image (the mean subtraction is also a linear each position is the “flattened” vector form of a high- operation so can be absorbed). This is illustrated as the resolution patch). Motivated by this, we define a convo- left part of Figure 3. lutional layer to produce the final high-resolution image: The sparse coding solver will then iteratively process F (Y) = W3 ∗ F2 (Y) + B3 . (3) the n1 coefficients. The outputs of this solver are n2 coefficients, and usually n2 = n1 in the case of sparse 4. The ReLU can be equivalently considered as a part of the second operation (Non-linear mapping), and the first operation (Patch extrac- coding. These n2 coefficients are the representation of tion and representation) becomes purely linear convolution. the high-resolution patch. In this sense, the sparse coding

5 . 5 responses neighbouring of patch of patches Patch extraction Non-linear Reconstruction and representation mapping Fig. 3. An illustration of sparse-coding-based methods in the view of a convolutional neural network. solver behaves as a special case of a non-linear mapping (9 + 5 − 1)2 = 169 pixels. Clearly, the information operator, whose spatial support is 1 × 1. See the middle exploited for reconstruction is comparatively larger than part of Figure 3. However, the sparse coding solver is that used in existing external example-based approaches, not feed-forward, i.e.,it is an iterative algorithm. On the e.g., using (5+5−1)2 = 81 pixels5 [15], [50]. This is one of contrary, our non-linear operator is fully feed-forward the reasons why the SRCNN gives superior performance. and can be computed efficiently. If we set f2 = 1, then our non-linear operator can be considered as a pixel-wise 3.3 Training fully-connected layer. It is worth noting that “the sparse coding solver” in SRCNN refers to the first two layers, Learning the end-to-end mapping function F re- but not just the second layer or the activation function quires the estimation of network parameters Θ = (ReLU). Thus the nonlinear operation in SRCNN is also {W1 , W2 , W3 , B1 , B2 , B3 }. This is achieved through min- well optimized through the learning process. imizing the loss between the reconstructed images The above n2 coefficients (after sparse coding) are F (Y; Θ) and the corresponding ground truth high- then projected onto another (high-resolution) dictionary resolution images X. Given a set of high-resolution to produce a high-resolution patch. The overlapping images {Xi } and their corresponding low-resolution high-resolution patches are then averaged. As discussed images {Yi }, we use Mean Squared Error (MSE) as the above, this is equivalent to linear convolutions on the loss function: n n2 feature maps. If the high-resolution patches used for 1 reconstruction are of size f3 × f3 , then the linear filters L(Θ) = ||F (Yi ; Θ) − Xi ||2 , (4) n i=1 have an equivalent spatial support of size f3 × f3 . See the right part of Figure 3. where n is the number of training samples. Using MSE The above discussion shows that the sparse-coding- as the loss function favors a high PSNR. The PSNR based SR method can be viewed as a kind of con- is a widely-used metric for quantitatively evaluating volutional neural network (with a different non-linear image restoration quality, and is at least partially related mapping). But not all operations have been considered in to the perceptual quality. It is worth noticing that the the optimization in the sparse-coding-based SR methods. convolutional neural networks do not preclude the usage On the contrary, in our convolutional neural network, of other kinds of loss functions, if only the loss functions the low-resolution dictionary, high-resolution dictionary, are derivable. If a better perceptually motivated metric non-linear mapping, together with mean subtraction and is given during training, it is flexible for the network to averaging, are all involved in the filters to be optimized. adapt to that metric. On the contrary, such a flexibility So our method optimizes an end-to-end mapping that is in general difficult to achieve for traditional “hand- consists of all operations. crafted” methods. Despite that the proposed model is The above analogy can also help us to design hyper- trained favoring a high PSNR, we still observe satisfac- parameters. For example, we can set the filter size of tory performance when the model is evaluated using the last layer to be smaller than that of the first layer, alternative evaluation metrics, e.g., SSIM, MSSIM (see and thus we rely more on the central part of the high- Section 4.4.1). resolution patch (to the extreme, if f3 = 1, we are The loss is minimized using stochastic gradient de- using the center pixel with no averaging). We can also scent with the standard backpropagation [28]. In partic- set n2 < n1 because it is expected to be sparser. A ular, the weight matrices are updated as typical and basic setting is f1 = 9, f2 = 1, f3 = 5, ∂L n1 = 64, and n2 = 32 (we evaluate more settings in ∆i+1 = 0.9 · ∆i − η · , Wi+1 = Wi + ∆i+1 , (5) ∂Wi the experiment section). On the whole, the estimation of a high resolution pixel utilizes the information of 5. The patches are overlapped with 4 pixels at each direction.

6 . 6 where ∈ {1, 2, 3} and i are the indices of layers and it- provides over 5 million sub-images even using a stride ∂L erations, η is the learning rate, and ∂W is the derivative. of 33. We use the basic network settings, i.e., f1 = 9, i The filter weights of each layer are initialized by drawing f2 = 1, f3 = 5, n1 = 64, and n2 = 32. We use the Set5 [2] randomly from a Gaussian distribution with zero mean as the validation set. We observe a similar trend even and standard deviation 0.001 (and 0 for biases). The if we use the larger Set14 set [51]. The upscaling factor learning rate is 10−4 for the first two layers, and 10−5 for is 3. We use the sparse-coding-based method [50] as our the last layer. We empirically find that a smaller learning baseline, which achieves an average PSNR value of 31.42 rate in the last layer is important for the network to dB. converge (similar to the denoising case [22]). The test convergence curves of using different training In the training phase, the ground truth images {Xi } sets are shown in Figure 4. The training time on Ima- are prepared as fsub ×fsub ×c-pixel sub-images randomly geNet is about the same as on the 91-image dataset since cropped from the training images. By “sub-images” we the number of backpropagations is the same. As can be mean these samples are treated as small “images” rather observed, with the same number of backpropagations than “patches”, in the sense that “patches” are overlap- (i.e.,8 × 108 ), the SRCNN+ImageNet achieves 32.52 dB, ping and require some averaging as post-processing but higher than 32.39 dB yielded by that trained on 91 “sub-images” need not. To synthesize the low-resolution images. The results positively indicate that SRCNN per- samples {Yi }, we blur a sub-image by a Gaussian kernel, formance may be further boosted using a larger training sub-sample it by the upscaling factor, and upscale it by set, but the effect of big data is not as impressive as the same factor via bicubic interpolation. that shown in high-level vision problems [26]. This is To avoid border effects during training, all the con- mainly because that the 91 images have already cap- volutional layers have no padding, and the network tured sufficient variability of natural images. On the produces a smaller output ((fsub − f1 − f2 − f3 + 3)2 × c). other hand, our SRCNN is a relatively small network The MSE loss function is evaluated only by the difference (8,032 parameters), which could not overfit the 91 images between the central pixels of Xi and the network output. (24,800 samples). Nevertheless, we adopt the ImageNet, Although we use a fixed image size in training, the which contains more diverse data, as the default training convolutional neural network can be applied on images set in the following experiments. of arbitrary sizes during testing. We implement our model using the cuda-convnet pack- 4.2 Learned Filters for Super-Resolution age [26]. We have also tried the Caffe package [24] and Figure 5 shows examples of learned first-layer filters observed similar performance. trained on the ImageNet by an upscaling factor 3. Please refer to our published implementation for upscaling 4 E XPERIMENTS factors 2 and 4. Interestingly, each learned filter has We first investigate the impact of using different datasets its specific functionality. For instance, the filters g and on the model performance. Next, we examine the filters h are like Laplacian/Gaussian filters, the filters a - e learned by our approach. We then explore different are like edge detectors at different directions, and the architecture designs of the network, and study the rela- filter f is like a texture extractor. Example feature maps tions between super-resolution performance and factors of different layers are shown in figure 6. Obviously, like depth, number of filters, and filter sizes. Subse- feature maps of the first layer contain different structures quently, we compare our method with recent state-of- (e.g., edges at different directions), while that of the the-arts both quantitatively and qualitatively. Following second layer are mainly different on intensities. [42], super-resolution is only applied on the luminance channel (Y channel in YCbCr color space) in Sections 4.1- 4.3 Model and Performance Trade-offs 4.4, so c = 1 in the first/last layer, and performance Based on the basic network settings (i.e., f1 = 9, f2 = 1, (e.g., PSNR and SSIM) is evaluated on the Y channel. At f3 = 5, n1 = 64, and n2 = 32), we will progressively last, we extend the network to cope with color images modify some of these parameters to investigate the best and evaluate the performance on different channels. trade-off between performance and speed, and study the relations between performance and parameters. 4.1 Training Data As shown in the literature, deep learning generally 32.6 benefits from big data training. For comparison, we use AverageStestSPSNRSndBI 32.4 a relatively small training set [41], [50] that consists 32.2 of 91 images, and a large training set that consists of 32 395,909 images from the ILSVRC 2013 ImageNet detec- 31.8 SRCNNSntrainedSonSImageNetI tion training partition. The size of training sub-images is 31.6 SRCNNSntrainedSonS91SimagesI SCSn31.42SdBI fsub = 33. Thus the 91-image dataset can be decomposed 31.4 1 2 3 4 5 6 7 8 9 10 into 24,800 sub-images, which are extracted from origi- NumberSofSbackprops xS10 8 nal images with a stride of 14. Whereas the ImageNet Fig. 4. Training with the much larger ImageNet dataset improves the performance over the use of 91 images.

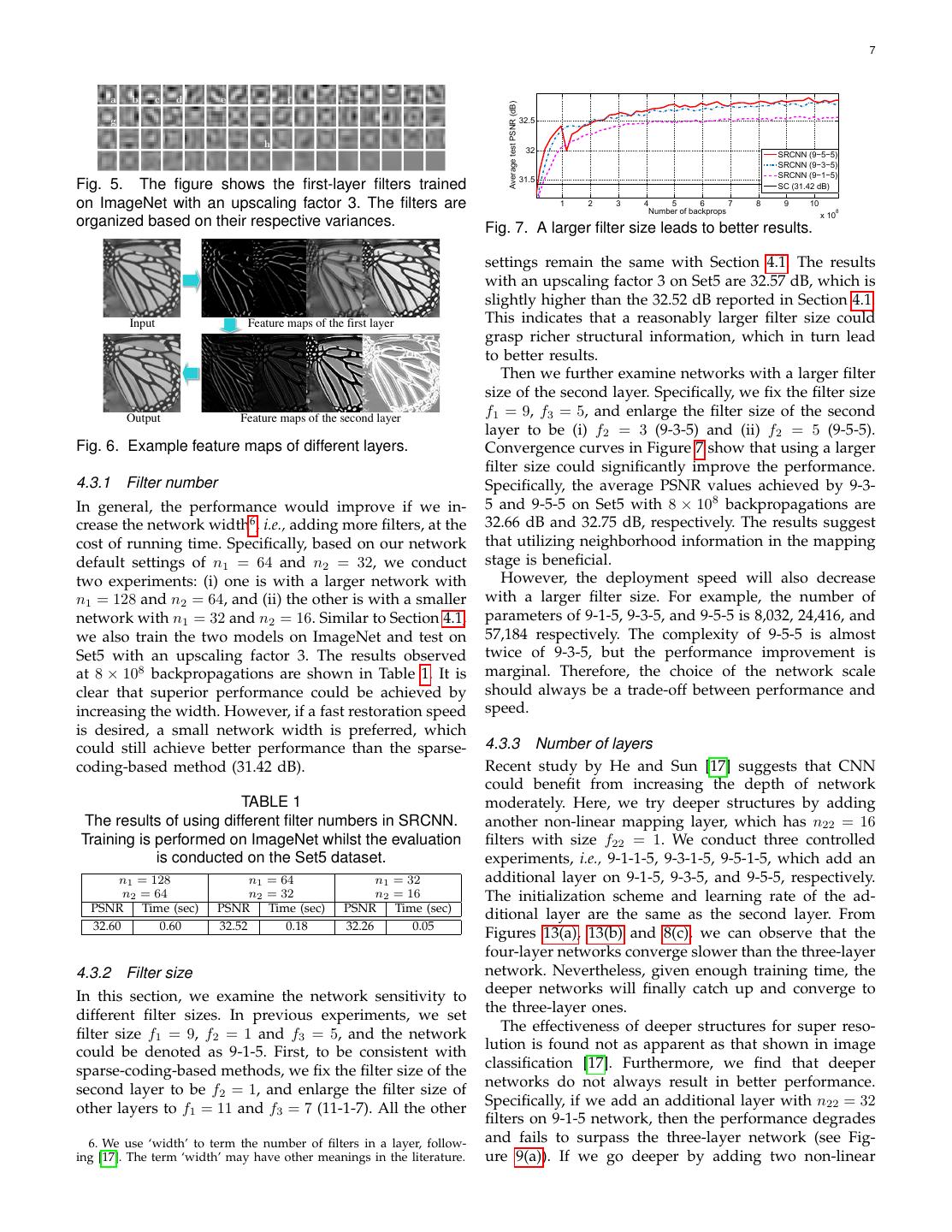

7 . 7 a b c d e f AverageStestSPSNRS(dB) g 32.5 h 32 SRCNNS(9−5−5) SRCNNS(9−3−5) SRCNNS(9−1−5) 31.5 Fig. 5. The figure shows the first-layer filters trained SCS(31.42SdB) on ImageNet with an upscaling factor 3. The filters are 1 2 3 4 5 6 NumberSofSbackprops 7 8 9 10 8 xS10 organized based on their respective variances. Fig. 7. A larger filter size leads to better results. settings remain the same with Section 4.1. The results with an upscaling factor 3 on Set5 are 32.57 dB, which is slightly higher than the 32.52 dB reported in Section 4.1. Input Feature maps of the first layer This indicates that a reasonably larger filter size could grasp richer structural information, which in turn lead to better results. Then we further examine networks with a larger filter size of the second layer. Specifically, we fix the filter size Output Feature maps of the second layer f1 = 9, f3 = 5, and enlarge the filter size of the second layer to be (i) f2 = 3 (9-3-5) and (ii) f2 = 5 (9-5-5). Fig. 6. Example feature maps of different layers. Convergence curves in Figure 7 show that using a larger filter size could significantly improve the performance. 4.3.1 Filter number Specifically, the average PSNR values achieved by 9-3- In general, the performance would improve if we in- 5 and 9-5-5 on Set5 with 8 × 108 backpropagations are crease the network width6 , i.e., adding more filters, at the 32.66 dB and 32.75 dB, respectively. The results suggest cost of running time. Specifically, based on our network that utilizing neighborhood information in the mapping default settings of n1 = 64 and n2 = 32, we conduct stage is beneficial. two experiments: (i) one is with a larger network with However, the deployment speed will also decrease n1 = 128 and n2 = 64, and (ii) the other is with a smaller with a larger filter size. For example, the number of network with n1 = 32 and n2 = 16. Similar to Section 4.1, parameters of 9-1-5, 9-3-5, and 9-5-5 is 8,032, 24,416, and we also train the two models on ImageNet and test on 57,184 respectively. The complexity of 9-5-5 is almost Set5 with an upscaling factor 3. The results observed twice of 9-3-5, but the performance improvement is at 8 × 108 backpropagations are shown in Table 1. It is marginal. Therefore, the choice of the network scale clear that superior performance could be achieved by should always be a trade-off between performance and increasing the width. However, if a fast restoration speed speed. is desired, a small network width is preferred, which could still achieve better performance than the sparse- 4.3.3 Number of layers coding-based method (31.42 dB). Recent study by He and Sun [17] suggests that CNN could benefit from increasing the depth of network TABLE 1 moderately. Here, we try deeper structures by adding The results of using different filter numbers in SRCNN. another non-linear mapping layer, which has n22 = 16 Training is performed on ImageNet whilst the evaluation filters with size f22 = 1. We conduct three controlled is conducted on the Set5 dataset. experiments, i.e., 9-1-1-5, 9-3-1-5, 9-5-1-5, which add an n1 = 128 n1 = 64 n1 = 32 additional layer on 9-1-5, 9-3-5, and 9-5-5, respectively. n2 = 64 n2 = 32 n2 = 16 The initialization scheme and learning rate of the ad- PSNR Time (sec) PSNR Time (sec) PSNR Time (sec) ditional layer are the same as the second layer. From 32.60 0.60 32.52 0.18 32.26 0.05 Figures 13(a), 13(b) and 8(c), we can observe that the four-layer networks converge slower than the three-layer 4.3.2 Filter size network. Nevertheless, given enough training time, the In this section, we examine the network sensitivity to deeper networks will finally catch up and converge to different filter sizes. In previous experiments, we set the three-layer ones. filter size f1 = 9, f2 = 1 and f3 = 5, and the network The effectiveness of deeper structures for super reso- could be denoted as 9-1-5. First, to be consistent with lution is found not as apparent as that shown in image sparse-coding-based methods, we fix the filter size of the classification [17]. Furthermore, we find that deeper second layer to be f2 = 1, and enlarge the filter size of networks do not always result in better performance. other layers to f1 = 11 and f3 = 7 (11-1-7). All the other Specifically, if we add an additional layer with n22 = 32 filters on 9-1-5 network, then the performance degrades 6. We use ‘width’ to term the number of filters in a layer, follow- and fails to surpass the three-layer network (see Fig- ing [17]. The term ‘width’ may have other meanings in the literature. ure 9(a)). If we go deeper by adding two non-linear

8 . 8 32.5 Average(test(PSNR((dB) 32.5 Average(test(PSNR(=dB) 32 32 SRCNN(=9−1−5) SRCNN(=9−1−1−5,(n22=16) 31.5 31.5 SRCNN((9−1−5) SRCNN(=9−1−1−5,(n =32) 22 SRCNN((9−1−1−5) SRCNN(=9−1−1−1−5,(n22=32,(n23=16) SC((31.42(dB) 31 SC(=31.42(dB) 31 2 4 6 8 10 12 2 4 6 8 10 12 Number(of(backprops x(10 8 Number(of(backprops 8 x(10 (a) 9-1-5 vs. 9-1-1-5 (a) 9-1-1-5 (n22 = 32) and 9-1-1-1-5 (n22 = 32, n23 = 16) AverageStestSPSNRS(dB) AverageStestSPSNRS(dB) 32.5 32.5 32 SRCNNS(9−3−5) 32 SRCNNS(9−3−1−5) SRCNNS(9−3−5) SRCNNS(9−3−3−5) 31.5 SRCNNS(9−3−1−5) SRCNNS(9−3−3−3) SCS(31.42SdB) 31.5 SCS(31.42SdB) 1 2 3 4 5 6 7 8 9 10 1 2 3 4 5 6 7 8 9 NumberSofSbackprops xS10 8 NumberSofSbackprops 8 xS10 (b) 9-3-5 vs. 9-3-1-5 (b) 9-3-3-5 and 9-3-3-3 Fig. 9. Deeper structure does not always lead to better AverageRtestRPSNRR(dB) 32.5 results. 32 methods. We adopt the model with good performance- SRCNNR(9−5−5) speed trade-off: a three-layer network with f1 = 9, f2 = 31.5 SRCNNR(9−5−1−5) SCR(31.42RdB) 5, f3 = 5, n1 = 64, and n2 = 32 trained on the ImageNet. 1 2 3 4 5 NumberRofRbackprops 6 7 8 8 For each upscaling factor ∈ {2, 3, 4}, we train a specific xR10 (c) 9-5-5 vs. 9-5-1-5 network for that factor7 . Comparisons. We compare our SRCNN with the state- Fig. 8. Comparisons between three-layer and four-layer of-the-art SR methods: networks. • SC - sparse coding-based method of Yang et al. [50] • NE+LLE - neighbour embedding + locally linear mapping layers with n22 = 32 and n23 = 16 filters on embedding method [4] 9-1-5, then we have to set a smaller learning rate to • ANR - Anchored Neighbourhood Regression ensure convergence, but we still do not observe superior performance after a week of training (see Figure 9(a)). method [41] • A+ - Adjusted Anchored Neighbourhood Regres- We also tried to enlarge the filter size of the additional layer to f22 = 3, and explore two deep structures – 9-3- sion method [42], and 3-5 and 9-3-3-3. However, from the convergence curves • KK - the method described in [25], which achieves shown in Figure 9(b), these two networks do not show the best performance among external example- better results than the 9-3-1-5 network. based methods, according to the comprehensive All these experiments indicate that it is not “the deeper evaluation conducted in Yang et al.’s work [46] the better” in this deep model for super-resolution. It The implementations are all from the publicly available may be caused by the difficulty of training. Our CNN codes provided by the authors, and all images are down- network contains no pooling layer or full-connected sampled using the same bicubic kernel. layer, thus it is sensitive to the initialization parameters Test set. The Set5 [2] (5 images), Set14 [51] (14 images) and learning rate. When we go deeper (e.g., 4 or 5 layers), and BSD200 [32] (200 images)8 are used to evaluate the we find it hard to set appropriate learning rates that performance of upscaling factors 2, 3, and 4. guarantee convergence. Even it converges, the network Evaluation metrics. Apart from the widely used PSNR may fall into a bad local minimum, and the learned and SSIM [43] indices, we also adopt another four filters are of less diversity even given enough training evaluation matrices, namely information fidelity cri- time. This phenomenon is also observed in [16], where terion (IFC) [38], noise quality measure (NQM) [8], improper increase of depth leads to accuracy saturation weighted peak signal-to-noise ratio (WPSNR) and multi- or degradation for image classification. Why “deeper is scale structure similarity index (MSSSIM) [44], which not better” is still an open question, which requires in- obtain high correlation with the human perceptual scores vestigations to better understand gradients and training as reported in [46]. dynamics in deep architectures. Therefore, we still adopt 4.4.1 Quantitative and qualitative evaluation three-layer networks in the following experiments. As shown in Tables 2, 3 and 4, the proposed SRCNN yields the highest scores in most evaluation matrices 4.4 Comparisons to State-of-the-Arts 7. In the area of denoising [3], for each noise level a specific network In this section, we show the quantitative and qualitative is trained. results of our method in comparison to state-of-the-art 8. We use the same 200 images as in [46].

9 . 9 33 SRCNN from the corresponding authors’ MATLAB+MEX imple- A+ - 32.59 dB mentation, whereas ours are in pure C++. We profile 32.5 Average(test(PSNR((dB) KK - 32.28 dB the running time of all the algorithms using the same 32 ANR - 31.92 dB NE+LLE - 31.84 dB machine (Intel CPU 3.10 GHz and 16 GB memory). 31.5 SC - 31.42 dB Note that the processing time of our approach is highly 31 linear to the test image resolution, since all images go 30.5 through the same number of convolutions. Our method Bicubic - 30.39 dB 2 4 6 8 10 12 is always a trade-off between performance and speed. Number(of(backprops 8 x(10 To show this, we train three networks for comparison, Fig. 10. The test convergence curve of SRCNN and which are 9-1-5, 9-3-5, and 9-5-5. It is clear that the 9- results of other methods on the Set5 dataset. 1-5 network is the fastest, while it still achieves better in all experiments9 . Note that our SRCNN results are performance than the next state-of-the-art A+. Other based on the checkpoint of 8 × 108 backpropagations. methods are several times or even orders of magnitude Specifically, for the upscaling factor 3, the average gains slower in comparison to 9-1-5 network. Note the speed on PSNR achieved by SRCNN are 0.15 dB, 0.17 dB, and gap is not mainly caused by the different MATLAB/C++ 0.13 dB, higher than the next best approach, A+ [42], implementations; rather, the other methods need to solve on the three datasets. When we take a look at other complex optimization problems on usage (e.g., sparse evaluation metrics, we observe that SC, to our surprise, coding or embedding), whereas our method is com- gets even lower scores than the bicubic interpolation pletely feed-forward. The 9-5-5 network achieves the on IFC and NQM. It is clear that the results of SC are best performance but at the cost of the running time. The more visually pleasing than that of bicubic interpolation. test-time speed of our CNN can be further accelerated This indicates that these two metrics may not truthfully in many ways, e.g., approximating or simplifying the reveal the image quality. Thus, regardless of these two trained networks [10], [21], [31], with possible slight metrics, SRCNN achieves the best performance among degradation in performance. all methods and scaling factors. It is worth pointing out that SRCNN surpasses the 4.5 Experiments on Color Channels bicubic baseline at the very beginning of the learning stage (see Figure 1), and with moderate training, SR- In previous experiments, we follow the conventional CNN outperforms existing state-of-the-art methods (see approach to super-resolve color images. Specifically, we Figure 4). Yet, the performance is far from converge. first transform the color images into the YCbCr space. We conjecture that better results can be obtained given The SR algorithms are only applied on the Y channel, longer training time (see Figure 10). while the Cb , Cr channels are upscaled by bicubic in- Figures 14, 15 and 16 show the super-resolution results terpolation. It is interesting to find out if super-resolution of different approaches by an upscaling factor 3. As can performance can be improved if we jointly consider all be observed, the SRCNN produces much sharper edges three channels in the process. than other approaches without any obvious artifacts Our method is flexible to accept more channels with- across the image. out altering the learning mechanism and network de- In addition, we report to another recent deep learning sign. In particular, it can readily deal with three chan- method for image super-resolution (DNC) of Cui et nels simultaneously by setting the input channels to al. [5]. As they employ a different blur kernel (a Gaussian c = 3. In the following experiments, we explore different filter with a standard deviation of 0.55), we train a spe- training strategies for color image super-resolution, and cific network (9-5-5) using the same blur kernel as DNC subsequently evaluate their performance on different for fair quantitative comparison. The upscaling factor channels. is 3 and the training set is the 91-image dataset. From Implementation details. Training is performed on the the convergence curve shown in Figure 11, we observe 91-image dataset, and testing is conducted on the that our SRCNN surpasses DNC with just 2.7 × 107 Set5 [2]. The network settings are: c = 3, f1 = 9, f2 = 1, backprops, and a larger margin can be obtained given f3 = 5, n1 = 64, and n2 = 32. As we have proved the longer training time. This also demonstrates that the end-to-end learning is superior to DNC, even if that model is already “deep”. AverageBtestBPSNRBidBn 32.5 32 4.4.2 Running time 31.5 Figure 12 shows the running time comparisons of several 31 SRCNNBi9-5-5BtrainedBonB91Bimagesn state-of-the-art methods, along with their restoration DNCBi32.08BdBn BicubicBi30.29BdBn 30.5 performance on Set14. All baseline methods are obtained 2 4 6 8 10 12 NumberBofBbackprops × 10 7 9. The PSNR value of each image can be found in the supplementary file. Fig. 11. The test convergence curve of SRCNN and the result of DNC on the Set5 dataset.

10 . 10 TABLE 2 The average results of PSNR (dB), SSIM, IFC, NQM, WPSNR (dB) and MSSIM on the Set5 dataset. Eval. Mat Scale Bicubic SC [50] NE+LLE [4] KK [25] ANR [41] A+ [41] SRCNN 2 33.66 - 35.77 36.20 35.83 36.54 36.66 PSNR 3 30.39 31.42 31.84 32.28 31.92 32.59 32.75 4 28.42 - 29.61 30.03 29.69 30.28 30.49 2 0.9299 - 0.9490 0.9511 0.9499 0.9544 0.9542 SSIM 3 0.8682 0.8821 0.8956 0.9033 0.8968 0.9088 0.9090 4 0.8104 - 0.8402 0.8541 0.8419 0.8603 0.8628 2 6.10 - 7.84 6.87 8.09 8.48 8.05 IFC 3 3.52 3.16 4.40 4.14 4.52 4.84 4.58 4 2.35 - 2.94 2.81 3.02 3.26 3.01 2 36.73 - 42.90 39.49 43.28 44.58 41.13 NQM 3 27.54 27.29 32.77 32.10 33.10 34.48 33.21 4 21.42 - 25.56 24.99 25.72 26.97 25.96 2 50.06 - 58.45 57.15 58.61 60.06 59.49 WPSNR 3 41.65 43.64 45.81 46.22 46.02 47.17 47.10 4 37.21 - 39.85 40.40 40.01 41.03 41.13 2 0.9915 - 0.9953 0.9953 0.9954 0.9960 0.9959 MSSSIM 3 0.9754 0.9797 0.9841 0.9853 0.9844 0.9867 0.9866 4 0.9516 - 0.9666 0.9695 0.9672 0.9720 0.9725 TABLE 3 The average results of PSNR (dB), SSIM, IFC, NQM, WPSNR (dB) and MSSIM on the Set14 dataset. Eval. Mat Scale Bicubic SC [50] NE+LLE [4] KK [25] ANR [41] A+ [41] SRCNN 2 30.23 - 31.76 32.11 31.80 32.28 32.45 PSNR 3 27.54 28.31 28.60 28.94 28.65 29.13 29.30 4 26.00 - 26.81 27.14 26.85 27.32 27.50 2 0.8687 - 0.8993 0.9026 0.9004 0.9056 0.9067 SSIM 3 0.7736 0.7954 0.8076 0.8132 0.8093 0.8188 0.8215 4 0.7019 - 0.7331 0.7419 0.7352 0.7491 0.7513 2 6.09 - 7.59 6.83 7.81 8.11 7.76 IFC 3 3.41 2.98 4.14 3.83 4.23 4.45 4.26 4 2.23 - 2.71 2.57 2.78 2.94 2.74 2 40.98 - 41.34 38.86 41.79 42.61 38.95 NQM 3 33.15 29.06 37.12 35.23 37.22 38.24 35.25 4 26.15 - 31.17 29.18 31.27 32.31 30.46 2 47.64 - 54.47 53.85 54.57 55.62 55.39 WPSNR 3 39.72 41.66 43.22 43.56 43.36 44.25 44.32 4 35.71 - 37.75 38.26 37.85 38.72 38.87 2 0.9813 - 0.9886 0.9890 0.9888 0.9896 0.9897 MSSSIM 3 0.9512 0.9595 0.9643 0.9653 0.9647 0.9669 0.9675 4 0.9134 - 0.9317 0.9338 0.9326 0.9371 0.9376 TABLE 4 The average results of PSNR (dB), SSIM, IFC, NQM, WPSNR (dB) and MSSIM on the BSD200 dataset. Eval. Mat Scale Bicubic SC [50] NE+LLE [4] KK [25] ANR [41] A+ [41] SRCNN 2 28.38 - 29.67 30.02 29.72 30.14 30.29 PSNR 3 25.94 26.54 26.67 26.89 26.72 27.05 27.18 4 24.65 - 25.21 25.38 25.25 25.51 25.60 2 0.8524 - 0.8886 0.8935 0.8900 0.8966 0.8977 SSIM 3 0.7469 0.7729 0.7823 0.7881 0.7843 0.7945 0.7971 4 0.6727 - 0.7037 0.7093 0.7060 0.7171 0.7184 2 5.30 - 7.10 6.33 7.28 7.51 7.21 IFC 3 3.05 2.77 3.82 3.52 3.91 4.07 3.91 4 1.95 - 2.45 2.24 2.51 2.62 2.45 2 36.84 - 41.52 38.54 41.72 42.37 39.66 NQM 3 28.45 28.22 34.65 33.45 34.81 35.58 34.72 4 21.72 - 25.15 24.87 25.27 26.01 25.65 2 46.15 - 52.56 52.21 52.69 53.56 53.58 WPSNR 3 38.60 40.48 41.39 41.62 41.53 42.19 42.29 4 34.86 - 36.52 36.80 36.64 37.18 37.24 2 0.9780 - 0.9869 0.9876 0.9872 0.9883 0.9883 MSSSIM 3 0.9426 0.9533 0.9575 0.9588 0.9581 0.9609 0.9614 4 0.9005 - 0.9203 0.9215 0.9214 0.9256 0.9261

11 . 11 29.4 SRCNN(9-5-5) SRCNN(9-3-5) 29.2 SRCNN(9-1-5) A+ 29 KK PSNR (dB) 28.8 (a) First-layer filters – Cb channel ANR 28.6 NE+LLE 28.4 SC 28.2 2 1 0 10 10 10 Slower <—— Running time (sec) ——> Faster Fig. 12. The proposed SRCNN achieves the state- (b) First-layer filters – Cr channel of-the-art super-resolution quality, whilst maintains high Fig. 13. Chrominance channels of the first-layer filters and competitive speed in comparison to existing external using the “Y pre-train” strategy. example-based methods. The chart is based on Set14 results summarized in Table 3. The implementation of all in RGB color space). This suggests that the Cb, Cr three SRCNN networks are available on our project page. channels could decrease the performance of the Y chan- TABLE 5 nel when training is performed in a unified network. Average PSNR (dB) of different channels and training (iii) We observe that the Cb, Cr channels have higher strategies on the Set5 dataset. PSNR values for “Y pre-train” than for “CbCr pre-train”. The reason lies on the differences between the Cb, Cr Training PSNR of different channel(s) channels and the Y channel. Visually, the Cb, Cr channels Strategies Y Cb Cr RGB color image Bicubic 30.39 45.44 45.42 34.57 are more blurry than the Y channel, thus are less affected Y only 32.39 45.44 45.42 36.37 by the downsampling process. When we pre-train on YCbCr 29.25 43.30 43.49 33.47 the Cb, Cr channels, there are only a few filters being Y pre-train 32.19 46.49 46.45 36.32 CbCr pre-train 32.14 46.38 45.84 36.25 activated. Then the training will soon fall into a bad RGB 32.33 46.18 46.20 36.44 local minimum during fine-tuning. On the other hand, KK 32.37 44.35 44.22 36.32 if we pre-train on the Y channel, more filters will be activated, and the performance on Cb, Cr channels will be pushed much higher. Figure 13 shows the Cb, Cr effectiveness of SRCNN on different scales, here we only channels of the first-layer filters with “Y pre-train”, of evaluate the performance of upscaling factor 3. which the patterns largely differ from that shown in Comparisons. We compare our method with the state- Figure 5. (iv) Training on the RGB channels achieves of-art color SR method – KK [25]. We also try different the best result on the color image. Different from the learning strategies for comparison: YCbCr channels, the RGB channels exhibit high cross- • Y only: this is our baseline method, which is a correlation among each other. The proposed SRCNN single-channel (c = 1) network trained only on is capable of leveraging such natural correspondences the luminance channel. The Cb, Cr channels are between the channels for reconstruction. Therefore, the upscaled using bicubic interpolation. model achieves comparable result on the Y channel as • YCbCr: training is performed on the three channels “Y only”, and better results on Cb, Cr channels than of the YCbCr space. bicubic interpolation. (v) In KK [25], super-resolution • Y pre-train: first, to guarantee the performance on is applied on each RGB channel separately. When we the Y channel, we only use the MSE of the Y channel transform its results to YCbCr space, the PSNR value as the loss to pre-train the network. Then we employ of Y channel is similar as “Y only”, but that of Cb, Cr the MSE of all channels to fine-tune the parameters. channels are poorer than bicubic interpolation. The result • CbCr pre-train: we use the MSE of the Cb, Cr suggests that the algorithm is biased to the Y channel. channels as the loss to pre-train the network, then On the whole, our method trained on RGB channels fine-tune the parameters on all channels. achieves better performance than KK and the single- • RGB: training is performed on the three channels of channel network (“Y only”). It is also worth noting that the RGB space. the improvement compared with the single-channel net- The results are shown in Table 5, where we have the work is not that significant (i.e., 0.07 dB). This indicates following observations. (i) If we directly train on the that the Cb, Cr channels barely help in improving the YCbCr channels, the results are even worse than that of performance. bicubic interpolation. The training falls into a bad local minimum, due to the inherently different characteristics of the Y and Cb, Cr channels. (ii) If we pre-train on the 5 C ONCLUSION Y or Cb, Cr channels, the performance finally improves, We have presented a novel deep learning approach but is still not better than “Y only” on the color image for single image super-resolution (SR). We show that (see the last column of Table 5, where PSNR is computed conventional sparse-coding-based SR methods can be

12 . 12 reformulated into a deep convolutional neural network. [19] Huang, J.B., Singh, A., Ahuja, N.: Single image super-resolution The proposed approach, SRCNN, learns an end-to-end from transformed self-exemplars. In: IEEE Conference on Com- puter Vision and Pattern Recognition. pp. 5197–5206 (2015) mapping between low- and high-resolution images, with [20] Irani, M., Peleg, S.: Improving resolution by image registration. little extra pre/post-processing beyond the optimization. Graphical Models and Image Processing 53(3), 231–239 (1991) With a lightweight structure, the SRCNN has achieved [21] Jaderberg, M., Vedaldi, A., Zisserman, A.: Speeding up convo- lutional neural networks with low rank expansions. In: British superior performance than the state-of-the-art methods. Machine Vision Conference (2014) We conjecture that additional performance can be further [22] Jain, V., Seung, S.: Natural image denoising with convolutional gained by exploring more filters and different training networks. In: Advances in Neural Information Processing Sys- tems. pp. 769–776 (2008) strategies. Besides, the proposed structure, with its ad- [23] Jia, K., Wang, X., Tang, X.: Image transformation based on learning vantages of simplicity and robustness, could be applied dictionaries across image spaces. IEEE Transactions on Pattern to other low-level vision problems, such as image de- Analysis and Machine Intelligence 35(2), 367–380 (2013) [24] Jia, Y., Shelhamer, E., Donahue, J., Karayev, S., Long, J., Girshick, blurring or simultaneous SR+denoising. One could also R., Guadarrama, S., Darrell, T.: Caffe: Convolutional architecture investigate a network to cope with different upscaling for fast feature embedding. In: ACM Multimedia. pp. 675–678 factors. (2014) [25] Kim, K.I., Kwon, Y.: Single-image super-resolution using sparse regression and natural image prior. IEEE Transactions on Pattern R EFERENCES Analysis and Machine Intelligence 32(6), 1127–1133 (2010) [26] Krizhevsky, A., Sutskever, I., Hinton, G.: ImageNet classification [1] Aharon, M., Elad, M., Bruckstein, A.: K-SVD: An algorithm for with deep convolutional neural networks. In: Advances in Neural designing overcomplete dictionaries for sparse representation. Information Processing Systems. pp. 1097–1105 (2012) IEEE Transactions on Signal Processing 54(11), 4311–4322 (2006) [27] LeCun, Y., Boser, B., Denker, J.S., Henderson, D., Howard, R.E., [2] Bevilacqua, M., Roumy, A., Guillemot, C., Morel, M.L.A.: Low- Hubbard, W., Jackel, L.D.: Backpropagation applied to handwrit- complexity single-image super-resolution based on nonnegative ten zip code recognition. Neural computation pp. 541–551 (1989) neighbor embedding. In: British Machine Vision Conference [28] LeCun, Y., Bottou, L., Bengio, Y., Haffner, P.: Gradient-based (2012) learning applied to document recognition. Proceedings of the [3] Burger, H.C., Schuler, C.J., Harmeling, S.: Image denoising: Can IEEE 86(11), 2278–2324 (1998) plain neural networks compete with BM3D? In: IEEE Conference [29] Lee, H., Battle, A., Raina, R., Ng, A.Y.: Efficient sparse coding algo- on Computer Vision and Pattern Recognition. pp. 2392–2399 rithms. In: Advances in Neural Information Processing Systems. (2012) pp. 801–808 (2006) [4] Chang, H., Yeung, D.Y., Xiong, Y.: Super-resolution through neigh- [30] Liu, C., Shum, H.Y., Freeman, W.T.: Face hallucination: Theory bor embedding. In: IEEE Conference on Computer Vision and and practice. International Journal of Computer Vision 75(1), 115– Pattern Recognition (2004) 134 (2007) [5] Cui, Z., Chang, H., Shan, S., Zhong, B., Chen, X.: Deep network [31] Mamalet, F., Garcia, C.: Simplifying convnets for fast learning. cascade for image super-resolution. In: European Conference on In: International Conference on Artificial Neural Networks, pp. Computer Vision, pp. 49–64 (2014) 58–65. Springer (2012) [6] Dai, D., Timofte, R., Van Gool, L.: Jointly optimized regressors for image super-resolution. In: Eurographics. vol. 7, p. 8 (2015) [32] Martin, D., Fowlkes, C., Tal, D., Malik, J.: A database of human [7] Dai, S., Han, M., Xu, W., Wu, Y., Gong, Y., Katsaggelos, A.K.: segmented natural images and its application to evaluating seg- Softcuts: a soft edge smoothness prior for color image super- mentation algorithms and measuring ecological statistics. In: IEEE resolution. IEEE Transactions on Image Processing 18(5), 969–981 International Conference on Computer Vision. vol. 2, pp. 416–423 (2009) (2001) [8] Damera-Venkata, N., Kite, T.D., Geisler, W.S., Evans, B.L., Bovik, [33] Nair, V., Hinton, G.E.: Rectified linear units improve restricted A.C.: Image quality assessment based on a degradation model. Boltzmann machines. In: International Conference on Machine IEEE Transactions on Image Processing 9(4), 636–650 (2000) Learning. pp. 807–814 (2010) [9] Deng, J., Dong, W., Socher, R., Li, L.J., Li, K., Fei-Fei, L.: ImageNet: [34] Ouyang, W., Luo, P., Zeng, X., Qiu, S., Tian, Y., Li, H., Yang, A large-scale hierarchical image database. In: IEEE Conference on S., Wang, Z., Xiong, Y., Qian, C., et al.: Deepid-net: multi-stage Computer Vision and Pattern Recognition. pp. 248–255 (2009) and deformable deep convolutional neural networks for object [10] Denton, E., Zaremba, W., Bruna, J., LeCun, Y., Fergus, R.: Exploit- detection. arXiv preprint arXiv:1409.3505 (2014) ing linear structure within convolutional networks for efficient [35] Ouyang, W., Wang, X.: Joint deep learning for pedestrian detec- evaluation. In: Advances in Neural Information Processing Sys- tion. In: IEEE International Conference on Computer Vision. pp. tems (2014) 2056–2063 (2013) [11] Dong, C., Loy, C.C., He, K., Tang, X.: Learning a deep convolu- [36] Schuler, C.J., Burger, H.C., Harmeling, S., Scholkopf, B.: A ma- tional network for image super-resolution. In: European Confer- chine learning approach for non-blind image deconvolution. In: ence on Computer Vision, pp. 184–199 (2014) IEEE Conference on Computer Vision and Pattern Recognition. [12] Eigen, D., Krishnan, D., Fergus, R.: Restoring an image taken pp. 1067–1074 (2013) through a window covered with dirt or rain. In: IEEE Interna- [37] Schulter, S., Leistner, C., Bischof, H.: Fast and accurate image tional Conference on Computer Vision. pp. 633–640 (2013) upscaling with super-resolution forests. In: IEEE Conference on [13] Freedman, G., Fattal, R.: Image and video upscaling from local Computer Vision and Pattern Recognition. pp. 3791–3799 (2015) self-examples. ACM Transactions on Graphics 30(2), 12 (2011) [38] Sheikh, H.R., Bovik, A.C., De Veciana, G.: An information fidelity [14] Freeman, W.T., Jones, T.R., Pasztor, E.C.: Example-based super- criterion for image quality assessment using natural scene statis- resolution. Computer Graphics and Applications 22(2), 56–65 tics. IEEE Transactions on Image Processing 14(12), 2117–2128 (2002) (2005) [15] Freeman, W.T., Pasztor, E.C., Carmichael, O.T.: Learning low- [39] Sun, Y., Chen, Y., Wang, X., Tang, X.: Deep learning face represen- level vision. International Journal of Computer Vision 40(1), 25–47 tation by joint identification-verification. In: Advances in Neural (2000) Information Processing Systems. pp. 1988–1996 (2014) [16] Glasner, D., Bagon, S., Irani, M.: Super-resolution from a single [40] Szegedy, C., Reed, S., Erhan, D., Anguelov, D.: Scalable, high- image. In: IEEE International Conference on Computer Vision. pp. quality object detection. arXiv preprint arXiv:1412.1441 (2014) 349–356 (2009) [41] Timofte, R., De Smet, V., Van Gool, L.: Anchored neighborhood [17] He, K., Sun, J.: Convolutional neural networks at constrained time regression for fast example-based super-resolution. In: IEEE In- cost. arXiv preprint arXiv:1412.1710 (2014) ternational Conference on Computer Vision. pp. 1920–1927 (2013) [18] He, K., Zhang, X., Ren, S., Sun, J.: Spatial pyramid pooling in [42] Timofte, R., De Smet, V., Van Gool, L.: A+: Adjusted anchored deep convolutional networks for visual recognition. In: European neighborhood regression for fast super-resolution. In: IEEE Asian Conference on Computer Vision, pp. 346–361 (2014) Conference on Computer Vision (2014)

13 . 13 [43] Wang, Z., Bovik, A.C., Sheikh, H.R., Simoncelli, E.P.: Image quality Xiaoou Tang (S93-M96-SM02-F09) received assessment: from error visibility to structural similarity. IEEE the BS degree from the University of Science Transactions on Image Processing 13(4), 600–612 (2004) and Technology of China, Hefei, in 1990, the MS [44] Wang, Z., Simoncelli, E.P., Bovik, A.C.: Multiscale structural sim- degree from the University of Rochester, New ilarity for image quality assessment. In: IEEE Conference Record York, in 1991, and the PhD degree from the Mas- of the Thirty-Seventh Asilomar Conference on Signals, Systems sachusetts Institute of Technology, Cambridge, and Computers. vol. 2, pp. 1398–1402 (2003) in 1996. He is a professor in the Department of [45] Yang, C.Y., Huang, J.B., Yang, M.H.: Exploiting self-similarities Information Engineering and an associate dean for single frame super-resolution. In: IEEE Asian Conference on (Research) of the Faculty of Engineering of the Computer Vision, pp. 497–510 (2010) Chinese University of Hong Kong. He worked [46] Yang, C.Y., Ma, C., Yang, M.H.: Single-image super-resolution: A as the group manager of the Visual Computing benchmark. In: European Conference on Computer Vision, pp. Group at the Microsoft Research Asia, from 2005 to 2008. His research 372–386 (2014) interests include computer vision, pattern recognition, and video pro- [47] Yang, J., Lin, Z., Cohen, S.: Fast image super-resolution based on cessing. He received the Best Paper Award at the IEEE Conference in-place example regression. In: IEEE Conference on Computer on Computer Vision and Pattern Recognition (CVPR) 2009. He was a Vision and Pattern Recognition. pp. 1059–1066 (2013) program chair of the IEEE International Conference on Computer Vision [48] Yang, J., Wang, Z., Lin, Z., Cohen, S., Huang, T.: Coupled dic- (ICCV) 2009 and he is an associate editor of the IEEE Transactions on tionary training for image super-resolution. IEEE Transactions on Pattern Analysis and Machine Intelligence and the International Journal Image Processing 21(8), 3467–3478 (2012) of Computer Vision. He is a fellow of the IEEE. [49] Yang, J., Wright, J., Huang, T., Ma, Y.: Image super-resolution as sparse representation of raw image patches. In: IEEE Conference on Computer Vision and Pattern Recognition. pp. 1–8 (2008) [50] Yang, J., Wright, J., Huang, T.S., Ma, Y.: Image super-resolution via sparse representation. IEEE Transactions on Image Processing 19(11), 2861–2873 (2010) [51] Zeyde, R., Elad, M., Protter, M.: On single image scale-up us- ing sparse-representations. In: Curves and Surfaces, pp. 711–730 (2012) [52] Zhang, N., Donahue, J., Girshick, R., Darrell, T.: Part-based R- CNNs for fine-grained category detection. In: European Confer- ence on Computer Vision. pp. 834–849 (2014) Chao Dong received the BS degree in Informa- tion Engineering from Beijing Institute of Tech- nology, China, in 2011. He is currently working toward the PhD degree in the Department of Information Engineering at the Chinese Univer- sity of Hong Kong. His research interests include image super-resolution and denoising. Chen Change Loy received the PhD degree in Computer Science from the Queen Mary Uni- versity of London in 2010. He is currently a Research Assistant Professor in the Department of Information Engineering, Chinese University of Hong Kong. Previously he was a postdoc- toral researcher at Vision Semantics Ltd. His research interests include computer vision and pattern recognition, with focus on face analysis, deep learning, and visual surveillance. Kaiming He received the BS degree from Ts- inghua University in 2007, and the PhD degree from the Chinese University of Hong Kong in 2011. He is a researcher at Microsoft Research Asia (MSRA). He joined Microsoft Research Asia in 2011. His research interests include computer vision and computer graphics. He has won the Best Paper Award at the IEEE Confer- ence on Computer Vision and Pattern Recogni- tion (CVPR) 2009. He is a member of the IEEE.

14 . 14 OriginalK/KPSNR BicubicK/K24.04KdB SCK/K25.58KdB NE+LLEK/K25.75KdB KKK/K27.31KdB ANRK/K25.90KdB A+K/K27.24KdB SRCNNK/K27.95KdB Fig. 14. The “butterfly” image from Set5 with an upscaling factor 3. Original+/+PSNR Bicubic+/+23.71+dB SC+/+24.98+dB NE+LLE+/+24.94+dB KK+/+25.60+dB ANR+/+25.03+dB A++/+26.09+dB SRCNN+/+27.04+dB Fig. 15. The “ppt3” image from Set14 with an upscaling factor 3. OriginalL/LPSNR BicubicL/L26.63LdB SCL/L27.95LdB NE+LLEL/L28.31LdB KKL/L28.85LdB ANRL/L28.43LdB A+L/L28.98LdB SRCNNL/L29.29LdB Fig. 16. The “zebra” image from Set14 with an upscaling factor 3.