- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

Dynamic Memory Networks for Visual and Textual Question Answering

展开查看详情

1 . Dynamic Memory Networks for Visual and Textual Question Answering Caiming Xiong*, Stephen Merity*, Richard Socher {CMXIONG , SMERITY, RICHARD}METAMIND . IO MetaMind, Palo Alto, CA USA *indicates equal contribution. Abstract Episodic Memory Episodic Memory Answer Answer Neural network architectures with memory and arXiv:1603.01417v1 [cs.NE] 4 Mar 2016 Attention Memory Kitchen Attention Memory Palm Mechanism Update Mechanism Update attention mechanisms exhibit certain reason- ing capabilities required for question answering. One such architecture, the dynamic memory net- Input Module Question Input Module Question John moved to the garden. Where is the What kind of work (DMN), obtained high accuracy on a vari- John got the apple there. apple? tree is in the background? ety of language tasks. However, it was not shown John moved to the kitchen. whether the architecture achieves strong results Sandra got the milk there. for question answering when supporting facts are John dropped the apple. not marked during training or whether it could John moved to the office. be applied to other modalities such as images. (a) Text Question-Answering (b) Visual Question-Answering Based on an analysis of the DMN, we propose Figure 1. Question Answering over text and images using a Dy- several improvements to its memory and input namic Memory Network. modules. Together with these changes we intro- duce a novel input module for images in order module and memory module, to improve question answer- to be able to answer visual questions. Our new ing. We propose a new input module which uses a two DMN+ model improves the state of the art on level encoder with a sentence reader and input fusion layer both the Visual Question Answering dataset and to allow for information flow between sentences. For the the bAbI-10k text question-answering dataset memory, we propose a modification to gated recurrent units without supporting fact supervision. (GRU) (Chung et al., 2014). The new GRU formulation in- corporates attention gates that are computed using global 1. Introduction knowledge over the facts. Unlike before, the new DMN+ model does not require that supporting facts (i.e. the facts Neural network based methods have made tremendous that are relevant for answering a particular question) are progress in image and text classification (Krizhevsky et al., labeled during training. The model learns to select the im- 2012; Socher et al., 2013b). However, only recently has portant facts from a larger set. progress been made on more complex tasks that require In addition, we introduce a new input module to represent logical reasoning. This success is based in part on the images. This module is compatible with the rest of the addition of memory and attention components to complex DMN architecture and its output is fed into the memory neural networks. For instance, memory networks (Weston module. We show that the changes in the memory module et al., 2015b) are able to reason over several facts written in that improved textual question answering also improve vi- natural language or (subject, relation, object) triplets. At- sual question answering. Both tasks are illustrated in Fig. 1. tention mechanisms have been successful components in both machine translation (Bahdanau et al., 2015; Luong et al., 2015) and image captioning models (Xu et al., 2015). 2. Dynamic Memory Networks The dynamic memory network (Kumar et al., 2015) We begin by outlining the DMN for question answering (DMN) is one example of a neural network model that has and the modules as presented in Kumar et al. (2015). both a memory component and an attention mechanism. The DMN is a general architecture for question answering The DMN yields state of the art results on question answer- (QA). It is composed of modules that allow different as- ing with supporting facts marked during training, sentiment pects such as input representations or memory components analysis, and part-of-speech tagging. to be analyzed and improved independently. The modules, We analyze the DMN components, specifically the input depicted in Fig. 1, are as follows:

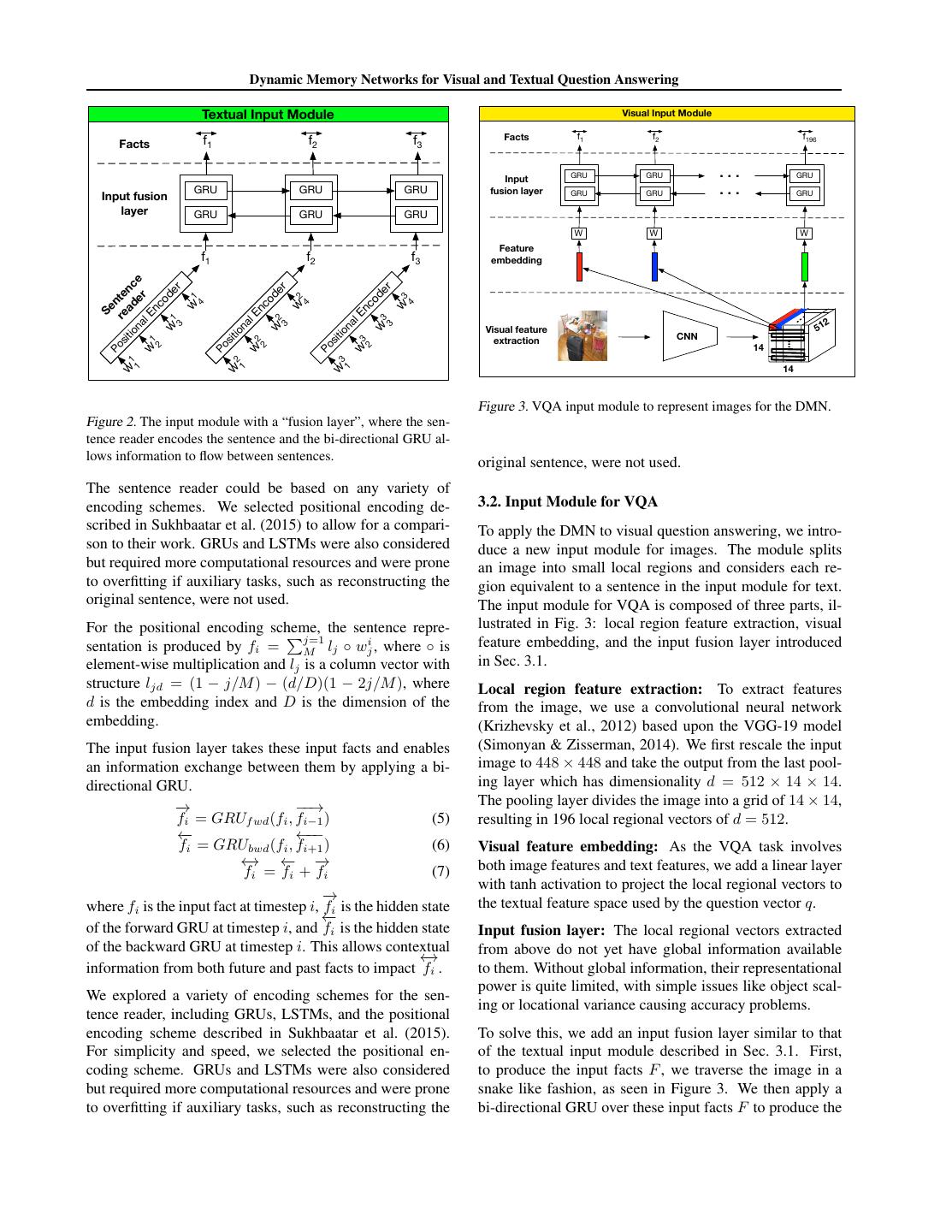

2 . Dynamic Memory Networks for Visual and Textual Question Answering Input Module: This module processes the input data about and backpropagated through the entire network. which a question is being asked into a set of vectors termed facts, represented as F = [f1 , . . . , fN ], where N is the total 3. Improved Dynamic Memory Networks: number of facts. These vectors are ordered, resulting in ad- ditional information that can be used by later components. DMN+ For text QA in Kumar et al. (2015), the module consists of We propose and compare several modeling choices for two a GRU over the input words. crucial components: input representation, attention mecha- As the GRU is used in many components of the DMN, it nism and memory update. The final DMN+ model obtains is useful to provide the full definition. For each time step i the highest accuracy on the bAbI-10k dataset without sup- with input xi and previous hidden state hi−1 , we compute porting facts and the VQA dataset (Antol et al., 2015). Sev- the updated hidden state hi = GRU (xi , hi−1 ) by eral design choices are motivated by intuition and accuracy improvements on that dataset. ui = σ W (u) xi + U (u) hi−1 + b(u) (1) 3.1. Input Module for Text QA ri = σ W (r) xi + U (r) hi−1 + b(r) (2) In the DMN specified in Kumar et al. (2015), a single GRU ˜i h = tanh W xi + ri ◦ U hi−1 + b (h) (3) is used to process all the words in the story, extracting sen- tence representations by storing the hidden states produced hi ˜ i + (1 − ui ) ◦ hi−1 = ui ◦ h (4) at the end of sentence markers. The GRU also provides a temporal component by allowing a sentence to know the where σ is the sigmoid activation function, ◦ is an element- content of the sentences that came before them. Whilst wise product, W (z) , W (r) , W ∈ RnH ×nI , U (z) , U (r) , U ∈ this input module worked well for bAbI-1k with supporting RnH ×nH , nH is the hidden size, and nI is the input size. facts, as reported in Kumar et al. (2015), it did not perform Question Module: This module computes a vector repre- well on bAbI-10k without supporting facts (Sec. 6.1). sentation q of the question, where q ∈ RnH is the final We speculate that there are two main reasons for this per- hidden state of a GRU over the words in the question. formance disparity, all exacerbated by the removal of sup- Episodic Memory Module: Episode memory aims to re- porting facts. First, the GRU only allows sentences to trieve the information required to answer the question q have context from sentences before them, but not after from the input facts. To improve our understanding of them. This prevents information propagation from future both the question and input, especially if questions require sentences. Second, the supporting sentences may be too transitive reasoning, the episode memory module may pass far away from each other on a word level to allow for these over the input multiple times, updating episode memory af- distant sentences to interact through the word level GRU. ter each pass. We refer to the episode memory on the tth Input Fusion Layer pass over the inputs as mt , where mt ∈ RnH , the initial memory vector is set to the question vector: m0 = q. For the DMN+, we propose replacing this single GRU with two different components. The first component is a sen- The episodic memory module consists of two separate tence reader, responsible only for encoding the words into components: the attention mechanism and the memory up- a sentence embedding. The second component is the input date mechanism. The attention mechanism is responsible fusion layer, allowing for interactions between sentences. for producing a contextual vector ct , where ct ∈ RnH This resembles the hierarchical neural auto-encoder archi- is a summary of relevant input for pass t, with relevance tecture of Li et al. (2015) and allows content interaction inferred by the question q and previous episode memory between sentences. We adopt the bi-directional GRU for mt−1 . The memory update mechanism is responsible for this input fusion layer because it allows information from generating the episode memory mt based upon the contex- both past and future sentences to be used. As gradients tual vector ct and previous episode memory mt−1 . By the do not need to propagate through the words between sen- final pass T , the episodic memory mT should contain all tences, the fusion layer also allows for distant supporting the information required to answer the question q. sentences to have a more direct interaction. Answer Module: The answer module receives both q and Fig. 2 shows an illustration of an input module, where a mT to generate the model’s predicted answer. For simple positional encoder is used for the sentence reader and a answers, such as a single word, a linear layer with softmax bi-directional GRU is adopted for the input fusion layer. activation may be used. For tasks requiring a sequence out- Each sentence encoding fi is the output of an encoding put, an RNN may be used to decode a = [q; mT ], the con- scheme taking the word tokens [w1i , . . . , wM i ], where Mi catenation of vectors q and mT , to an ordered set of tokens. i is the length of the sentence. The cross entropy error on the answers is used for training

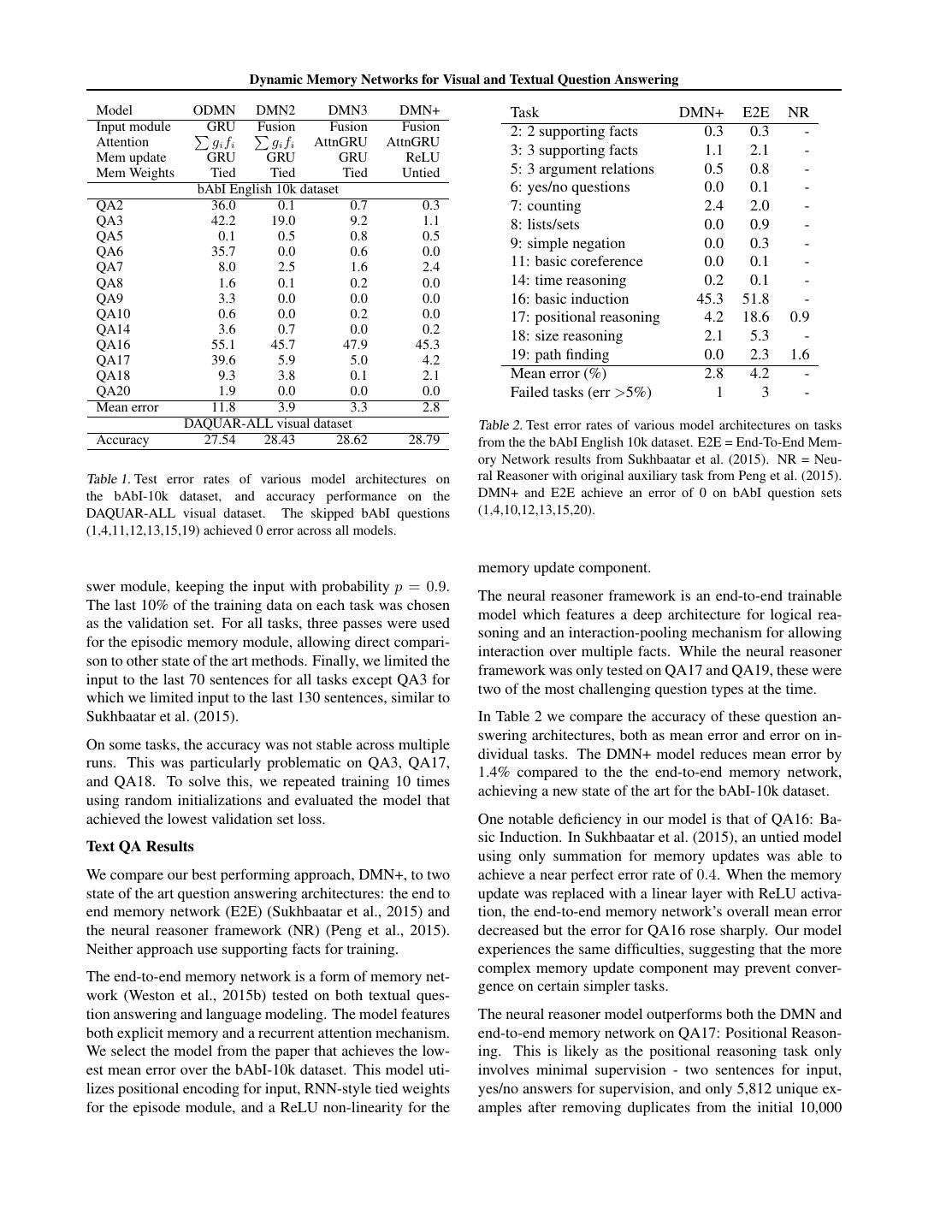

3 . Dynamic Memory Networks for Visual and Textual Question Answering Textual Input Module Visual Input Module Facts f1 f2 f196 Facts f1 f2 f3 Input GRU GRU ... GRU Input fusion GRU GRU GRU fusion layer GRU GRU ... GRU layer GRU GRU GRU W W W Feature f1 f2 f3 embedding na der ce r r r a n w 1 ode w 2 ode w 3 ode re nte w1 w2 w3 4 4 4 nc nc nc Se lE w 2 al E w 3 al E 2 51 3 3 3 Visual feature n n tio tio tio extraction CNN si si si w1 14 Po Po Po 2 2 2 w1 w2 w3 14 1 1 1 Figure 3. VQA input module to represent images for the DMN. Figure 2. The input module with a “fusion layer”, where the sen- tence reader encodes the sentence and the bi-directional GRU al- lows information to flow between sentences. original sentence, were not used. The sentence reader could be based on any variety of encoding schemes. We selected positional encoding de- 3.2. Input Module for VQA scribed in Sukhbaatar et al. (2015) to allow for a compari- To apply the DMN to visual question answering, we intro- son to their work. GRUs and LSTMs were also considered duce a new input module for images. The module splits but required more computational resources and were prone an image into small local regions and considers each re- to overfitting if auxiliary tasks, such as reconstructing the gion equivalent to a sentence in the input module for text. original sentence, were not used. The input module for VQA is composed of three parts, il- For the positional encoding scheme, the sentence repre- lustrated in Fig. 3: local region feature extraction, visual j=1 i feature embedding, and the input fusion layer introduced sentation is produced by fi = M lj ◦ wj , where ◦ is element-wise multiplication and lj is a column vector with in Sec. 3.1. structure ljd = (1 − j/M ) − (d/D)(1 − 2j/M ), where Local region feature extraction: To extract features d is the embedding index and D is the dimension of the from the image, we use a convolutional neural network embedding. (Krizhevsky et al., 2012) based upon the VGG-19 model The input fusion layer takes these input facts and enables (Simonyan & Zisserman, 2014). We first rescale the input an information exchange between them by applying a bi- image to 448 × 448 and take the output from the last pool- directional GRU. ing layer which has dimensionality d = 512 × 14 × 14. → − −−→ The pooling layer divides the image into a grid of 14 × 14, fi = GRUf wd (fi , fi−1 ) (5) resulting in 196 local regional vectors of d = 512. ←− ←−− fi = GRUbwd (fi , fi+1 ) (6) Visual feature embedding: As the VQA task involves ← → ← − → − both image features and text features, we add a linear layer fi = fi + fi (7) with tanh activation to project the local regional vectors to → − the textual feature space used by the question vector q. where fi is the input fact at timestep i, fi is the hidden state ← − of the forward GRU at timestep i, and fi is the hidden state Input fusion layer: The local regional vectors extracted of the backward GRU at timestep i. This allows contextual from above do not yet have global information available ←→ information from both future and past facts to impact fi . to them. Without global information, their representational power is quite limited, with simple issues like object scal- We explored a variety of encoding schemes for the sen- ing or locational variance causing accuracy problems. tence reader, including GRUs, LSTMs, and the positional encoding scheme described in Sukhbaatar et al. (2015). To solve this, we add an input fusion layer similar to that For simplicity and speed, we selected the positional en- of the textual input module described in Sec. 3.1. First, coding scheme. GRUs and LSTMs were also considered to produce the input facts F , we traverse the image in a but required more computational resources and were prone snake like fashion, as seen in Figure 3. We then apply a to overfitting if auxiliary tasks, such as reconstructing the bi-directional GRU over these input facts F to produce the

4 . Dynamic Memory Networks for Visual and Textual Question Answering m2 Episodic Memory Pass 2 Attention Mechanism Memory Update ui git AttnGRU AttnGRU AttnGRU 2 ... ... c hi ˜i h IN hi ˜i h IN ri ri Gate Attention ... OUT OUT (a) (b) ! F m1 Episodic Memory Pass 1 Figure 5. (a) The traditional GRU model, and (b) the proposed Attention Mechanism Memory Update ... attention-based GRU model AttnGRU AttnGRU ... AttnGRU c1 Gate Attention ... Soft attention: Soft attention produces a contextual vec- tor ct through a weighted summation of the sorted list of ←→ m0 vectors F and corresponding attention gates git : ct = Figure 4. The episodic memory module of the DMN+ when using N t→ ← ←→ i=1 gi f i This method has two advantages. First, it is two passes. The F is the output of the input module. easy to compute. Second, if the softmax activation is spiky it can approximate a hard attention function by selecting ←→ only a single fact for the contextual vector whilst still being globally aware input facts F . The bi-directional GRU al- differentiable. However the main disadvantage to soft at- lows for information propagation from neighboring image tention is that the summation process loses both positional patches, capturing spatial information. and ordering information. Whilst multiple attention passes can retrieve some of this information, this is inefficient. 3.3. The Episodic Memory Module Attention based GRU: For more complex queries, we The episodic memory module, as depicted in Fig. 4, re- would like for the attention mechanism to be sensitive to ← → ←→ ←→ ← → trieves information from the input facts F = [ f1 , . . . , fN ] both the position and ordering of the input facts F . An provided to it by focusing attention on a subset of these RNN would be advantageous in this situation except they facts. We implement this attention by associating a sin- cannot make use of the attention gate from Equation 10. ← → gle scalar value, the attention gate git , with each fact f i We propose a modification to the GRU architecture by em- during pass t. This is computed by allowing interactions bedding information from the attention mechanism. The between the fact and both the question representation and update gate ui in Equation 1 decides how much of each di- the episode memory state. mension of the hidden state to retain and how much should ←→ ←→ ←→ ←→ be updated with the transformed input xi from the current zit =[ fi ◦ q; fi ◦ mt−1 ; | fi − q|; | fi − mt−1 |] (8) timestep. As ui is computed using only the current input Zit =W (2) tanh W (1) zit + b(1) + b(2) (9) and the hidden state from previous timesteps, it lacks any knowledge from the question or previous episode memory. exp(Zit ) git = Mi (10) t By replacing the update gate ui in the GRU (Equation 1) k=1 exp(Zk ) with the output of the attention gate git (Equation 10) in ←→ Equation 4, the GRU can now use the attention gate for where fi is the ith fact, mt−1 is the previous episode memory, q is the original question, ◦ is the element-wise updating its internal state. This change is depicted in Fig 5. product, | · | is the element-wise absolute value, and ; rep- ˜ i + (1 − g t ) ◦ hi−1 hi =git ◦ h (11) resents concatenation of the vectors. i The DMN implemented in Kumar et al. (2015) involved An important consideration is that git is a scalar, generated a more complex set of interactions within z, containing using a softmax activation, as opposed to the vector ui ∈ the additional terms [f ; mt−1 ; q; f T W (b) q; f T W (b) mt−1 ]. RnH , generated using a sigmoid activation. This allows After an initial analysis, we found these additional terms us to easily visualize how the attention gates activate over were not required. the input, later shown for visual QA in Fig. 6. Though not explored, replacing the softmax activation in Equation Attention Mechanism 10 with a sigmoid activation would result in git ∈ RnH . Once we have the attention gate git we use an attention To produce the contextual vector ct used for updating the mechanism to extract a contextual vector ct based upon the episodic memory state mt , we use the final hidden state of current focus. We focus on two types of attention: soft at- the attention based GRU. tention and a new attention based GRU. The latter improves Episode Memory Updates performance and is hence the final modeling choice for the DMN+. After each pass through the attention mechanism, we wish

5 . Dynamic Memory Networks for Visual and Textual Question Answering to update the episode memory mt−1 with the newly con- Neural Attention Mechanisms Attention mechanisms al- structed contextual vector ct , producing mt . In the DMN, low neural network models to use a question to selectively a GRU with the initial hidden state set to the question vec- pay attention to specific inputs. They can benefit image tor q is used for this purpose. The episodic memory for classification (Stollenga et al., 2014), generating captions pass t is computed by for images (Xu et al., 2015), among others mentioned be- low, and machine translation (Cho et al., 2014; Bahdanau mt = GRU (ct , mt−1 ) (12) et al., 2015; Luong et al., 2015). Other recent neural ar- chitectures with memory or attention which have proposed include neural Turing machines (Graves et al., 2014), neu- The work of Sukhbaatar et al. (2015) suggests that using ral GPUs (Kaiser & Sutskever, 2015) and stack-augmented different weights for each pass through the episodic mem- RNNs (Joulin & Mikolov, 2015). ory may be advantageous. When the model contains only one set of weights for all episodic passes over the input, it Question Answering in NLP Question answering involv- is referred to as a tied model, as in the “Mem Weights” row ing natural language can be solved in a variety of ways to in Table 1. which we cannot all do justice. If the potential input is a large text corpus, QA becomes a combination of informa- Following the memory update component used in tion retrieval and extraction (Yates et al., 2007). Neural Sukhbaatar et al. (2015) and Peng et al. (2015) we experi- approaches can include reasoning over knowledge bases, ment with using a ReLU layer for the memory update, cal- (Bordes et al., 2012; Socher et al., 2013a) or directly via culating the new episode memory state by sentences for trivia competitions (Iyyer et al., 2014). mt = ReLU W t [mt−1 ; ct ; q] + b (13) Visual Question Answering (VQA) In comparison to QA in NLP, VQA is still a relatively young task that is feasible where ; is the concatenation operator, W t ∈ RnH ×nH , b ∈ only now that objects can be identified with high accuracy. RnH , and nH is the hidden size. The untying of weights The first large scale database with unconstrained questions and using this ReLU formulation for the memory update about images was introduced by Antol et al. (2015). While improves accuracy by another 0.5% as shown in Table 1 in VQA datasets existed before they did not include open- the last column. The final output of the memory network is ended, free-form questions about general images (Geman passed to the answer module as in the original DMN. et al., 2014). Others are were too small to be viable for a deep learning approach (Malinowski & Fritz, 2014). The 4. Related Work only VQA model which also has an attention component is the stacked attention network (Yang et al., 2015). Their The DMN is related to two major lines of recent work: work also uses CNN based features. However, unlike our memory and attention mechanisms. We work on both vi- input fusion layer, they use a single layer neural network sual and textual question answering which have, until now, to map the features of each patch to the dimensionality of been developed in separate communities. the question vector. Hence, the model cannot easily incor- Neural Memory Models The earliest recent work with a porate adjacency of local information in its hidden state. memory component that is applied to language processing A model that also uses neural modules, albeit logically in- is that of memory networks (Weston et al., 2015b) which spired ones, is that by Andreas et al. (2016) who evaluate adds a memory component for question answering over on knowledgebase reasoning and visual question answer- simple facts. They are similar to DMNs in that they also ing. We compare directly to their method on the latter task have input, scoring, attention and response mechanisms. and dataset. However, unlike the DMN their input module computes Related to visual question answering is the task of describ- sentence representations independently and hence cannot ing images with sentences (Kulkarni et al., 2011). Socher easily be used for other tasks such as sequence labeling. et al. (2014) used deep learning methods to map images and Like the original DMN, this memory network requires that sentences into the same space in order to describe images supporting facts are labeled during QA training. End-to- with sentences and to find images that best visualize a sen- end memory networks (Sukhbaatar et al., 2015) do not have tence. This was the first work to map both modalities into this limitation. In contrast to previous memory models a joint space with deep learning methods, but it could only with a variety of different functions for memory attention select an existing sentence to describe an image. Shortly retrieval and representations, DMNs (Kumar et al., 2015) thereafter, recurrent neural networks were used to generate have shown that neural sequence models can be used for often novel sentences based on images (Karpathy & Fei- input representation, attention and response mechanisms. Fei, 2015; Chen & Zitnick, 2014; Fang et al., 2015; Xu Sequence models naturally capture position and temporal- et al., 2015). ity of both the inputs and transitive reasoning steps.

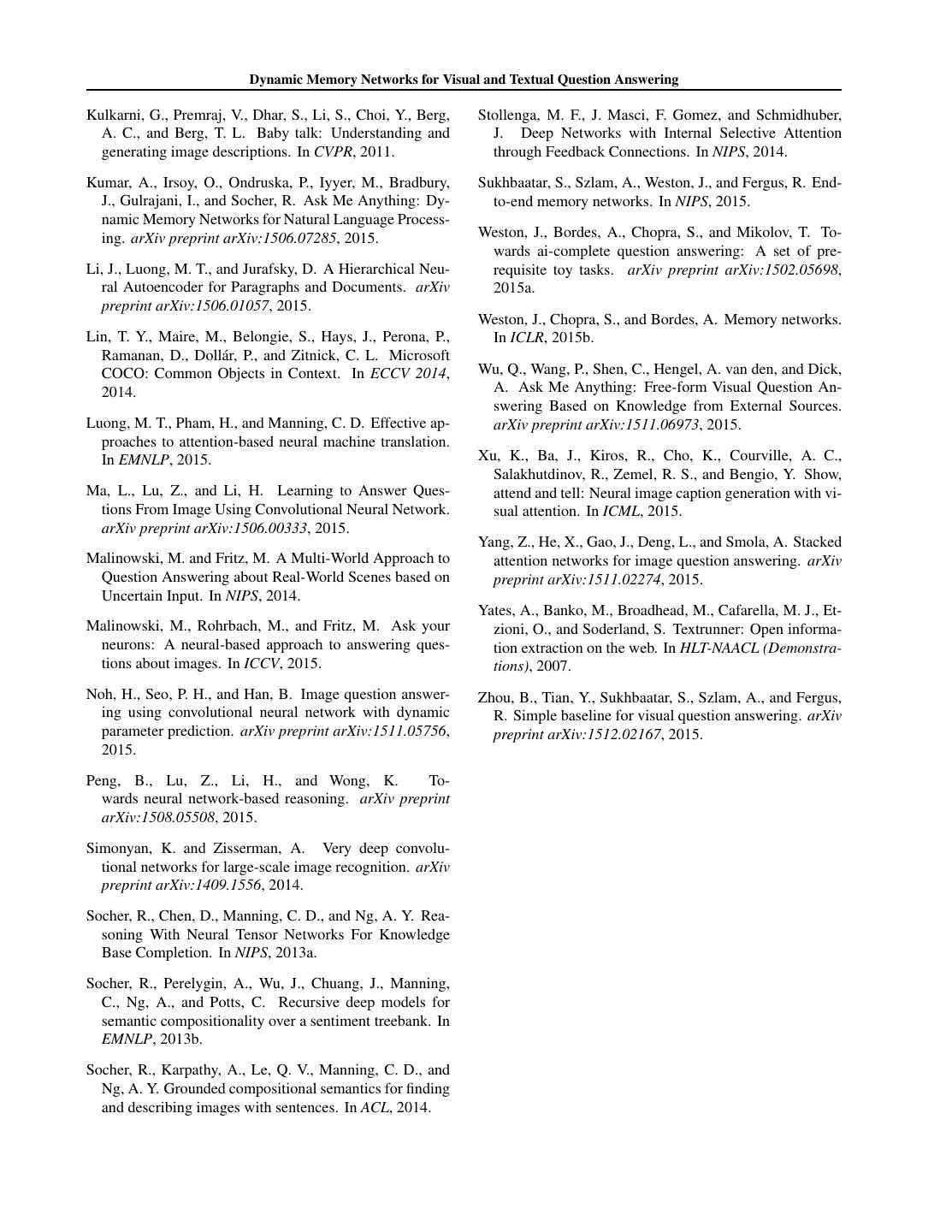

6 . Dynamic Memory Networks for Visual and Textual Question Answering 5. Datasets 6. Experiments To analyze our proposed model changes and compare 6.1. Model Analysis our performance with other architectures, we use three To understand the impact of the proposed module changes, datasets. we analyze the performance of a variety of DMN models on textual and visual question answering datasets. 5.1. bAbI-10k The original DMN (ODMN) is the architecture presented For evaluating the DMN on textual question answering, we in Kumar et al. (2015) without any modifications. DMN2 use bAbI-10k English (Weston et al., 2015a), a synthetic only replaces the input module with the input fusion layer dataset which features 20 different tasks. Each example is (Sec. 3.1). DMN3, based upon DMN2, replaces the soft at- composed of a set of facts, a question, the answer, and the tention mechanism with the attention based GRU proposed supporting facts that lead to the answer. The dataset comes in Sec. 3.3. Finally, DMN+, based upon DMN3, is an un- in two sizes, referring to the number of training examples tied model, using a unique set of weights for each pass and each task has: bAbI-1k and bAbI-10k. The experiments in a linear layer with a ReLU activation to compute the mem- Sukhbaatar et al. (2015) found that their lowest error rates ory update. We report the performance of the model varia- on the smaller bAbI-1k dataset were on average three times tions in Table 1. higher than on bAbI-10k. A large improvement to accuracy on both the bAbI-10k tex- 5.2. DAQUAR-ALL visual dataset tual and DAQUAR visual datasets results from updating the input module, seen when comparing ODMN to DMN2. On The DAtaset for QUestion Answering on Real-world im- both datasets, the input fusion layer improves interaction ages (DAQUAR) (Malinowski & Fritz, 2014) consists of between distant facts. In the visual dataset, this improve- 795 training images and 654 test images. Based upon these ment is purely from providing contextual information from images, 6,795 training questions and 5,673 test questions neighboring image patches, allowing it to handle objects were generated. Following the previously defined experi- of varying scale or questions with a locality aspect. For the mental method, we exclude multiple word answers (Mali- textual dataset, the improved interaction between sentences nowski et al., 2015; Ma et al., 2015). The resulting dataset likely helps the path finding required for logical reasoning covers 90% of the original data. The evaluation method when multiple transitive steps are required. uses classification accuracy over the single words. We use this as a development dataset for model analysis (Sec. 6.1). The addition of the attention GRU in DMN3 helps answer questions where complex positional or ordering informa- 5.3. Visual Question Answering tion may be required. This change impacts the textual dataset the most as few questions in the visual dataset are The Visual Question Answering (VQA) dataset was con- likely to require this form of logical reasoning. Finally, the structed using the Microsoft COCO dataset (Lin et al., untied model in the DMN+ overfits on some tasks com- 2014) which contained 123,287 training/validation images pared to DMN3, but on average the error rate decreases. and 81,434 test images. Each image has several related From these experimental results, we find that the combina- questions with each question answered by multiple people. tion of all the proposed model changes results, culminating This dataset contains 248,349 training questions, 121,512 in DMN+, achieves the highest performance across both validation questions, and 244,302 for testing. The testing the visual and textual datasets. data was split into test-development, test-standard and test- challenge in Antol et al. (2015). 6.2. Comparison to state of the art using bAbI-10k Evaluation on both test-standard and test-challenge are im- plemented via a submission system. test-standard may only We trained our models using the Adam optimizer (Kingma be evaluated 5 times and test-challenge is only evaluated at & Ba, 2014) with a learning rate of 0.001 and batch size of the end of the competition. To the best of our knowledge, 128. Training runs for up to 256 epochs with early stopping VQA is the largest and most complex image dataset for the if the validation loss had not improved within the last 20 visual question answering task. epochs. The model from the epoch with the lowest valida- tion loss was then selected. Xavier initialization was used for all weights except for the word embeddings, √ which √ used random uniform initialization with range [− 3, 3]. Both the embedding and hidden dimensions were of size d = 80. We used 2 regularization on all weights except bias and used dropout on the initial sentence encodings and the an-

7 . Dynamic Memory Networks for Visual and Textual Question Answering Model ODMN DMN2 DMN3 DMN+ Task DMN+ E2E NR Input module GRU Fusion Fusion Fusion 2: 2 supporting facts 0.3 0.3 - Attention gi fi gi fi AttnGRU AttnGRU Mem update GRU GRU GRU ReLU 3: 3 supporting facts 1.1 2.1 - Mem Weights Tied Tied Tied Untied 5: 3 argument relations 0.5 0.8 - bAbI English 10k dataset 6: yes/no questions 0.0 0.1 - QA2 36.0 0.1 0.7 0.3 7: counting 2.4 2.0 - QA3 42.2 19.0 9.2 1.1 8: lists/sets 0.0 0.9 - QA5 0.1 0.5 0.8 0.5 9: simple negation 0.0 0.3 - QA6 35.7 0.0 0.6 0.0 QA7 8.0 2.5 1.6 2.4 11: basic coreference 0.0 0.1 - QA8 1.6 0.1 0.2 0.0 14: time reasoning 0.2 0.1 - QA9 3.3 0.0 0.0 0.0 16: basic induction 45.3 51.8 - QA10 0.6 0.0 0.2 0.0 17: positional reasoning 4.2 18.6 0.9 QA14 3.6 0.7 0.0 0.2 18: size reasoning 2.1 5.3 - QA16 55.1 45.7 47.9 45.3 QA17 39.6 5.9 5.0 4.2 19: path finding 0.0 2.3 1.6 QA18 9.3 3.8 0.1 2.1 Mean error (%) 2.8 4.2 - QA20 1.9 0.0 0.0 0.0 Failed tasks (err >5%) 1 3 - Mean error 11.8 3.9 3.3 2.8 DAQUAR-ALL visual dataset Table 2. Test error rates of various model architectures on tasks Accuracy 27.54 28.43 28.62 28.79 from the the bAbI English 10k dataset. E2E = End-To-End Mem- ory Network results from Sukhbaatar et al. (2015). NR = Neu- Table 1. Test error rates of various model architectures on ral Reasoner with original auxiliary task from Peng et al. (2015). the bAbI-10k dataset, and accuracy performance on the DMN+ and E2E achieve an error of 0 on bAbI question sets DAQUAR-ALL visual dataset. The skipped bAbI questions (1,4,10,12,13,15,20). (1,4,11,12,13,15,19) achieved 0 error across all models. memory update component. swer module, keeping the input with probability p = 0.9. The neural reasoner framework is an end-to-end trainable The last 10% of the training data on each task was chosen model which features a deep architecture for logical rea- as the validation set. For all tasks, three passes were used soning and an interaction-pooling mechanism for allowing for the episodic memory module, allowing direct compari- interaction over multiple facts. While the neural reasoner son to other state of the art methods. Finally, we limited the framework was only tested on QA17 and QA19, these were input to the last 70 sentences for all tasks except QA3 for two of the most challenging question types at the time. which we limited input to the last 130 sentences, similar to Sukhbaatar et al. (2015). In Table 2 we compare the accuracy of these question an- swering architectures, both as mean error and error on in- On some tasks, the accuracy was not stable across multiple dividual tasks. The DMN+ model reduces mean error by runs. This was particularly problematic on QA3, QA17, 1.4% compared to the the end-to-end memory network, and QA18. To solve this, we repeated training 10 times achieving a new state of the art for the bAbI-10k dataset. using random initializations and evaluated the model that achieved the lowest validation set loss. One notable deficiency in our model is that of QA16: Ba- sic Induction. In Sukhbaatar et al. (2015), an untied model Text QA Results using only summation for memory updates was able to We compare our best performing approach, DMN+, to two achieve a near perfect error rate of 0.4. When the memory state of the art question answering architectures: the end to update was replaced with a linear layer with ReLU activa- end memory network (E2E) (Sukhbaatar et al., 2015) and tion, the end-to-end memory network’s overall mean error the neural reasoner framework (NR) (Peng et al., 2015). decreased but the error for QA16 rose sharply. Our model Neither approach use supporting facts for training. experiences the same difficulties, suggesting that the more complex memory update component may prevent conver- The end-to-end memory network is a form of memory net- gence on certain simpler tasks. work (Weston et al., 2015b) tested on both textual ques- tion answering and language modeling. The model features The neural reasoner model outperforms both the DMN and both explicit memory and a recurrent attention mechanism. end-to-end memory network on QA17: Positional Reason- We select the model from the paper that achieves the low- ing. This is likely as the positional reasoning task only est mean error over the bAbI-10k dataset. This model uti- involves minimal supervision - two sentences for input, lizes positional encoding for input, RNN-style tied weights yes/no answers for supervision, and only 5,812 unique ex- for the episode module, and a ReLU non-linearity for the amples after removing duplicates from the initial 10,000

8 . Dynamic Memory Networks for Visual and Textual Question Answering test-dev test-std classes: those that utilize a full connected image feature Method All Y/N Other Num All for classification and those that perform reasoning over VQA multiple small image patches. Only the SAN and DMN Image 28.1 64.0 3.8 0.4 - approach use small image patches, while the rest use the Question 48.1 75.7 27.1 36.7 - fully-connected whole image feature approach. Q+I 52.6 75.6 37.4 33.7 - Here, we show the quantitative and qualitative results in Ta- LSTM Q+I 53.7 78.9 36.4 35.2 54.1 ble 3 and Fig. 6, respectively. The images in Fig. 6 illustrate ACK 55.7 79.2 40.1 36.1 56.0 how the attention gate git selectively activates over relevant iBOWIMG 55.7 76.5 42.6 35.0 55.9 portions of the image according to the query. In Table 3, DPPnet 57.2 80.7 41.7 37.2 57.4 our method outperforms baseline and other state-of-the-art D-NMN 57.9 80.5 43.1 37.4 58.0 methods across all question domains (All) in both test-dev SAN 58.7 79.3 46.1 36.6 58.9 and test-std, and especially for Other questions, achieves a DMN+ 60.3 80.5 48.3 36.8 60.4 wide margin compared to the other architectures, which is Table 3. Performance of various architectures and approaches on likely as the small image patches allow for finely detailed VQA test-dev and test-standard data. VQA numbers are from reasoning over the image. Antol et al. (2015); ACK Wu et al. (2015); iBOWIMG -Zhou However, the granularity offered by small image patches et al. (2015); DPPnet - Noh et al. (2015); D-NMN - Andreas et al. (2016); SAN -Yang et al. (2015) does not always offer an advantage. The Number questions may be not solvable for both the SAN and DMN architec- tures, potentially as counting objects is not a simple task when an object crosses image patch boundaries. training examples. Peng et al. (2015) add an auxiliary task of reconstructing both the original sentences and question from their representations. This auxiliary task likely im- 7. Conclusion proves performance by preventing overfitting. We have proposed new modules for the DMN framework to achieve strong results without supervision of supporting 6.3. Comparison to state of the art using VQA facts. These improvements include the input fusion layer For the VQA dataset, each question is answered by mul- to allow interactions between input facts and a novel at- tiple people and the answers may not be the same, the tention based GRU that allows for logical reasoning over generated answers are evaluated using human consensus. ordered inputs. Our resulting model obtains state of the For each predicted answer ai for the ith question with art results on both the VQA dataset and the bAbI-10k text target answer set T i , the accuracy of VQA: AccV QA = question-answering dataset, proving the framework can be 1 N i 1 min( t∈T 3(ai ==t) , 1) where 1(·) is the indica- generalized across input domains. N i=1 tor function. Simply put, the answer ai is only 100% accu- rate if at least 3 people provide that exact answer. References Training Details We use the Adam optimizer (Kingma & Andreas, J., Rohrbach, M., Darrell, T., and Klein, D. Ba, 2014) with a learning rate of 0.003 and batch size of Learning to Compose Neural Networks for Question An- 100. Training runs for up to 256 epochs with early stop- swering. arXiv preprint arXiv:1601.01705, 2016. ping if the validation loss has not improved in the last 10 epochs. For weight initialization, we sampled from a ran- Antol, S., Agrawal, A., Lu, J., Mitchell, M., Batra, D., Zit- dom uniform distribution with range [−0.08, 0.08]. Both nick, C. L., and Parikh, D. VQA: Visual Question An- the word embedding and hidden layers were vectors of size swering. arXiv preprint arXiv:1505.00468, 2015. d = 512. We apply dropout on the initial image output from the VGG convolutional neural network (Simonyan & Bahdanau, D., Cho, K., and Bengio, Y. Neural machine Zisserman, 2014) as well as the input to the answer module, translation by jointly learning to align and translate. In keeping input with probability p = 0.5. ICLR, 2015. Results and Analysis Bordes, A., Glorot, X., Weston, J., and Bengio, Y. Joint The VQA dataset is composed of three question domains: Learning of Words and Meaning Representations for Yes/No, Number, and Other. This enables us to analyze Open-Text Semantic Parsing. AISTATS, 2012. the performance of the models on various tasks that require Chen, X. and Zitnick, C. L. Learning a recurrent visual rep- different reasoning abilities. resentation for image caption generation. arXiv preprint The comparison models are separated into two broad arXiv:1411.5654, 2014.

9 . Dynamic Memory Networks for Visual and Textual Question Answering What is the main color on Answer: blue What type of trees are in Answer: pine Which man is dressed more Answer: right the bus ? the background ? flamboyantly ? How many pink flags Answer: 2 Is this in the wild ? Answer: no What time of day was this Answer: night are there ? picture taken ? What is this sculpture Answer: metal What color are Answer: green Who is on both photos ? Answer: girl made out of ? the bananas ? What is the pattern on the Answer: stripes Did the player hit Answer: yes What is the boy holding ? Answer: surfboard cat ' s fur on its tail ? the ball ? Figure 6. Examples of qualitative results of attention for VQA. The original images are shown on the left. On the right we show how the attention gate git activates given one pass over the image and query. White regions are the most active. Answers are given by the DMN+. Cho, K., van Merrienboer, B., Gulcehre, C., Bahdanau, D., Iyyer, M., Boyd-Graber, J., Claudino, L., Socher, R., and Bougares, F., Schwenk, H., and Bengio, Y. Learning Daum´e III, H. A Neural Network for Factoid Question Phrase Representations using RNN Encoder-Decoder for Answering over Paragraphs. In EMNLP, 2014. Statistical Machine Translation. In EMNLP, 2014. Joulin, A. and Mikolov, T. Inferring algorithmic patterns Chung, J., Gulcehre, C., Cho, K., and Bengio, Y. Em- with stack-augmented recurrent nets. In NIPS, 2015. pirical evaluation of gated recurrent neural networks on sequence modeling. arXiv preprint arXiv:1412.3555, Kaiser, L. and Sutskever, I. Neural GPUs Learn Algo- 2014. rithms. arXiv preprint arXiv:1511.08228, 2015. Fang, H., Gupta, S., Iandola, F., Srivastava, R., Deng, L., Karpathy, A. and Fei-Fei, L. Deep Visual-Semantic Align- Dollar, P., Gao, J., He, X., Mitchell, M., and Platt, J. ments for Generating Image Descriptions. In CVPR, From captions to visual concepts and back. In CVPR, 2015. 2015. Kingma, Diederik and Ba, Jimmy. Adam: A Geman, D., Geman, S., Hallonquist, N., and Younes, L. method for stochastic optimization. arXiv preprint A Visual Turing Test for Computer Vision Systems. In arXiv:1412.6980, 2014. PNAS, 2014. Krizhevsky, A., Sutskever, I., and Hinton, G. E. Imagenet Graves, A., Wayne, G., and Danihelka, I. Neural turing classification with deep convolutional neural networks. machines. arXiv preprint arXiv:1410.5401, 2014. In NIPS, 2012.

10 . Dynamic Memory Networks for Visual and Textual Question Answering Kulkarni, G., Premraj, V., Dhar, S., Li, S., Choi, Y., Berg, Stollenga, M. F., J. Masci, F. Gomez, and Schmidhuber, A. C., and Berg, T. L. Baby talk: Understanding and J. Deep Networks with Internal Selective Attention generating image descriptions. In CVPR, 2011. through Feedback Connections. In NIPS, 2014. Kumar, A., Irsoy, O., Ondruska, P., Iyyer, M., Bradbury, Sukhbaatar, S., Szlam, A., Weston, J., and Fergus, R. End- J., Gulrajani, I., and Socher, R. Ask Me Anything: Dy- to-end memory networks. In NIPS, 2015. namic Memory Networks for Natural Language Process- ing. arXiv preprint arXiv:1506.07285, 2015. Weston, J., Bordes, A., Chopra, S., and Mikolov, T. To- wards ai-complete question answering: A set of pre- Li, J., Luong, M. T., and Jurafsky, D. A Hierarchical Neu- requisite toy tasks. arXiv preprint arXiv:1502.05698, ral Autoencoder for Paragraphs and Documents. arXiv 2015a. preprint arXiv:1506.01057, 2015. Weston, J., Chopra, S., and Bordes, A. Memory networks. Lin, T. Y., Maire, M., Belongie, S., Hays, J., Perona, P., In ICLR, 2015b. Ramanan, D., Doll´ar, P., and Zitnick, C. L. Microsoft COCO: Common Objects in Context. In ECCV 2014, Wu, Q., Wang, P., Shen, C., Hengel, A. van den, and Dick, 2014. A. Ask Me Anything: Free-form Visual Question An- swering Based on Knowledge from External Sources. Luong, M. T., Pham, H., and Manning, C. D. Effective ap- arXiv preprint arXiv:1511.06973, 2015. proaches to attention-based neural machine translation. In EMNLP, 2015. Xu, K., Ba, J., Kiros, R., Cho, K., Courville, A. C., Salakhutdinov, R., Zemel, R. S., and Bengio, Y. Show, Ma, L., Lu, Z., and Li, H. Learning to Answer Ques- attend and tell: Neural image caption generation with vi- tions From Image Using Convolutional Neural Network. sual attention. In ICML, 2015. arXiv preprint arXiv:1506.00333, 2015. Yang, Z., He, X., Gao, J., Deng, L., and Smola, A. Stacked Malinowski, M. and Fritz, M. A Multi-World Approach to attention networks for image question answering. arXiv Question Answering about Real-World Scenes based on preprint arXiv:1511.02274, 2015. Uncertain Input. In NIPS, 2014. Yates, A., Banko, M., Broadhead, M., Cafarella, M. J., Et- Malinowski, M., Rohrbach, M., and Fritz, M. Ask your zioni, O., and Soderland, S. Textrunner: Open informa- neurons: A neural-based approach to answering ques- tion extraction on the web. In HLT-NAACL (Demonstra- tions about images. In ICCV, 2015. tions), 2007. Noh, H., Seo, P. H., and Han, B. Image question answer- Zhou, B., Tian, Y., Sukhbaatar, S., Szlam, A., and Fergus, ing using convolutional neural network with dynamic R. Simple baseline for visual question answering. arXiv parameter prediction. arXiv preprint arXiv:1511.05756, preprint arXiv:1512.02167, 2015. 2015. Peng, B., Lu, Z., Li, H., and Wong, K. To- wards neural network-based reasoning. arXiv preprint arXiv:1508.05508, 2015. Simonyan, K. and Zisserman, A. Very deep convolu- tional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556, 2014. Socher, R., Chen, D., Manning, C. D., and Ng, A. Y. Rea- soning With Neural Tensor Networks For Knowledge Base Completion. In NIPS, 2013a. Socher, R., Perelygin, A., Wu, J., Chuang, J., Manning, C., Ng, A., and Potts, C. Recursive deep models for semantic compositionality over a sentiment treebank. In EMNLP, 2013b. Socher, R., Karpathy, A., Le, Q. V., Manning, C. D., and Ng, A. Y. Grounded compositional semantics for finding and describing images with sentences. In ACL, 2014.