13_ RNN II

12 .

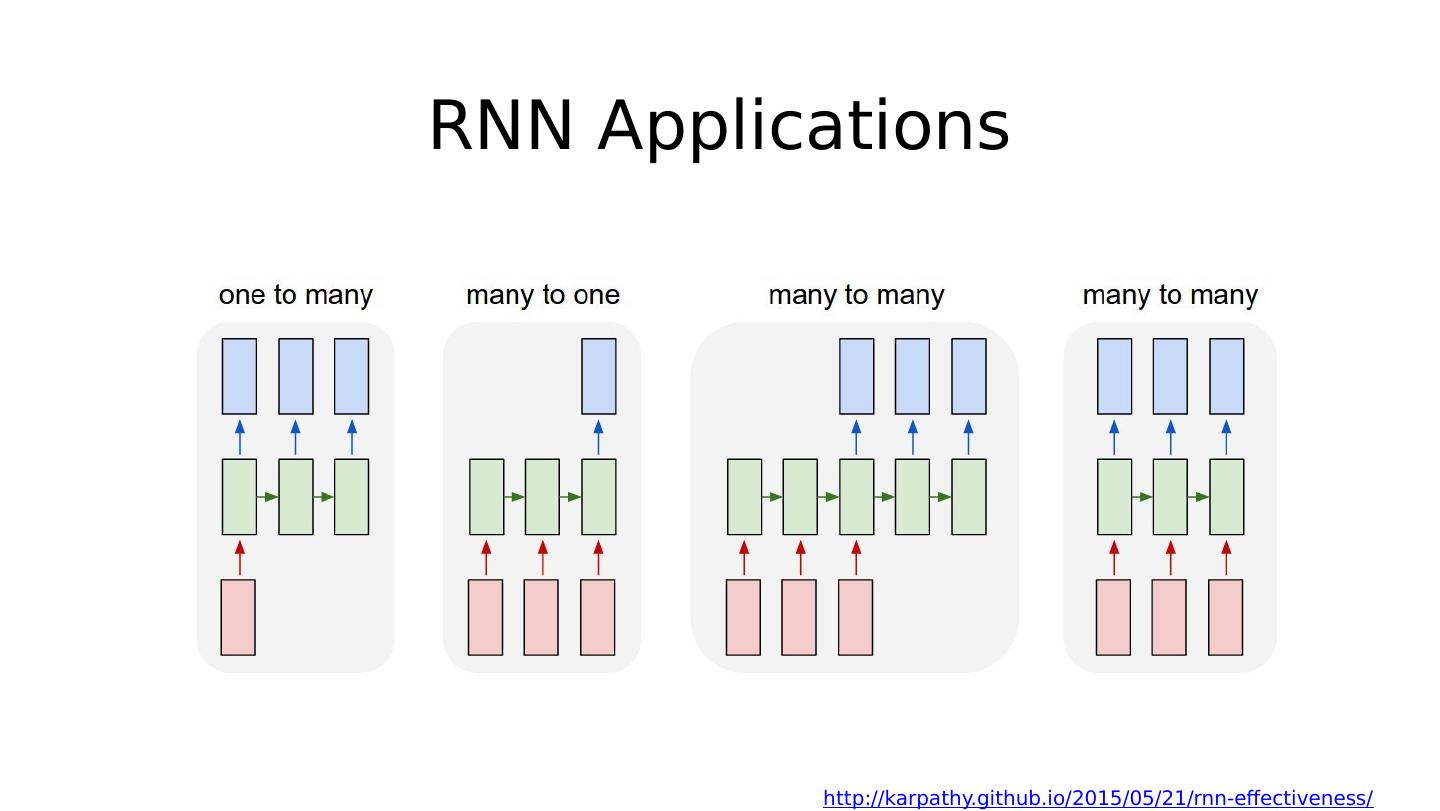

14 .

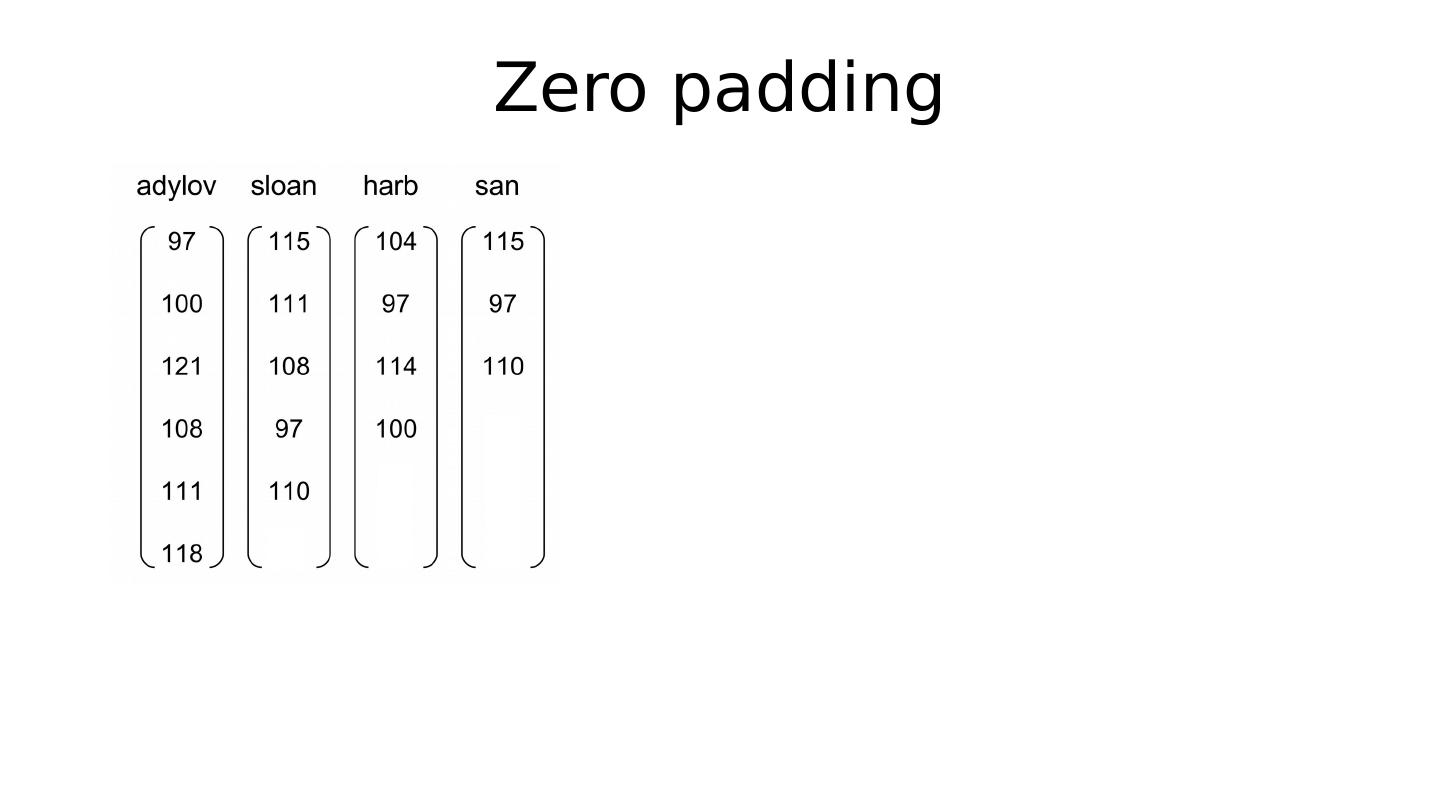

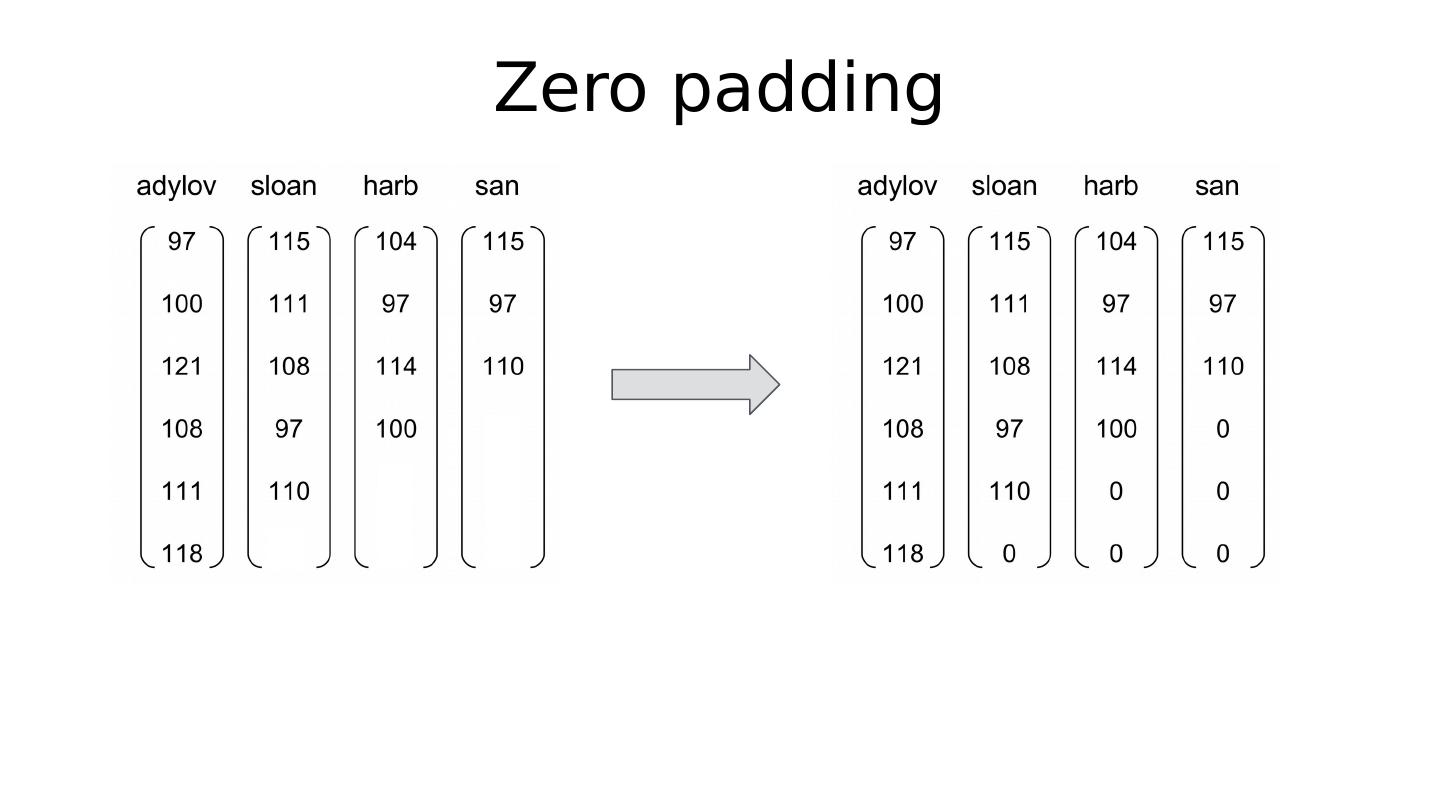

15 .

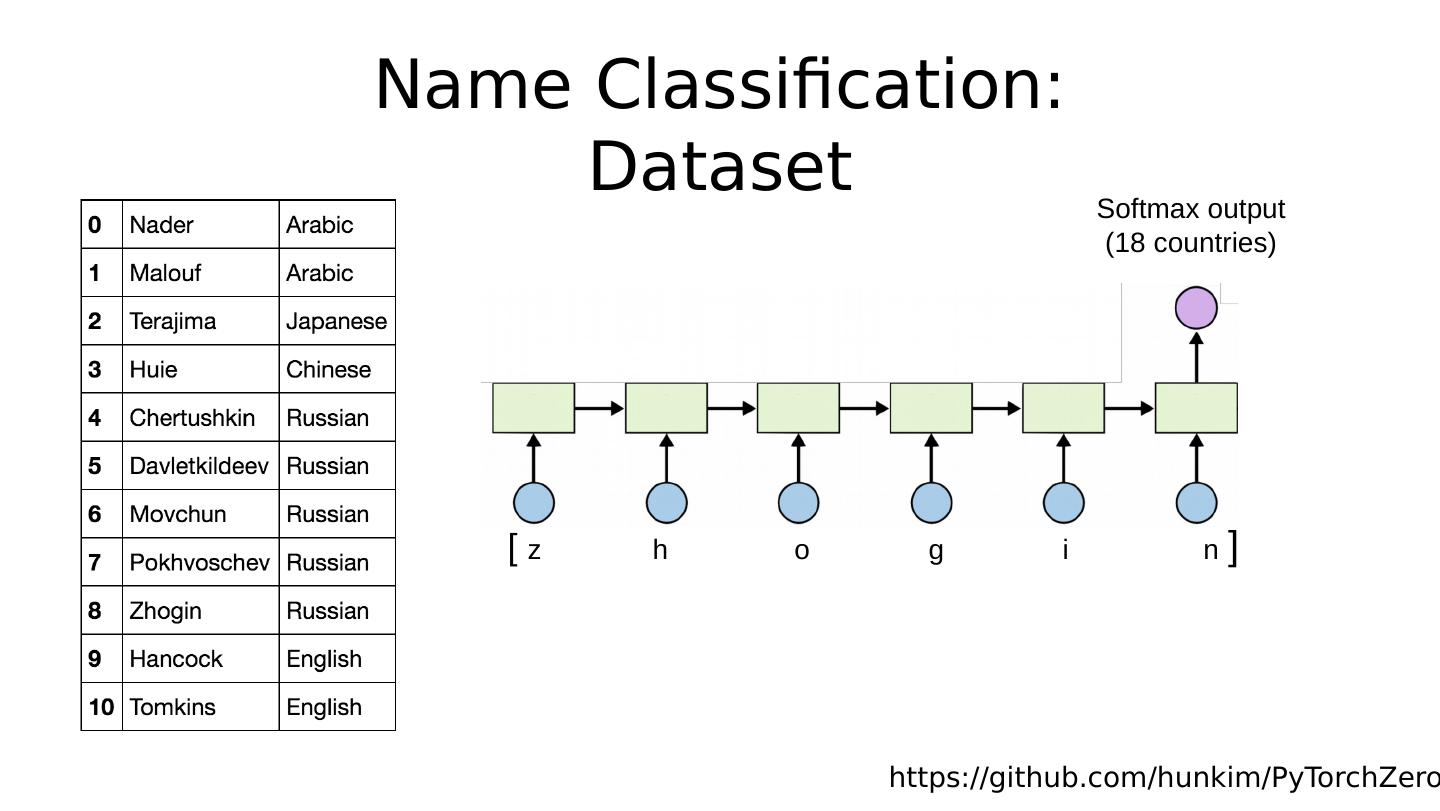

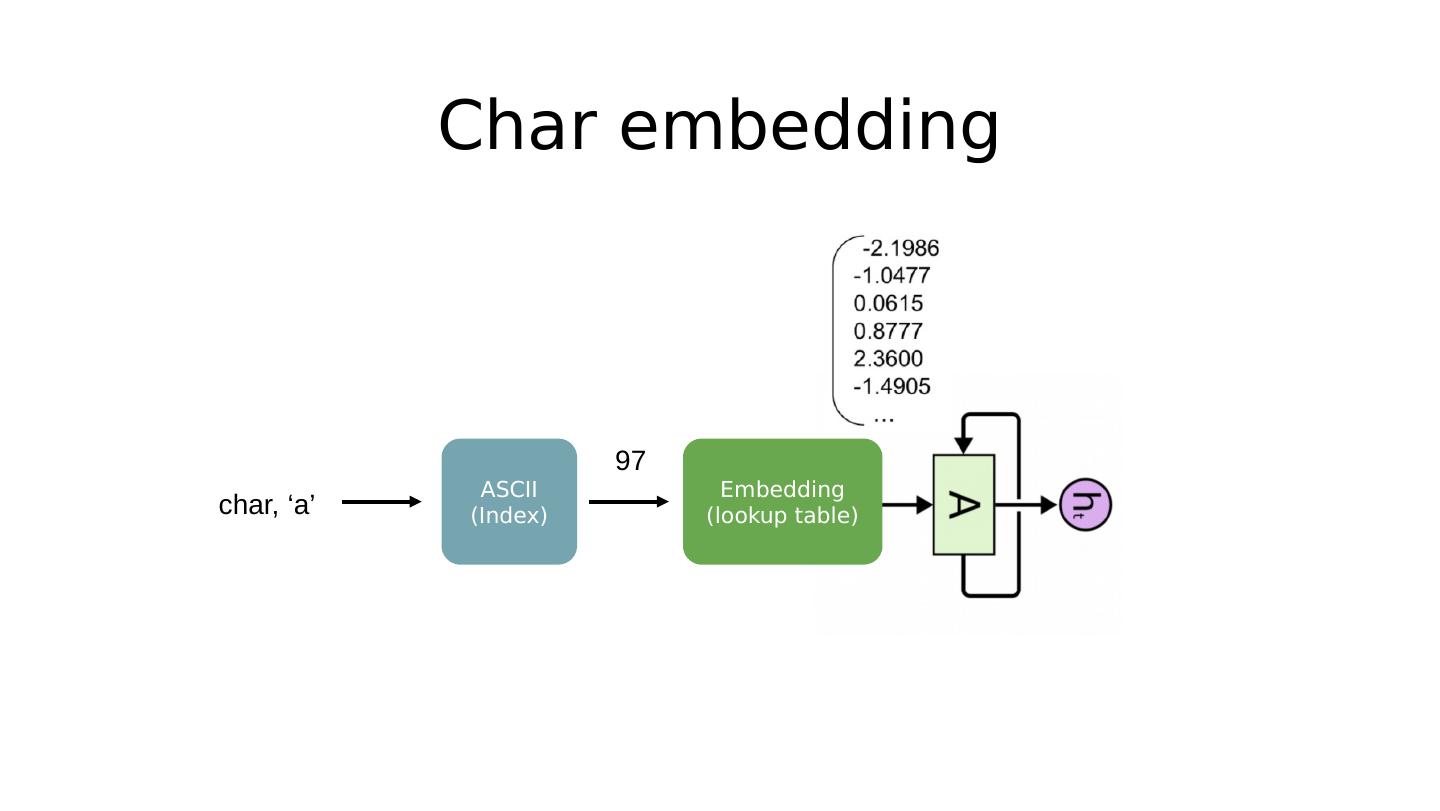

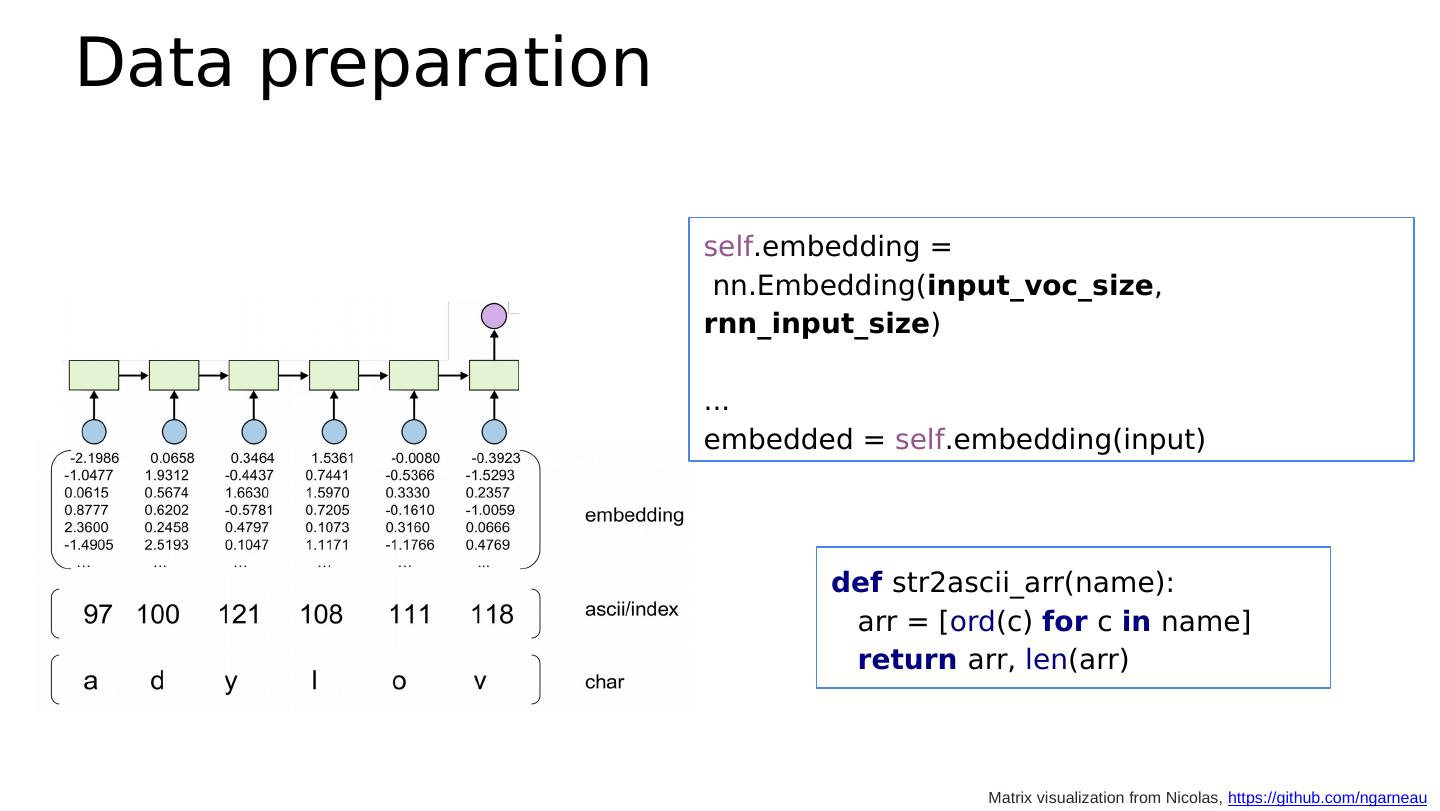

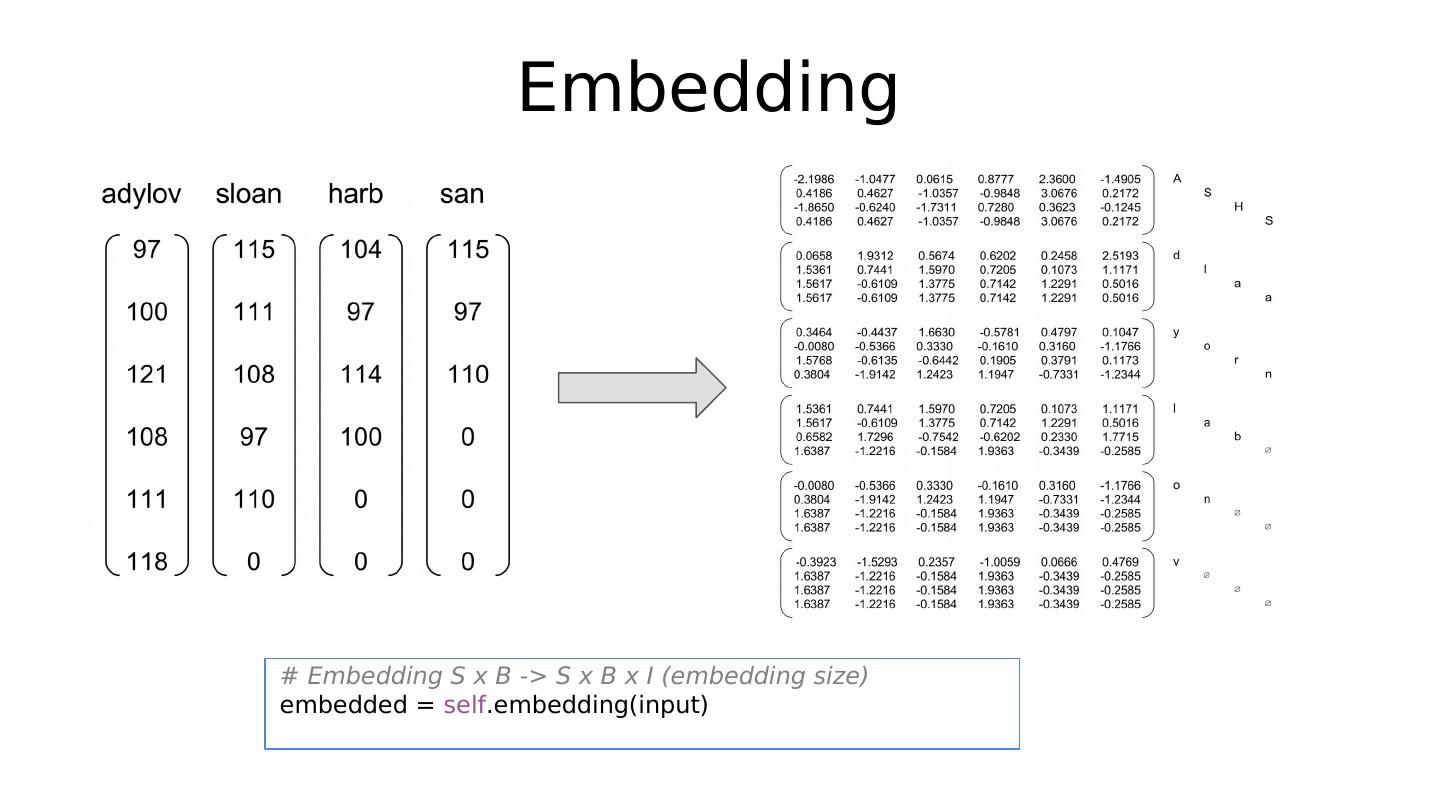

16 .

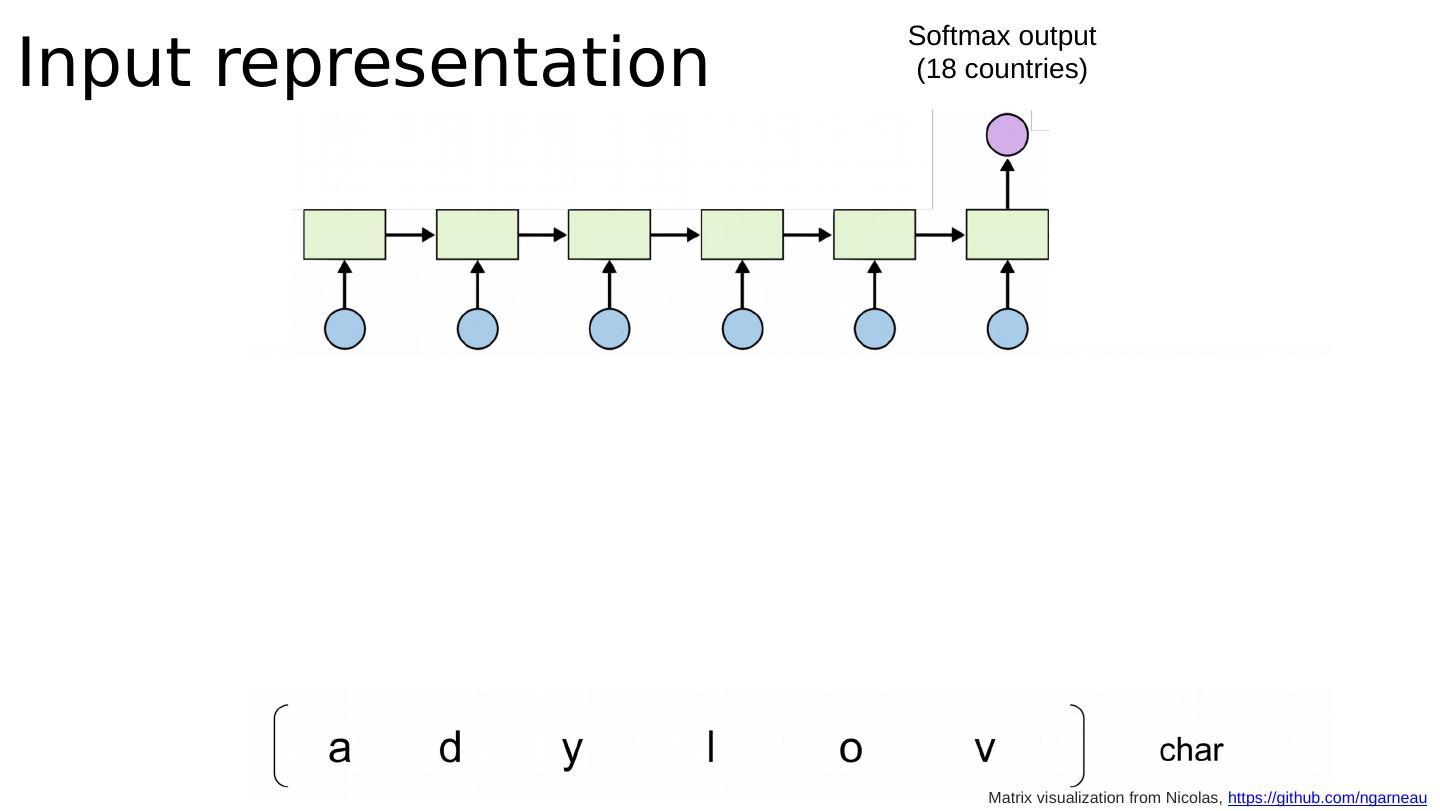

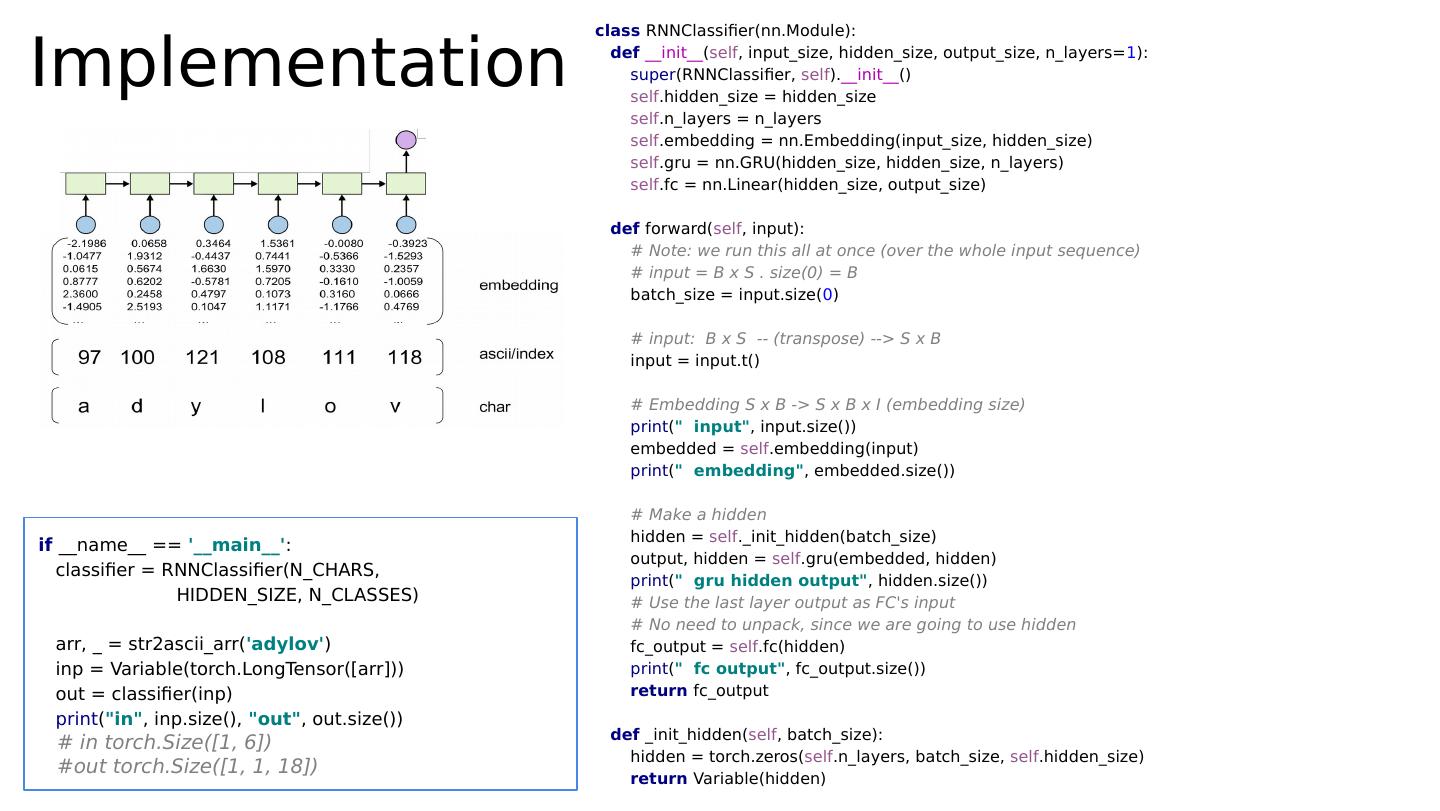

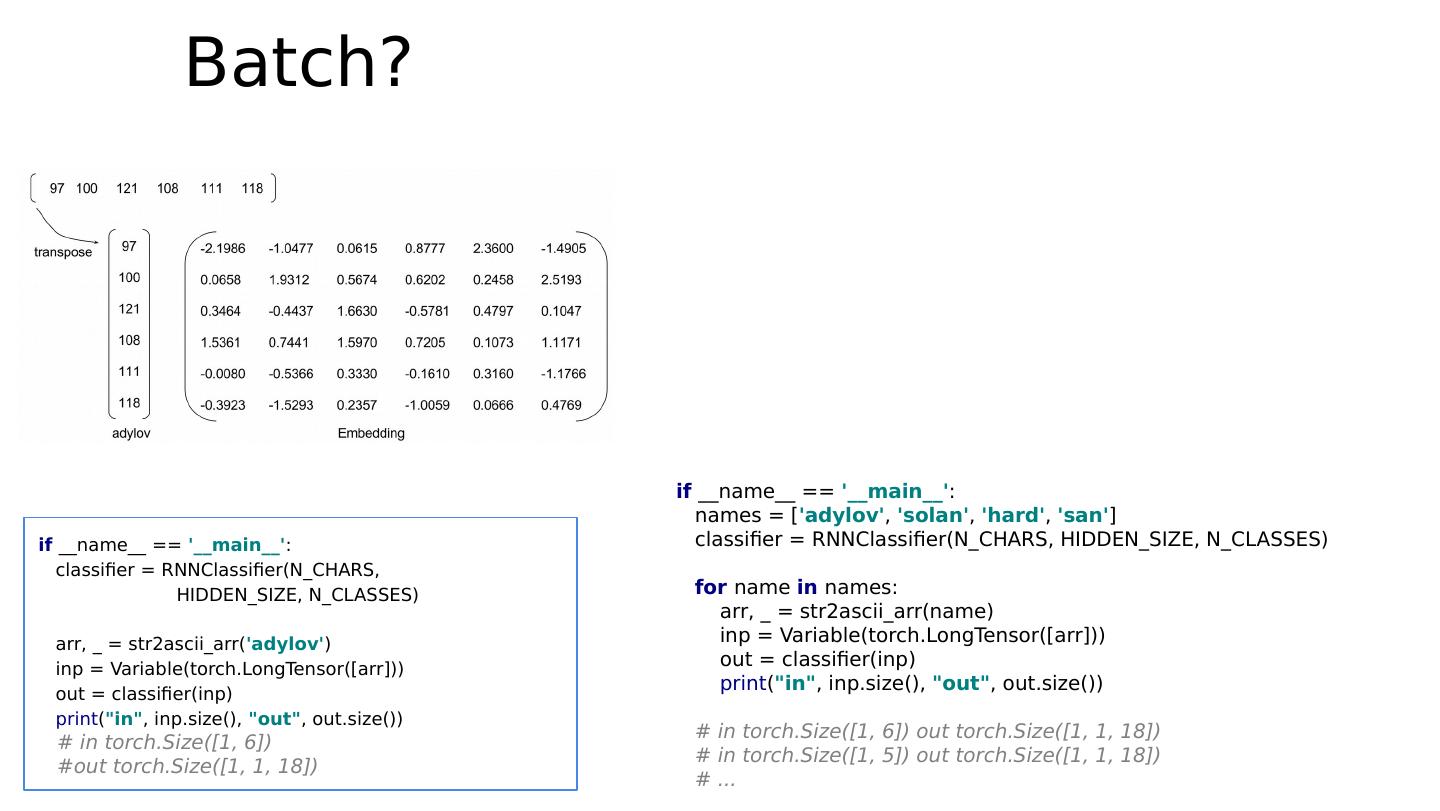

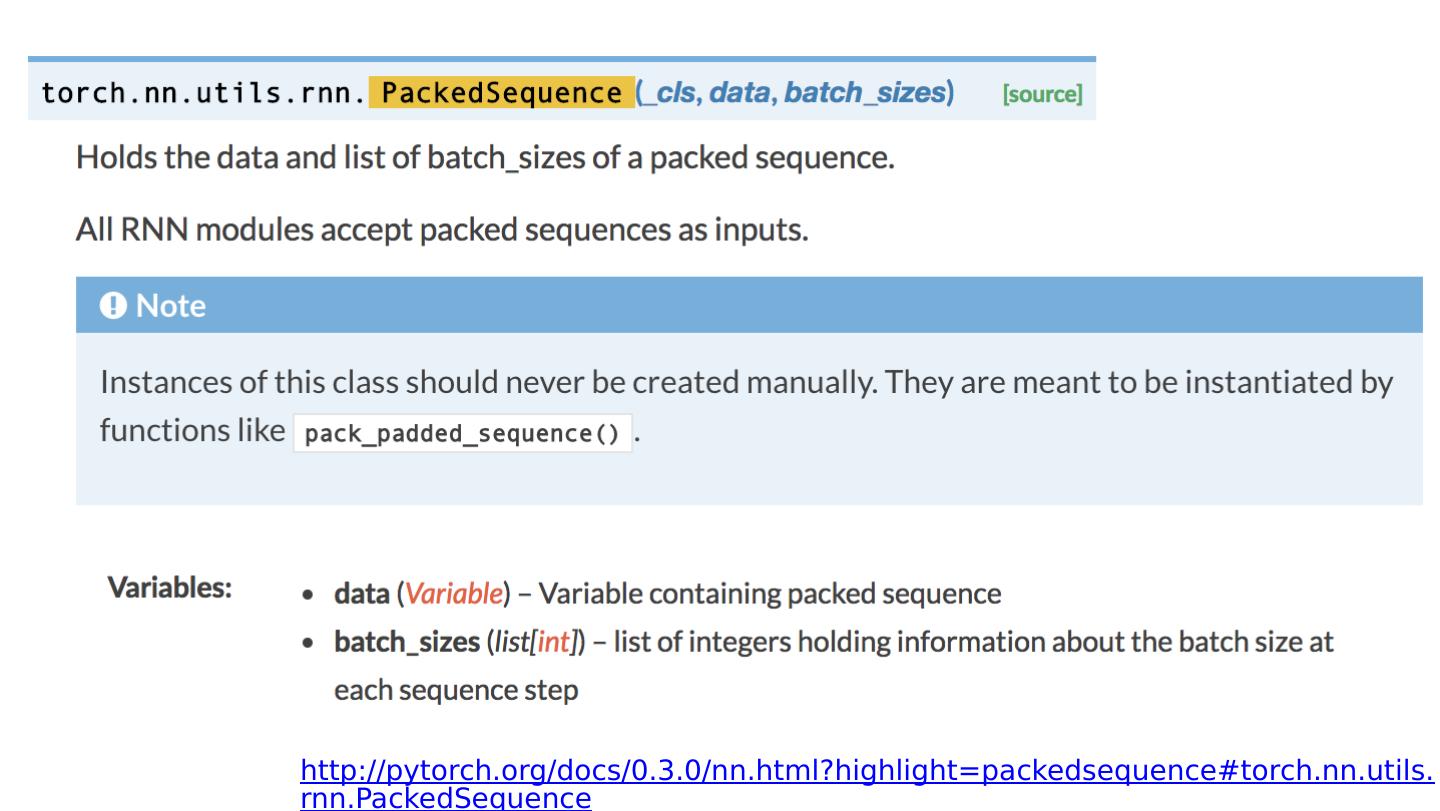

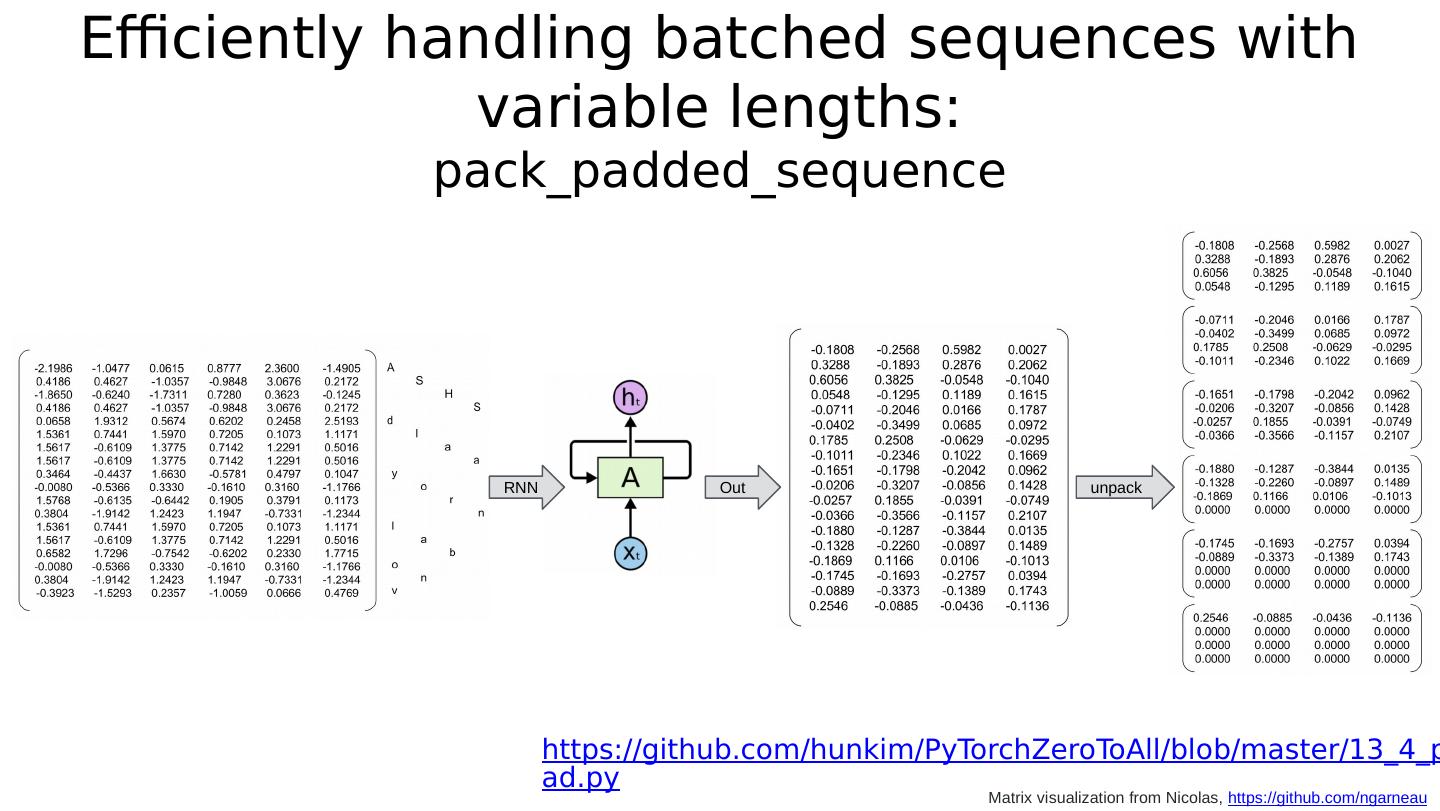

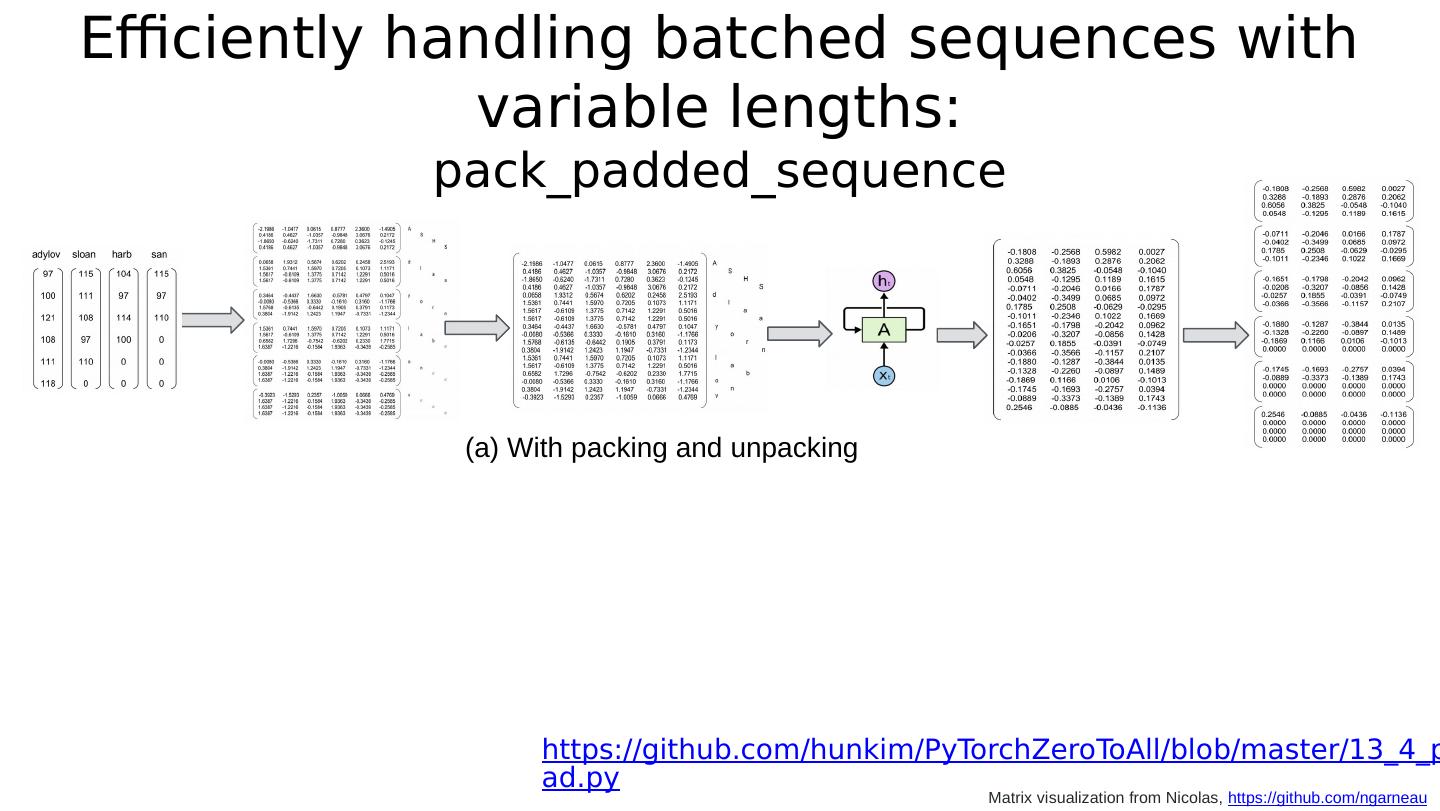

17 .Efficiently handling batched sequences with variable lengths: pack_padded_sequence
Stages shown in the figure: ordered batch (by length), embedding, packing, RNN.
Matrix visualization from Nicolas, https://github.com/ngarneau
Code: https://github.com/hunkim/PyTorchZeroToAll/blob/master/13_4_pack_pad.py
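The "ordered batch (by length)" step can be sketched in plain Python. This is only an illustration under stated assumptions: `sort_batch_by_length` and `restore_order` are hypothetical helper names that do not come from the lecture code, and the sequences are plain lists of character codes rather than tensors.

```python
def sort_batch_by_length(seqs):
    """Return the sequences sorted longest-first, their lengths, and the permutation used."""
    # order[k] is the original index of the sequence that ends up at position k
    order = sorted(range(len(seqs)), key=lambda i: len(seqs[i]), reverse=True)
    sorted_seqs = [seqs[i] for i in order]
    lengths = [len(s) for s in sorted_seqs]
    return sorted_seqs, lengths, order


def restore_order(items, order):
    """Undo the sort: items[k] goes back to original position order[k]."""
    restored = [None] * len(items)
    for k, original_index in enumerate(order):
        restored[original_index] = items[k]
    return restored


names = ["san", "adylov", "hard", "solan"]
seqs = [[ord(c) for c in name] for name in names]

sorted_seqs, lengths, order = sort_batch_by_length(seqs)
print(lengths)  # [6, 5, 4, 3]
print(order)    # [1, 3, 2, 0]
```

Keeping `order` around matters because the per-sequence outputs come back in sorted order; `restore_order` maps them back so they line up with the original labels.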

18 .Exercise 14-1: Language model with Obama speech http://obamaspeeches.com/

19 .Typical RNN Models: Embedding (lookup table), then the RNN, then a Linear layer that outputs P(y=0), P(y=1), …, P(y=n); trained with CrossEntropy Loss
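To make the "Linear with CrossEntropy Loss" stage concrete, here is a small worked example in plain Python. It assumes the linear layer emits one raw score per class; the function names and the score values are made up for illustration. In PyTorch, `nn.CrossEntropyLoss` takes such raw scores directly and applies the log-softmax internally.

```python
import math


def log_softmax(scores):
    """Numerically stable log-softmax over a list of raw class scores."""
    m = max(scores)
    log_sum = m + math.log(sum(math.exp(z - m) for z in scores))
    return [z - log_sum for z in scores]


def cross_entropy(scores, target):
    """Negative log-probability assigned to the target class."""
    return -log_softmax(scores)[target]


scores = [2.0, 0.5, -1.0]  # hypothetical output of the linear layer for 3 classes
probs = [math.exp(lp) for lp in log_softmax(scores)]  # P(y=0), P(y=1), P(y=2)

loss_if_class_0 = cross_entropy(scores, 0)  # target is the highest-scoring class
loss_if_class_2 = cross_entropy(scores, 2)  # target is the lowest-scoring class
```

The loss is small when the target is the class with the highest score and grows as the target's score falls behind the others.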

20 .RNN Classification http://karpathy.github.io/2015/05/21/rnn-effectiveness/

21 .Lecture 14: Language modeling

22 .Zero padding

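23 .Data preparation. Matrix visualization from Nicolas, https://github.com/ngarneau

```python
def str2ascii_arr(name):
    # Convert a string into a list of ASCII codes and return it with its length
    arr = [ord(c) for c in name]
    return arr, len(arr)

# Fragments from the model class: the embedding is created in __init__ ...
self.embedding = nn.Embedding(input_voc_size, rnn_input_size)
...
# ... and applied to the batch of character indices in forward()
embedded = self.embedding(input)
```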

24 .Full implementation

```python
import torch

# RNNClassifier, N_CHARS, HIDDEN_SIZE and N_CLASSES are defined elsewhere
# in the lecture code and are not shown on this slide.


def str2ascii_arr(msg):
    arr = [ord(c) for c in msg]
    return arr, len(arr)


# Zero-pad every sequence to the length of the longest one in the batch
def pad_sequences(vectorized_seqs, seq_lengths):
    seq_tensor = torch.zeros((len(vectorized_seqs), int(seq_lengths.max()))).long()
    for idx, (seq, seq_len) in enumerate(zip(vectorized_seqs, seq_lengths)):
        seq_tensor[idx, :seq_len] = torch.LongTensor(seq)
    return seq_tensor


# Create the padded input tensor from a list of names
def make_variables(names):
    sequence_and_length = [str2ascii_arr(name) for name in names]
    vectorized_seqs = [sl[0] for sl in sequence_and_length]
    seq_lengths = torch.LongTensor([sl[1] for sl in sequence_and_length])
    return pad_sequences(vectorized_seqs, seq_lengths)


if __name__ == "__main__":
    names = ["adylov", "solan", "hard", "san"]
    classifier = RNNClassifier(N_CHARS, HIDDEN_SIZE, N_CLASSES)

    inp = make_variables(names)
    out = classifier(inp)
    print("batch in", inp.size(), "batch out", out.size())
    # batch in torch.Size([4, 6]) batch out torch.Size([1, 4, 18])
```

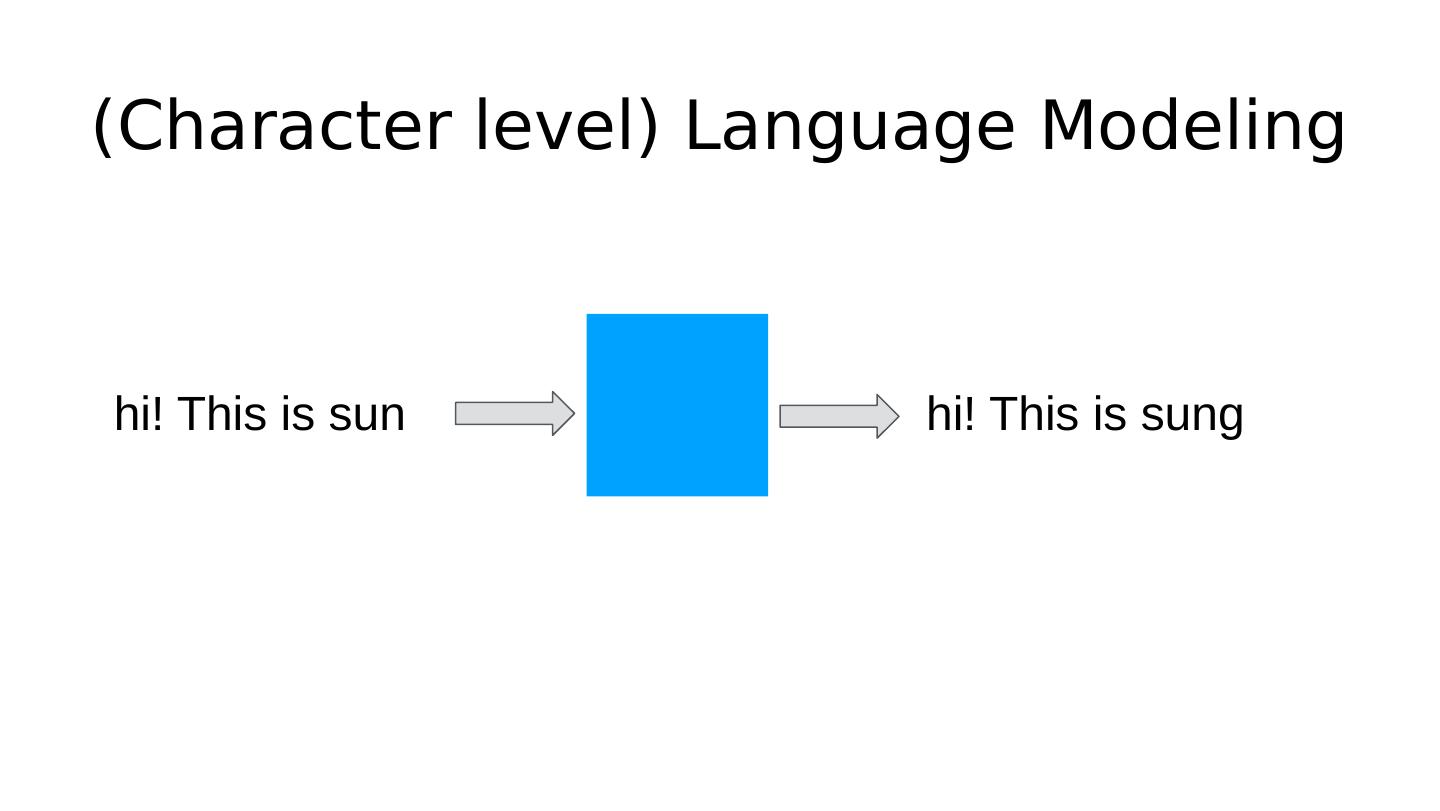

25 .(Character level) Language Modeling (figure labels: "hi", "hi!")

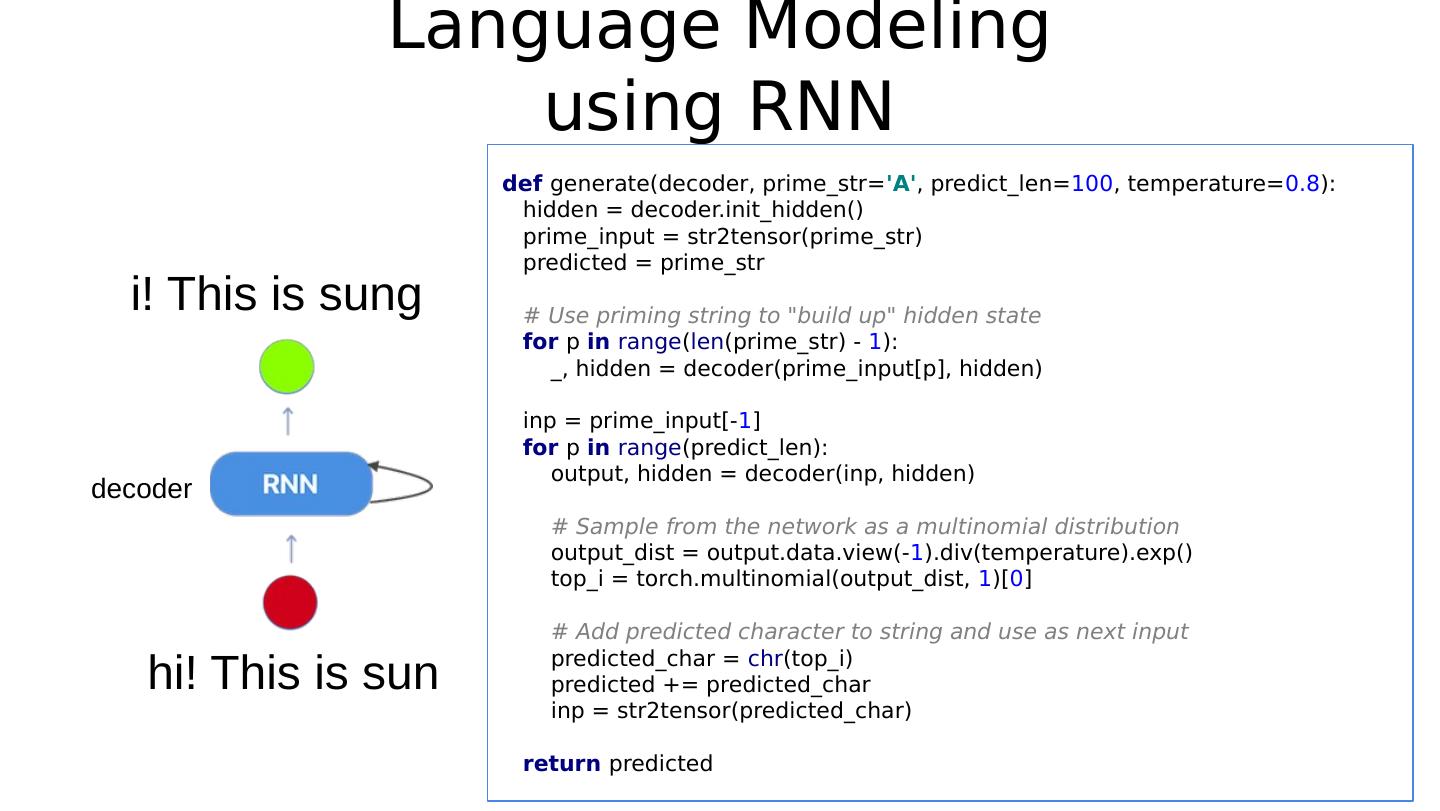

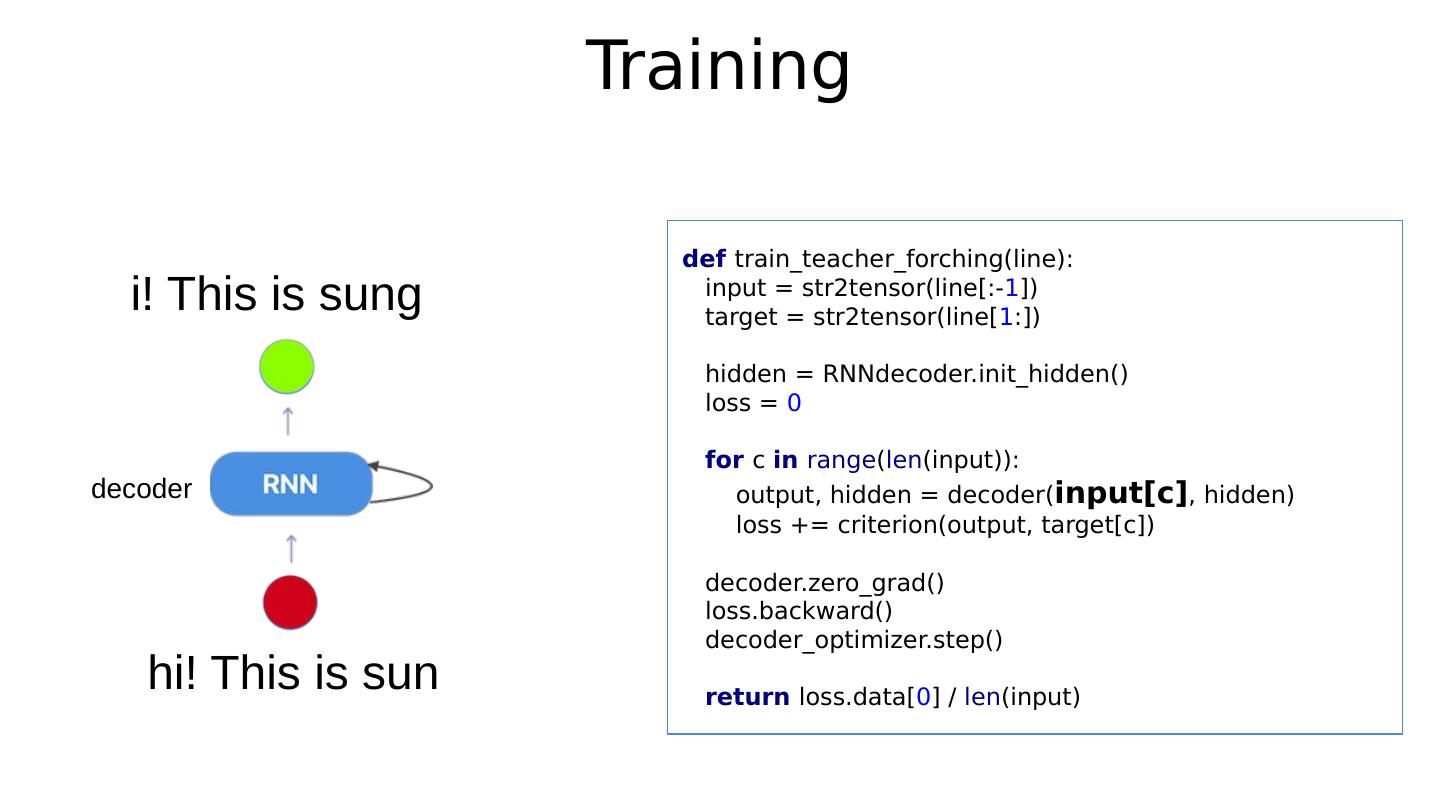

26 .Language Modeling using RNN

```python
import torch

# decoder and str2tensor are defined elsewhere in the lecture code
# and are not shown on this slide.


def generate(decoder, prime_str="A", predict_len=100, temperature=0.8):
    hidden = decoder.init_hidden()
    prime_input = str2tensor(prime_str)
    predicted = prime_str

    # Use priming string to "build up" hidden state
    for p in range(len(prime_str) - 1):
        _, hidden = decoder(prime_input[p], hidden)

    inp = prime_input[-1]

    for p in range(predict_len):
        output, hidden = decoder(inp, hidden)

        # Sample from the network as a multinomial distribution
        output_dist = output.data.view(-1).div(temperature).exp()
        top_i = int(torch.multinomial(output_dist, 1)[0])

        # Add predicted character to string and use as next input
        predicted_char = chr(top_i)
        predicted += predicted_char
        inp = str2tensor(predicted_char)

    return predicted
```

Figure labels: the decoder is shown with the strings "hi! This is sun" and "i! This is sung", the same text shifted by one character.
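The effect of the `temperature` argument is easier to see on a handful of numbers. The sketch below is plain Python, not the lecture's code: `temperature_probs` and `sample_index` are hypothetical names, and the scores are invented. It performs the same divide-by-temperature-then-exponentiate step, followed by an explicit normalization.

```python
import math
import random


def temperature_probs(scores, temperature=0.8):
    """Divide scores by the temperature, exponentiate, and normalize to sum to 1."""
    scaled = [s / temperature for s in scores]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_index(scores, temperature=0.8, rng=random):
    """Draw one index with probability proportional to exp(score / temperature)."""
    probs = temperature_probs(scores, temperature)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


scores = [2.0, 1.0, 0.1]  # made-up scores for three candidate characters
sharp = temperature_probs(scores, temperature=0.5)  # favors the top score more strongly
flat = temperature_probs(scores, temperature=2.0)   # closer to uniform
picked = sample_index(scores, temperature=0.8)
```

A low temperature concentrates probability on the highest-scoring character and gives more conservative text; a high temperature spreads it out and gives more varied, more error-prone text.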

27 .Input representation and Softmax output (18 countries). Matrix visualization from Nicolas, https://github.com/ngarneau

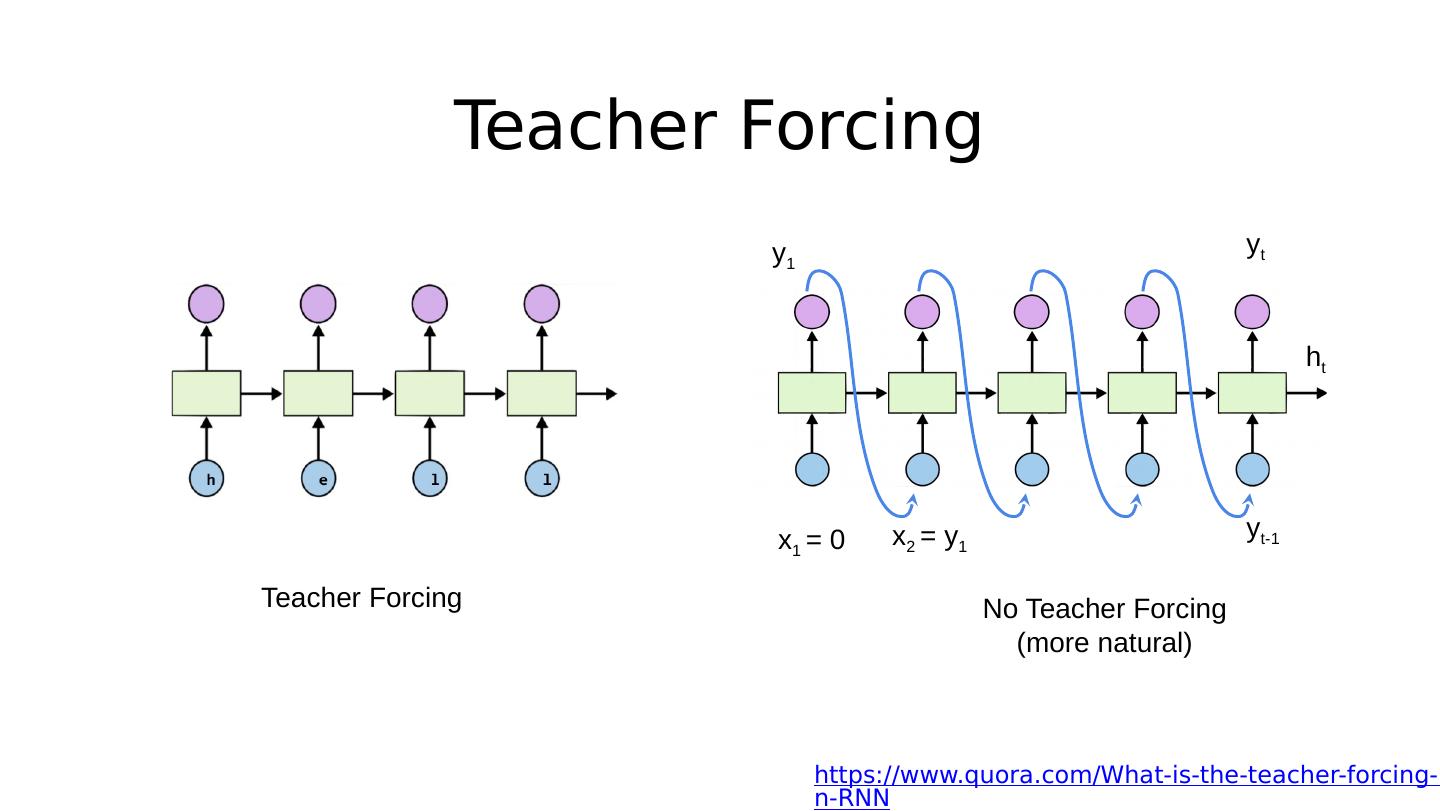

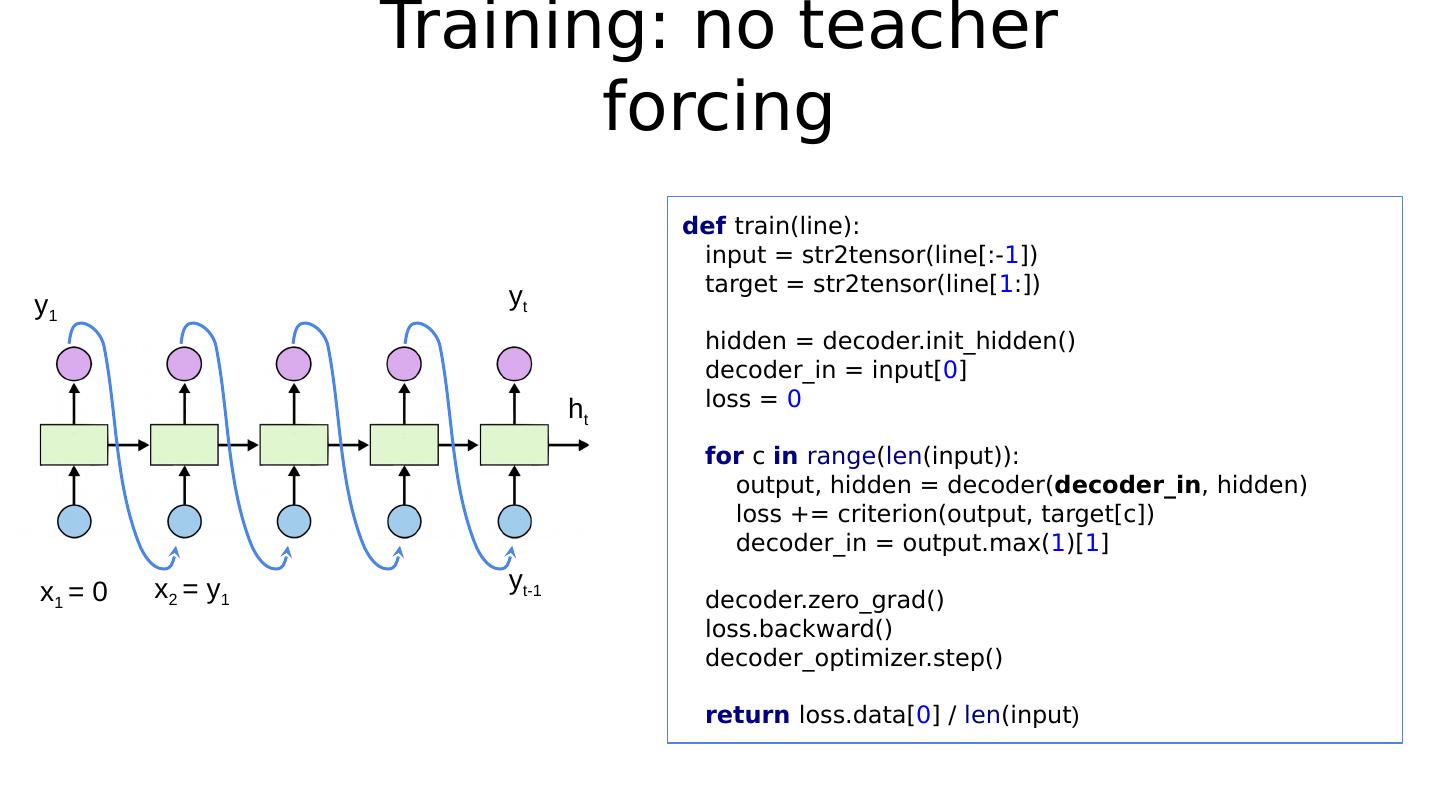

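28 .Teacher Forcing https://www.quora.com/What-is-the-teacher-forcing-in-RNN
Teacher forcing: the input at step t is the ground-truth previous output, x_t = y_{t-1} (so x_1 = 0 and x_2 = y_1). The figure labels are y_t, y_{t-1}, and the hidden state h_t.
No teacher forcing (more natural): the model's own previous prediction is fed back as the next input.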
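The difference between the two modes comes down to one line in the decoding loop. The following is a toy sketch in plain Python rather than an RNN: `toy_step` is a hypothetical one-step model that predicts "input plus one" and is deliberately wrong for one input, so the effect of feeding back a bad prediction is visible.

```python
def run_decoder(step, targets, start_token=0, teacher_forcing=True):
    """Unroll a one-step model over len(targets) steps and collect its predictions."""
    x, h = start_token, 0  # x_1 = 0, as on the slide
    predictions = []
    for y in targets:
        y_hat, h = step(x, h)
        predictions.append(y_hat)
        # Teacher forcing feeds the ground truth; otherwise feed the model's own output
        x = y if teacher_forcing else y_hat
    return predictions


def toy_step(x, h):
    """Hypothetical model: predicts x + 1, except for a deliberate error at x == 2."""
    prediction = x + 2 if x == 2 else x + 1
    return prediction, h + 1


targets = [1, 2, 3, 4, 5]
forced = run_decoder(toy_step, targets, teacher_forcing=True)
free = run_decoder(toy_step, targets, teacher_forcing=False)
print(forced)  # [1, 2, 4, 4, 5]
print(free)    # [1, 2, 4, 5, 6]
```

With teacher forcing the single mistake stays local because the next input is reset to the ground truth; without it, the wrong prediction becomes the next input and every later output is shifted.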

29 .Efficiently handling batched sequences with variable lengths: pack_padded_sequence. (a) With packing and unpacking; (b) Without packing and unpacking. Matrix visualization from Nicolas, https://github.com/ngarneau
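What packing and unpacking do to a padded batch can be imitated in plain Python. This is a sketch of the layout only, not PyTorch's implementation: `pack` and `unpack` are hypothetical helpers, and they assume the rows are already sorted by decreasing length. The packed form stores the real elements time step by time step, together with the number of sequences still active at each step.

```python
def pack(padded, lengths):
    """Flatten a padded batch time step by time step, skipping the padding."""
    data, batch_sizes = [], []
    for t in range(max(lengths)):
        active = [row[t] for row, n in zip(padded, lengths) if n > t]
        data.extend(active)
        batch_sizes.append(len(active))
    return data, batch_sizes


def unpack(data, batch_sizes, pad_value=0):
    """Rebuild the padded batch from the flat data and the per-step batch sizes."""
    n_rows, max_len = batch_sizes[0], len(batch_sizes)
    padded = [[pad_value] * max_len for _ in range(n_rows)]
    pos = 0
    for t, size in enumerate(batch_sizes):
        for row in range(size):  # the first `size` rows are the ones still active
            padded[row][t] = data[pos]
            pos += 1
    return padded


padded = [[1, 2, 3, 4],
          [5, 6, 7, 0],
          [8, 0, 0, 0]]
lengths = [4, 3, 1]

data, batch_sizes = pack(padded, lengths)
print(data)         # [1, 5, 8, 2, 6, 3, 7, 4]
print(batch_sizes)  # [3, 2, 2, 1]
```

In this example the padded batch has 12 positions while the packed form has 8 elements, so a recurrent layer working on the packed form skips the 4 padding steps.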