
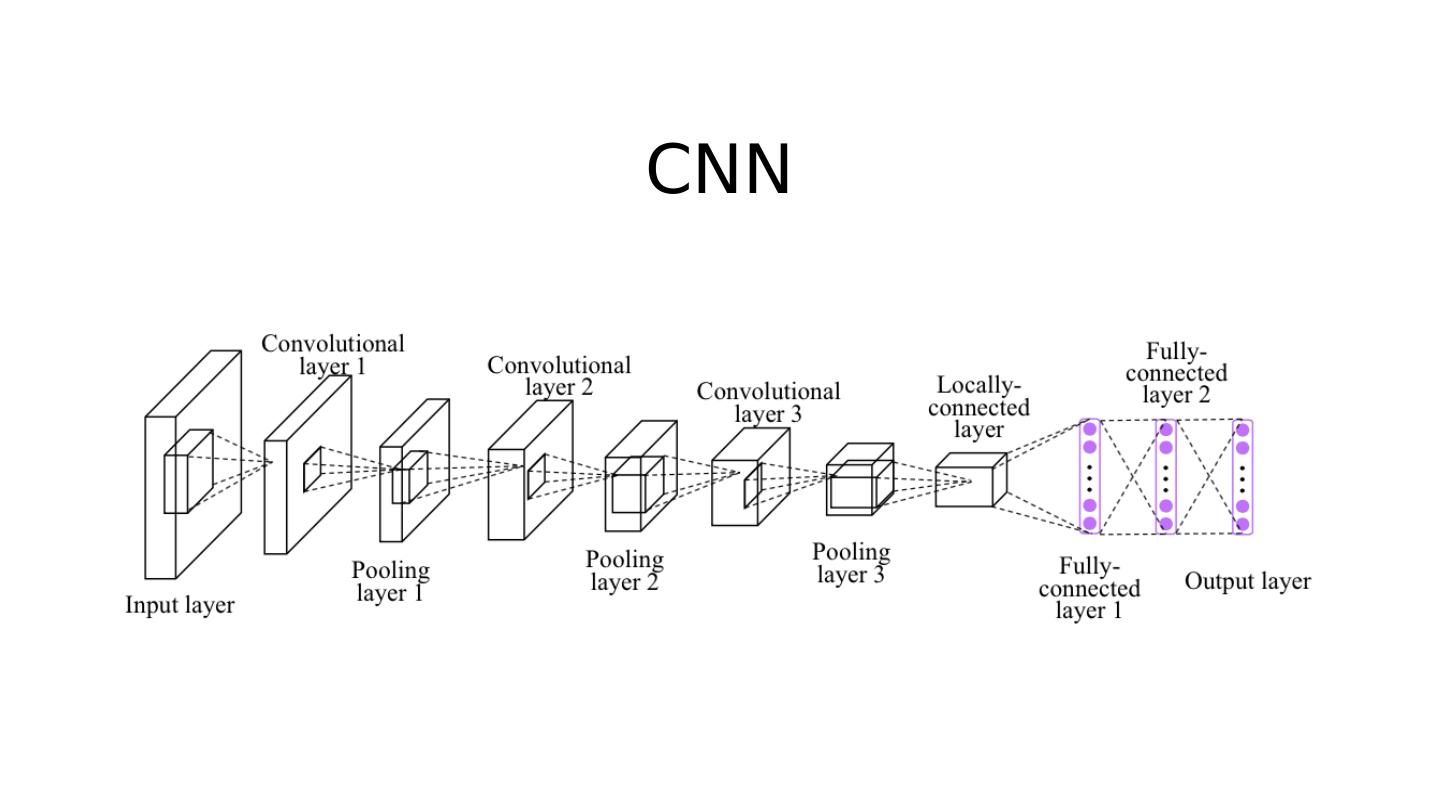

Lecture 11: Advanced CNN



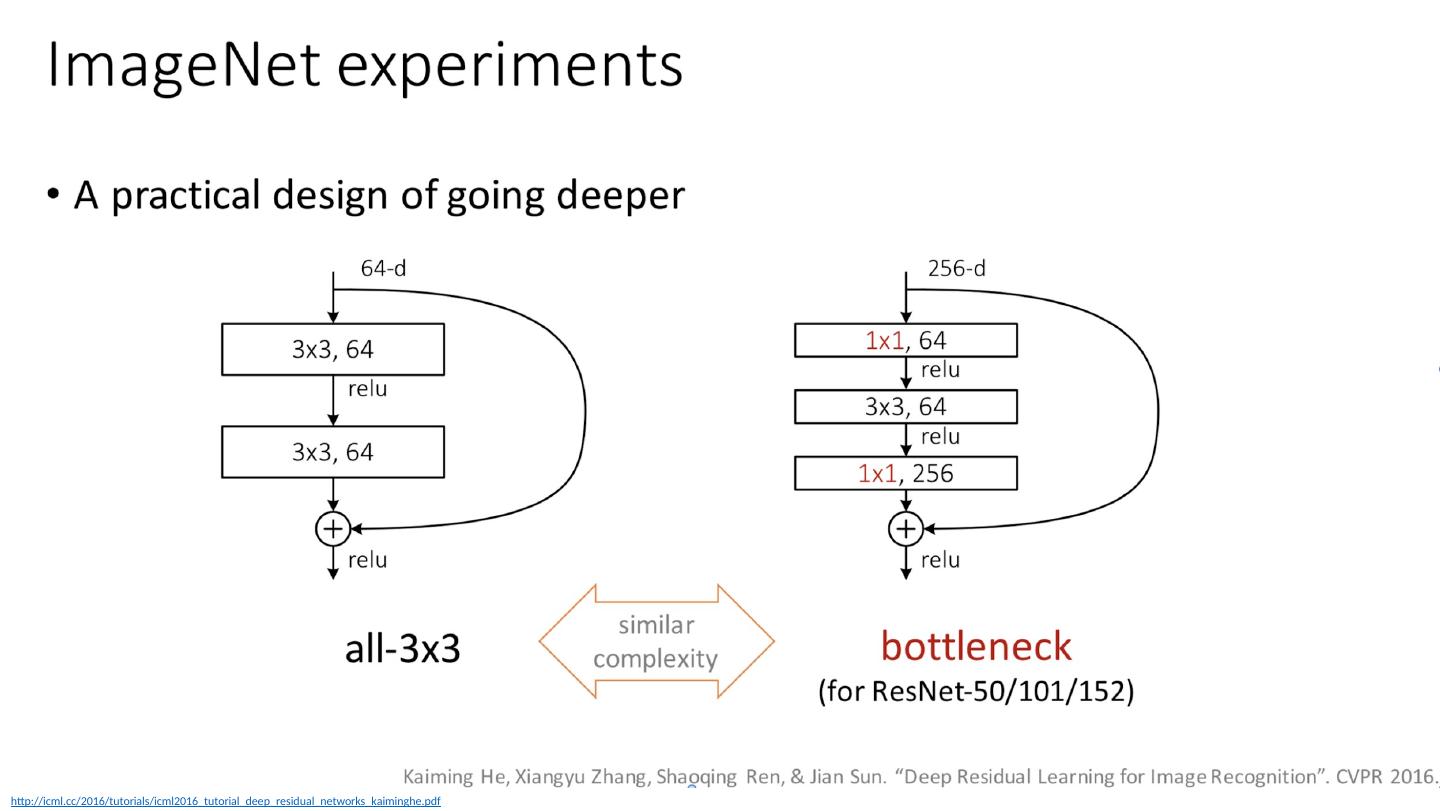

http://icml.cc/2016/tutorials/icml2016_tutorial_deep_residual_networks_kaiminghe.pdf


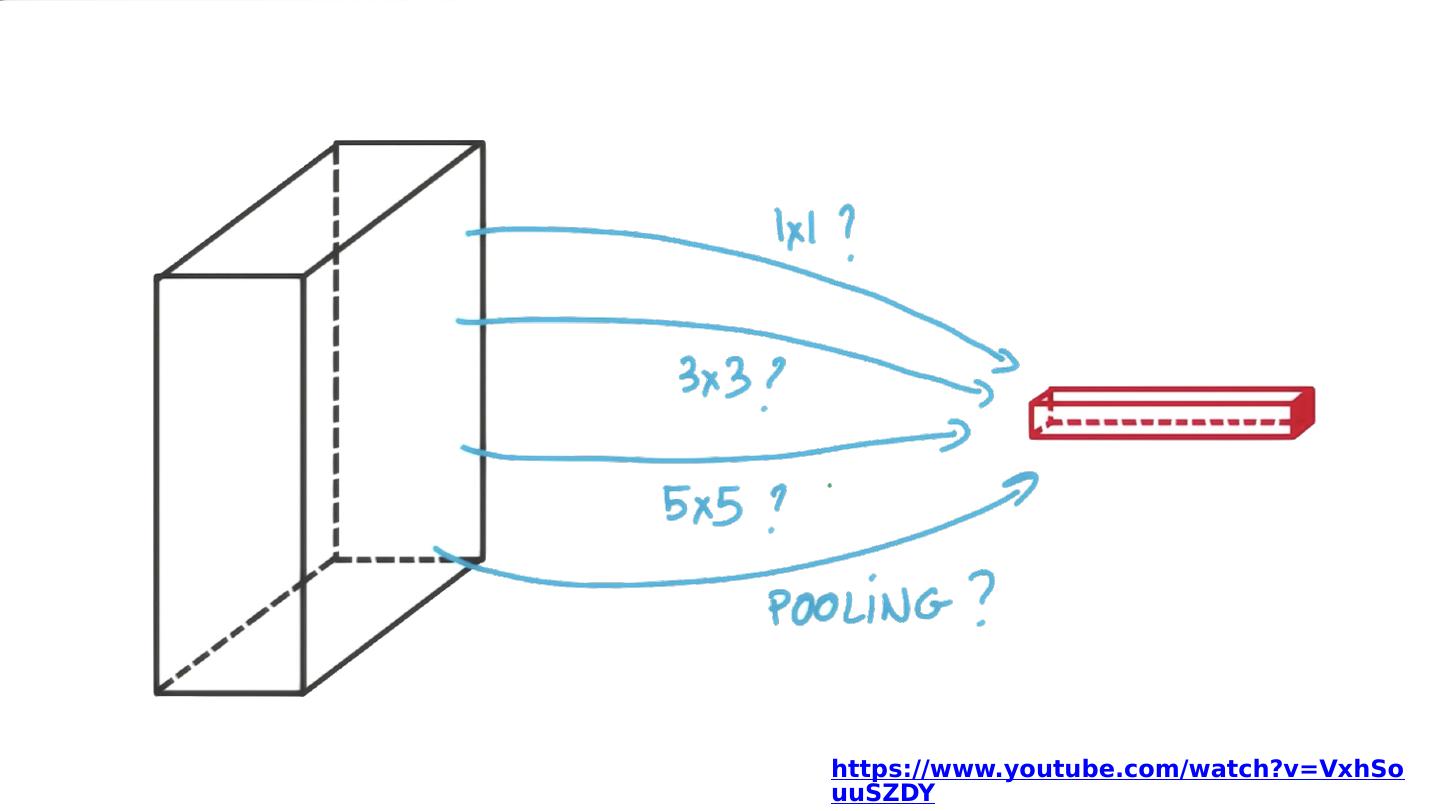

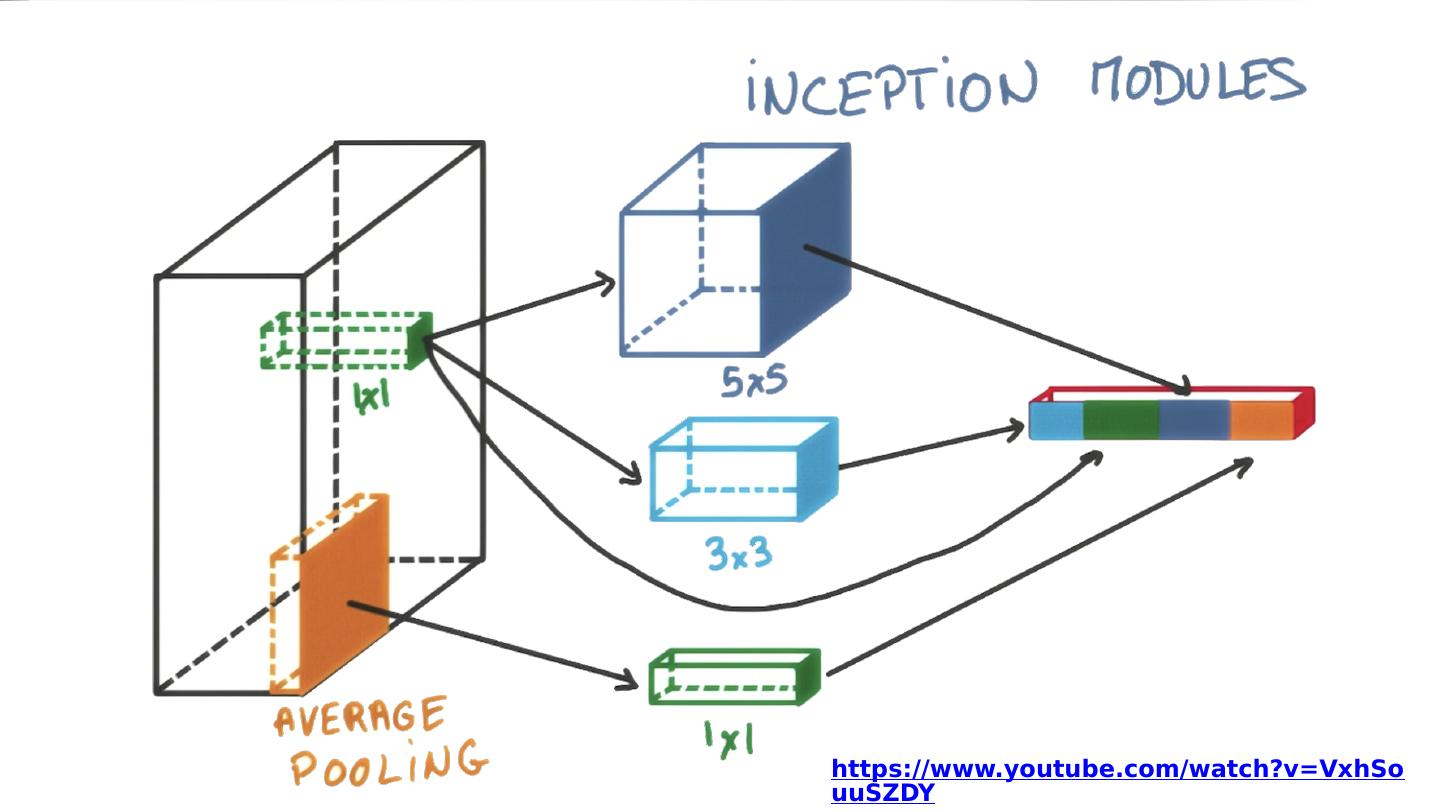

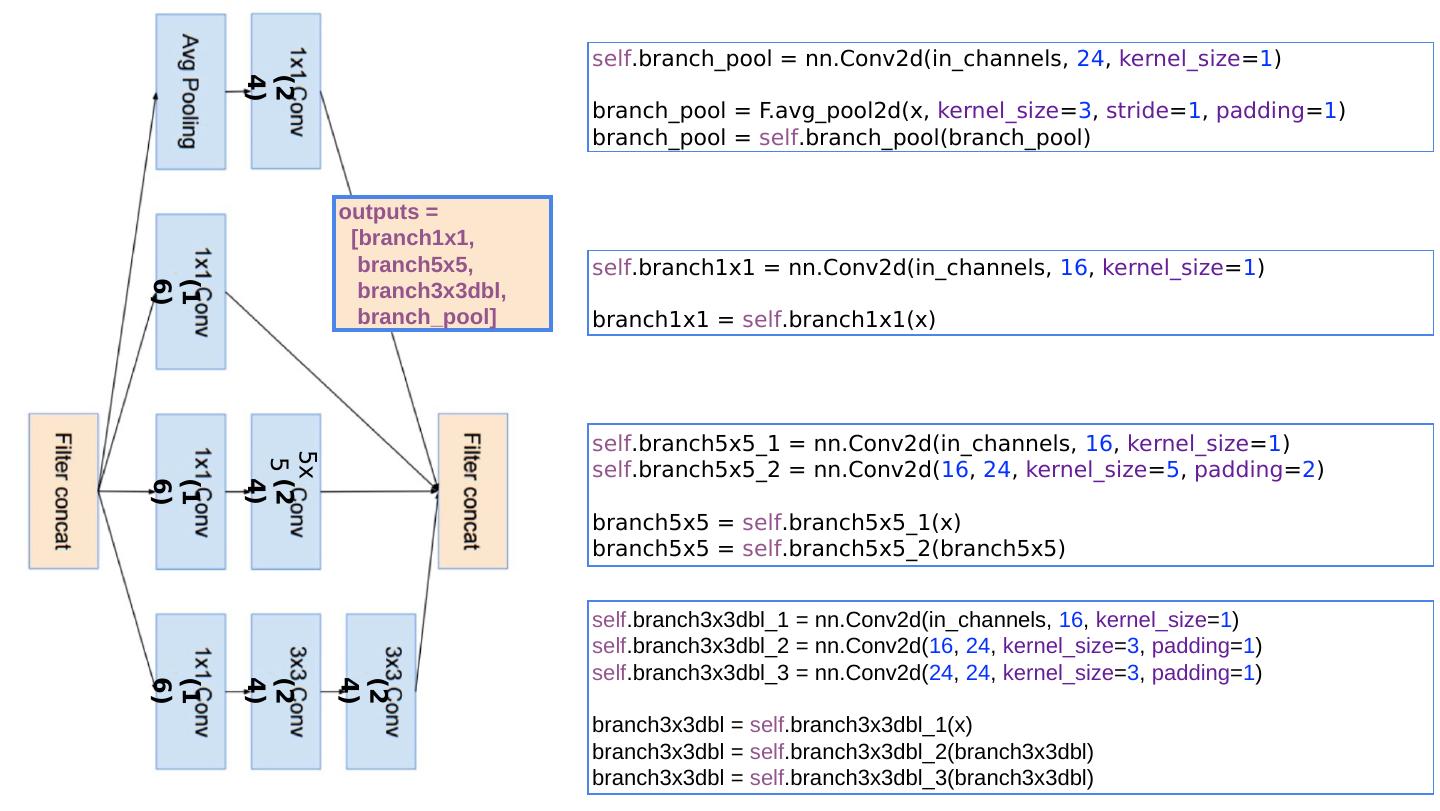

https://www.youtube.com/watch?v=VxhSouuSZDY

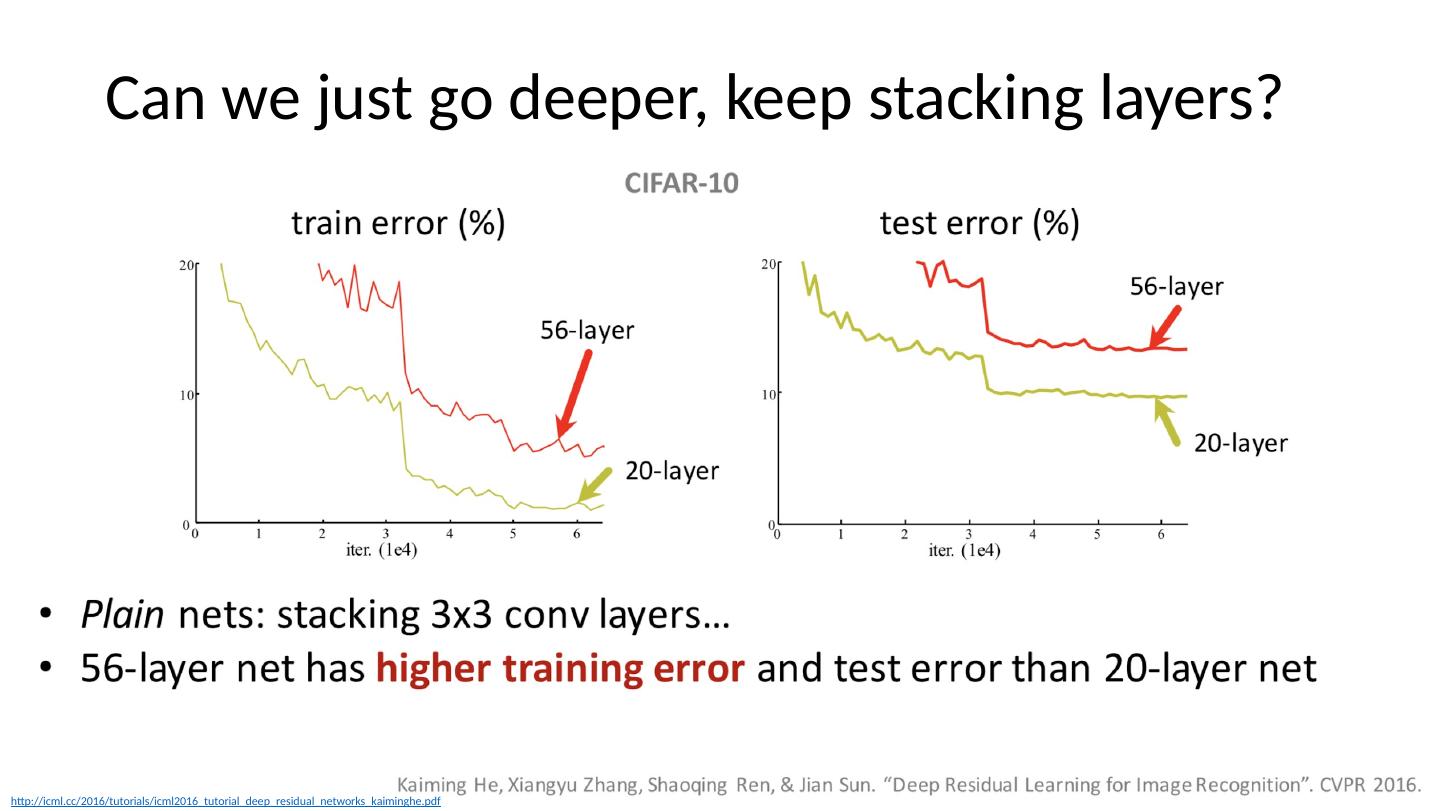

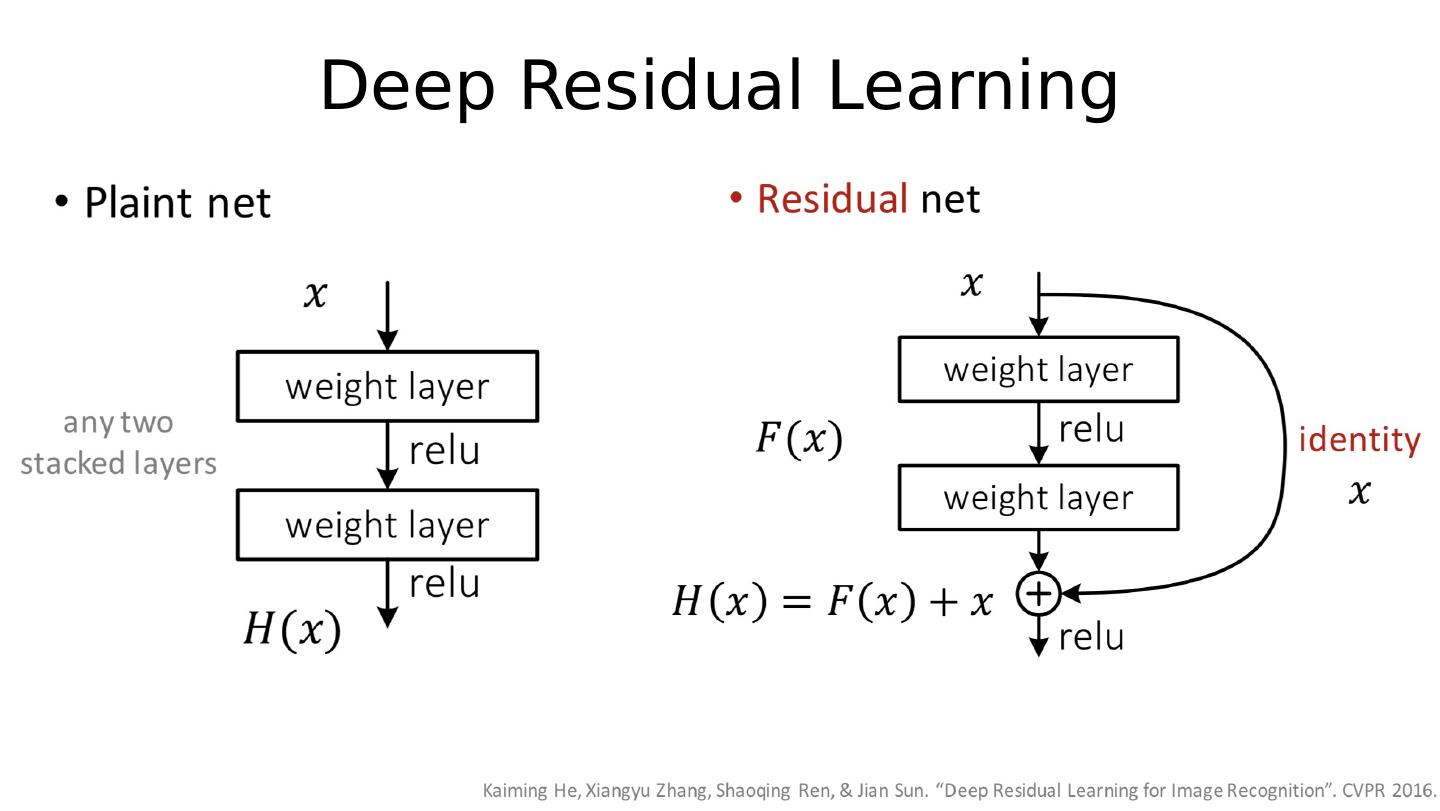

Problems with stacking layers (TBA)

- Vanishing gradients problem: back propagation kind of gives up…
- Degradation problem: with increased network depth, accuracy gets saturated and then rapidly degrades
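As a rough illustration of the vanishing gradients problem listed above, the sketch below multiplies the per-layer derivative factors along a chain of sigmoid layers. It assumes a toy setting that is not in the slides: scalar activations, all weights equal to 1, and pre-activations of 0, where the sigmoid derivative reaches its maximum of 0.25. The function name `sigmoid_chain_gradient` is hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_grad(z):
    # Derivative of the sigmoid; its maximum value is 0.25 at z = 0.
    s = sigmoid(z)
    return s * (1.0 - s)


def sigmoid_chain_gradient(depth, z=0.0):
    """Product of sigmoid derivatives across `depth` stacked layers.

    Toy assumption: every weight is 1 and every pre-activation equals z.
    """
    grad = 1.0
    for _ in range(depth):
        grad *= sigmoid_grad(z)
    return grad


for depth in (1, 5, 10, 20):
    print(depth, sigmoid_chain_gradient(depth))
```

Even in this best case the factor shrinks by 4x per layer, so the gradient reaching the early layers of a deep stack becomes very small.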

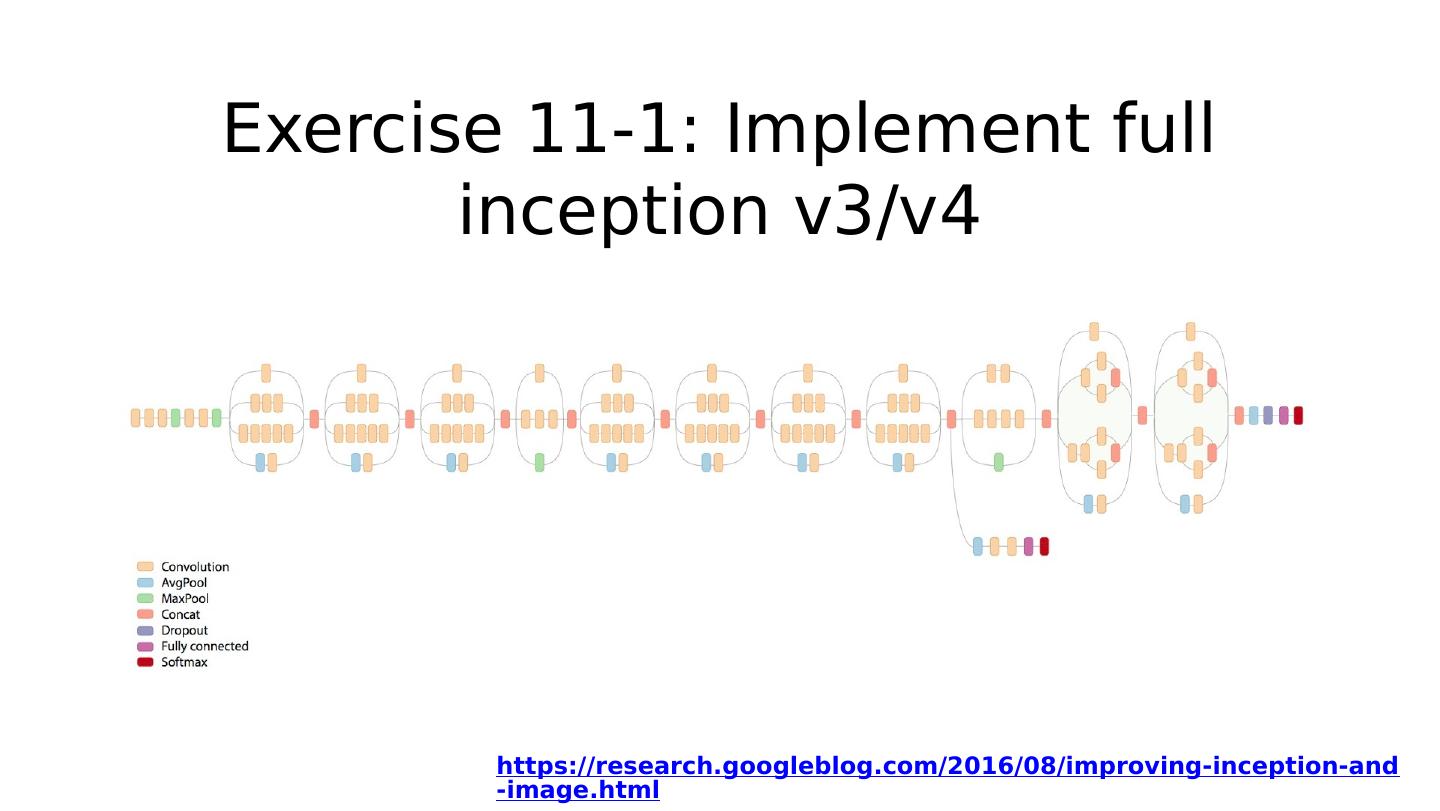

ML/DL for Everyone with PyTorch

Lecture 11: Advanced CNN

Sung Kim <hunkim+ml@gmail.com>, HKUST

- Code: https://github.com/hunkim/PyTorchZeroToAll
- Slides: http://bit.ly/PyTorchZeroAll
- Videos: http://bit.ly/PyTorchVideo

https://www.youtube.com/watch?v=C6tLw-rPQ2o

Lecture 12: RNN

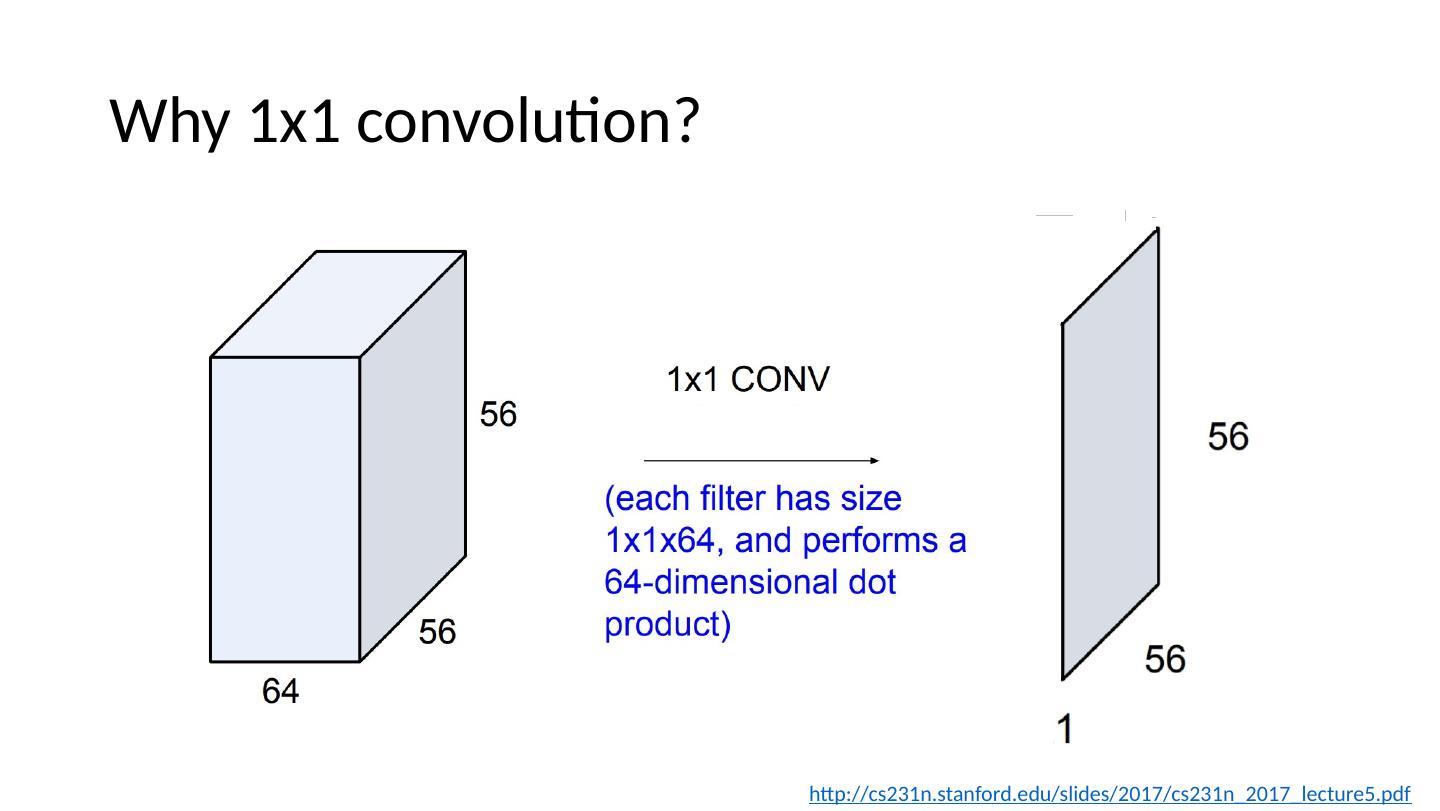

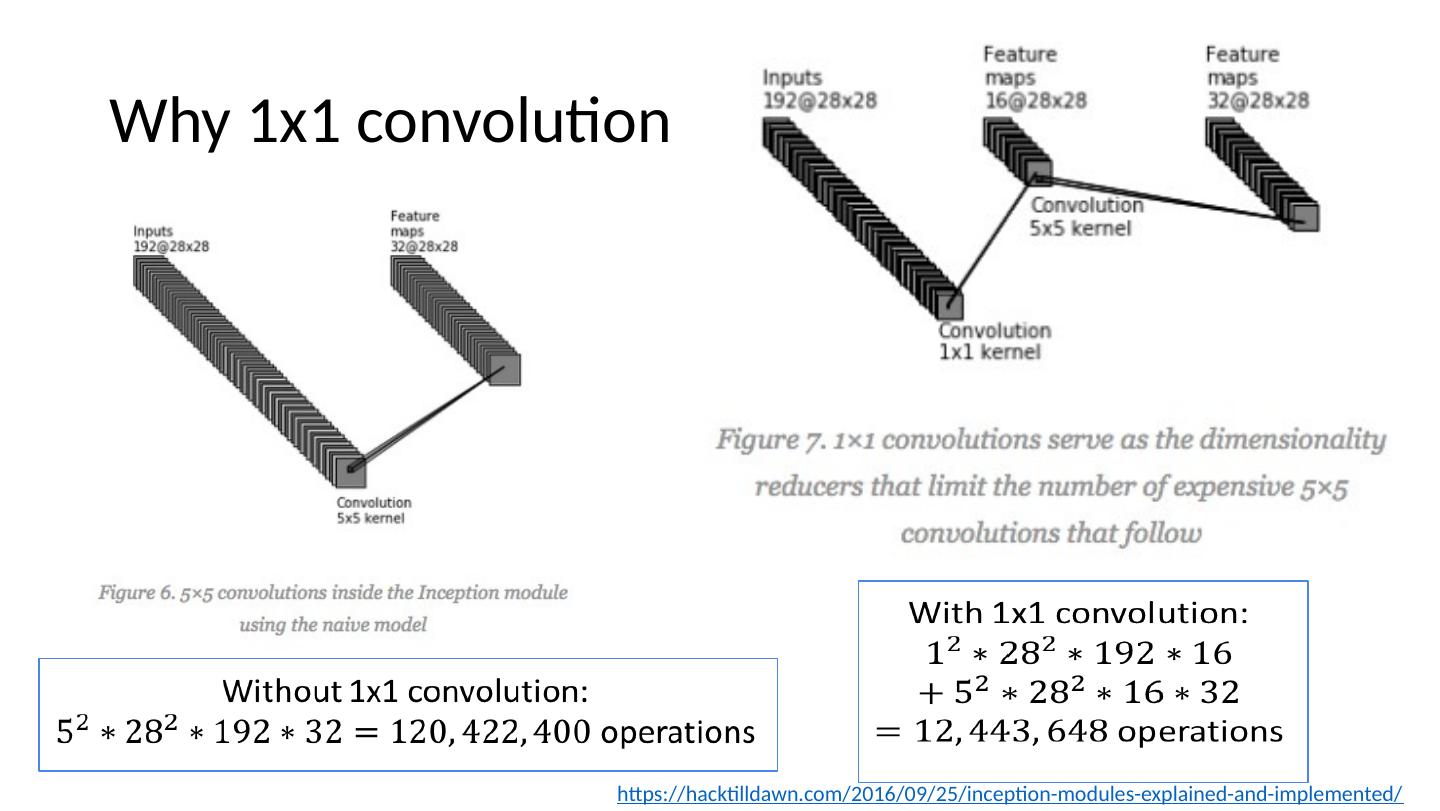

Why 1x1 convolution?

- https://hacktilldawn.com/2016/09/25/inception-modules-explained-and-implemented/
- http://cs231n.stanford.edu/slides/2017/cs231n_2017_lecture5.pdf
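One common answer to the question above is cost: a 1x1 convolution can shrink the channel count before an expensive larger kernel. The sketch below counts multiplications for a 5x5 convolution applied directly versus after a 1x1 bottleneck. The dimensions (a 28x28 feature map, 192 input channels, 32 output channels, a 16-channel bottleneck) are illustrative assumptions, not values from the slides, and `conv_multiplies` is a hypothetical helper.

```python
def conv_multiplies(height, width, in_channels, out_channels, kernel_size):
    """Multiplications for a stride-1, 'same'-padded convolution (bias ignored)."""
    return height * width * out_channels * in_channels * kernel_size * kernel_size


H, W = 28, 28  # assumed feature-map size

# 5x5 convolution applied directly: 192 -> 32 channels.
direct = conv_multiplies(H, W, 192, 32, 5)

# 1x1 bottleneck (192 -> 16 channels) followed by the 5x5 (16 -> 32 channels).
bottleneck = conv_multiplies(H, W, 192, 16, 1) + conv_multiplies(H, W, 16, 32, 5)

print(direct, bottleneck, direct / bottleneck)
```

Under these assumptions the direct path needs about 120 million multiplications and the bottleneck path about 12 million, roughly a tenfold saving for the same output shape.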

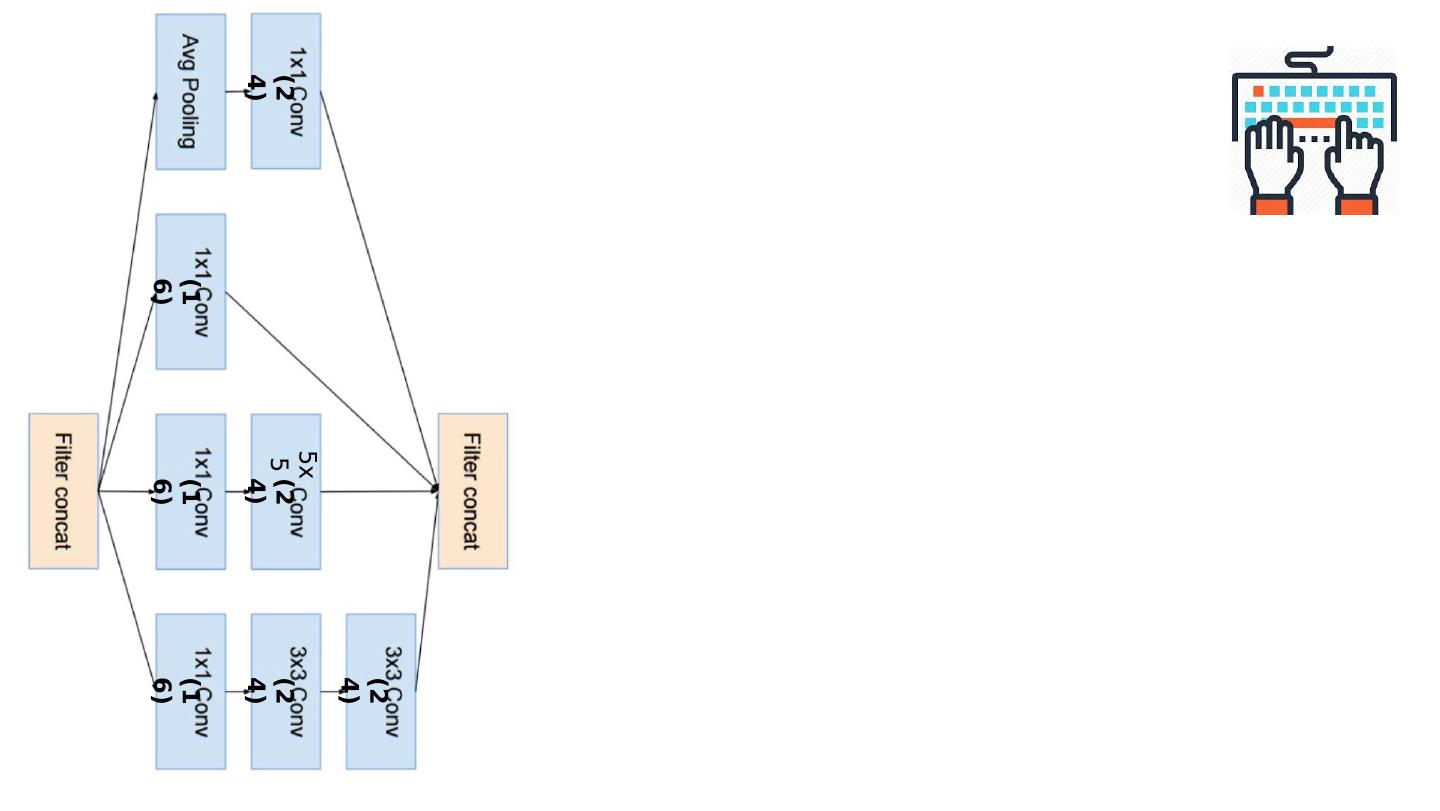

[Figure: Inception module diagram with a 5x5 branch; the numbers in parentheses (16, 24) label the output channels of each convolution.]

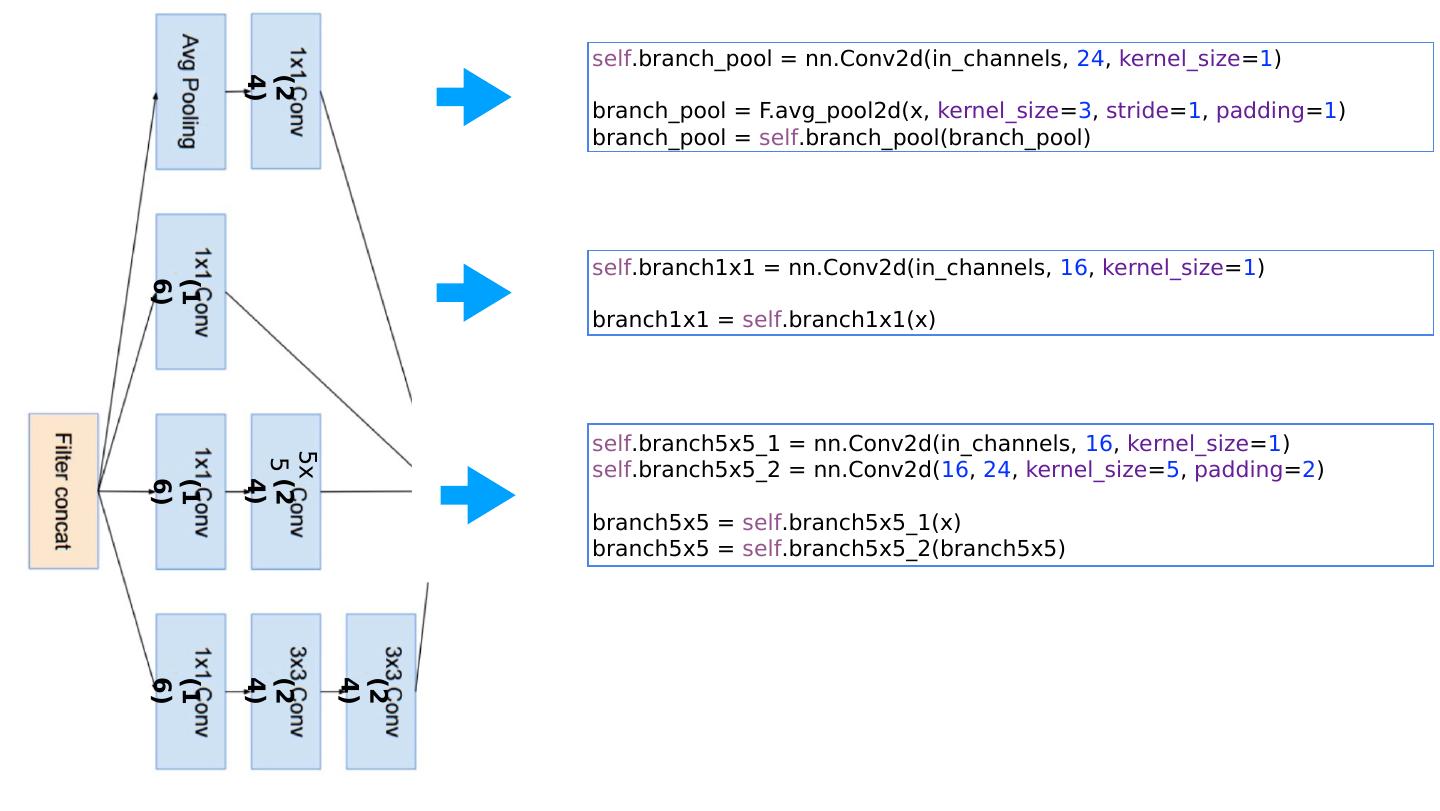

Inception module branches (each layer definition from `__init__` is followed by its call in `forward`):

    # 1x1 branch
    self.branch1x1 = nn.Conv2d(in_channels, 16, kernel_size=1)
    branch1x1 = self.branch1x1(x)

    # 5x5 branch: 1x1 convolution, then 5x5 convolution
    self.branch5x5_1 = nn.Conv2d(in_channels, 16, kernel_size=1)
    self.branch5x5_2 = nn.Conv2d(16, 24, kernel_size=5, padding=2)
    branch5x5 = self.branch5x5_1(x)
    branch5x5 = self.branch5x5_2(branch5x5)

    # Pooling branch: average pooling, then 1x1 convolution
    self.branch_pool = nn.Conv2d(in_channels, 24, kernel_size=1)
    branch_pool = F.avg_pool2d(x, kernel_size=3, stride=1, padding=1)
    branch_pool = self.branch_pool(branch_pool)

Inception Module

    import torch
    import torch.nn as nn
    import torch.nn.functional as F


    class InceptionA(nn.Module):

        def __init__(self, in_channels):
            super(InceptionA, self).__init__()
            # 1x1 branch
            self.branch1x1 = nn.Conv2d(in_channels, 16, kernel_size=1)

            # 5x5 branch: 1x1 convolution, then 5x5 convolution
            self.branch5x5_1 = nn.Conv2d(in_channels, 16, kernel_size=1)
            self.branch5x5_2 = nn.Conv2d(16, 24, kernel_size=5, padding=2)

            # Double 3x3 branch: 1x1 convolution, then two 3x3 convolutions
            self.branch3x3dbl_1 = nn.Conv2d(in_channels, 16, kernel_size=1)
            self.branch3x3dbl_2 = nn.Conv2d(16, 24, kernel_size=3, padding=1)
            self.branch3x3dbl_3 = nn.Conv2d(24, 24, kernel_size=3, padding=1)

            # Pooling branch: average pooling, then 1x1 convolution
            self.branch_pool = nn.Conv2d(in_channels, 24, kernel_size=1)

        def forward(self, x):
            branch1x1 = self.branch1x1(x)

            branch5x5 = self.branch5x5_1(x)
            branch5x5 = self.branch5x5_2(branch5x5)

            branch3x3dbl = self.branch3x3dbl_1(x)
            branch3x3dbl = self.branch3x3dbl_2(branch3x3dbl)
            branch3x3dbl = self.branch3x3dbl_3(branch3x3dbl)

            branch_pool = F.avg_pool2d(x, kernel_size=3, stride=1, padding=1)
            branch_pool = self.branch_pool(branch_pool)

            # Concatenate the four branches along the channel dimension
            outputs = [branch1x1, branch5x5, branch3x3dbl, branch_pool]
            return torch.cat(outputs, 1)


    class Net(nn.Module):

        def __init__(self):
            super(Net, self).__init__()
            self.conv1 = nn.Conv2d(1, 10, kernel_size=5)
            self.conv2 = nn.Conv2d(88, 20, kernel_size=5)

            self.incept1 = InceptionA(in_channels=10)
            self.incept2 = InceptionA(in_channels=20)

            self.mp = nn.MaxPool2d(2)
            self.fc = nn.Linear(1408, 10)

        def forward(self, x):
            in_size = x.size(0)
            x = F.relu(self.mp(self.conv1(x)))
            x = self.incept1(x)
            x = F.relu(self.mp(self.conv2(x)))
            x = self.incept2(x)
            x = x.view(in_size, -1)  # flatten the tensor
            x = self.fc(x)
            # dim=1 applies log-softmax across the class scores of each sample
            return F.log_softmax(x, dim=1)

https://github.com/.../torchvision/models/inception.py
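The constants 88 and 1408 in `Net` follow from shape arithmetic, which the sketch below works through in plain Python. It assumes a 1 x 28 x 28 input (MNIST-sized), which this section does not state explicitly; `conv_out` is a hypothetical helper using the standard output-size formula for convolution and pooling.

```python
def conv_out(size, kernel_size, padding=0, stride=1):
    """Output spatial size of a square convolution or pooling window."""
    return (size + 2 * padding - kernel_size) // stride + 1


# Each InceptionA concatenates its four branches along the channel axis.
INCEPTION_OUT = 16 + 24 + 24 + 24

size = 28                            # assumed input height and width
size = conv_out(size, 5)             # conv1, kernel_size=5
size = conv_out(size, 2, stride=2)   # MaxPool2d(2)
# incept1: every branch is padded, so the spatial size is unchanged
size = conv_out(size, 5)             # conv2, kernel_size=5
size = conv_out(size, 2, stride=2)   # MaxPool2d(2)
# incept2: spatial size unchanged again, channels become INCEPTION_OUT

flat_features = INCEPTION_OUT * size * size
print(INCEPTION_OUT, size, flat_features)
```

With that input size, the channel count matches the `in_channels` of `conv2` and the flattened feature count matches the input size of `fc`. A different input resolution would require a different value for the linear layer.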