
07_ Wide & Deep



8.

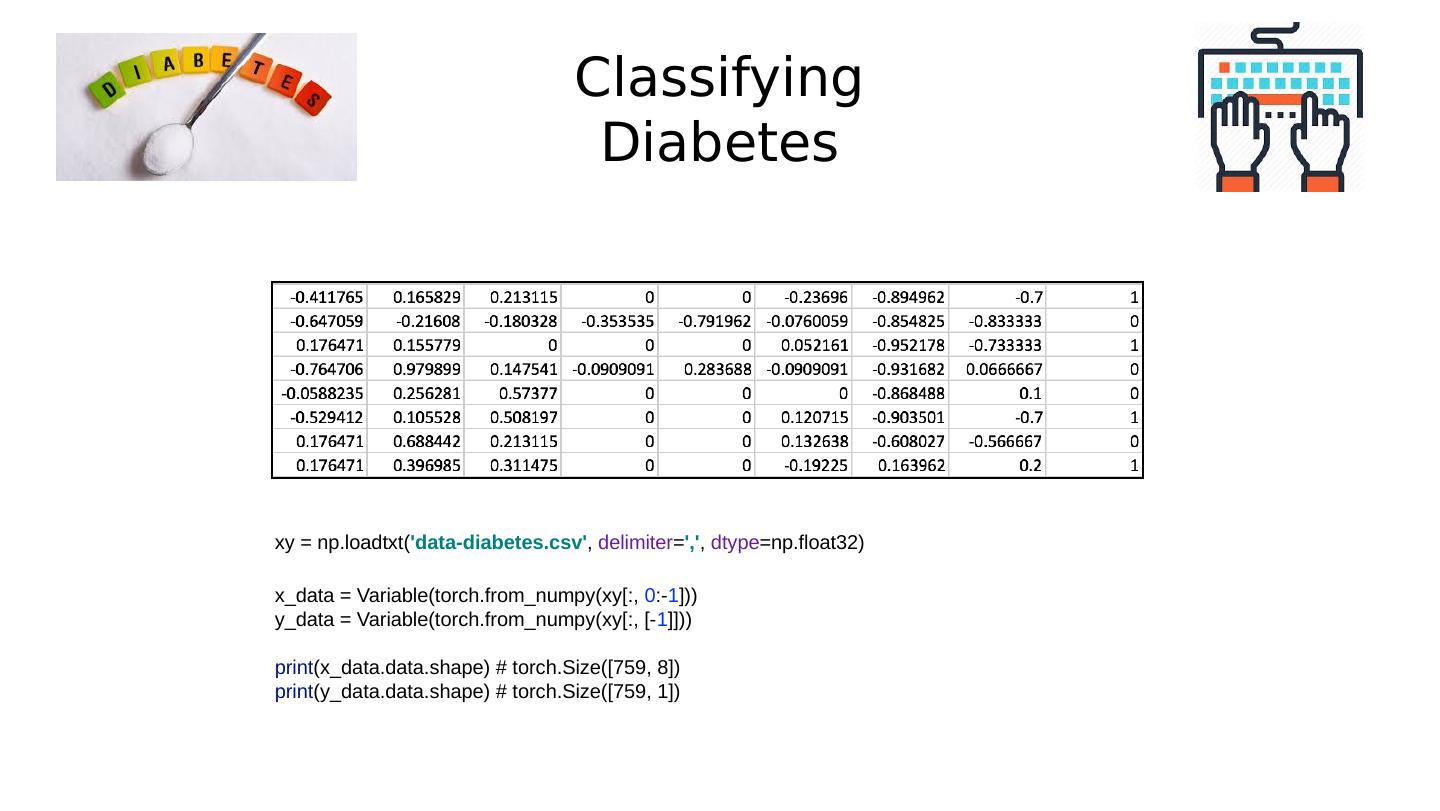

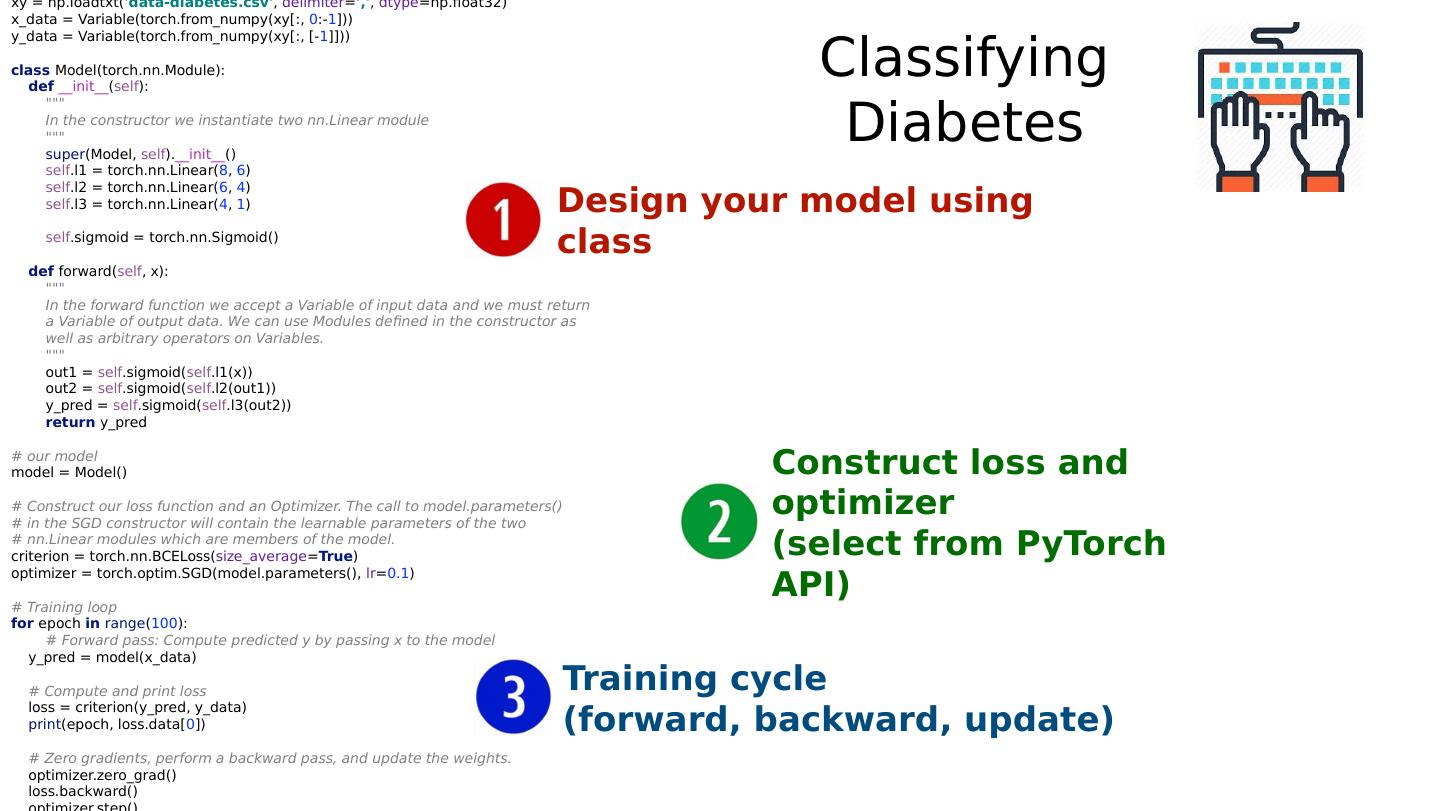

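9. Classifying Diabetes

```python
import numpy as np
import torch

# Every column but the last is a feature; the last column is the label.
# (torch.autograd.Variable wrappers are no longer needed: tensors support autograd directly.)
xy = np.loadtxt('data-diabetes.csv', delimiter=',', dtype=np.float32)
x_data = torch.from_numpy(xy[:, 0:-1])
y_data = torch.from_numpy(xy[:, [-1]])
print(x_data.shape)  # torch.Size([759, 8])
print(y_data.shape)  # torch.Size([759, 1])
```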
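The two slices on this slide behave differently: `xy[:, 0:-1]` keeps every column except the last, while `xy[:, [-1]]` indexes with a list, so the label stays a 2-D column of shape (N, 1) that lines up with a model output of shape (N, 1). A plain `xy[:, -1]` would collapse it to shape (N,). A minimal sketch, using a small made-up in-memory array in place of `data-diabetes.csv` (the values are placeholders, not real data):

```python
import numpy as np

# Hypothetical stand-in for data-diabetes.csv: 3 rows, 8 feature columns + 1 label column
xy = np.arange(27, dtype=np.float32).reshape(3, 9)

features = xy[:, 0:-1]   # every column except the last -> shape (3, 8)
label_2d = xy[:, [-1]]   # list index keeps a column axis -> shape (3, 1)
label_1d = xy[:, -1]     # scalar index drops that axis   -> shape (3,)

print(features.shape, label_2d.shape, label_1d.shape)
# (3, 8) (3, 1) (3,)
```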

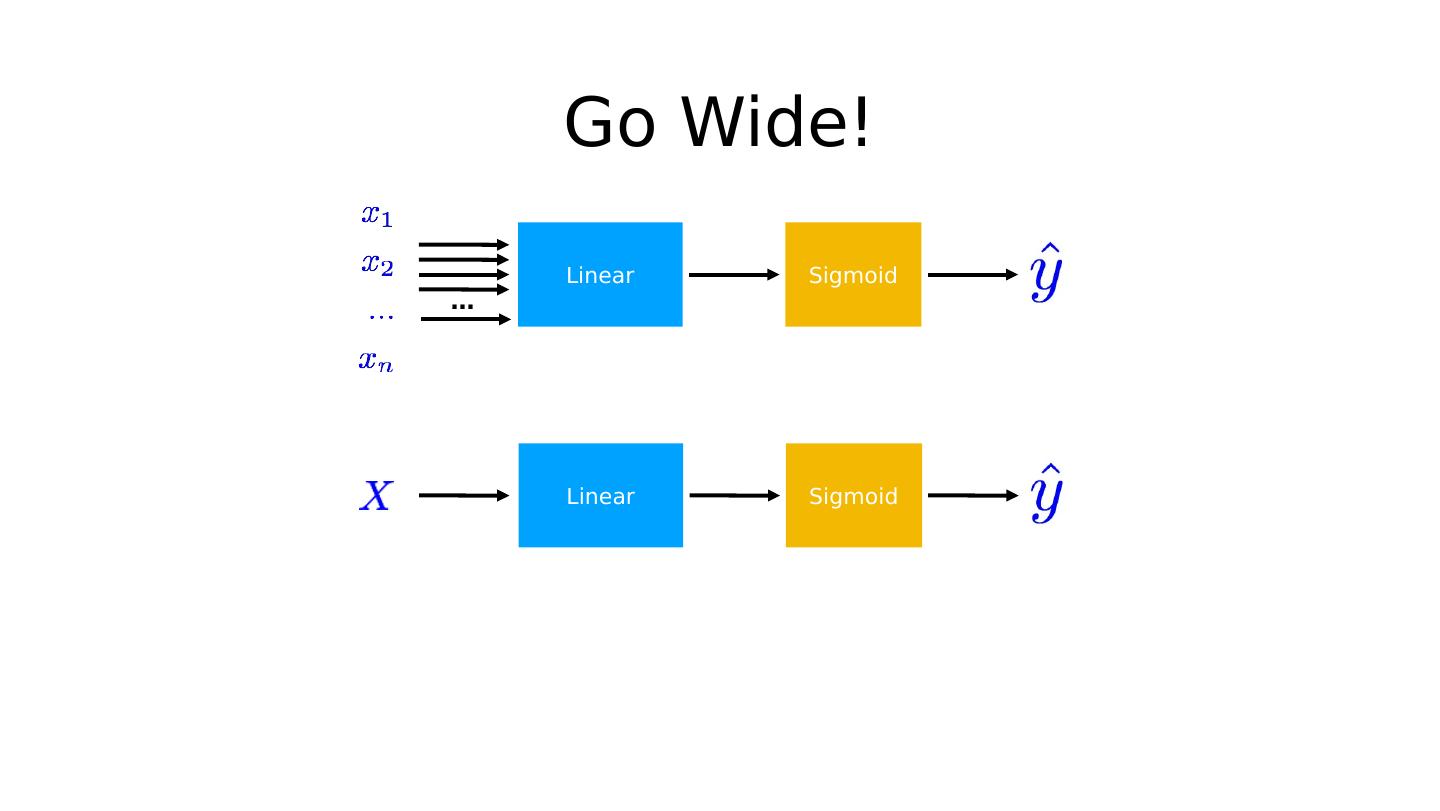

10. ML/DL for Everyone with Lecture 7: Wide & Deep

Sung Kim <hunkim+ml@gmail.com>, HKUST

- Code: https://github.com/hunkim/PyTorchZeroToAll
- Slides: http://bit.ly/PyTorchZeroAll
- Videos: http://bit.ly/PyTorchVideo

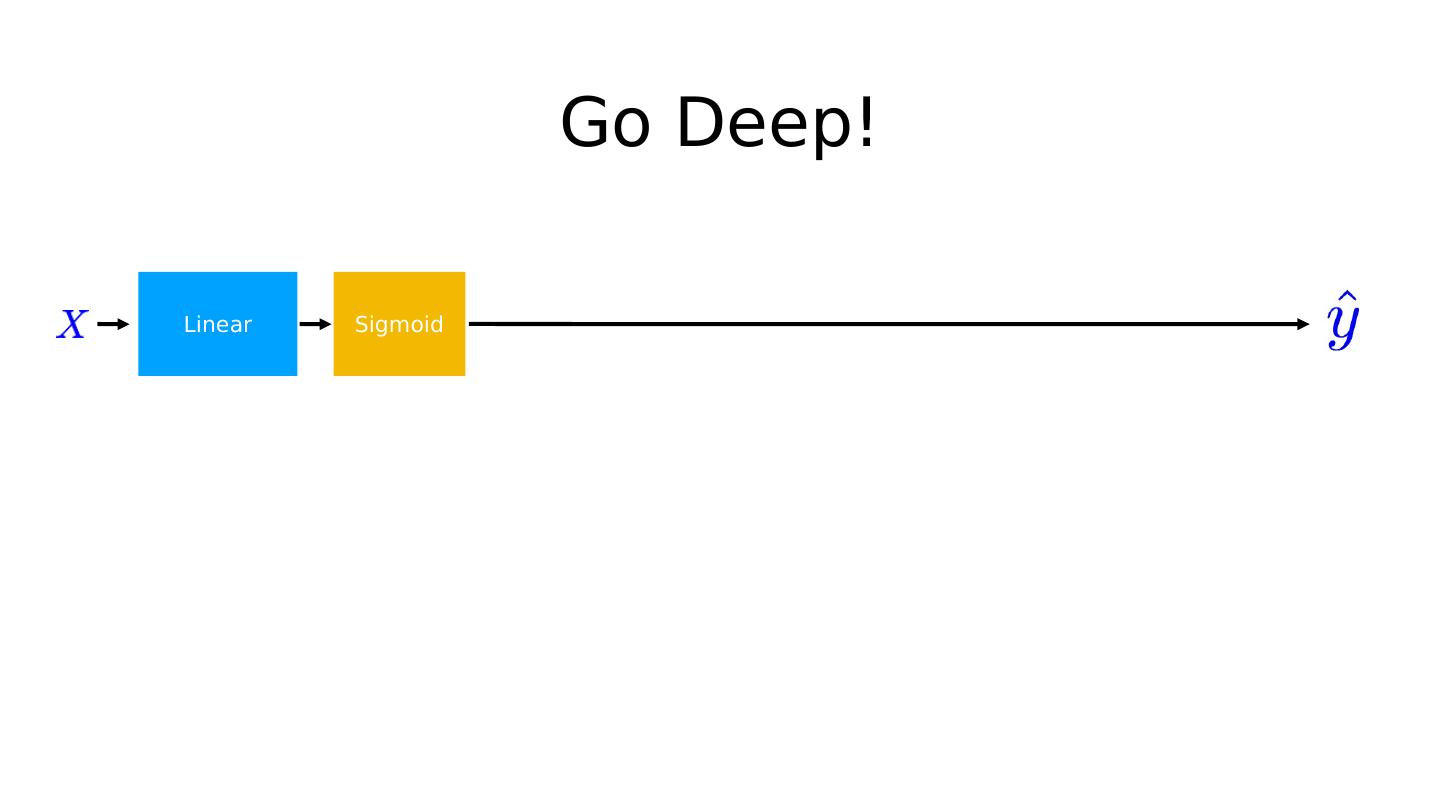

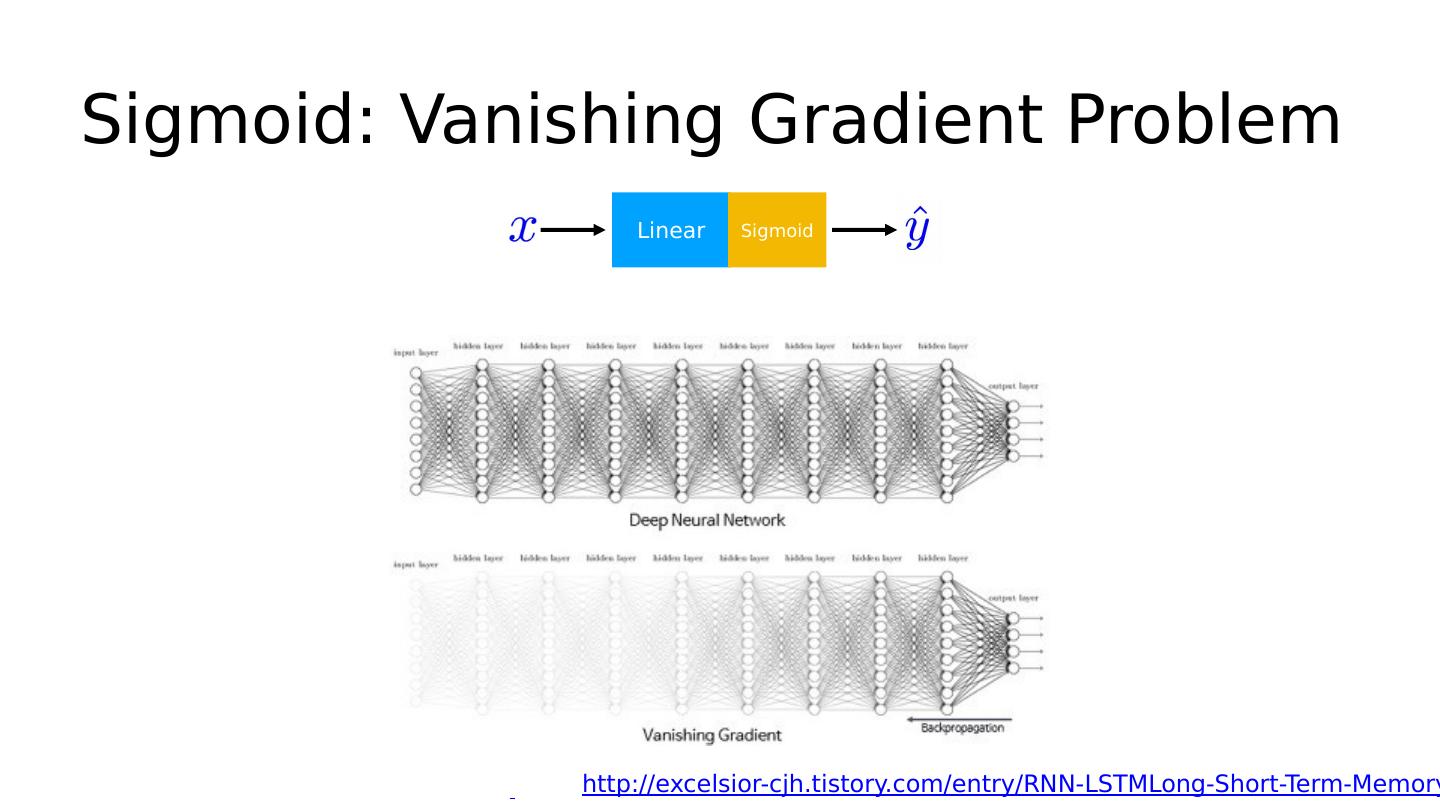

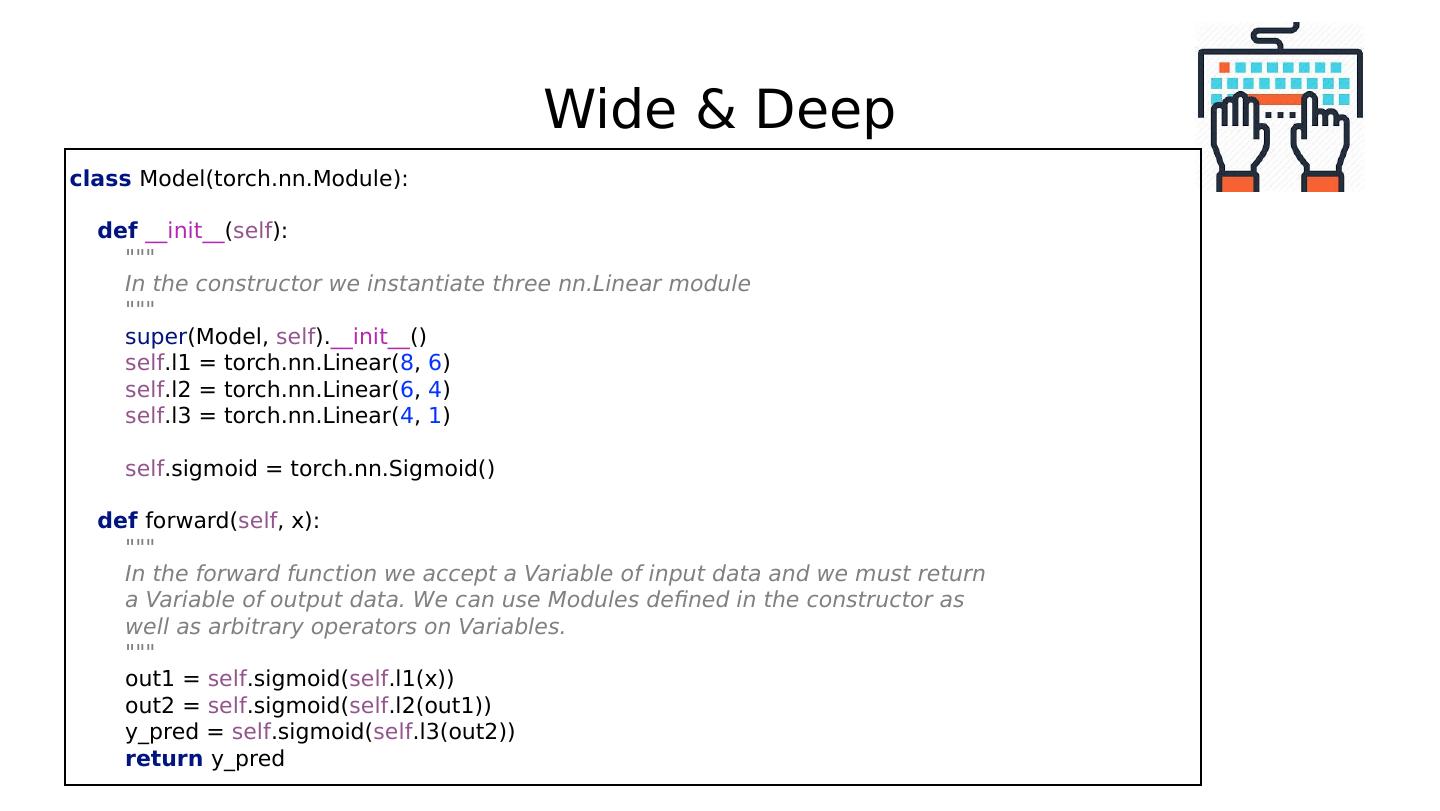

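11. Go Deep!

Linear → Sigmoid → Linear → Sigmoid → Linear → Sigmoid → …

```python
import torch

# Four samples with two features each (the toy data from the matrix multiplication slide)
x_data = torch.tensor([[2.1, 0.1], [4.2, 0.8], [3.1, 0.9], [3.3, 0.2]])

sigmoid = torch.nn.Sigmoid()
l1 = torch.nn.Linear(2, 4)  # 2 inputs -> 4 hidden units
l2 = torch.nn.Linear(4, 3)  # 4 hidden -> 3 hidden units
l3 = torch.nn.Linear(3, 1)  # 3 hidden -> 1 output

out1 = sigmoid(l1(x_data))
out2 = sigmoid(l2(out1))
y_pred = sigmoid(l3(out2))  # shape (4, 1), values between 0 and 1
```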
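Each `torch.nn.Linear(in, out)` computes `x @ W.T + b` with a weight matrix of shape `(out, in)`, and `torch.nn.Sigmoid` applies 1 / (1 + e^(-z)) elementwise. The sketch below mirrors the 2 → 4 → 3 → 1 stack in plain NumPy to show how the shapes flow from layer to layer. The random weights are an assumption standing in for PyTorch's own initialization, and `x_data` reuses the four 2-feature rows from the matrix multiplication slide.

```python
import numpy as np

rng = np.random.default_rng(0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def linear(x, w, b):
    # Same math as torch.nn.Linear: weight has shape (out_features, in_features)
    return x @ w.T + b

# Random weights (assumption; PyTorch initializes its layers differently)
w1, b1 = rng.normal(size=(4, 2)), np.zeros(4)
w2, b2 = rng.normal(size=(3, 4)), np.zeros(3)
w3, b3 = rng.normal(size=(1, 3)), np.zeros(1)

x_data = np.array([[2.1, 0.1], [4.2, 0.8], [3.1, 0.9], [3.3, 0.2]])

out1 = sigmoid(linear(x_data, w1, b1))   # (4, 2) -> (4, 4)
out2 = sigmoid(linear(out1, w2, b2))     # (4, 4) -> (4, 3)
y_pred = sigmoid(linear(out2, w3, b3))   # (4, 3) -> (4, 1)

print(out1.shape, out2.shape, y_pred.shape)
```

Only the batch dimension (4 rows) is carried through unchanged; each layer rewrites the feature dimension, so the `in` size of one layer must equal the `out` size of the previous one.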

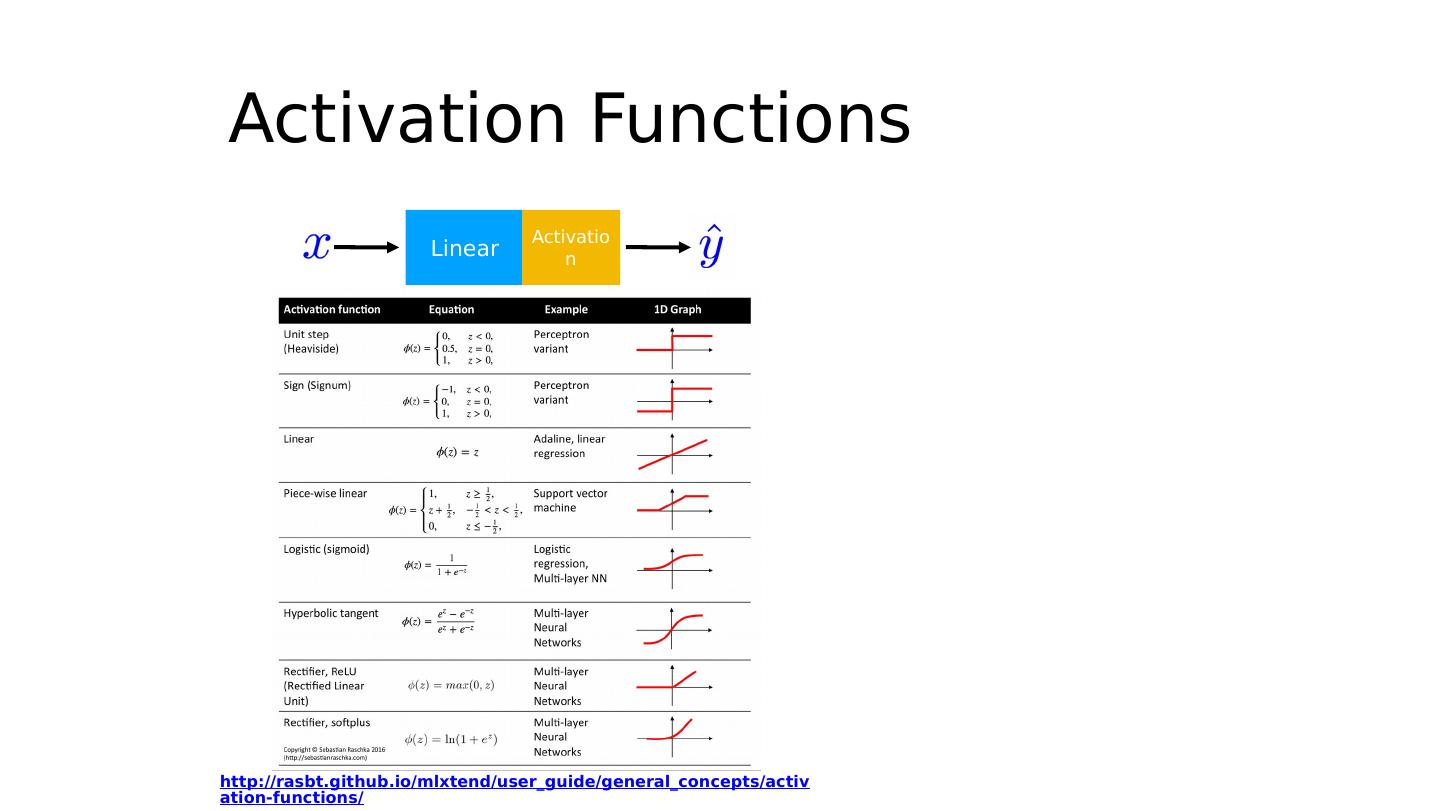

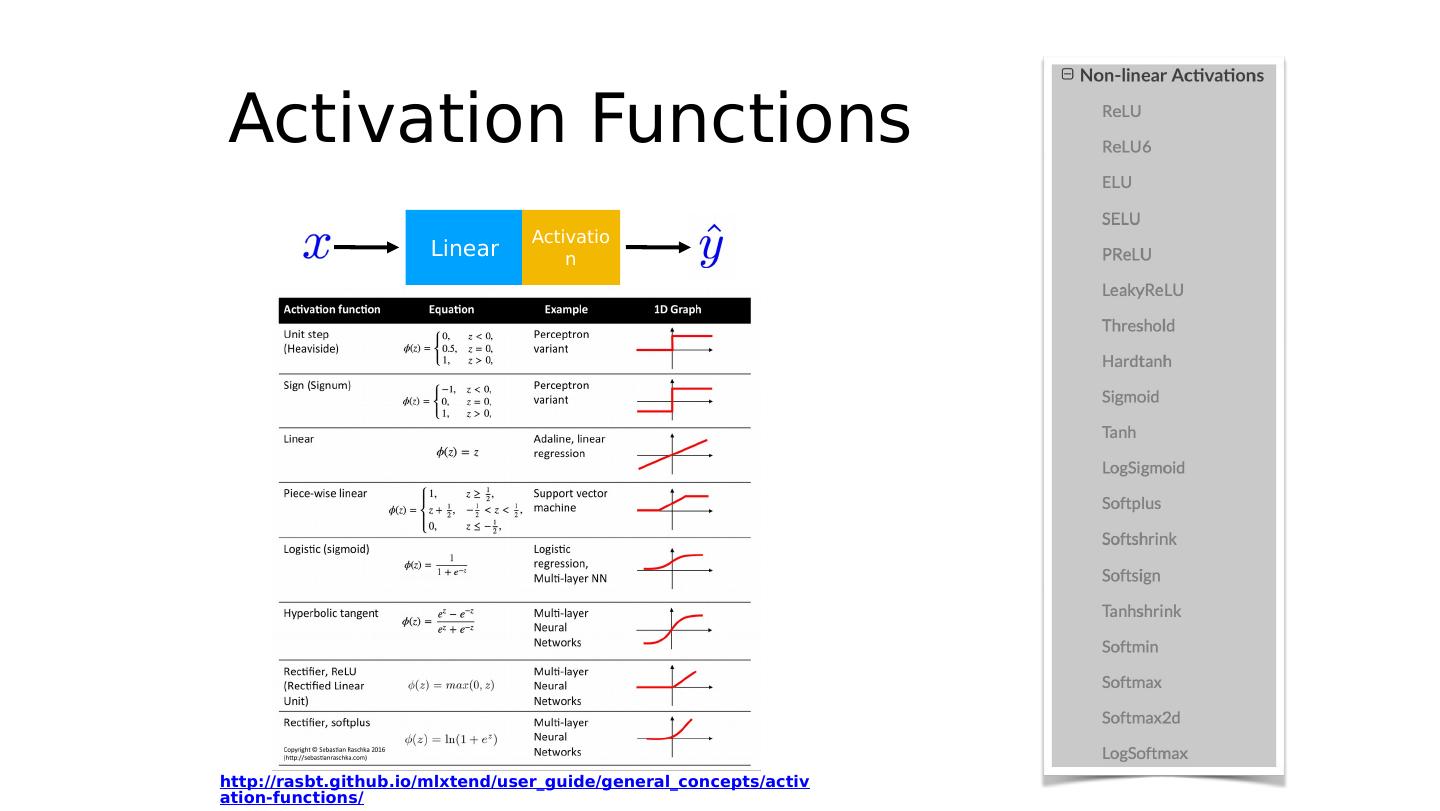

12. Activation Functions

Linear → Activation

Source: http://rasbt.github.io/mlxtend/user_guide/general_concepts/activation-functions/
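Each linear layer is followed by a nonlinear activation. A few common choices, written out in NumPy as a sketch (PyTorch provides these as `torch.nn.Sigmoid`, `torch.nn.Tanh`, `torch.nn.ReLU` and `torch.nn.LeakyReLU`; the 0.01 slope below matches the default `negative_slope` of `LeakyReLU`):

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))        # squashes to (0, 1)

def tanh(z):
    return np.tanh(z)                      # squashes to (-1, 1)

def relu(z):
    return np.maximum(z, 0.0)              # zero for negative inputs

def leaky_relu(z, slope=0.01):
    return np.where(z > 0, z, slope * z)   # small slope for negative inputs

z = np.array([-2.0, 0.0, 2.0])
for name, f in [("sigmoid", sigmoid), ("tanh", tanh), ("relu", relu), ("leaky_relu", leaky_relu)]:
    print(name, f(z))
```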

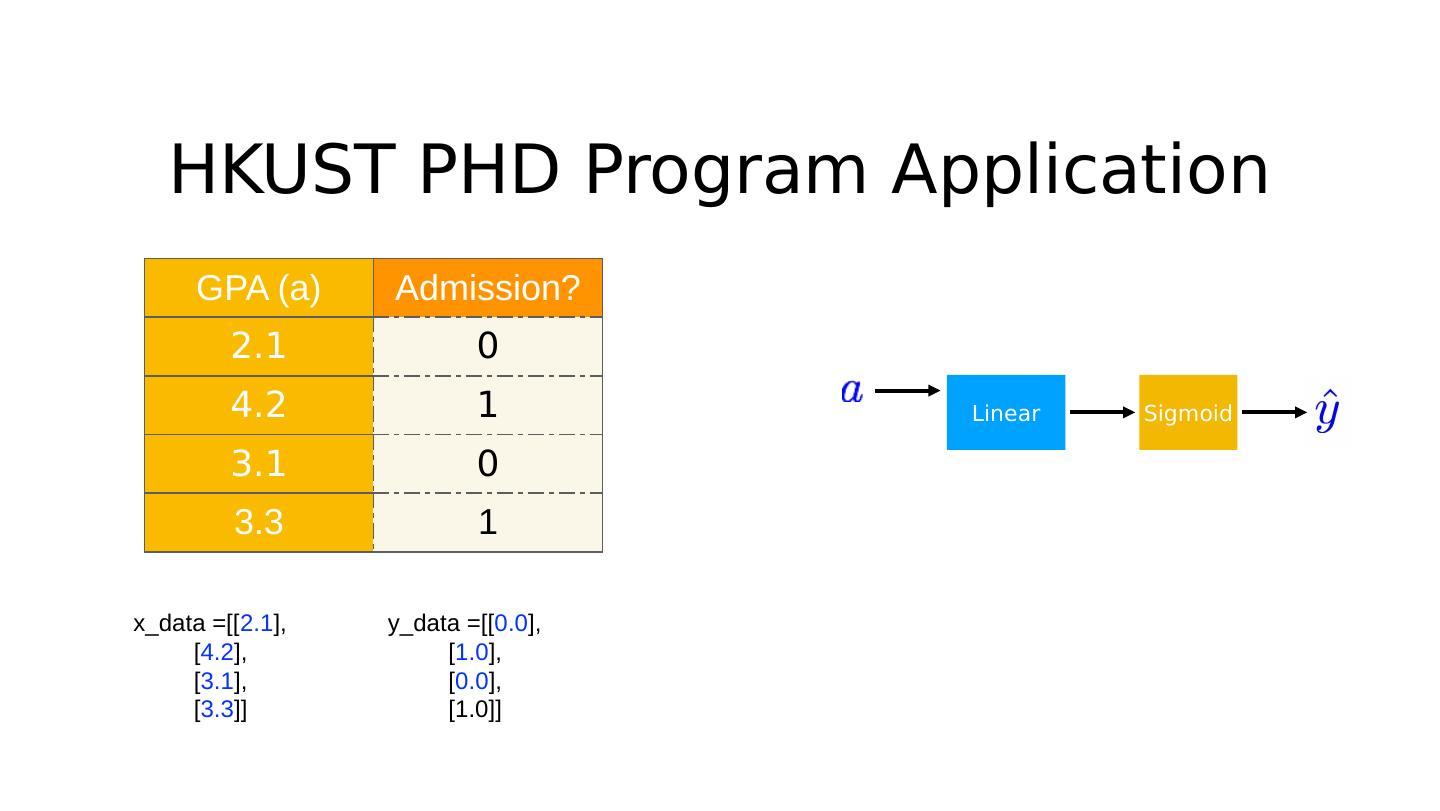

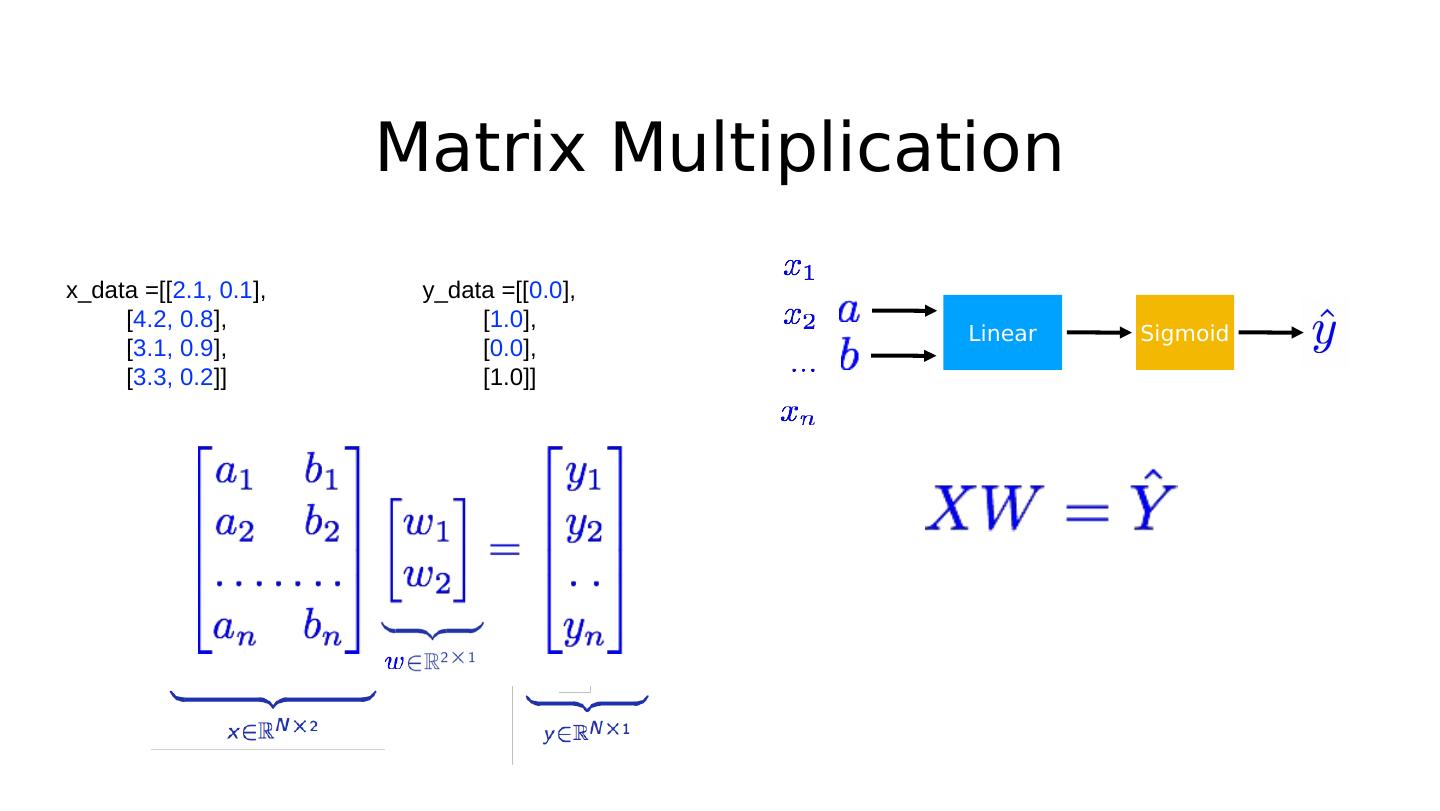

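13. Matrix Multiplication

Linear → Sigmoid

```python
import torch

x_data = torch.tensor([[2.1, 0.1], [4.2, 0.8], [3.1, 0.9], [3.3, 0.2]])  # 4 samples x 2 features
y_data = torch.tensor([[0.0], [1.0], [0.0], [1.0]])                      # 4 labels, shape (4, 1)

linear = torch.nn.Linear(2, 1)  # weight: (1, 2), bias: (1,)
y_pred = linear(x_data)         # (4, 2) x (2, 1) + bias -> shape (4, 1)
```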
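Passing all four rows to `linear` at once works because the layer is a single matrix multiplication over the batch: row *i* of the output is the dot product of row *i* of `x_data` with the weights, plus the bias. A minimal NumPy check, with hand-picked weights `w` and bias `b` chosen purely for illustration:

```python
import numpy as np

x_data = np.array([[2.1, 0.1], [4.2, 0.8], [3.1, 0.9], [3.3, 0.2]])  # (4, 2)

# Illustrative parameters in torch.nn.Linear(2, 1) layout: weight is (1, 2), bias is (1,)
w = np.array([[0.5, -1.0]])
b = np.array([0.1])

batched = x_data @ w.T + b                                   # one (4, 2) x (2, 1) product -> (4, 1)
per_row = np.array([[row @ w[0] + b[0]] for row in x_data])  # one dot product per sample

print(batched.ravel())
```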

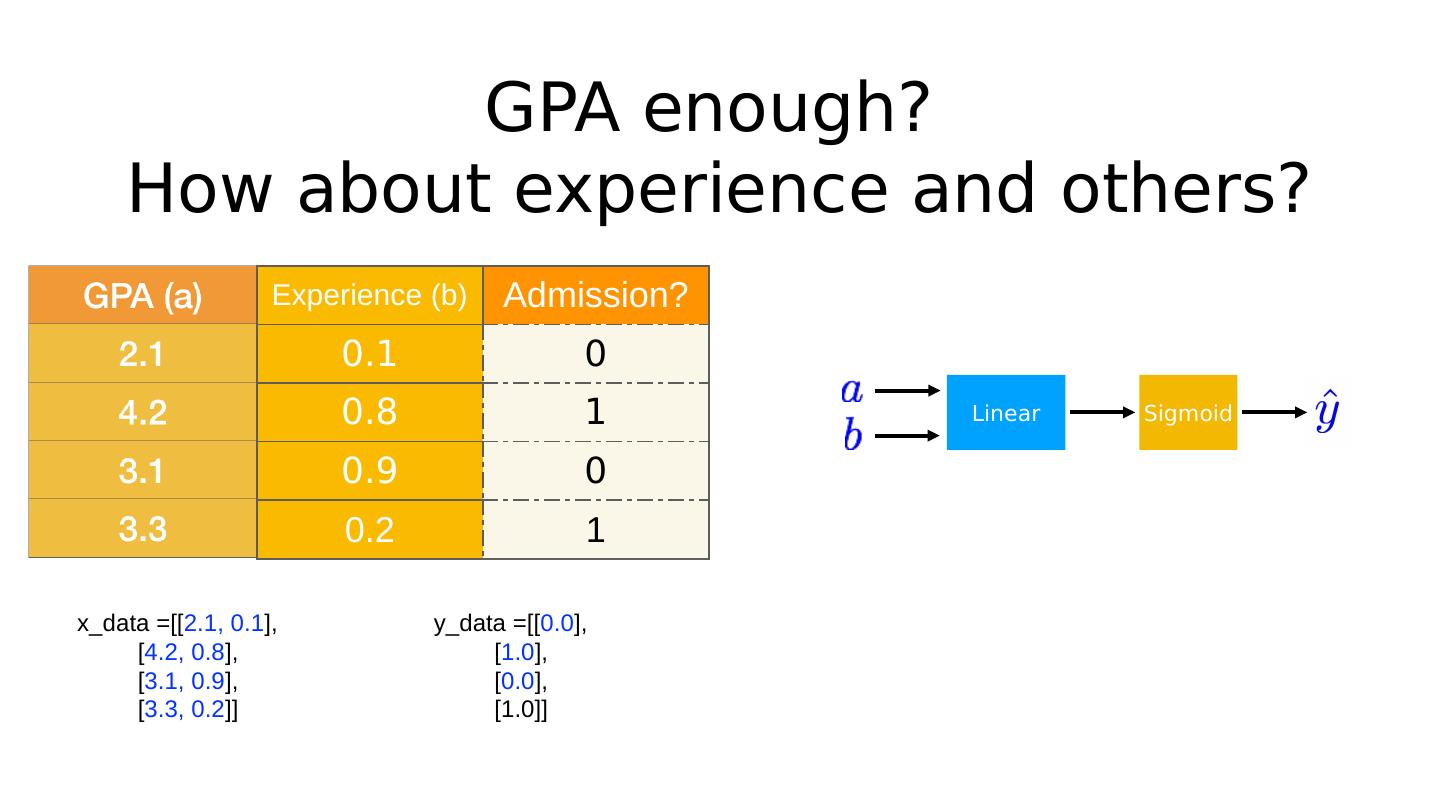

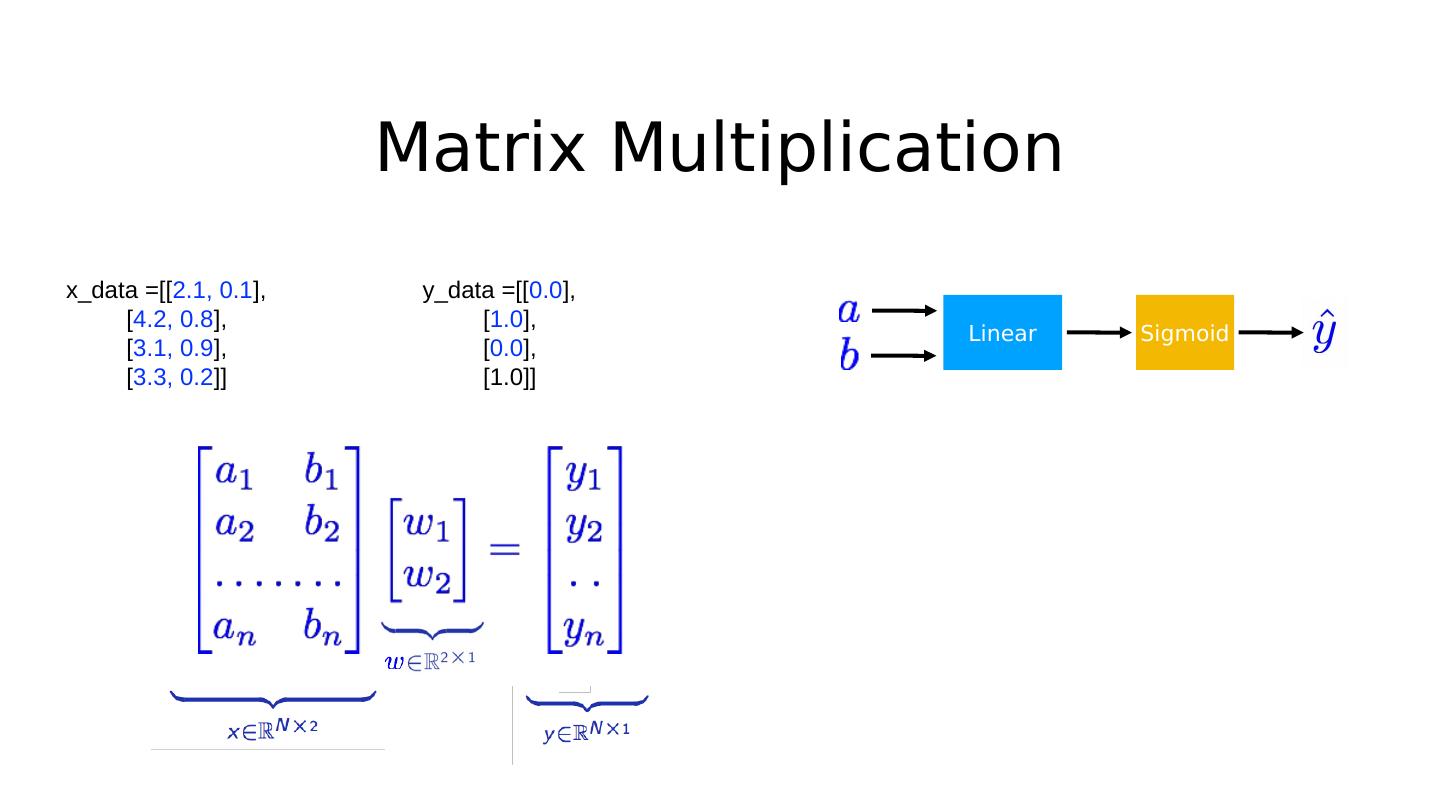

14. Matrix Multiplication

Linear → Sigmoid

```python
x_data = [[2.1, 0.1], [4.2, 0.8], [3.1, 0.9], [3.3, 0.2]]
y_data = [[0.0], [1.0], [0.0], [1.0]]
```
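Written out with the slide's data, a `torch.nn.Linear(2, 1)` layer followed by a sigmoid computes the product below. PyTorch stores the weight as a $1 \times 2$ matrix $W = [\,w_1 \;\; w_2\,]$ and applies $XW^\top + b$; the weight values $w_1, w_2$ and the bias $b$ are left symbolic. The shapes multiply as $(4 \times 2)(2 \times 1) = 4 \times 1$, one prediction per row of `y_data`.

$$
\hat{Y} = \sigma\left(X W^\top + b\right)
= \sigma\left(
\begin{bmatrix}
2.1 & 0.1 \\
4.2 & 0.8 \\
3.1 & 0.9 \\
3.3 & 0.2
\end{bmatrix}
\begin{bmatrix} w_1 \\ w_2 \end{bmatrix}
+ b
\right)
= \begin{bmatrix}
\sigma(2.1\,w_1 + 0.1\,w_2 + b) \\
\sigma(4.2\,w_1 + 0.8\,w_2 + b) \\
\sigma(3.1\,w_1 + 0.9\,w_2 + b) \\
\sigma(3.3\,w_1 + 0.2\,w_2 + b)
\end{bmatrix},
\qquad
\sigma(z) = \frac{1}{1 + e^{-z}}
$$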

15. Exercise 7-1: Classifying Diabetes with deep nets

- Use more than 10 layers
- Find other classification datasets and try them with a deep network
- Try different activation functions (replace Sigmoid with something else)
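One way to start on the exercise is to build the layer stack from a list of widths, so that depth and activation become parameters instead of hand-written lines. The sketch below is a NumPy forward pass only, assuming 8 input features as in the diabetes data, an arbitrary hidden width of 6, and hypothetical helper names `init_layers` and `forward`. In PyTorch the same idea would be a loop that appends `torch.nn.Linear` and activation modules to a `torch.nn.Sequential`.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def relu(z):
    return np.maximum(z, 0.0)

def init_layers(widths, seed=0):
    # Hypothetical helper: one (weight, bias) pair per consecutive pair of widths,
    # weight shaped (out, in) like torch.nn.Linear, scaled by 1/sqrt(in)
    rng = np.random.default_rng(seed)
    return [(rng.normal(scale=1.0 / np.sqrt(n_in), size=(n_out, n_in)), np.zeros(n_out))
            for n_in, n_out in zip(widths[:-1], widths[1:])]

def forward(x, layers, hidden_act=relu):
    # Hypothetical helper: hidden layers use the chosen activation,
    # the last layer keeps a sigmoid so the output stays between 0 and 1
    for w, b in layers[:-1]:
        x = hidden_act(x @ w.T + b)
    w, b = layers[-1]
    return sigmoid(x @ w.T + b)

# 8 inputs, 11 hidden layers of width 6, 1 output -> 12 linear layers in total
widths = [8] + [6] * 11 + [1]
layers = init_layers(widths)

x = np.random.default_rng(1).normal(size=(5, 8))  # 5 random placeholder samples
for act in (sigmoid, relu, np.tanh):
    print(act.__name__, forward(x, layers, hidden_act=act).shape)
```

Changing `widths` changes the depth, and passing a different `hidden_act` swaps the activation everywhere except the final sigmoid.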