
05_ Linear regression in PyTorch way



7 .Lecture 6: Logistic regression

8 .PyTorch forward/backward

```python
import torch
from torch.autograd import Variable

# Example data (assumed here; the deck defines x_data and y_data on a slide not shown)
x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]

w = Variable(torch.Tensor([1.0]), requires_grad=True)  # Any random value


# our model forward pass
def forward(x):
    return x * w


# Loss function
def loss(x, y):
    y_pred = forward(x)
    return (y_pred - y) * (y_pred - y)


# Training loop
for epoch in range(10):
    for x_val, y_val in zip(x_data, y_data):
        l = loss(x_val, y_val)
        l.backward()
        print("\tgrad: ", x_val, y_val, w.grad.data[0])
        w.data = w.data - 0.01 * w.grad.data

        # Manually zero the gradients after updating weights
        w.grad.data.zero_()

    print("progress:", epoch, l.data[0])
```
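As a sanity check on what `backward()` computes for this loss, the autograd result can be compared with the hand-derived gradient: for loss = (x·w − y)², the derivative with respect to w is 2x(x·w − y). The following is a minimal sketch; it uses `torch.tensor` rather than `Variable`, and the sample values `x = 1.0`, `y = 2.0` are assumptions chosen for illustration.

```python
import torch

# Illustrative values (assumed, not taken from the slides)
x, y = 1.0, 2.0
w = torch.tensor([1.0], requires_grad=True)

# Same squared-error loss as on the slide
loss = (x * w - y) ** 2
loss.backward()

autograd_grad = w.grad.item()
# Hand-derived gradient: d/dw (x*w - y)^2 = 2 * x * (x*w - y)
analytic_grad = 2 * x * (x * w.item() - y)

print(autograd_grad, analytic_grad)
```

Both numbers should agree, which is why the training loop can use `w.grad` directly in the update.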

9 .Model class in PyTorch way

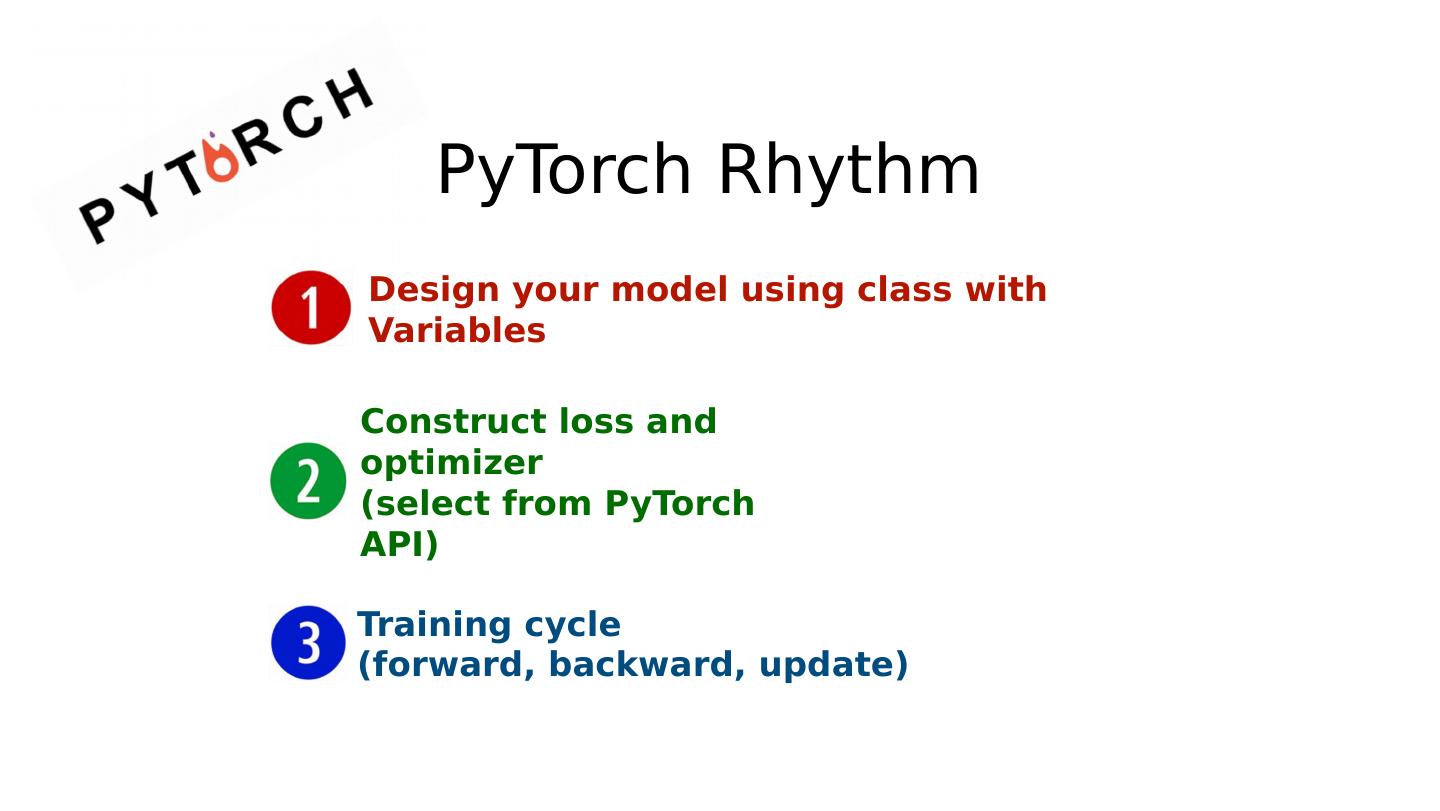

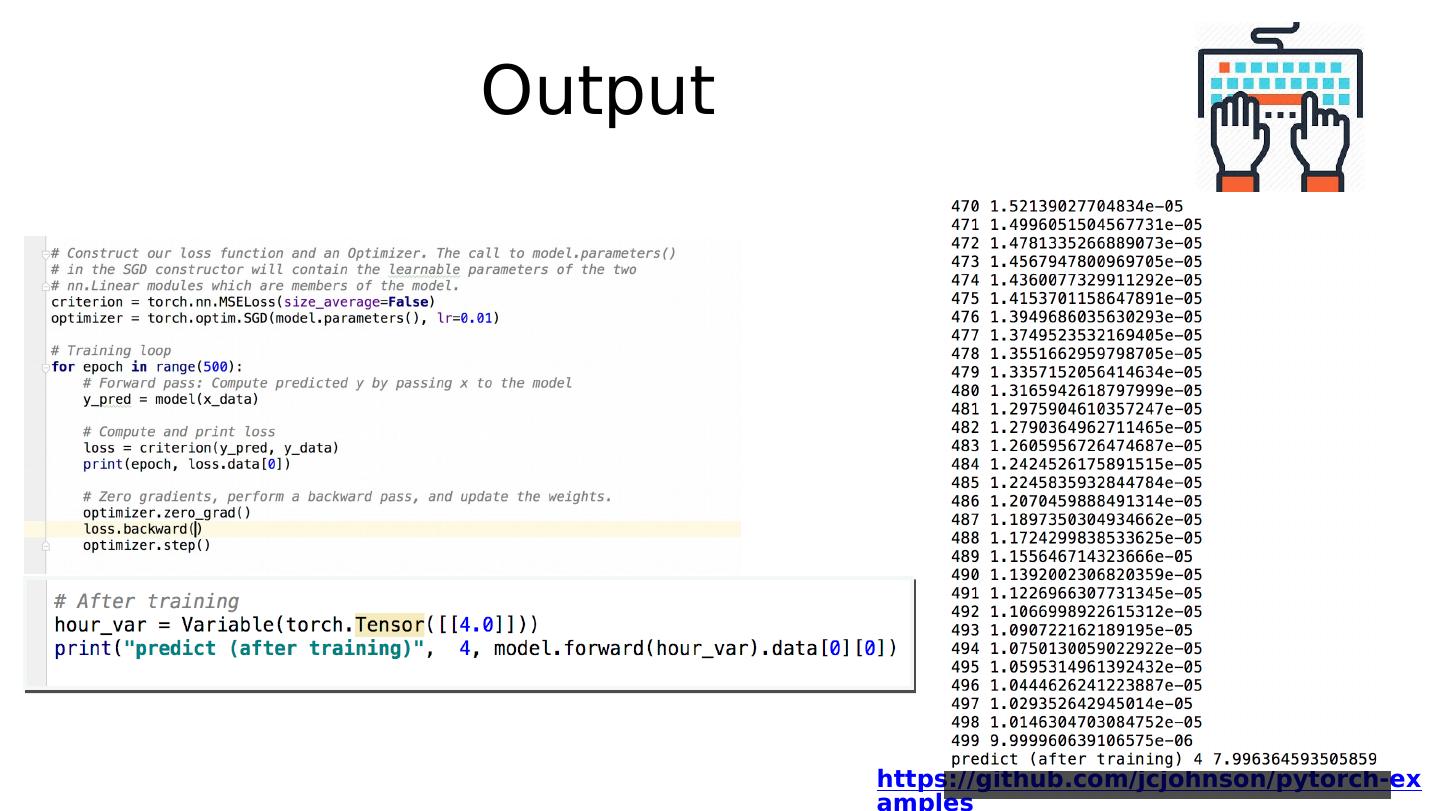
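The slide images are not recoverable here, so the following is only a sketch of what a model class for linear regression might look like when written as an `nn.Module` subclass. The class name `LinearModel` is hypothetical. Note that `nn.Linear(1, 1)` also learns a bias term, unlike the bare `x * w` model used earlier in the deck.

```python
import torch
from torch import nn


class LinearModel(nn.Module):  # hypothetical class name
    def __init__(self):
        super().__init__()
        # One input feature, one output: y = w * x + b
        self.linear = nn.Linear(1, 1)

    def forward(self, x):
        # Calling model(x) runs this method via nn.Module.__call__
        return self.linear(x)


model = LinearModel()
x = torch.tensor([[4.0]])  # a batch of one sample with one feature
y_pred = model(x)
print(y_pred.shape)
```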

10 .Training CIFAR10 Classifier

http://pytorch.org/tutorials/beginner/blitz/cifar10_tutorial.html

- Design your model using a class
- Construct loss and optimizer (select from PyTorch API)
- Training cycle (forward, backward, update)
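The three steps listed for the CIFAR10 classifier can be sketched as below. This is not the tutorial's code: the network `SmallNet`, its layer sizes, and the learning rate are hypothetical, and a random batch with CIFAR10's shape (3×32×32 images, 10 classes) stands in for the real dataset so that the example runs offline.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Stand-in batch with CIFAR10's shape (assumed random data, not the real dataset)
images = torch.randn(8, 3, 32, 32)
labels = torch.randint(0, 10, (8,))


# 1. Design your model using a class
class SmallNet(nn.Module):  # hypothetical name and layer sizes
    def __init__(self):
        super().__init__()
        self.conv = nn.Conv2d(3, 6, kernel_size=5)  # 32x32 -> 28x28
        self.pool = nn.MaxPool2d(2)                 # 28x28 -> 14x14
        self.fc = nn.Linear(6 * 14 * 14, 10)        # 10 class scores

    def forward(self, x):
        x = self.pool(torch.relu(self.conv(x)))
        return self.fc(x.flatten(start_dim=1))


model = SmallNet()

# 2. Construct loss and optimizer
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)

# 3. Training cycle: forward, backward, update
losses = []
for epoch in range(5):
    optimizer.zero_grad()              # clear gradients from the previous pass
    outputs = model(images)            # forward
    loss = criterion(outputs, labels)
    loss.backward()                    # backward
    optimizer.step()                   # update
    losses.append(loss.item())

print(losses)
```

With the real dataset, the inner body would typically run once per mini-batch drawn from a `DataLoader` rather than on one fixed batch.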

11 .https://github.com/jcjohnson/pytorch-examples

```python
for x_val, y_val in zip(x_data, y_data):
    ...
    w.data = w.data - 0.01 * w.grad.data
```

Training: forward, loss, backward, step
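The manual update `w.data = w.data - 0.01 * w.grad.data` is the "step" stage of this cycle. A minimal sketch of the same loop with `torch.optim.SGD` performing the step is shown below; the data values (following y = 2x) and the number of epochs are assumptions for illustration.

```python
import torch

# Illustrative data following y = 2x (assumed, not taken from the slides)
x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]

w = torch.tensor([1.0], requires_grad=True)
optimizer = torch.optim.SGD([w], lr=0.01)

for epoch in range(100):
    for x_val, y_val in zip(x_data, y_data):
        y_pred = x_val * w            # forward
        loss = (y_pred - y_val) ** 2  # loss
        optimizer.zero_grad()         # clear gradients left from the previous step
        loss.backward()               # backward
        optimizer.step()              # step: w <- w - lr * w.grad

print(w.item())
```

Plain SGD without momentum applies the same rule as the manual update, so `w` should approach 2.0 on this data.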
