03_ Gradient Descent



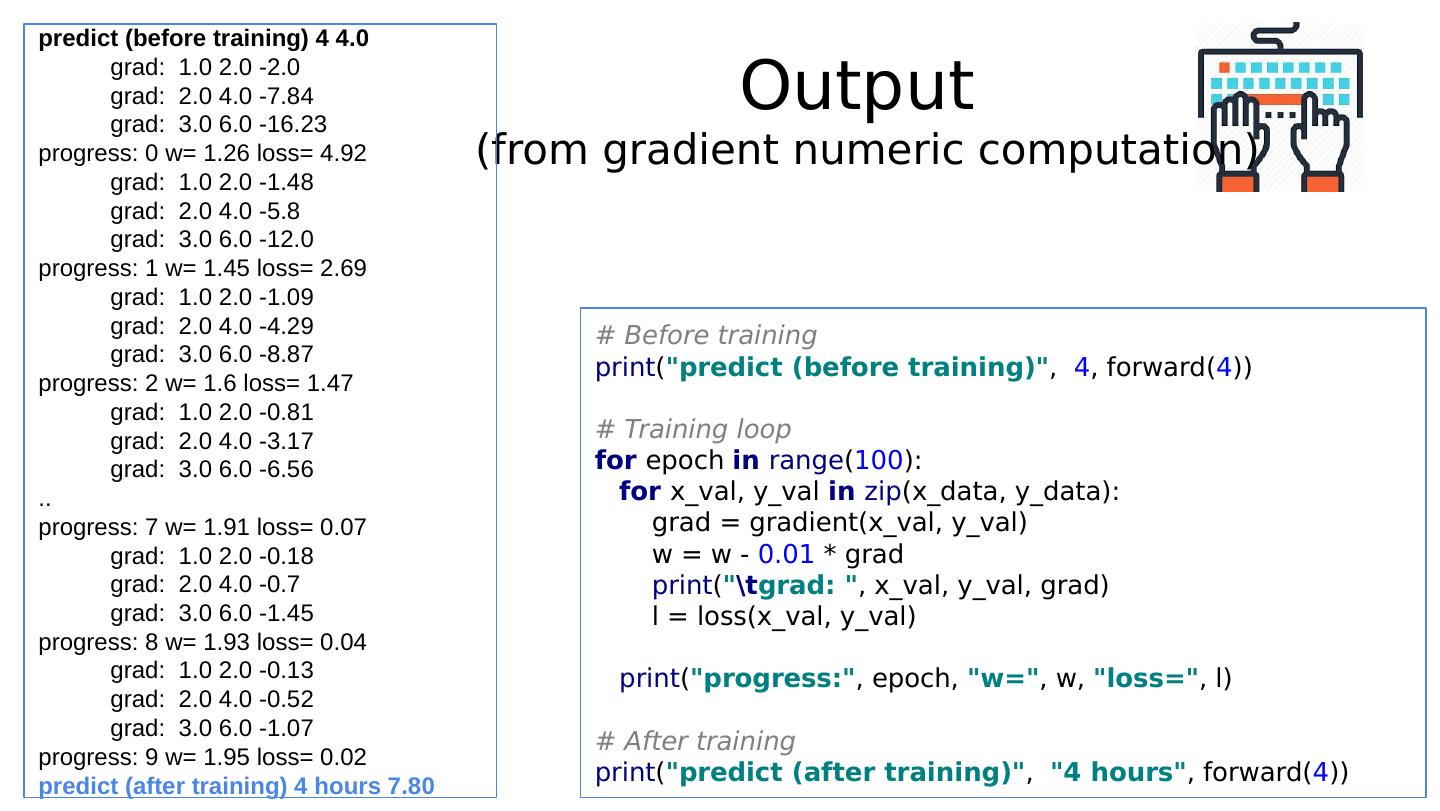


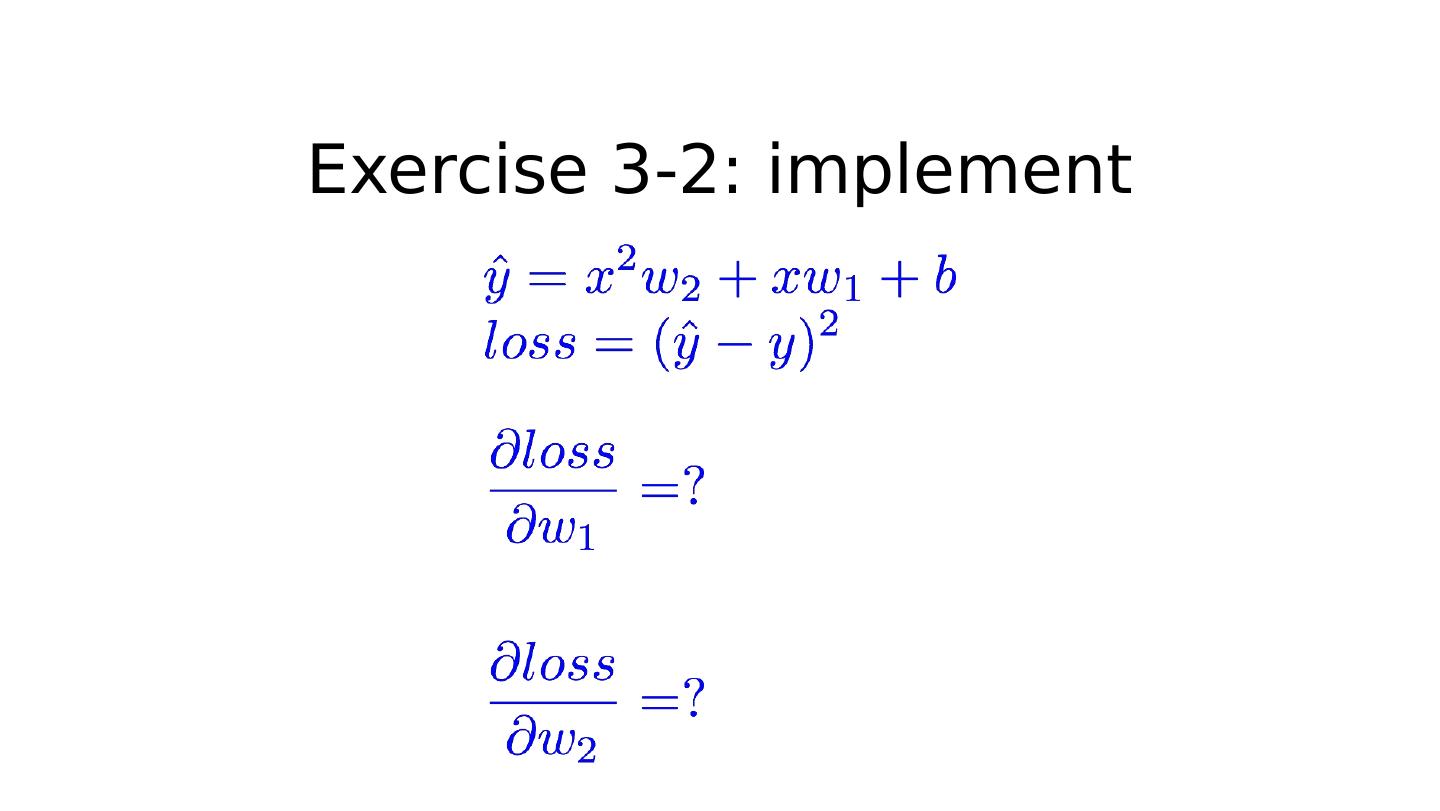

8 .Exercise 3-2: implement

9 .ML/DL for Everyone with

Lecture 3: Gradient Descent

Sung Kim <hunkim+ml@gmail.com>, HKUST

- Code: https://github.com/hunkim/PyTorchZeroToAll
- Slides: http://bit.ly/PyTorchZeroAll
- Videos: http://bit.ly/PyTorchVideo

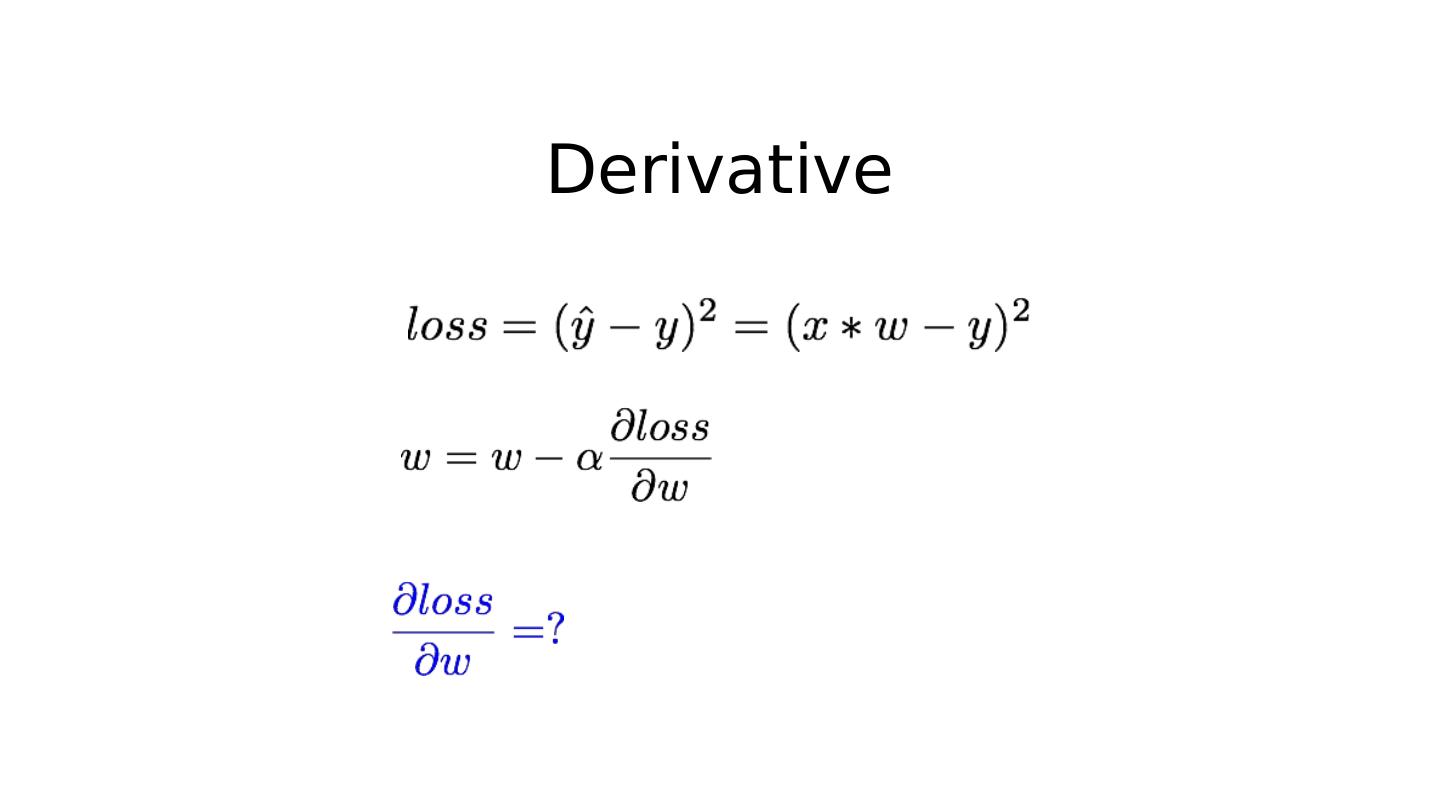

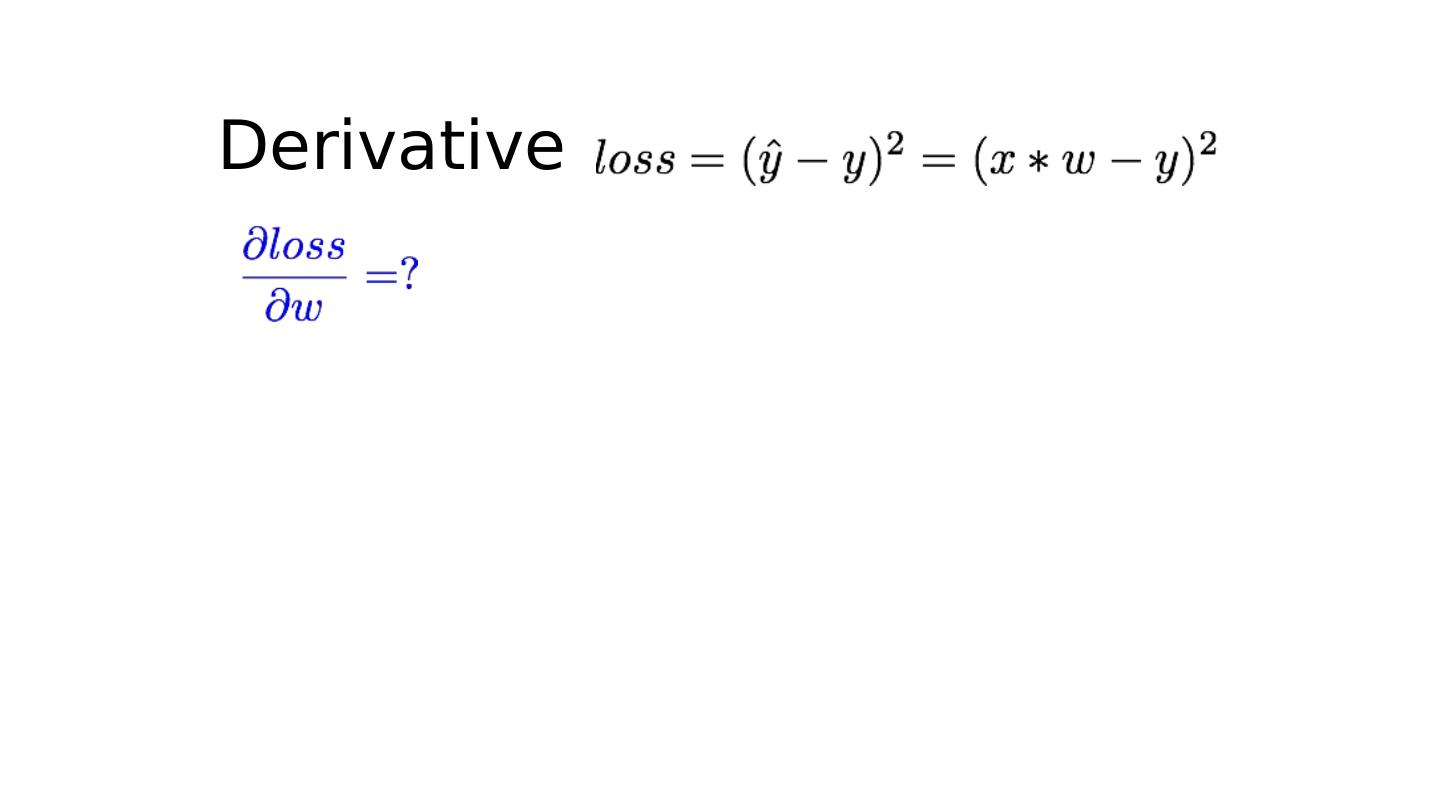

10 .Derivative https://www.derivative-calculator.net/

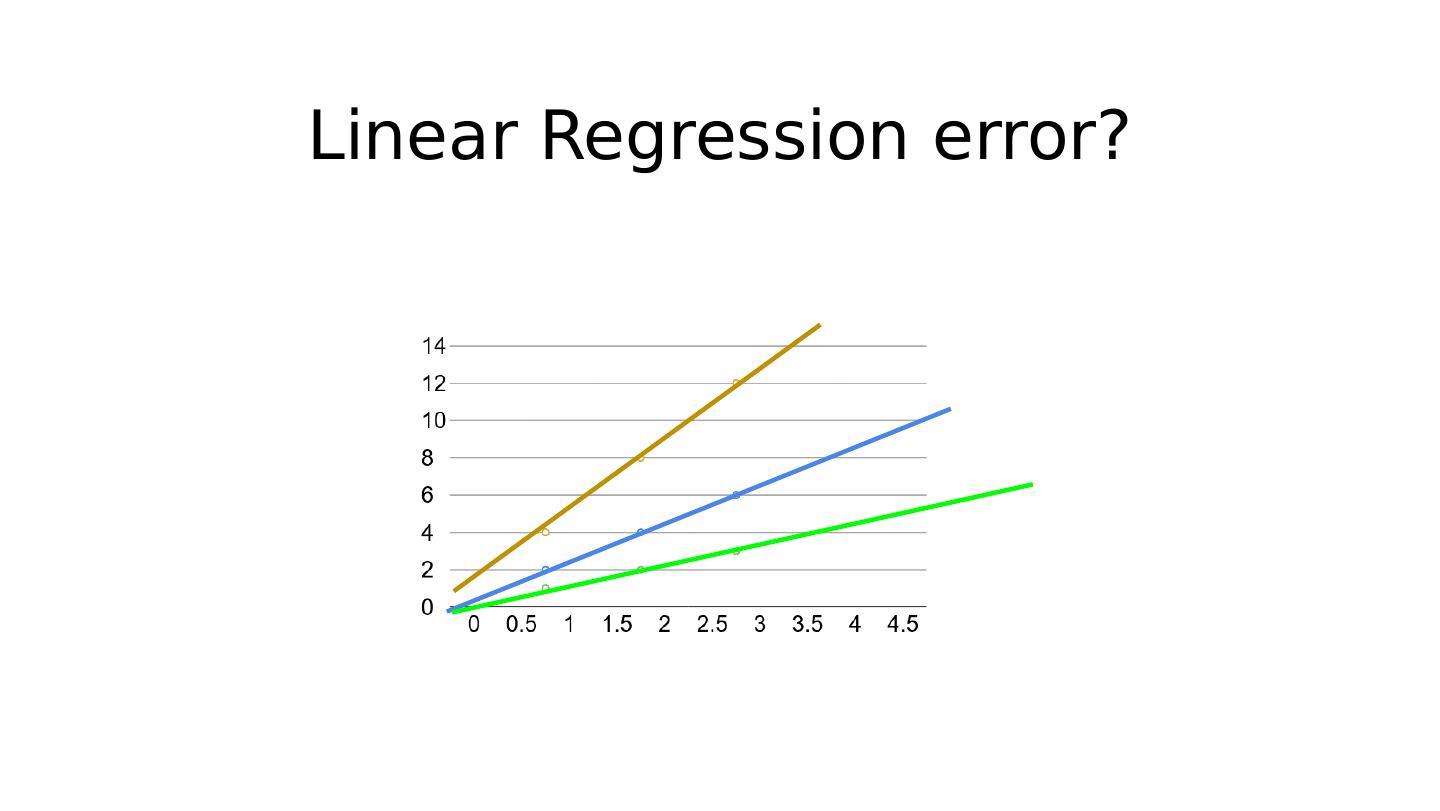

11 .What is learning? Finding the w that minimizes the loss

| w | Mean loss |
|---|-----------|
| 0 | 56/3 = 18.7 |
| 1 | 14/3 = 4.7 |
| 2 | 0 |
| 3 | 14/3 = 4.7 |
| 4 | 56/3 = 18.7 |
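The mean losses on this slide can be reproduced with a few lines of Python. This is a minimal sketch, assuming the three training points (1, 2), (2, 4), (3, 6) and the model `y_pred = x * w` from the lecture's training code; the helper name `mean_loss` is introduced here for illustration only.

```python
# Training data assumed from the lecture's training example.
x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]


def mean_loss(w):
    """Mean squared error of the model y_pred = x * w over the dataset."""
    squared_errors = [(x * w - y) ** 2 for x, y in zip(x_data, y_data)]
    return sum(squared_errors) / len(squared_errors)


# Evaluate the loss at the five candidate weights shown on the slide.
losses = {w: mean_loss(w) for w in [0.0, 1.0, 2.0, 3.0, 4.0]}
for w, value in losses.items():
    print(f"w={w}: mean loss={value:.1f}")

# The weight with the smallest mean loss is the one learning should find.
best_w = min(losses, key=losses.get)
print("best w:", best_w)
```

Scanning a grid of weights like this only works for a single parameter; gradient descent replaces the scan with a step-by-step update that follows the slope of the loss.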

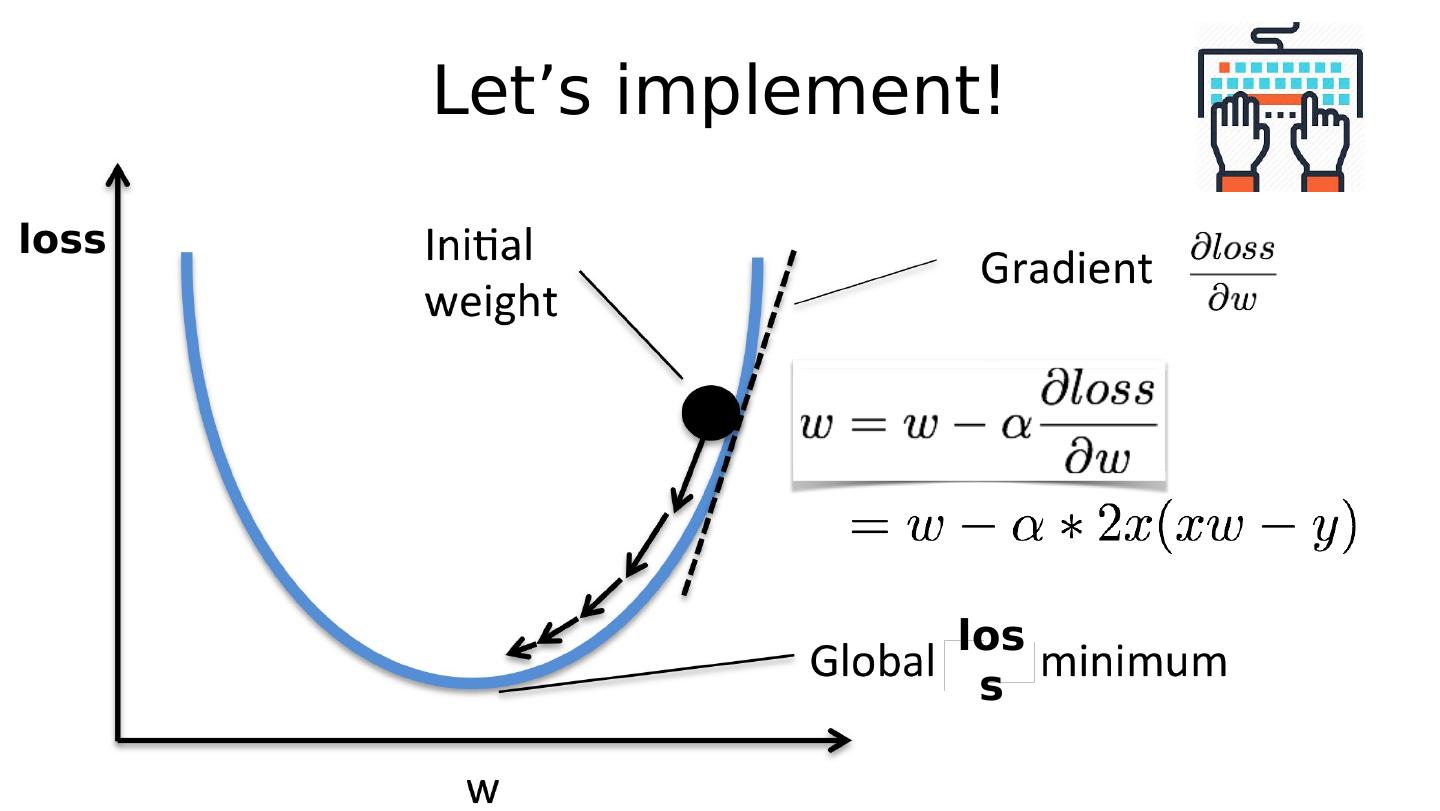

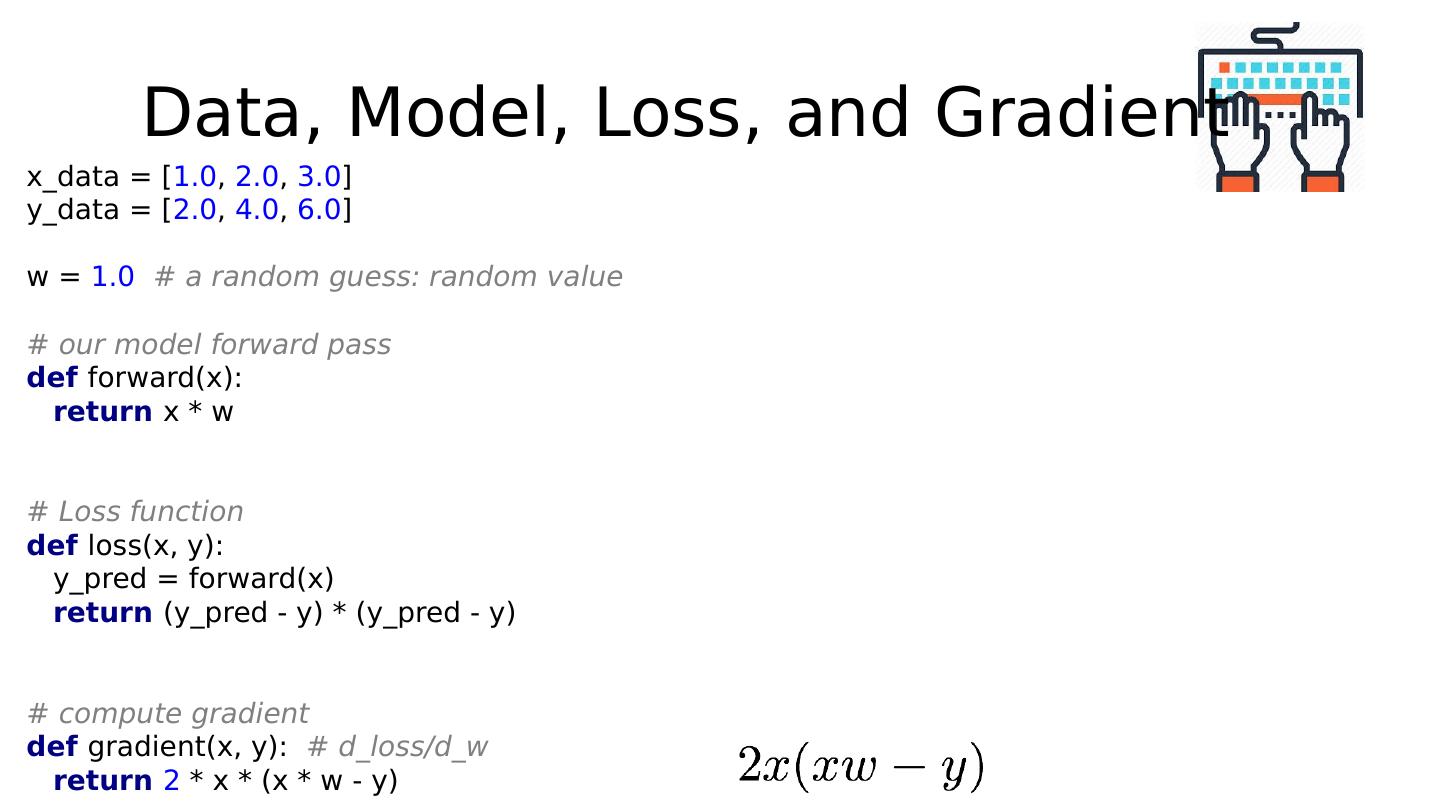

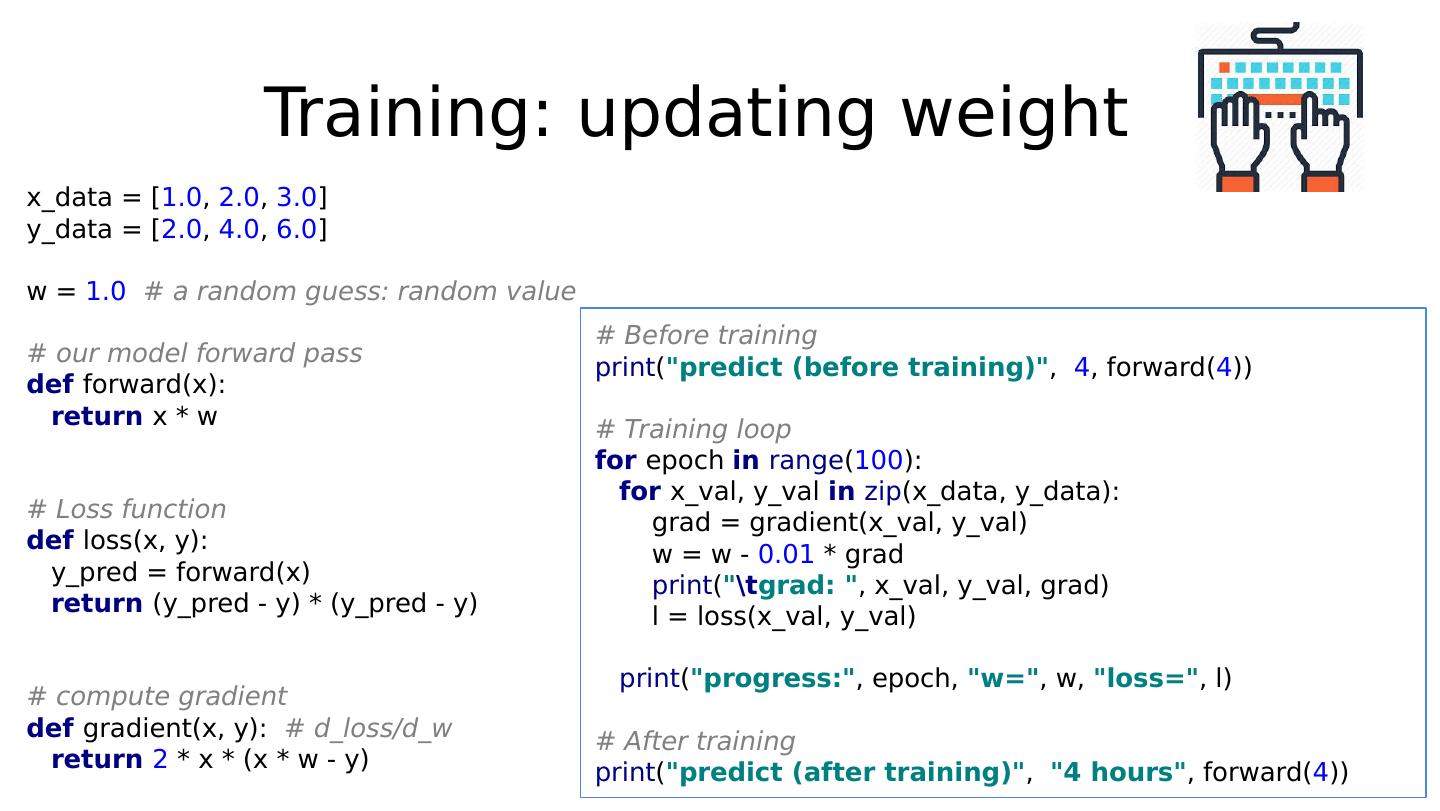

12 .Derivative
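As a worked sketch of the derivative this lecture relies on, take the per-sample squared-error loss of the model $\hat{y} = x w$ and apply the chain rule. The symbol $\alpha$ for the learning rate is notation introduced here; the value 0.01 is the one used in the lecture's training code.

$$
\text{loss}(w) = (x w - y)^2
$$

$$
\frac{\partial\, \text{loss}}{\partial w} = 2\,(x w - y) \cdot \frac{\partial (x w - y)}{\partial w} = 2x\,(x w - y)
$$

$$
w \leftarrow w - \alpha \, \frac{\partial\, \text{loss}}{\partial w}
$$

For example, with $x = 1$, $y = 2$, $w = 1$, and $\alpha = 0.01$, the gradient is $2 \cdot 1 \cdot (1 \cdot 1 - 2) = -2$, so the update gives $w = 1 - 0.01 \cdot (-2) = 1.02$, a small step toward the minimizer $w = 2$.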

13 .Training: updating weight

```python
x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]

w = 1.0  # a random guess: random value


# our model forward pass
def forward(x):
    return x * w


# Loss function
def loss(x, y):
    y_pred = forward(x)
    return (y_pred - y) * (y_pred - y)


# compute gradient
def gradient(x, y):  # d_loss/d_w
    return 2 * x * (x * w - y)


# Before training
print("predict (before training)", 4, forward(4))

# Training loop
for epoch in range(100):
    for x_val, y_val in zip(x_data, y_data):
        grad = gradient(x_val, y_val)
        w = w - 0.01 * grad
        print(" grad: ", x_val, y_val, grad)
        l = loss(x_val, y_val)

    print("progress:", epoch, "w=", w, "loss=", l)

# After training
print("predict (after training)", "4 hours", forward(4))
```
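One way to gain confidence in a hand-written gradient such as `2 * x * (x * w - y)` is to compare it with a finite-difference estimate. The sketch below is not part of the lecture code: it passes `w` explicitly instead of using a global, and the names `numerical_gradient`, `eps`, and `max_error` are hypothetical helpers introduced for illustration.

```python
def loss(x, y, w):
    """Squared error of the model y_pred = x * w for one sample."""
    return (x * w - y) ** 2


def gradient(x, y, w):
    """Analytic derivative of the loss with respect to w."""
    return 2 * x * (x * w - y)


def numerical_gradient(x, y, w, eps=1e-6):
    """Central finite-difference estimate of d_loss/d_w (illustrative helper)."""
    return (loss(x, y, w + eps) - loss(x, y, w - eps)) / (2 * eps)


x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]
w = 1.0  # same starting guess as the training example

# Track the largest disagreement between the two gradients.
max_error = 0.0
for x_val, y_val in zip(x_data, y_data):
    analytic = gradient(x_val, y_val, w)
    numeric = numerical_gradient(x_val, y_val, w)
    max_error = max(max_error, abs(analytic - numeric))
    print("x:", x_val, "analytic:", analytic, "numeric:", numeric)

print("largest difference:", max_error)
```

If the two values disagree by more than a small tolerance, the analytic formula (or its implementation) is likely wrong. The same check carries over to other loss functions by swapping out `loss` and `gradient`.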