
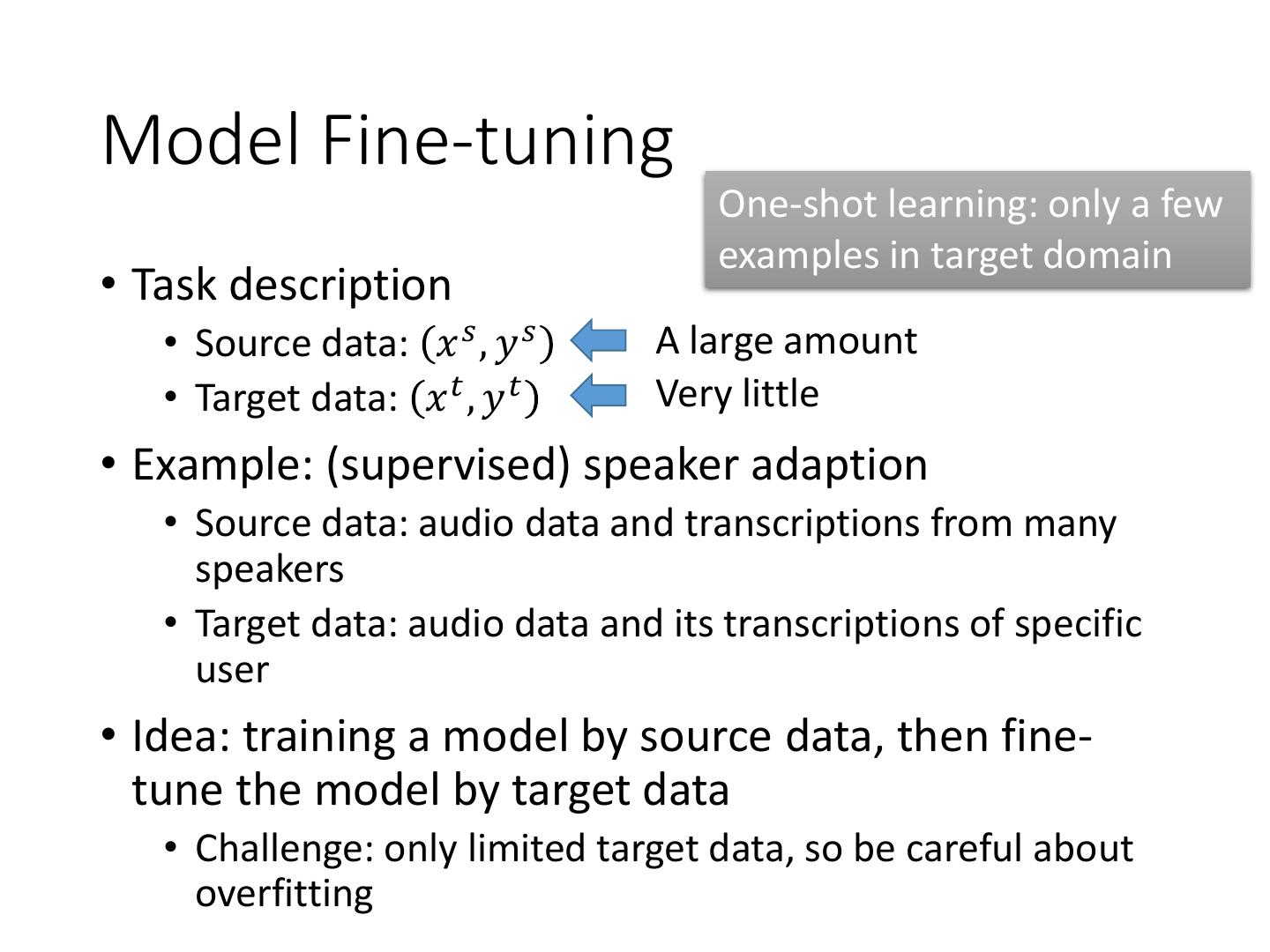

Transfer Learning


1. Transfer Learning

2. Transfer Learning

Task considered: a dog/cat classifier (cat, dog). Data not directly related to the task considered:
- Elephant and tiger images: similar domain, different tasks
- Dog and cat images from a different domain (e.g. cartoons): different domains, same task

Image sources: http://weebly110810.weebly.com/396403913129399.html, http://www.sucaitianxia.com/png/cartoon/200811/4261.html

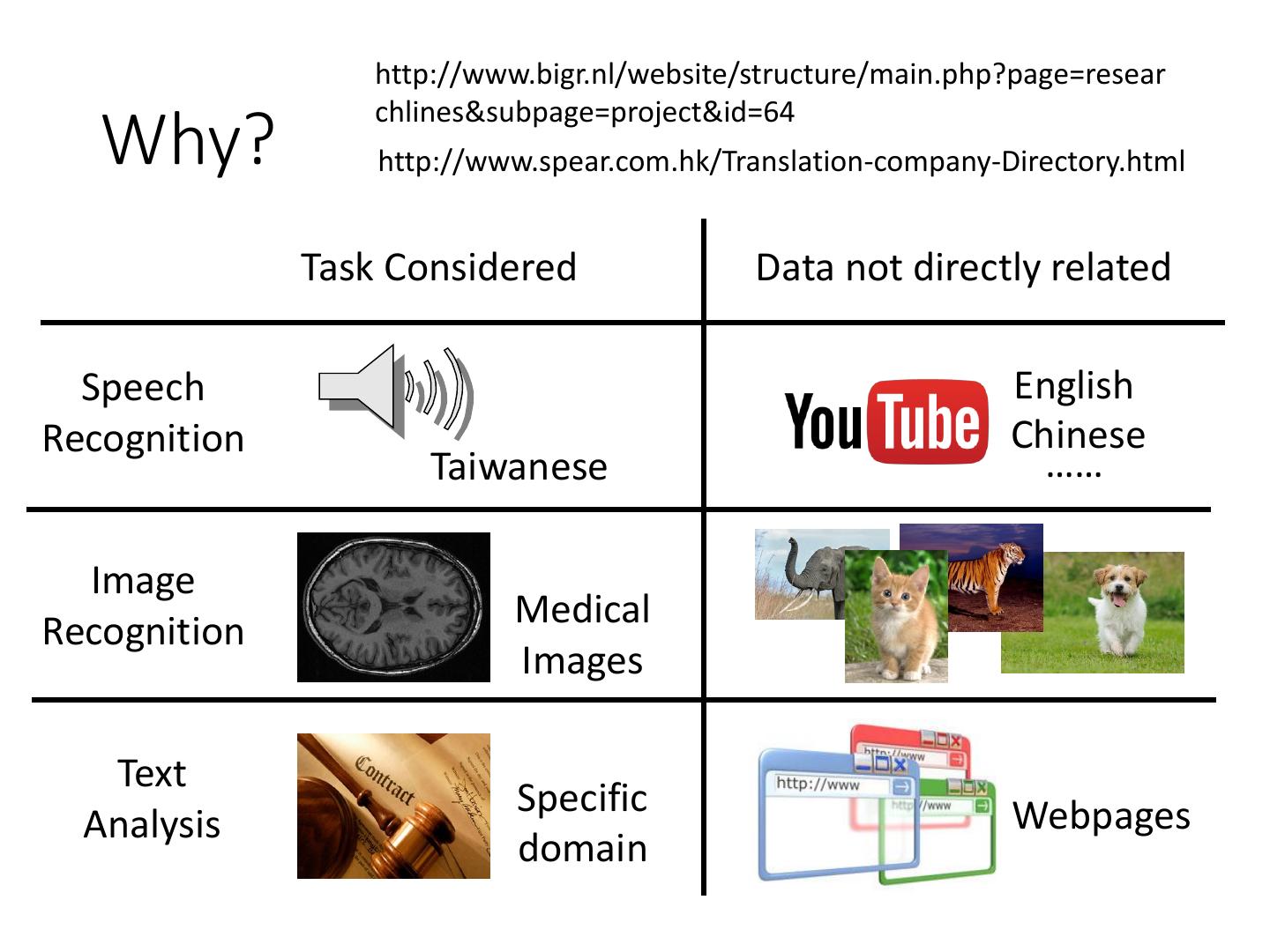

3. Why?

| Task considered | Data not directly related |
|---|---|
| Speech recognition (Taiwanese) | English, Chinese, …… |
| Image recognition (medical images) | (pictures on the slide) |
| Text analysis (specific domain) | Webpages |

Image sources: http://www.bigr.nl/website/structure/main.php?page=researchlines&subpage=project&id=64, http://www.spear.com.hk/Translation-company-Directory.html

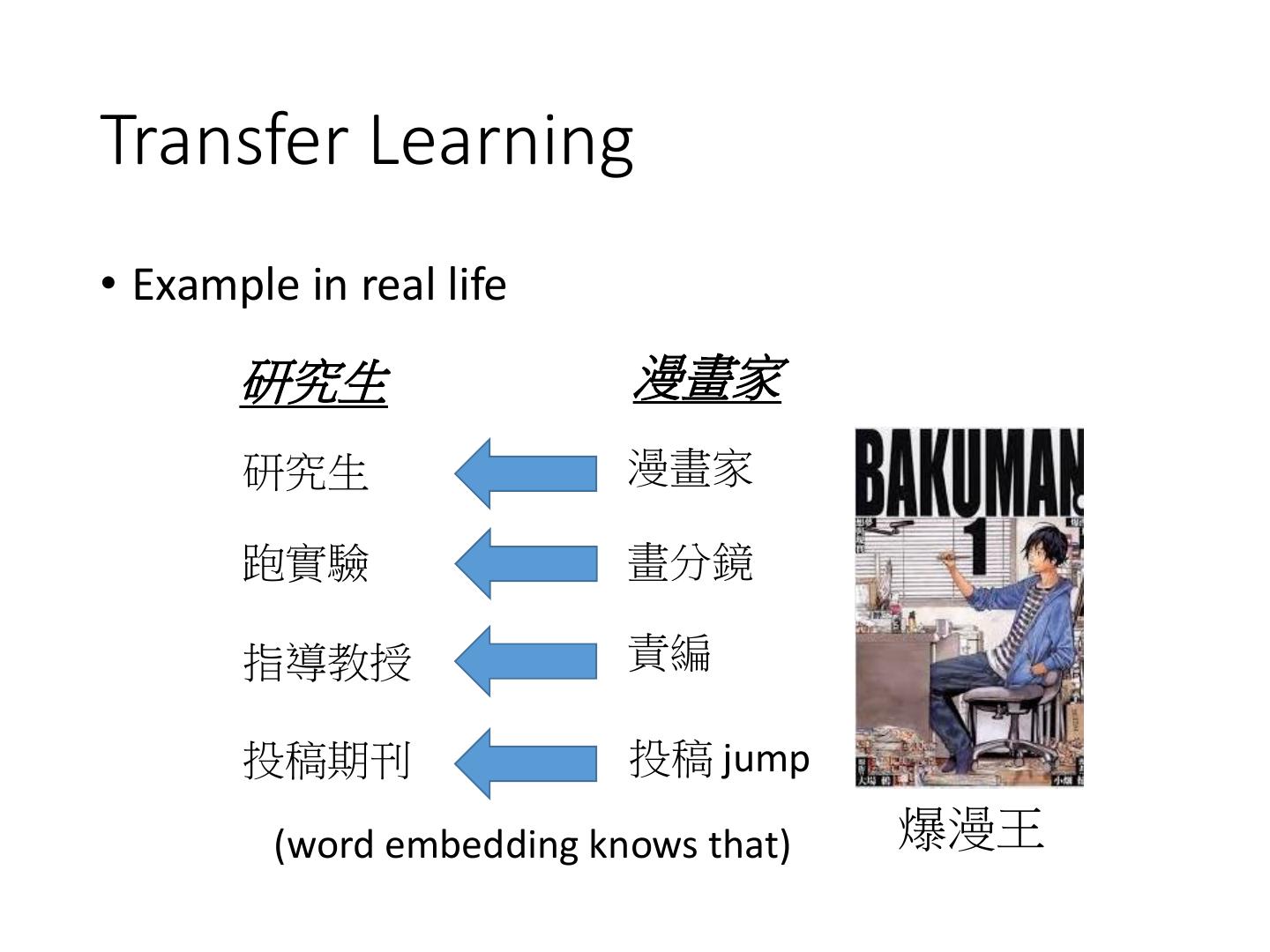

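4. Transfer Learning

- Example in real life: the manga Bakuman (爆漫王) parallels the life of a graduate student with that of a manga artist.

| Graduate student | Manga artist |
|---|---|
| Running experiments | Drawing storyboards |
| Advisor | Editor in charge |
| Submitting to journals | Submitting to Jump |

- (Word embeddings know that.)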

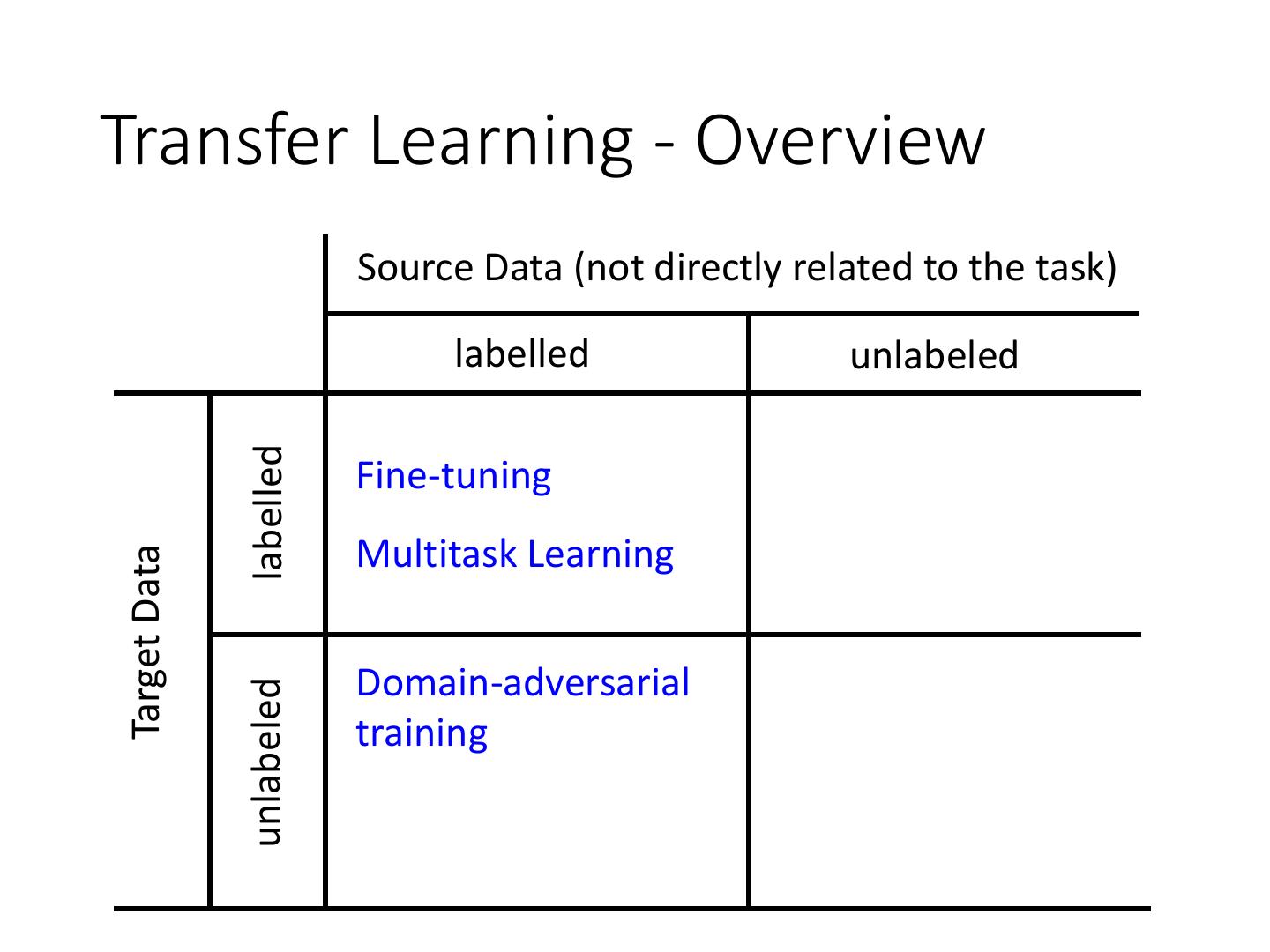

5. Transfer Learning - Overview

Source data is not directly related to the task.

| | Source data: labelled | Source data: unlabelled |
|---|---|---|
| Target data: labelled | Model fine-tuning | |
| Target data: unlabelled | | |

Warning: different literature uses different terminology.

6. Model Fine-tuning

- Task description
  - Source data: $(x^s, y^s)$, a large amount
  - Target data: $(x^t, y^t)$, very little
  - One-shot learning: only a few examples in the target domain
- Example: (supervised) speaker adaptation
  - Source data: audio data and transcriptions from many speakers
  - Target data: audio data and transcriptions of a specific user
- Idea: train a model on the source data, then fine-tune it on the target data
- Challenge: there is only limited target data, so be careful about overfitting
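The pre-train-then-fine-tune idea can be sketched on a toy problem. This is a minimal illustration, not the lecture's setup: it assumes a one-variable linear model $y = wx + b$ trained by plain gradient descent on mean squared error, with hypothetical source data from $y = 2x$ and only two target examples from a related task $y = 2x + 1$. Fine-tuning simply means starting gradient descent on the target data from the source weights instead of from scratch.

```python
# Toy model y = w * x + b, trained with full-batch gradient descent on MSE.
def fit(w, b, data, lr, steps):
    """Run `steps` gradient-descent steps on mean squared error, starting from (w, b)."""
    n = len(data)
    for _ in range(steps):
        gw = sum(2 * (w * x + b - y) * x for x, y in data) / n
        gb = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * gw, b - lr * gb  # simultaneous update
    return w, b


def mse(w, b, data):
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


# Source data: a large amount, drawn from y = 2x (hypothetical).
source = [(i / 10, 2 * i / 10) for i in range(-20, 21)]
# Target data: very little, from a related task y = 2x + 1 (hypothetical).
target = [(0.0, 1.0), (1.0, 3.0)]

w_src, b_src = fit(0.0, 0.0, source, lr=0.1, steps=500)  # train on source data
# Fine-tune: start from the source weights; few steps and a small learning rate
# limit how far the model drifts (and overfits) on the tiny target set.
w_ft, b_ft = fit(w_src, b_src, target, lr=0.1, steps=20)

print(f"target MSE before: {mse(w_src, b_src, target):.3f}, after: {mse(w_ft, b_ft, target):.3f}")
```

Keeping the number of fine-tuning steps small acts as a crude guard against overfitting; the step counts and learning rates here are illustrative choices only.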

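7. Conservative Training

Train a model on source data (e.g. audio data of many speakers) and use it as the initialization for training on target data (e.g. a little audio data of the target speaker). During training, constrain the new model so it does not drift far from the original: either its output should stay close to the original model's output for the same input, or its parameters should stay close to the original parameters.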
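One way to express the "parameter close" constraint is an L2 penalty that pulls the fine-tuned parameters toward the source parameters. The sketch below assumes the same toy linear model as a stand-in for a real network; the penalty weight `lam`, the source parameters, and the target data are all hypothetical. The "output close" variant (penalizing the difference between the two models' outputs) is not shown.

```python
def fit_conservative(w0, b0, data, lam, lr, steps):
    """Gradient descent on MSE(data) + lam * ||(w, b) - (w0, b0)||^2, starting at (w0, b0)."""
    w, b = w0, b0
    n = len(data)
    for _ in range(steps):
        gw = sum(2 * (w * x + b - y) * x for x, y in data) / n + 2 * lam * (w - w0)
        gb = sum(2 * (w * x + b - y) for x, y in data) / n + 2 * lam * (b - b0)
        w, b = w - lr * gw, b - lr * gb
    return w, b


def distance(p, q):
    return ((p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2) ** 0.5


source_params = (2.0, 0.0)         # assumed result of training on source data
target = [(0.0, 1.0), (1.0, 3.5)]  # a little target data (hypothetical)

plain = fit_conservative(*source_params, target, lam=0.0, lr=0.1, steps=2000)
conservative = fit_conservative(*source_params, target, lam=1.0, lr=0.1, steps=2000)

print("drift without penalty:", round(distance(plain, source_params), 3))
print("drift with penalty:   ", round(distance(conservative, source_params), 3))
```

With `lam=0` the model fits the two target points exactly and moves far from the source solution; with `lam > 0` it settles on a compromise that stays closer to the source parameters.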

8. Layer Transfer

Copy some parameters (layers) from a model trained on source data, then train on target data:
1. Only train the remaining layers (prevents overfitting)
2. Fine-tune the whole network (if there is sufficient data)
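Both options can be shown with a toy two-layer network in plain Python. This is a sketch under simplifying assumptions: each "layer" is a single scalar weight, the copied value `w1 = 1.5` is hypothetical, and freezing is implemented by computing every gradient but updating only the parameters listed in `trainable`.

```python
def train_layers(params, data, trainable, lr=0.02, steps=1000):
    """Toy two-layer scalar network: h = w1 * x (layer 1), y_hat = w2 * h + b2 (layer 2).
    Gradients are computed for every parameter, but only names in `trainable` are updated."""
    p = dict(params)  # copy so the caller's parameters are untouched
    n = len(data)
    for _ in range(steps):
        grad = {"w1": 0.0, "w2": 0.0, "b2": 0.0}
        for x, y in data:
            h = p["w1"] * x
            d_out = 2 * (p["w2"] * h + p["b2"] - y) / n  # d(MSE)/d(y_hat)
            grad["w2"] += d_out * h
            grad["b2"] += d_out
            grad["w1"] += d_out * p["w2"] * x  # backpropagated into layer 1
        for name in trainable:
            p[name] -= lr * grad[name]
    return p


# Layer 1 copied from a network trained on source data (hypothetical value);
# layer 2 is re-initialised for the target task.
copied = {"w1": 1.5, "w2": 0.0, "b2": 0.0}
target = [(0.0, 1.0), (1.0, 4.0), (2.0, 7.0)]  # target task: y = 3x + 1

option1 = train_layers(copied, target, trainable=("w2", "b2"))        # only train the rest
option2 = train_layers(copied, target, trainable=("w1", "w2", "b2"))  # fine-tune everything
print(option1)
print(option2)
```

In option 1 the copied weight is never changed, so the few target examples can only adjust the new layer.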

9. Layer Transfer

- Which layers can be transferred (copied)?
  - Speech: usually copy the last few layers
  - Image: usually copy the first few layers

[Figure: a deep network mapping pixels $x_1, \dots, x_N$ through Layer 1, Layer 2, …, Layer L to the output (e.g. "elephant")]

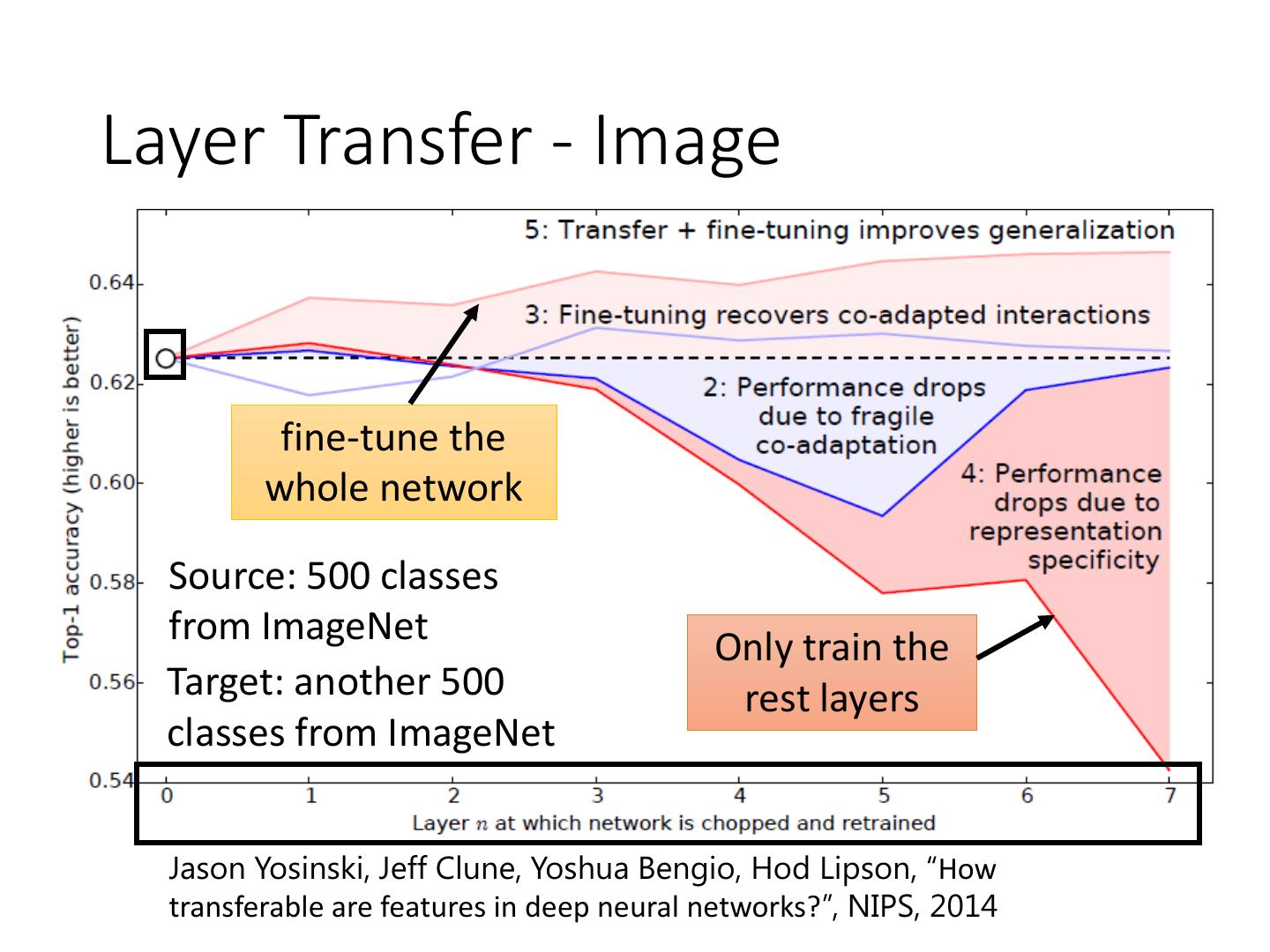

10. Layer Transfer - Image

[Figure: results comparing "fine-tune the whole network" with "only train the remaining layers". Source: 500 classes from ImageNet; target: another 500 classes from ImageNet.]

Jason Yosinski, Jeff Clune, Yoshua Bengio, Hod Lipson, "How transferable are features in deep neural networks?", NIPS, 2014

11. Layer Transfer - Image

[Figure: results when only the remaining layers are trained]

Jason Yosinski, Jeff Clune, Yoshua Bengio, Hod Lipson, "How transferable are features in deep neural networks?", NIPS, 2014

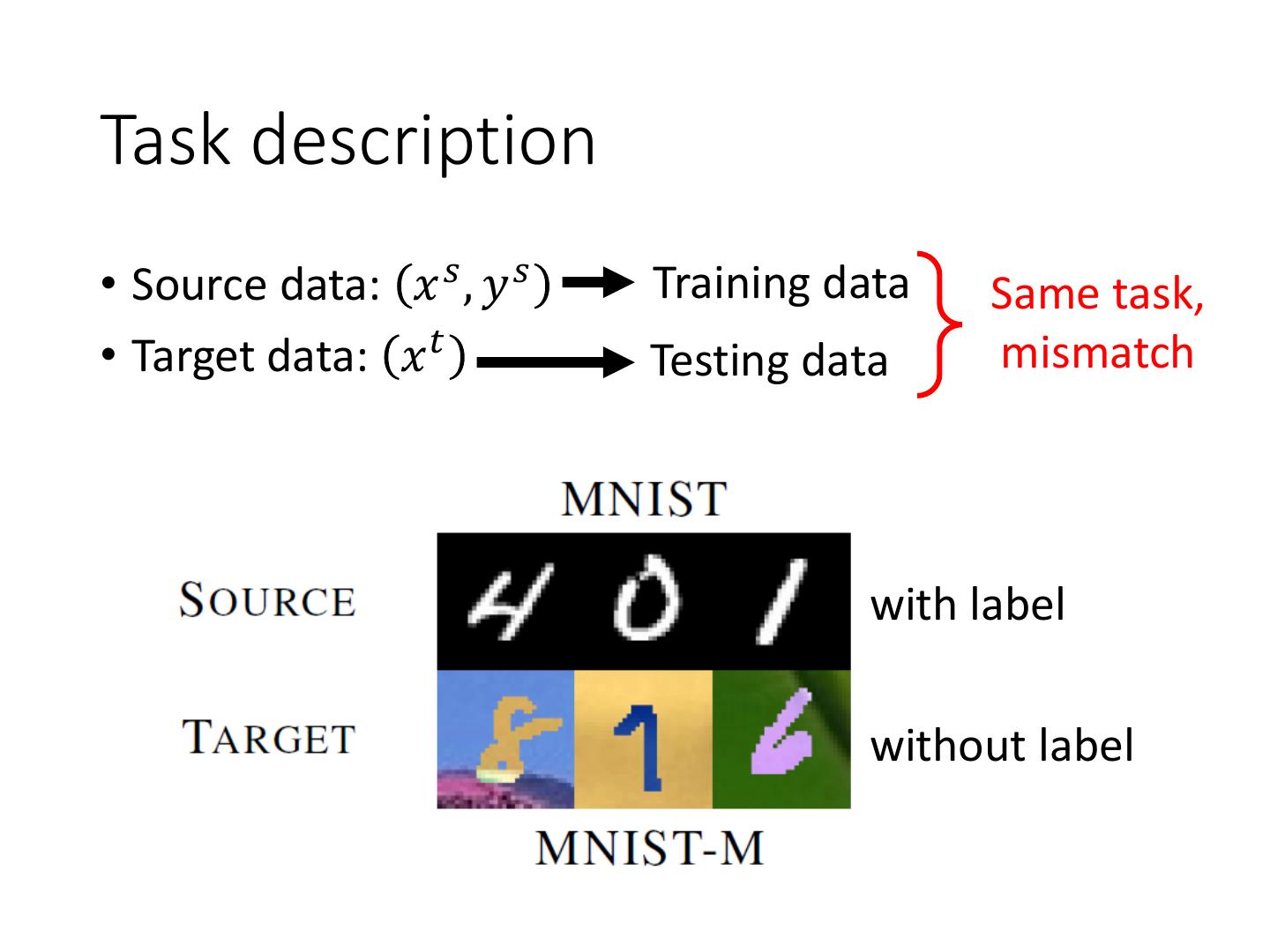

12. Transfer Learning - Overview

Source data is not directly related to the task.

| | Source data: labelled | Source data: unlabelled |
|---|---|---|
| Target data: labelled | Fine-tuning, Multitask learning | |
| Target data: unlabelled | | |

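13. Multitask Learning

- The multi-layer structure makes NNs suitable for multitask learning.

[Figure: two architectures. Left: Task A and Task B share the lower layers on a common input feature, then branch into task-specific layers. Right: different input features for task A and task B, with shared layers in between.]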
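A minimal sketch of the left-hand architecture, assuming a toy scalar network: one shared weight `ws` and one head per task (`wa`, `wb`), trained on the sum of the two tasks' losses. The point it illustrates is that the shared parameter receives gradients from both tasks, while each head only sees its own task. All data and initial values are hypothetical.

```python
def multitask_step(p, data_a, data_b, lr):
    """One gradient step on L = L_A + L_B for the toy model
    h = ws * x (shared), y_A = wa * h, y_B = wb * h (task-specific heads)."""
    g = {"ws": 0.0, "wa": 0.0, "wb": 0.0}
    for head, data in (("wa", data_a), ("wb", data_b)):
        for x, y in data:
            h = p["ws"] * x
            err = 2 * (p[head] * h - y) / len(data)  # d(MSE)/d(prediction)
            g[head] += err * h                       # only this task's head
            g["ws"] += err * p[head] * x             # shared layer: both tasks contribute
    return {k: p[k] - lr * g[k] for k in p}


def joint_loss(p, data_a, data_b):
    la = sum((p["wa"] * p["ws"] * x - y) ** 2 for x, y in data_a) / len(data_a)
    lb = sum((p["wb"] * p["ws"] * x - y) ** 2 for x, y in data_b) / len(data_b)
    return la + lb


task_a = [(1.0, 2.0), (2.0, 4.0)]    # task A: y = 2x
task_b = [(1.0, -1.0), (3.0, -3.0)]  # task B: y = -x
p = {"ws": 1.0, "wa": 0.5, "wb": 0.5}
start = joint_loss(p, task_a, task_b)
for _ in range(500):
    p = multitask_step(p, task_a, task_b, lr=0.02)
final = joint_loss(p, task_a, task_b)
print(start, final)
```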

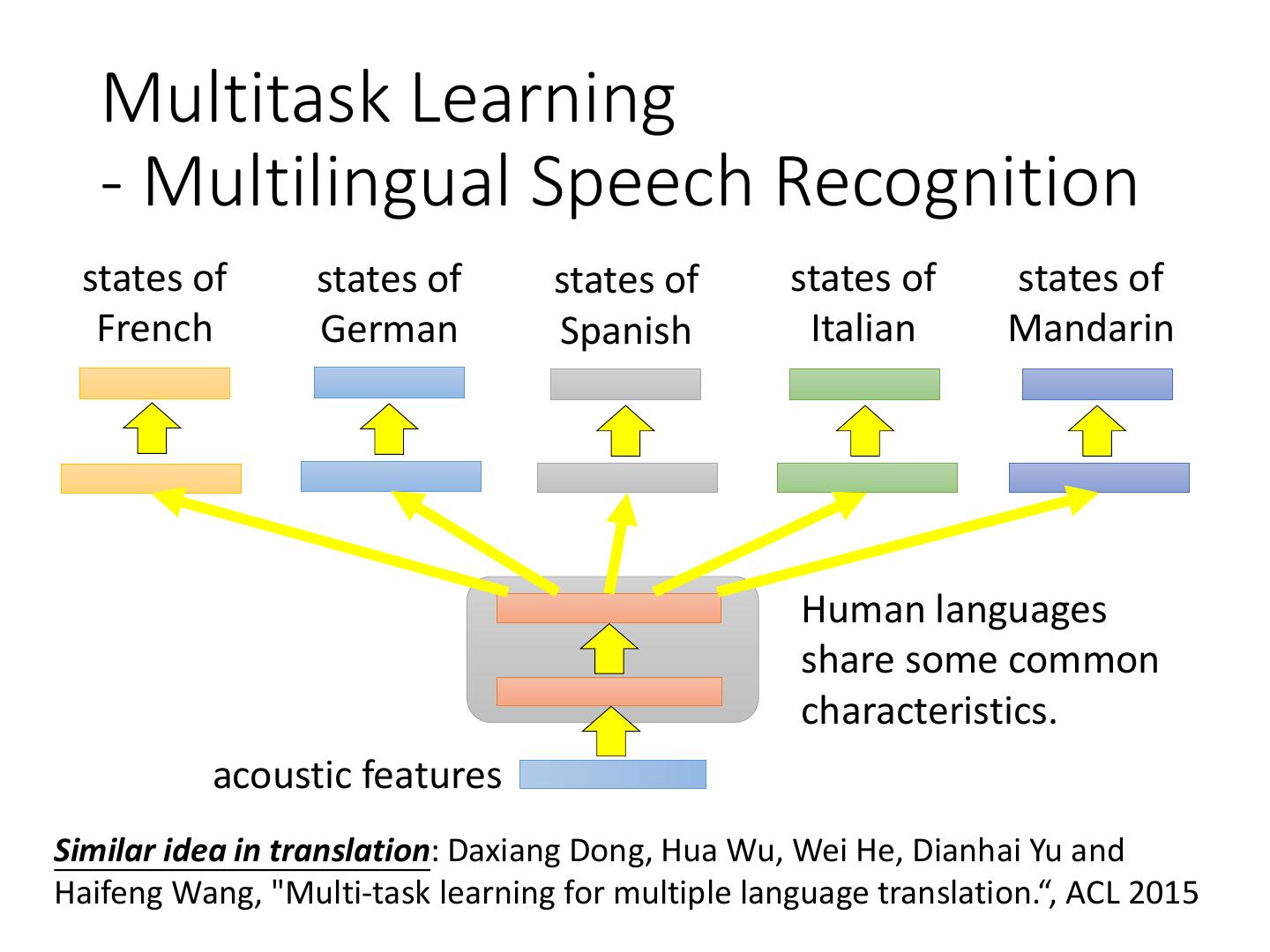

14. Multitask Learning - Multilingual Speech Recognition

[Figure: acoustic features feed shared hidden layers, with separate outputs for the states of French, German, Spanish, Italian, and Mandarin.]

Human languages share some common characteristics.

Similar idea in translation: Daxiang Dong, Hua Wu, Wei He, Dianhai Yu and Haifeng Wang, "Multi-task learning for multiple language translation", ACL 2015

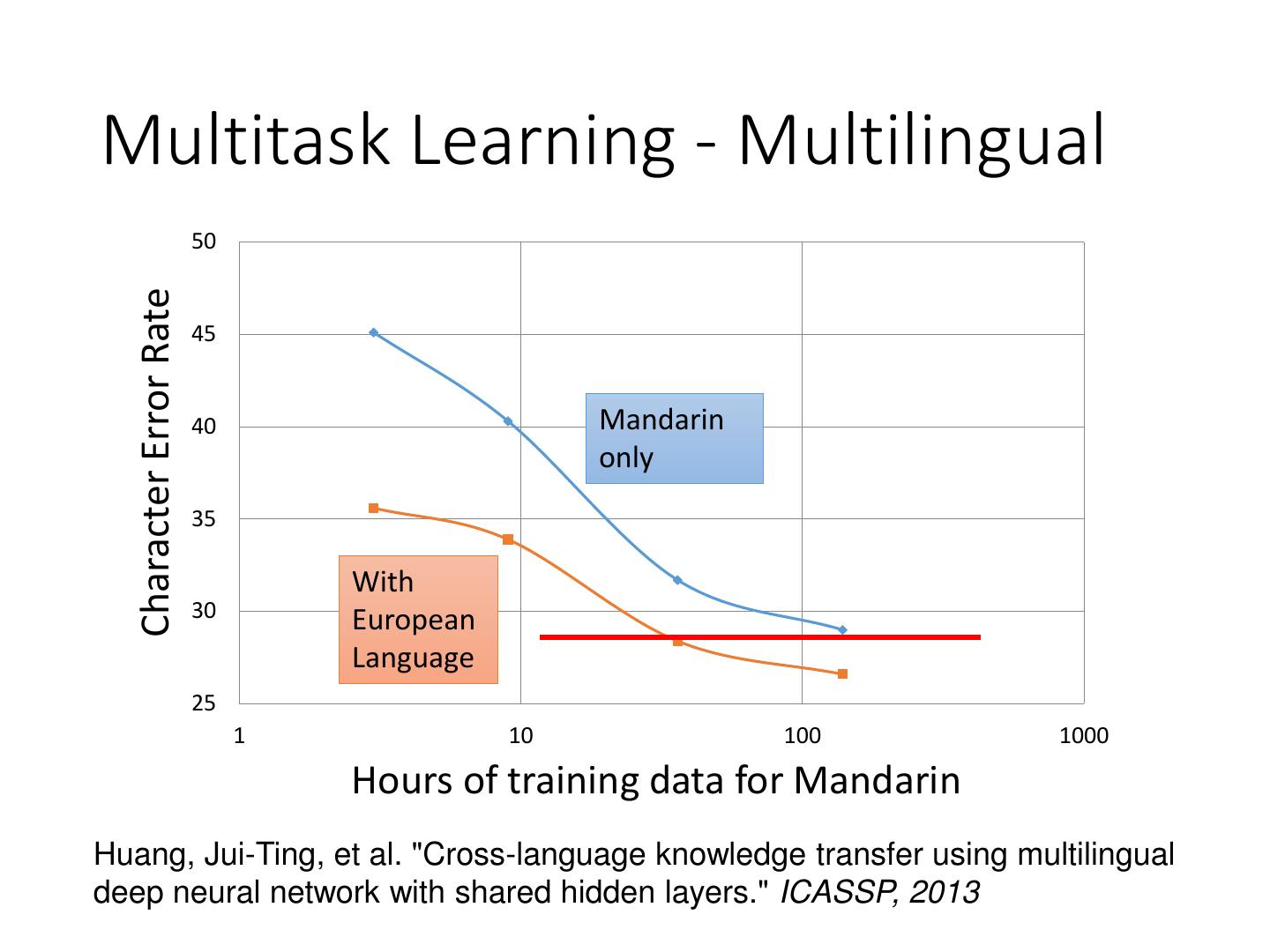

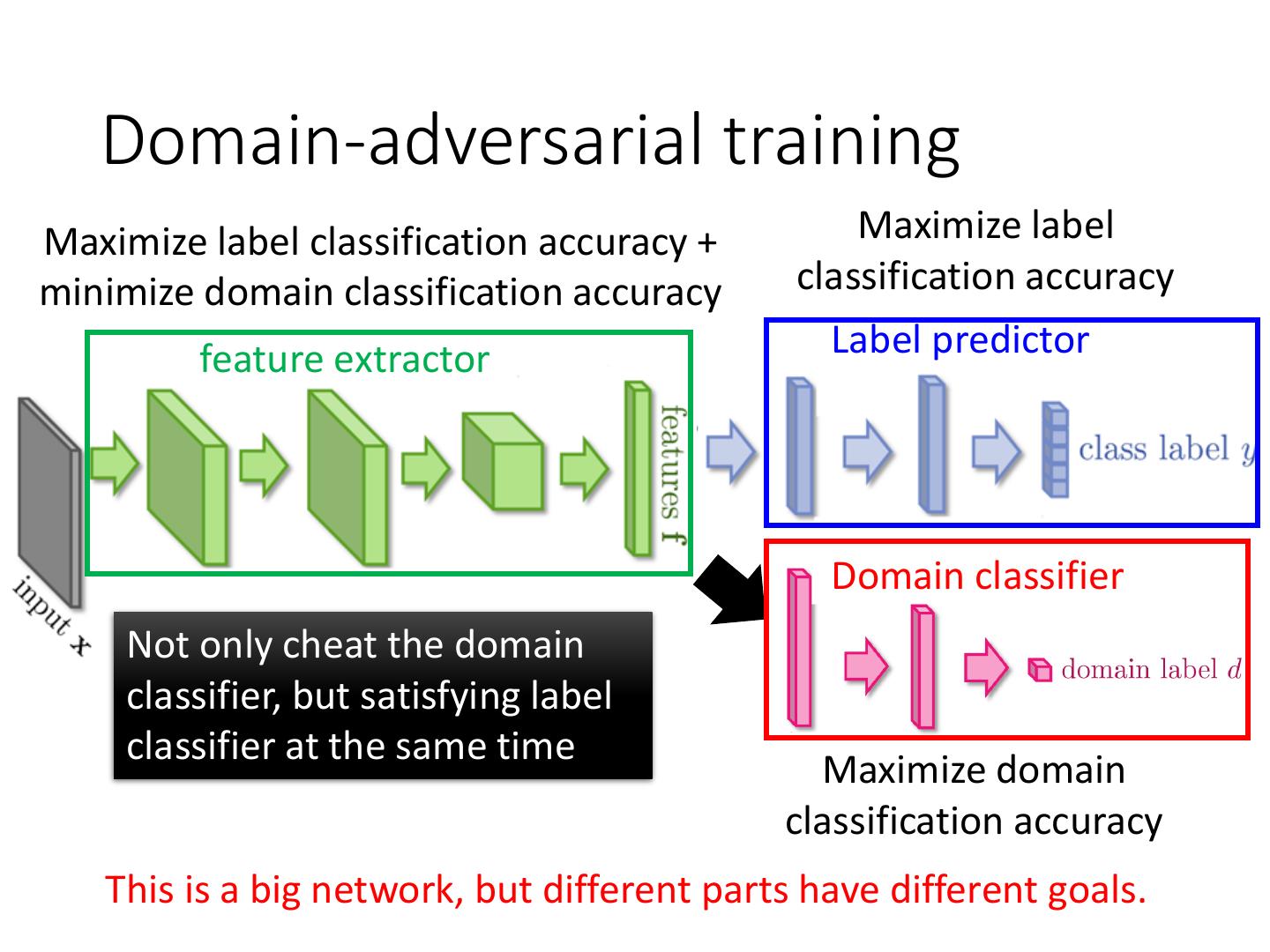

15. Multitask Learning - Multilingual

[Figure: character error rate (axis 25 to 50) versus hours of training data for Mandarin (1 to 1000, log scale), comparing "Mandarin only" with "With European Language".]

Huang, Jui-Ting, et al. "Cross-language knowledge transfer using multilingual deep neural network with shared hidden layers." ICASSP, 2013

16. Progressive Neural Networks

[Figure: one column of layers per task (Task 1, Task 2, Task 3), each with its own input.]

Andrei A. Rusu, Neil C. Rabinowitz, Guillaume Desjardins, Hubert Soyer, James Kirkpatrick, Koray Kavukcuoglu, Razvan Pascanu, Raia Hadsell, "Progressive Neural Networks", arXiv preprint, 2016

17. Chrisantha Fernando, Dylan Banarse, Charles Blundell, Yori Zwols, David Ha, Andrei A. Rusu, Alexander Pritzel, Daan Wierstra, "PathNet: Evolution Channels Gradient Descent in Super Neural Networks", arXiv preprint, 2017

18. Transfer Learning - Overview

Source data is not directly related to the task.

| | Source data: labelled | Source data: unlabelled |
|---|---|---|
| Target data: labelled | Fine-tuning, Multitask learning | |
| Target data: unlabelled | Domain-adversarial training | |

19. Task description

- Source data: $(x^s, y^s)$, training data with labels
- Target data: $x^t$, testing data without labels
- Same task, but the source and target data are mismatched

20. Domain-adversarial training

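21. Domain-adversarial training

A feature extractor maps both source and target data to features, and a domain classifier tries to tell which domain each feature comes from. The feature extractor tries to make the features indistinguishable across domains, which is similar to a GAN. However, fooling the domain classifier alone is too easy for the feature extractor ……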

22. Domain-adversarial training

This is one big network, but different parts have different goals:
- Feature extractor: maximize label classification accuracy + minimize domain classification accuracy. It must not only cheat the domain classifier but also satisfy the label predictor at the same time.
- Label predictor: maximize label classification accuracy
- Domain classifier: maximize domain classification accuracy
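The three goals translate into one update rule: the feature extractor descends on the label loss but ascends on the domain loss, which Ganin & Lempitsky (2015) implement as a gradient reversal layer weighted by λ. The sketch below only shows that update on flat parameter lists, in the spirit of their method rather than as their exact code; the gradients are assumed to come from ordinary backpropagation, and the dictionary keys and numbers are made up for illustration.

```python
def reverse_gradient(grad, lam):
    """Backward pass of a gradient reversal layer: identity forward, -lam * gradient backward."""
    return [-lam * g for g in grad]


def dann_step(theta_f, theta_y, theta_d, grads, lam, lr):
    """One SGD step on flat lists of parameters.

    grads["y_f"]: dL_label/dtheta_f    grads["y_y"]: dL_label/dtheta_y
    grads["d_f"]: dL_domain/dtheta_f   grads["d_d"]: dL_domain/dtheta_d
    (all assumed to come from ordinary backpropagation)."""
    # Feature extractor: label-loss gradient plus the REVERSED domain-loss gradient,
    # i.e. it descends on the label loss while ascending on the domain loss.
    rev = reverse_gradient(grads["d_f"], lam)
    new_f = [t - lr * (gy + gd) for t, gy, gd in zip(theta_f, grads["y_f"], rev)]
    # Label predictor: only cares about label accuracy.
    new_y = [t - lr * g for t, g in zip(theta_y, grads["y_y"])]
    # Domain classifier: only cares about domain accuracy (no reversal here).
    new_d = [t - lr * g for t, g in zip(theta_d, grads["d_d"])]
    return new_f, new_y, new_d


grads = {"y_f": [0.2], "y_y": [0.4], "d_f": [0.3], "d_d": [0.6]}  # made-up numbers
f_new, y_new, d_new = dann_step([1.0], [0.5], [-0.5], grads, lam=1.0, lr=0.1)
print(f_new, y_new, d_new)
```

Because the reversal happens only on the path into the feature extractor, the domain classifier still gets better at its job while the features are pushed to make that job harder.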

23. Domain-adversarial training

- The domain classifier fails in the end.
- It should struggle ……

Yaroslav Ganin, Victor Lempitsky, "Unsupervised Domain Adaptation by Backpropagation", ICML, 2015
Hana Ajakan, Pascal Germain, Hugo Larochelle, François Laviolette, Mario Marchand, "Domain-Adversarial Training of Neural Networks", JMLR, 2016

24. Domain-adversarial training

Yaroslav Ganin, Victor Lempitsky, "Unsupervised Domain Adaptation by Backpropagation", ICML, 2015
Hana Ajakan, Pascal Germain, Hugo Larochelle, François Laviolette, Mario Marchand, "Domain-Adversarial Training of Neural Networks", JMLR, 2016

25. Transfer Learning - Overview

Source data is not directly related to the task.

| | Source data: labelled | Source data: unlabelled |
|---|---|---|
| Target data: labelled | Fine-tuning, Multitask learning | |
| Target data: unlabelled | Domain-adversarial training, Zero-shot learning | |

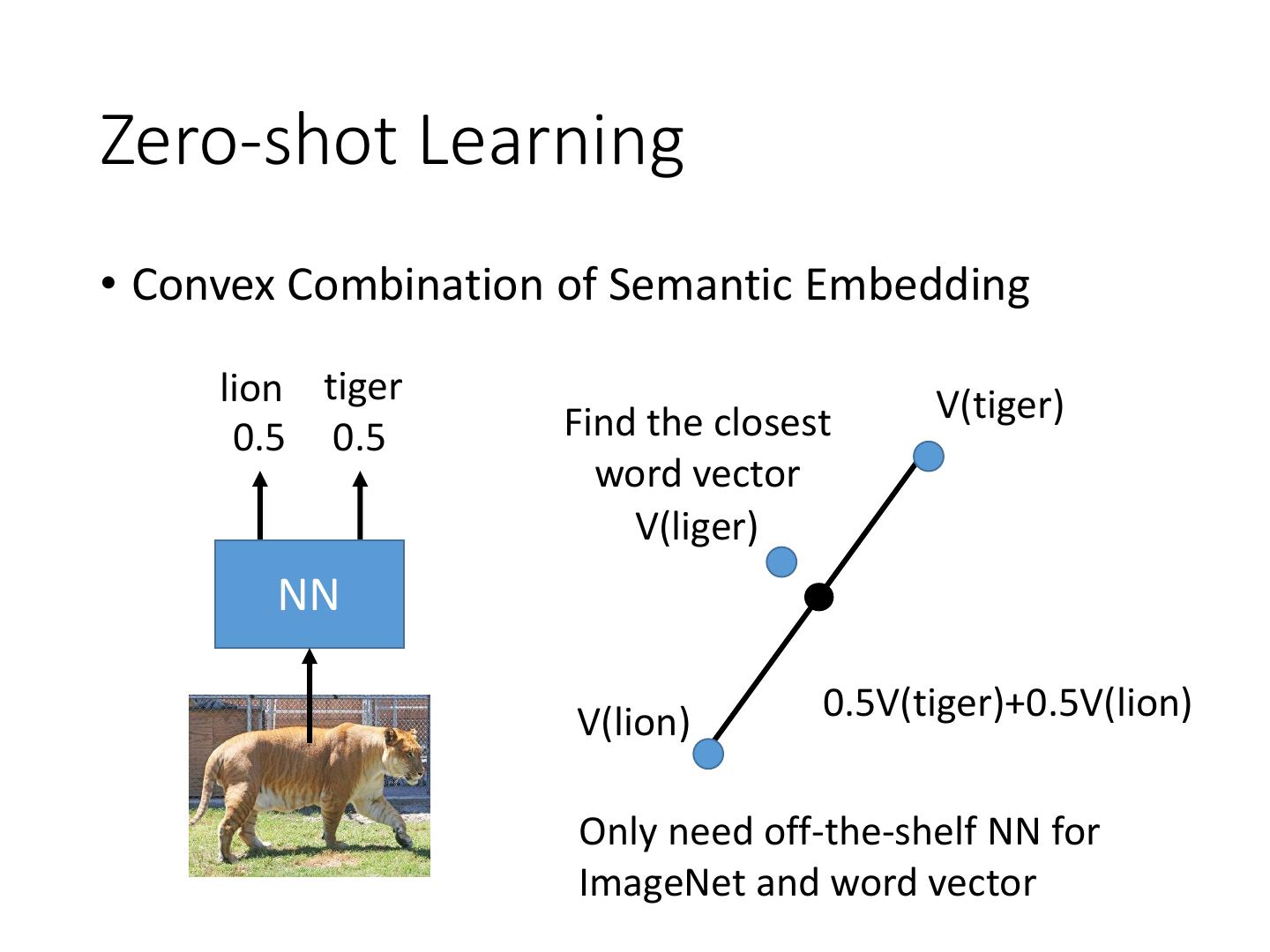

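26. Zero-shot Learning

- Source data: $(x^s, y^s)$, training data
- Target data: $x^t$, testing data
- Different tasks: the training images $x^s$ are labelled with classes $y^s$ such as cat, dog, ……, while the test images $x^t$ belong to a class that never appears in $y^s$ (here, the grass-mud horse).

In speech recognition, we cannot have all possible words in the source (training) data. How do we solve this problem in speech recognition?

Image source: http://evchk.wikia.com/wiki/%E8%8D%89%E6%B3%A5%E9%A6%AC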

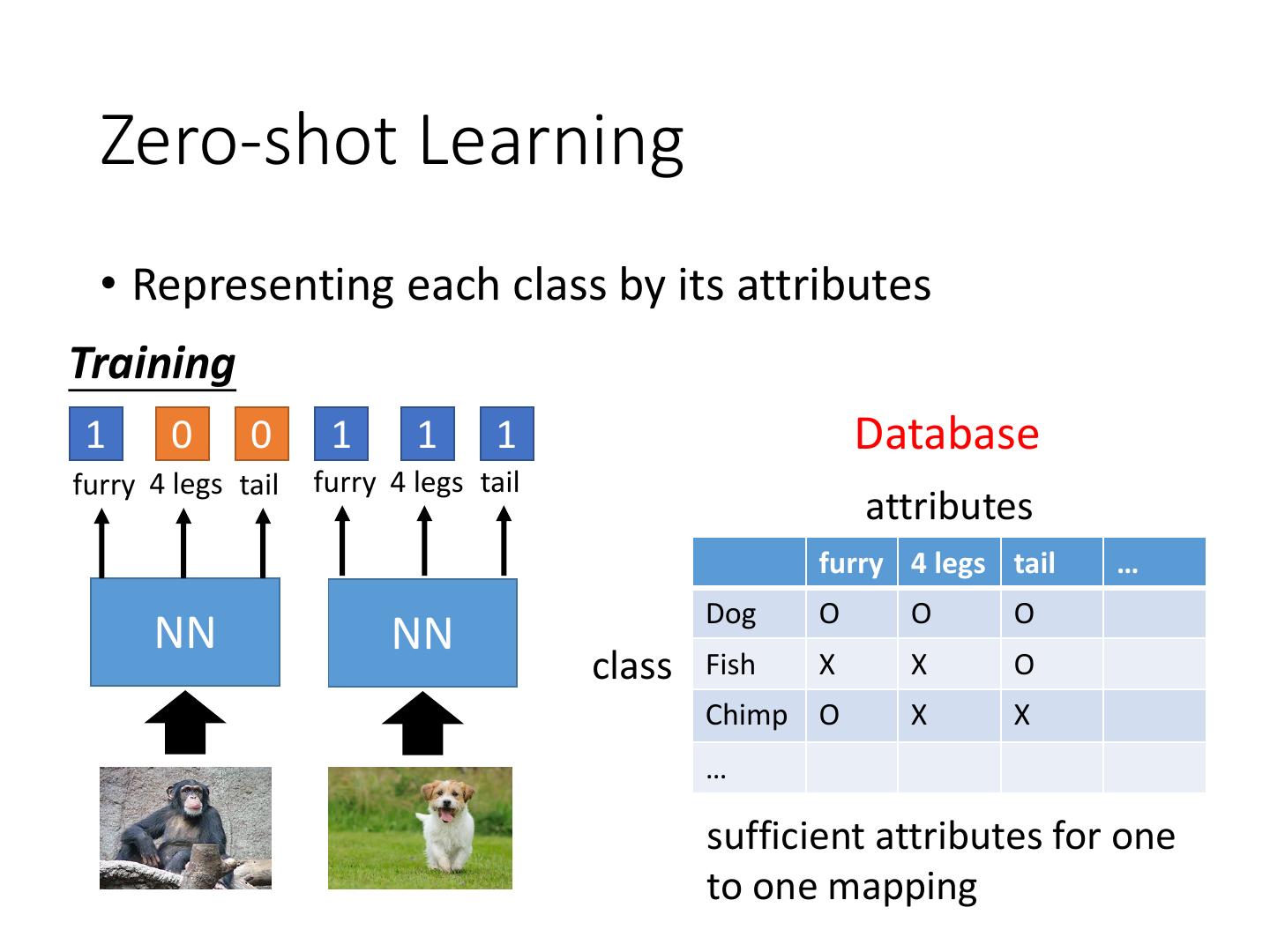

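27. Zero-shot Learning

- Representing each class by its attributes
- Training: an NN maps each image to its attributes (furry, 4 legs, tail), e.g. 1 0 0 for a chimp image and 1 1 1 for a dog image.

Database:

| Class | furry | 4 legs | tail |
|---|---|---|---|
| Dog | O | O | O |
| Fish | X | X | O |
| Chimp | O | X | X |
| … | | | |

- The attributes must be sufficient for a one-to-one mapping between classes and attribute vectors.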

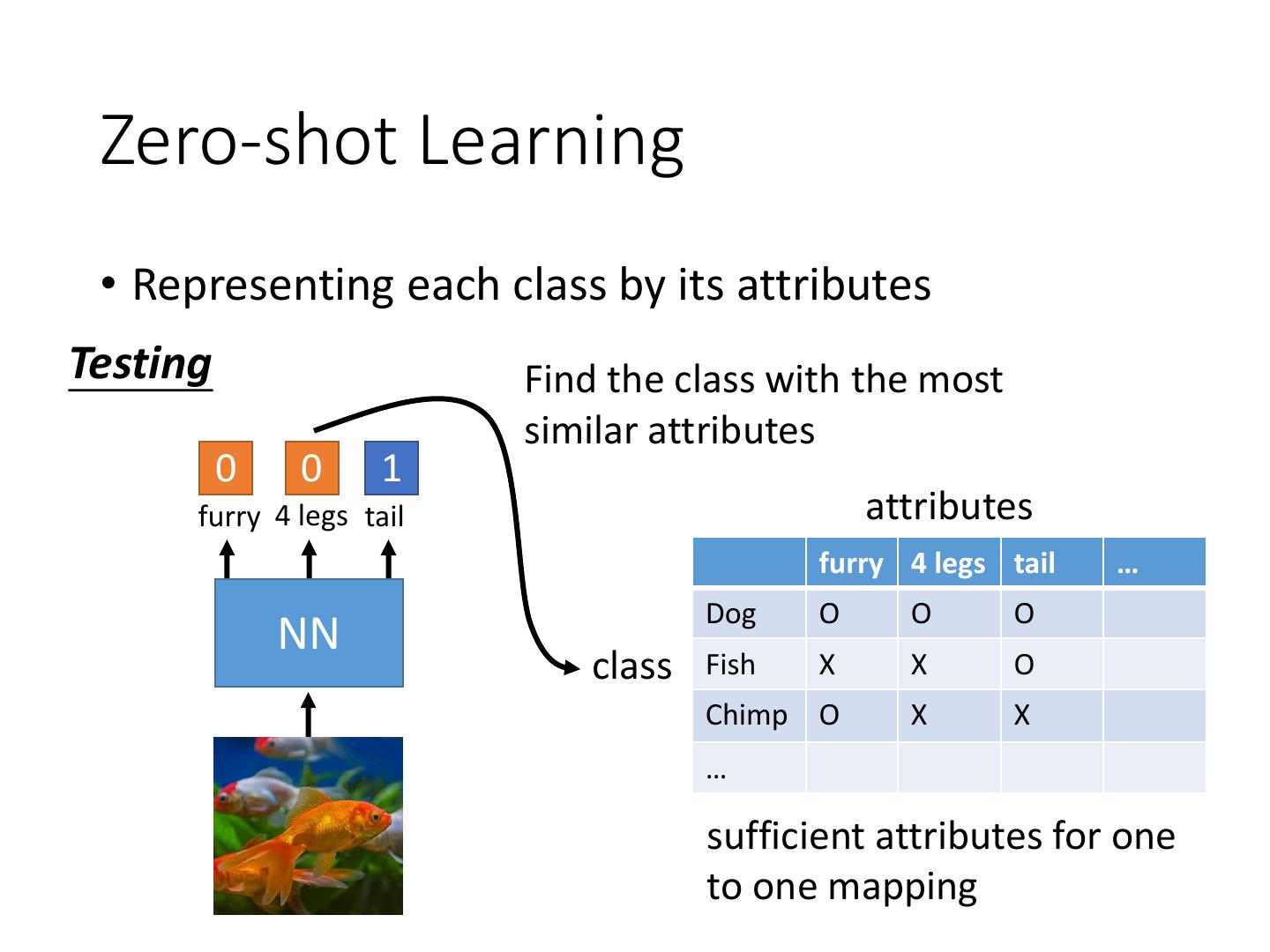

28. Zero-shot Learning

- Representing each class by its attributes
- Testing: the NN outputs attributes for the test image (e.g. 0 0 1 for furry, 4 legs, tail); find the class with the most similar attributes in the database (Dog: O O O; Fish: X X O; Chimp: O X X; …), here Fish.
- The attributes must be sufficient for a one-to-one mapping.
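The testing step is a nearest-neighbour lookup in attribute space. A minimal sketch, assuming the NN's attribute outputs are already available as numbers, and using squared Euclidean distance as a hypothetical choice of similarity measure; the database is the Dog/Fish/Chimp table with O mapped to 1 and X to 0.

```python
# Attribute database from the slides: (furry, 4 legs, tail), with O -> 1 and X -> 0.
ATTRIBUTES = {
    "Dog": (1, 1, 1),
    "Fish": (0, 0, 1),
    "Chimp": (1, 0, 0),
}


def most_similar_class(nn_output):
    """Return the class whose attribute vector has the smallest squared distance to nn_output."""
    def squared_distance(cls):
        return sum((a - o) ** 2 for a, o in zip(ATTRIBUTES[cls], nn_output))
    return min(ATTRIBUTES, key=squared_distance)


print(most_similar_class((0, 0, 1)))        # Fish
print(most_similar_class((0.9, 0.2, 0.1)))  # Chimp
```

The NN never needs a "Fish" output unit: any class can be recognized as long as its attribute vector is in the database.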

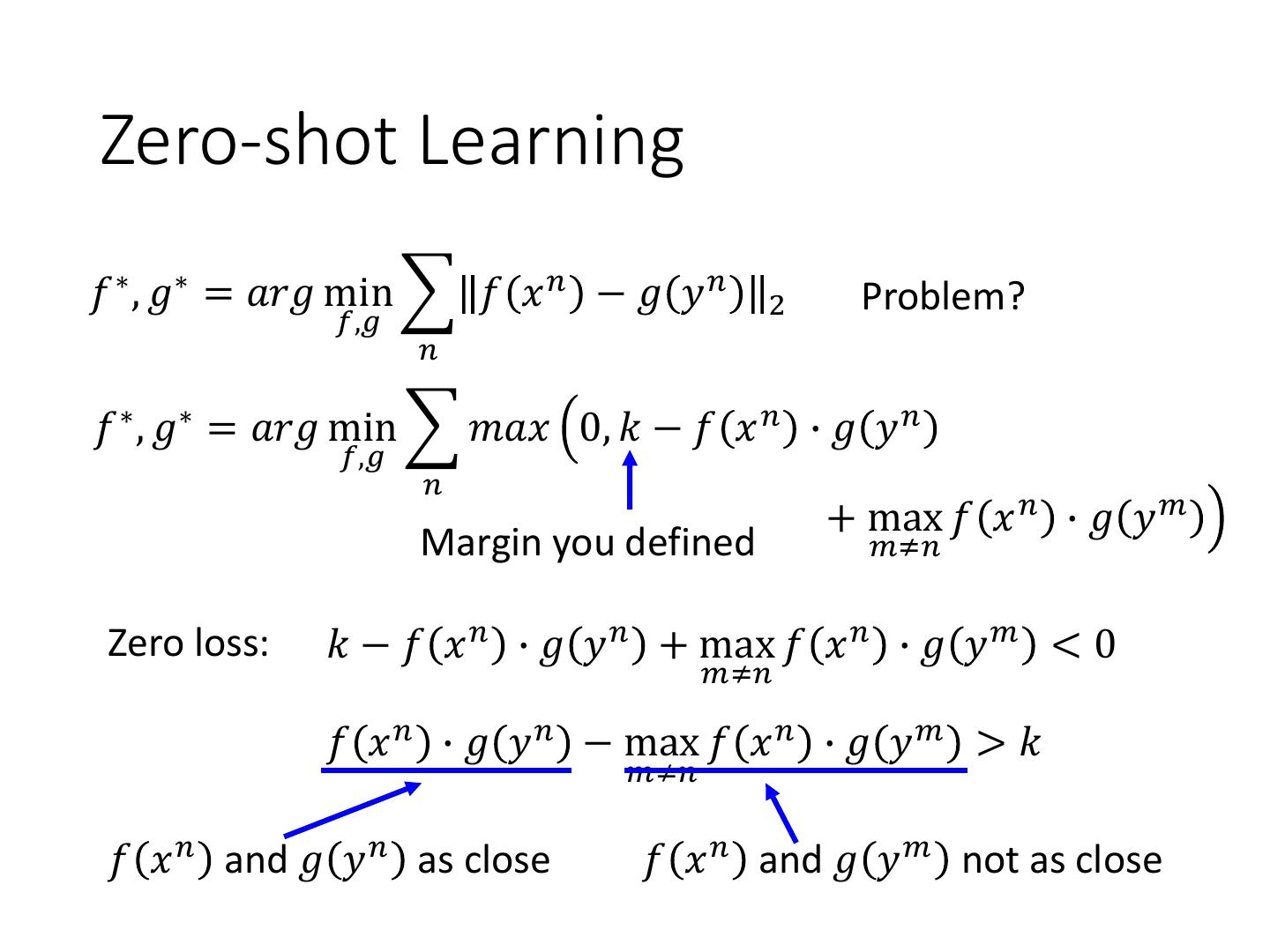

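29. Zero-shot Learning

- Attribute embedding: map each image $x^n$ with $f(\cdot)$ and each attribute vector $y^n$ with $g(\cdot)$ into a common embedding space.
- Training target: $f(x^n)$ and $g(y^n)$ as close as possible.
- $f^*$ and $g^*$ can be NNs.

[Figure: embedding space with images $x^1, x^2, x^3$ and attribute vectors $y^1$ (chimp), $y^2$ (dog), $y^3$ (grass-mud horse); each $f(x^n)$ lies near the matching $g(y^n)$.]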
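Written as an objective, the training target is roughly $f^*, g^* = \arg\min_{f,g} \sum_n \lVert f(x^n) - g(y^n) \rVert_2$. At test time, a plausible use is to embed the new image with $f^*$, embed every class's attributes with $g^*$, and pick the nearest class. The sketch below assumes $f^*$ and $g^*$ are already trained and replaces them with fixed, hypothetical linear maps into a 2-D embedding space; the 3-D "image feature" vector is also made up.

```python
def matvec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]


# Stand-ins for trained f* and g*: fixed linear maps into a 2-D embedding space
# (hypothetical weights; in practice both would be NNs trained with the objective above).
F = [[1.0, 0.0, 0.5], [0.0, 1.0, 0.5]]  # image feature (3-D) -> embedding
G = [[1.0, 0.5, 0.0], [0.0, 0.5, 1.0]]  # attributes (furry, 4 legs, tail) -> embedding

CLASS_ATTRIBUTES = {"dog": [1, 1, 1], "fish": [0, 0, 1], "chimp": [1, 0, 0]}


def zero_shot_predict(image_feature):
    """Embed the image with f and every class's attributes with g; return the nearest class."""
    fx = matvec(F, image_feature)

    def squared_distance(cls):
        gy = matvec(G, CLASS_ATTRIBUTES[cls])
        return sum((a - b) ** 2 for a, b in zip(fx, gy))

    return min(CLASS_ATTRIBUTES, key=squared_distance)


print(zero_shot_predict([0.1, 0.9, 0.2]))  # fish
```

Adding a new class only requires adding its attribute vector to `CLASS_ATTRIBUTES`; neither map has to be retrained.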