- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

Self Driving Car Data Augmentation

展开查看详情

1 .Data augmentation and self-driving cars The failing of conventional wisdom Gene Olafsen Summary of content: https://towardsdatascience.com/when-conventional-wisdom-fails-revisiting-data-augmentation-for-self-driving-cars-4831998c5509

2 .Introduction DeepScale is constantly looking for ways to boost the performance of our object detection models.

3 .Simple image augementation Cutout blacks out a randomly-located square in the input image.

4 .Unexpected results... When applied it to the data, the model performance decreased. A search of the data pipeline for the problem resulted in finding that all of the " augmentors " that were already in place were hurting performance immensely.

5 .culprit using flip, crop, and weight decay regularization — a standard scheme for object detection tasks, resulted in a 13% decrease of model performance.

6 .purpose of image augmentation Overfitting is a common problem for deep neural networks. ML systems can memorize unintended properties of the dataset instead of learning meaningful, general information about the world. Overfit networks fail to yield useful results when given new, real-world data.

7 .overfitting To address overfitting, it is common to “augment” the training data. Common methods for augmenting visual data include randomly flipping images horizontally (flip), shifting their hues (hue jitter) or cropping random sections (crop). Augmentors like flip, hue jitter and crop help to combat overfitting because they improve a network’s ability to generalize.

8 .General image identification Many datasets contain images aggregated from many sources, taken from different cameras in various conditions, networks need to generalize over many factors to perform well. Using many data augmentors , can train networks to generalize over all of these variables.

9 .Self-driving images are different Cars generally have consistent pose with respect to other vehicles and road objects. All images come from the same cameras, mounted at the same positions and angles. A neural net in a self-driving car doesn’t have to worry about generalizing over these properties. Because of this, it can actually be beneficial to overfit to the specific camera properties of a system.

10 .consistency Self-driving car data can be so consistent that standard data augmentors , such as flip and crop, hurt performance more than they help. The intuition is simple: flipping training images doesn’t make sense because the cameras will always be at the same angle, and the car will always be on the right side of the road (US driving). The car’s cameras will always be in the same location with the same field of view, this shifting and scaling forces overgeneralization. Overgeneralization hurts performance because the network wastes its predictive capacity learning about irrelevant scenarios.

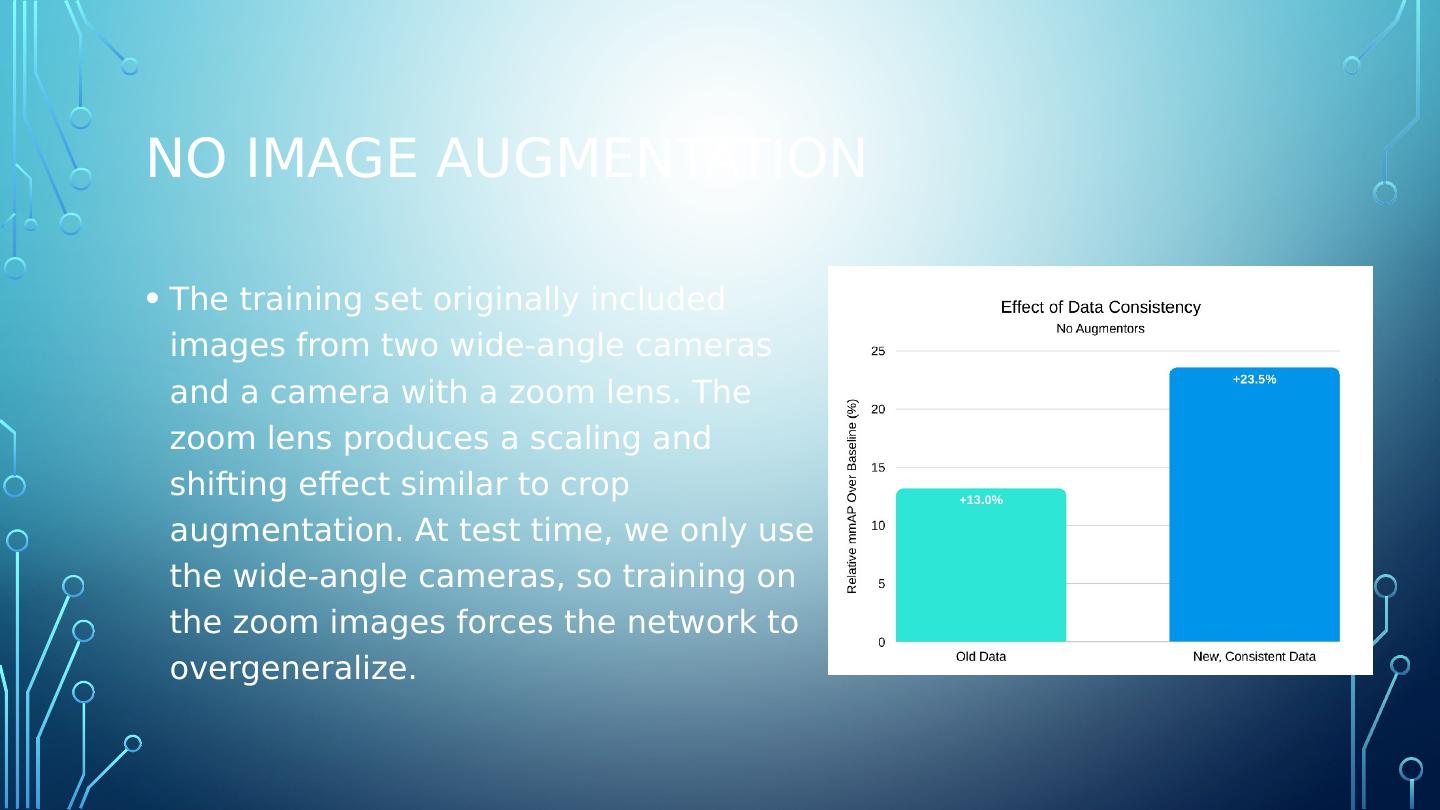

11 .No image augmentation The training set originally included images from two wide-angle cameras and a camera with a zoom lens. The zoom lens produces a scaling and shifting effect similar to crop augmentation. At test time, we only use the wide-angle cameras, so training on the zoom images forces the network to overgeneralize.

12 .Cutout and hue jitter Hue jitter simply shifts the hue of the input by a random amount. This helps the network generalize over colors ( ie . a red car and a blue car should both be detected the same). Cutout simulates obstructions. Obstructions are common in real-world driving data, and invariance to obstructions can help a network detect partially-occluded objects.

13 .Targeted image augmentation

14 .takeaway The field of machine learning has many similar “generic best practices,” such as how to set the learning rate, which optimizer to use, and how to initialize models. It’s important for ML practitioners to continually revisit assumptions about how to train models, especially when building for specific applications.