- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

Reinforcement Learning Q-Learning

展开查看详情

1 .Introduction to q-learning Reinforcement learning Gene Olafsen Based on: https://medium.com/emergent-future/simple-reinforcement-learning-with-tensorflow-part-0-q-learning-with-tables-and-neural-networks-d195264329d0

2 .overview Q-learning is a Reinforcement Learning technique used in machine learning. The goal of Q-Learning is to learn a policy, which tells an agent which action to take under which circumstances. Q-Learning is a value-based Reinforcement Learning algorithm. The Q in Q-Learning stands for ‘Quality’

3 .Tabular Q-Learning Tabular Q-learning is one where, instead of using a neural network, the states are calculated with and reside ‘neatly’ in a table.

4 .Q-Learning vs Policy Gradient Policy Gradient attempts to map observations to an action. Q-Learning attempts to learn the value of being in a given state and taking a specific action there.

5 .Simple q-learning Q-learning at its simplest uses tables to store data. This approach has issues in scale with increasing numbers of states/actions.

6 .Ai gym Gym is a toolkit for developing and comparing reinforcement learning algorithms. It supports teaching agents everything from walking to playing games like Pong or Pinball.

7 .Ai gym Gym is a toolkit for developing and comparing reinforcement learning algorithms. It supports teaching agents everything from walking to playing games like Pong or Pinball.

8 .Frozen lake for the RL agent The agent controls the movement of a character in a grid world. Some tiles of the grid are walkable, and others lead to the agent falling into the water. Additionally, the movement direction of the agent is uncertain and only partially depends on the chosen direction. The agent is rewarded for finding a walkable path to a goal tile.

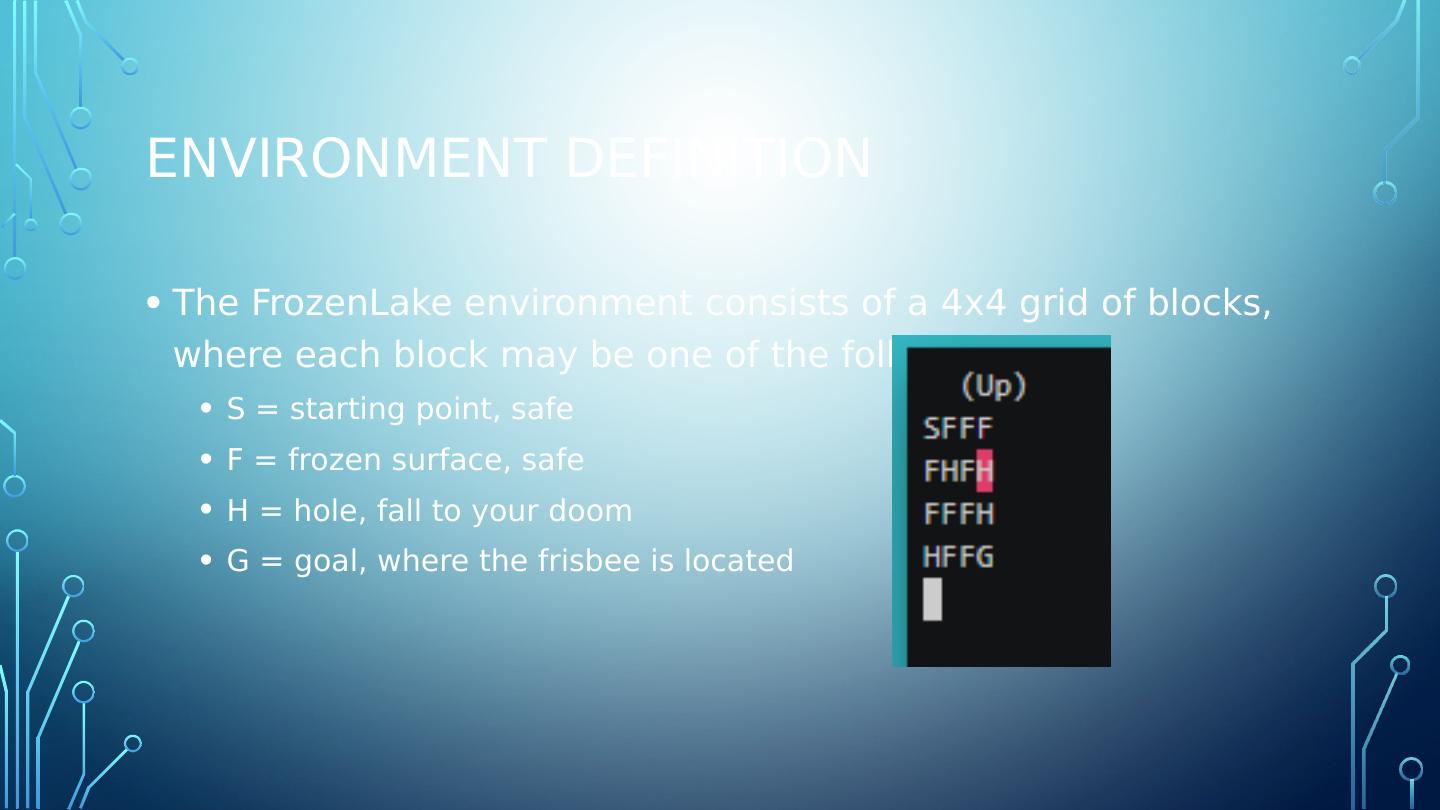

9 .Environment definition The FrozenLake environment consists of a 4x4 grid of blocks, where each block may be one of the following: S = starting point, safe F = frozen surface, safe H = hole, fall to your doom G = goal, where the frisbee is located

10 .An episode of frozen lake The episode ends when you reach the goal or fall in a hole. A reward of 1is received if the goal is reached, a 0 is received otherwise.

11 .Q-table There are 16 possible states (one for each block), with 4 possible actions (one for each direction of movement). A 16x4 table of Q-values is initialized with 0 in each cell. Q-Table values are updated as observations are made of the rewards that result from actions that are taken.

12 .Q-table updates Updates are made to the Q-table by applying the results of the Bellman equation.

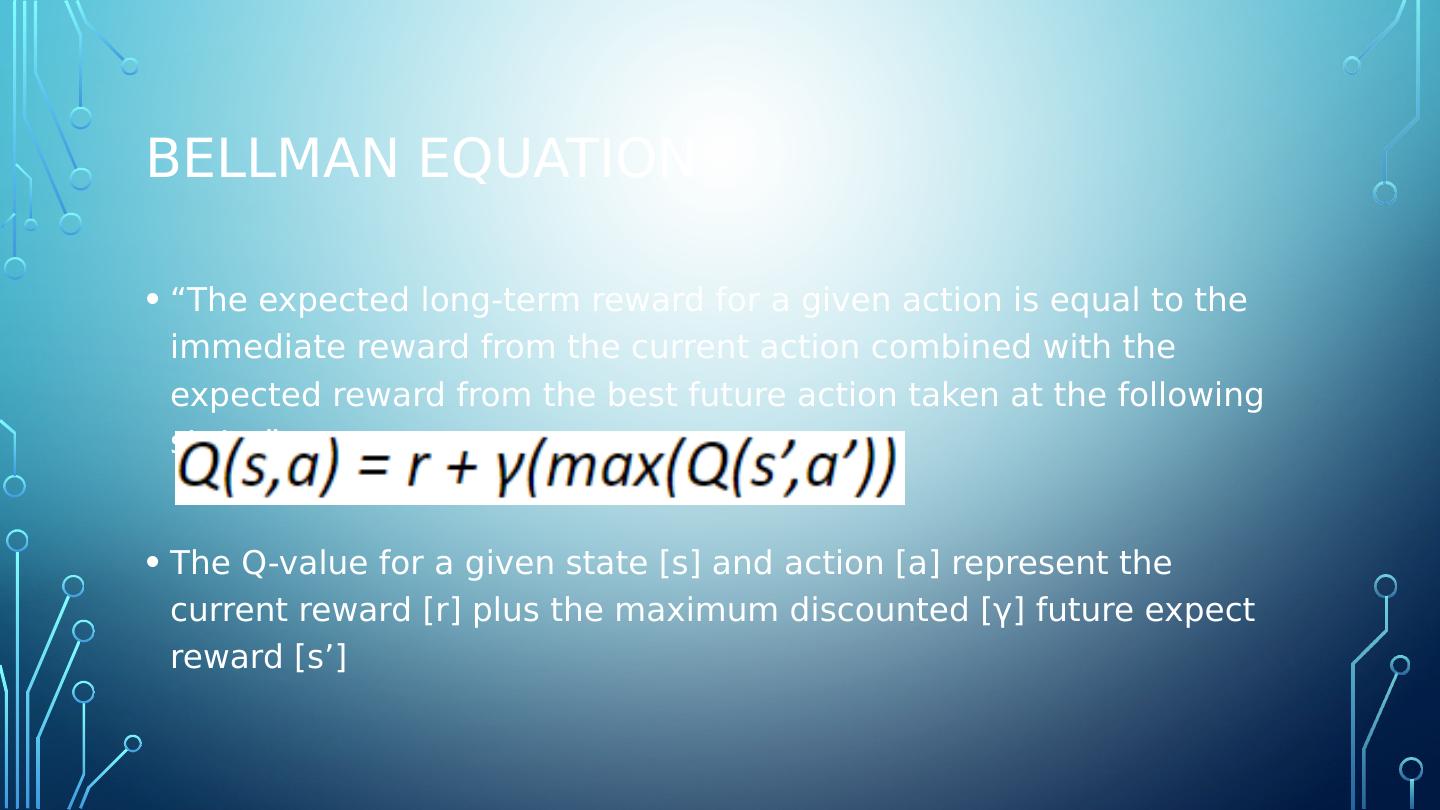

13 .Bellman equation “The expected long-term reward for a given action is equal to the immediate reward from the current action combined with the expected reward from the best future action taken at the following state.” The Q-value for a given state [s] and action [a] represent the current reward [r] plus the maximum discounted [γ] future expect reward [s’]

14 .Discount explanation The discount variable provides a way to decide how important the possible future rewards are compared to the present reward. The table will begin to obtain accurate measures of the expected future reward for a given action in a given state.

15 .Code 1 import gym import numpy as np env = gym.make(FrozenLake-v0) #Initialize table with all zeros Q = np.zeros([env.observation_space.n,env.action_space.n]) # Set learning parameters lr = .8 y = .95 num_episodes = 2000 #create lists to contain total rewards and steps per episode #jList = [] rList = []

16 .Code 2 for i in range(num_episodes): #Reset environment and get first new observation s = env.reset() rAll = 0 d = False j = 0 #The Q-Table learning algorithm while j < 99: j+=1 #Choose an action by greedily (with noise) picking from Q table a = np.argmax(Q[s,:] + np.random.randn(1,env.action_space.n)*(1./(i+1))) #Get new state and reward from environment s1,r,d,_ = env.step(a) #Update Q-Table with new knowledge Q[s,a] = Q[s,a] + lr*(r + y*np.max(Q[s1,:]) - Q[s,a]) rAll += r s = s1 if d == True: break #jList.append(j) rList.append(rAll)

17 .Code 3 print "Score over time: " + str(sum(rList)/num_episodes) print "Final Q-Table Values" print Q

18 .Q-learning with neural networks Scaling issues quickly arise when constraining Q-Learning to tables. What is needed is a way to take a description of the state and produce Q-values for actions without a table.

19 .Neural network configuration Define a one-layer network which accepts the state encoded in a one-hot vector (1x16), and produces a vector of 4 Q-values, one for each action.

20 .Neural network advantage A Tensorflow neural network provides flexibility to add layers, change activation functions, and select different input types- which while possible with a table configuration, is certainly more accessible with a NN.

21 .Loss function The loss function utilizes a sum-of-squares loss calculation. Where the difference between the current predicted Q-values, and the “target” value is computed via the gradients passed through the network. Note : Q-target (for an action) is the Q-value computed for the Q-table.

22 .Training with neural network Utilizing a neural network, in this example, requires more episodes and processor time to solve the FrozenLake problem. The Q-Table implementation is more efficient- in this case. However, the greater flexibility that neural networks offer for these problems, do so at the cost of stability when it comes to Q-Learning.

23 .Improving Q-Learning There are a number of techniques (or tricks) that can be applied to improve Q-Learning with neural networks. Experience Replay Freezing Layers

24 .