Evaluating Machine Learning Classifiers

1 .EVALUATING MACHINE LEARNING CLASSIFIERS: ACCURACY, PRECISION AND RECALL

2 . REFERENCES

- AMLP: Applied Machine Learning in Python, University of Michigan, Prof. Kevyn Collins-Thompson. https://www.coursera.org/learn/python-machine-learning/home/welcome
- MLC: Machine Learning: Classification, University of Washington, Profs. Emily Fox & Carlos Guestrin. https://www.coursera.org/learn/ml-regression/home/welcome

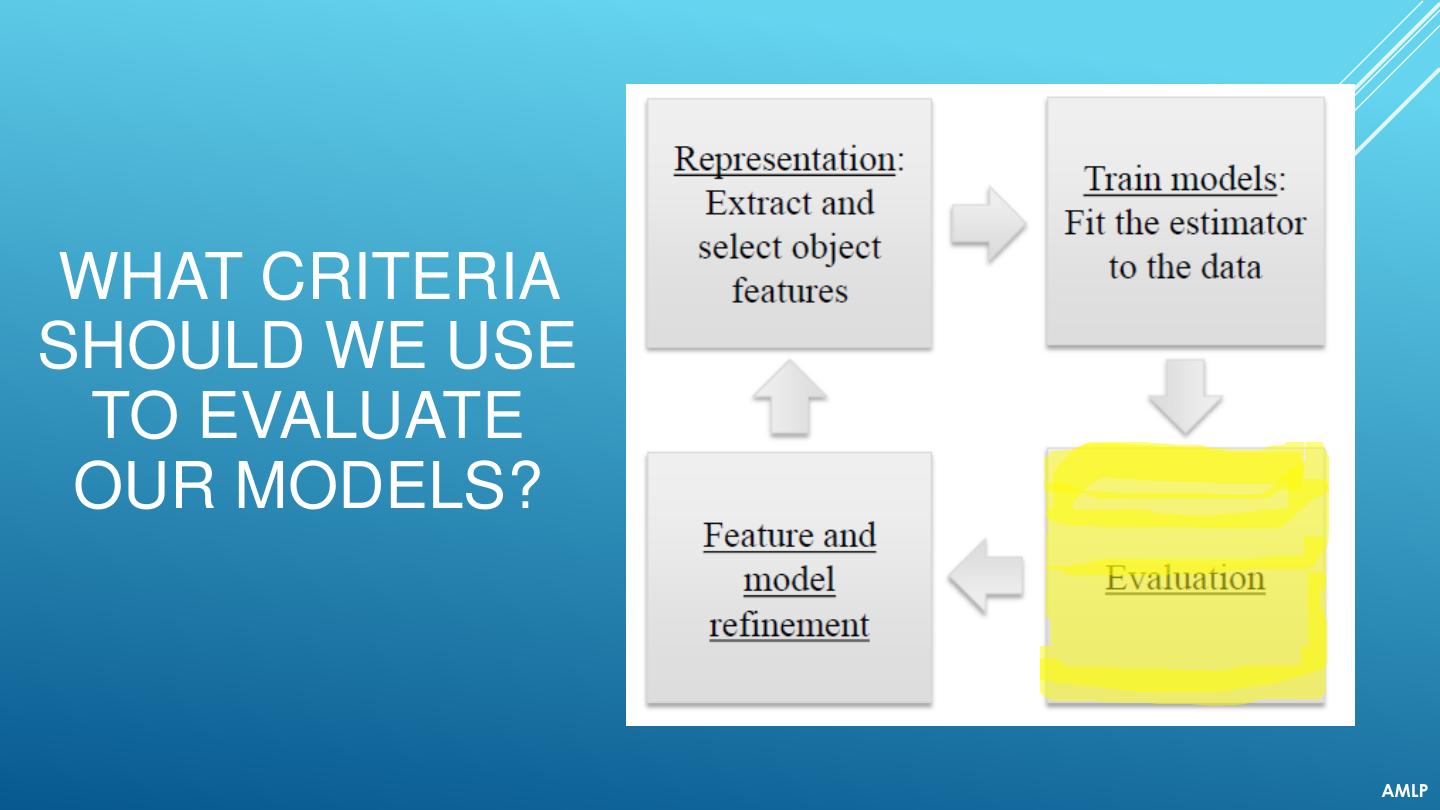

3 .REPRESENT, TRAIN, EVALUATE, REFINE AMLP

4 .WHAT CRITERIA SHOULD WE USE TO EVALUATE OUR MODELS? AMLP

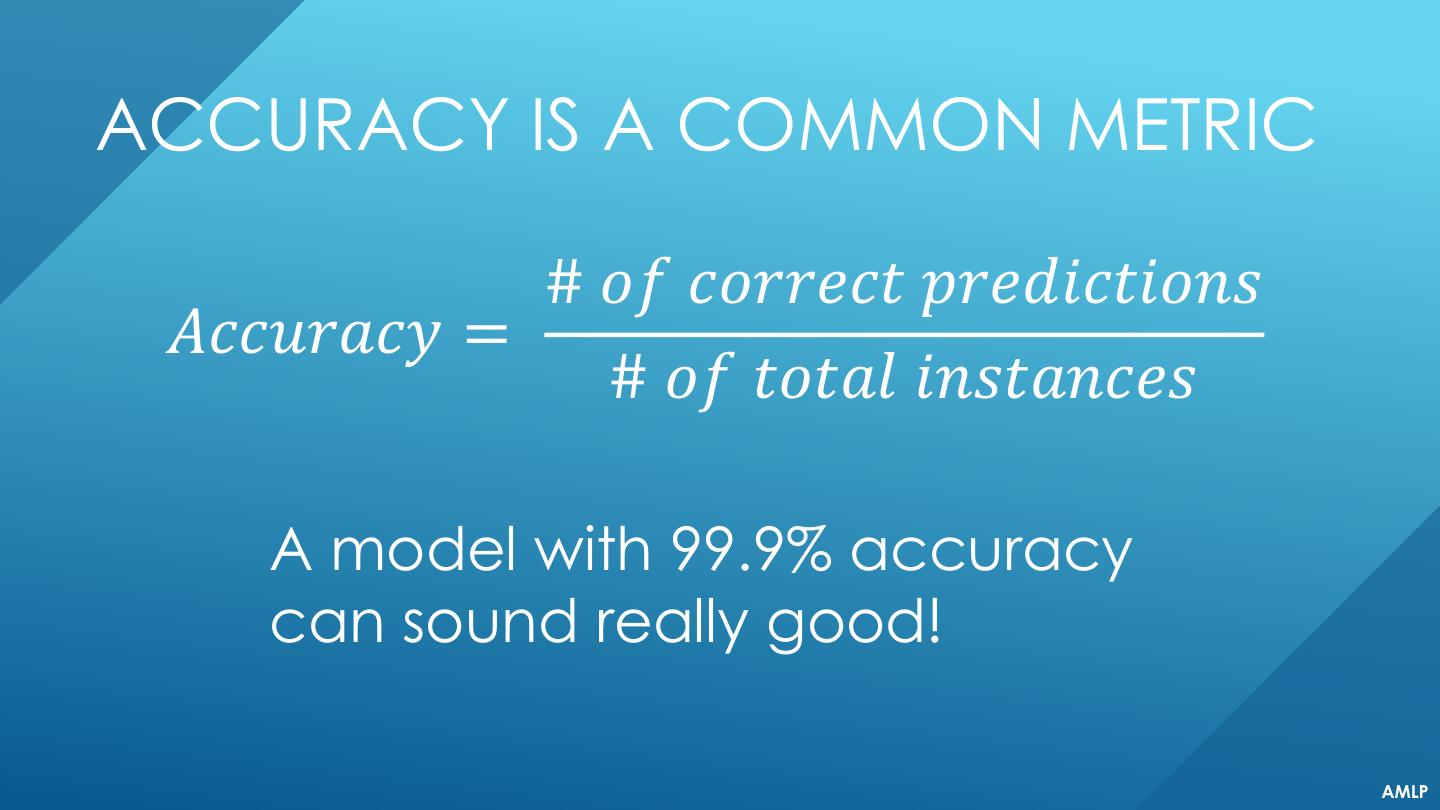

5 .ACCURACY IS A COMMON METRIC AMLP

$$\text{Accuracy} = \frac{\text{\# of correct predictions}}{\text{\# of total instances}}$$

A model with 99.9% accuracy can sound really good!

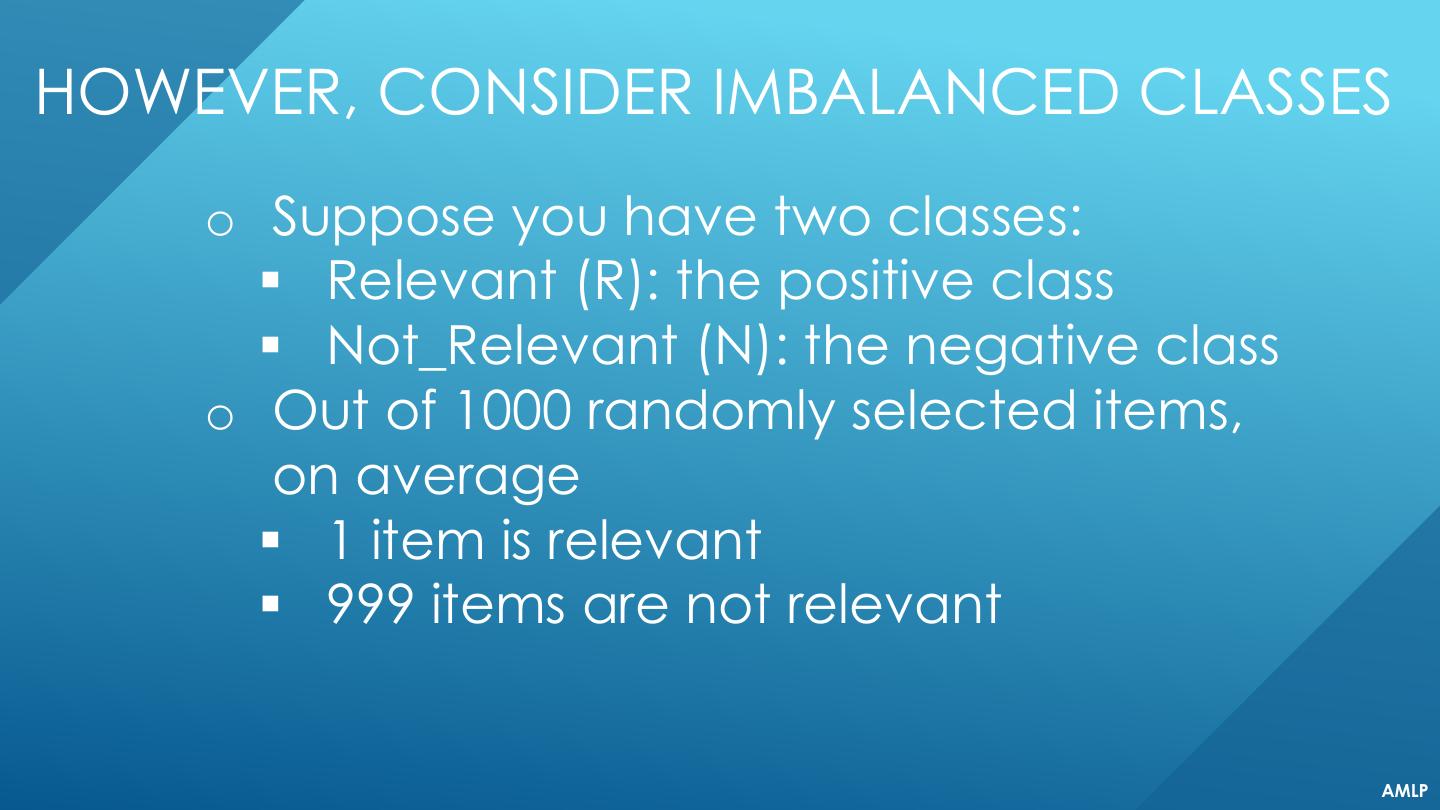

6 .HOWEVER, CONSIDER IMBALANCED CLASSES AMLP

- Suppose you have two classes:
  - Relevant (R): the positive class
  - Not_Relevant (N): the negative class
- Out of 1000 randomly selected items, on average:
  - 1 item is relevant
  - 999 items are not relevant

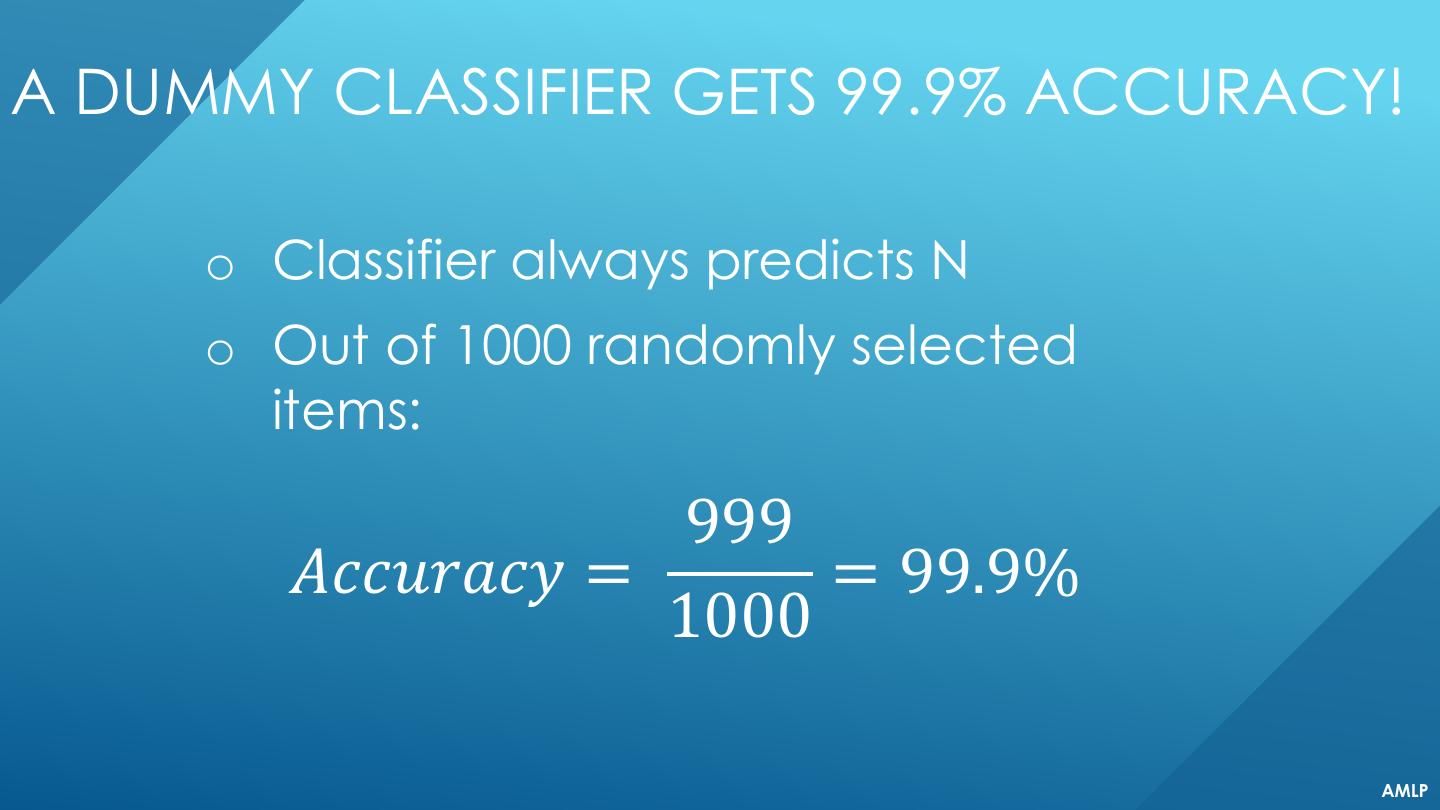

7 .A DUMMY CLASSIFIER GETS 99.9% ACCURACY! AMLP

- The classifier always predicts N.
- Out of 1000 randomly selected items:

$$\text{Accuracy} = \frac{999}{1000} = 99.9\%$$

8 . DUMMY CLASSIFIERS

Dummy classifiers:

- typically ignore training data features.
- often make predictions based on the distribution of the training data labels.
- can serve as a sanity check on your classifier’s performance.

9 . COMMON DUMMY CLASSIFIERS AMLP

- most-frequent: predict the most frequent label in the training set.
- stratified: make random predictions based on the training set label distribution.
- uniform: choose predictions from a uniform distribution.
- constant: always predict a constant label given by the user.
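
The most-frequent strategy, applied to the 1-in-1000 example from the earlier slide, can be sketched in plain Python as follows. This is a minimal illustration, not the course's code: the helper names `most_frequent_baseline` and `accuracy` and the label list are assumptions made for the example. scikit-learn's `DummyClassifier` provides these strategies in practice.

```python
from collections import Counter


def most_frequent_baseline(train_labels):
    """Return a predictor that always outputs the most common training label."""
    majority_label = Counter(train_labels).most_common(1)[0][0]
    # The predictor ignores the items' features entirely.
    return lambda items: [majority_label for _ in items]


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


# Hypothetical data matching the slide's ratio: 1 relevant item per 1000.
labels = ["R"] + ["N"] * 999
predict = most_frequent_baseline(labels)
predictions = predict(labels)

baseline_accuracy = accuracy(labels, predictions)
relevant_found = sum(p == "R" for p in predictions)

print(f"baseline accuracy: {baseline_accuracy:.3f}")  # 0.999
print(f"relevant items found: {relevant_found}")      # 0
```

The baseline reaches 99.9% accuracy without finding a single relevant item, which is why a real classifier should be compared against it before its accuracy is trusted.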

10 . EVALUATION AMLP

Different applications have different goals. Accuracy is widely used, but many other metrics are possible, e.g.:

- User satisfaction (Web search)
- Amount of revenue (e-commerce)
- Increase in patient survival rates (medical)

11 . PRECISION AND RECALL AMLP

Two common alternatives to accuracy are precision and recall.

- PRECISION: fraction of positive predictions that are actually positive.
- RECALL: fraction of positive examples that are predicted to be positive.

12 .DOMAINS WHERE PRECISION IS IMPORTANT AMLP

- Search engine rankings, query suggestions
- Document classification
- Customer-facing tasks, e.g.:
  - product recommendation
  - a restaurant website that automatically selects and posts positive reviews

13 .DOMAINS WHERE RECALL IS IMPORTANT AMLP

- Cancer tumor detection
- Search and information extraction in legal discovery
- Often paired with a human expert to filter out false positives

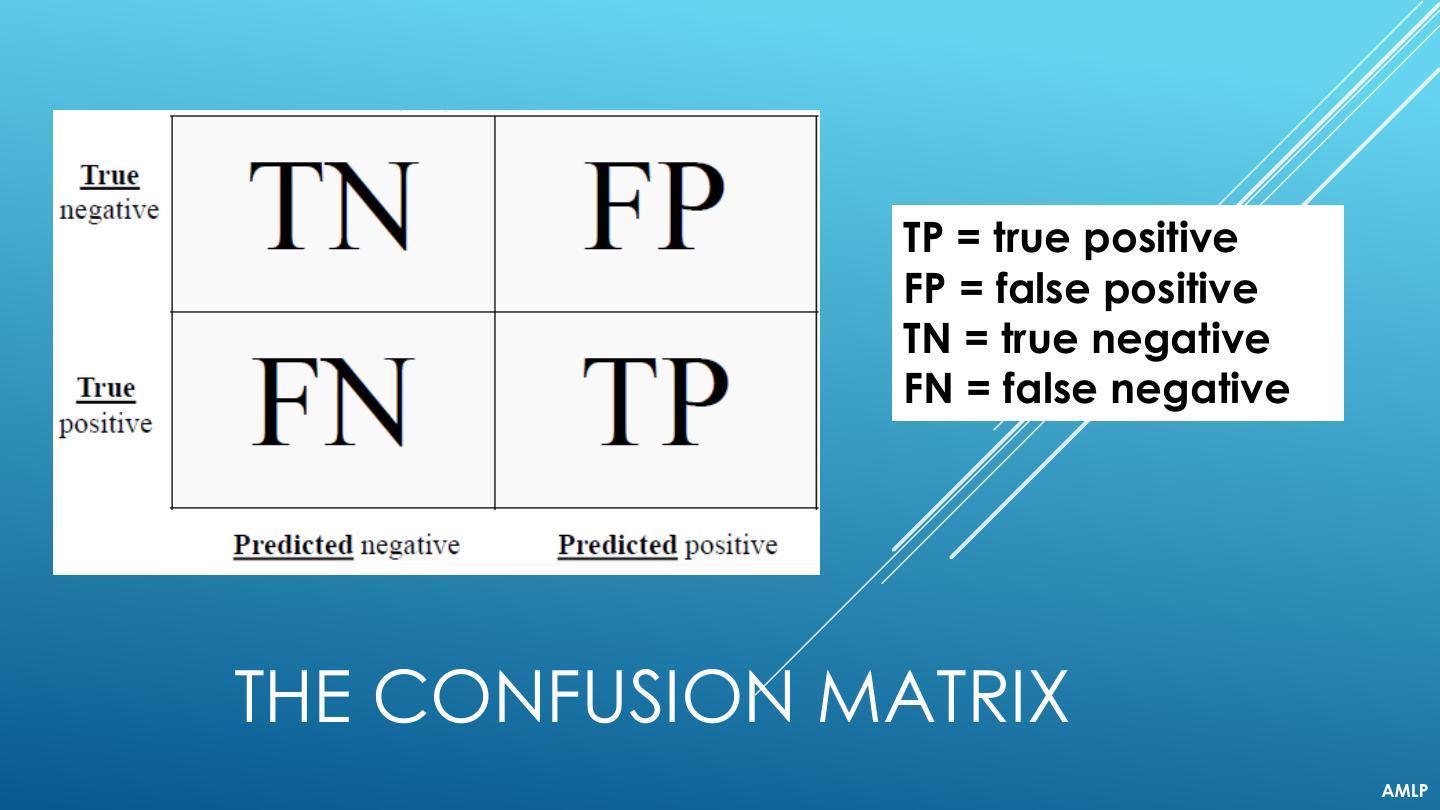

14 . THE CONFUSION MATRIX AMLP

- TP = true positive
- FP = false positive
- TN = true negative
- FN = false negative

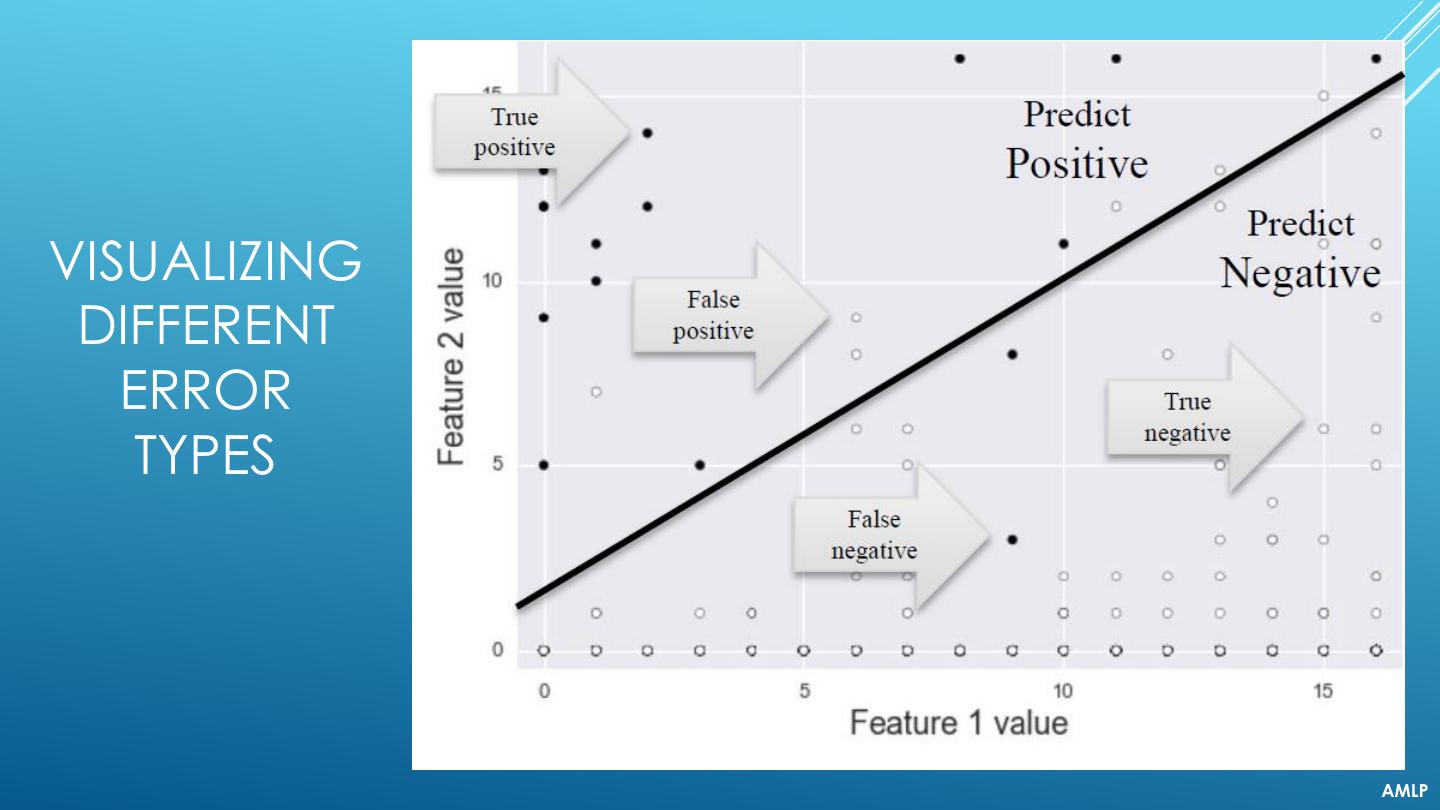

15 .VISUALIZING DIFFERENT ERROR TYPES AMLP

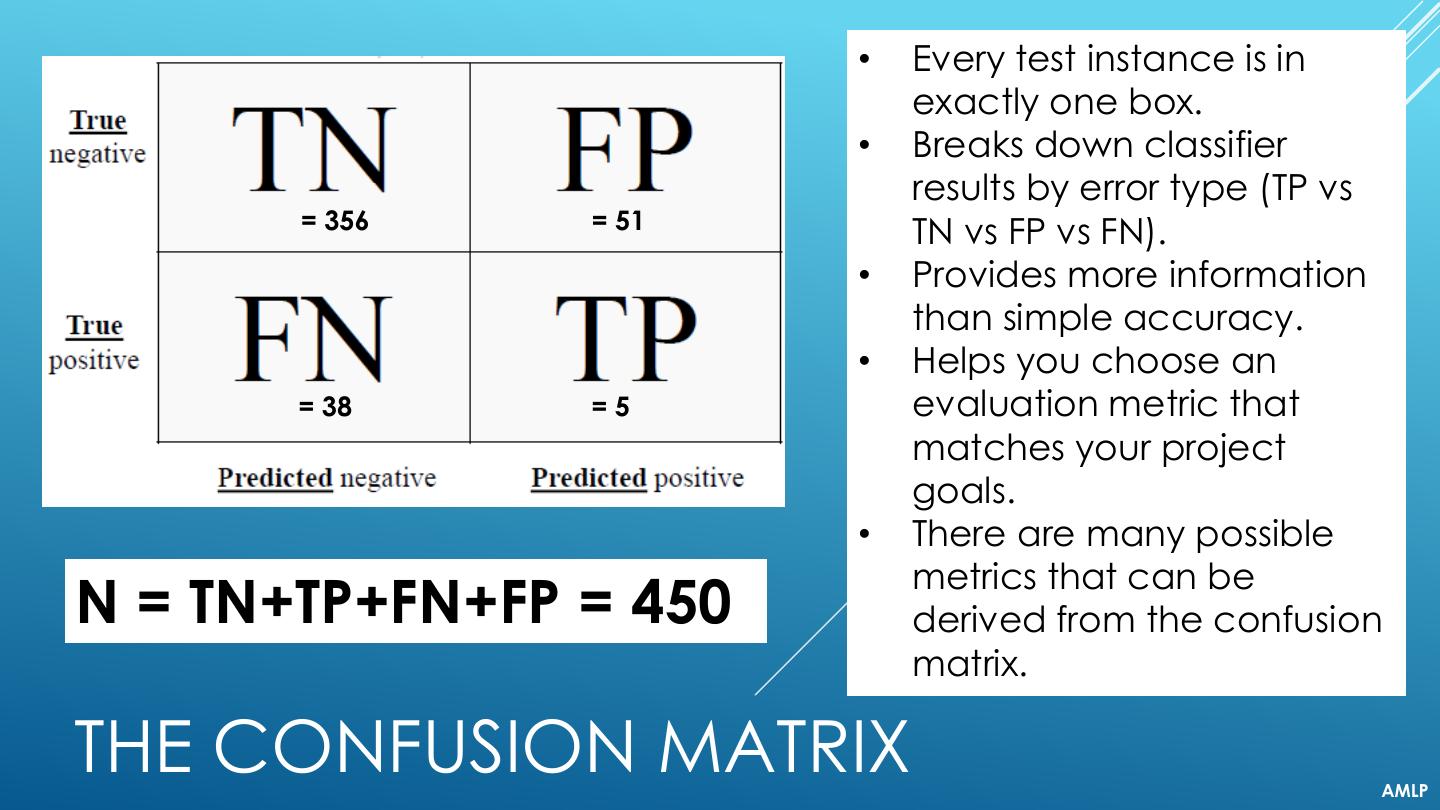

16 . THE CONFUSION MATRIX AMLP

- Every test instance is in exactly one box.
- Breaks down classifier results by error type (TP vs TN vs FP vs FN).
- Provides more information than simple accuracy.
- Helps you choose an evaluation metric that matches your project goals.
- There are many possible metrics that can be derived from the confusion matrix.

Cell counts shown in the figure: 356, 51, 38, and 5, with N = TN + TP + FN + FP = 450.
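
To make the "exactly one box" property concrete, here is a small sketch that tallies the four cells from true and predicted labels. The function name `confusion_counts` and the toy label lists are hypothetical and are not taken from the slides.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, FP, TN and FN for a binary classification problem."""
    tp = fp = tn = fn = 0
    for truth, pred in zip(y_true, y_pred):
        if pred == positive:
            if truth == positive:
                tp += 1  # predicted positive, actually positive
            else:
                fp += 1  # predicted positive, actually negative
        else:
            if truth == positive:
                fn += 1  # predicted negative, actually positive
            else:
                tn += 1  # predicted negative, actually negative
    return {"TP": tp, "FP": fp, "TN": tn, "FN": fn}


# Hypothetical labels and predictions (1 = positive, 0 = negative).
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0, 1, 0]

counts = confusion_counts(y_true, y_pred)
print(counts)  # {'TP': 3, 'FP': 1, 'TN': 3, 'FN': 1}

# Every instance lands in exactly one cell, so the cells sum to N.
print(sum(counts.values()) == len(y_true))  # True
```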

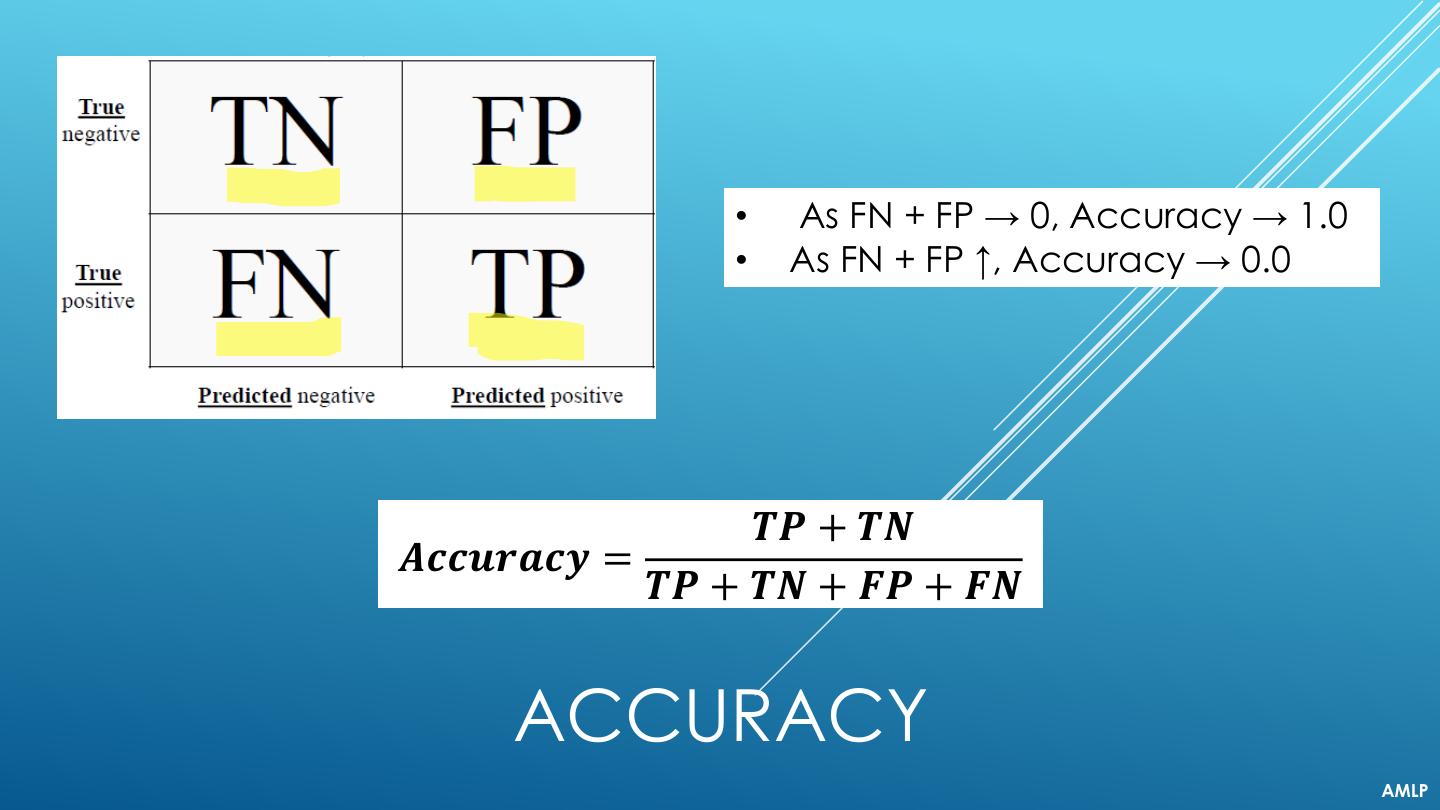

17 . ACCURACY AMLP

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

- As FN + FP → 0, Accuracy → 1.0
- As FN + FP ↑, Accuracy → 0.0

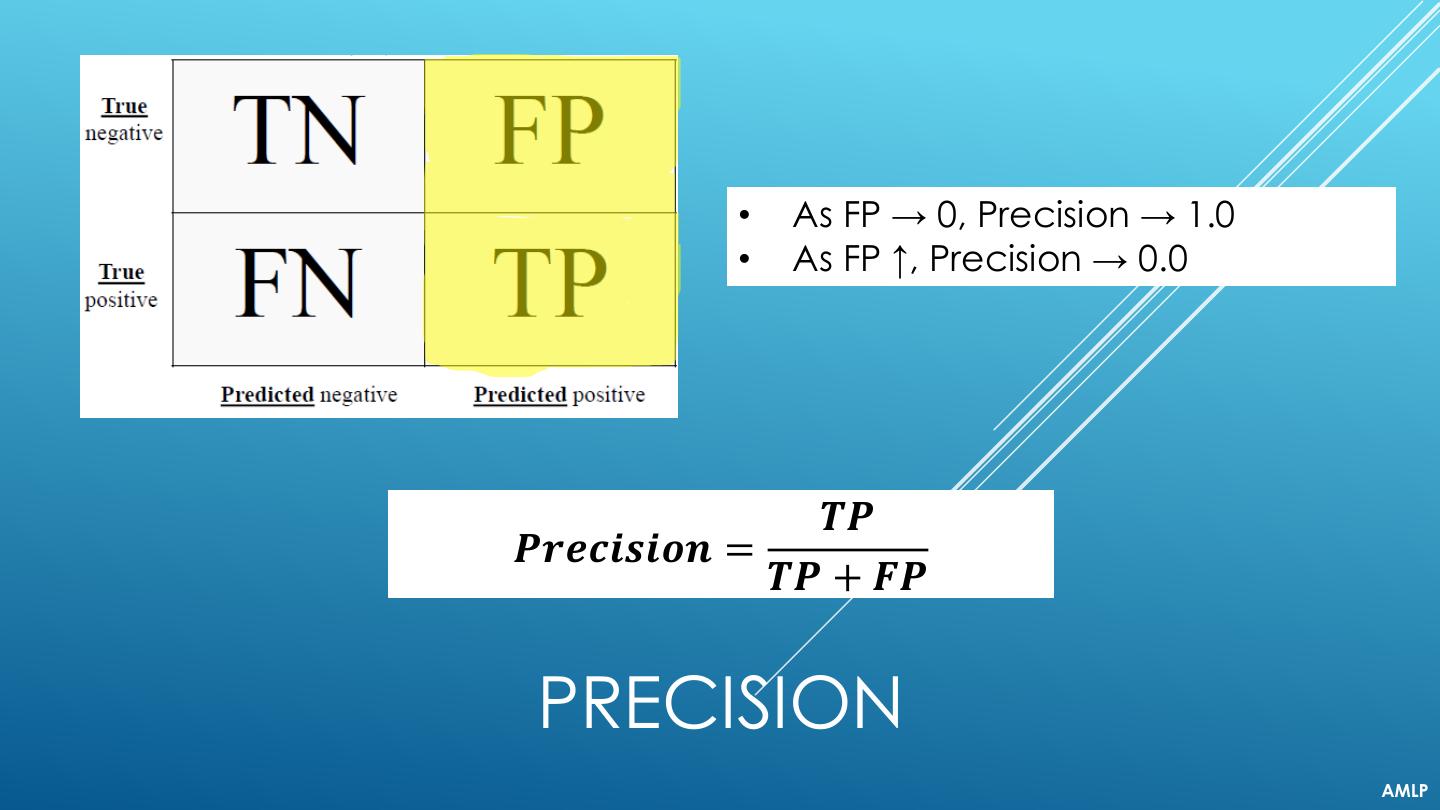

18 . PRECISION AMLP

$$\text{Precision} = \frac{TP}{TP + FP}$$

- As FP → 0, Precision → 1.0
- As FP ↑, Precision → 0.0

19 . RECALL AMLP

$$\text{Recall} = \frac{TP}{TP + FN}$$

- As FN → 0, Recall → 1.0
- As FN ↑, Recall → 0.0
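
The three formulas can be compared side by side on one set of counts. The sketch below uses hypothetical counts chosen for easy arithmetic, not the figures from the slides. Returning 0.0 when a denominator is zero is a convention assumed here.

```python
def accuracy(tp, fp, tn, fn):
    """(TP + TN) / (TP + TN + FP + FN)"""
    return (tp + tn) / (tp + tn + fp + fn)


def precision(tp, fp):
    """TP / (TP + FP); defined as 0.0 here when nothing is predicted positive."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp, fn):
    """TP / (TP + FN); defined as 0.0 here when there are no positive examples."""
    return tp / (tp + fn) if (tp + fn) else 0.0


# Hypothetical counts for an imbalanced problem with 1000 instances.
tp, fp, tn, fn = 30, 10, 940, 20

acc = accuracy(tp, fp, tn, fn)
prec = precision(tp, fp)
rec = recall(tp, fn)

print(f"accuracy  = {acc:.2f}")   # 0.97
print(f"precision = {prec:.2f}")  # 0.75
print(f"recall    = {rec:.2f}")   # 0.60
```

Accuracy looks strong at 0.97 even though the classifier misses 40% of the positive examples, which is the gap that precision and recall expose.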

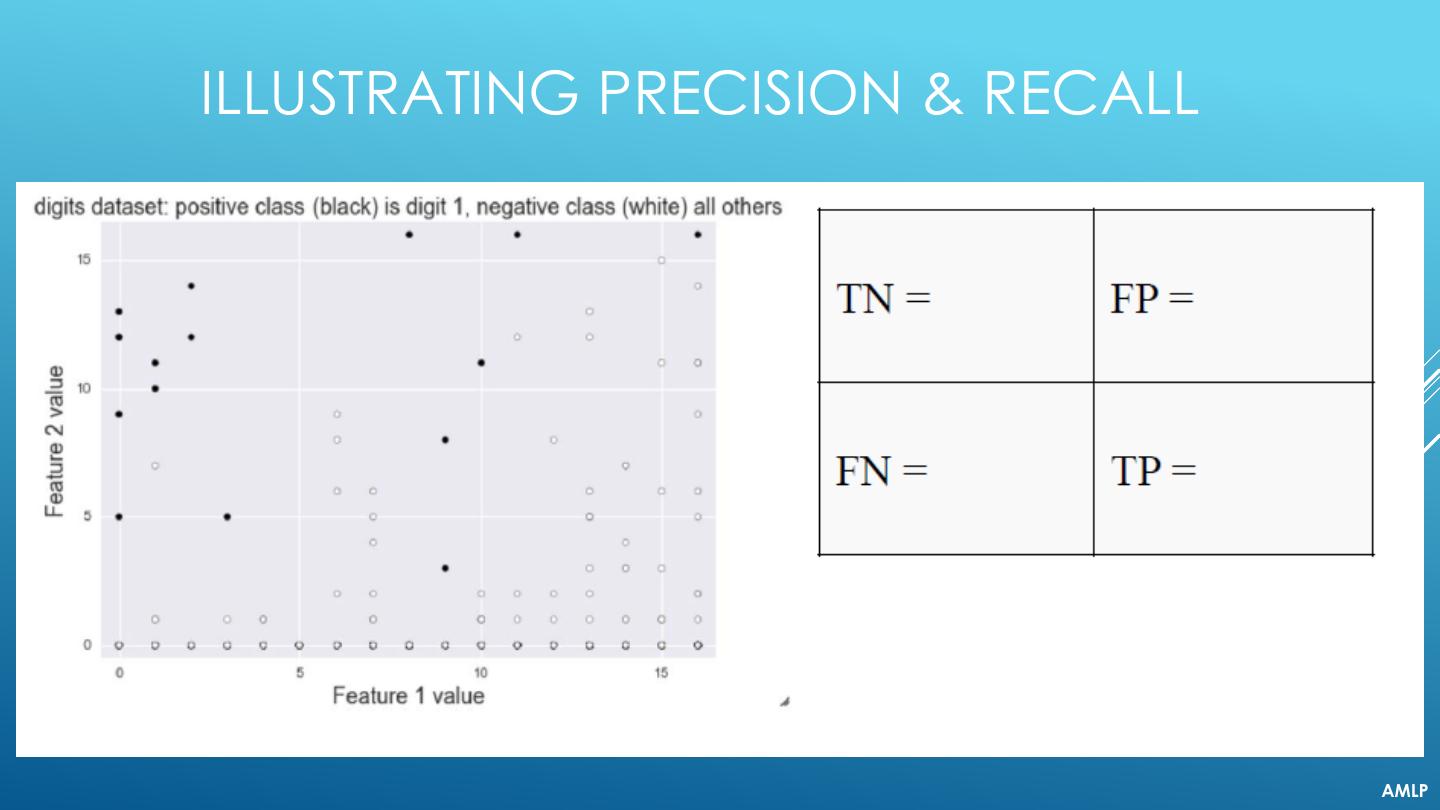

20 .ILLUSTRATING PRECISION & RECALL AMLP

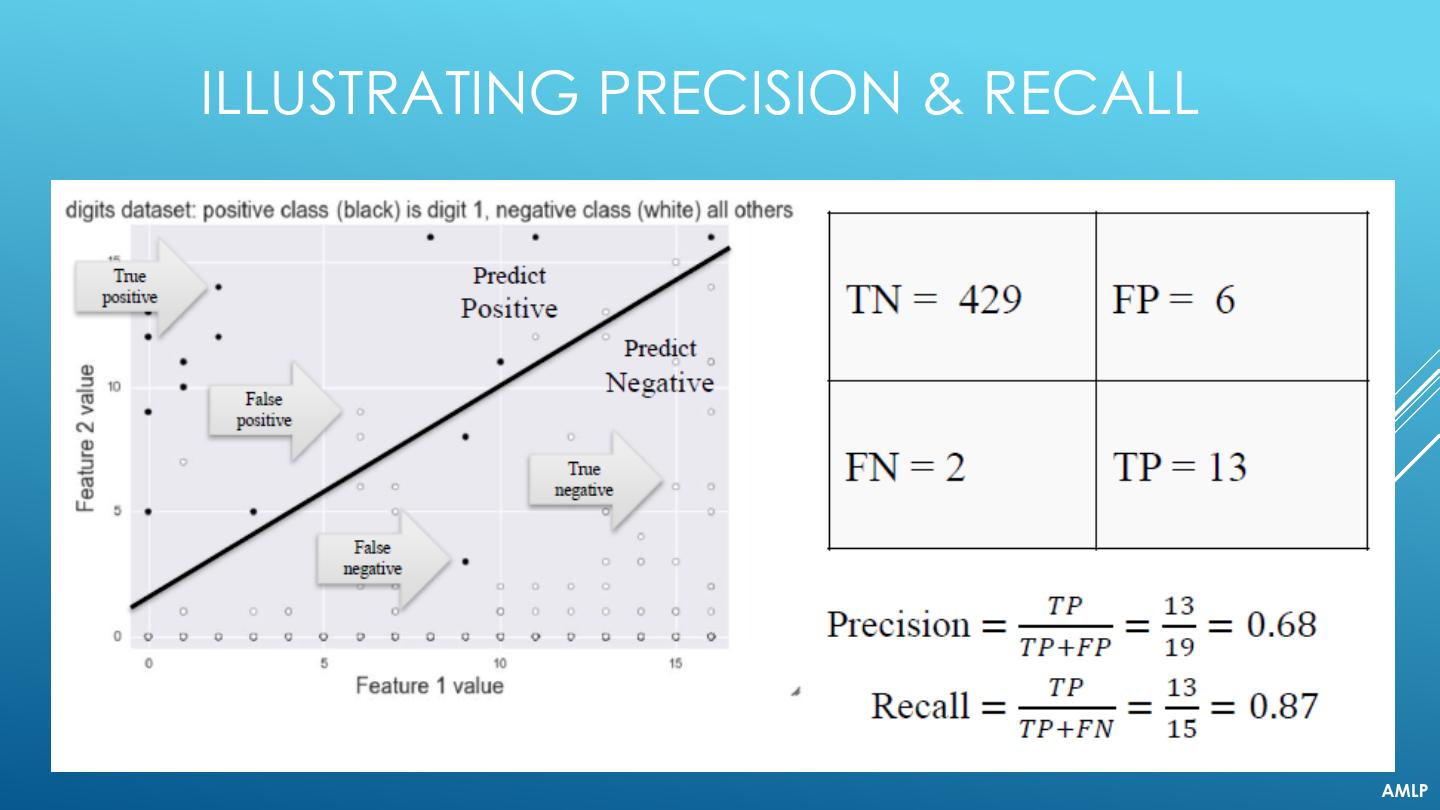

21 .ILLUSTRATING PRECISION & RECALL AMLP

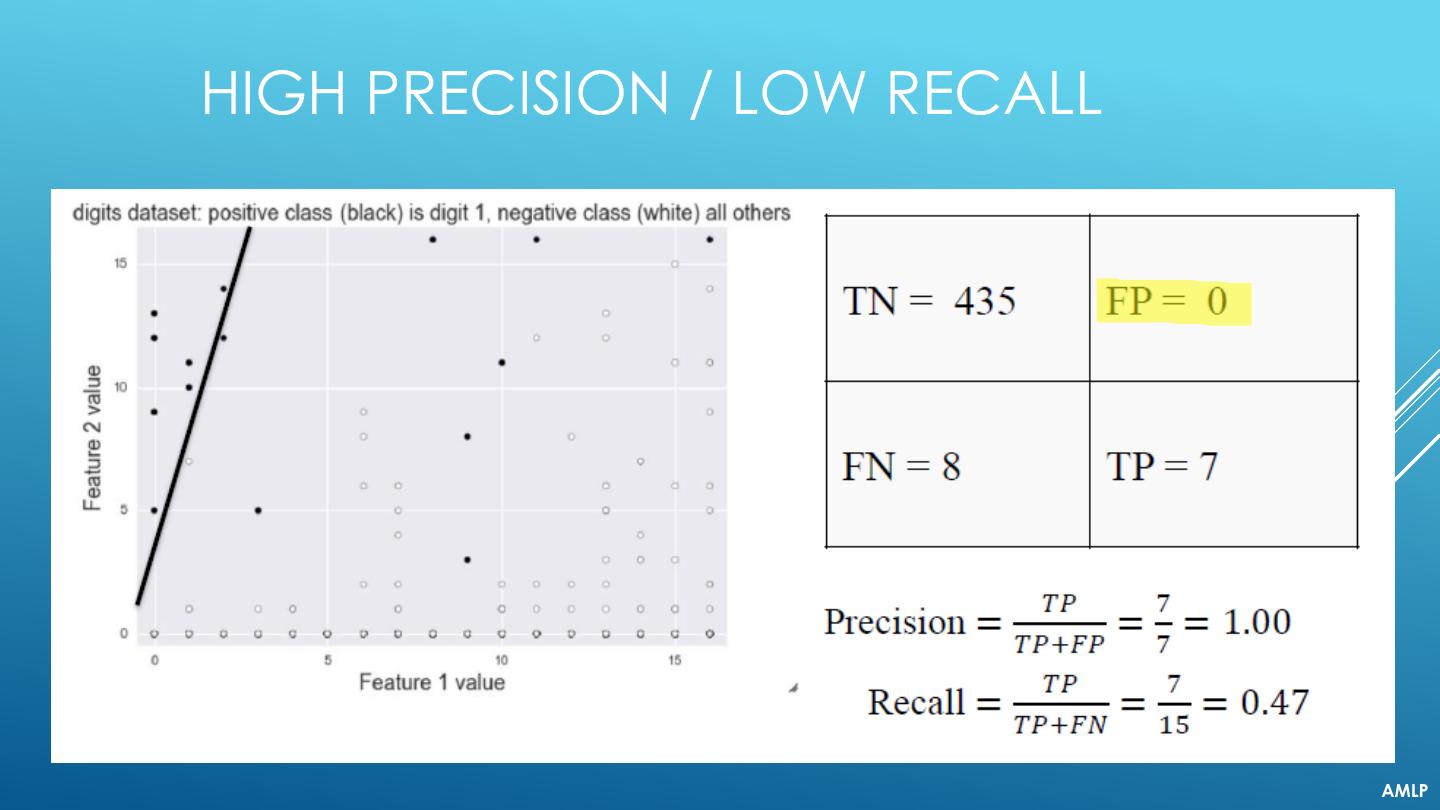

22 .HIGH PRECISION / LOW RECALL AMLP

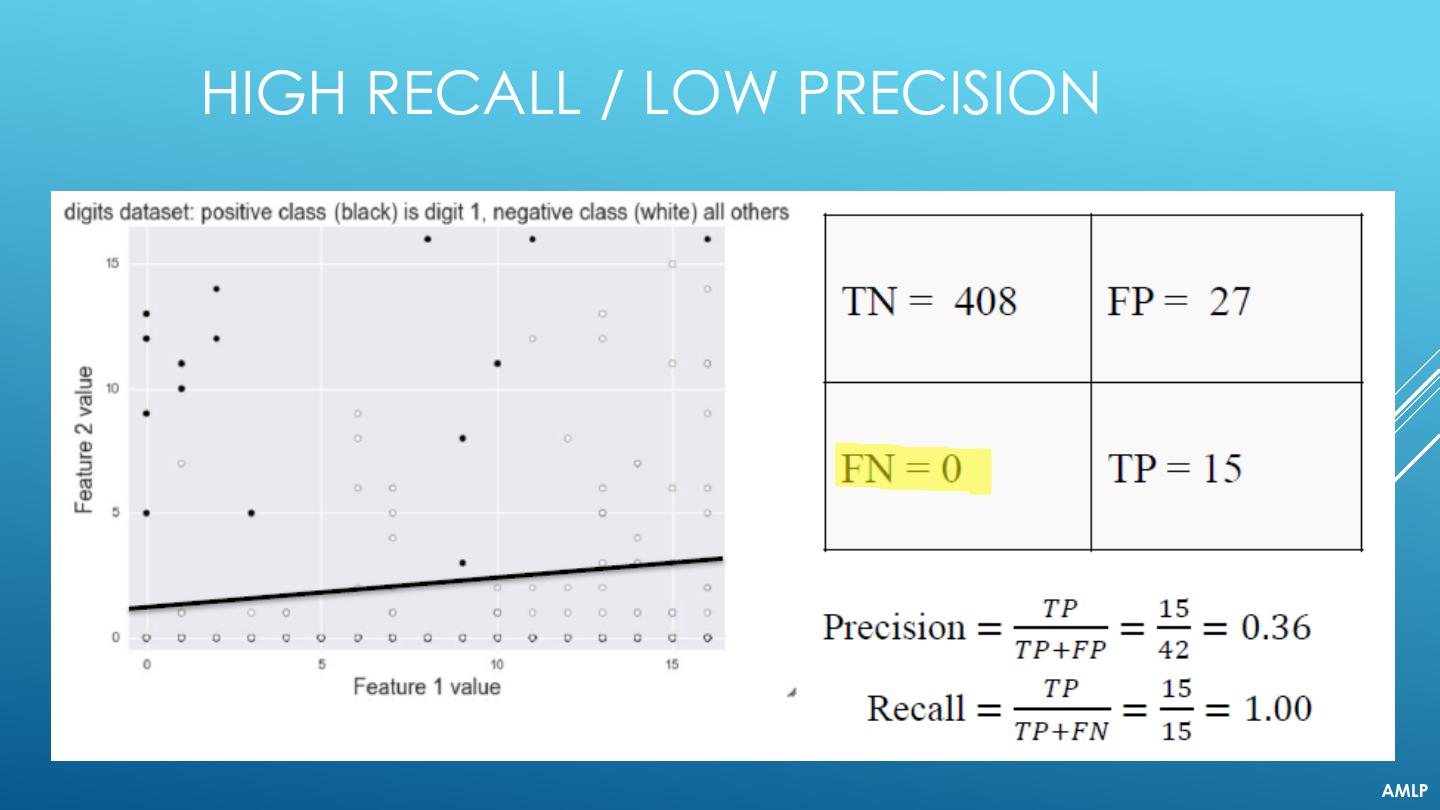

23 .HIGH RECALL / LOW PRECISION AMLP

24 . BALANCING PRECISION AND RECALL Rather than seeking to maximize precision or recall, an optimal balance between the two is often sought. MLC

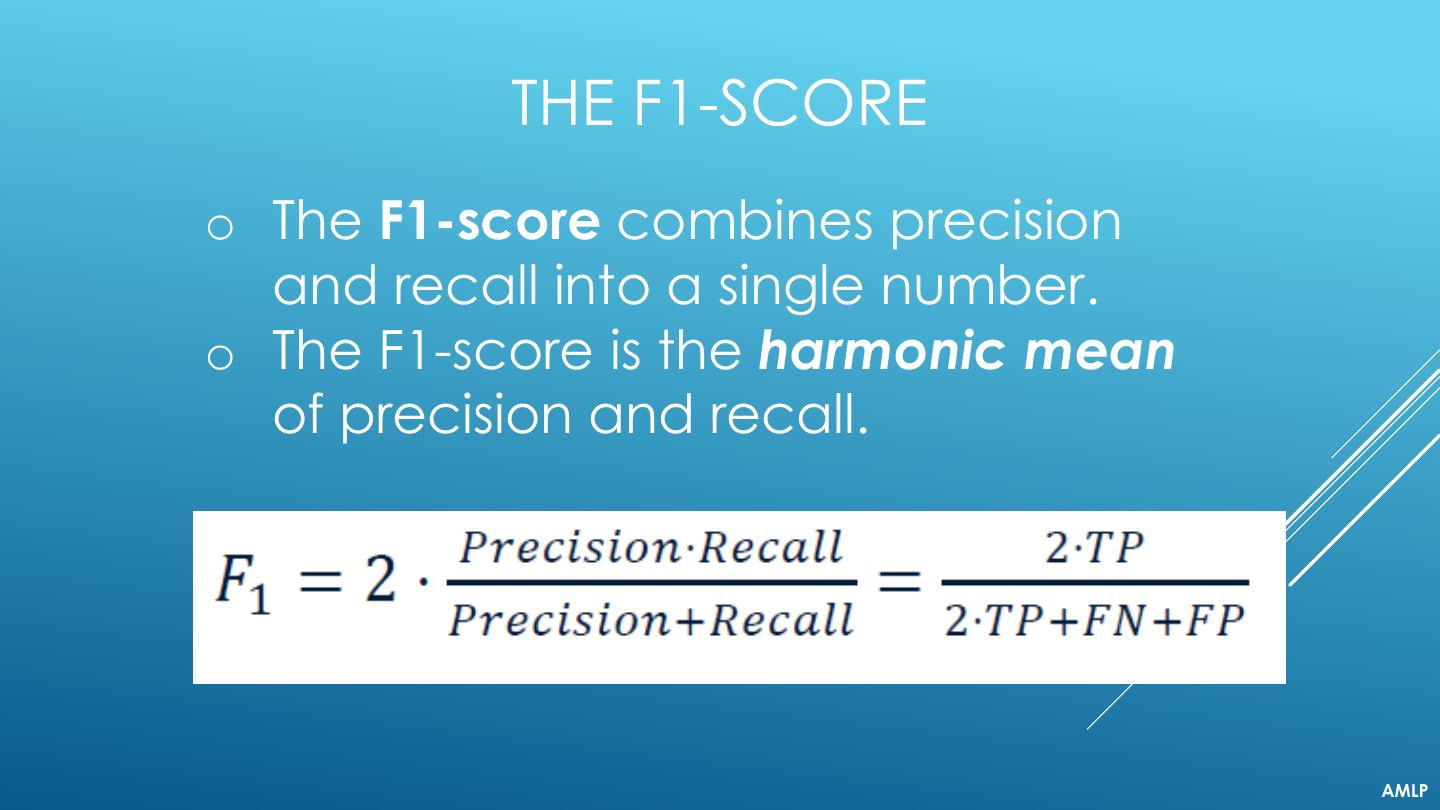

25 . THE F1-SCORE AMLP

- The F1-score combines precision and recall into a single number.
- The F1-score is the harmonic mean of precision and recall.
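
The slide text does not spell out the formula. Written out from the standard definition of the harmonic mean, and substituting Precision = TP / (TP + FP) and Recall = TP / (TP + FN), it takes the form:

$$F_1 = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}} = \frac{2\,TP}{2\,TP + FP + FN}$$

Because the harmonic mean is pulled toward the smaller of its two inputs, a classifier needs both reasonable precision and reasonable recall to score well.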

26 . THE F-SCORE AMLP

- The F-score is a generalization of the F1-score.
- β allows adjustment of the metric to control the emphasis on recall vs precision:
  - β < 1.0 puts more weight on precision (minimize false positives)
  - β > 1.0 puts more weight on recall (minimize false negatives)
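
The effect of β can be checked numerically. The sketch below uses the standard F-beta definition, F_β = (1 + β²) · P · R / (β² · P + R). The function name `f_beta` and the precision and recall values are assumptions chosen for illustration.

```python
def f_beta(precision, recall, beta=1.0):
    """Weighted harmonic mean of precision and recall; beta=1.0 gives the F1-score."""
    if precision == 0.0 and recall == 0.0:
        return 0.0  # convention assumed here to avoid dividing by zero
    beta_sq = beta ** 2
    return (1 + beta_sq) * precision * recall / (beta_sq * precision + recall)


# Hypothetical classifier with higher precision than recall.
p, r = 0.75, 0.60

f_half = f_beta(p, r, beta=0.5)  # emphasizes precision
f1 = f_beta(p, r)                # balanced
f2 = f_beta(p, r, beta=2.0)      # emphasizes recall

print(f"F0.5 = {f_half:.3f}")  # 0.714
print(f"F1   = {f1:.3f}")      # 0.667
print(f"F2   = {f2:.3f}")      # 0.625
```

Since this classifier's precision exceeds its recall, the precision-weighted F0.5 is the highest of the three scores and the recall-weighted F2 is the lowest.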

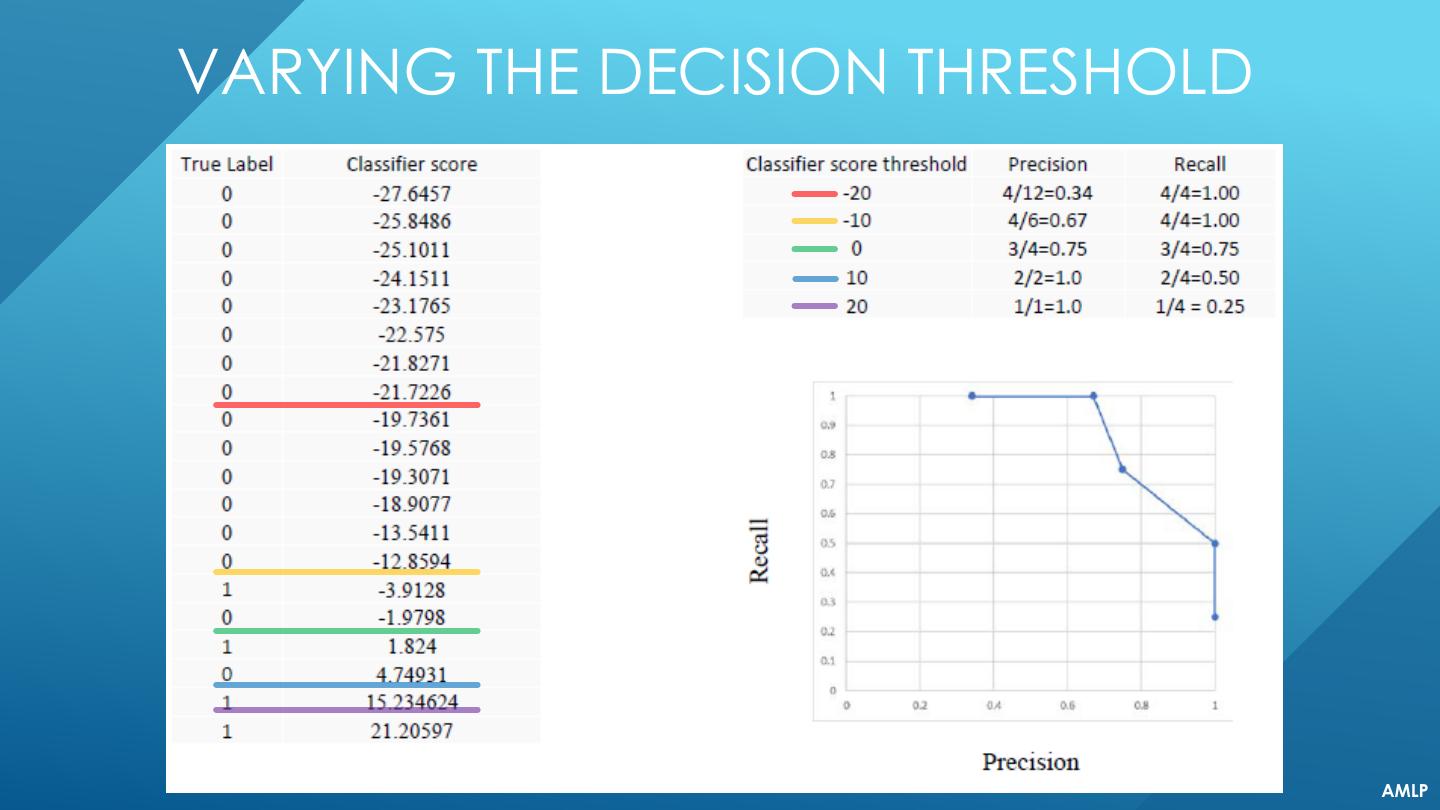

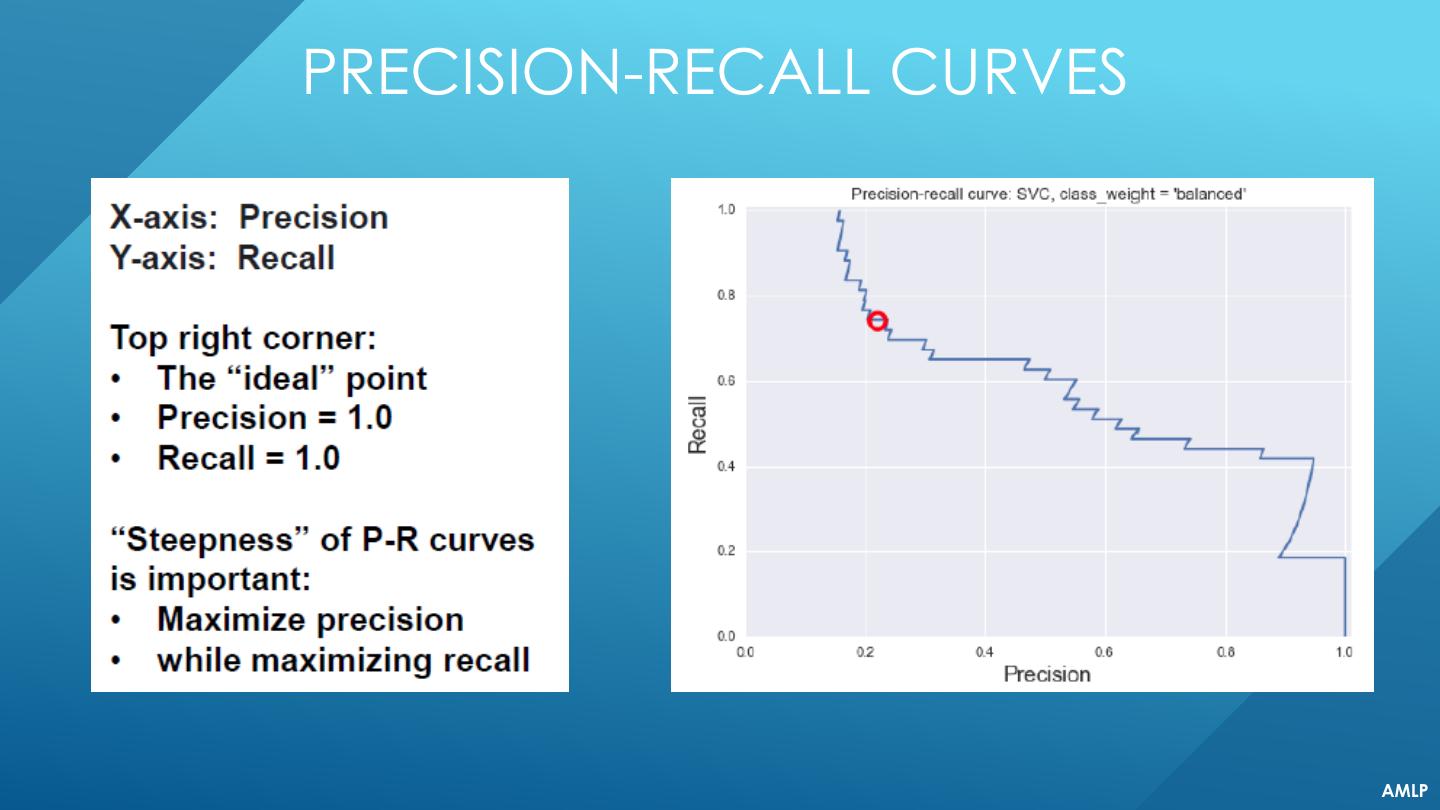

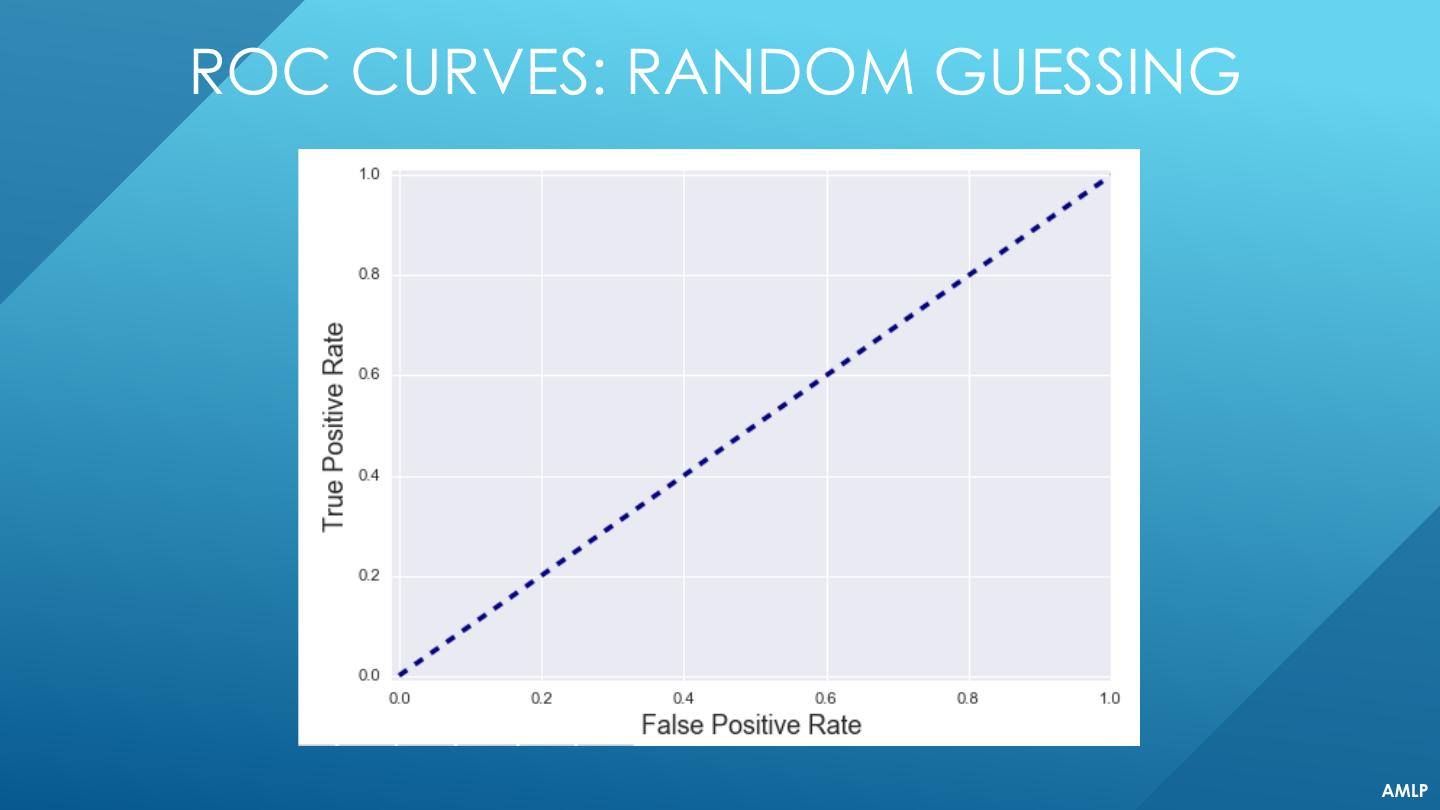

27 . DECISION FUNCTIONS AMLP

- Any classifier that returns a score representing how confident it is in its prediction can be “adjusted” to yield a decision function that favors precision or recall to a greater or lesser degree.
- By sweeping the decision threshold through the entire range of possible score values, we get a series of classification outcomes that form a curve.
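
A threshold sweep of this kind can be sketched as follows. The scores, labels, and the helper `precision_recall_at` are hypothetical. Treating precision as 1.0 when nothing is predicted positive is a convention assumed for the example.

```python
def precision_recall_at(scores, labels, threshold):
    """Precision and recall when items scoring >= threshold are predicted positive."""
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    precision = tp / (tp + fp) if (tp + fp) else 1.0  # assumed convention
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical classifier scores and true labels (1 = positive).
scores = [0.95, 0.85, 0.70, 0.60, 0.45, 0.30, 0.20, 0.10]
labels = [1, 1, 0, 1, 0, 1, 0, 0]

# Use every observed score as a candidate threshold, lowest first.
curve = [(t, *precision_recall_at(scores, labels, t)) for t in sorted(set(scores))]

for threshold, precision, recall in curve:
    print(f"threshold={threshold:.2f}  precision={precision:.2f}  recall={recall:.2f}")
```

In this toy data, recall never increases as the threshold rises, while precision trends upward but not monotonically. Plotting the (recall, precision) pairs gives a precision-recall curve.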

28 . PROBABILISTIC CLASSIFIERS AMLP

- Some classifiers return a probability that an item belongs to a particular class rather than a Boolean value.
- Decision functions can be constructed from probabilistic classifiers.
- Examples include logistic regression and Naïve Bayes.
- The typical rule is to choose the likely class if P(x) > threshold, where threshold = 0.5.
- Adjusting the threshold affects the classifier's predictions.
- A higher threshold results in a more “pessimistic” classifier, i.e., it tends to increase precision.
- A lower threshold results in a more “optimistic” classifier, i.e., it increases recall.
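
The threshold rule itself is a one-line comparison. The sketch below applies it to a handful of made-up probabilities. The function `predict_with_threshold` and the values are assumptions for illustration, standing in for the output of a probabilistic classifier.

```python
def predict_with_threshold(probabilities, threshold=0.5):
    """Label an item positive (1) when its predicted probability exceeds the threshold."""
    return [1 if p > threshold else 0 for p in probabilities]


# Hypothetical predicted probabilities of the positive class.
probabilities = [0.92, 0.67, 0.55, 0.48, 0.31, 0.08]

default = predict_with_threshold(probabilities)           # threshold = 0.5
pessimistic = predict_with_threshold(probabilities, 0.8)  # fewer positive predictions
optimistic = predict_with_threshold(probabilities, 0.3)   # more positive predictions

print(default)      # [1, 1, 1, 0, 0, 0]
print(pessimistic)  # [1, 0, 0, 0, 0, 0]
print(optimistic)   # [1, 1, 1, 1, 1, 0]
```

The pessimistic setting flags only the item the classifier is most sure about, while the optimistic setting flags nearly everything, trading precision for recall.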

29 .VARYING THE DECISION THRESHOLD AMLP